Google DeepMind studies how multiple AI agents cooperate

Artificial intelligence is a field in which a large number of engineers and scientists are currently working. Almost every day there is news about some form of weak AI that performs functions useful to people. Developers at DeepMind, a division of the Alphabet Inc. holding company, are now working on an interesting and topical problem: under what conditions several AI agents will cooperate or compete with each other.

The problem that the DeepMind specialists are trying to solve is essentially similar to the so-called “prisoner's dilemma”. It can be formulated as follows. In almost all countries, members of a criminal gang are punished much more severely than lone criminals who commit the same crimes. Suppose the police arrest two criminals at about the same time for similar crimes, and there is reason to believe that they acted in concert. The dilemma arises if each criminal cares only about minimizing his own sentence.

Suppose the police offer both criminals the same deal: if one testifies against the other while the other stays silent, the silent one gets 10 years in prison and the one who told everything is released. If both stay silent, each receives six months. If both testify against each other, each receives 2 years. In this situation each of them can act either in the interests of the group or in his own interests.
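
The deal above can be written down as a small payoff table. The following is a minimal sketch in Python that uses only the sentences given in the text; the names `SENTENCE`, `best_response` and `total_years` are made up for this illustration. It shows why the situation is a dilemma: testifying is the better individual choice whatever the other criminal does, yet mutual silence gives the smallest combined sentence.

```python
# Illustrative sketch: names are hypothetical, numbers come from the deal above.
SILENT, TESTIFY = "silent", "testify"

# Sentence in years for "me", indexed by (my_choice, other_choice).
SENTENCE = {
    (SILENT, SILENT): 0.5,    # both stay silent: six months each
    (SILENT, TESTIFY): 10.0,  # I stay silent, the other testifies
    (TESTIFY, SILENT): 0.0,   # I testify, the other stays silent: I go free
    (TESTIFY, TESTIFY): 2.0,  # both testify: 2 years each
}


def best_response(other_choice):
    """Choice that minimizes my own sentence, given the other's choice."""
    return min((SILENT, TESTIFY), key=lambda mine: SENTENCE[(mine, other_choice)])


def total_years(a, b):
    """Combined sentence of both criminals for the choices (a, b)."""
    return SENTENCE[(a, b)] + SENTENCE[(b, a)]


if __name__ == "__main__":
    for other in (SILENT, TESTIFY):
        print(f"other is {other}: my best response is {best_response(other)}")
    print("both silent, total years:", total_years(SILENT, SILENT))
    print("both testify, total years:", total_years(TESTIFY, TESTIFY))
```

Acting on individual interest leads both to testify (4 years in total), while acting in the interests of the group would mean both staying silent (1 year in total).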


This dilemma is the simplest example of what developers of AI agents will have to face in the near future. If several algorithms work on the same problem (for example, managing road traffic during severe weather), how can we get them to work together to solve it as efficiently as possible? In real life, questions of cooperation and competition can be far more complex than the one described above, and so can their consequences.

That is why the DeepMind researchers decided to look for ways in which AI agents might cooperate.

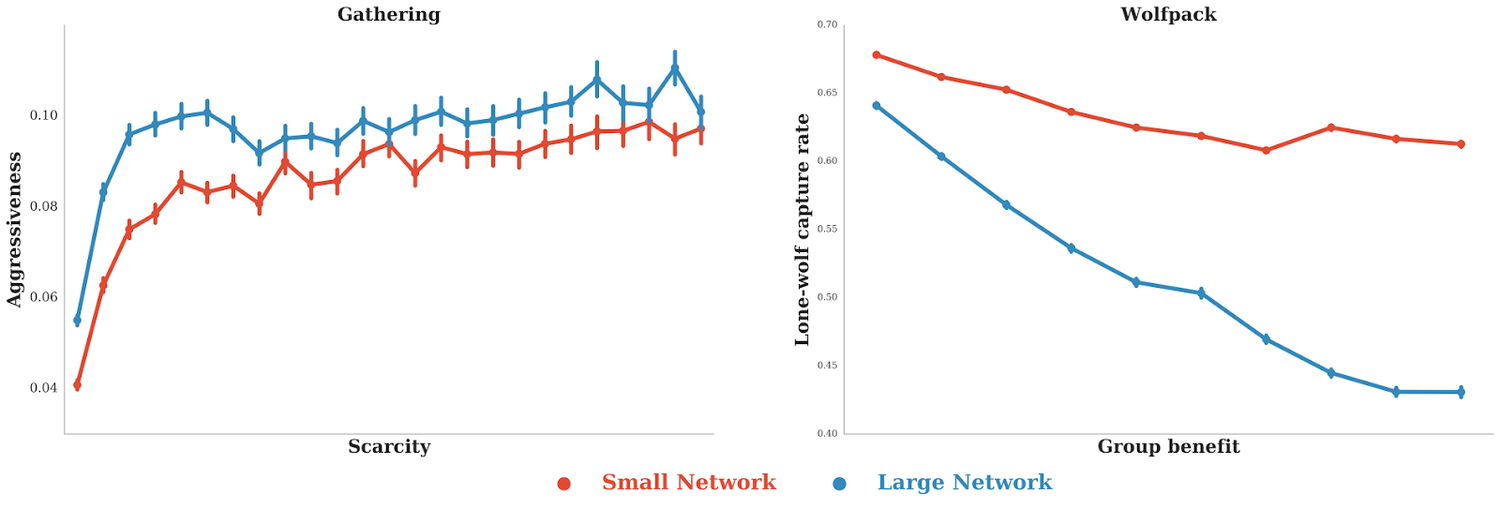

To test how weak forms of AI behave when solving a problem, the specialists developed several games. One of them is called “Gathering”. Two computer players take part, and each tries to collect as many apples as possible from a shared pile. Either player can temporarily immobilize the opponent with a “laser beam”, which lets the shooter collect more virtual apples per unit of time than the opponent.

In the second game, Wolfpack, two players hunt a third in a virtual space filled with obstacles. The players score points when the prey is captured, but they get the maximum number of points only if both of them are close to the prey.
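
The scoring rule of Wolfpack can be illustrated with a short Python sketch. This is not DeepMind's implementation: the capture radius, the reward values and the function name `capture_rewards` are assumptions chosen only to show the idea that a capture pays the most when both hunters are near the prey.

```python
import math

# All constants below are hypothetical, picked only for illustration.
CAPTURE_RADIUS = 2.0  # how close a hunter must be to count as "near the prey"
LONE_REWARD = 1.0     # points for a capture made without the partner nearby
TEAM_REWARD = 5.0     # points for each hunter when both are near the prey


def capture_rewards(prey, hunters, capturer):
    """Points for each hunter when hunters[capturer] catches the prey.

    prey and every entry of hunters are (x, y) positions.
    """
    near = [
        i == capturer or math.dist(pos, prey) <= CAPTURE_RADIUS
        for i, pos in enumerate(hunters)
    ]
    if all(near):
        # Everyone is close to the prey: all hunters get the maximum points.
        return [TEAM_REWARD] * len(hunters)
    # Otherwise only the hunter that made the capture scores.
    return [LONE_REWARD if i == capturer else 0.0 for i in range(len(hunters))]


if __name__ == "__main__":
    prey = (0, 0)
    print(capture_rewards(prey, [(0, 1), (1, 0)], capturer=0))  # partner nearby
    print(capture_rewards(prey, [(0, 1), (9, 9)], capturer=0))  # partner far away
```

Under a rule of this shape, a hunter that waits for its partner before closing in earns more than one that rushes in alone, so cooperation is the more profitable behavior.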

What happened in the two games was hardly unexpected. In the first, the AI agents competed with each other, because they found this to be the most rational way to interact under the conditions set by the developers. In the second, the former rivals began to cooperate actively, since in that game cooperation was far more advantageous than competition.

In the first game, the rivals initially made little use of the laser while the pile of apples was large. But as the resource dwindled, the laser was used more and more. Over time, the AI agents became “smarter” and began to shoot each other even at the very beginning, when apples were still plentiful. One could say that the algorithm, through self-learning, found the most effective behavior for this game.

The graph on the left shows how the players' aggression towards each other changes as resources are depleted. The graph on the right shows the relationship between how often the prey is caught by only one of the players and the total number of points earned by the group of hunters.

Conversely, in the second game the AI agents became more and more open to cooperation over time. At first each of them tried to catch the prey alone, but by the end both cooperated, waiting for the partner next to the prey.

The results of the study are not really a surprise either. The researchers found that the behavior of AI agents changes according to the conditions and constraints of their environment. If the rules reward aggressive behavior, the AI becomes more and more aggressive. Otherwise, it becomes increasingly willing to cooperate with its partner or partners.

In general, the experiment sheds some light on a future in which human life will largely depend on artificial intelligence. If an AI benefits from working with other AIs, it will cooperate. “As a consequence [of examining the results of the study, ed.], we can better understand the dynamics of complex systems with several AI agents,” the project authors say. The current results may be useful for economics, traffic systems, ecology and other areas of science and life.

Source: https://habr.com/ru/post/373155/
