Why the way to the future of robozahvatov lies through tactile intelligence

The best tactile capabilities, and not just sight, will allow robots to take any object.

The simplest task of raising something is not as simple as it seems at first glance. At least for a robot. The developers of robots seek to create a mechanism that can lift anything — but now most of the robots perform "blind grasp" and are designed to lift a given object from the same place. If something changes - the shape, texture, position of the object, the robot will not know how to react to it, and the attempt to pick up the object will fail.

Robots for a long time can not perfectly take any object on the first attempt. Why seizure is such a difficult problem? When people try to take something, they use a combination of feelings, of which the main are visual and tactile. But so far, most attempts to solve the problem of capture relied solely on vision.

')

This approach is unlikely to lead to results comparable to human capabilities, since, although vision plays an important role in capturing objects (for example, you need to aim at the desired object), vision cannot give you all the information about capture. This is how Steven Pinker describes the possibilities of touch. “Imagine that you are raising a bag of milk. If you take it too weakly, it will fall; too much - he remembers; and after shaking the bag, you can even estimate the amount of milk inside! ”he writes in the book How Consciousness Works [How the Mind Works]. The robot does not have such opportunities, therefore they are lagging behind people in the simplest tasks “to lift and shift”.

As the lead researcher for the tactile and mechatronic group at École de Technologie Supérieure's Control and Robotics (CoRo) laboratory in Montreal,

(Canada), I have long been tracking the most outstanding achievements in the method of captures. And I am convinced that the current concentration on computer vision will not provide an opportunity to create an ideal capture system. In addition to vision, tactile intelligence is also needed for the future of robotic captures.

Previous studies have focused on vision, not tactile intelligence.

So far, most research has focused on creating AI based on visual feedback. This can be done, for example, through a search for matches in the database — this method is used in the Brown Humans to Robots Lab in the Million Objects Challenge project. The idea is that the robot uses the camera to search for the desired object and tracks its movements while attempting to capture. In the process, the robot compares the visual information with a three-dimensional image from the base. When he finds a match, he can choose the right algorithm for the current situation.

Brown's approach is aimed at collecting visual data for different objects, but it is unlikely that robot developers will ever be able to create a visual base for all objects that a robot can meet. Moreover, the database search approach does not include the nuances of the current environment, and therefore does not allow the robot to adapt its capture strategy in different contexts.

Other researchers turned to machine learning technology to improve grips. These technologies allow robots to learn from experience, so that, in the end, the robot will select the best way to grab something on its own. Unlike the database approach, machine learning requires little initial data. Robots do not need access to the finished database - they only need to train a lot.

The magazine has already written how Google conducted an experiment with capture technologies, combining computer vision and machine learning. In the past, researchers tried to improve the capture system by teaching the robot to follow the human choice of a method for this. Google’s biggest breakthrough was that they showed how robots study on their own — using a deep convolutional neural network, a vision system and a lot of data (collected from 800,000 capture attempts) to improve based on past experience.

The results are extremely promising: since the reaction of the robots was not programmed in advance, all their success was due to training. But the ability to view the capture process has limitations, and Google may already have come to their borders.

Focusing solely on vision leads to problems.

There are three reasons why tasks pursued by Google and other teams are hard to solve by working exclusively with vision. First, vision has many technical limitations and even the best of these systems have difficulty recognizing objects in particularly difficult conditions (translucent objects, reflections, low color contrast) or objects that are too thin.

Secondly, in many tasks when capturing an object it is hard to see and the vision cannot provide all the necessary information. If the robot tries to pick up a piece of wood from the table, a simple system will see only its top and the robot will not know what it looks like from the other side. In the more complex task of extracting various objects from the basket, the target object can be closed, partially or completely, with surrounding objects.

And, finally, the most important reason is that the vision is not adapted to the essence of the problem: the seizure occurs through contact and the force of compression, which the eye does not track. At best, the vision can provide information about the most suitable combination of fingers, but the robot still needs tactile information to find out the physical parameters for a given grip.

How tactile intelligence can help

Touching plays a central role in the tasks of capturing and manipulating objects by man. People with amputated limbs are most annoying that they cannot feel what they touch with prostheses. Without touch, they have to keep a close eye on objects during grabs and manipulations, and the average person can pick up something without looking.

Researchers are aware of the important role of touch, and over the past 30 years, many attempts have been made to create a tactile sensor that recreates human feeling. But the signals sent by the sensor are complex, there are many of them, and adding sensors to a robotic arm does not mean an immediate improvement in capture capabilities. A way to convert raw low-level data into high-level information is required, leading to improved capture and manipulation. Tactile intelligence can give robots the ability to predict the success of capture through touch, the recognition of sliding and the definition of objects through their tactile properties.

In the ÉTS's CoRo Lab, my colleagues and I create the main parts of the system that will form the core of such intelligence. One of the latest developments is a machine learning algorithm that uses pressure maps to predict successful and unsuccessful capture attempts. The system was developed by Deen Cockburn and Jean-Philippe Roberge and tries to bring robots closer to human capabilities. People learn to recognize whether a given configuration of fingers will lead to a good hold, through touch. Then we adjust the configuration until we make sure the grip works. Before robots can learn to adapt quickly, they need to learn how to predict the outcome of a capture.

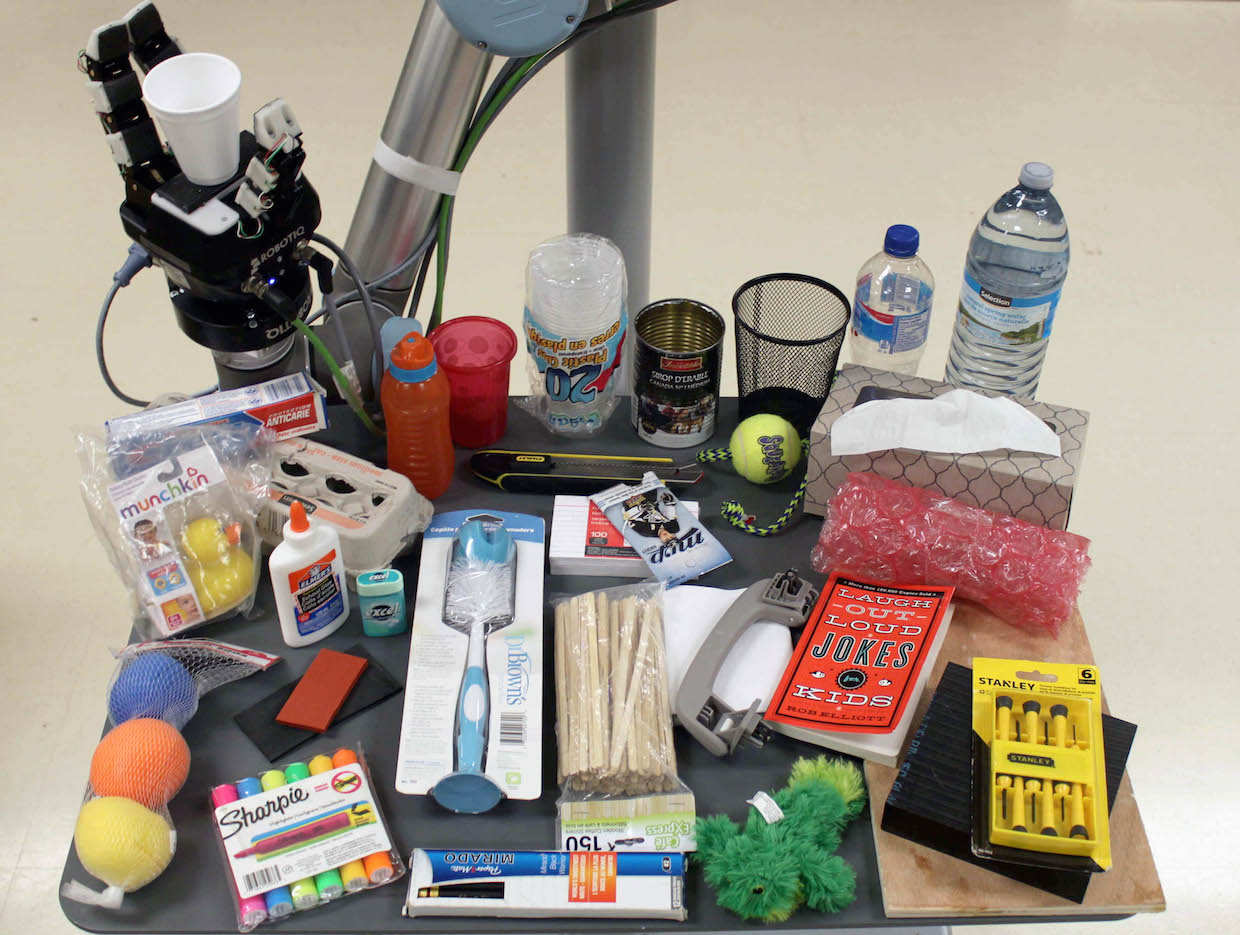

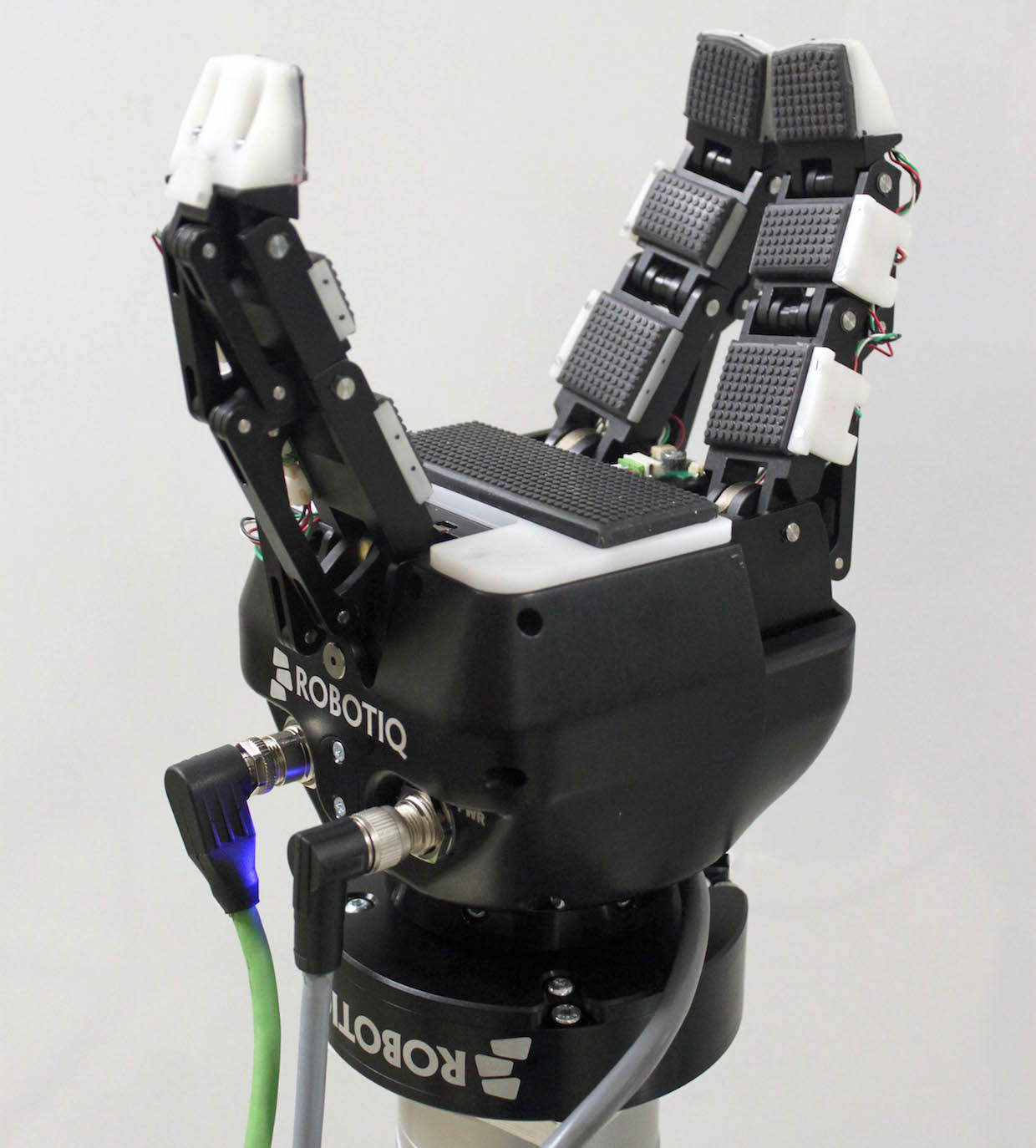

In my opinion, the CoRo Lab has achieved success in this. Combining the Robotiq arm with the Universal Robots UR10 manipulator and adding a few multimodal tactile sensors, we built a system that uses Kinect vision (which is only needed to find the geometric center of the object). As a result, the robot managed to pick up many different objects and use their differences for learning. As a result, we have created a system that correctly predicts that the capture is unsuccessful, in 83% of cases.

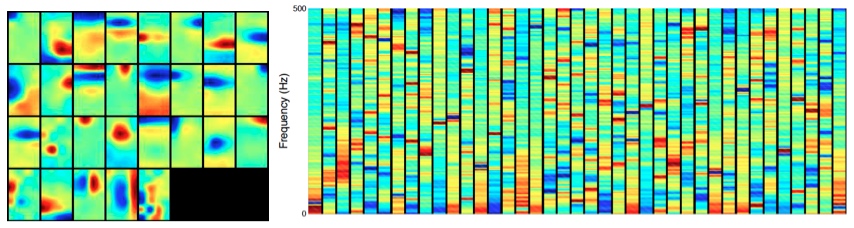

Another team at the CoRo Lab, under the direction of Jean-Philippe Roberge, concentrated on determining the slippage of objects. People can quickly determine when an object slips out of the grip, because there are fast-acting mechanoreceptors in the fingers. These are receptors in the skin that recognize rapid changes in pressure and vibration. Slip leads to vibrations on the surface of the hand, so the researchers used vibration maps (spectrograms) rather than pressure maps to train the algorithm. Using the same robot, the system was able to learn how to recognize vibration patterns corresponding to a sliding object and determined the slip in 92% of cases.

Learning to slip out of a robot can seem like a simple matter, since it’s just a collection of vibrations. But how to teach the robot the difference between the vibrations that occur during slipping and vibrations that occur when dragging an object over a surface (for example, a table)? Do not forget that the manipulator vibrates a little when moving. Three different events generate similar signals, but require different reactions. Machine learning helps to find the differences between these events.

The tactile sensor from CoRo Lab is able to provide data even about a drop of water

From the point of view of machine learning, the two laboratory teams have something in common: they do not use the manual tuning of the functions of the algorithm. The system itself determines which data will be significant for the slip classification (or prediction of the capture result) and does not rely on the researchers' best guess about the best indicator.

High-level functions in the past were always written out manually, that is, the researchers manually selected those parameters that were supposed to help distinguish the types of vibrations (or the difference between good and bad gripping). For example, they could decide that a pressure map showing that the robot had captured only the top of the object was a bad gripper. But it will be much more accurate to let the robot learn how to do this, especially since the guesses of researchers do not always correspond to reality.

"Sparse coding" [sparse coding], one of the techniques for building neural networks, is particularly well suited in this case. This is an unsupervised learning algorithm that creates a “sparse” dictionary to present new data. First, the dictionary is created using spectrograms (raw pressure maps) input to the algorithm. That gives out the dictionary consisting of representations of high-level functions. When new data is received after the next capture attempts, the dictionary is used to convert new data into ideas about data called “sparse vectors”. Finally, vectors are grouped by different sources of vibration (or by successful and unsuccessful captures).

Now CoRo Lab is testing ways to automatically update a sparse algorithm, such that each capture attempt helps the robot to make better predictions. The idea is that the robot eventually learns to use this information to correct its actions during the capture. This study is a great example of how tactile intelligence and machine vision work together.

Future tactile intelligence

The key point of the study is not that vision should be thrown out of consideration. Vision is still critical for seizing. But now, when computer vision has reached a certain level, it is better to focus on developing new aspects of tactile intelligence, rather than trying to push at the sight.

Roberge compares the potential of vision research with the study of tactile intelligence with the Pareto 80/20 rule: now that 80% of pattern recognition has already been achieved, it is very difficult to achieve the ideal for the remaining 20% and this will not add anything special to the manipulation task. And, on the contrary, researchers are still working on 80% of the task of tactile sensations of objects. Bringing to perfection the first 80% of the work on touch will be a fairly simple matter and it has the potential to make a huge contribution to the capabilities of robotic captures.

It may also be very far from the day when the robot can recognize any object through touch, not to mention getting out of your room - but when it comes, we will certainly thank the researchers in the field of tactile intelligence for this.

Source: https://habr.com/ru/post/372507/

All Articles