Nvidia has developed a robot that learns to perform tasks by watching a person

Baxter

Industrial robots usually all work on the same principle: they are told exactly what to do, and they run that predetermined algorithm until an operator loads a different command. As a rule, such machines are not allowed to work close to the fragile creatures that programmed them. But a team of Nvidia researchers in Seattle has found a solution to this problem and built the first robot that learns from a human's example. Inside, it has only a tangle of neural networks and a “dictionary” that lets it describe what is happening around it. You can read the scientific paper here, and yesterday Nvidia published a video on its YouTube channel with examples of the robot performing tasks.

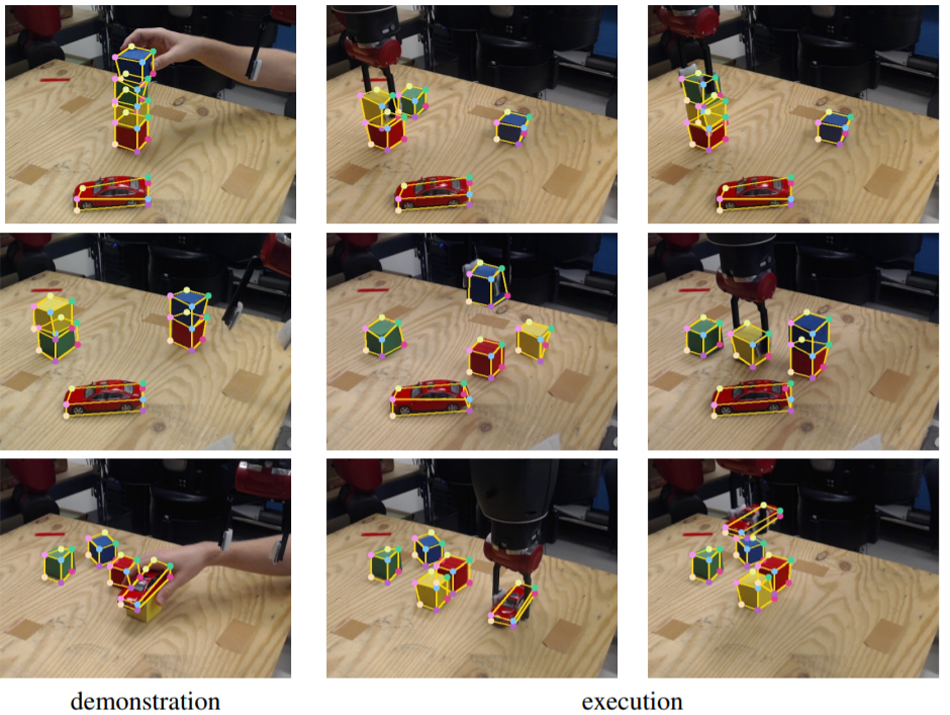

All of the robot's decisions are made by trained neural networks that work out their task from a demonstration. They can observe the environment, generate a program for themselves, and then execute it. The robot takes into account the relationships between objects (for example, it understands that if you pull out the bottom cube, the whole structure collapses), makes plans for itself (for example, “I want to carefully remove the bottom cube”), and then carries them out (to remove the bottom cube, it must first clear away all the cubes stacked on top of it). The neural networks are trained entirely in simulation; in the real world, the scientists only check how well they work by installing them in one robot or another. For the cube tasks, that was the industrial robot Baxter, since its hands can perform the same functions as human hands.
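The dependency described above (a lower cube can only be removed once everything resting on it has been cleared) can be illustrated with a small sketch. This is not Nvidia's code: the `supports` dictionary, the function names, and the wording of the steps are assumptions made for illustration, and the sketch assumes at most one cube rests directly on any other.

```python
def cubes_above(supports, target):
    """Return the cubes stacked on `target`, listed from bottom to top.

    `supports` maps each cube to whatever it rests on (hypothetical format).
    """
    above = []
    current = target
    while True:
        # Find the cube, if any, resting directly on `current`.
        nxt = next((c for c, base in supports.items() if base == current), None)
        if nxt is None:
            return above
        above.append(nxt)
        current = nxt


def plan_removal(supports, target):
    """Build a step list that clears the stack top-down, then removes `target`."""
    steps = [f"Move {c} to table" for c in reversed(cubes_above(supports, target))]
    steps.append(f"Remove {target}")
    return steps


# Toy scene: yellow sits on green, which sits on red, which sits on the table.
scene = {"red": "table", "green": "red", "yellow": "green"}
print(plan_removal(scene, "red"))
```

For this toy scene the plan moves yellow first, then green, and only then removes red, which is the ordering the paragraph describes.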

Dieter Fox, head of robotics engineering at Nvidia and a professor at the University of Washington, says the team wants to create the next generation of robots, ones that can work safely in very close proximity to humans. For that, such robots must be able to identify people, distinguish them from the surroundings, follow what they are doing, and understand when they need help. Fox expects to see such robots in small-scale manufacturing and in private homes, especially helping people with disabilities. They can adapt to new situations on the fly and can work without a dedicated operator trained to configure them.

A self-learning algorithm can easily be taught to play games simply by repeating the same segment many times and correcting for errors. But Fox says this kind of training is not suitable for a robot. A robot works in the real world, so its decision space is much wider, and the result of a mistake can be catastrophic. The team's task was therefore to train the neural network to follow a person's example accurately and, if something unexpectedly deviates from that program, to recognize that an error has occurred and try to correct it.
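The idea of following a plan, noticing a deviation, and trying to correct it can be sketched as a check-and-retry loop. Everything below is a toy assumption rather than the team's implementation: `FlakyWorld` stands in for the robot and its camera, and `execute_with_checks` and `max_retries` are hypothetical names.

```python
class FlakyWorld:
    """Toy stand-in for a robot and camera: the first try at each step fails."""

    def __init__(self):
        self.supports = {}   # cube -> what it currently rests on
        self.attempts = {}   # (cube, target) -> number of tries so far

    def act(self, cube, target):
        tries = self.attempts.get((cube, target), 0) + 1
        self.attempts[(cube, target)] = tries
        # Simulated slip: on the first try the cube lands on the table instead.
        self.supports[cube] = target if tries > 1 else "table"

    def observe(self):
        return dict(self.supports)


def execute_with_checks(plan, act, observe, max_retries=3):
    """Run each (cube, target) step, re-observing the scene after every action.

    Returns the total number of actions taken; raises if a step never succeeds.
    """
    total = 0
    for cube, target in plan:
        for _ in range(max_retries):
            act(cube, target)
            total += 1
            if observe().get(cube) == target:
                break  # the scene matches the plan, move on to the next step
        else:
            raise RuntimeError(f"could not place {cube} on {target}")
    return total


world = FlakyWorld()
total = execute_with_checks(
    [("yellow", "red"), ("green", "yellow")], world.act, world.observe
)
print(total, world.observe())
```

The design choice worth noting is that success is judged from a fresh observation after each action, not from the fact that the action was issued.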

Neural networks operating in Baxter


So far, the tasks assigned to Baxter are as simple as they come. Tell cubes apart by color and shape. Watch what a person does with them. Repeat all of those operations. A three-year-old child can do this. But for robotics, it is an important step toward a universal robot that can learn new “tricks” without the intervention of a team of programmers.

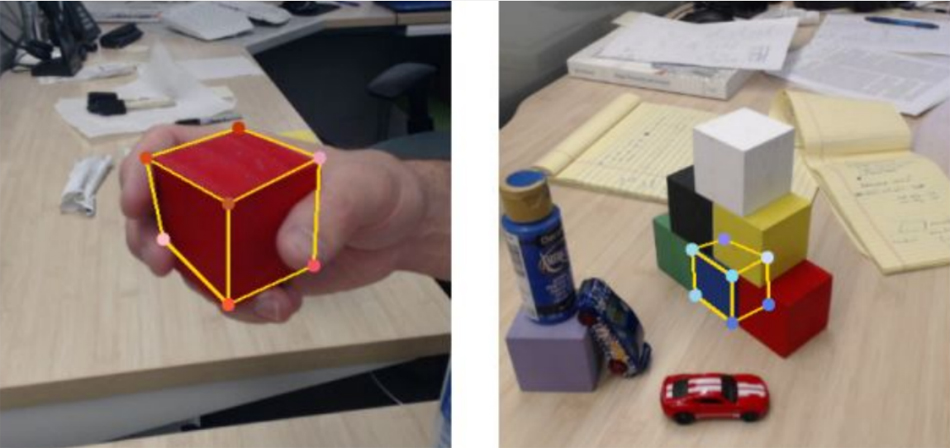

Even at this stage, not everything is as simple as it may seem. For example, the developers are very proud that the system could tell a red toy car from a red cube on its own, even though it had never been shown either in the real world before. Or take depth perception: the robot currently struggles with it, since its “eyes” are flat cameras, yet it understands perfectly well when a cube has not been placed on top of another one, and it works out for itself how to correct this so that everything ends up just as the person showed.

The group of scientists (which, by the way, included a Russian, Artem Molchanov) managed to make a single demonstration enough for the machine to repeat all the actions. Moreover, all the commands that the robot formulates for itself in its neural network brain are easy for humans to read: “Put yellow on red”, “Move green to blue”. Even someone who has never encountered such a robot can easily step in and “fix its brain” if necessary.
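To show why such a readable program is easy to inspect and edit, here is a minimal sketch that turns observed stacking relations into commands of the “Put yellow on red” kind and parses them back. The `(top, bottom)` pair format and both function names are assumptions for illustration, not the format used in the paper.

```python
def generate_program(relations):
    """Turn (top, bottom) pairs from a demonstration into readable steps.

    Lower cubes are placed first so every step has something to rest on.
    """
    below = dict(relations)  # top cube -> the cube it rests on

    def height(cube):
        # Count how many cubes lie beneath `cube` in its stack.
        h = 0
        while cube in below:
            cube = below[cube]
            h += 1
        return h

    ordered = sorted(relations, key=lambda r: (height(r[0]), r[0]))
    return [f"Put {top} on {bottom}" for top, bottom in ordered]


def parse_step(step):
    """Read a step such as 'Put yellow on red' back into a (top, bottom) pair."""
    verb, top, preposition, bottom = step.split()
    if verb != "Put" or preposition != "on":
        raise ValueError(f"unrecognized step: {step!r}")
    return top, bottom


program = generate_program({("green", "yellow"), ("yellow", "red")})
print(program)
print([parse_step(s) for s in program])
```

Because each step is plain text, a person could correct a wrong plan by editing one line, for example changing the bottom cube named in a step, and the parsed pairs would change accordingly.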

To train the neural networks, the team mainly used synthetic data from a simulated environment. Given how fast a robot arm moves, the networks would need years to learn in the real world, not to mention the risk of breaking the machine. Stan Birchfield, who led the project, says that building simulations close to the real world, in which algorithms are free to learn from their mistakes, is the only way to make robot self-learning fast enough. That is why Nvidia is pursuing this work: the company believes its hardware is ideal for such tasks. The visual side is an important component of training. Machines need to understand what a person looks like and how the objects they work with differ from one another. Nvidia's experience in building hardware and software for graphics, according to Birchfield, is indispensable here.

The team is now building more photorealistic simulations, so that the neural networks transfer to the real world more easily, and is expanding the range of tasks they can learn.

Nvidia robot at work

P.S. When you buy Nvidia cards on Newegg and Amazon, the savings start at 30%. Pochtoy.com delivers them from the USA to Russia. Register with us using the code Geektimes and get $7 credited to your account.

Source: https://habr.com/ru/post/371531/
