Four fairly simple but smart tools for scoring your movie masterpiece.

AI-assisted creativity now extends to music-writing programs that anyone can use.

In the good old days, you could spend a day shooting your own home-made video, score it with clips from your favorite songs, and upload it to the Internet to share with everyone without any problems. But once automated copyright-detection systems began scanning new uploads, people gradually grew more concerned about properly licensing songs, and now you can't use just any music in publicly available videos.

So what is a beginner (or, at best, amateur) musician to do? I played in a marching band in college, so I understand rhythm, phrasing, and tempo, but my ability to create music stalled somewhere around the high school level.

Fortunately, those of us who make films and podcasts and struggle with music now have robots on our side. Several good-quality AI projects have appeared recently (one of the most notable is perhaps Sony's Flow Machines, whose debut album was released in January), and slowly but surely these tools are moving out of research labs and professional studios and into public hands.

So when I recently found myself alone for a weekend, with only my dog and an evening craving for tacos for company, I decided it was time to get back into making short films. My documentary about Ernie, my Shih Tzu, is scored entirely with smart music-writing tools that anyone can access right now.


Chrome Song Maker

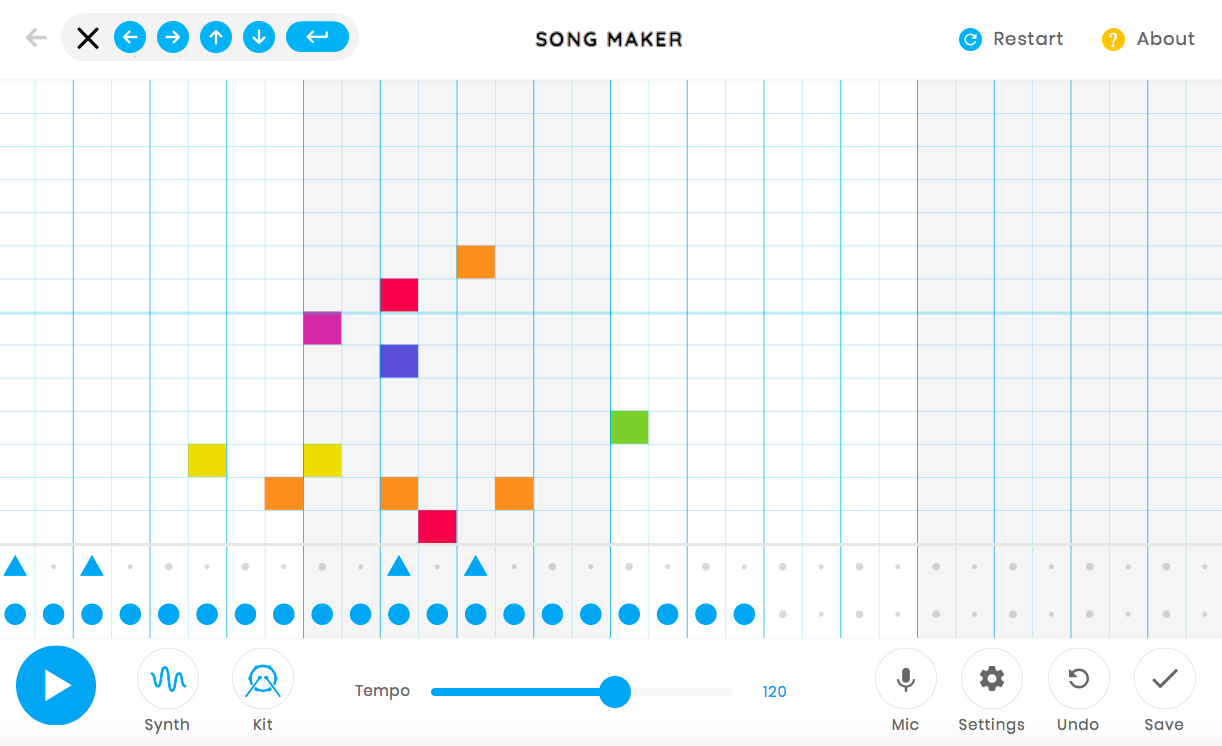

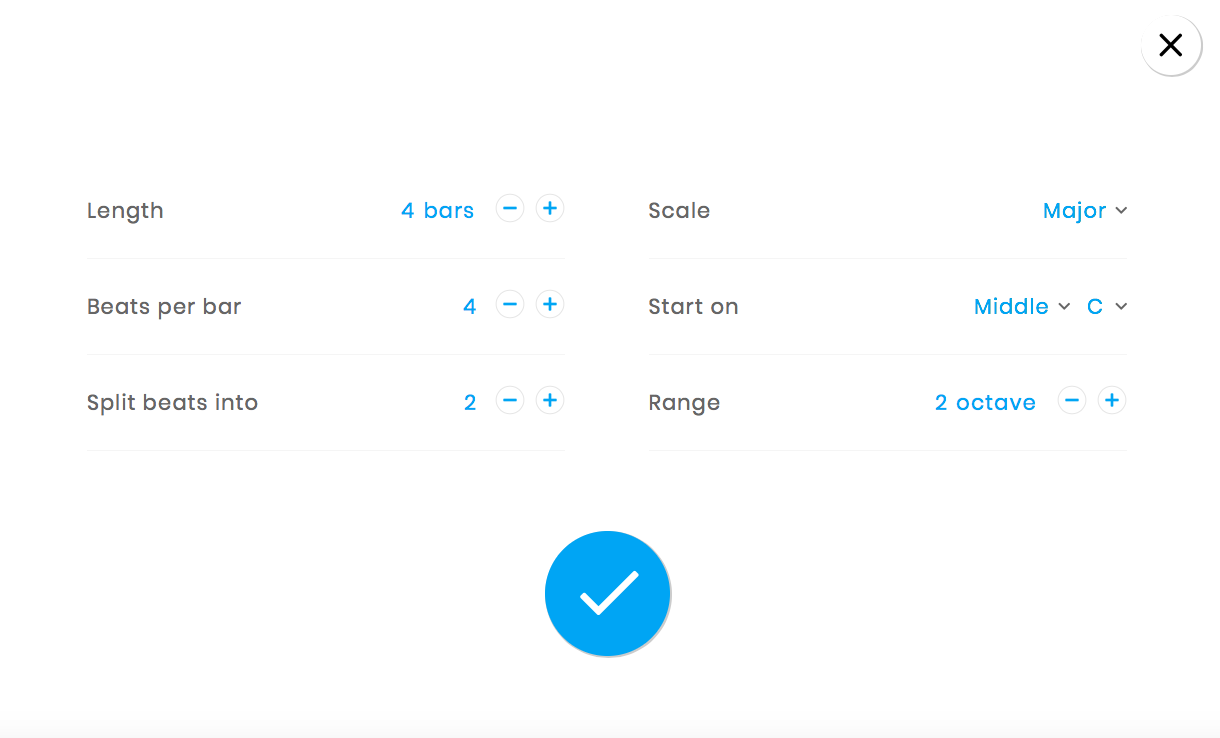

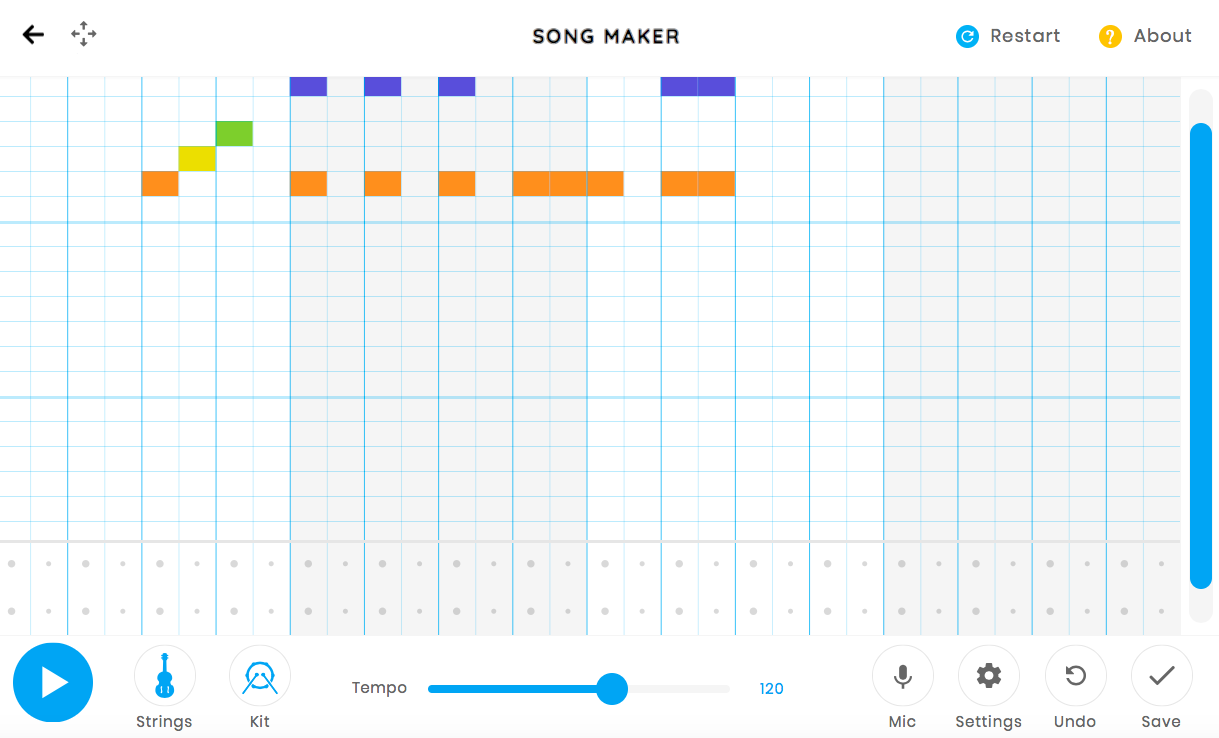

Song Maker was released on March 1 and is the newest tool for writing melodies. Technically, it is not an AI composer. It is a tool that simplifies composition to the point where anyone can do it, whether or not they know about things like key or rhythm. It greets users with a grid showing bars on the x axis and pitch on the y axis, and filling in any square produces a sound. The choice of instruments is limited to five melodic instruments (piano, synthesizer, strings, marimba, woodwind) and four percussion sets (electronic, xylophone, drum kit, and conga). You can enter notes with the mouse, a MIDI keyboard, or your computer keyboard, or even by singing into the microphone.

Song Maker is part of Google's browser-based Chrome Music Lab, which helps you learn basic musical principles. The Music Lab already offers half a dozen instruments covering a variety of topics, from oscillators and rhythm to arpeggios. And although they are aimed roughly at the elementary school level, Song Maker has enough settings for any beginning composer to work productively. You can loop up to 16 bars, change the time signature to something exotic like 5/4 or 12/8, split beats into triplets or sixteenths, choose the scale and starting note, and use up to three octaves.
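To make the grid idea concrete, here is a minimal sketch of how a Song Maker-style grid could be turned into timed notes. It is not Google's code: the names `grid_to_events`, `step_seconds`, `row_to_pitch`, and `grid_columns`, the use of MIDI note numbers, and the major-scale mapping are all assumptions for illustration. Columns are time steps (bars × beats × subdivisions) and rows are scale degrees stacked over octaves.

```python
from dataclasses import dataclass

# Hypothetical model of a Song Maker-style grid (NOT Google's implementation):
# columns are time steps, rows are pitches in a chosen scale.

MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of a major scale


@dataclass
class NoteEvent:
    start_sec: float  # when the note starts, in seconds
    midi_pitch: int   # MIDI note number (60 = middle C)


def grid_columns(bars: int, beats_per_bar: int, steps_per_beat: int) -> int:
    """Number of grid columns, e.g. 16 bars of 5/4 split into sixteenths."""
    return bars * beats_per_bar * steps_per_beat


def step_seconds(bpm: float, steps_per_beat: int) -> float:
    """Length of one column: a beat lasts 60/bpm seconds, split into equal steps."""
    return 60.0 / bpm / steps_per_beat


def row_to_pitch(row: int, root: int = 60, scale=MAJOR_SCALE) -> int:
    """Map a grid row (0 = bottom) to a MIDI pitch; rows wrap into higher octaves."""
    octave, degree = divmod(row, len(scale))
    return root + 12 * octave + scale[degree]


def grid_to_events(grid, bpm: float, steps_per_beat: int, root: int = 60):
    """grid[row][col] is True wherever a square has been filled in."""
    dt = step_seconds(bpm, steps_per_beat)
    events = []
    for row, cells in enumerate(grid):
        for col, filled in enumerate(cells):
            if filled:
                events.append(NoteEvent(col * dt, row_to_pitch(row, root)))
    # Sort by time, then pitch, so the events read like a score.
    return sorted(events, key=lambda e: (e.start_sec, e.midi_pitch))
```

For example, at 120 BPM with beats split in two, each column lasts a quarter of a second, and a three-row grid starting on middle C climbs C, D, E. With seven rows per octave, three octaves correspond to 21 rows.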

For my purposes, the catch is that Song Maker lets you pick only one melodic instrument and one percussion instrument per song. I needed something juicier, something in the spy-thriller vein, for the moment when the dog reveals his work. Ideally, I wanted to combine the basic rhythm I had created with high-octave strings. But Song Maker doesn't let you layer tracks on top of each other to build more elaborate compositions, even if you use the same number of bars and the same tempo. That requires a simple audio editor and a way to record the sound coming out of the browser. Song Maker's limitations cut both ways: it is simple enough that it's hard to make something unlistenable, but songs have a complexity ceiling.

Google Magenta tools

Google wouldn't be Google without several projects aimed at reinventing the music-making process. Compared with Chrome Music Lab, the Magenta project takes a more direct approach.

The project was first presented in 2016 at Moogfest (an increasingly popular conference for technology lovers in the music industry). Magenta aims to take advantage of AI and machine learning to give everyone the ability to create music.

“The goal of Magenta is not just to develop new music-generating algorithms, but to ‘close the creative loop,’” the team wrote when announcing the NSynth tool. “We want to give creators tools built with machine learning that also inspire future research directions. Instead of replacing human creativity with AI, we want to infuse our tools with deeper understanding so that they are more intuitive and inspiring.”

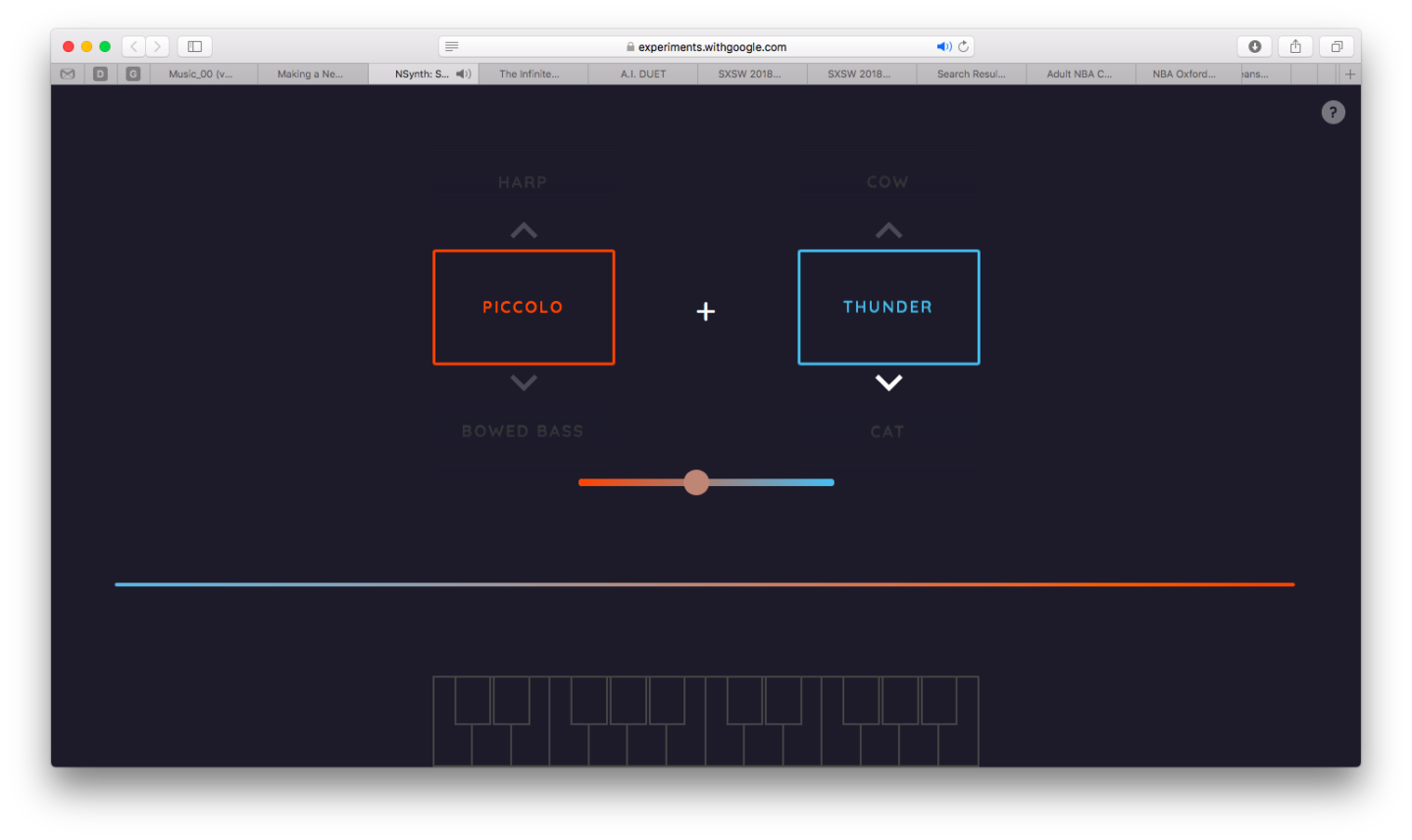

NSynth, which lets you blend two instruments into a new sound, is just beyond my abilities. The same goes for the other tools available to anyone who wants to dig deeper into Magenta's work: the team releases all of its tools and models for free on GitHub. For my purposes, I chose two ready-to-use tools from the project: Infinite Drum and AI Duet.

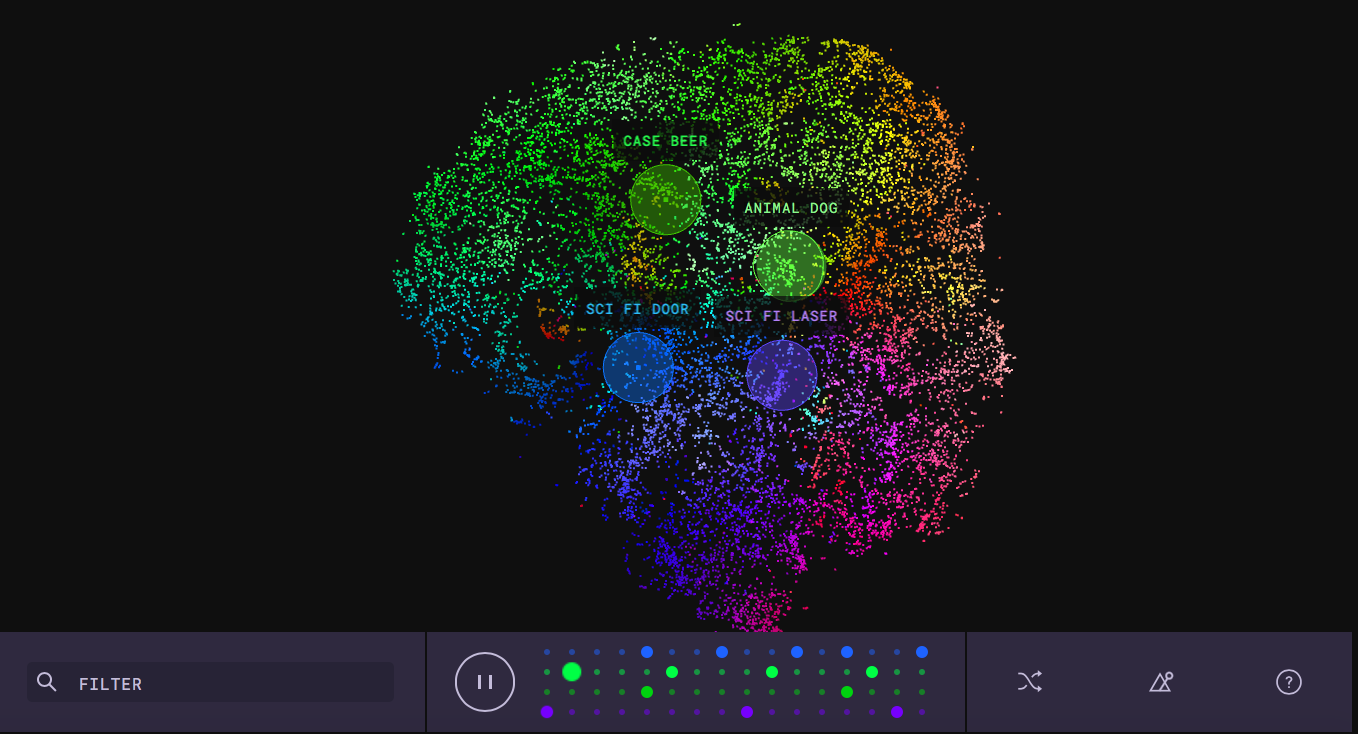

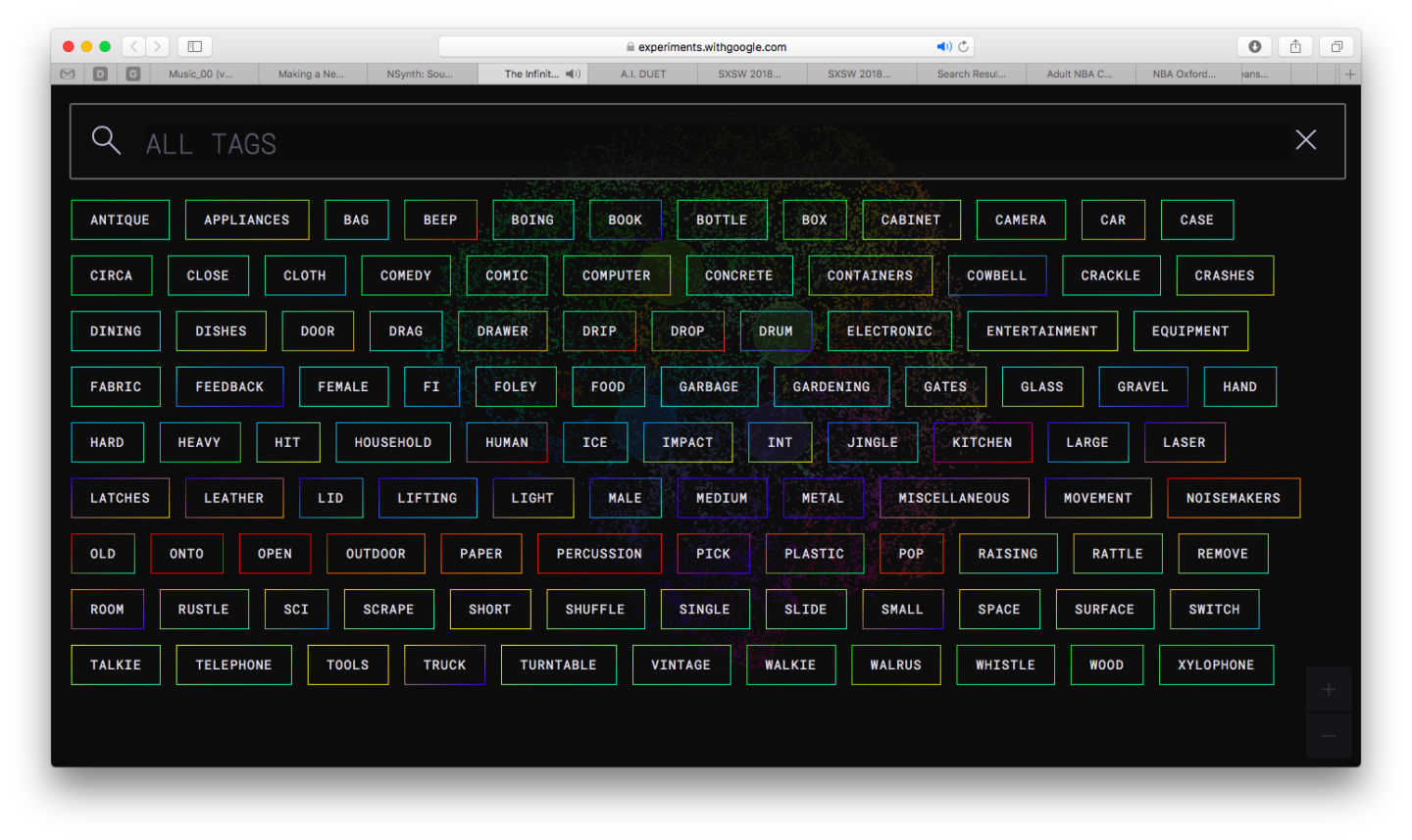

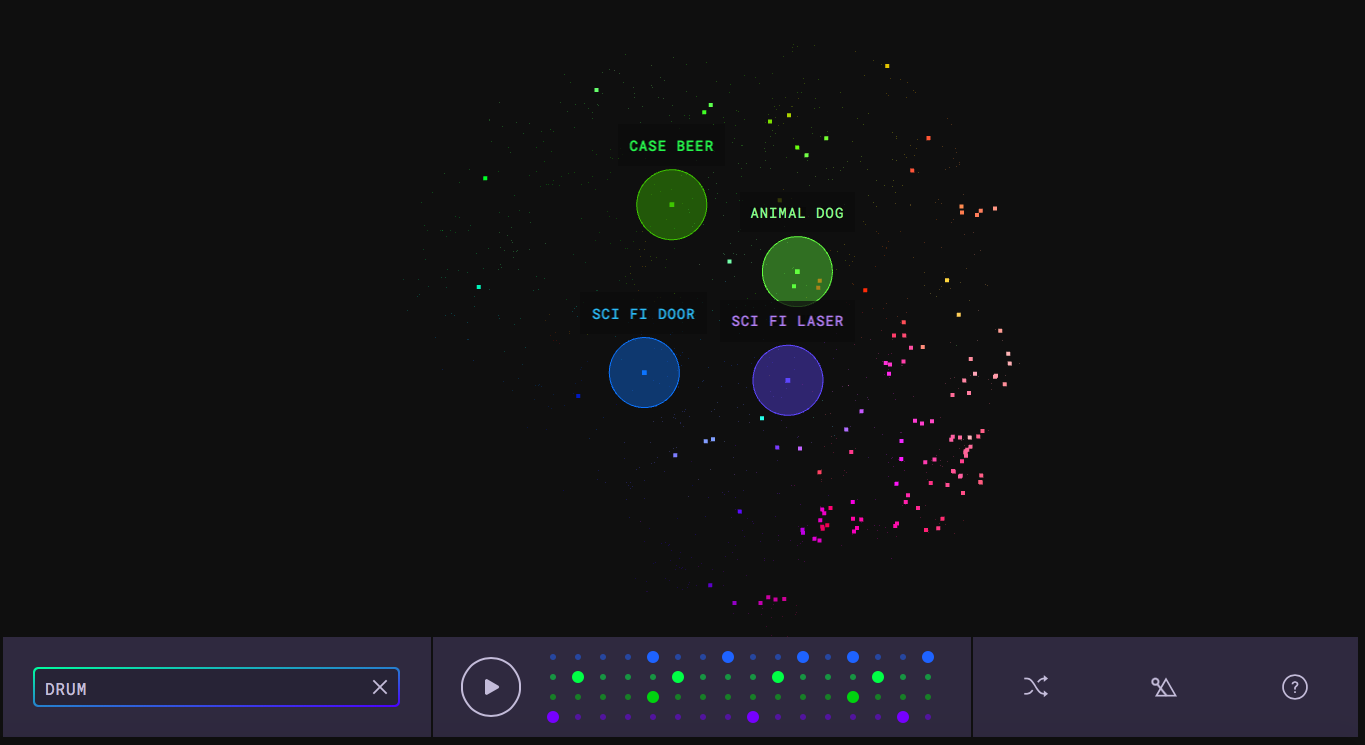

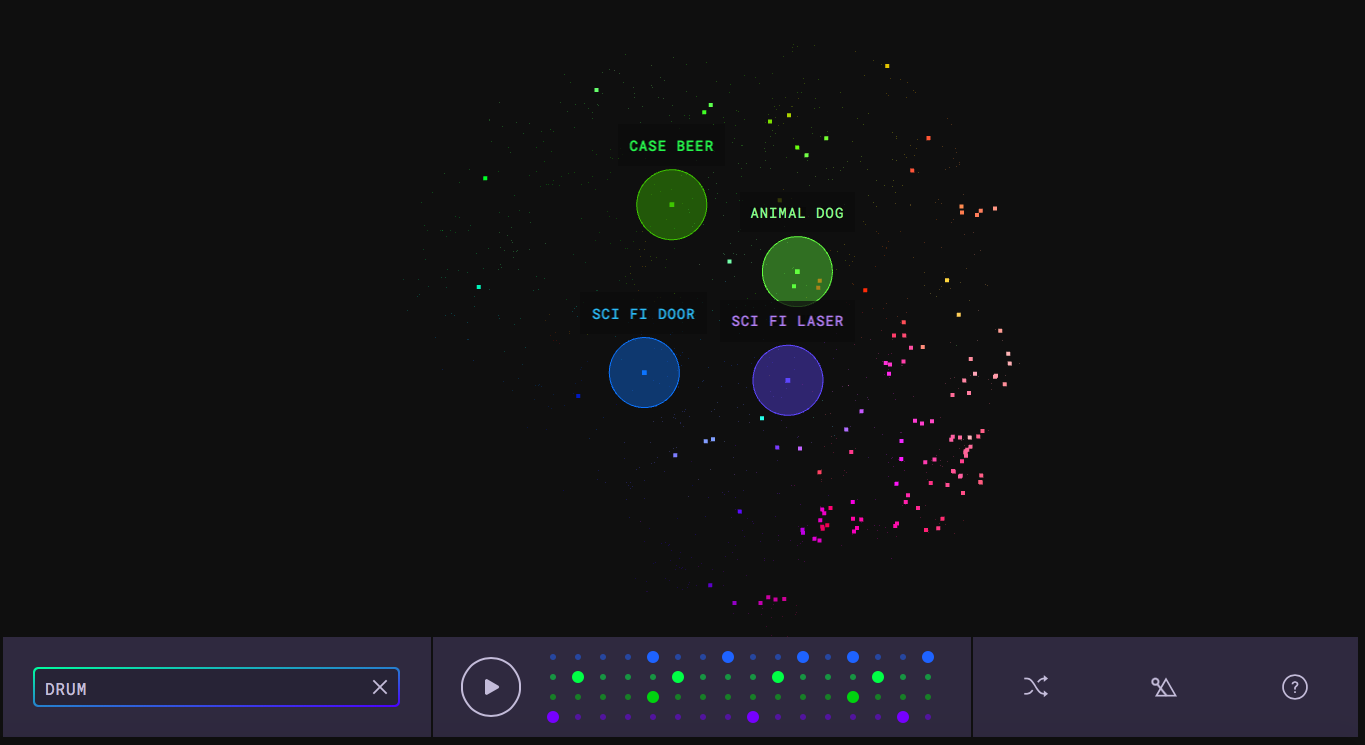

Infinite Drum is essentially a drum machine built from everyday sounds and organized with machine learning. “No descriptions or tags were given to the computer, only sounds,” its description on GitHub says. “Using a technique called t-SNE, the computer places similar sounds closer together. You can use the map to explore neighborhoods of similar sounds and even make beats using the sequencer.”
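The core intuition behind such a map, that similar sounds sit close together in some feature space, can be illustrated with a plain nearest-neighbor lookup. This sketch does not implement t-SNE (an iterative optimization that projects high-dimensional features down to a 2-D map); it only shows "similar means close" using Euclidean distance. The sound names and three-number feature vectors are made up for illustration; a real system would extract features from the audio itself.

```python
import math

# Hypothetical, made-up feature vectors for a handful of everyday sounds.
# A real tool would compute features from the audio; these are placeholders.
SOUNDS = {
    "door_slam": (0.9, 0.2, 0.1),
    "book_drop": (0.8, 0.3, 0.1),
    "glass_clink": (0.1, 0.9, 0.7),
    "spoon_tap": (0.2, 0.8, 0.6),
    "paper_rip": (0.4, 0.4, 0.9),
}


def neighbors(name: str, sounds=SOUNDS, k: int = 2) -> list:
    """Return the k sounds closest to `name` by Euclidean distance in feature space."""
    target = sounds[name]
    ranked = sorted(
        (math.dist(target, vec), other)
        for other, vec in sounds.items()
        if other != name
    )
    return [other for _, other in ranked[:k]]
```

Asking for the neighbors of `"door_slam"` returns `["book_drop", "paper_rip"]`: the other thuds rank ahead of the bright, clinking sounds, which is the kind of neighborhood the Infinite Drum map lets you browse visually.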

From the user's point of view, the tool is extremely simple. You scroll through the list looking for a category of sound and pick four sounds to combine. You can randomize the mix or change the tempo, but on the whole you just press “play” and get a looping beat.

AI Duet, by contrast, can help you create the perfect melody. You press some keys, whatever your piano skills, and the tool uses machine learning to play a response, trying to build a melody. The AI was trained on a large collection of songs and uses that to respond to your input, taking its key and rhythm into account. As the tool's developer, Yotam Mann, puts it, “It's even fun to just press keys randomly. The neural network tries to produce something coherent from any input.”

Like Chrome Music Lab, these programs are individual instruments rather than full creative platforms. So to produce a finished piece, I needed a separate audio editing program.

Amper AI

If these tools seem too complicated, don't worry. While Google promotes Magenta and Sony puts its AI-driven Flow Machines into the hands of working musicians, there is a real, usable music-making project for everyone else: Amper AI.

It is not the first AI music composition project to become available to everyone (the British team behind Jukedeck, for example, has been at it since 2015), but it offers the best combination of simplicity, configurability, and output quality. Last year, Amper made headlines when singer Taryn Southern released a single made with it that was almost indistinguishable from songs in the Top 40. Now Southern is about to release an entire album recorded with AI, titled "I Am AI". Tools including Amper, IBM Watson, Aiva, and Google Magenta were used to create its tracks.

“Our goal is to make music as good as John Williams, sound like it was recorded at Abbey Road Studios, and be produced like Quincy Jones,” Amper CEO Drew Silverstein told me in December. “We are striving for those musical standards, but of course we haven't reached them yet. Much still needs to be done on the musical side of the project before Amper becomes indistinguishable from human-made music, and at that point it will be the most valuable tool for creative people.”

The company was founded by musicians, not programmers, which is why Silverstein and his colleagues see their AI as a tool rather than a replacement (and why they collaborated with Southern instead of simply promoting the single as a bot's work). They trained their AI on the work of real composers, much as AlphaGo learned from real games. The result is a tool that, even in a simplified beta version, offers many settings and produces nearly polished, usable tracks.

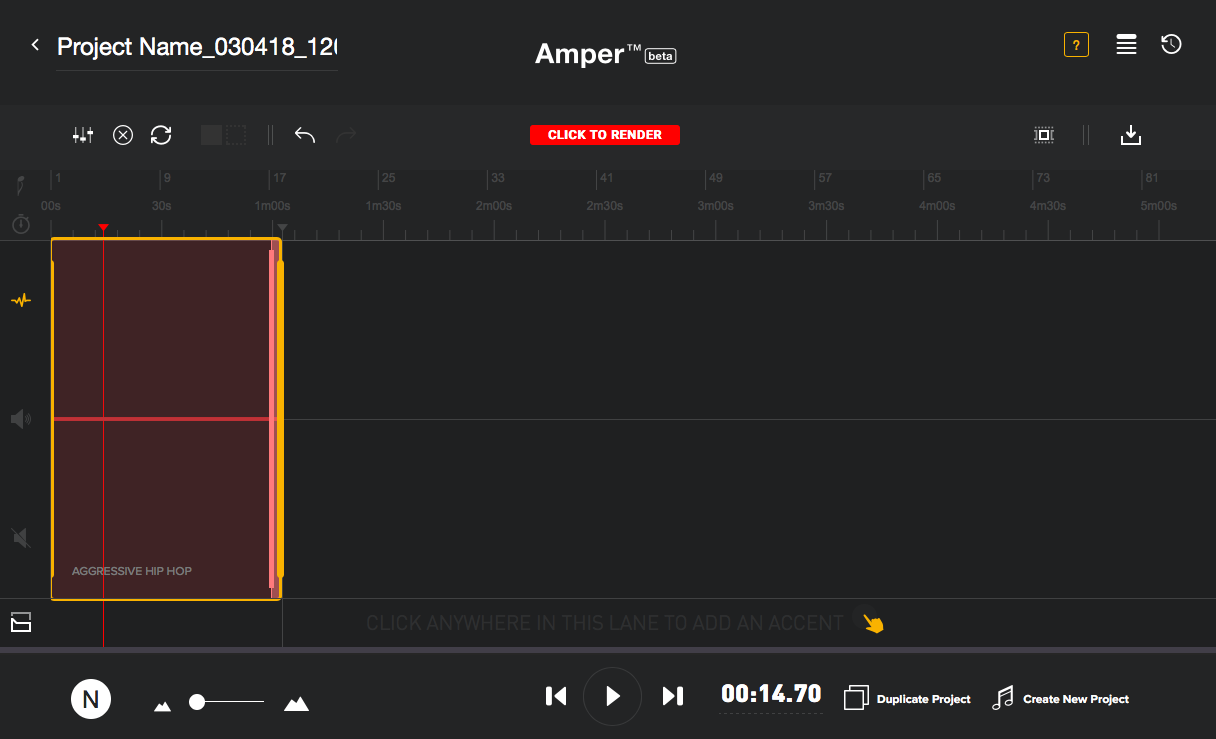

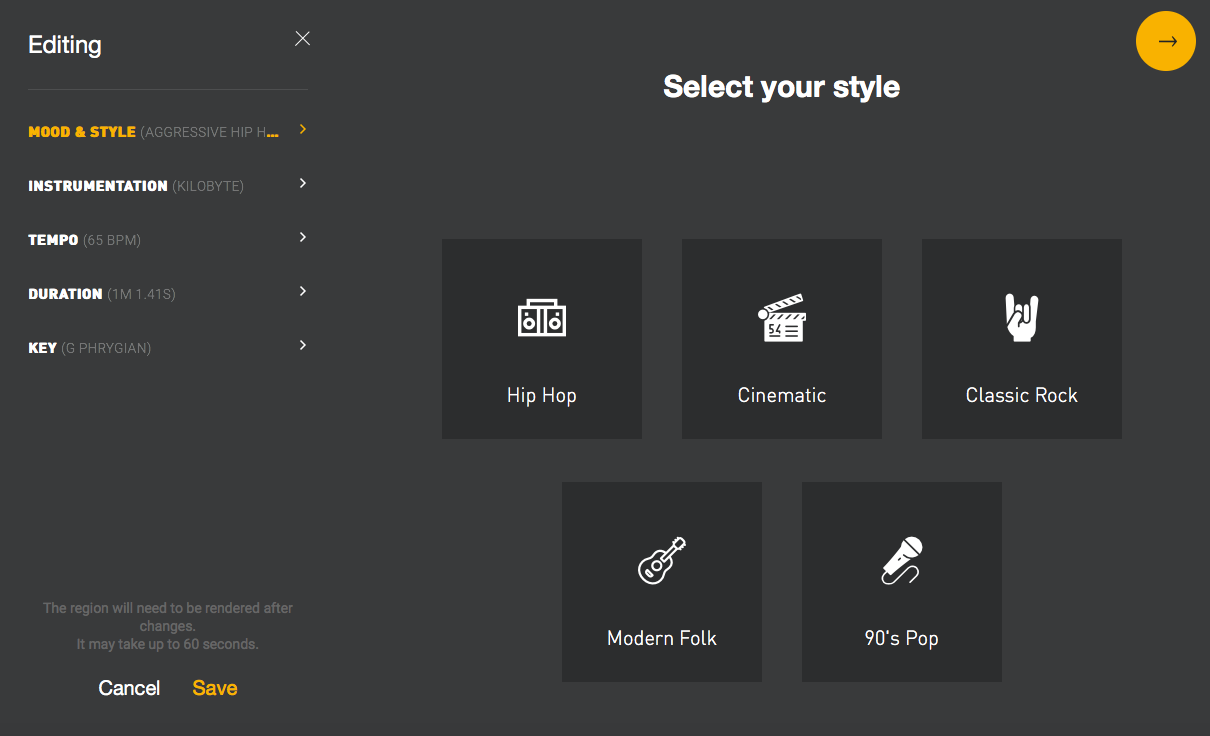

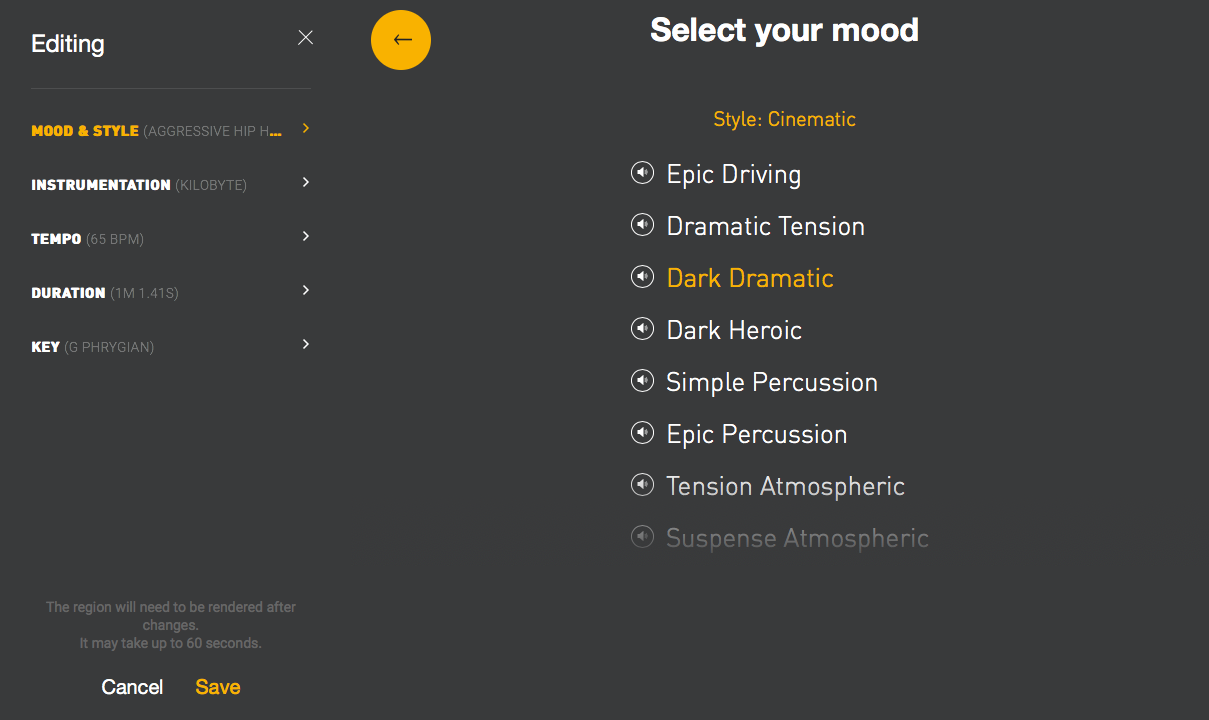

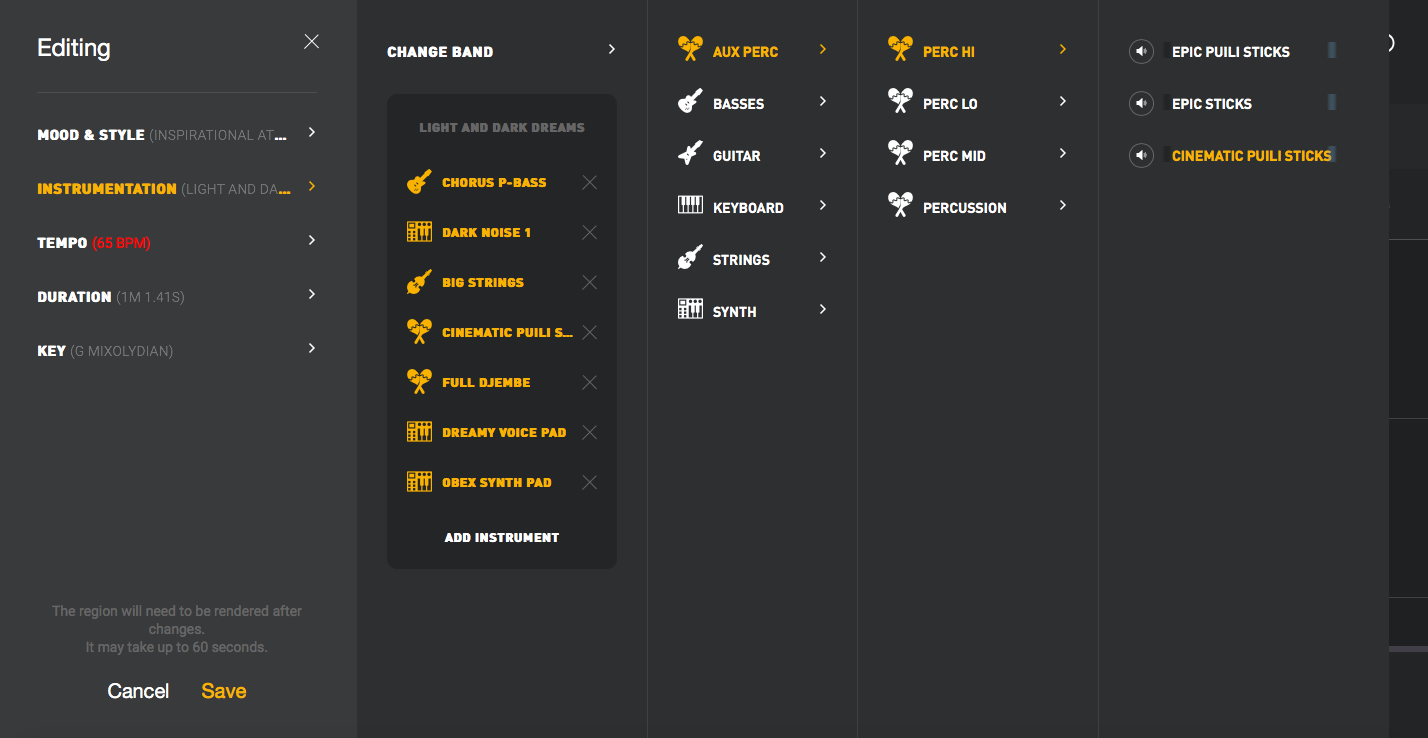

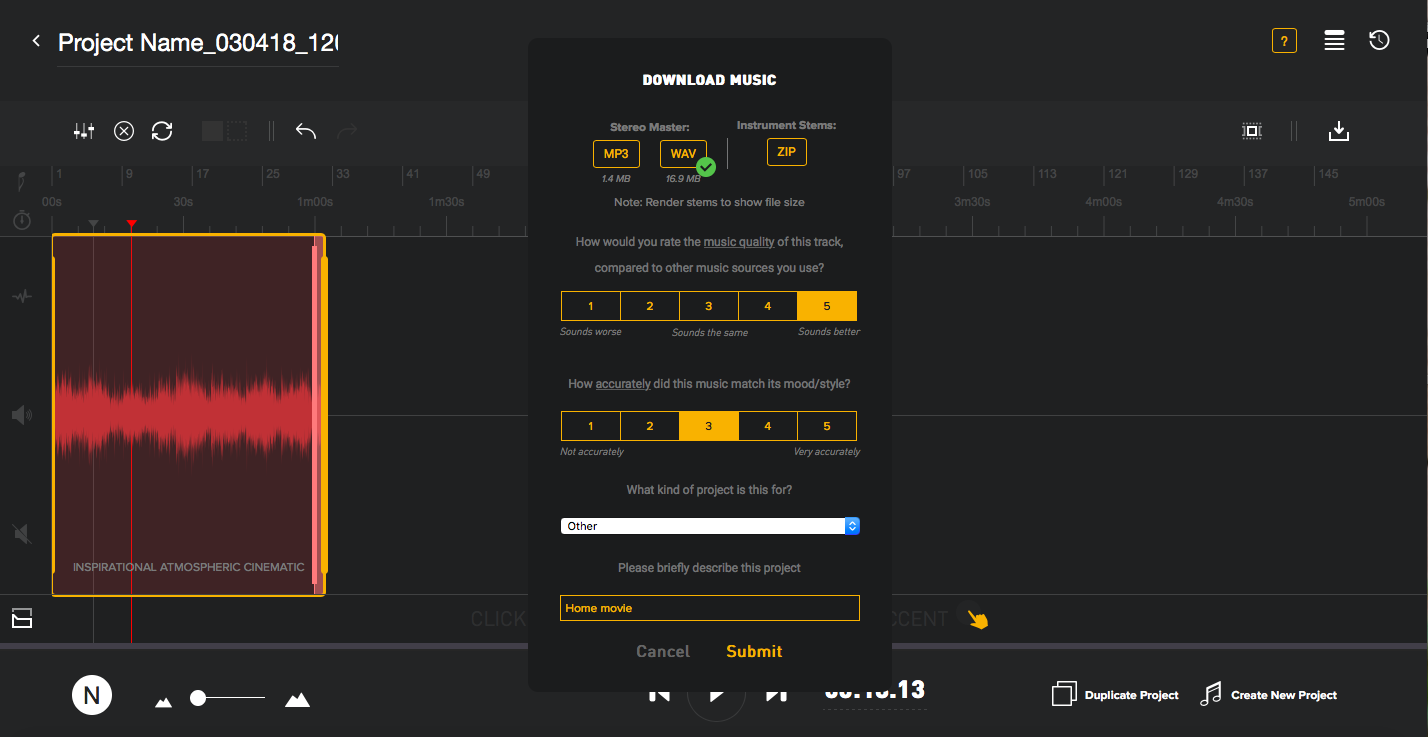

Amper now offers two interfaces: Simple and Pro. Simple lets you choose from preset styles and set the length of the track. Pro starts from similar style-based parameters, but then lets you dig deeper and change seemingly everything: the number of instruments, the types of instruments, the key, the timing, and so on.

Around the 30-second mark in my video, you can hear how Amper's Simple mode handled the “modern folk” style I chose. At around 2:00 there is an inspirational piece created in Amper Pro. I had imagined brass in the vein of “Gonna Fly Now,” but current AI composers cannot produce realistic brass. Even so, the result wasn't bad.

Will I win an Oscar for best original score made with AI tools anytime soon? Probably not. But even at this early stage, these tech toys work for non-professional composers. They are simple enough that I don't have to spend a whole day on a 30-second melody, and I don't need to understand any music theory at all. And they are good enough that I'm not upset about being unable to use Vagabon's music in my film about Ernie. Real musicians will probably always get the first call, even with AI composers around, but things are only going to get better for people who make creative work on weekends.

Source: https://habr.com/ru/post/371529/
