Machine behavior must become a scientific discipline.

Why should the study of AI be limited to the people who create it?

What if human behavior at every scale, from the functioning of the human body to the emergence of social norms, from the workings of financial markets to the creation, distribution and consumption of culture, were studied only by physiologists? What if only neuroscientists studied criminal behavior, developed educational standards and devised rules to combat tax evasion?

Yet despite their growing influence on our lives, this is exactly how we study machines with artificial intelligence: the work is confined to a very narrow circle of people. Those who study AI behavior are almost always AI scientists themselves, that is, specialists in computer science and robotics.

Computer science and robotics specialists who build AI to solve particular problems (no easy task in itself) usually focus on making sure their creations perform their intended function. To do this, they use various benchmark datasets and tasks that make it possible to compare different algorithms objectively and consistently. For example, email classifiers are checked for how accurately they sort mail into spam and non-spam against a "ground truth" defined by people. Computer vision algorithms must correctly recognize objects in human-labeled image collections such as ImageNet. Self-driving cars must successfully get from point A to point B in various weather conditions. Game-playing AIs must defeat the best available algorithms, or people with an established reputation, for example world champions at Go or poker.
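To make this kind of task-oriented evaluation concrete, here is a minimal sketch. It assumes a tiny, hypothetical human-labeled reference set and two hypothetical spam classifiers (`keyword_classifier` and `naive_classifier` are illustrative names, not real systems); real benchmarks are vastly larger.

```python
# A minimal sketch of task-oriented evaluation: two hypothetical spam
# classifiers are scored against the same human-labeled reference set.

# Hypothetical "ground truth": (email text, label assigned by a person).
REFERENCE = [
    ("win a free prize now", "spam"),
    ("meeting moved to 3pm", "ham"),
    ("cheap pills, free shipping", "spam"),
    ("lunch tomorrow?", "ham"),
]


def keyword_classifier(text: str) -> str:
    """Hypothetical classifier: flags any email containing the word 'free'."""
    return "spam" if "free" in text else "ham"


def naive_classifier(text: str) -> str:
    """Hypothetical baseline: labels every email as non-spam ('ham')."""
    return "ham"


def accuracy(classifier, dataset) -> float:
    """Fraction of emails whose predicted label matches the human label."""
    correct = sum(1 for text, label in dataset if classifier(text) == label)
    return correct / len(dataset)


if __name__ == "__main__":
    for clf in (keyword_classifier, naive_classifier):
        print(f"{clf.__name__}: {accuracy(clf, REFERENCE):.2f}")
```

Running it prints `keyword_classifier: 1.00` and `naive_classifier: 0.50`. Because both programs are scored against the same human labels, their results are directly comparable; that shared yardstick is what makes such evaluation useful, and also what confines it to the task at hand.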

This task-oriented study of AI behavior, though narrow, is very useful for progress in AI and robotics. It makes it possible to compare algorithms quickly on objective, generally accepted criteria. But is it enough for society?

A representative of the tribe of artificial intelligence (AI)

No, it is not enough. The behavior of an intelligent agent (human or artificial) must also be studied at other levels of abstraction in order to identify problems correctly and devise solutions. That is why we have so many disciplines that study human behavior at different scales. From physiology to sociology, from psychology to political science, from game theory to macroeconomics, we gain complementary perspectives on the individual and collective behavior of people.

In his landmark 1969 book, The Sciences of the Artificial, Nobel laureate Herbert Simon wrote: "Natural science is knowledge about natural objects and phenomena. We ask whether there cannot also be an 'artificial' science: knowledge about artificial objects and phenomena." In the same spirit, we argue for the need to create a new, separate scientific discipline of "machine behavior": the scientific study of the behavior exhibited by intelligent machines.

This new discipline studies machines not as engineering artifacts but as a new class of actors, with their own distinctive patterns of behavior and their own ecology. Importantly, the field overlaps with, but differs from, computer science and robotics: it approaches machine behavior observationally and experimentally, without necessarily considering the machine's internal mechanisms. This is similar to the way ethology (the study of animal behavior) and behavioral ecology study animals without focusing on their physiology or biochemistry.

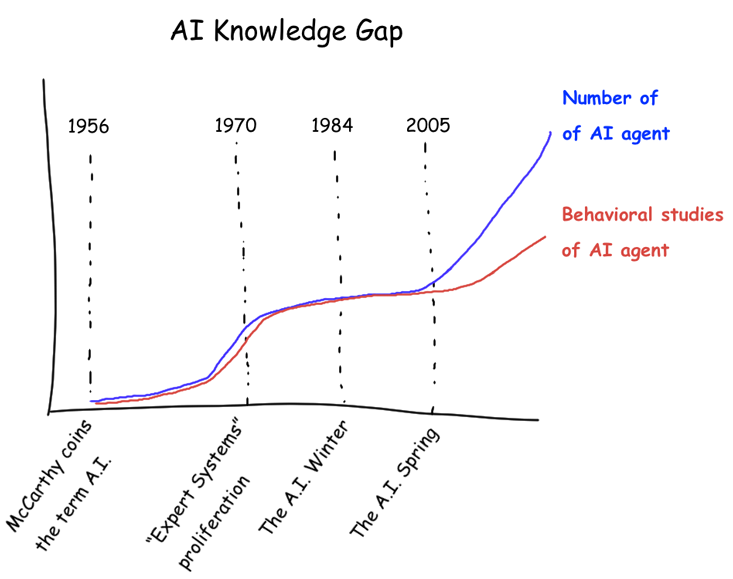

The knowledge gap: the estimated discrepancy between the number of AI systems and the number of studies describing their behavior

Our definition of the new field of "machine behavior" needs some clarification. First, studying machine behavior does not imply that machines have a will of their own, that is, that they bear social responsibility for their actions. If someone's dog bites a passerby, its owner is held responsible. Nevertheless, it is useful to study, and to predict effectively, how dogs behave. In the same way, machines are woven into a larger socio-technical fabric that includes the people responsible for operating them and for any harm they may cause to others.

Second, machines exhibit behavior that is fundamentally different from that of animals and people, so we must resist the temptation to anthropomorphize or zoomorphize them. Even if methods borrowed from the study of human and animal behavior prove useful for studying machines, machines may display qualitatively different forms of intelligence and behavior that are entirely alien to us.

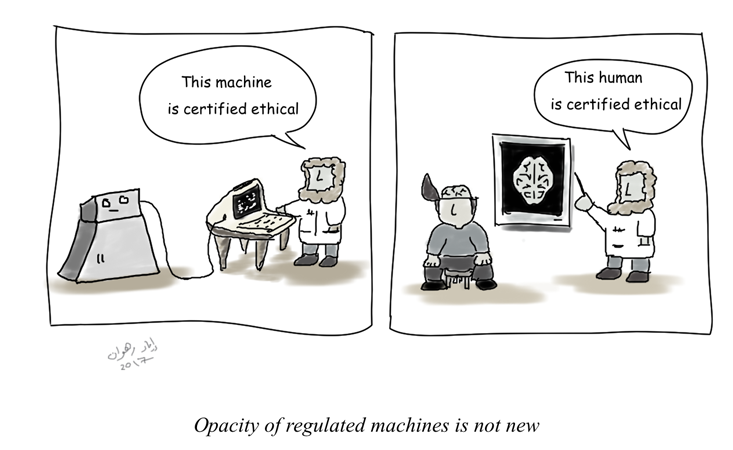

With these caveats in mind, we argue that a separate discipline devoted to the study of machine behavior would be both novel and useful. There are many reasons why machines cannot be studied simply by examining their source code or internal structure. The first is opacity due to complexity: many AI architectures, such as deep neural networks, have internal states that cannot easily be interpreted. Often we cannot certify that an AI is optimal or ethically sound by studying its source code, just as we cannot certify a person's character by scanning their brain. Moreover, what we consider ethical and socially acceptable today may gradually change over time. Social scientists can track these shifts, but the AI community may not, because it measures its progress differently.

- This machine is definitely ethical.

- This person is definitely ethical.

A second reason to study machine behavior without regard to internal structure is opacity caused by the protection of intellectual property. Many algorithms in everyday use, such as those that filter news or recommend purchases, are black boxes because they are guarded as trade secrets. This means that the mechanisms driving these algorithms are not transparent to anyone outside the corporations that own and control them.

Third, complex AI systems are often inherently unpredictable: they exhibit emergent behavior that no one, not even their programmers, can predict precisely. Such behavior manifests itself only through interaction with the world and with other actors. Algorithmic trading programs, which can display unprecedented behavior through the complex dynamics of markets, are a clear example. Alan Turing and Alonzo Church showed that, in general, it is impossible to establish whether an algorithm has certain properties without actually running it. There are fundamental theoretical limits to our ability to verify that a given piece of code will always satisfy the required properties, short of running it and observing how it behaves.
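A familiar illustration of this limit (our own example, not the Turing and Church proof itself) is the Collatz rule: halve an even number, otherwise multiply by 3 and add 1, and repeat until reaching 1. Whether this loop ends for every positive integer is the unproven Collatz conjecture, so for a given input the dependable way to learn how long it runs is to run it. The `max_steps` cap is an assumption added only so that the sketch is guaranteed to terminate.

```python
from typing import Optional


def collatz_steps(n: int, max_steps: int = 10_000) -> Optional[int]:
    """Count Collatz steps needed to go from n down to 1.

    Returns None if 1 is not reached within max_steps. The cap is a
    hypothetical safety limit: nobody has proved the loop always ends.
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    steps = 0
    while n != 1:
        if steps >= max_steps:
            return None  # gave up: behavior beyond this point is unobserved
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


if __name__ == "__main__":
    # Nothing in the four-line rule hints that 27 takes far longer than 7.
    for start in (6, 7, 27):
        print(start, collatz_steps(start))
```

Running it prints `6 8`, `7 16` and `27 111`. Each run reports only on the input tried; it proves nothing about all inputs, which is the gap between observing behavior and verifying it.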

Most importantly, the scientific study of machine behavior by researchers from outside computer science and robotics opens up new perspectives on important economic, social and political phenomena that machines influence. Computer science and robotics specialists are among the most talented people on the planet today. But they have not been formally trained to study phenomena such as racial discrimination, ethical dilemmas, financial market stability or the spread of rumors on social networks. Although computer scientists have studied some of these phenomena, researchers in other fields also have important skills, methods and perspectives to share. As computer scientists ourselves, we have often been humbled in our own work by our collaborators from the social and behavioral sciences. They have repeatedly shown us where our initial research questions were poorly framed, or where our use of social-science methods was inappropriate: for example, lacking the necessary caveats or drawing overconfident conclusions. We have learned from our mistakes to restrain the urge simply to crunch large amounts of data or build machine learning models.

At present, contributions from commentators and scholars outside computer science and robotics are often poorly integrated. Many of them have tried to sound the alarm. They worry about the broad, unintended consequences of AI systems that can act, adapt and behave in unexpected ways contrary to their creators' intentions, behavior that may be fundamentally unpredictable.

Alongside this unpredictability come fears that people may lose control over intelligent machines. Many cases have been documented in which seemingly benign algorithms harmed individuals or communities. Others have raised concerns that AI systems are black boxes whose decision-making is invisible to the people affected by those decisions, which undermines their ability to challenge them.

Although these important voices have already done much to inform the public about the potential harms of AI, they still operate on the periphery, and they often provoke a negative reaction within the AI community. Moreover, much of the current debate rests on isolated evidence gathered by individual experts and confined to particular cases. We still lack a consolidated, scalable, scientific approach to the behavioral study of AI in which social scientists and computer scientists can collaborate seamlessly. As Cathy O'Neil wrote in The New York Times: "There is no distinct field of academic study that takes seriously the responsibility of understanding and critiquing the role of technology, and specifically the algorithms that make so many decisions, in our lives." Given how quickly new AI algorithms are being developed, this gap between the number of deployed algorithms and our understanding of them is likely to widen over time.

Finally, we expect the study of machine behavior to create considerable economic value. For example, if an algorithm can be shown to meet certain ethical, cultural or economic standards of behavior, it can be marketed as such. Consumers and companies may then begin to demand such certification, much as consumers have come to demand ethical and environmental standards from the supply chains behind the goods and services they buy. The study of machine behavior could lay the foundation for such objective certification of AI.

AI is increasingly involved in our social, economic and political interactions. Credit-scoring algorithms decide who gets a loan; algorithmic trading bots buy and sell financial assets on exchanges; algorithms optimize policing; risk-assessment programs influence prisoners' parole; self-driving cars steer multi-ton metal boxes through our cities; robots map our homes and clean them; algorithms influence who is matched with whom on dating sites; and soon, lethal autonomous weapons will be able to decide who lives and who dies in armed conflict. And this is only the tip of the iceberg. In the near future, AI-controlled software and hardware will permeate every aspect of our society.

Whether or not they have anthropomorphic features, these machines represent a new class of actors, some might even say a new species, inhabiting our world. We must use every available tool to understand and regulate their influence on humanity. If we do this well, people and machines may be able to flourish together in healthy, mutually beneficial cooperation. To realize this possibility, we must take up the study of machine behavior in earnest, as a new scientific discipline.

Iyad Rahwan is an associate professor of media arts and sciences at the MIT Media Lab, where he directs the Scalable Cooperation group as its principal investigator. Manuel Cebrian is a research scientist at the MIT Media Lab and a research advisor to the Scalable Cooperation group.

Source: https://habr.com/ru/post/371527/