"False positive": why Uber's autopilot did not brake for a pedestrian has come to light

Late on the evening of Sunday, March 18, 2018, an Uber self-driving car was involved in an accident in Tempe, Arizona. Tragically, a woman who was crossing the road with a bicycle was killed. The incident was discussed repeatedly on Geektimes, and the video released by the local police department a few days later drew particularly heated discussion. The 22-second clip contains two segments: footage of the road from the front-facing camera and footage of the human operator who sits behind the wheel and monitors the autopilot.

The woman at the wheel at the time of the accident was looking down at her smartphone and had no time to react when the obstacle suddenly emerged from the darkness into the dim circle of the headlights.

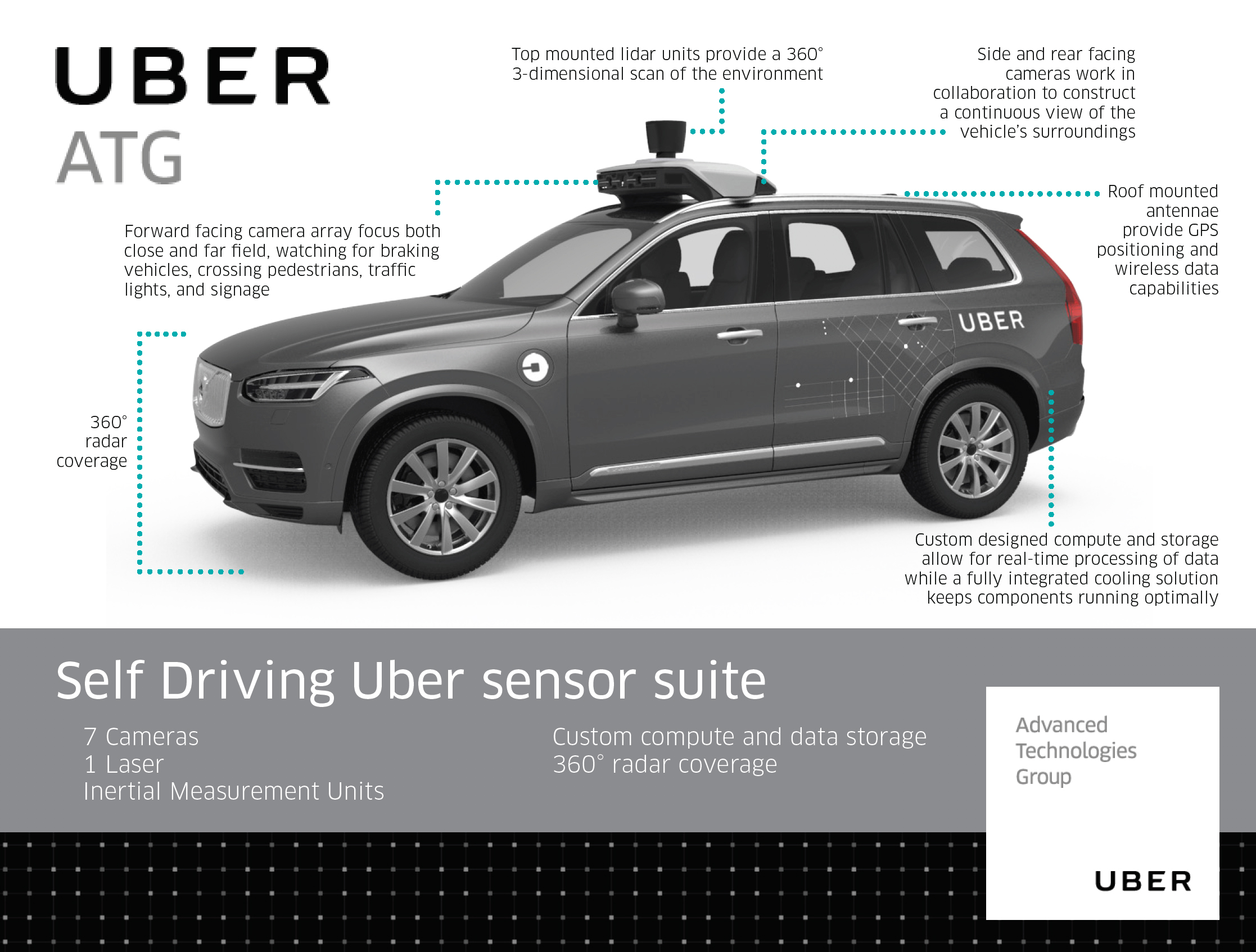

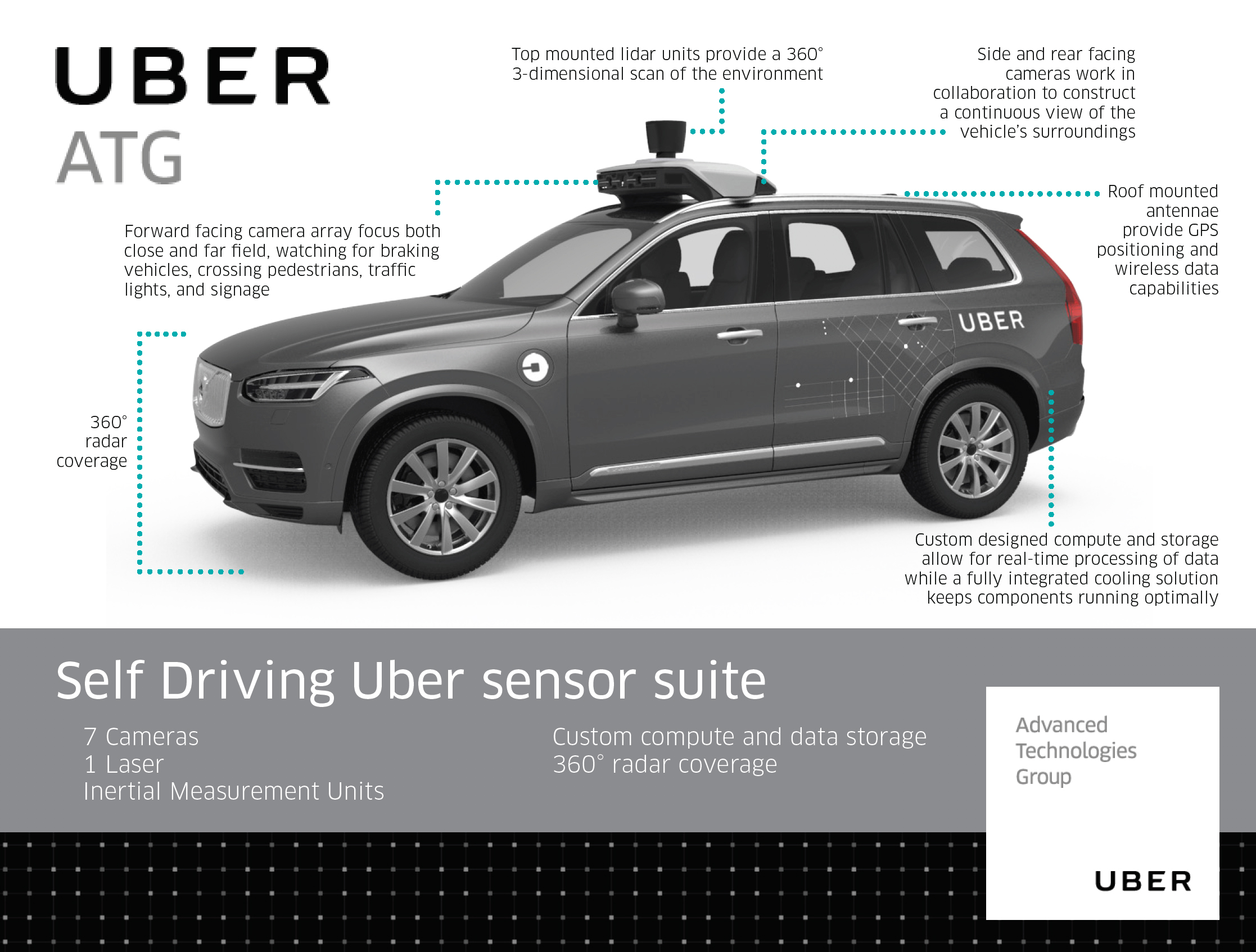

What provoked the most discussion was that the autopilot hit the pedestrian at full speed without even trying to brake or swerve. This is surprising given how much computer vision hardware the car carries: the woman was crossing the road directly in front of its lidar and several video cameras, and judging by video recorded by other road users, the area was well lit.

"False positive"

According to experts, the autopilot's failure to respond to the obstacle points to one of two possible problems:


- A malfunction in the object recognition system, which failed to classify the woman and her bicycle as a pedestrian. This option seems unlikely, however, because people and bicycles are among the object classes the system should recognize best.

- An error in the car's higher-level logic, which decides which objects deserve attention and what to do about them. For example, a bicycle parked at the side of the road is no reason to slow down, but the slightest maneuver by another vehicle in the lane ahead calls for immediate action. In this respect the autopilot's logic mimics how a human driver allocates attention and makes decisions, and it keeps the car from panicking at every object it detects.
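The prioritization described in the second point can be sketched as a simple relevance filter. This is a minimal, hypothetical Python illustration, not Uber's software: the `TrackedObject` fields, the 1.8 m lane half-width and the 3-second look-ahead horizon are assumptions chosen only to show why a bicycle parked on the shoulder is ignored while an object in, or moving into, the car's lane triggers a reaction.

```python
from dataclasses import dataclass

# Hypothetical illustration only; not Uber's actual planning logic.
LANE_HALF_WIDTH_M = 1.8  # assumed half-width of the car's own lane, metres


@dataclass
class TrackedObject:
    label: str                # class from the perception layer, e.g. "bicycle"
    lateral_offset_m: float   # signed distance from our lane centre (+ = left)
    lateral_speed_mps: float  # signed sideways speed (+ = moving left)


def needs_reaction(obj: TrackedObject, horizon_s: float = 3.0) -> bool:
    """Return True if the planner should slow down or steer for this object."""
    distance_outside = abs(obj.lateral_offset_m) - LANE_HALF_WIDTH_M
    if distance_outside <= 0:
        return True  # already inside our lane: always relevant
    # Sideways speed towards our lane centre (positive = approaching).
    if obj.lateral_offset_m > 0:
        closing_speed = -obj.lateral_speed_mps
    else:
        closing_speed = obj.lateral_speed_mps
    if closing_speed <= 0:
        return False  # stationary or moving away, e.g. a bike parked on the shoulder
    # Relevant only if it would reach our lane within the look-ahead horizon.
    return distance_outside / closing_speed <= horizon_s


if __name__ == "__main__":
    parked_bike = TrackedObject("bicycle", 3.5, 0.0)
    crossing = TrackedObject("pedestrian", -4.0, 1.4)
    in_lane_vehicle = TrackedObject("vehicle", 0.5, 0.0)
    for obj in (parked_bike, crossing, in_lane_vehicle):
        print(obj.label, needs_reaction(obj))
```

In this sketch the parked bicycle is filtered out, while the crossing pedestrian (who would reach the lane in about 1.6 s) and the vehicle already in the lane both require a reaction. A real planner would also weigh the car's own speed, braking distance and prediction uncertainty.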

Judging by the information now available, Uber's case involved the second type of problem: the autopilot treated the pedestrian with the bicycle as a false positive of the computer vision system and, in accordance with its software settings, ignored her. These preliminary findings were reported by The Information, citing two confidential sources familiar with the investigation.

Apparently, the fatal accident was caused by a software error, specifically in the function that determines which objects the car should ignore and which it should react to. If the investigators reach this conclusion and formally confirm it, the question of Uber's direct liability for the incident will arise. However, the company has already reached an out-of-court settlement with the victim's family, so there will be no trial.
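The reports do not describe how Uber's filter actually works. One common way such filtering can be tuned, assumed here purely for illustration, is a confidence threshold: detections scored below it are discarded as false positives. The hypothetical sketch below (the `Detection` type, the scores and the thresholds are all invented) shows the trade-off: raising `threshold` suppresses more spurious detections, but it can also discard a real pedestrian.

```python
from dataclasses import dataclass

# Hypothetical illustration only; the real filter in Uber's software is not public.


@dataclass
class Detection:
    label: str
    confidence: float  # assumed perception score in [0, 1]


def filter_false_positives(detections: list[Detection], threshold: float) -> list[Detection]:
    """Keep detections scored at or above `threshold`; everything below it is
    treated as a false positive and ignored by the planner."""
    return [d for d in detections if d.confidence >= threshold]


frame = [
    Detection("spurious blob", 0.30),  # e.g. sensor noise
    Detection("pedestrian with bicycle", 0.55),
    Detection("vehicle", 0.95),
]

for threshold in (0.5, 0.7):
    kept = filter_false_positives(frame, threshold)
    print(threshold, [d.label for d in kept])
```

With `threshold=0.5` the pedestrian is kept; with `threshold=0.7` only the vehicle survives, an outcome analogous to the one The Information's sources describe. Setting such a parameter is a balance between a car that brakes for every shadow and one that ignores real obstacles.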

This case also exposes the weakest link in the computer vision pipeline: the high-level logic that processes data from the lidar, cameras and other sensors and makes decisions in real time. If the sensors and cameras are the "eyes", then in this case the error occurred in the autopilot's "brain".

Uber issued a statement in response to the new reports on the investigation: "We are actively cooperating with the US National Transportation Safety Board (NTSB) in its investigation of the incident. Out of respect for that process and our trust-based relationship with the NTSB, we cannot comment on the specifics of the incident. In the meantime, we have launched a top-to-bottom safety review of our self-driving program and have brought in former NTSB chairman Christopher Hart to advise us on our overall safety culture. We are reviewing everything from the safety of our system to the training of our vehicle operators, and we hope to be able to say more soon."

A joint investigation by Uber and the National Transportation Safety Board continues.

Source: https://habr.com/ru/post/371495/
