The future of technology: AR / VR in design and engineering

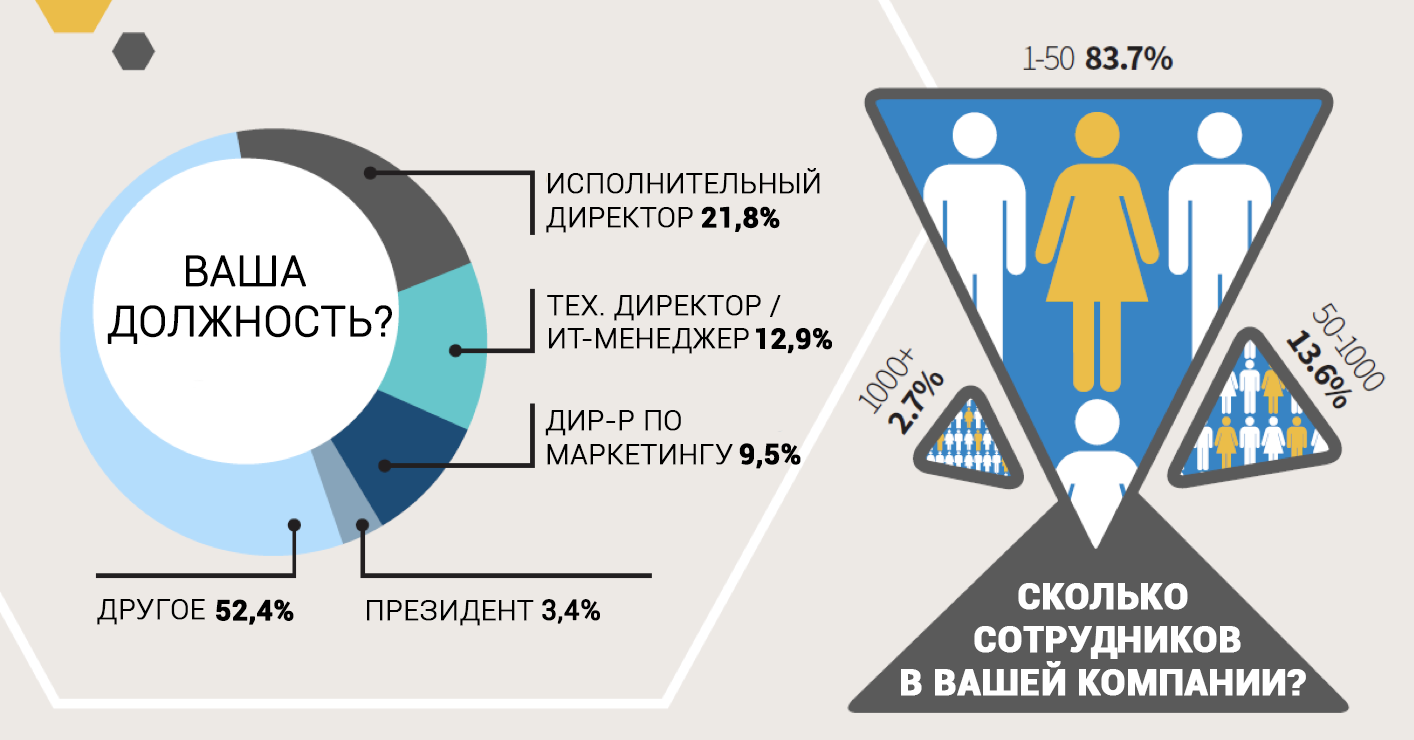

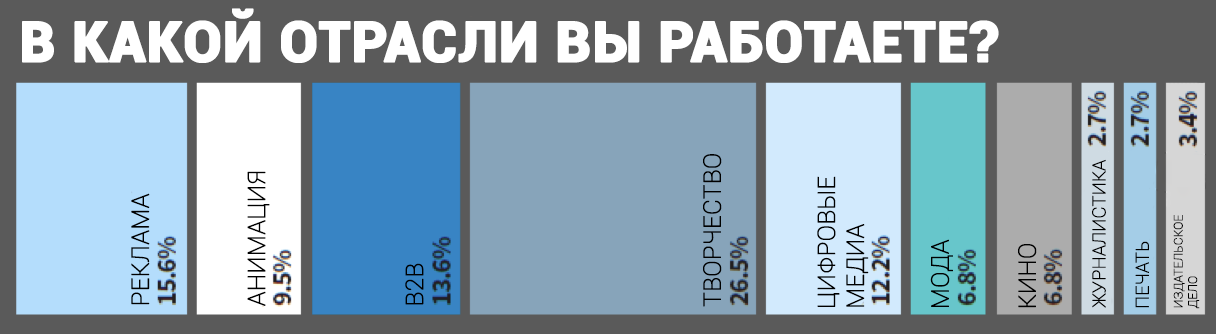

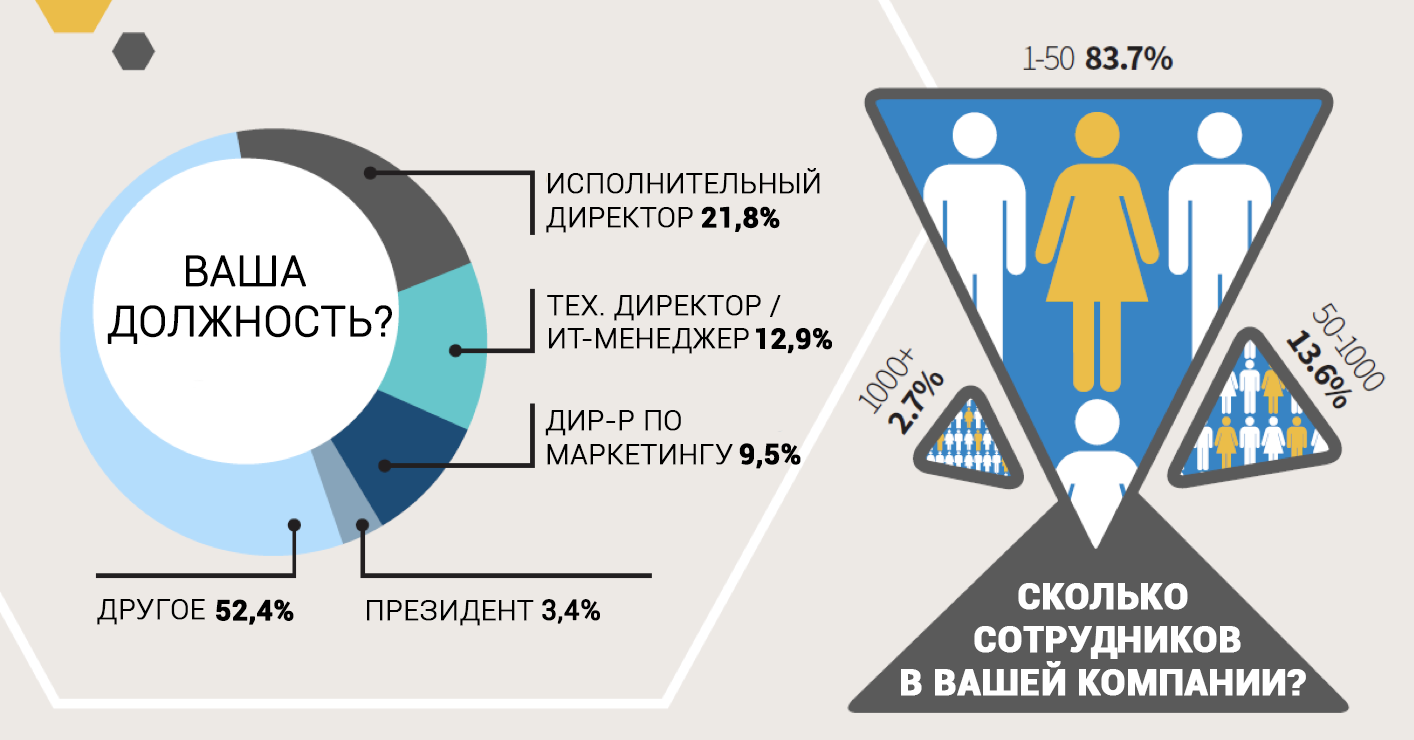

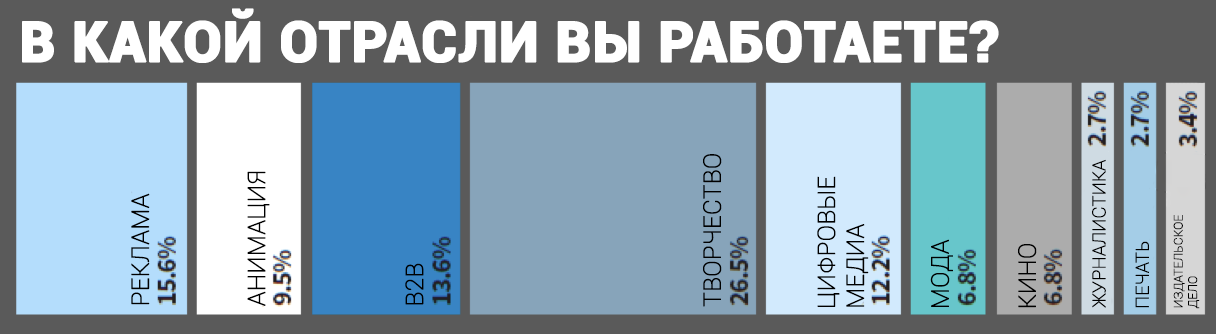

In 2017, Dell and AMD surveyed 147 creative professionals from a range of fields. The companies wanted to understand the technical problems that designers and engineers face, look into the near future, and get a picture of what the next year might bring.

We share the results of this survey along with the views of leading industry experts. We also discuss a new technology that is gradually making its way into large design and engineering companies.

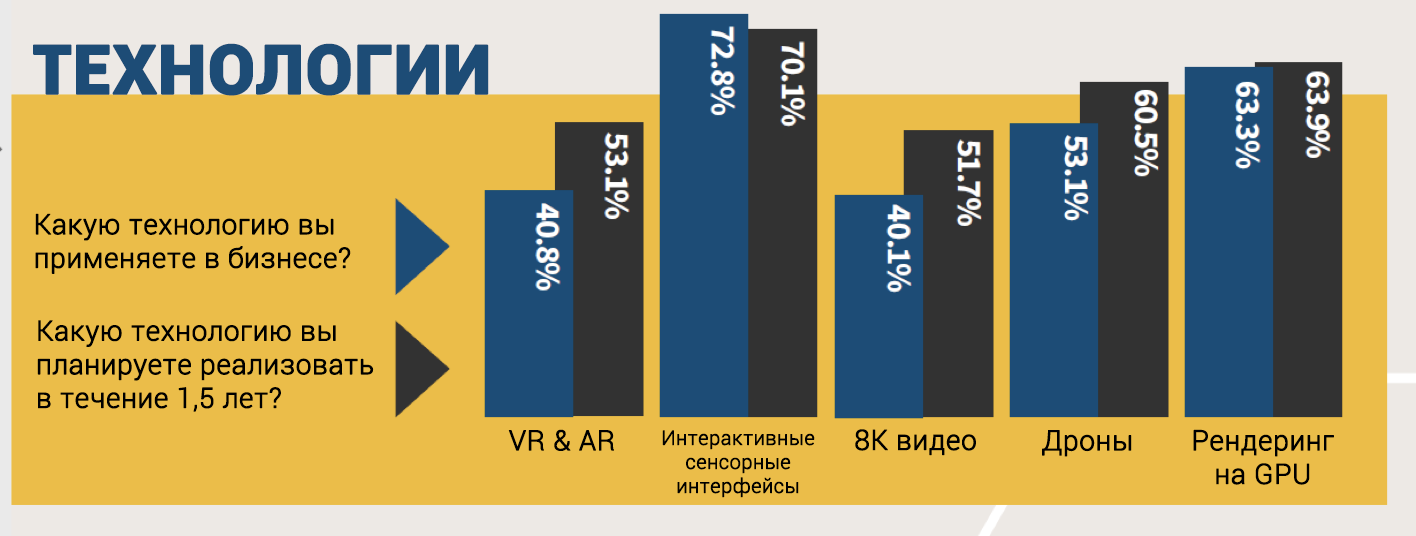

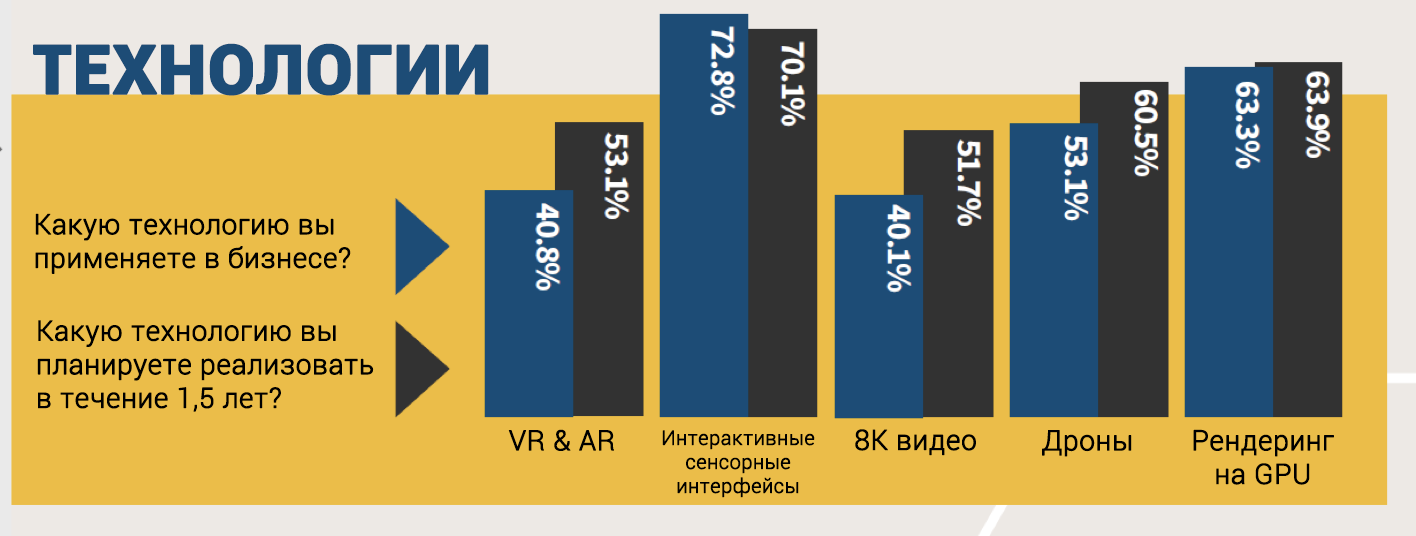

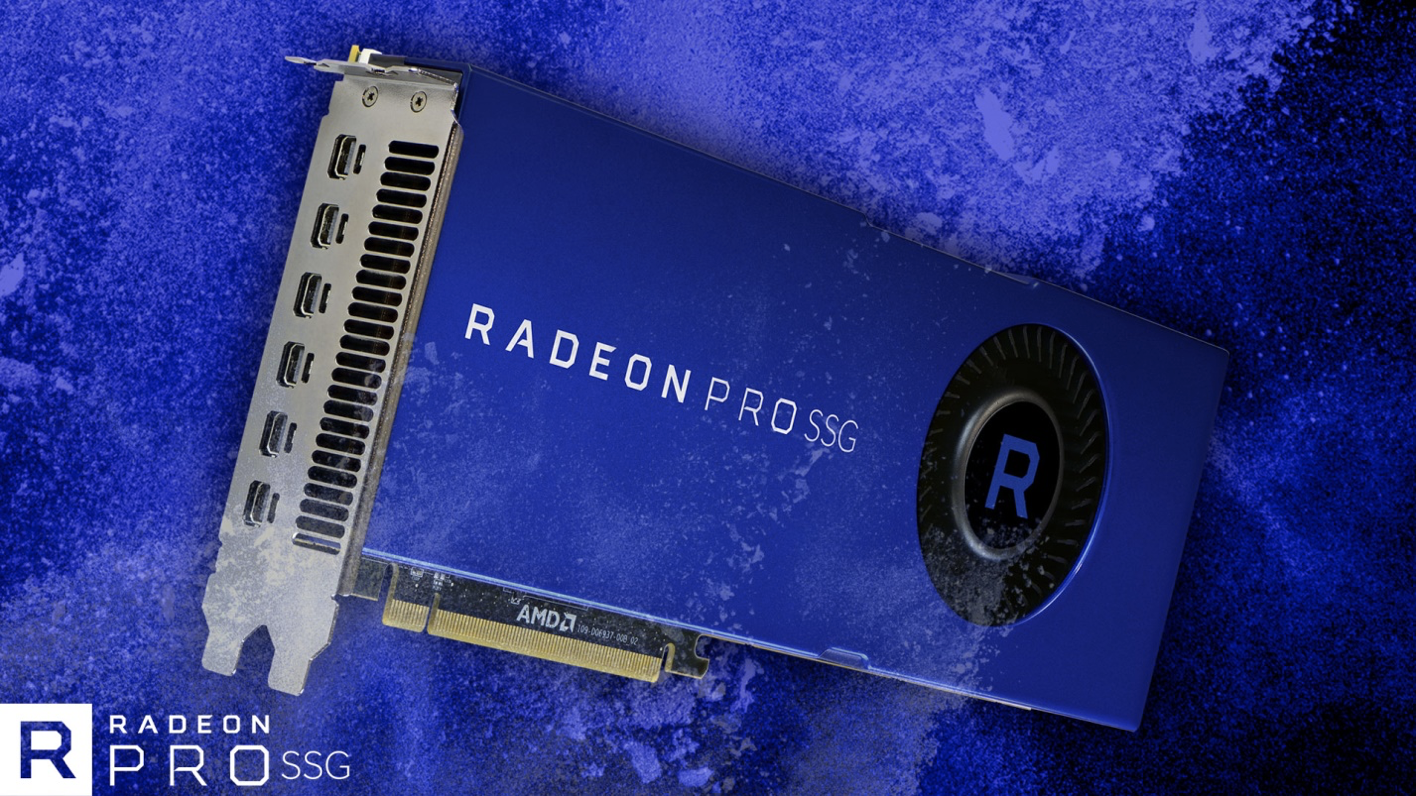

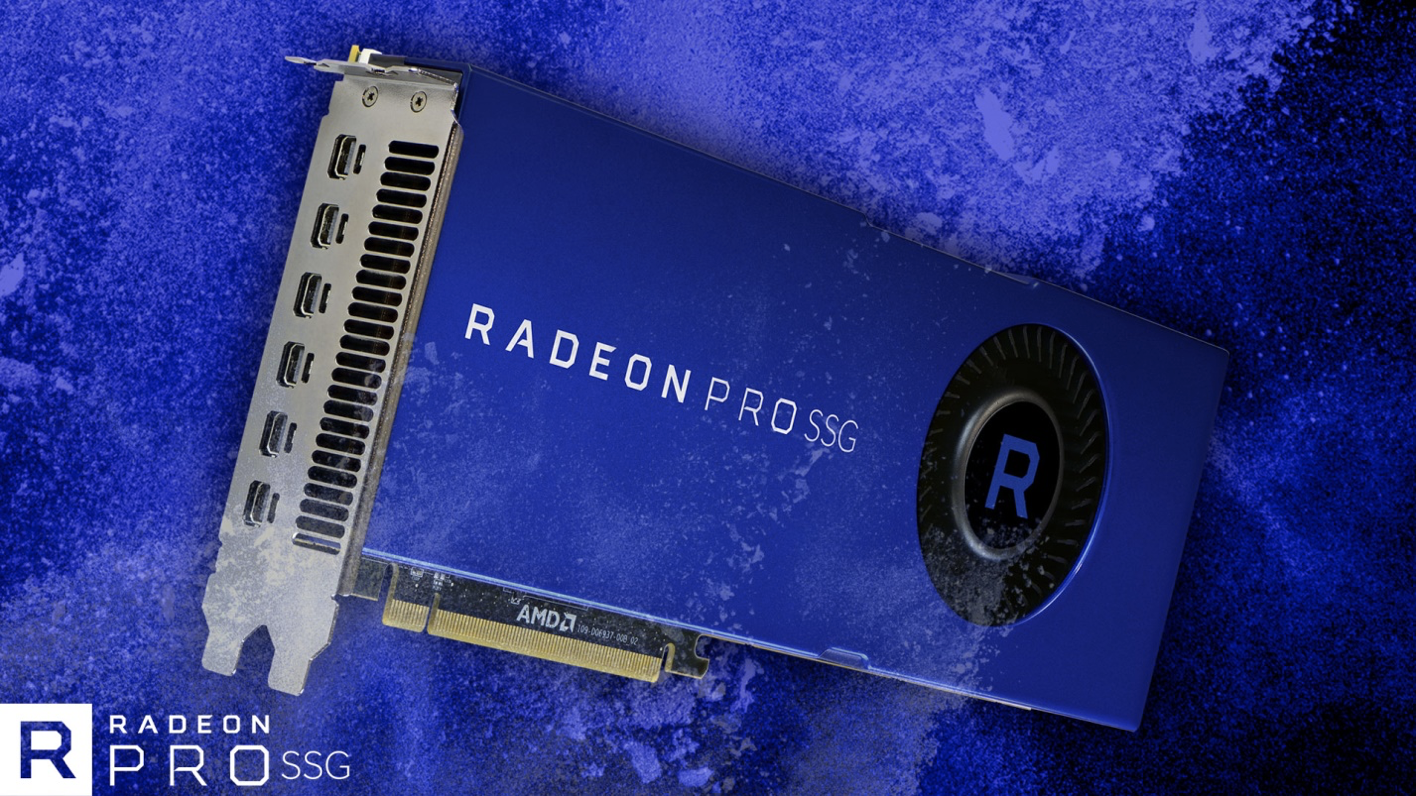

VR & AR: New Technologies for Business

According to the survey, 41% of respondents have already made virtual reality (VR) or augmented reality (AR) part of their business strategy. Another 53% plan to do so within the next 18 months.


Experience shows that VR has already moved beyond being a fad for a narrow circle of specialists, and some companies are gaining a competitive advantage by adopting it.

One of the clearest examples is Area SQ, a well-known British company that designs and fits out office space. Virtual reality has allowed it to give customer service a “new dimension” and to present its work in ways that were unimaginable a few years ago.

“VR has a huge impact on how we work throughout a project’s life cycle,” explains Daniel Calgary, Design Director at Area SQ (London). “We pride ourselves on how Area SQ is leading the way in design technology. Innovation is one of the foundations of our strategy, and VR fits it perfectly.” Calgary notes that for Area SQ it is very important to be able to “overcome existing and future challenges”, and virtual reality has given the company a great opportunity to introduce new developments.

The company uses virtual reality at different levels depending on the type of project. Sometimes it is a static 360-degree image; sometimes it is a whole virtual space you can move around in (built with Unreal Engine). But technology will never replace the creative process.

“It’s important for us to have VR-ready systems,” said Gary Hunt, head of Area SQ’s visualization department. “That way we can simply use the equipment as a creative tool. We don’t want to think about what’s inside the system or how it works; we want to plug in the VR accessories and get on with the work.”

Shooting in Ultra HD

As the resolution of monitors and TVs increases, creative professionals have to keep reassessing their workflows and the quality of their output. According to the survey, 8K photo and video is already part of the business strategy for 40% of respondents, and 51% plan to adopt it within the next 18 months.

However, as resolution grows, so do the demands on graphics processors (GPUs) and central processors (CPUs): software has to cope with large projects, and larger monitors require more powerful graphics cards.
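To put the jump in resolution into numbers, here is a minimal Python sketch that compares pixel counts and the size of one uncompressed frame at 1080p, 4K UHD and 8K UHD. The 8-bit RGBA format (4 bytes per pixel) is an assumption chosen for illustration; real GPUs and applications may use other pixel formats and usually hold several such buffers at once.

```python
# Consumer resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
}


def pixel_count(width: int, height: int) -> int:
    """Number of pixels in a single frame."""
    return width * height


def frame_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed frame; 4 bytes per pixel assumes 8-bit RGBA."""
    return pixel_count(width, height) * bytes_per_pixel


if __name__ == "__main__":
    base = pixel_count(*RESOLUTIONS["1080p"])
    for name, (w, h) in RESOLUTIONS.items():
        pixels = pixel_count(w, h)
        mib = frame_bytes(w, h) / 2**20
        print(f"{name:7} {pixels:>11,} px  {pixels // base:>2}x 1080p  {mib:6.1f} MiB/frame")
```

Each step up doubles both dimensions, so 4K has four times and 8K sixteen times as many pixels as 1080p; anything that scales per pixel (memory, bandwidth, shading work) grows accordingly.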

40% of respondents said that 8K video is part of their business strategy.

Paul Wyatt, a director of short documentaries and commercials, told us about the problems he faces when working with high-resolution video.

What do you see as the most significant technological advances in video production over the next 18 months?

A couple of years ago, 4K video at a decent bitrate and a normal frame rate was out of reach for most professionals, let alone ordinary consumers. You needed an expensive external recorder and a lot of fiddling to get the footage working in a non-linear editing program. But now cameras such as the Panasonic Lumix DMC-GH5 and the fixed-lens LUMIX DMC-FZ2500 really raise the bar when you need to shoot 4K video without recording time limits. This makes life much easier for a film director or videographer: you carry a smaller kit without sacrificing image quality. We are also seeing 10-bit 4:2:2 recording appear in the latest cameras. The footage carries more color information, which allows more aggressive color grading or, say, lifting the midtones (with 8-bit 4:2:0 footage, the image can start to break down when heavy grading is applied).
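The difference between 8-bit 4:2:0 and 10-bit 4:2:2 can be made concrete with a little arithmetic. The sketch below computes the number of levels per channel and the size of one uncompressed Y′CbCr 4K frame; 4:2:2 and 4:2:0 describe chroma subsampling, i.e. how many color-difference samples are kept relative to brightness samples. These are raw sizes for illustration only: cameras record compressed streams, so real files are far smaller.

```python
# Total samples stored per pixel (luma + both chroma planes), multiplied by 2
# so that 4:2:0 (1.5 samples per pixel) stays an integer.
SAMPLES_PER_PIXEL_X2 = {
    "4:4:4": 6,  # full-resolution Y, Cb and Cr
    "4:2:2": 4,  # Cb and Cr halved horizontally
    "4:2:0": 3,  # Cb and Cr halved horizontally and vertically
}


def levels_per_channel(bit_depth: int) -> int:
    """Distinct code values available per channel."""
    return 2 ** bit_depth


def raw_frame_bytes(width: int, height: int, bit_depth: int, subsampling: str) -> int:
    """Uncompressed size of one Y'CbCr frame in bytes."""
    bits = width * height * bit_depth * SAMPLES_PER_PIXEL_X2[subsampling] // 2
    return bits // 8


if __name__ == "__main__":
    for depth, scheme in [(8, "4:2:0"), (10, "4:2:2")]:
        size_mb = raw_frame_bytes(3840, 2160, depth, scheme) / 1e6
        print(f"{depth}-bit {scheme}: {levels_per_channel(depth)} levels, "
              f"{size_mb:.1f} MB per 4K frame")
```

The 10-bit 4:2:2 frame has four times as many levels per channel (1024 versus 256) and twice as many chroma samples as 8-bit 4:2:0, which is where the extra grading headroom comes from.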

What challenges do you face?

The biggest problem with all these advances is that they require reorganizing workflows and extra computing power to process high-resolution content. Fortunately, non-linear editors such as Adobe Premiere Pro support 4K out of the box. They can even create low-resolution proxy clips if the system isn't powerful enough to work at full 4K resolution.

How has your work changed over the past few years, technically and creatively?

Technology has freed up creative thinking and made it possible to do more on a smaller budget. I still like working with a crew and using high-end cameras (for example, the Sony FS7 or Canon C400), but that kind of equipment is usually expensive and time-consuming to work with. Compact mirrorless cameras let me, as a director, get the most out of a limited budget. I can use a Sony A7S II, RX10 III or Panasonic GH4 and know that I'm getting the 4K resolution and the focus and exposure tools I need, without any additional equipment. I'm tired of seeing all those cumbersome camera rigs and bulky setups with lots of cables, monitors and external recorders. Much of that is no longer necessary, which frees up the producer's and director's time. So creatively, you can do a lot more. It's that simple.

What problems does higher video resolution cause, and how do you plan to solve them?

This year there is real demand for 4K, so I needed to upgrade my system to process 4K footage at bitrates of up to 250 Mbps. Some non-linear editing systems handle 4K better than others. If I'm editing a rough cut on a laptop, I use the proxy workflow in Premiere Pro. It creates lower-resolution working files, which reduces the load on the processor. Then, when I move the project to my Dell workstation, I can switch the clips back to their full-resolution versions.
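A bitrate of 250 Mbps (megabits per second) translates directly into storage and disk-throughput requirements. Here is a small sketch of that conversion, assuming a constant bitrate and decimal units (1 GB = 10^9 bytes); variable-bitrate codecs will deviate from these figures.

```python
def megabytes_per_second(bitrate_mbps: float) -> float:
    """Sustained read/write rate needed to play back the stream, in MB/s."""
    return bitrate_mbps / 8


def gigabytes_per_hour(bitrate_mbps: float) -> float:
    """Storage consumed by one hour of footage at a constant bitrate, in GB."""
    return megabytes_per_second(bitrate_mbps) * 3600 / 1000


if __name__ == "__main__":
    rate = 250  # Mbps, the figure quoted in the interview
    print(f"{rate} Mbps = {megabytes_per_second(rate):.2f} MB/s "
          f"= {gigabytes_per_hour(rate):.1f} GB per hour of footage")
```

So one hour of 250 Mbps footage takes 112.5 GB, and a 1 TB drive holds under nine hours of it, before any proxy files are added.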

It is also important not to lose sight of the delivery stage. I'm usually asked to deliver the result in 1080p, so the 4K footage is typically downscaled to 1080p and then exported. The upside is that later you can reopen the project, change the timeline from 1080p to 4K, and scale the footage back up to 4K. In Premiere and Final Cut this is very easy.
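The 1080p-to-4K switch is painless because of simple scaling arithmetic: a 4K UHD clip has exactly twice the width and height of a 1080p frame. The sketch below uses a hypothetical helper (not an Adobe or Apple API) to compute the uniform scale that fits a clip into a sequence frame.

```python
def fit_scale_percent(clip: tuple[int, int], sequence: tuple[int, int]) -> float:
    """Uniform scale, in percent, that fits the clip entirely inside the sequence frame."""
    clip_w, clip_h = clip
    seq_w, seq_h = sequence
    return 100 * min(seq_w / clip_w, seq_h / clip_h)


UHD_4K = (3840, 2160)
FULL_HD = (1920, 1080)

if __name__ == "__main__":
    print(f"4K clip in a 1080p sequence: {fit_scale_percent(UHD_4K, FULL_HD):.0f}%")
    print(f"4K clip in a 4K sequence:    {fit_scale_percent(UHD_4K, UHD_4K):.0f}%")
```

In the 1080p delivery the 4K clips sit at 50%; once the sequence is changed to 4K, setting them back to 100% shows the original camera pixels, so nothing is actually upscaled.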

51% of respondents plan to start working with 8K video in the next 18 months

When shooting 4K, focus-assist tools are very important, because the slightest flaw in the image will be immediately visible to viewers. At 4K you quickly come to appreciate tools that let you magnify the image in the viewfinder. Now that home audiences watch on 4K TVs, this gives you a higher level of control.

What technology are you currently using?

I worked for many years in creative studios where video was always an afterthought: the technology we used always lagged behind, and rendering was very slow. When I started making my own films, I didn't want those compromises to get in the way of my creative work, so I bought a Dell XPS 8300. At the time it came with an Intel Core i7-2600 processor, a 1 TB SATA drive and a 28-inch Full HD Dell monitor. I used this system (with several upgrades) for five years while making HD films, and even made a half-hour TV documentary on it.

However, I needed to make films at higher resolutions and bitrates, so I turned to Dell again; I like how the company works with its customers. Their experts gave me good advice on the configuration I needed for 4K. I chose a Dell Precision Tower 7000 with extra memory and an UltraSharp 27 monitor. It's a serious investment, but I'm confident that with a few upgrades this workstation will last as long as the previous one.

Interactive Touch Displays

Touch screens have moved from mobile phones and tablets to hybrid devices, and large-format interactive displays are now becoming viable tools for creative professionals in many industries.

Most studios use tablets to present their work. However, companies such as Adobe, Autodesk and Avid are creating touch-specific versions of their applications, so you can not only view but also create content on touchscreen devices.

70% of respondents hope to include interactive devices in their business processes over the next year and a half

Area SQ builds its business on innovation, which it sees as a key success factor. Analyzing the competitive advantages a new technology could bring is an important part of the company's philosophy.

“We believe touch interfaces will be a breakthrough,” explains Andrea Williams-Vedberg, creative director of Area SQ. “When you need to show clients something new, a product like Dell Canvas comes in handy. You can easily move images around the screen, zoom in on CAD models and review the files you are working on together with your colleagues.”

VR and rising video resolutions are forcing changes to workflows, and there is a constant search for a compromise between adopting new technology and spending the budget sensibly. Most survey participants (63%) said that GPU rendering plays a role in how they currently do business, and 80% of those respondents said it had cut their rendering times by more than half. Still, it is important to strike a balance between price and performance.

The future of the visual effects industry

MPC (Moving Picture Company) is one of the world's leading visual effects studios. It has worked on The Revenant, The Jungle Book and the Harry Potter series.

MPC uses a variety of hardware, including Dell mobile workstations, to create Oscar-winning effects. It is interesting to hear about the technical challenges facing a world leader in visual effects, and Damien Fagnou, Chief Technology Officer at MPC Film, told us about them.

What are the main technical challenges you already face or expect to face in the coming year?

For a studio as large as ours, it's not easy to keep up with growing data storage and rendering needs, so we continue to invest heavily in optimization. Universal Scene Description (USD) and VR technologies help us stay at the forefront.

How important will VR be for the visual effects industry next year?

We are already experimenting with some very interesting workflows in which VR is an attractive platform for reviewing and even creating virtual environments. At the same time, there is growing demand for full interactive VR experiences that complement the films the studio works on.

What needs to change for you to work with VR?

To use VR in our industry, a few problems have to be solved. Take game engines: although they have improved over the past few years, they still can't really handle visual effects work. In addition, most visual effects work is done on Linux, which few game engines support, and the same goes for the VR headsets they need to integrate with. We are investing in various workflows and doing research, and we are confident the situation will improve in the coming year.

What problems does high-resolution video create, and how do you overcome them?

Higher resolution affects the amount of detail and texture, but on the whole we found good solutions for that years ago. The hardest part is final rendering. Its ray-tracing cost grows almost linearly with the number of pixels, so rendering in 4K takes four times longer. That demands efficiency in both workflow and raw speed.
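The rule of thumb above (ray-tracing cost grows almost linearly with pixel count) can be written as a simple estimate. In this sketch, splitting frame time into a fixed per-frame cost (for example, scene loading) and a per-pixel cost is an assumption used to show why the growth is only "almost" linear; the timings are hypothetical.

```python
def estimate_render_seconds(
    base_seconds: float,
    base_res: tuple[int, int],
    target_res: tuple[int, int],
    fixed_seconds: float = 0.0,
) -> float:
    """Scale a measured per-frame render time to a new resolution.

    Assumes the time splits into a fixed part that does not depend on
    resolution and a variable part proportional to the pixel count.
    """
    base_pixels = base_res[0] * base_res[1]
    target_pixels = target_res[0] * target_res[1]
    variable = base_seconds - fixed_seconds
    return fixed_seconds + variable * target_pixels / base_pixels


DCI_2K = (2048, 1080)
DCI_4K = (4096, 2160)

if __name__ == "__main__":
    # Hypothetical frame that takes 60 s at 2K, without and with 10 s of fixed overhead.
    print(estimate_render_seconds(60, DCI_2K, DCI_4K))
    print(estimate_render_seconds(60, DCI_2K, DCI_4K, fixed_seconds=10))
```

With no fixed overhead the 4K frame takes exactly four times as long (240 s); with 10 s of fixed cost it takes 210 s, slightly less than four times.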

We are also working with our partners, the Technicolor research team, on denoising and upscaling algorithms adapted specifically for visual effects.

Which department faces the most serious technical challenges?

All of them, in fact. Animation needs more pixels on screen and more detail; the effects department wants more powerful simulation and faster rendering at higher detail, and so on. Everyone doing creative work wants technology to free them from constraints as much as possible.

What excites you most from a technical point of view when you look ahead to the next year or two?

Various technologies, especially cloud computing, are reaching a level of maturity at which they can transform the visual effects industry. It's no longer just about more on-demand computer or server capacity; it's about a more distributed and dynamic workflow. Meanwhile, technologies such as deep learning are changing the very way we approach problems.

What were the main challenges in working on The Jungle Book, and what has changed over the last 15 years?

For The Jungle Book we had to create many photorealistic environments, incredibly complex and richly detailed: more than 80 minutes of visual effects. The world was populated with hundreds of realistic animals that had to act and behave naturally. That would have been almost impossible in 2001. Over 15 years, computing and data storage have advanced so far that we can now fully simulate environments with ray tracing, using billions of polygons and curves. The Pixar and Autodesk software running on these advanced computing platforms has also evolved tremendously.

Poll results

Overcoming barriers: AMD transforms the entertainment industry with SSG technology and WX series cards

The survey results underline the ever-growing need for GPU power, and the new Radeon Pro line shows AMD's determination to set new benchmarks. “We announced a partnership with AMD on Radeon technology to optimize Nuke for OpenCL and enable users to work with the new products,” comments Alex Mahon, CEO of Foundry.

With the Radeon Pro lineup, AMD offers a range of tools, from WX Series graphics cards to SSG technology, covering the full spectrum of creative professionals' needs. “The goal of the WX series is to create the most accessible workstation for professional VR content creation,” said Raja Koduri, senior vice president and chief architect of the Radeon Technologies Group (RTG). “We added a terabyte of memory to the graphics processor, and with our SSG technology you can edit 8K video in real time. It lets you work at 90 frames per second and 45 Gbit/s directly on the Radeon Pro SSG.”

For 63% of respondents, GPU rendering is part of a business strategy.

Every visual effects company is experimenting with VR, and that places particular demands on the GPU. “Game engines have become more powerful and are used in many industries. In fact, you can no longer really call them ‘game’ engines,” said Roy Taylor, vice president at AMD. “We create tools that help artists and directors come up with and tell their stories.”

The top-end Radeon Pro WX 7100, with 2048 stream processors, delivers more than 5 teraflops. The card has 8 GB of GPU memory on a 256-bit bus, with an effective memory frequency of 7 GHz.
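Headline GPU figures like these follow from simple formulas. The sketch below assumes the usual convention for peak FP32 throughput (each stream processor completes one fused multiply-add, i.e. two floating-point operations, per clock) and treats the quoted 7 GHz as the effective per-pin data rate of the memory. The text gives no core clock, so instead of assuming one, the sketch computes the minimum clock that 2048 stream processors would need to exceed 5 teraflops.

```python
FLOPS_PER_SP_PER_CLOCK = 2  # one fused multiply-add per stream processor per clock


def peak_tflops(stream_processors: int, clock_mhz: float) -> float:
    """Theoretical peak single-precision throughput in TFLOPS."""
    return stream_processors * FLOPS_PER_SP_PER_CLOCK * clock_mhz * 1e6 / 1e12


def min_clock_mhz(stream_processors: int, target_tflops: float) -> float:
    """Core clock needed to reach a given peak throughput."""
    return target_tflops * 1e12 / (stream_processors * FLOPS_PER_SP_PER_CLOCK) / 1e6


def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth: bytes per transfer across the bus times the per-pin rate."""
    return bus_width_bits / 8 * data_rate_gbps


if __name__ == "__main__":
    print(f"Clock for 5 TFLOPS with 2048 SPs: {min_clock_mhz(2048, 5.0):.0f} MHz")
    print(f"256-bit bus at 7 Gbps effective: {memory_bandwidth_gb_s(256, 7.0):.0f} GB/s")
```

Under these assumptions, 2048 stream processors pass the 5 TFLOPS mark at any clock above about 1.22 GHz, and a 256-bit bus at 7 Gbps works out to 224 GB/s of memory bandwidth.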

GPUs are designed to handle huge amounts of data. At the system level, however, they cannot instantly access large datasets. The Radeon Pro SSG (Solid State Graphics) is meant to take a step toward solving this.

The card has two M.2 slots for SSDs with a PCIe 3.0 x4 interface. The drives can store data that the graphics adapter processes. The SSDs connect to the PCIe bus through a PEX8747 bridge.
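To see what the two PCIe 3.0 x4 slots offer, the theoretical link bandwidth can be worked out from the PCIe 3.0 signalling rate (8 GT/s per lane) and its 128b/130b encoding. This sketch ignores packet and protocol overhead, so real SSD throughput will be lower; how the PEX8747 bridge shares bandwidth when both drives are busy is not described in the text and is not modeled here.

```python
PCIE3_GT_PER_LANE = 8.0      # giga-transfers per second per lane
PCIE3_ENCODING = 128 / 130   # 128b/130b line-code efficiency


def pcie3_link_gb_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe 3.0 link in GB/s."""
    bits_per_second = PCIE3_GT_PER_LANE * 1e9 * PCIE3_ENCODING * lanes
    return bits_per_second / 8 / 1e9


if __name__ == "__main__":
    per_slot = pcie3_link_gb_s(4)
    print(f"One x4 M.2 slot: {per_slot:.2f} GB/s")
    print(f"Two slots combined: {2 * per_slot:.2f} GB/s")
```

Each x4 slot tops out at just under 4 GB/s in each direction before protocol overhead.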

SSG is a good fit for designers and developers, since the card can significantly boost the performance of heavily loaded systems. Beyond design, it can help wherever images must be rendered in real time: for example, in medicine for 3D animation of a patient's beating heart, in the oil and gas industry, and in other fields.

Source: https://habr.com/ru/post/371371/
