Why you should still be afraid of killer robots

Lethal autonomous weapons are not science fiction; they are a real threat to human security that must be stopped now

A recent article by Paul Sharr, "Why you shouldn't be afraid of killer robots," dismisses the short video made by the Future of Life Institute, with which the authors of this article are associated, as mere "propaganda." Sharr is a military expert who makes an important contribution to the debate on autonomous weapons. In this case, however, we respectfully disagree with him.

Why we made this video

We have been working on the problem of autonomous weapons for several years. We have presented our position to the UN in Geneva and at the World Economic Forum; we wrote an open letter signed by 3,700 researchers in artificial intelligence (AI) and robotics and 20,000 other people, which was covered in more than 2,000 media articles; one of us (Russell) drafted a letter from 40 leading AI researchers to President Obama and led a delegation that was received at the White House in 2016 to discuss the issue with officials from the Department of Defense and members of the National Security Council; we have presented to various branches of the US military and to analysts; and we have debated the issue at numerous meetings and academic forums around the world.

Our main message has always been the same: autonomous weapons that do not require human supervision can potentially be scaled up into weapons of mass destruction (WMD), because a small number of people can launch a very large number of such weapons. This is an inevitable logical consequence of autonomy. As a result, autonomous weapons can be expected to reduce human security at the personal, local, national, and international levels.

Despite this, we have watched high-ranking officials dismiss these risks on the grounds that their "experts" do not believe Skynet is likely to appear. Skynet is, of course, the fictional control system in the Terminator films that rebels against humanity. The risk of Skynet has nothing to do with the risk of people using autonomous weapons as weapons of mass destruction, or with any of the other risks raised by us and by Sharr. The episode has shown that, unfortunately, serious discussion and scientific argument are not enough to get the message across. If even high-ranking defense officials responsible for autonomous weapons programs cannot grasp the core issues, we cannot expect the general public and their elected representatives to make appropriate decisions.

The main reason we made this video was to give a clear and easily understood illustration of what we mean. The second reason was to give people a clear sense of the kind of technology and the concept of autonomy that we have in mind. This is not science fiction: autonomous weapons do not have to be humanoid, conscious, or evil, and such capabilities are not "decades away," as representatives of some countries have claimed in Geneva. Finally, we had in mind the precedent of the ABC film The Day After (1983), which, by showing the effects of nuclear war on individuals and their families, had a direct impact on national and international policy.

Where we agree

Sharr agrees that the technology is real. He writes: "And although no one has yet combined these technologies, as shown in the movie, all the components are already real." He concludes that a terrorist group would be able to assemble autonomous weapons regardless of whether their manufacture is controlled by international agreements. On a small scale this is probably true, but on that scale autonomous weapons offer terrorists no advantage. On a large scale it is almost certainly false: it is highly unlikely that terrorists could develop and produce thousands of effective autonomous weapons without being detected, especially if manufacturers of drones and other precursor components cooperate under the terms of a treaty, as happens, for example, under the Chemical Weapons Convention.

We agree with Sharr on the importance of countermeasures, although we note that a ban on the development of lethal autonomous weapons would clearly not hinder the development of anti-drone weapons.

Finally, we agree with Sharr that the stakes in this matter are high. He writes: "Autonomous weapons raise important questions about obedience to the laws of war, about risk and controllability, and about the moral role of people in hostilities. These are important issues worthy of serious discussion." It is strange, however, that he does not consider the question of weapons of mass destruction worthy of serious discussion.

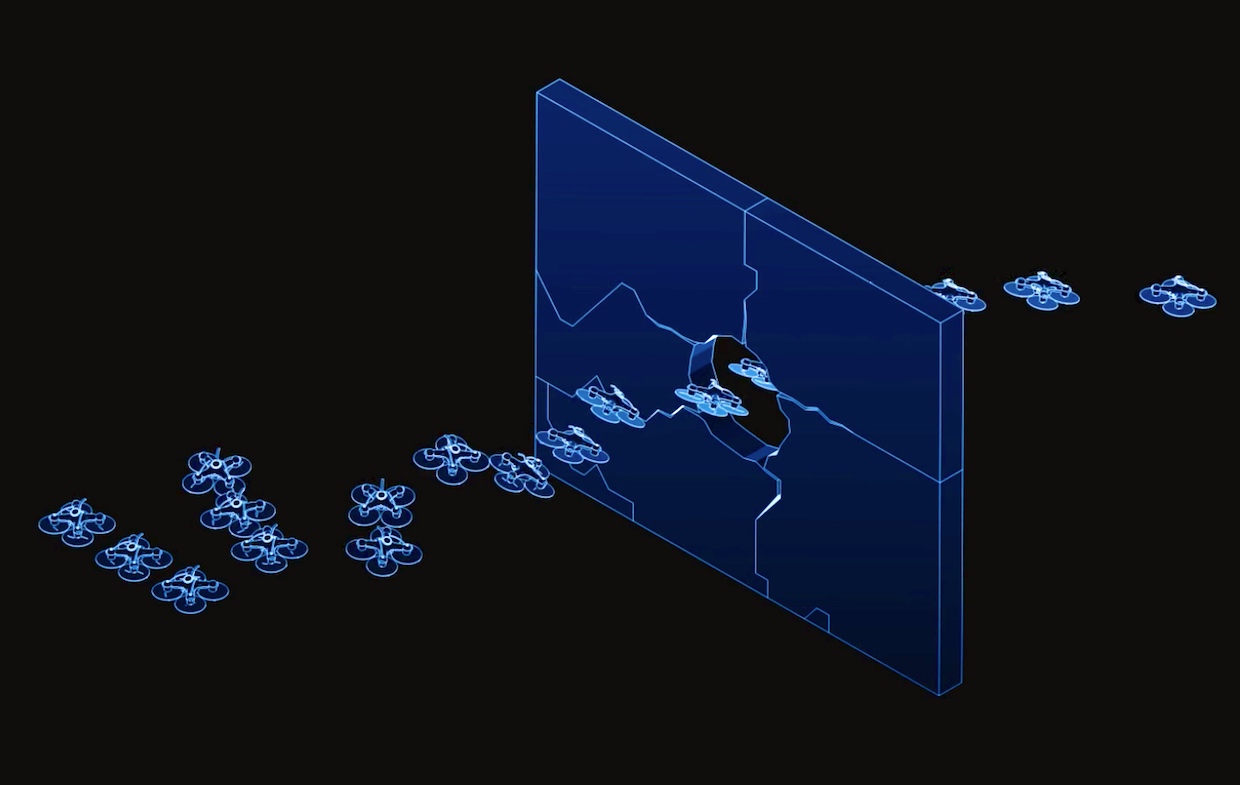

The creators of the film "Slaughterbots" believe that autonomous lethal microdrones will be cheap to produce and will not require a human to supervise each one; they are therefore potentially scalable WMD.

Where we disagree

Sharr attributes four statements to us and then tries to refute them. To simplify the discussion, we negate each of these four statements to obtain the four claims that Sharr himself makes in his article (we agreed on the exact wording of these claims in correspondence with Sharr):

1. Sharr: It is unlikely that governments will start mass production of lethal microdrones as weapons of mass destruction.

In that case one might ask: "Why not ban them?" Before they joined the Chemical Weapons Convention in 1997, the world's major powers mass-produced lethal chemical weapons, including various types of nerve gas, for use as weapons of mass destruction. After the ban, stockpiles were destroyed and mass production stopped. Banning lethal autonomous microdrones would make it illegal to manufacture and use them as weapons of mass destruction, which would greatly reduce the likelihood that terrorists and others could obtain large numbers of effective weapons.

There is also reason to believe that Sharr's claim is untrue. For example, lethal microdrones such as AeroVironment's Switchblade are already mass-produced. The Switchblade, a drone with a wingspan of about 60 cm, is designed as an anti-personnel weapon [a kamikaze drone that crashes into its target and detonates the explosive it carries / translator's note]. Contrary to Sharr's claim, such drones could easily be repurposed to kill civilians instead of soldiers. Moreover, the Switchblade already comes with a multi-unit launcher. Orbital ATK, which manufactures the warhead, describes the Switchblade as a "fully scalable" design.

The Switchblade is not fully autonomous and requires a radio link. The Department of Defense's CODE program (Collaborative Operations in Denied Environments) aims to make drones autonomous so that they can operate when communications are disrupted; they will "hunt in packs, like wolves," in the words of the program manager. Moreover, in 2016 the Air Force successfully demonstrated the deployment of 103 Perdix microdrones from F/A-18 fighters. According to the announcement, "Perdix are not pre-programmed units with general synchronization; they act as a collective organism, sharing a distributed brain for decision-making and adaptation, like any natural swarm." And although Perdix drones are unarmed, it is hard to see why 103 drones would need to operate in close formation if their purpose were mere reconnaissance.

Under the pressure of an arms race, such weapons can be expected to keep shrinking and to be produced in large quantities at much lower cost. Once autonomy is introduced, a single operator will be able to deploy thousands of Switchblades or other lethal microdrones instead of piloting one drone to its target. At that point, the number of drones produced will increase significantly.

In the major wars of the 20th century, more than 50 million civilians died. This horrific record suggests that in armed conflict states do not refrain from large-scale attacks. As weapons of mass destruction, scalable autonomous weapons offer the victor advantages over nuclear weapons and carpet bombing: they leave property intact and can be applied selectively to those who might pose a threat to an occupying force. Finally, whereas the use of nuclear weapons represents a catastrophic threshold that we have managed not to cross since 1945, no such threshold exists for scalable autonomous weapons. Attacks can escalate smoothly from 100 victims to 1,000, 10,000, and 100,000.

2. Sharr: States are likely to develop effective countermeasures for microdrones, especially if they turn into a serious threat.

Although Sharr's article attributes to us the statement that "there is no effective defense against lethal microdrones," he ultimately admits that this is indeed true today. He is inclined to believe that the situation shown in the video, in which mass-produced anti-personnel weapons are available but no effective defense against them exists, either cannot arise or would be only a temporary imbalance.

As evidence, Sharr cites an article from the New York Times. The article does not inspire confidence: it describes the problem of lethal microdrones as "one of the most unpleasant puzzles for the Pentagon from the field of countering terrorism." It describes the results of the Defense Department's "Hard Kill Challenge" exercise, which was meant to determine "which of the new secret technologies and tactics are the most promising," as "definitely mixed." The Hard Kill Challenge is the successor to the Black Dart program, which has held annual competitions since 2002. After more than 15 years of work, we still have no effective countermeasures.

Sharr argues that lethal autonomous microdrones "can be defeated with something as simple as a wire mesh," perhaps imagining entire cities draped in such mesh. If this were a workable form of protection, there would be no Hard Kill Challenge, Switchblade drones would be useless, and Iraqi soldiers would not be dying in attacks by lethal microdrones.

Sharr rightly notes that the video shows larger drones breaching walls, but he apparently did not notice that the family home in the video is encased in a steel grille, as are parts of the university dormitory, which also carries "safe zone" signs directing students to shelter from attack. Sharr claims that the cost ratio of attack to defense favors the defender, but this is hardly so when one must be protected 100% of the time against attacks that can begin anywhere. When the weapon is intelligent, a single hole in the protective shell is enough, and adding more protective shells makes little difference.

Moreover, the weapon is cheap and disposable, as Sharr correctly points out in a recent interview: "The point is not only to attack these drones. We need to find a way to do it efficiently in terms of costs. If you shoot down a drone worth $1,000 with a missile worth $1 million, you will lose each time." We agree. That hardly sounds like a cost ratio that favors the defender.

As for whether we can be fully confident that governments or defense companies will be able to develop cheap, effective, large-scale defenses against lethal microdrones in a short time, we are reminded of the experience of the British at the very beginning of World War II. If anyone had the motivation to develop effective countermeasures, it was surely the British during the Blitz. Yet by the end of the Blitz, after 40,000 sorties against major cities, the countermeasures were no more than 1.5% effective, even less than at the start nine months earlier.

3. Sharr: Governments can prevent large numbers of military-grade weapons from falling into the hands of terrorists.

According to Sharr, the video shows how "killer drones that fell into the hands of terrorists massacre innocent people." In fact, as the video takes pains to explain, the attackers could be anyone, not necessarily terrorists. In the future, the perpetrator of an attack with autonomous weapons will often remain unidentified, so such attacks will go unpunished. It is for this reason that governments around the world are extremely concerned about assassination by autonomous weapons. In the film, the most likely suspects are people "involved in corruption at the highest levels," that is, people with significant economic and political influence.

Sharr writes: "Today we do not give terrorists hand grenades, anti-tank guns or machine guns." Perhaps not, except when the terrorists were previously called "freedom fighters," but there is no shortage of effective weapons on the market. For example, between 75 and 100 million AK-47s are in circulation, most of them beyond the control of states. In just two years of the war in Iraq, about 110,000 AK-47 assault rifles were "lost" [in the USA, "AK-47" is the name used for all variants of the Kalashnikov assault rifle and similar weapons / translator's note].

If lethal autonomous microdrones are produced by large manufacturers, they will probably cost less than an AK-47. They will also be much cheaper to use, because they do not require a human operator who must be fed, housed, trained, equipped, and transported to the place where force is to be used. ISIL's budget for 2015 was estimated at $2 billion, enough to buy millions of weapons on the black market.

4. Sharr: Terrorists are unable to launch simultaneous coordinated attacks on the scales shown in the video.

As noted above, the attacks in the film, carried out against several universities, were organized not by terrorists but by unnamed individuals in high positions. We considered showing a massive attack on a city as part of a military campaign, but decided that it would be diplomatically unwise to accuse any particular country of future war crimes. Whoever might want to carry out mass attacks with autonomous weapons, their task would be much harder if arms manufacturers were officially prohibited from producing such weapons.

It is also important to understand that autonomous weapons enable non-state actors to conduct large-scale attacks. Coordinating attacks across several locations becomes no harder, while the scale of each individual attack can grow enormously. Note that Sharr misinterpreted the video on this point. He saw about 50 drones fly out of the van, whereas most of those large drones serve only as carriers for many small, short-range lethal microdrones that are deployed automatically in the final stage of the attack. A single van is enough for one university, so the film implies coordinated attacks in 12 places. That is not much different from coordinating attacks in 10 places, which an article cited by Sharr describes as entirely feasible.

And while a state is in principle capable of attacking with thousands of tanks or aircraft (remotely controlled or otherwise) or tens of thousands of soldiers, non-state actors can reach such scale only by using autonomous weapons. An arms race in this area therefore shifts power from states, which are mostly constrained by international agreements, trade dependencies, and so on, to non-state actors that face no such constraints.

Perhaps this is precisely what Sharr has in mind in the interview quoted earlier, when he says: "We are likely to see more attacks on a larger scale, potentially even larger than this, and in various theaters of action: in the air, on the ground and at sea."

In sum, we, like many other experts, continue to consider it plausible that autonomous weapons could become scalable weapons of mass destruction. Sharr's claims that a ban would be ineffective or counterproductive are inconsistent with the historical record. And finally, the idea that human security will improve if the arms race in autonomous weapons is left unregulated is, at best, wishful thinking.

Stuart Russell is a professor of computer science at the University of California, Berkeley, and co-author of the textbook "Artificial Intelligence: A Modern Approach."

Anthony Aguirre is a professor of physics at the University of California, Santa Cruz, and co-founder of the Future of Life Institute.

Ariel Conn works in media and public relations at the Future of Life Institute.

Max Tegmark is a professor of physics at the Massachusetts Institute of Technology, co-founder of the Future of Life Institute, and author of "Life 3.0: Being Human in the Age of Artificial Intelligence."

Source: https://habr.com/ru/post/371271/