Intel Xeon Scalable: a new take on working with memory

Hi, GT! A lot happened in the server processor market in 2017. Perhaps the most significant event was the launch of the new Intel Xeon Scalable server processors and the accompanying Purley platform. Today we will look at how these products work with memory, how they differ from their predecessors, and which modules are best used with them.

According to IDC, Intel holds more than 90% of the server processor market, but this year AMD introduced its powerful new EPYC processors. The market leader did not leave that unanswered: in the summer it unveiled the Purley platform, which differs from both earlier solutions and competitors' products in its new memory scheme.

Perhaps the most important feature of Purley is its architecture. Intel introduced Xeon Scalable processors with integrated controllers and special optimizations, along with Intel Optane SSDs and Intel Xeon Phi processors. Provided that high-performance DRAM is installed, all of this runs at full speed, opening up new opportunities for "cloud computing, virtualization, next-generation telecommunications networks (5G), machine learning and artificial intelligence."

The Intel Xeon Scalable processors themselves are considerably faster than the previous generation: according to Intel, the gain is about 65%. That figure applies to the top-end Intel Xeon Scalable Platinum processors, which have up to 28 cores per chip (versions with fewer cores are available) running at up to 2.4 GHz. Thanks to new technologies for moving data between processors and between cores, the new chips handle poorly parallelizable workloads better, including cases where you cannot predict which data will be needed next. Let's look at how the new platform works with data.

New memory hierarchy

In addition to a six-channel DDR4 memory controller, Intel Xeon Scalable processors can work directly with Intel Optane SSDs. Connected over PCIe 3.0 and backed by special optimizations, these drives effectively form a new tier of working storage that gives the processors access to a very large memory space. Each processor supports up to 48 PCIe lanes, which leaves room for a fairly large number of additional Intel Optane drives. PCIe 3.0 runs at 8 gigatransfers per second per lane (about 32 Gbit/s for a four-lane link), while each Optane drive delivers about 2 GB/s.
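As a rough check of the PCIe figures, the sketch below estimates the usable bandwidth of a PCIe 3.0 link from the 8 GT/s per-lane rate and the 128b/130b line encoding that PCIe 3.0 uses. The four-lane link width per drive is an assumption (it is typical for NVMe SSDs but is not stated in the article), and the function name is purely illustrative.

```python
def pcie3_bandwidth_gbit(lanes: int) -> float:
    """Usable one-direction bandwidth of a PCIe 3.0 link, in Gbit/s."""
    gt_per_lane = 8.0        # raw transfer rate per lane, GT/s
    encoding = 128 / 130     # 128b/130b encoding: 128 payload bits per 130 sent
    return lanes * gt_per_lane * encoding


LANES_PER_DRIVE = 4          # assumption: each drive uses an x4 link
CPU_LANES = 48               # PCIe lanes per processor, from the article

x4 = pcie3_bandwidth_gbit(LANES_PER_DRIVE)
print(f"x4 link: {x4:.1f} Gbit/s, i.e. {x4 / 8:.2f} GB/s")
print(f"x4 drives that fit on one processor: {CPU_LANES // LANES_PER_DRIVE}")
```

Under these assumptions a single x4 link has roughly twice the headroom needed for a drive that streams about 2 GB/s, so the bus is not the bottleneck.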

According to Intel, installing six Intel Optane drives and using Intel SPDK can cut response time by up to 40 times, raise IOPS (I/O operations per second) by up to 5.2 times, and reduce latency by 3.3 times compared with traditional drives. The gains come from faster data access and tiered placement of data across different drives.

How much memory does the system get? Let's calculate: each Intel Xeon Scalable processor supports six memory channels with two modules each, so you can install 12 × 128 GB = 1.5 TB of RAM. Adding six 512 GB SSDs (3 TB) gives 1.5 + 3 = 4.5 TB of high-speed memory for EACH processor. On top of that, Intel Memory Drive Technology (MDT) lets you create a software-defined memory pool for each server. A special driver loads before the OS and combines all the RAM and the drives into a single two-tier store. As a result, the operating system sees a ready-made memory pool with data automatically distributed between “fast” and “slow” segments.
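The capacity arithmetic above can be reproduced with a few lines of Python. This is only a sketch of the calculation from the text; the constant and function names are illustrative, and the two-socket total at the end is an extrapolation for a hypothetical dual-processor server.

```python
CHANNELS = 6             # memory channels per processor
DIMMS_PER_CHANNEL = 2    # modules per channel
DIMM_GB = 128            # capacity of one module, GB
OPTANE_DRIVES = 6        # Optane SSDs per processor
OPTANE_GB = 512          # capacity of one SSD, GB


def tb(gigabytes: float) -> float:
    """Convert GB to TB using 1 TB = 1024 GB, as the article does."""
    return gigabytes / 1024


dram_gb = CHANNELS * DIMMS_PER_CHANNEL * DIMM_GB
optane_gb = OPTANE_DRIVES * OPTANE_GB
per_cpu_tb = tb(dram_gb + optane_gb)

print(f"DRAM per processor:   {tb(dram_gb)} TB")
print(f"Optane per processor: {tb(optane_gb)} TB")
print(f"Two-tier total:       {per_cpu_tb} TB")
print(f"Hypothetical 2-socket server: {2 * per_cpu_tb} TB")
```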

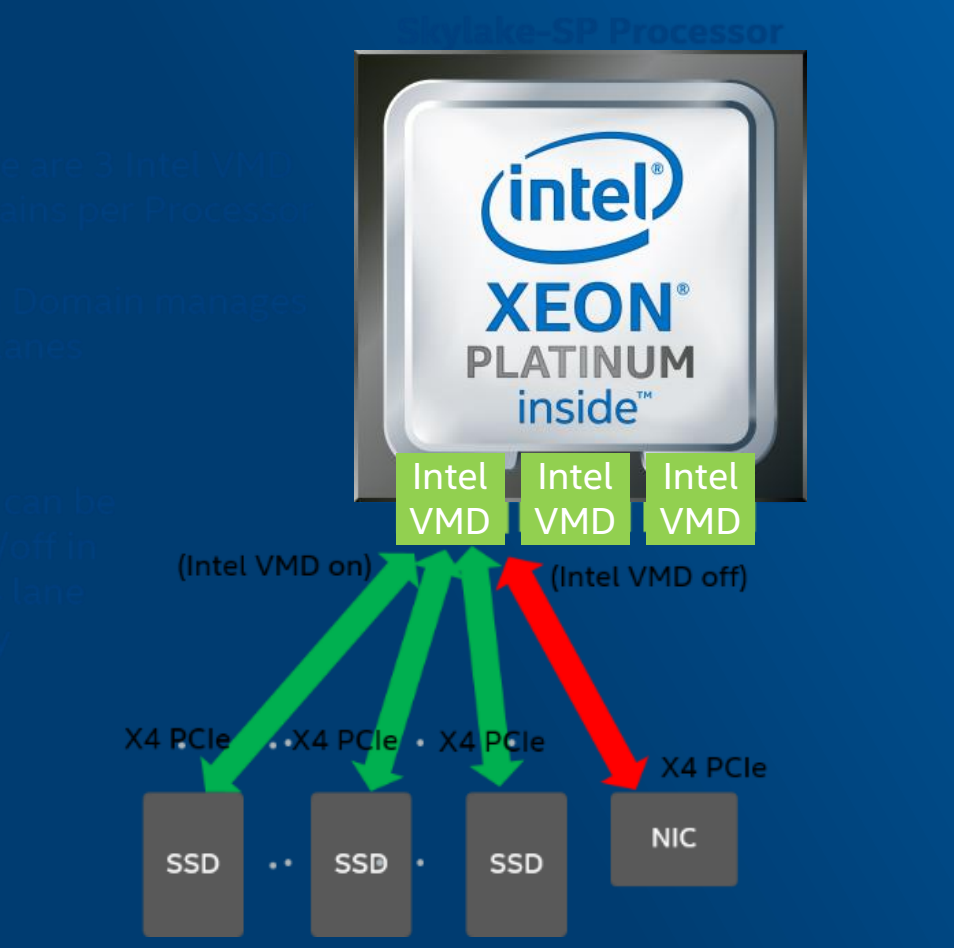

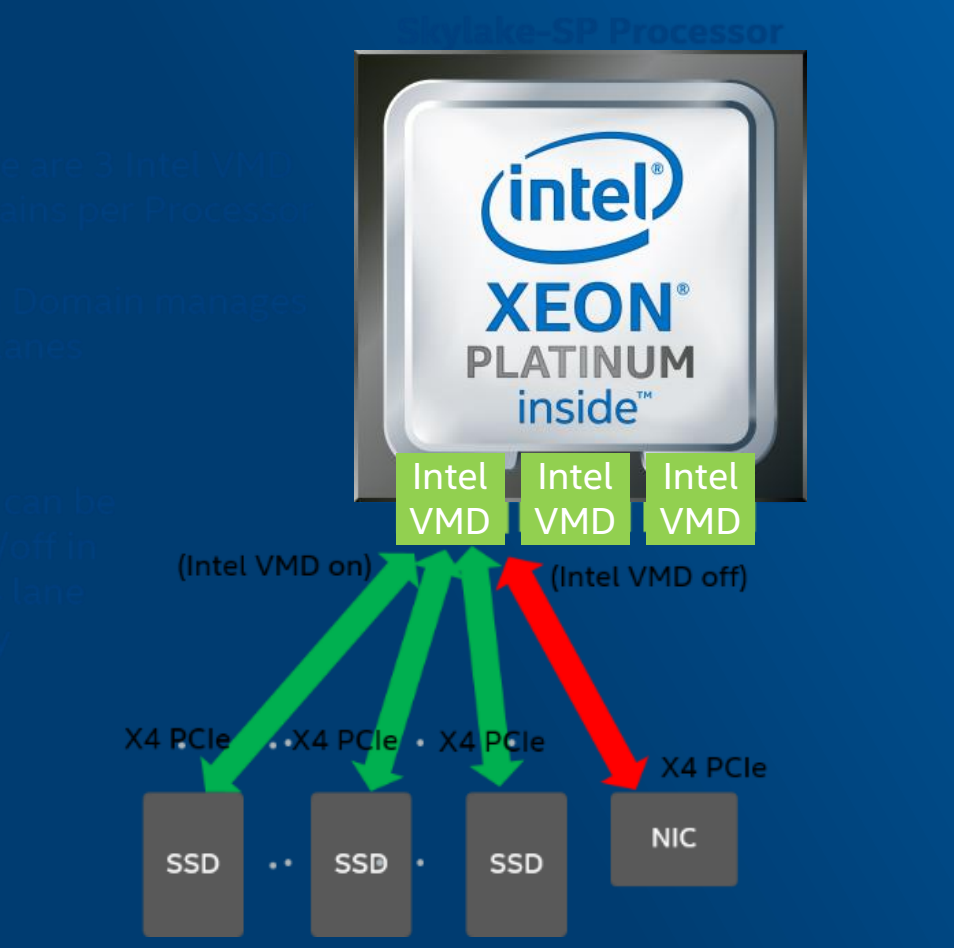

This is a truly impressive result, especially since each server can also hold a good number of high-capacity but slower disks for static storage of data sets. For example, ten 2 TB SATA drives add 20 TB of “slow” storage, and if you need more speed you can choose SSDs instead. Intel Xeon Scalable processors have a built-in VMD (Volume Management Device) module that builds RAID arrays from PCIe- and SATA-connected drives on its own, supports hot swapping of failed components, and interacts directly with the network controller to speed up data handling across the whole computing cluster.

Cache and special memory access

Now let's go back to the processor itself. The Skylake architecture changes the structure of the cache. The L1 cache sits inside the core, and next to each core there is a 768 KB extension to the L2 cache, which brings L2 to 1 MB. The L3 cache, from which every core can fetch data directly, is located in a separate layer of the die and totals about 39 MB, that is, 1.375 MB per core (28 × 1.375 MB = 38.5 MB). This cache is non-inclusive: data comes from memory straight into L2, and lines that are no longer needed or are shared by several cores are pushed out into L3.
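The cache sizes quoted here are easy to verify. In the sketch below, the 256 KB base L2 size is an assumption inferred from the text (1 MB minus the 768 KB extension); the other numbers come straight from the paragraph above.

```python
CORES = 28               # top-end Platinum part
L2_BASE_KB = 256         # assumption: inferred as 1024 KB - 768 KB
L2_EXTENSION_KB = 768    # per-core L2 extension from the article
L3_PER_CORE_MB = 1.375   # L3 share per core from the article

l2_per_core_kb = L2_BASE_KB + L2_EXTENSION_KB
l2_total_mb = CORES * l2_per_core_kb / 1024
l3_total_mb = CORES * L3_PER_CORE_MB

print(f"L2 per core: {l2_per_core_kb} KB")
print(f"L2 total:    {l2_total_mb} MB")
print(f"L3 total:    {l3_total_mb} MB (rounded to 39 MB in the text)")

# Because L3 is non-inclusive, a line does not have to be duplicated in both
# levels, so the combined capacity can approach the sum of L2 and L3.
print(f"L2 + L3 upper bound: {l2_total_mb + l3_total_mb} MB")
```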

As the diagram above shows, the cores communicate not over a ring bus, as in the previous generation of processors, but over a mesh architecture. The mesh speeds up data exchange and noticeably improves performance under the heavy loads typical of virtualization and complex analytics, especially when the cores' memory demands are almost impossible to predict.
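To get a feel for why a mesh helps as core counts grow, here is a deliberately simplified model that compares the average number of hops between two nodes on a single bidirectional ring and on a 2D grid. It is not a model of the real silicon: the 4 × 7 grid is a hypothetical layout chosen only because it has 28 nodes, and real ring-based chips used more than one ring.

```python
from itertools import product


def ring_avg_hops(n: int) -> float:
    """Average shortest-path hops between distinct nodes on a bidirectional ring."""
    total = sum(min(d, n - d) for d in range(1, n))
    return total / (n - 1)


def mesh_avg_hops(rows: int, cols: int) -> float:
    """Average Manhattan distance between distinct nodes of a rows x cols grid."""
    nodes = list(product(range(rows), range(cols)))
    total = sum(
        abs(r1 - r2) + abs(c1 - c2)
        for (r1, c1) in nodes
        for (r2, c2) in nodes
    )
    ordered_pairs = len(nodes) * (len(nodes) - 1)
    return total / ordered_pairs


# 28 nodes in both cases; the grid shape is hypothetical.
print(f"ring of 28 nodes: {ring_avg_hops(28):.2f} hops on average")
print(f"4 x 7 mesh:       {mesh_avg_hops(4, 7):.2f} hops on average")
```

In this toy model the mesh roughly halves the average distance, and the gap widens as more nodes are added, which matches the intuition behind the move away from the ring.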

Data exchange outside the chip has been sped up as well. Processors in a multi-socket server are linked by Ultra Path Interconnect (UPI), and the Omni-Path fabric provides fast communication between cluster nodes. Remote Direct Memory Access (RDMA) lets you reach “foreign” memory cells directly, bypassing the OS. As a result, the cores can work with data that resides in the memory of another processor or even another node of the computing cluster.

And once again, it all comes down to memory!

The cache hierarchy, together with technologies for accessing data held in the RAM of other processors, including over the network, makes a large and accessible memory space one of the main advantages of the new Intel platform. And while traditional SATA drives can be hot-swapped, RAM has to be chosen for maximum reliability and stability from the start. In cloud data centers and heavy analytics systems RAM plays a key role, and Kingston already has an offering designed specifically for the new processors.

The Purley platform accepts registered modules (RDIMM), load-reduced modules (LRDIMM), or 3DS LRDIMM for better energy efficiency. With the arrival of the new Intel and AMD platforms, Kingston has certified its memory modules for these server platforms.

By the way, note that the Kingston server memory line now carries the KSM (Kingston Server Memory) label rather than KVR, KCP, KTH, KTD, KTL, KCS or anything else. So far this applies to 2666 MHz modules, but all new Kingston server memory modules will be labeled KSM, including faster ones planned for release in 2018. For Xeon Scalable this does not matter yet: the integrated memory controller of the top-end Intel Xeon Scalable processors runs at 2666 MHz, so Purley simply has no use for faster memory. And real-world workloads do not always call for the most expensive chips. In most cases you can get by with Gold 51xx, Silver 41xx and Bronze 31xx processors based on the same architecture, which support memory speeds of 2400 MHz and 2133 MHz.

As you can see, a sensible approach lets you save on memory, and Kingston, of course, offers products at every speed in the scheme above. All you need to do is define the range of tasks the server will handle and install memory that matches the capabilities of the processor. For example, for the Bronze 31xx series there is no point in buying even DDR4-2400, since the processor will not make use of it.
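The rule of thumb above, that memory runs no faster than the processor allows, can be sketched as follows. The family-to-speed table is an assumption pieced together from the text (2666 for the top-end parts, 2133 for Bronze 31xx as implied by the DDR4-2400 remark, 2400 for the rest), and the "Platinum 81xx" label is used here only as a stand-in for the top-end series; always check the specification of the exact model. The peak-bandwidth formula is the standard theoretical estimate for DDR4: channels × transfer rate × 8 bytes per transfer.

```python
# Maximum memory speed per family in MT/s (assumed mapping, see the note above).
MAX_MEM_SPEED = {
    "Platinum 81xx": 2666,
    "Gold 51xx": 2400,
    "Silver 41xx": 2400,
    "Bronze 31xx": 2133,
}
CHANNELS = 6              # memory channels per processor
BYTES_PER_TRANSFER = 8    # each DDR4 channel has a 64-bit data bus


def effective_speed(cpu_family: str, dimm_speed: int) -> int:
    """Memory runs at the slower of the module speed and the CPU limit."""
    return min(MAX_MEM_SPEED[cpu_family], dimm_speed)


def peak_bandwidth_gb_s(speed_mt_s: int) -> float:
    """Theoretical peak memory bandwidth per processor, in GB/s."""
    return CHANNELS * speed_mt_s * BYTES_PER_TRANSFER / 1000


for family in MAX_MEM_SPEED:
    speed = effective_speed(family, dimm_speed=2666)
    print(f"{family}: DDR4-2666 modules run at {speed} MT/s, "
          f"peak {peak_bandwidth_gb_s(speed):.1f} GB/s")
```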

Ordering the new generation of memory, the KSM modules, has become much easier: the differences in labeling are gone. If you have bought Kingston server memory before, you know that we used to have two types of it, Server Premier and ValueRAM. All KSM memory has the Server Premier features, even though the modules are priced below the premium series. In addition, you previously had to check for an “i” suffix (indicating Intel certification) in the part number; now you can forget about it, because the entire KSM series is certified from the start. This makes choosing new modules easier for both system builders and users of server systems.

All KSM modules use a fixed BOM (Bill of Materials). This means that Kingston specialists carefully select the chip manufacturers and admit only the highest-quality components into the series. During production control, engineers test every memory cell and inspect the printed circuit boards themselves. We control everything down to the chip revision and the manufacturer of the register chip. As a result, the KSM series is the most thoroughly controlled Kingston memory line for professional workloads.

All information about a module can now be read from its part number. For example, suppose you read the following number on the module:

It means that the memory chips are made by Hynix (H), the chip revision is A, and the register chip is made by IDT (I). Register chips can come from different companies: in addition to IDT (I), components from Rambus (formerly the Inphi memory interconnect business) and Montage (M) are also used.
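The three letters described above can be decoded mechanically. The sketch below is an illustration only: it handles just the three-letter suffix, the string "HAI" is assembled from the letters mentioned in the text rather than copied from a real label, and the lookup tables contain only the codes the article names. Anything else is reported as unknown instead of being guessed.

```python
# Only the codes mentioned in the article; real labels may use others.
DRAM_VENDORS = {"H": "Hynix"}
REGISTER_VENDORS = {"I": "IDT", "M": "Montage"}


def decode_suffix(suffix: str) -> dict:
    """Decode a 3-letter suffix: DRAM vendor, chip revision, register vendor."""
    if len(suffix) != 3:
        raise ValueError("expected exactly three characters")
    dram, revision, register = suffix.upper()
    return {
        "dram_vendor": DRAM_VENDORS.get(dram, "unknown"),
        "chip_revision": revision,
        "register_vendor": REGISTER_VENDORS.get(register, "unknown"),
    }


# Hypothetical suffix built from the letters in the text.
print(decode_suffix("HAI"))
```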

Overall, more transparent labeling not only reflects Kingston’s more holistic approach to releasing server memory, but also helps with module upgrades, since every parameter is under control, down to the chip manufacturer. This helps avoid conflicts or performance loss caused by incomplete compatibility, and makes it possible to buy the same modules for several types of servers, simplifying logistics and maintenance.

Conclusion

Let's sum up. To get the most out of the new platform, you need to select all the components carefully and use all the Intel optimization tools that help you exploit the new processors and the Purley platform as a whole. Leading hosting companies already rely on Kingston server memory, and if you install the fastest and most reliable modules, the move to Intel Xeon Scalable will deliver the best possible results on demanding workloads, from virtualization to analytics and modeling.



Source: https://habr.com/ru/post/371147/
