StarCraft AI Competition History

Introduction

Since the first StarCraft AI Competition was held in 2010, artificial intelligence for real-time strategy (RTS) games has become an increasingly popular research topic. Participants in these competitions submit StarCraft AI bots that play the standard, unmodified version of StarCraft: Brood War. These competitions were inspired by earlier ones such as Open RTS (ORTS), and they showcase the current state of the art in RTS game AI. StarCraft AI bots are controlled through the Brood War Application Programming Interface (BWAPI), which was developed in 2009 as a way to interact with and control StarCraft: Brood War from C++. As BWAPI grew in functionality and popularity, the first StarCraft AI bots (agents) began to appear, and it became possible to organize a real StarCraft AI competition. This article describes each major StarCraft AI competition in detail, along with the development of UAlbertaBot, our bot that took part in them. Note that I have organized the AIIDE competition since 2011, so I naturally have more information about it than about the others. Each competition is reviewed in chronological order, with full results and links to download the bots' source code and the AIIDE and CIG replay files.

The report on the 2015 AIIDE StarCraft AI Competition can be read here.

"Why not StarCraft 2?"

I am asked this question constantly whenever I mention that we organize StarCraft: Brood War AI competitions. These competitions use only BWAPI as the programming interface to Brood War. BWAPI was created by reverse engineering Brood War, and it reads from and writes to the game's memory space to read game data and send commands. Since any program that does this is considered a hack or cheat engine, Blizzard has told us that it will not allow anything similar for StarCraft 2. In fact, most versions of the StarCraft 2 end-user license agreement (EULA) explicitly prohibit modifying the program. We are grateful that Blizzard has allowed us to run tournaments using BWAPI, and it has even helped by providing prizes for the AIIDE tournament, but because of a change in its policies we cannot do the same for StarCraft 2.


Other RTS game engines can also be used for competitions. One is ORTS, a free RTS engine that hosted competitions until 2010, when BWAPI was released and the first AIIDE StarCraft AI Competition was held. Another is microRTS, a Java engine that plays a simplified, grid-based RTS game and was designed specifically for testing AI techniques.

AI Techniques for RTS

I highly recommend the excellent surveys of the current state of AI techniques for StarCraft, and the descriptions of bot architectures, in the following articles:

- StarCraft Bots and Competitions [2016]
  D. Churchill, M. Preuss, F. Richoux, G. Synnaeve, A. Uriarte, S. Ontanon, and M. Certicky
  Springer Encyclopedia of Computer Graphics and Games

- RTS AI Problems and Techniques [2015]
  S. Ontanon, G. Synnaeve, A. Uriarte, F. Richoux, D. Churchill, and M. Preuss
  Springer Encyclopedia of Computer Graphics and Games

- A Survey of the Real-Time Strategy Game
  S. Ontanon, G. Synnaeve, A. Uriarte, F. Richoux, D. Churchill, and M. Preuss
  Accepted to TCIAIG (August 2013)

Thanks

RTS AI research and competitions take a huge amount of work from many people, so I want to thank everyone who has helped organize current and past competitions, developed bots, and generally advanced AI for RTS games. First, I thank the RTS AI Research Group at the University of Alberta (UofA), of which I am a member. The university has studied AI for RTS games since Michael Buro published his motivating article in 2003. UofA ran the ORTS AI Competition from 2006 to 2009, and since 2011 it has held the AIIDE StarCraft AI Competition every year. I want to personally thank the former and current members of the RTS AI research group for their many years of promoting, organizing, and running these competitions, and for continuing to do world-class research in this area. In the photo below, from left to right: Nicolas Barriga, David Churchill, Marius Stanescu, and Michael Buro. Not pictured are former group members Tim Furtak, Sterling Oersten, Graham Erickson, Doug Schneider, Jason Lorenz, and Abdallah Saffidine.

I also want to thank everyone who organized and ran current and past StarCraft AI competitions. Thanks to Ben Weber for organizing the first AIIDE StarCraft AI Competition, which sparked international interest in RTS AI research. Every year Michal Certicky puts great effort into running and supporting the Student StarCraft AI Tournament, along with its persistent bot ladder and live video stream of bot games. He has also done a great deal to promote RTS AI as a field. The organizers of the CIG StarCraft AI Competition are Johan Hagelback, Mike Preuss, Ben Weber, Tobias Mahlmann, Kyung-Joong Kim, Ho-Chul Cho, In-Seok Oh, and Man-Je Kim. I also thank Krasimir Krastev (krasi0) for maintaining the original StarCraft AI Bot Ladder. Many thanks as well to Santi Ontanon for developing the microRTS AI system. Special thanks go to Adam Heinermann for his continued development of BWAPI; without it, none of this research would have been possible.

StarCraft AI Competitions

AIIDE 2010

[Translator's note: the tables in the original article are generated with JavaScript, so reproducing them in this translation would be too time-consuming. If you need the working links in the tables, they are available in the original.]

The AIIDE StarCraft AI Competition was first held in 2010 by Ben Weber at the Expressive Intelligence Studio of the University of California, Santa Cruz, as part of the AIIDE (Artificial Intelligence and Interactive Digital Entertainment) conference. In total, 26 entrants competed in four tournament categories, ranging from simple battles to full StarCraft games. Because this was the competition's first year and the infrastructure was not yet developed, each tournament game was launched by hand on two laptops, and the results were also recorded by hand. In addition, bots could not save information between matches to learn more about their opponents. The first tournament was a unit micromanagement battle on flat terrain, consisting of four separate games with different combinations of units. Of the six entrants, FreSCBot won and Sherbrooke finished second. The second tournament was also unit micromanagement, but on complex terrain. There were two entrants in this category, and FreSCBot won again, beating Sherbrooke. The third tournament was a tech-limited StarCraft game on a single known map with the fog of war disabled. Players could only choose the Protoss race, and advanced units were prohibited. Eight bots took part in this double-elimination tournament. In the final, MimicBot took first place by defeating Botnik. Since this was a perfect-information StarCraft variant, MimicBot's strategy was to "copy the opponent's build order and gain an economic advantage where possible", and it worked quite well.

The fourth tournament was the main event, in which bots played full StarCraft: Brood War with the fog of war enabled. It used a double-elimination format with random pairings, and each match was decided by a best-of-five series. Bots could play any of the three races, and the only prohibited actions were those considered "cheating" in the StarCraft community. Because programs written with BWAPI have no limit on the number of commands they can send to the StarCraft engine, some actions the game's developers never intended became possible, such as sliding buildings or walking ground units through walls. Such actions were considered cheating and were banned. A pool of five well-known professional maps was announced to entrants in advance, and one of them was chosen at random for each game. The fourth tournament was won by Overmind, a Zerg bot created by a large team from the University of California, Berkeley. In the final, Overmind defeated krasi0, the Terran bot written by Krasimir Krastev.

Overmind made heavy use of the powerful and agile Zerg flying unit, the Mutalisk, which it controlled very effectively using potential fields. Overall, Overmind's strategy was to open with a defense of Zerglings and Sunken Colonies (static defense towers) to protect its early development while it gathered resources for its first Mutalisks. Once built, the Mutalisks were sent to the enemy base, where they patrolled and attacked its perimeter. If the first direct attack did not win the game, the bot slowly patrolled and picked off any undefended units, gradually wearing the enemy down until it could deliver the final blow. The runner-up, krasi0, used a defensive Terran strategy. It built Bunkers, Siege Tanks, and Missile Turrets for defense, and after building a certain number of units it sent an army of mechanical units to the enemy base. This bot worked quite well and lost only to Overmind in the competition. In 2011, Ars Technica published an excellent article about Overmind. There was also a human-versus-machine match between a professional player and the top AI. You can watch it here:
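
The paragraph above names potential fields without describing them. As a rough illustration of the general technique (not Overmind's actual code, whose field shapes and weights are not given here), a unit can add up an attractive vector toward its target and repulsive vectors away from nearby threats, then move along the resulting direction. The `Vec` class, `potential_force`, `step`, the linear falloff, and the default weights are all hypothetical choices made for this sketch.

```python
import math
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Vec:
    x: float
    y: float

    def __add__(self, other: "Vec") -> "Vec":
        return Vec(self.x + other.x, self.y + other.y)

    def __sub__(self, other: "Vec") -> "Vec":
        return Vec(self.x - other.x, self.y - other.y)

    def scale(self, k: float) -> "Vec":
        return Vec(self.x * k, self.y * k)

    def length(self) -> float:
        return math.hypot(self.x, self.y)

    def unit(self) -> "Vec":
        n = self.length()
        return Vec(0.0, 0.0) if n == 0 else self.scale(1.0 / n)


def potential_force(pos: Vec, target: Vec, threats: Iterable[Vec],
                    threat_range: float, attract: float = 1.0,
                    repel: float = 2.0) -> Vec:
    """Attractive pull toward the target plus a repulsive push away from each
    threat closer than threat_range (hypothetical weights and falloff)."""
    force = (target - pos).unit().scale(attract)
    for t in threats:
        away = pos - t
        d = away.length()
        if 0 < d < threat_range:
            # Repulsion grows linearly the deeper the unit is inside the range.
            force = force + away.unit().scale(repel * (threat_range - d) / threat_range)
    return force


def step(pos: Vec, force: Vec, speed: float) -> Vec:
    """Move `speed` distance units along the direction of the net force."""
    return pos + force.unit().scale(speed)
```

When a threat sits close to the unit on the way to its target, the repulsion outweighs the attraction and the net force points back away from the target. Alternating between attracting and repelling fields like this is one simple way to get hit-and-run behavior.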

The first version of UAlbertaBot was written in the summer of 2010 and entered in the AIIDE 2010 competition in September. A group of six students, led by me under the supervision of Michael Buro at the University of Alberta, built the original UAlbertaBot using BWAPI and the Brood War Standard Add-on Library (BWSAL). BWSAL provided features such as simple build-order queues, building placement, and worker management. The 2010 version of UAlbertaBot played Zerg and used a single main strategy built around the Zerg flying unit, the Mutalisk. Although the bot's combat and micromanagement were implemented well, serious logic errors in the early game and in build-order planning led to poor results in the 2010 competition. It was knocked out in the third round of the bracket by the Terran bot krasi0. This first implementation of UAlbertaBot suffered from technical problems, so after the competition we decided to rewrite the bot completely for the following year.

CIG 2010

After the success of AIIDE 2010, an attempt was made to hold a StarCraft AI competition at the Computational Intelligence and Games (CIG) conference. The CIG 2010 competition, organized by Johan Hagelback, Mike Preuss, and Ben Weber, used a single game mode similar to the tech-limited third tournament of AIIDE 2010, except that bots played Terran instead of Protoss. Unfortunately, the first year of the CIG competition suffered from serious technical problems. The organizers decided to use custom-made StarCraft maps instead of the traditional ones, and these maps caused critical errors in the Brood War Terrain Analysis (BWTA) library used by many bots. Because of these frequent crashes, it was decided that no winner could be determined. UAlbertaBot did not take part in this competition because it was in the middle of a complete rewrite.

AIIDE 2011

In 2011 the AIIDE competition moved to the University of Alberta, where it is still held today. It is organized every year and run by me and Michael Buro. Because the first three tournaments of 2010 drew so few entrants, we decided that from 2011 the AIIDE competition would consist only of full StarCraft games (with the same rules as the fourth tournament of 2010), and we dropped the smaller micromanagement tournaments. The rules were also changed: all entrants had to submit their bots' source code and allow it to be published after the competition. There were several reasons for this. First, it lowers the barrier to entry for future competitions: writing a StarCraft AI bot takes a lot of time, so future entrants can save time by downloading and modifying the source code of older bots. Second, it makes cheating easier to guard against: the tournament now plays thousands of games, so we cannot watch every one for cheating tactics, but such tactics are much easier to detect by examining the source code (heavy code obfuscation is prohibited). Finally, it advances the state of the art in StarCraft AI, since bot developers can borrow strategies and techniques from past bots by studying their code. Ideally, every bot in a new competition should be at least as strong as the previous year's bots. Thirteen bots took part in the 2011 competition.

The 2010 tournaments were run by Ben Weber on two laptops, with StarCraft launched and every game created by hand. This took considerable physical effort: a single double-elimination tournament with random pairings required about 60 games. The format also drew criticism, because its outcome depended heavily on the luck of the pairings. For 2011, we therefore decided to remove luck from the tournament and switched to a round-robin format. A round robin requires far more games, so in the summer of 2011 Jason Lorenz (an undergraduate summer student of Michael Buro) and I wrote software that automatically scheduled and ran round-robin StarCraft tournaments on any number of computers connected to a local network. The software used a client-server architecture: a single server created the game schedule and stored the results, and several clients ran StarCraft together with software that monitored each game and recorded its result when it finished. Bot files, game replays, and final results were available to all clients through a Windows shared folder on the local network. The initial version of the software played 2340 games in the same time it had taken to play 60 games in the 2010 competition. Each bot played thirty games against each opponent. The competition used 10 maps selected from professional tournaments and balanced for all races, which could be downloaded from the competition website in advance. The AIIDE competition was modeled on human tournaments, in which the map pool and opponents are known in advance so that players can prepare and anticipate their opponents' play.
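
The scheduling side of this software can be sketched as follows. This is a minimal Python illustration, not the actual tournament manager. The function name `round_robin_schedule` and the rule that the map changes from one round to the next are assumptions. With 13 bots and 30 games per pair, it produces 78 pairs times 30 games, which gives the 2340 games mentioned above.

```python
from itertools import combinations
from typing import List, Sequence, Tuple

Game = Tuple[int, str, str, str]  # (round index, bot A, bot B, map)


def round_robin_schedule(bots: Sequence[str], maps: Sequence[str],
                         games_per_pair: int) -> List[Game]:
    """Every round plays each pair of bots once; the map rotates per round.

    Rotating the map once per round is an assumption for this sketch."""
    pairs = list(combinations(bots, 2))
    schedule: List[Game] = []
    for rnd in range(games_per_pair):
        game_map = maps[rnd % len(maps)]
        for a, b in pairs:
            schedule.append((rnd, a, b, game_map))
    return schedule
```

In the client-server design described above, the server would hand entries from this list to idle clients and store the result each client reports. That dispatch loop is omitted here.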

At the end of the five-day competition, Skynet took first place, UAlbertaBot second, and Aiur third. Skynet is a Protoss bot written by Andrew Smith, a software developer from the UK. It used many solid Protoss strategies: a Zealot rush, a Dragoon/Zealot army in the midgame, and an army of Zealots, Dragoons, and Reavers in the late game. Its solid economy and strong early defense let it hold off the far more aggressive Protoss bots UAlbertaBot and Aiur. UAlbertaBot, which by then played Protoss, is described in detail below. Aiur was written by Florian Richoux, a graduate student at the University of Nantes. It also played Protoss and used several different strategies, such as a Zealot rush and an army of Zealots and Dragoons. An interesting rock-paper-scissors relationship emerged among the top three: Skynet beat UAlbertaBot in 26 of 30 games, UAlbertaBot beat Aiur in 29 of 30 games, and Aiur beat Skynet in 19 of 30 games.

Overmind, the winner of AIIDE 2010, did not take part in 2011 because it had proven highly vulnerable to early-game aggression and was easily beaten by bots of all three races. The Overmind team also said it did not want to release the bot's source code, so it could not enter the 2011 competition. Instead, a team of Berkeley undergraduates entered the Terran bot Undermind, which placed seventh.

UAlbertaBot was completely rewritten in 2011 by me and Sterling Oersten (a student of Michael Buro), and it switched from Zerg to Protoss. The main reason for the switch was that we found Protoss strategies much simpler to implement technically and much more stable in testing. Zerg strategies depend heavily on smart building placement, a problem we had not studied well. Because Zerg are rather weak defensively in the early game, their buildings must be placed so that they form a "maze" in front of the base that slows the enemy's advance toward the workers. Protoss has no such problem, and its early defense is strong enough thanks to its powerful units, Zealots and Dragoons. Another reason for the switch was the addition of a search-based build-order planning system, which was designed around the simpler Protoss building structure and therefore did not work for Zerg or Terran. This build-order planner was designed to produce a given number of each unit type. It could automatically plan time-optimal build orders in real time, and it gave much better results than the priority-based ordering of the BWSAL system used in the 2010 version of UAlbertaBot. The new build-order planner added to UAlbertaBot was the first version of a comprehensive search-based approach that is now used by all bots in StarCraft AI competitions. This new version of UAlbertaBot used a very aggressive early Zealot rush, which overwhelmed opponents and won many games in just a few minutes. If the initial Zealot rush did not kill the opponent, the bot transitioned to producing ranged units, Dragoons, for the mid and late game.
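
Searching for a time-optimal build order can be illustrated with a depth-first branch-and-bound search over action sequences. This is one common way to do such a search, not a description of UAlbertaBot's actual planner. The economy below is a deliberately toy model: the costs, the per-worker income rate, the one-action-at-a-time rule, and the lack of build times are hypothetical simplifications rather than real Brood War values. `State`, `apply`, and `plan_build` are illustrative names.

```python
from dataclasses import dataclass, replace
from typing import List, Optional, Tuple

# Hypothetical toy economy, NOT real Brood War values: income is continuous,
# actions are issued one at a time, and build times are ignored.
COSTS = {"worker": 50, "gateway": 150, "zealot": 100}
INCOME_PER_WORKER = 1.0  # minerals per second per worker (assumed)


@dataclass(frozen=True)
class State:
    time: float
    minerals: float
    workers: int
    gateways: int
    zealots: int


def apply(s: State, action: str) -> Optional[State]:
    """Wait until `action` is affordable, then pay for it; None if impossible."""
    if action == "zealot" and s.gateways == 0:
        return None  # zealots need a gateway first
    cost = COSTS[action]
    rate = s.workers * INCOME_PER_WORKER
    if s.minerals >= cost:
        wait = 0.0
    elif rate > 0:
        wait = (cost - s.minerals) / rate
    else:
        return None  # no income, so the action can never be afforded
    s = replace(s, time=s.time + wait, minerals=s.minerals + wait * rate - cost)
    if action == "worker":
        return replace(s, workers=s.workers + 1)
    if action == "gateway":
        return replace(s, gateways=s.gateways + 1)
    return replace(s, zealots=s.zealots + 1)


def _dfs(s: State, goal: int, max_workers: int, max_gateways: int,
         plan: List[str], best: list) -> None:
    """Depth-first branch and bound; best = [time, plan] is the incumbent."""
    if s.time >= best[0]:
        return  # prune: time never decreases, so this branch cannot do better
    if s.zealots >= goal:
        best[0], best[1] = s.time, list(plan)
        return
    for action in ("zealot", "gateway", "worker"):
        if action == "worker" and s.workers >= max_workers:
            continue
        if action == "gateway" and s.gateways >= max_gateways:
            continue
        nxt = apply(s, action)
        if nxt is not None:
            plan.append(action)
            _dfs(nxt, goal, max_workers, max_gateways, plan, best)
            plan.pop()


def plan_build(start: State, goal_zealots: int, max_workers: int = 8,
               max_gateways: int = 1) -> Tuple[float, List[str]]:
    """Return (finish time, action list) of the fastest plan found."""
    best: list = [float("inf"), []]
    _dfs(start, goal_zealots, max_workers, max_gateways, [], best)
    return best[0], best[1]
```

Starting from `State(0.0, 50.0, 4, 0, 0)` with a goal of three Zealots, the search finds a plan that finishes earlier than the naive "gateway, then Zealots" order because it invests in extra workers first. A fixed priority list cannot make that kind of trade-off.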

UAlbertaBot performed quite well and finished second. Only Skynet and Undermind managed to hold their own against it. Skynet stopped UAlbertaBot's early rushes with impressive early-game Dragoon control, which let a single Dragoon kill several Zealots. Undermind's answer was to build several Terran Bunkers as an early defense against UAlbertaBot's aggressive rush.

CIG 2011

The 2011 CIG competition was organized by Tobias Mahlmann of the IT University of Copenhagen and Mike Preuss of TU Dortmund. Learning from the previous year, this time CIG used a standard set of five "human" tournament maps, but unlike AIIDE, the maps were not announced in advance. The competition used the same rules as AIIDE 2011: full StarCraft games with the fog of war enabled and cheating prohibited. The two most important rule differences were that CIG did not require bots' source code to be released, and that the map pool was not known in advance. Because AIIDE and CIG both took place in August (due to the conference schedules) and often attracted the same bots, the CIG organizers changed the rules slightly by hiding the map pool, hoping this would lead to more interesting results. Ten bots took part, and since the CIG organizers had no automation software, games were launched by hand on just a few computers. As a result, instead of a single round robin as at AIIDE, the competition was split into two groups of five bots, with ten games per pair within each group. After the group stage, the top two bots from each group advanced to a final group, where again ten games were played per pair. Even though UAlbertaBot beat Skynet in the group stage, Skynet took first place in the final, UAlbertaBot finished a narrow second, Xelnaga third, and BroodwarBotQ fourth.

Because there were only two weeks between the two competitions, neither Skynet nor UAlbertaBot changed significantly for CIG. The only change to UAlbertaBot was the removal of hand-coded information about the AIIDE maps and building locations on them, which was replaced with algorithmic solutions.

SSCAIT 2011 (detailed results)

The first Student StarCraft AI Tournament (SSCAIT) was held in the winter of 2011 and organized by Michal Certicky of Comenius University in Bratislava. The tournament was conceived as part of the "Introduction to AI" course that Michal taught at the university, in which every student had to write a bot for the competition. Because the course was large, 50 entrants took part, all of them students. Specialized software written by Michal was used to schedule and run the tournament games automatically. The 50 entrants were divided into ten groups of five for the group stage, and 16 finalists advanced to a double-elimination stage. The winner of the final was Roman Danielis, a student at Comenius University. Little is known about the bots and strategies in this competition, since it received little attention outside the university, and UAlbertaBot did not take part in it.

AIIDE 2012

AIIDE 2012 was again held at the University of Alberta, with one major change: persistent file storage allowed bots to learn over the course of the whole competition. The tournament management software was improved so that each bot had access to its own read and write folders inside a shared folder accessible to all client machines. During each round, bots could read from the read folder and write to the write folder. At the end of each round-robin cycle (once every pair of bots had played one game on one map), the contents of the write folder were copied to the read folder, giving access to all information recorded in previous rounds. This copying scheme ensured that no bot could profit from information written during the current round simply because of its position in the schedule. Ten bots took part in 2012, and by the end of the fifth day 8279 games had been played, 184 for each pair of bots. The final results were almost the same as in 2011: Skynet first, Aiur second, and UAlbertaBot third. Aiur improved by introducing a new strategy nicknamed "cheese": early rushes with Photon Cannons, for which the other bots were not prepared.
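
The read/write folder scheme described above can be sketched in a few lines of Python. The directory layout (`<root>/<bot>/read` and `<root>/<bot>/write`) and the function names are hypothetical. The only point of the sketch is that copying happens once per completed round, so a bot cannot see data written earlier in the same round.

```python
import shutil
from pathlib import Path
from typing import Iterable, Tuple


def bot_dirs(root: Path, bot: str) -> Tuple[Path, Path]:
    """Hypothetical layout: <root>/<bot>/read and <root>/<bot>/write."""
    read_dir, write_dir = root / bot / "read", root / bot / "write"
    read_dir.mkdir(parents=True, exist_ok=True)
    write_dir.mkdir(parents=True, exist_ok=True)
    return read_dir, write_dir


def end_of_round_sync(root: Path, bots: Iterable[str]) -> None:
    """Copy every bot's write folder over its read folder.

    Called only after a full round-robin cycle, so files written mid-round
    stay invisible to games later in the same round."""
    for bot in bots:
        read_dir, write_dir = bot_dirs(root, bot)
        # dirs_exist_ok (Python 3.8+) merges into the existing read folder.
        shutil.copytree(write_dir, read_dir, dirs_exist_ok=True)
```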

The 2012 human-versus-machine matches can be viewed here:

For this competition UAlbertaBot received one major update: the addition of the SparCraft combat simulation package. In the 2011 version, UAlbertaBot simply waited until it had a threshold number of Zealots, then sent them at the enemy base continuously without ever retreating. In the 2012 version, the combat simulation module made it possible to estimate the outcome of a battle. During combat it was used to predict the winner: if our victory was predicted, the bot kept attacking, and if the enemy's victory was predicted, the bot retreated to its base. This new tactic proved its strength in practice, but Aiur's early-game defense had also improved significantly since the previous year, when UAlbertaBot had finished second. For 2012, UAlbertaBot also implemented three distinct strategies: the Zealot rush from the 2011 version, a Dragoon rush, and a Dark Templar rush. The bot used persistent file input and output to store match data against specific opponents, and chose a strategy against each opponent using the UCB-1 formula. This learning worked quite well: a 60% win rate at the start of the tournament rose to 68.6% by the end. One of the main reasons Aiur finished ahead of UAlbertaBot was that the Dragoon and Dark Templar rushes were poorly implemented. As a result, the strategy-selection learning eventually settled on using the Zealot rush almost exclusively, and the games spent exploring the other strategies based on past results turned out to be wasted. Had the Zealot rush been chosen in every game, UAlbertaBot would have taken second place.
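
UCB-1 picks the strategy that maximizes its observed win rate plus an exploration bonus, mean_i + sqrt(2 ln N / n_i). Here n_i is the number of times strategy i has been played against this opponent and N is the total number of games against that opponent. The sketch below is a minimal Python version, with a JSON file standing in for the persistent storage. The strategy names, the file format, and the function names are assumptions, not UAlbertaBot's actual code.

```python
import json
import math
from typing import Dict, List, Tuple

Stats = Dict[str, Tuple[int, int]]  # strategy -> (wins, games) vs one opponent


def ucb1_choose(stats: Stats, c: float = math.sqrt(2)) -> str:
    """Play any untried strategy first, otherwise maximize
    win_rate + c * sqrt(ln(total_games) / games_with_this_strategy)."""
    for strategy, (_, games) in stats.items():
        if games == 0:
            return strategy
    total = sum(games for _, games in stats.values())

    def score(item: Tuple[str, Tuple[int, int]]) -> float:
        wins, games = item[1]
        return wins / games + c * math.sqrt(math.log(total) / games)

    return max(stats.items(), key=score)[0]


def record_result(stats: Stats, strategy: str, won: bool) -> None:
    wins, games = stats[strategy]
    stats[strategy] = (wins + int(won), games + 1)


def save_stats(path: str, stats: Stats) -> None:
    with open(path, "w") as f:
        json.dump({k: list(v) for k, v in stats.items()}, f)


def load_stats(path: str, strategies: List[str]) -> Stats:
    """Load persisted results; strategies never seen start at (0, 0)."""
    try:
        with open(path) as f:
            raw = json.load(f)
    except FileNotFoundError:
        raw = {}
    return {s: tuple(raw.get(s, (0, 0))) for s in strategies}
```

If one strategy almost always wins, UCB-1 quickly concentrates on it. However, the exploration term still replays the weaker strategies from time to time, roughly in proportion to the logarithm of the number of games. This is the exploration cost described above.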

CIG 2012

The 2012 CIG competition used the AIIDE tournament management software, so many more games could be played. There were ten entrants, many of which had also taken part in AIIDE. The map pool was a set of six previously unannounced maps, none of which had been used in the previous tournament. A total of 4050 games were played, with each bot facing every other bot 90 times. As at AIIDE, bots could read and write persistent files for learning, but because the network folder structures of the AIIDE and CIG setups differed, this did not work exactly as intended. It is also worth noting that there were three times as many bot crashes as at AIIDE, which revealed technical problems with the AIIDE tournament management software. Skynet won again, UAlbertaBot took second place, Aiur third, and Adjutant fourth. In this competition UAlbertaBot did not use the data file or learning, because this technique had not performed well at AIIDE 2012. Instead it relied on the Zealot rush alone, which allowed the bot to climb to second place.

SSCAIT 2012

A few months later, in December, the second SSCAIT was held, this time publicized much more widely to entrants outside Comenius University. Many bots from Michal Certicky's AI course took part again, and there were 52 entrants in total. The tournament format was a simple round robin in which each bot played one game against every other bot, for 51 games per bot. Points were awarded as 3 for a win and 1 for a draw. After the round robin, the finals were split into two divisions: a student division and a mixed division. To enter the student division, a bot had to be written by a single student. In the student division, first place went to Matej Istenik (Dementor bot) from the University of Zilina (Slovakia), second to Marcin Bartnicki from Gdansk University of Technology, and third to UAlbertaBot. The mixed division was open to all entrants, and the top eight bots played a single-elimination bracket. In the final, IceBot defeated Marcin Bartnicki's bot and won first place. UAlbertaBot used its CIG 2012 version in this competition, because SSCAIT also used a map pool that was not announced in advance.

CIG 2013

In 2013, the CIG competition was held a few weeks before AIIDE. The new tournament management software was being prepared for AIIDE and was not yet ready, so CIG used the 2012 version. This meant that the learning file system again did not work, for the same reasons as the previous year. Because of further technical difficulties in setting up the tournament, only 1000 games were played, about a quarter of the previous year's total. The top three were the same as the year before: Skynet first, UAlbertaBot second, and Aiur third. Xelnaga rose from sixth place to fourth. UAlbertaBot was in the middle of major changes (described in the next section) that were not finished in time for CIG 2013, so its AIIDE 2012 version was entered. The update was completed for the AIIDE competition a few weeks later.

AIIDE 2013 (detailed competition report)

For AIIDE 2013, the tournament management software was almost completely rewritten to be more robust across different network setups. Previous versions relied on Windows shared folders to store files. The new version exchanged data over Java sockets instead: all bot files, game replays, results, and read/write folders were packaged and sent over the sockets. As a result, the tournament can now be run on any network configuration that supports TCP (for the Java sockets) and UDP (for StarCraft's network play). A guide to this tournament management software, with a demonstration, can be found here:
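
The real software was written in Java. The Python sketch below only illustrates the same idea of packaging a folder and sending it over a TCP connection. The zip packaging, the 8-byte length prefix used to frame each message, and all function names are assumptions made for this example, not the tournament manager's actual protocol.

```python
import io
import socket
import struct
import zipfile
from pathlib import Path


def pack_dir(folder: Path) -> bytes:
    """Zip a folder (e.g. a bot's files or its write folder) into memory."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in folder.rglob("*"):
            if path.is_file():
                zf.write(str(path), path.relative_to(folder).as_posix())
    return buf.getvalue()


def unpack_dir(data: bytes, dest: Path) -> None:
    """Extract a zipped payload into dest, creating folders as needed."""
    with zipfile.ZipFile(io.BytesIO(data)) as zf:
        zf.extractall(str(dest))


def send_blob(sock: socket.socket, data: bytes) -> None:
    """Framing: 8-byte big-endian payload size, then the payload itself."""
    sock.sendall(struct.pack(">Q", len(data)) + data)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since TCP may deliver data in arbitrary chunks."""
    chunks = []
    while n > 0:
        chunk = sock.recv(min(n, 65536))
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def recv_blob(sock: socket.socket) -> bytes:
    (size,) = struct.unpack(">Q", recv_exact(sock, 8))
    return recv_exact(sock, size)
```

The length prefix is needed because TCP is a byte stream with no message boundaries. Without it, the receiver could not tell where one packed folder ends and the next message begins.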

Only 8 bots took part in 2013, which is still the smallest field of any tournament, although the bots were of quite high quality. A total of 5597 games were played, giving 200 games for each pair of bots: twenty on each of the ten maps, which were unchanged from the previous year. The usual favorites again performed well, but UAlbertaBot took first place and dethroned Skynet, which finished second. Aiur came third, and fourth place went to Ximp, a new bot written by Tomas Vajda, a student in Michal Certicky's AI course at Comenius University. Ximp played Protoss, and its strategy was to expand its economy very early under the protection of Photon Cannons. It played a deep defensive game and built an army of Carriers, very strong late-game flying units. Once it had a certain number of Carriers, they flew across the map, destroying everything in their path. Unfortunately, a bug in Ximp's code made it crash in 100% of its games on the Fortress map, handing its opponents easy wins. Without this bug, it would easily have taken third place. Aiur's learning was also noteworthy: its initial win rate of 50% gradually rose to 58.51% by the end of the competition, lifting it from fourth to third place.

UAlbertaBot was modified for this competition, but the more important change was the updated version of SparCraft. UAlbertaBot had previously used a simpler version of SparCraft, which gave much less accurate results than the new SparCraft module. The new version correctly simulated all damage and armor types, which the previous version did not take into account. This much more accurate simulation greatly improved the bot's early-game behavior. The bot's Dragoon and Dark Templar strategies were dropped, leaving only the Zealot rush, which was still its strongest strategy. The only exception was games against Skynet: the bot's Dark Templar rush in the previous competition had shown that Skynet was rather weak against this strategy. A bug was also found in the 2012 version of UAlbertaBot that made all of the bot's worker units chase any enemy unit; whenever this happened, the bot ran out of resources and lost to Skynet and Aiur. This serious bug and several minor ones were fixed, and as a result the bot's win percentage reached its highest level yet. UAlbertaBot finished almost 10 percentage points ahead of Skynet.

SSCAIT 2013

SSCAIT again included many bots from the 2013 AI course, with more than 50 entrants in total. Almost twice as many games were played in 2013 as in 2012: two full round robins were played between all bots on randomly chosen maps. The results were again split into two divisions, student and mixed, with the same rules as the previous year. In the student division, first place went to Ximp, second to WOPR, written by Soren Klett of Bielefeld University, and UAlbertaBot again took third. The technical problems and early-game bugs that Ximp had shown at AIIDE were fixed, and it performed very well in the round-robin stage. In the mixed division, the top eight bots from the round-robin stage played a single-elimination bracket. UAlbertaBot was knocked out in the quarter-finals by IceBot. The final was played between IceBot and krasi0; krasi0 won and IceBot took second place. The AIIDE version of UAlbertaBot was used in this competition without any changes.

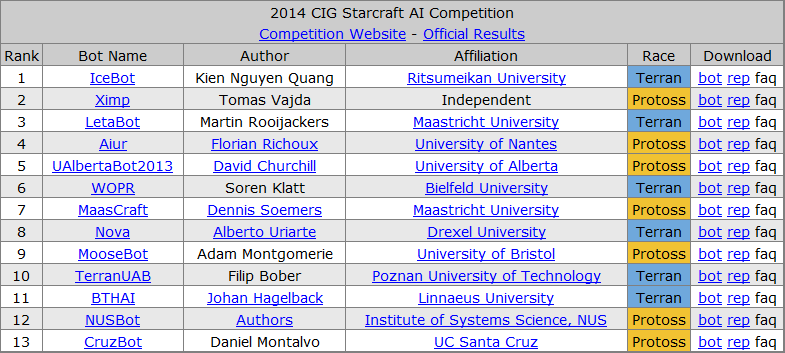

CIG 2014

The 2014 CIG competition was organized by Kyung-Joong Kim, Ho-Chul Cho, and In-Seok Oh of Sejong University. Thirteen bots took part. In 2014, CIG used 20 maps that were not announced to the entrants in advance, the largest map pool used in any Starcraft AI competition up to that point. The tournament ran on an updated version of the AIIDE tournament management software, so file reading and writing worked fully at CIG for the first time. A total of 4680 games were played: each bot played every other bot three times on each of the twenty maps. Some of the strong bots from previous years had been changed substantially, and this affected the results.
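
The game totals follow from a simple formula: with n bots, every unordered pair meets k times on each of m maps, so the total is C(n, 2) · m · k. The small helper below is hypothetical and only checks the arithmetic. It reproduces CIG 2014's 4680 games exactly; for AIIDE 2013 (8 bots, 10 maps, 20 games per pair per map) and AIIDE 2015 (22 bots, 10 maps, 9 games per pair per map) it gives 5600 and 20,790 scheduled games, slightly above the 5597 and 20,788 reported.

```python
from math import comb


def round_robin_games(num_bots, num_maps, games_per_pair_per_map):
    """Games in a round robin where every pair of bots meets equally often on every map."""
    pairs = comb(num_bots, 2)  # unordered pairs: n * (n - 1) / 2
    return pairs * num_maps * games_per_pair_per_map


# CIG 2014: 13 bots, 20 maps, 3 games per pair per map.
print(round_robin_games(13, 20, 3))  # 4680
```

The pair count grows quadratically with the number of bots: going from 8 to 22 bots raises it from 28 to 231 pairs.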

IceBot won the competition, Ximp took second place, LetaBot third, and Aiur fourth. IceBot had competed since 2012 but had never finished higher than sixth. Its strategies were completely reworked and more people worked on it, resulting in a much more stable and reliable system. Thanks to its very strong early-game defense, it could hold off many bots that attacked early. Ximp kept its Carrier strategy, with minor changes and bug fixes. LetaBot was a new Terran bot written by Martin Rooijackers of Maastricht University. Its source code is based on the 2012 version of UAlbertaBot, adapted to play Terran. UAlbertaBot took fifth place because it had not been updated for this year's competition. Since UAlbertaBot had won AIIDE 2013, some bots implemented strategies aimed specifically at beating it, and together with the dedicated defenses against early aggression in IceBot, Ximp, and LetaBot, this pushed it down to fifth place.

AIIDE 2014

The small number of entrants in 2013 prompted wider publicity for the event to attract more interest in the 2014 competition. In addition, if a team that had competed in 2013 did not submit a new bot in 2014, its 2013 version was automatically re-entered, to measure how much the new versions had improved. In total, 18 bots were submitted, a record at the time. The 2013 versions of UAlbertaBot, Aiur, and Skynet were re-entered because their authors had not had time to make changes. Since only a couple of weeks separated CIG and AIIDE, many bots were identical, and this showed in the results: the top four were the same as at CIG. IceBot finished first, Ximp second, LetaBot third, and Aiur fourth. UAlbertaBot finished seventh, running exactly the same version that had been submitted to CIG 2014.

Human vs. machine matches against the 2014 bots can be viewed here:

- IceBot vs. Bakuryu - Game 1, Game 2

- Ximp vs. Bakuryu - Game 1, Game 2

- LetaBot vs. Bakuryu - Game 1, Game 2

- Aiur vs. Bakuryu - Game 1, Game 2

SSCAIT 2014 ( detailed results )

In 2014, SSCAIT switched to the tournament management software, which let it use the same file read/write structure for learning and play more games in less time. Since all three competitions now used this open-source software, which participants could download and test themselves, submitting bots and running tournaments became easier: participants could be confident that their bots would work in all three competitions. The format and rules were the same as in 2013: each of the 42 entrants played all the other bots twice, and 861 games were played. The results were again split into student and mixed divisions. In the student division, LetaBot took first place, WOPR second, and UAlbertaBot third. In the mixed division, the eight best bots played a single-elimination bracket. In the final, LetaBot beat Ximp to win the competition, while UAlbertaBot beat IceBot in the third-place match.

The version of UAlbertaBot was the same as at AIIDE 2013, with slight improvements to unit positioning and building placement. After its rather poor results at CIG and AIIDE, it was surprising that this old version of UAlbertaBot finished third in both divisions.

CIG 2015

In 2015, CIG, again organized by Sejong University, made significant rule changes. The most important: entrants were no longer required to release their bots' source code. This surprised many, because the competition was held as part of a scientific conference. The second change: a single author could enter several bots. This was a controversial decision, because two bots by the same author could meet, and one could deliberately lose to the other. One bot could also pass information about previous matches to another. Fortunately, no such problems occurred. Because of technical difficulties that arose at the last minute, the 2015 competition ran for only half the planned time, so only 2,730 games were played between 14 entrants.

The results were quite surprising: all three top places went to newcomers, and all of them played Zerg. The winner, ZZZKBot, was written by software developer Chris Coxe. It implemented a single strategy: a four-pool Zergling rush, the fastest attack possible in Starcraft. Despite the relatively simple strategy and implementation, few bots were prepared for such a fast attack, and they lost very quickly. Second place went to tscmoo-Z, a Zerg bot written by Vegard Mella, an independent programmer from Norway. Tscmoo mainly used mid- and late-game strategies, implemented almost a dozen different build orders and strategies, and learned over time to pick the right one for each opponent. Third place went to Overkill, another Zerg bot, written by Chinese data-processing engineer Sijia Xu. Overkill had several strategies in its arsenal but relied mostly on Mutalisks, resembling Overmind from the AIIDE 2010 competition.

AIIDE 2015 ( detailed competition report )

Since 2011, the AIIDE competitions at the University of Alberta had been held in a student computer lab of twenty Windows XP machines. Because students used the lab heavily, games could only be run between the end of one school year and the start of the next (usually at the end of August). In 2015 the competition was held on virtual machines, and thanks to the great help of Nicolas Barriga (another of Michael Buro's students working on RTS AI), many more games could be played. There were four Linux servers, each running three Windows XP virtual machines, for 12 virtual machines in total. The AIIDE schedule also changed (the conference moved to November), so the competition could run for a full two weeks, twice as long as the previous year. Another advantage of virtual machines is that the competition can be monitored and managed through remote desktop software. Using KRDC over an SSH tunnel, all 12 machines could be controlled simultaneously, and the tournament could be stopped or restarted right from home.

25 bots registered for AIIDE, three of which did not take part for technical reasons. The 22 entrants represented 12 countries, making this the largest competition so far and the one with the most countries represented. 2015 also had the most even distribution of races. Zerg had been poorly represented the year before, but this year five new Zerg bots competed, many of which had previously entered CIG. The distribution of races at AIIDE is shown below.

This competition also saw the first bot (UAlbertaBot) to play as the Random race. With Random selected, the bot is assigned one of the three Starcraft races at random when the game starts. This makes the bot much harder to program, because it needs strategies for all three races, but it gives an advantage: the opponent does not know UAlbertaBot's race until it scouts it in the game. Changes were also made to the tournament management software, fixing a bug in persistent file storage that sometimes caused files to be overwritten. Thanks to the move to virtual machines, the competition could run for as long as needed, and this time it lasted 14 days. A total of 20,788 games were played: each of the 22 bots played every other bot nine times on each of the ten maps. This was twice the previous record for games played. Here is a comparison of the number of games played at each major Starcraft AI competition:

The top finishers were very close: the difference in win percentage between first and second place, and between third and fourth, was less than 1% in each case. The three new Zerg bots performed extremely well: tscmoo took first place with 88.52% wins, ZZZKBot second with 87.83%, and Overkill third with 80.69%. UAlbertaBot finished fourth with 80.2% while playing Random, a significant achievement, because playing several races is harder to implement. UAlbertaBot also won more than 50% of its games against every other bot in the competition, yet it did not have the highest overall win percentage. The reason was that against some weak bots it won only about two-thirds of its games, because with one of its three races it could not beat them. You can explore the detailed results of the competition, including statistics for each bot. The general strategies of tscmoo, ZZZKBot, and Overkill had not changed since CIG 2015, although small bugs were fixed in each. We also held a human vs. machine match between an experienced Starcraft player and the top bots of the competition. The game can be viewed here.

Almost all UAlbertaBot modules were rewritten in the months leading up to AIIDE 2015. The bot learned to play not only Protoss but the other two races as well. This required a much more robust and general approach to micromanagement and build-order planning, which had previously been tailored to Protoss. The build-order search system was updated so that it could search build orders for any of the three races, and bugs that had previously caused UAlbertaBot to crash during competitions were fixed. The new version of this software was named BOSS (Build Order Search System) and published on GitHub as part of the UAlbertaBot project. Another big change was the introduction of configuration files for the bot's options. These files are written in JSON, and the bot parses them at the start of each game. They contain many options for strategic and tactical decisions, for example the choice of strategy for each race, unit micromanagement options, and debugging options. They also contain UAlbertaBot's opening build orders, which can be edited quickly and conveniently. Because of this configuration file, all options and build orders can be changed without recompiling the bot, which speeds up development and makes it easier for other programmers to modify and use the bot.
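
A minimal sketch of how a JSON options file can drive strategy and opening build-order selection at game start. The key names, strategy names, and build orders below are hypothetical placeholders, not UAlbertaBot's actual configuration schema, and Python's `json` module stands in for whatever parser the C++ bot uses.

```python
import json

# Hypothetical options file; a real bot would read this from disk at game start.
EXAMPLE_CONFIG = """
{
  "Strategy": {"Protoss": "ZealotRush", "Terran": "MarineRush", "Zerg": "ZerglingRush"},
  "Strategies": {
    "ZealotRush":   {"Opening": ["Probe", "Probe", "Pylon", "Gateway", "Gateway", "Zealot"]},
    "MarineRush":   {"Opening": ["SCV", "SCV", "Supply Depot", "Barracks", "Marine"]},
    "ZerglingRush": {"Opening": ["Drone", "Spawning Pool", "Zergling", "Zergling"]}
  },
  "Debug": {"DrawUnitTargets": false}
}
"""


def load_config(text):
    """Parse the options file; editing it requires no recompilation of the bot."""
    return json.loads(text)


def opening_build_order(config, race):
    """Look up the strategy configured for our race and return its opening build order."""
    strategy = config["Strategy"][race]
    return list(config["Strategies"][strategy]["Opening"])


def debug_enabled(config, flag):
    """Read an optional debug switch, defaulting to off when it is absent."""
    return bool(config.get("Debug", {}).get(flag, False))
```

Changing the Zerg opening then means editing one JSON list and restarting the game, rather than rebuilding the bot.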

A few days before the competition, test games were played against many of the bots from AIIDE 2014 and CIG 2015. These matches were analyzed by hand, and build orders and strategies were created to counter some of the bots, such as Skynet, LetaBot, Ximp, and Aiur. For example, against Ximp the bot used a strong anti-air strategy, since Ximp was known to always build Carriers. When playing Terran against Aiur, it built large numbers of Vultures, which were effective against Aiur's Zealots. This was risky, because any opponent could have changed its strategy before AIIDE, but many did not, and the approach paid off. Against an unknown opponent, the bot defaulted to one of three rush strategies depending on its assigned race: a Zergling rush as Zerg, a Zealot rush as Protoss, and a Marine rush as Terran. As a result, the competition went very well for UAlbertaBot, with a high win percentage against every bot.
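
The selection logic described above amounts to a lookup table with a per-race fallback. In this sketch the opponent names come from the text, but the strategy names and the table layout are hypothetical.

```python
# Default rush for each race the bot might be assigned (Random picks one at game start).
DEFAULT_BY_RACE = {"Zerg": "ZerglingRush", "Protoss": "ZealotRush", "Terran": "MarineRush"}

# Hand-written counters prepared from test games (hypothetical strategy names).
ENEMY_SPECIFIC = {
    "Ximp": {"Zerg": "ZergAntiAir", "Protoss": "ProtossAntiAir", "Terran": "TerranAntiAir"},
    "Aiur": {"Terran": "TerranVultures"},
}


def pick_strategy(opponent, our_race):
    """Use a counter if one exists for this opponent and race, else the default rush."""
    return ENEMY_SPECIFIC.get(opponent, {}).get(our_race, DEFAULT_BY_RACE[our_race])
```

For example, `pick_strategy("Aiur", "Zerg")` falls back to `"ZerglingRush"`, because only a Terran counter was prepared for Aiur.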

SSCAIT 2015 ( detailed results )

From the translator: I could not find the detailed results of AIIDE 2016, but this report gives a general idea.

The next AIIDE competition will be held in September 2017; registration closes on August 1.

Source: https://habr.com/ru/post/370431/
