Artificial intelligence and the ghost in the machine

The idea of AI is far older than modern computers; it goes back at least to Greek myth. Hephaestus, the Greek god of artisans and blacksmiths, built machines that worked for him. Another mythical figure, Pygmalion, carved an ivory statue of a beautiful woman and fell in love with it. As a gift to Pygmalion, Aphrodite brought the statue to life, and he married the now-living woman.

Throughout history, myths and legends about artificial creatures endowed with intelligence have appeared again and again. Their sources ranged from the purely supernatural (Greek myths) to the more "scientific", such as alchemy. In fiction, and particularly in science fiction, AI began to appear more and more often in the 19th century.


But only when mathematics, philosophy, and the scientific method had developed sufficiently, in the 19th and 20th centuries, did AI begin to be taken seriously as a real possibility. It was then that mathematicians such as George Boole, Bertrand Russell, and Alfred North Whitehead proposed theories formalizing logical reasoning. With the development of digital computers in the second half of the 20th century, these ideas found practical application, and AI became a subject of serious research.

"Pygmalion", Jean-Baptiste Regnault, 1786

Over the past 50 years, interest in AI development has been fueled by public attention and by both the successes and the failures of the field. The predictions of researchers and futurists sometimes failed to match reality, usually because of the limitations of the computers of the day. But a deeper problem, namely what intelligence actually is, has become a source of active controversy.

Despite these failures, AI research and development continued. Today it is carried out both by technology corporations, which see the economic potential of such advances, and by academic groups around the world. What stage has this research reached, and what can we expect in the near future? Before answering these questions, we need to pin down what AI is.

Strong, weak and general AI

You may be surprised to learn that, by a common view, AI already exists. As an AI researcher from Silicon Valley who writes under the pseudonym Albert puts it: "AI monitors your credit card transactions for unusual spending and reads the numbers you write on your bank checks. If you search the photos on your phone for 'sunset', it is AI's vision that finds them." In the industry, this type of AI is called "weak AI".

Weak AI

Weak AI works on narrow problems; Apple's Siri is an example. Siri is often called an AI, but it can operate only within a predefined range, combining a small set of tasks. Siri processes language, interprets requests, and performs other simple tasks. But Siri has no consciousness and is not sentient, so many people believe it cannot truly be called AI.

Albert believes that AI is a kind of moving target: "There is an inside joke in the AI research community: as soon as we solve a problem, people declare that it isn't real AI." A few decades ago, the capabilities of an assistant like Siri would have been considered AI. Albert continues: "People considered chess the pinnacle of intelligence, until we beat the world champion. Then they said we would never win at Go, because its search space is too large and requires 'intuition'. Until last year, when we beat the world champion."

Strong AI

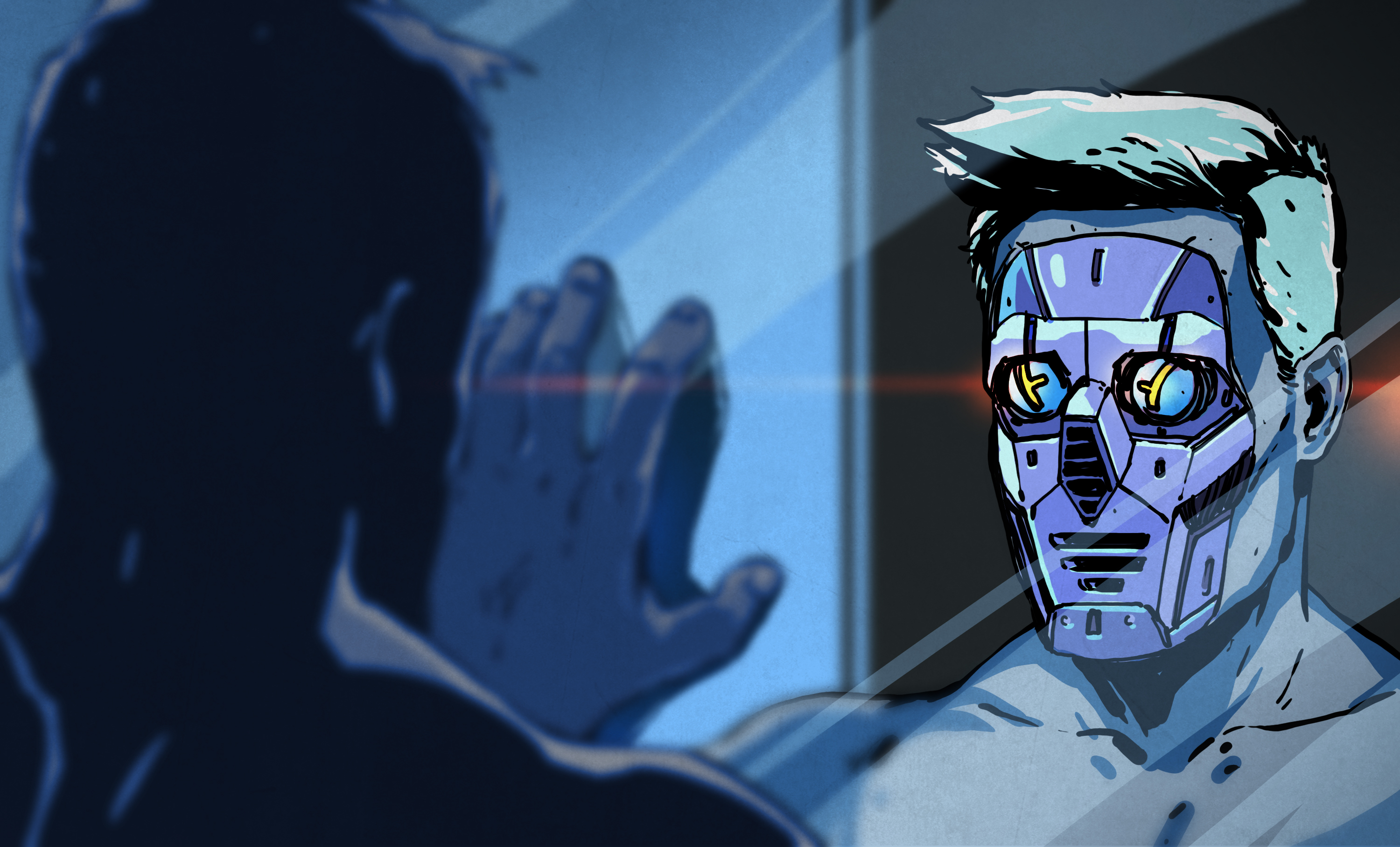

And yet Albert classifies such systems as weak AI. Strong AI is what non-specialists imagine when AI comes up. A strong AI would be capable of real thinking and reasoning, and would possess consciousness and intelligence. This is the kind of AI embodied by science-fiction characters such as HAL 9000, KITT, and Cortana (from the Halo games, not Microsoft's Cortana).

General AI

What defines a strong AI, and how to test for and identify such an entity, remains controversial and a source of heated debate. In any case, we are nowhere near creating a strong AI. But there is another type of system, artificial general intelligence (AGI), which is something like a bridge between weak and strong AI. An AGI would not have the consciousness attributed to strong AI, but it would be far more capable than weak AI. It would learn from the information it receives and be able to answer any question about that information (as well as perform related tasks).

And although most current research focuses on AGI, the ultimate goal for many is strong AI. Because strong AI has been a central theme of science fiction for decades, even centuries, most of us take it for granted that a sentient AI will be created someday. However, many people do not believe this is possible even in principle, and most of the debate revolves around philosophical concepts of mind, consciousness, and intelligence.

Consciousness, AI, and Philosophy

The debate begins with a very simple question: what is consciousness? Although the question is simple, anyone who has taken an "Introduction to Philosophy" course will tell you that the answer is anything but. We have collectively puzzled over it for thousands of years, and few have managed to give a satisfactory answer.

What is consciousness?

Some philosophers have even suggested that consciousness, as it is usually conceived, does not exist. For example, in his book Consciousness Explained, Daniel Dennett argues that consciousness is a complex illusion created by the mind. This is a logical extension of the philosophical concept of determinism, which holds that every event is the only possible consequence of its causes. Taken to the extreme, determinism says that every thought, and therefore consciousness itself, is a physical reaction to prior events, down to atomic interactions.

Most people find such an explanation absurd: our experience of consciousness is so bound up with our existence that we cannot dismiss it. However, even if we accept that consciousness exists and that someone has it, how do we prove that another entity has it too? This leads into the intellectual territory of solipsism and philosophical zombies.

Solipsism holds that a person can be certain only of the existence of his or her own consciousness. Recall Descartes' famous "Cogito ergo sum": I think, therefore I am. Although many find this a valid proof that one is conscious, it says nothing about whether other people are. A popular thought experiment illustrating this puzzle is the philosophical zombie.

Philosophical zombie

A philosophical zombie is a being without consciousness that can nevertheless imitate having it. To quote Wikipedia: "For example, a philosophical zombie could be poked with a sharp object and not feel any pain, yet behave exactly as if it does feel pain (it may say 'ouch', recoil from the stimulus, and say that it is in pain)." Such a hypothetical being might even believe that it feels pain, even though it does not.

No, not that kind of zombie

Extending the thought experiment, suppose philosophical zombies appeared at some early point in human history and had an evolutionary advantage. Over time, this advantage let them multiply until they had completely replaced all conscious people. Could you prove that the people around you are conscious, rather than merely imitating consciousness well?

This question lies at the heart of the debate about strong AI. If we cannot even prove that another person is conscious, how could we prove that an AI is? John Searle not only illustrated this with his famous "Chinese room" thought experiment, but also argued that a conscious AI cannot be created on a digital computer.

Chinese room

In Searle's original formulation, the Chinese room experiment goes like this: imagine an AI that takes Chinese characters as input, processes them, and produces Chinese characters as output. It even passes the Turing test. Does this mean that the AI "understands" the characters it processes?

Searle says no: the AI merely behaves as if it understands Chinese. He argues as follows: place a person who knows only English in an isolated room, and, given the right instructions, that person can do the same thing. The person receives a query in Chinese, follows instructions written in English that explain what to do with the characters, and passes Chinese characters back out. The person does not understand Chinese but simply follows the instructions. In the same way, Searle says, an AI would not really understand what it processes; it would only act as if it did.
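To make the mechanics of the thought experiment concrete, here is a minimal sketch in Python. It assumes, purely for illustration, that the rule book can be reduced to a lookup table; the table, its entries, and the name `chinese_room` are hypothetical, and a real rule book capable of passing the Turing test would have to be vastly more elaborate. The point it shows is Searle's: producing a plausible reply requires only matching symbols, not understanding them.

```python
# A toy "Chinese room": the operator follows a rule book that maps
# incoming strings of characters to outgoing strings of characters.
# Nothing in the procedure represents what the characters mean.

# Hypothetical rule book; the entries are illustrative only.
RULE_BOOK = {
    "你好": "你好！",                     # "Hello" -> "Hello!"
    "你会说中文吗？": "会，我说得很好。",   # "Can you speak Chinese?" -> "Yes, very well."
}

# Reply used when no rule matches: "Please say that again."
FALLBACK = "请再说一遍。"


def chinese_room(message: str) -> str:
    """Return the reply the rule book prescribes for `message`.

    The operator only compares character sequences for an exact match;
    no step of the procedure uses the meaning of the characters.
    """
    return RULE_BOOK.get(message, FALLBACK)


if __name__ == "__main__":
    for question in ["你好", "你会说中文吗？", "今天天气怎么样？"]:
        print(question, "->", chinese_room(question))
```

The lookup table stands in for the English instructions in Searle's room: whoever (or whatever) executes `chinese_room` can answer "Yes, very well" in Chinese without knowing a word of the language.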

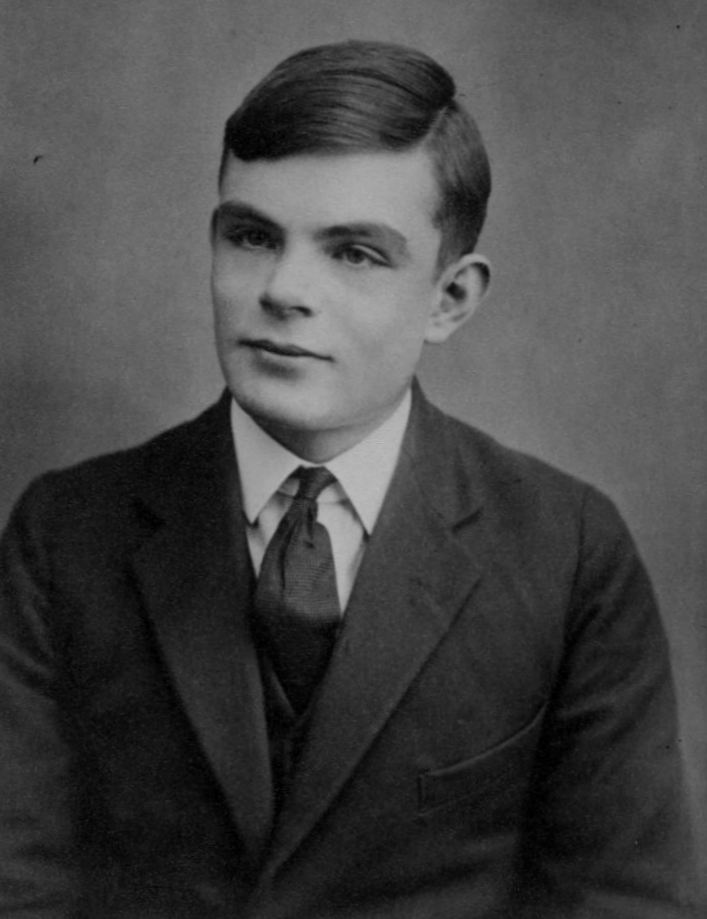

The resemblance between the Chinese room and the philosophical zombie is no accident: both concern the difference between consciousness and its outward signs. The Turing test is often criticized as simplistic, but Alan Turing carefully considered a problem similar to the Chinese room before proposing his test. He did so 30 years before Searle's publication, anticipating the idea as an extension of the "problem of other minds" (which underlies solipsism).

Polite convention

Turing addressed this problem by extending to machines the "polite convention" we adopt with other people. Although we cannot know whether other people actually have the same consciousness we do, for practical reasons we assume they do; we would get nowhere by acting otherwise. Turing believed that rejecting AI on the basis of problems like the Chinese room would mean holding it to a higher standard than people. So, for practical purposes, the Turing test treats a convincing imitation of consciousness as equivalent to real consciousness.

Alan Turing

Many modern AI researchers consider this refusal to define "true" consciousness the best approach, leaving that question to philosophers. Trevor Sands (an AI researcher at Lockheed Martin, who stresses that he is expressing his personal opinion, not his employer's) says: "Consciousness, in my opinion, is not a prerequisite for AGI but simply emerges as a result of intelligence."

Albert agrees with Turing: "If something behaves convincingly enough to demonstrate consciousness, we would be compelled to treat it as conscious, even though it might not actually be." And while scientists argue with philosophers, researchers carry on with their work. Questions of consciousness are set aside in favor of developing AGI.

History of AI development

Modern AI research began in 1956 at a conference at Dartmouth College. Many of its participants went on to become leading AI experts and were responsible for the field's early development. Over the following decade, they developed software that generated widespread excitement about the new field. Computers could play (and win) chess, prove mathematical theorems (in some cases finding more efficient solutions than mathematicians had), and demonstrate rudimentary language-processing abilities.

Unsurprisingly, the potential military uses of AI caught the US government's attention, and by the 1960s the Department of Defense was pouring money into research. Thanks to the prevailing optimism, this research came with few restrictions: a major breakthrough was believed to be imminent, and researchers worked as they saw fit. Marvin Minsky, a prolific AI researcher of the time, stated in 1967 that "within a generation ... the problem of creating 'artificial intelligence' will substantially be solved."

Unfortunately, the promised AI never materialized; by the 1970s the optimism had faded and government funding was cut. The lack of money seriously slowed research, and little was achieved in the years that followed. Only in the 1980s did progress with "expert systems" in the private sector create incentives to fund the field again.

In the 1980s, AI development was again well funded, mainly by the US, British, and Japanese governments. Optimism reminiscent of the 1960s returned, and once again grand promises were made about the imminent arrival of AI. Japan's Fifth Generation Computer Systems project was supposed to provide a platform for AI development. But the failure of this project, along with other setbacks, caused research funding to dry up.

By the end of the century, practical approaches to developing and applying AI looked promising. With access to vast amounts of information via the Internet and to powerful computers, weak AI demonstrated its value to business. Such systems were used successfully in financial markets, data processing, logistics, and medical diagnostics.

Over the past decade, progress in neural networks and deep learning has revived the field of AI. Most research now concerns practical applications of weak AI and, potentially, AGI. Weak AI is already used everywhere, breakthroughs are being made toward AGI, and optimism about AI is again at its peak.

Current approach to the development of AI

Today's researchers are actively working on neural networks, which loosely mimic how the biological brain works. Although true virtual emulation of the biological brain, simulating individual neurons, is being studied, in practice a more pragmatic approach is used: deep learning with neural networks. The idea is that the way the brain processes information matters, but that it does not have to be implemented biologically.

Albert, a deep learning specialist, is trying to train neural networks to answer questions. "The dream in this field is an oracle that can digest all human knowledge and answer any question about it." Although that is not yet possible, he says: "We have reached the point where we can have an AI read a document and a question and extract simple information from the document. The most exciting thing at the cutting edge is that we are beginning to see these systems start to reason."

Among other things, Trevor Sands works on neural networks at Lockheed Martin. He builds "programs that use AI techniques so that people and autonomous systems can work as a team." Like Albert, Sands uses neural networks and deep learning to process large amounts of data intelligently. He hopes to find the right approach and build a system that can direct its own learning.

Albert describes the difference between earlier weak AI and modern neural networks this way: "Before, some people worked on vision, others on speech recognition, and others on natural language processing. But now they are all starting to use neural networks, essentially the same technique for different tasks. I find this unification beautiful, especially because some people believe that the brain and intelligence are the product of a single algorithm."

Ideally, a neural network acting as an AGI would work with any data. Like the human mind, it would be a true intellect, able to process whatever data it receives. Unlike today's weak AI, it would not have to be built for a specific purpose. A system that answers questions about history could just as well advise on securities investments or provide intelligence information.

Future AI

However, today's neural networks are not advanced enough for this. They must be "trained" on the data they work with and told how to process it. Albert says success comes through trial and error: "Once we have the data, we need to design a neural network architecture that we think will handle the task well. We usually start by implementing a model from the research literature that is known to work well. Then I try to figure out how to improve it. Then I run experiments to see whether my changes improved the model."

The ultimate goal, of course, is to find an ideal model that works equally well everywhere: one that needs no hand-holding or special training and can learn from the data by itself. When that happens and the system can respond appropriately, we will have artificial general intelligence on our hands.

Albert and Trevor have clear views on the future of AI. I discussed it with them at length, and in the next article we will talk about the future of AI and some other interesting questions in this field. Stay tuned.

Source: https://habr.com/ru/post/370233/
