Catastrophic consequences of software errors

Practice never tires of proving that the most important vulnerability in any software is the human factor. It does not matter whether a bug appeared because a specialist lacked qualifications, survived long hours of debugging, or was passed off as a cleverly disguised feature. Some developers have even come to believe that bugs, in principle, exist always and everywhere, and that if an error is abstract and hard to reproduce in real conditions, the problem is not critical.

Ideally, all bugs would be fixed at once. In real life, though, there are always plenty of tasks that push back a complete and final bugfix: new functionality, urgent hotfixes, bug-fixing priorities. This means that the most obvious problems are found and fixed first. The rest wait quietly in the wings, turning into time bombs. Sometimes errors cause not only trouble in the life of an ordinary developer but also real disasters. Today we present a selection of the most nightmarish bugs in the history of software development, with explanations.

Irradiation and Radiation


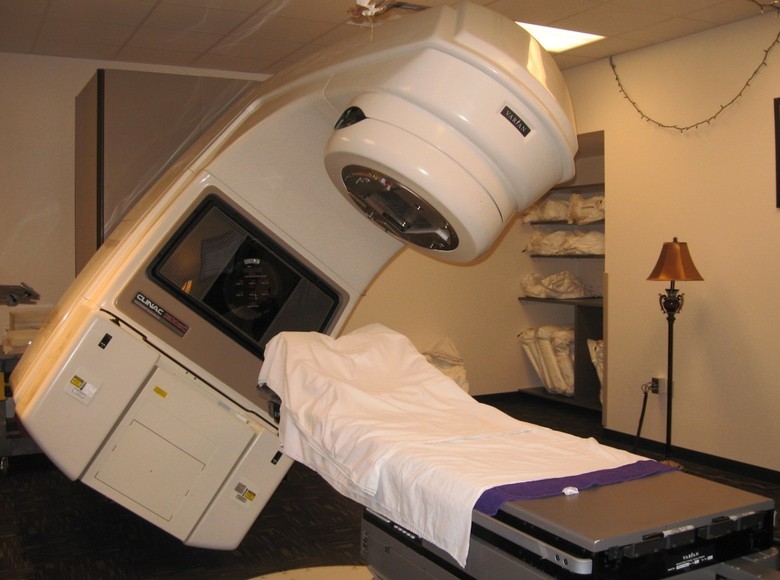

A famous case is the deaths of several people who received lethal doses of radiation during radiation therapy sessions on the Therac-25 medical accelerator. Accelerators of this type use electrons to create high-energy beams that precisely destroy tumors. But some patients received doses not of several hundred rad, as prescribed, but of about 20,000 rad. A dose of 1,000 rad is considered lethal for a human, and death can occur soon after exposure.

A single programmer wrote all the software used in the Therac devices: some 20,000 instructions in assembly language. All Therac models also shared the same library package, which contained errors.

The Therac-25, the third in a series of successful radiation therapy machines, could produce X-rays of up to 25 MeV. For several years in the mid-1980s, Therac-25 machines worked flawlessly, but over time incidents with grave consequences began to accumulate, ranging from limb amputations to the deaths of patients.

At first, nobody noticed the bugs, and all the problems were attributed to hardware failures. The software, which had worked flawlessly for thousands of hours, was perceived as perfect and safe. No measures were taken to contain the consequences of possible errors. In addition, safety-related messages to the operator looked like routine ones. The manufacturer investigated every incident but could not reproduce the failure. It took several years of research by outside experts, brought in during the lawsuits that followed a series of deaths, to conclude that the software contained a large number of errors.

For example, a particular sequence of keyboard commands invoked the wrong procedure, which could irradiate an arbitrary part of the patient's body. The lack of a hardware interlock against excessive or prolonged doses made the problem worse.

In the Therac-25 software, the same variable was used both to parse the numbers being entered and to determine the position of the turntable that aimed the emitter. As a result, when commands were entered quickly, the machine could interpret a radiation dose value as the coordinates of where the beam should be directed, for example straight at the brain.

Sometimes, when calculating the radiation, Therac-25 divided by zero and accordingly raised the exposure to the maximum possible. A Boolean flag was set to "true" with the command "x = x + 1", which caused the program, with a probability of 1/256, to skip the information that the emitter was in the wrong position when the "Set" button was pressed.
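The 1/256 figure follows from byte arithmetic. Below is a minimal Python sketch, assuming (as the description implies) that the flag is stored in a single unsigned byte and that zero is read as "false"; the names `increment_flag`, `position_check_skipped` and `count_skipped_checks` are hypothetical and do not come from the Therac-25 code.

```python
BYTE_MASK = 0xFF  # assumption: the flag lives in one unsigned byte


def increment_flag(flag: int) -> int:
    """Buggy way to 'set' a flag: x = x + 1, wrapping 255 -> 0 like a byte."""
    return (flag + 1) & BYTE_MASK


def position_check_skipped(flag: int) -> bool:
    """Zero is read as 'false', so the emitter position check is skipped."""
    return flag == 0


def count_skipped_checks(presses: int) -> int:
    """Simulate repeated 'Set' presses and count how often the check is lost."""
    flag = 0
    skipped = 0
    for _ in range(presses):
        flag = increment_flag(flag)
        if position_check_skipped(flag):
            skipped += 1
    return skipped


print(count_skipped_checks(256 * 4))  # 4: one skipped check per 256 presses
```

Assigning a constant (`flag = 1`) instead of incrementing removes the wraparound entirely.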

The manufacturer's internal documentation showed that the same error message was issued both for an inappropriately low radiation dose and for an excessive one, so the operator could not tell one from the other by the message.
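Giving each failure direction its own message is cheap. The sketch below is a hypothetical illustration, not the Therac-25 logic: `DoseError`, `check_dose` and the tolerance parameter are invented names, and doses are assumed to be in rad.

```python
from enum import Enum
from typing import Optional


class DoseError(Enum):
    """Hypothetical error codes: one per direction, never shared."""
    UNDERDOSE = "delivered dose is BELOW the prescription"
    OVERDOSE = "delivered dose is ABOVE the prescription"


def check_dose(delivered: float, prescribed: float,
               tolerance: float) -> Optional[DoseError]:
    """Return None if within tolerance, else a distinct error per direction."""
    if delivered < prescribed - tolerance:
        return DoseError.UNDERDOSE
    if delivered > prescribed + tolerance:
        return DoseError.OVERDOSE
    return None


# Prescribed 200 rad (a hypothetical value in the "several hundred" range).
print(check_dose(20000, 200, 5).value)  # delivered dose is ABOVE the prescription
```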

If you are a developer or, even better, a tester, you should study this case thoroughly. There is a good article on Wikipedia that you can start with; then read the long article "Safe Software Lessons: Lessons from Famous Disasters", published nineteen years ago. This story contains most of the classic testing problems.

Sadly, the problems of Therac-25 were not unique. In 2000, a series of accidents was caused by other software that likewise calculated the required radiation dose for patients undergoing radiation therapy.

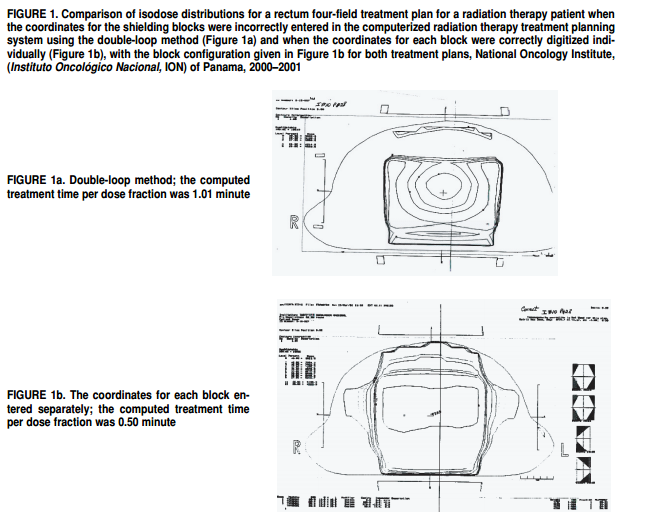

Multidata software allowed medical staff to draw on the screen the placement of metal blocks designed to shield healthy tissue from radiation. But the software allowed only four shielding blocks, while the doctors wanted to use five for extra safety.

The doctors found a workaround. It turned out that the program did not guard against invalid input: all five blocks could be drawn as one large block with a hole in the middle. The staff of the oncology center in Panama did not realize that the Multidata software set different configuration parameters depending on how the hole was drawn, so the calculated radiation dose depended on the direction in which the hole was traced.
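We do not know how Multidata computed doses internally. The sketch below is only a hypothetical illustration of how drawing direction can change a result: the shoelace formula gives a signed area whose sign depends on whether the vertices go clockwise or counterclockwise, and code that silently relies on that sign breaks when a user traces a shape the "other" way. The functions `shielded_area_naive` and `shielded_area_robust` are invented for this example.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def signed_area(polygon: Sequence[Point]) -> float:
    """Shoelace formula: positive for counterclockwise vertices, negative for clockwise."""
    total = 0.0
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return total / 2.0


def shielded_area_naive(outer: Sequence[Point], hole: Sequence[Point]) -> float:
    """Hypothetical bug: assumes the hole is drawn opposite to the outline."""
    return signed_area(outer) + signed_area(hole)


def shielded_area_robust(outer: Sequence[Point], hole: Sequence[Point]) -> float:
    """Independent of drawing direction: subtract absolute areas."""
    return abs(signed_area(outer)) - abs(signed_area(hole))


outer = [(0, 0), (10, 0), (10, 10), (0, 10)]  # counterclockwise, area 100
hole_cw = [(4, 4), (4, 6), (6, 6), (6, 4)]    # clockwise, area 4
hole_ccw = list(reversed(hole_cw))            # same hole, other direction

print(shielded_area_naive(outer, hole_cw))    # 96.0
print(shielded_area_naive(outer, hole_ccw))   # 104.0: the hole is added, not removed
print(shielded_area_robust(outer, hole_ccw))  # 96.0
```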

Eight patients died because of incorrectly entered data, while another 20 received an overdose resulting in serious health problems.

Blackout

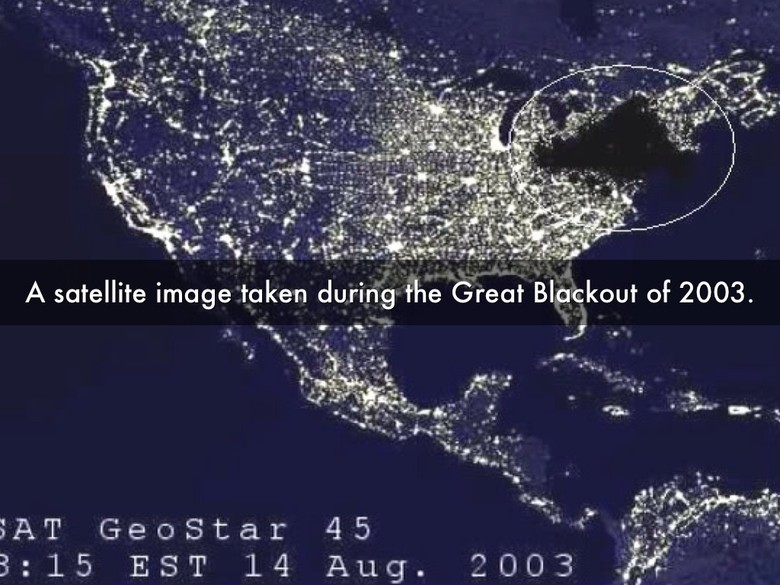

A small error in equipment-monitoring software from General Electric Energy left 55 million people without electricity. On the East Coast of the USA, homes, schools, hospitals, and airports lost power.

On August 14, 2003, at 12:15 p.m., an operator of the power system in Indiana noticed a minor problem using the equipment monitoring tool. The problem triggered an annoying error alarm, which the operator turned off. The operator resolved the issue within a few minutes but forgot to restart the monitoring, so the alarm remained switched off.

Disabling the alarm was not the main cause of the blackout. But when, a few hours later, sagging power lines in Ohio tripped after touching trees, nobody found out about it. The problem snowballed: overloaded transmission lines and power plants began shutting down in Ontario, New York, New Jersey, Michigan, and beyond.

None of the operators noticed the cascade of failures that was slowly killing the power grid, because the single alarm had been turned off and no redundant systems had been provided for this case.
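One common defence against a forgotten "off" switch is to make suppression expire on its own. The sketch below is hypothetical (the `Alarm` class and its parameters are invented, not GE's or any grid operator's software); an injectable clock makes the behaviour testable.

```python
import time
from typing import Callable, Optional


class Alarm:
    """Hypothetical alarm whose manual suppression expires automatically."""

    def __init__(self, max_suppression_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_suppression_s = max_suppression_s
        self._clock = clock
        self._suppressed_until: Optional[float] = None

    def suppress(self) -> None:
        """Silence the alarm, but only for max_suppression_s seconds."""
        self._suppressed_until = self._clock() + self.max_suppression_s

    def is_armed(self) -> bool:
        if (self._suppressed_until is not None
                and self._clock() >= self._suppressed_until):
            self._suppressed_until = None  # suppression expired: re-arm
        return self._suppressed_until is None

    def report(self, event: str) -> bool:
        """Return True if the event reaches the operator."""
        if self.is_armed():
            print(f"ALARM: {event}")
            return True
        return False


now = [0.0]  # fake clock for the example, in seconds
alarm = Alarm(max_suppression_s=600, clock=lambda: now[0])
alarm.suppress()
alarm.report("monitoring error")  # still suppressed: returns False
now[0] = 3 * 3600                 # a few hours later
alarm.report("line tripped")      # re-armed: prints and returns True
```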

Mars Climate Orbiter

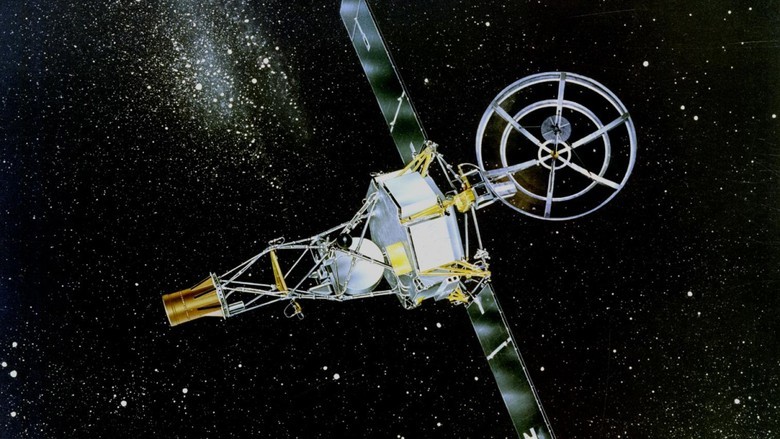

In 1999, NASA lost the $125 million Mars Climate Orbiter because a subcontractor working on engineering tasks did not convert imperial units (pound-force seconds) to metric units (newton-seconds). Because of the error, after a 286-day journey the spacecraft entered the Martian atmosphere at high speed, and its communication systems failed under the resulting loads. The probe passed about one hundred kilometers below the planned orbit and 25 km below the altitude at which the situation could still have been saved. As a result, the spacecraft was lost. A similar fate befell the Mars Polar Lander.
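A common safeguard is to keep a physical quantity in exactly one internal unit and force every input to state its unit at the boundary. The sketch below assumes thruster impulse as the quantity; the `Impulse` class is hypothetical, while the conversion factor is exact by definition (1 lbf = 4.4482216152605 N).

```python
from dataclasses import dataclass

# Exact: 1 lbf = 0.45359237 kg * 9.80665 m/s^2, so 1 lbf*s = 4.4482216152605 N*s.
NEWTON_SECONDS_PER_POUND_FORCE_SECOND = 4.4482216152605


@dataclass(frozen=True)
class Impulse:
    """Hypothetical type: impulse is stored internally in newton-seconds only."""
    newton_seconds: float

    @classmethod
    def from_newton_seconds(cls, value: float) -> "Impulse":
        return cls(value)

    @classmethod
    def from_pound_force_seconds(cls, value: float) -> "Impulse":
        return cls(value * NEWTON_SECONDS_PER_POUND_FORCE_SECOND)


reported = 10.0  # hypothetical thruster impulse, reported in lbf*s
wrong = Impulse.from_newton_seconds(reported)       # unit silently ignored
right = Impulse.from_pound_force_seconds(reported)  # explicit conversion
print(right.newton_seconds / wrong.newton_seconds)  # about 4.45
```

Because there is no constructor that takes a bare number without naming its unit, mixing up the two systems has to happen explicitly in code review rather than silently in arithmetic.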

Mariner 1

In 1962, the Mariner 1 spacecraft was destroyed by a command from the ground shortly after launch because it had veered off course. The accident was caused by the rocket's software, in which the developer had missed a single character. As a result, a vehicle worth $18 million (in the money of the time) received the wrong control signals.

While working on the rocket's control system, a programmer translated handwritten mathematical formulas into computer code. He mistook the overbar (a horizontal bar above a symbol, here denoting a smoothed value) for an ordinary hyphen or minus sign. Without the smoothing, the function began treating normal variations in the rocket's velocity as critical and unacceptable.

Even so, this mistake alone might not have caused a critical failure, but unfortunately the rocket's antenna lost contact with the ground-based guidance system, and the on-board computer took over control.
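The difference between the barred (smoothed) and unbarred (raw) value can be shown in a few lines. This is a hypothetical illustration, not the Mariner 1 guidance equations: it assumes a trailing moving average as the smoothing method, and the velocity readings, expected value and tolerance are made up.

```python
from collections import deque
from statistics import fmean
from typing import Iterable, List


def smoothed(samples: Iterable[float], window: int = 3) -> List[float]:
    """Trailing moving average, standing in for the barred (smoothed) value."""
    recent: deque = deque(maxlen=window)
    out = []
    for sample in samples:
        recent.append(sample)
        out.append(fmean(recent))
    return out


def corrections_needed(values: Iterable[float], expected: float,
                       tolerance: float) -> int:
    """Count samples that would trigger a steering correction."""
    return sum(1 for v in values if abs(v - expected) > tolerance)


# Hypothetical velocity readings with ordinary jitter around the expected 100.
velocity = [100, 103, 97, 104, 96, 100]

print(corrections_needed(velocity, 100, 2))            # raw data: 4 corrections
print(corrections_needed(smoothed(velocity), 100, 2))  # smoothed data: 0 corrections
```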

Ballistic missile launches

On September 26, 1983, a satellite of the Soviet Oko ("Eye") missile attack early-warning system mistakenly reported the launch of five ballistic missiles from the United States. The satellite was in a highly elliptical orbit and observed the missile basing areas at such an angle that they lay on the edge of the Earth's visible disk. This made it possible to detect a launch by the infrared radiation of a running rocket engine against the dark background of outer space. The chosen position of the satellite also reduced the likelihood of glare from sunlight reflected off clouds or snow.

After a year of flawless operation, it turned out that at a certain relative position of the satellite and the Sun, sunlight reflected off high-altitude clouds produced exactly the kind of infrared signature that the computers interpreted as rocket plumes. Lieutenant Colonel Stanislav Petrov, who was on duty, questioned the system's readings. What raised his suspicion was that only five targets were reported: in a real military conflict, the United States would launch hundreds of missiles at once. Lieutenant Colonel Petrov decided that this was a false positive and thus probably prevented a Third World War.

A similar error, which nearly led to a global nuclear conflict, occurred on the other side of the ocean. On November 9, 1979, a failure of the North American Aerospace Defense Command's computer produced a report of a missile attack on the United States: 2,200 launches. Early-warning satellites and radars, however, showed no sign of a Soviet attack, and only a cross-check of the data completed within 10 minutes prevented an order that could have set mutually assured destruction in motion.

The cause was, once again, the most dangerous vulnerability: the human factor. The computer operator on duty had loaded a tape with a training program that simulated a massive missile attack.

In its first few years of operation, the national command center of the North American Aerospace Defense Command (United States and Canada) recorded 3,703 false alarms, most of them caused by atmospheric phenomena. But there were computer errors too. For example, on June 3, 1980, one of the "combat" computers displayed constantly changing numbers of missiles launched by the Soviet Union. The cause was a hardware failure in a chip.

Software updates and division by zero

In 1997, the American guided-missile cruiser USS Yorktown (CG-48), which carried 27 computers (200 MHz Pentium Pro), was completely disabled by a division by zero.

The computers ran Windows NT, and they behaved exactly as you might expect once you know the name of the OS. At the time, the US Navy was trying to use commercial software as widely as possible to reduce the cost of military equipment. The computers were also meant to automate control of the ship without human intervention.

New engine-control software was installed on Yorktown's computers. One of the operators, while calibrating the fuel system valves, entered a zero into one of the cells of a calculation table. On September 21, 1997, the program divided by that zero, a chain reaction began, and the error quickly spread to the other computers on the local network. As a result, Yorktown's entire computer system failed. It took almost three hours to bring the emergency control system online.
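The usual fix is to reject an unusable value when it is entered rather than when the arithmetic finally runs into it. The sketch below is hypothetical: the calibration table, `set_calibration`, `fuel_flow` and `CalibrationError` are invented for illustration and do not describe the Yorktown software.

```python
from typing import Dict


class CalibrationError(ValueError):
    """Raised when an operator enters a value the engine math cannot use."""


def set_calibration(table: Dict[str, float], valve: str, value: float) -> None:
    """Validate input at entry time instead of failing later inside a division."""
    if value == 0:
        raise CalibrationError(f"calibration for {valve!r} must be non-zero")
    table[valve] = value


def fuel_flow(raw_reading: float, table: Dict[str, float], valve: str) -> float:
    # Safe only because set_calibration() never stores a zero divisor.
    return raw_reading / table[valve]


table: Dict[str, float] = {}
set_calibration(table, "valve-1", 2.5)
try:
    set_calibration(table, "valve-2", 0)
except CalibrationError as err:
    print("rejected:", err)  # the bad value never reaches the table
print(fuel_flow(10.0, table, "valve-1"))  # 4.0
```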

A similar zero-related problem arose during tests of a fighter in Israel. The aircraft, flying flawlessly on autopilot, passed over the plains, then the mountains, then the Jordan Valley, and approached the Dead Sea. At that point the software crashed, the autopilot disengaged, and the pilots landed the fighter manually.

After a long investigation, it turned out that the autopilot software, when calculating control parameters, divided by the fighter's current altitude above sea level. Near the Dead Sea, which lies below sea level, this altitude reached zero, triggering the error.

There are many stories of software updates, made with the best intentions, that led to serious problems. In 2008, a 1,759 MW nuclear power plant in the US state of Georgia was shut down on an emergency basis for 48 hours.

An engineer from the company responsible for the plant's technical maintenance installed an update on the main computer of the plant's network. This computer was used to monitor chemical data and run diagnostics on one of the plant's main systems. After the update was installed, the computer rebooted, erasing data in the control systems. The plant's safety system interpreted the loss of part of the data as a release of radioactive substances into the reactor's cooling systems. As a result, the automatic systems raised an alarm and stopped all processes at the plant.

F-22 Incident

Twelve F-22 Raptors (the US fifth-generation fighter), worth $140 million apiece, set out on their first international flight, to Okinawa. Everything went well until the squadron crossed the International Date Line, west of which the date is one day ahead of the east. After crossing the line, all 12 fighters simultaneously displayed an error equivalent to the blue screen of death.

The aircraft lost access to fuel quantity, speed, and altitude data, and communications were partially disrupted. For several hours, America's most advanced fighter jets flew over the ocean completely helpless. In the end, they landed only thanks to the skill of their pilots.

So what was the mistake? The Lockheed Martin designers had not even considered the possibility of crossing the International Date Line; they simply did not anticipate that at some point a day would have to be added or subtracted.
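The exact F-22 defect was not published, so the following is only a generic, hypothetical illustration of the class of bug: arithmetic on local wall-clock time jumps by a day at the date line, while arithmetic on UTC instants stays continuous. The zones UTC-12 and UTC+12 stand in for the two sides of the line; the function names are invented.

```python
from datetime import datetime, timedelta, timezone

EAST_OF_DATE_LINE = timezone(timedelta(hours=-12))
WEST_OF_DATE_LINE = timezone(timedelta(hours=12))


def elapsed_wall_clock(start: datetime, end: datetime) -> timedelta:
    """Buggy: compares local clock readings and ignores the UTC offset."""
    return end.replace(tzinfo=None) - start.replace(tzinfo=None)


def elapsed_utc(start: datetime, end: datetime) -> timedelta:
    """Correct: convert both instants to UTC before subtracting."""
    return end.astimezone(timezone.utc) - start.astimezone(timezone.utc)


# A one-hour westbound leg that crosses the line (hypothetical times).
start = datetime(2024, 1, 10, 10, 0, tzinfo=EAST_OF_DATE_LINE)
end = (start + timedelta(hours=1)).astimezone(WEST_OF_DATE_LINE)

print(end.date() - start.date())       # 1 day, 0:00:00: the calendar date jumped
print(elapsed_wall_clock(start, end))  # 1 day, 1:00:00
print(elapsed_utc(start, end))         # 1:00:00
```

Keeping all internal timekeeping in UTC and converting to local time only for display avoids this discontinuity.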

Other stories

There are a few more interesting stories on this endless subject. They are either surrounded by misconceptions or have already been covered in detail on GT and Habr.

The 1982 explosion in the Soviet gas transmission system, allegedly caused by software errors planted by the CIA. Experts categorically deny not only that the explosion on the Urengoy–Surgut–Chelyabinsk gas pipeline took place in 1982, but also that such an explosion was possible at all.

An algorithmic error caused an accident involving an A330 aircraft: 119 passengers and crew members were injured, 12 of them seriously.

The Ariane 5 launch vehicle turned into “confetti” on June 4, 1996, after an error occurred in the software component responsible for aligning the inertial platform. The loss was $500 million (the cost of the rocket and its payload).

Toyota: 89 people died between 2000 and 2010 due to faulty electronics and software.

Sources:

habrahabr.ru/company/mailru/blog/227743

www.wikiwand.com/en/Therac-25

www.baselinemag.com/c/a/Projects-Processes/We-Did-Nothing-Wrong

en.wikipedia.org/wiki/Northeast_blackout_of_2003

lps.co.nz/historical-project-failures-mars-climate-orbiter

www.jpl.nasa.gov/missions/mariner-1

inosmi.ru/inrussia/20071229/238739.html

https://www.revolvy.com/main/index.php?s=USS%20Yorktown%20(CG-48)

www.defenseindustrydaily.com/f22-squadron-shot-down-by-the-international-date-line-03087

Source: https://habr.com/ru/post/370153/
