Pseudoscience: should you trust scientific articles on psychology?

Significance level and p-value in mathematical statistics

The number of publications in scientific journals, including those in the humanities, grows every year. According to Bakhtin's definition, "the subject of the humanities is an expressive and speaking being. This being never coincides with itself and is therefore inexhaustible in its meaning and significance."

The inexhaustibility of that meaning and significance does not prevent researchers from analyzing their results with statistical methods. In particular, the findings of experimental psychology studies often rest on null hypothesis significance testing.


There is, however, a strong suspicion that the authors of some scientific papers are not very good at mathematics.

A statistical hypothesis is a statement about an unknown parameter of the population, tested on the basis of a sample. To justify a conclusion, the results on which the hypothesis is built must be tested for statistical significance. Reliability reflects how likely it is that a relationship found in one sample would also be found in another sample from the same population. It is almost never possible to study the entire population, and it is very difficult to repeat a study on many different samples. This is why statistical methods are so widely used: they estimate the probability of obtaining such a difference by chance, assuming that in reality there is no difference in the population.
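To make "the probability of obtaining such a difference by chance" concrete, here is a minimal permutation-test sketch in R. It is only an illustration of the idea, not a method used by the studies discussed below; the two samples are made-up numbers, and 10,000 shuffles is an arbitrary choice.

```r
# Made-up measurements for two groups (illustrative data only)
set.seed(1)
group_a <- c(5.1, 4.8, 6.0, 5.5, 5.9, 6.2, 5.0, 5.7)
group_b <- c(4.6, 4.9, 5.2, 4.4, 5.0, 4.7, 5.3, 4.5)

observed_diff <- mean(group_a) - mean(group_b)

pooled <- c(group_a, group_b)
n_a    <- length(group_a)
n_perm <- 10000

# If the null hypothesis is true, group labels are arbitrary:
# reshuffle them many times and record the difference in means each time.
perm_diffs <- replicate(n_perm, {
  idx <- sample(length(pooled), n_a)
  mean(pooled[idx]) - mean(pooled[-idx])
})

# Two-sided estimate: share of shuffled differences at least as extreme
# as the observed one
p_perm <- mean(abs(perm_diffs) >= abs(observed_diff))
p_perm
```

A small `p_perm` means that differences this large rarely arise from random relabeling alone.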

The null hypothesis is the hypothesis of no difference: the statement that values in the population do not differ, or that there is no relationship between them. Under the null hypothesis, any observed difference between values is due to chance, and the independent variable has no effect.

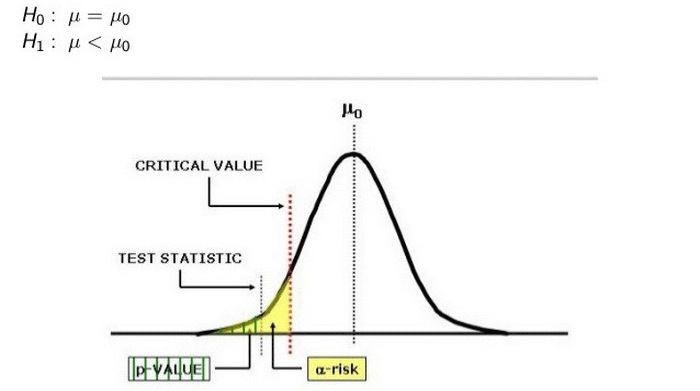

In modern scientific papers, null hypotheses are often tested using the p-value. It is the probability, computed under the assumption that the null hypothesis is true, that the test statistic takes a value at least as extreme as the one actually observed.
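As a sketch, here is how a p-value follows from a t statistic and its degrees of freedom in base R. The values `t_obs = 2.20` and `df = 28` are hypothetical. The one-sided version matches the "not less than the observed value" wording; psychology papers usually report the two-sided version.

```r
t_obs <- 2.20  # hypothetical observed t statistic
df    <- 28    # hypothetical degrees of freedom

# One-sided: P(T >= t_obs) for a t-distributed T with df degrees of freedom
p_one_sided <- pt(t_obs, df, lower.tail = FALSE)

# Two-sided: P(|T| >= |t_obs|), the usual choice in published t-tests
p_two_sided <- 2 * pt(-abs(t_obs), df)

c(one_sided = p_one_sided, two_sided = p_two_sided)
```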

For example, a significance level of 0.05 means that a 5% risk of error is accepted. In other words, the null hypothesis may be rejected in favor of the alternative hypothesis if the statistical test shows that, assuming the null hypothesis is true, a difference at least as large as the one detected would arise by chance with a probability of no more than 5%, i.e. the p-value does not exceed 0.05. If this significance level is not reached (p > 0.05), the difference may well be accidental, and the null hypothesis cannot be rejected. Thus, the significance level sets the accepted risk of an error of the first kind (rejecting a true null hypothesis), and the p-value is compared against it.
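A quick simulation shows what "a 5% risk of an error of the first kind" means in practice. The sketch assumes many hypothetical studies where the null hypothesis is true, with both groups drawn from the same normal distribution. It counts how often R's `t.test` still reports p < 0.05. Group size 20 and 10,000 repetitions are arbitrary choices.

```r
set.seed(42)
alpha  <- 0.05
n_sims <- 10000

# Each simulated study compares two groups drawn from the SAME population,
# so every "significant" result is an error of the first kind
p_values <- replicate(n_sims, {
  x <- rnorm(20)
  y <- rnorm(20)
  t.test(x, y)$p.value
})

type1_rate <- mean(p_values < alpha)
type1_rate  # should land close to alpha = 0.05
```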

The use of p-values to test null hypotheses in medical research has been criticized by many specialists. Moreover, in 2015 one scientific journal, Basic and Applied Social Psychology (BASP), banned the publication of articles that use p-values altogether. The journal explained its decision by noting that it is not very difficult to design a study that yields p < 0.05, and such p-values too often become an excuse for low-quality research. In practice, reliance on p-values often leads to errors of the first kind: detecting differences or relationships that do not actually exist.
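One well-known way a study can "find" p < 0.05 is by running many tests. The sketch below illustrates that mechanism; it is not BASP's stated reasoning. If every null hypothesis is true and k independent tests are run at α = 0.05, the chance of at least one significant result is 1 − (1 − α)^k. The simulation part assumes hypothetical studies of 20 t-tests each on pure noise.

```r
alpha   <- 0.05
n_tests <- c(1, 5, 10, 20)

# Chance of at least one p < alpha among k independent tests
# when all null hypotheses are true: 1 - (1 - alpha)^k
fwer <- 1 - (1 - alpha)^n_tests
round(fwer, 3)
# [1] 0.050 0.226 0.401 0.642

# Simulation check: each "study" runs 20 independent t-tests on noise
set.seed(7)
any_significant <- replicate(2000, {
  p <- replicate(20, t.test(rnorm(15), rnorm(15))$p.value)
  any(p < alpha)
})
mean(any_significant)  # should come out near 0.64
```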

In 2015, an article by Michèle Nuijten, a student at Tilburg University, and her colleagues, published in the journal Behavior Research Methods (doi: 10.3758/s13428-015-0664-2), caused quite a stir. She found that about half of all scientific articles on clinical psychology (that is, articles that analyze the results of experiments and draw conclusions from them) contain at least one inconsistent p-value, one that does not match the reported test statistic and degrees of freedom. Moreover, one in seven papers contains a grossly inconsistent p-value, which changes the conclusion about significance and can lead to an error of the first kind: the detection of differences or relationships that do not actually exist.
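The distinction between an inconsistent and a grossly inconsistent p-value can be sketched with a small R helper. `check_t_report` is hypothetical and deliberately simplified. It uses an assumed fixed tolerance of 0.005 and two-sided t-tests only. statcheck's own rules, which also allow for rounding of the reported numbers, are more careful.

```r
# Hypothetical helper: recompute a two-sided p-value from a reported t-test
# and compare it with the reported p-value.
check_t_report <- function(t_value, dof, reported_p, alpha = 0.05, tol = 0.005) {
  computed_p   <- 2 * pt(-abs(t_value), dof)
  # Inconsistent: the recomputed p differs from the reported one by more than tol
  inconsistent <- abs(computed_p - reported_p) > tol
  # Grossly inconsistent: the two p-values fall on opposite sides of alpha,
  # so the conclusion about significance changes
  gross <- inconsistent && ((reported_p < alpha) != (computed_p < alpha))
  list(computed_p = computed_p, inconsistent = inconsistent, gross = gross)
}

r1 <- check_t_report(3.00, 40, 0.005)  # consistent report
r2 <- check_t_report(2.20, 28, 0.01)   # inconsistent, still significant either way
r3 <- check_t_report(1.50, 28, 0.03)   # grossly inconsistent: reported p < .05, recomputed p > .05
```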

Nuijten states that these statistical errors often agree with the conclusions drawn by the authors of the papers. This suggests that some psychologists set out to obtain a particular result and, consciously or unconsciously, adjust the statistics to fit it.

To help scientists check their statistical calculations, Nuijten and her colleagues developed the statcheck program. It extracts statistics from scientific articles and recalculates the p-values. To process PDF files, it also needs a tool that converts PDF documents to plain text, such as Xpdf. statcheck is written in R, a programming language created specifically for statistical computing, and the package can be installed directly from the CRAN repository:

```r
install.packages("statcheck")  # install the package from CRAN (needed once)
library("statcheck")           # load the package into the current R session
```

Using statcheck, the researchers checked more than 250,000 p-values in articles published in psychology journals from 1983 to 2013. The results were confirmed: about half of all articles contain at least one error in a reported p-value.
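statcheck works by finding APA-style results such as "t(28) = 2.20, p = .03" in plain text and recomputing p. Below is a rough base-R sketch of that extraction step. It is not statcheck's actual code, and it handles only t-tests reported with "p =". The sample sentence is made up.

```r
# Made-up text containing two APA-style t-test reports
txt <- "The effect was significant, t(28) = 2.20, p = .03, and the control was not, t(40) = 0.80, p = .43."

# Capture degrees of freedom, t value and reported p-value
pattern <- "t\\(([0-9]+)\\) *= *(-?[0-9]*\\.?[0-9]+), *p *= *([0-9]*\\.[0-9]+)"

matches <- regmatches(txt, gregexpr(pattern, txt))[[1]]
parts   <- regmatches(matches, regexec(pattern, matches))

# One row per extracted result, with the p-value recomputed from t and df
results <- do.call(rbind, lapply(parts, function(m) {
  dof     <- as.numeric(m[2])
  t_value <- as.numeric(m[3])
  data.frame(df = dof, t = t_value,
             reported_p = as.numeric(m[4]),
             computed_p = 2 * pt(-abs(t_value), dof))
}))
results
```

Comparing `reported_p` with `computed_p` row by row is then the consistency check described above.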

In August 2016, the authors of the program went further and decided to publicly name the authors of the papers in which errors were found. A data set with an analysis of 688,112 p-values in 50,945 psychology articles was published on the PrePrints website.

According to experts, this is one of the largest post-publication audits of scientific articles in history. It is a crowdsourced audit, because the results of the automatic check must also be verified manually by the community, and this work will take months or even years.

Not everyone liked this initiative. Some authors, including reputable scientists, are unhappy that their work has been put on public display and subjected to such an audit. For example, the well-known psychologist Dorothy Bishop of the University of Oxford expressed her dissatisfaction: statcheck flagged two of her papers, although it found no errors in one of them.

Dorothy Bishop believes that automatic reports stating "0 errors" are not the best way to report on statistics, since merely appearing on the audit list may discredit the authors of such papers. As for her other paper, which does contain errors, she intends to consult her co-author and correct it. At the same time, she wants the statcheck program itself to be audited, because if it produces even 10% false positives, it harms the scientific community.

Other authors, on the contrary, are proud that the bot has issued an automatic report on their work stating "0 errors". Professor Jennifer Tuckett asks whether she can frame the report. That is the right attitude: with a sense of humor.

The results of the automatic audit of 50,945 psychology articles have yet to be verified. One can assume that about half of them will contain errors, as last year's preliminary study on a more limited sample showed. In any case, the data set is in the public domain, and it supports full-text search by paper title and author.

So if you come across a link to a psychology study, be sure to check it in the PubPeer database.

Source: https://habr.com/ru/post/369755/
