Artificial neural networks in simple words

Whenever I brought up neural networks over a bottle of beer, people would look at me fearfully, grow sad, sometimes develop an eye twitch, and in extreme cases crawl under the table. But in fact these networks are simple and intuitive. Yes, yes, exactly! Let me prove it to you.

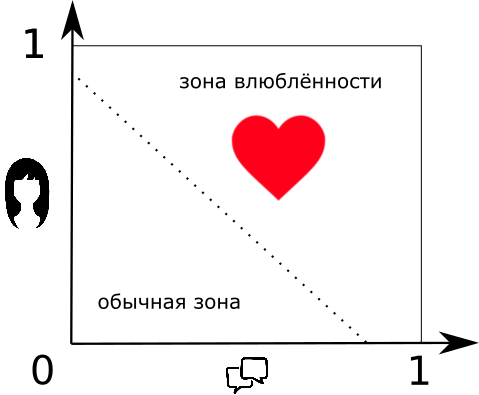

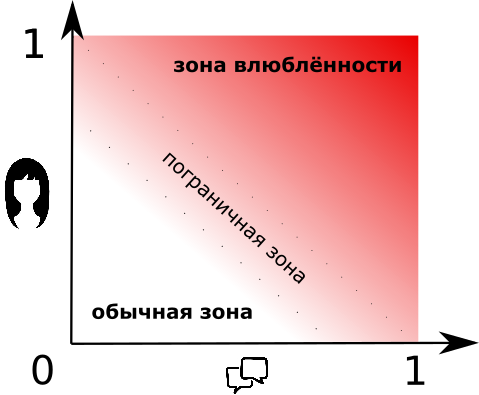

Suppose I know two things about a girl: whether I find her cute, and whether there is anything to talk to her about. If there is, we count it as one; if not, as zero. We apply the same principle to appearance. The question: “Which girl will I fall in love with, and why?”

You can reason simply and uncompromisingly: “If she is cute and there is something to talk about, I will fall in love. If neither, forget it.”

But what if I find the lady cute, but there is nothing to talk to her about? Or vice versa?

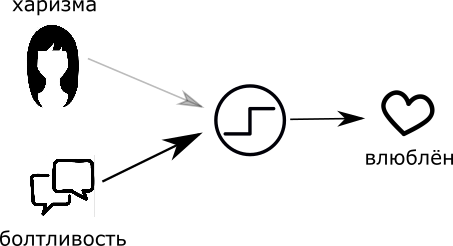

Clearly, for each of us one of these will matter more. More precisely, each parameter has its own level of importance, or rather, weight. Multiplying a parameter by its weight gives, respectively, the “influence of appearance” and the “influence of talkativeness.”

And now I can answer my question with a clear conscience:

“If the influence of appearance and the influence of talkativeness add up to more than the “falling in love” value, then I will fall in love...”

That is, if I give a lady's talkativeness a large weight and her appearance a small one, then in a borderline case I will fall in love with the one who is pleasant to chat with. And vice versa.

In fact, this rule is the neuron.

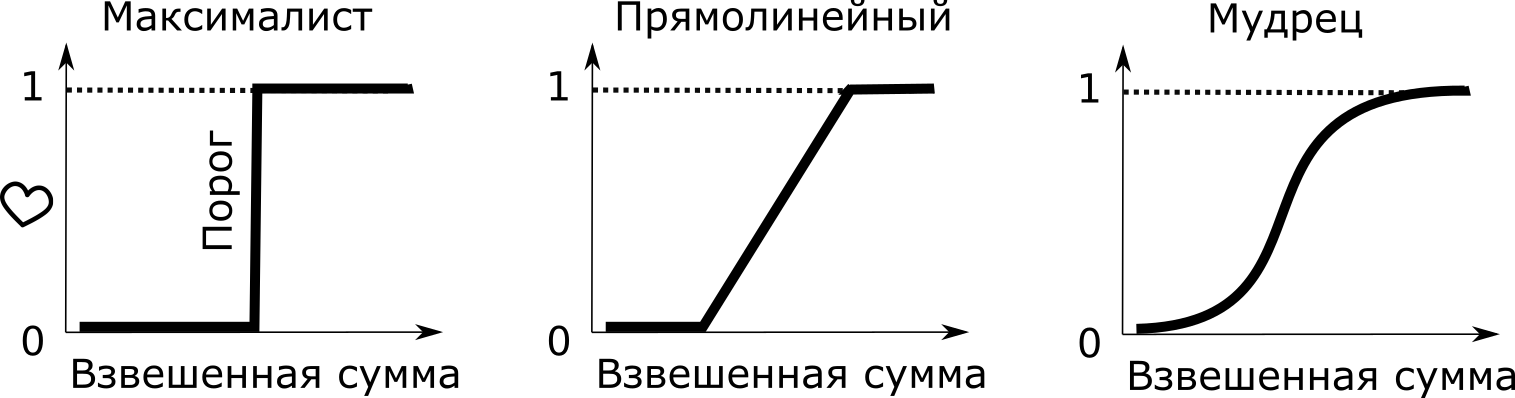

An artificial neuron is a function that converts several input facts into one output. By adjusting the weights of these facts, as well as the excitation threshold, we tune how sensibly the neuron behaves. In principle, for many people the science of life ends at this level, but this story is not about us, right?

Let's draw a few more conclusions:
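The rule above fits in a few lines of Python. This is only a minimal sketch: the name `maximalist_neuron` and the specific weights and threshold are made up for illustration and are not taken from the figures.

```python
def maximalist_neuron(inputs, weights, threshold):
    """Return 1 (fire) if the weighted sum of the inputs exceeds the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


# Hypothetical settings: conversation matters more to me than looks.
weights = [0.4, 0.7]   # [appearance, talkativeness]
threshold = 0.5        # the "falling in love" value

cute_but_silent = maximalist_neuron([1, 0], weights, threshold)
plain_but_chatty = maximalist_neuron([0, 1], weights, threshold)
print(cute_but_silent, plain_but_chatty)  # prints: 0 1
```

With these made-up numbers the borderline case goes to the person who is pleasant to chat with; swapping the two weights flips the outcome.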

- If both weights are small, then it will be difficult for me to fall in love with anyone.

- If both weights are too large, I will fall in love even with a lamppost.

- You can also make me fall in love with a lamppost by lowering the amorousness threshold, but please, don't do this to me! Let's forget about the threshold for now, ok?

Funnily enough, the “amorousness” parameter is actually called the “excitation threshold.” But so that this article doesn't earn an “18+” rating, let's agree to say simply “threshold,” ok?

Neural network

No lady is unambiguously cute or unambiguously sociable. And falling in love comes in degrees too, whatever anyone says. So instead of the brutal and uncompromising “0” and “1”, let's use percentages. Then we can say “I'm very much in love (80%)” or “this lady is not very talkative (20%).”

Our primitive “maximalist neuron” from the first part no longer suits us. We replace it with a “sage neuron,” whose output is a number from 0 to 1 that depends on the input data.

The “sage neuron” can tell us: “This lady is pretty enough, but I don't know what to talk to her about, so I'm not all that excited about her.”
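Here is one way such a “sage neuron” might look in code. The article only says the output lies between 0 and 1; the logistic (sigmoid) function used below is an assumption, chosen because it is a common way to squash a weighted sum into that range. The weights and threshold are again invented for illustration.

```python
import math


def sage_neuron(inputs, weights, threshold):
    """Return a value strictly between 0 and 1 instead of a hard 0/1 verdict.

    Assumption: a logistic (sigmoid) squashing function; the text does not
    say which smooth function is used.
    """
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1.0 / (1.0 + math.exp(-(total - threshold)))


# Hypothetical lady: quite pretty (80%), not very talkative (20%).
weights = [2.0, 3.0]   # [appearance, talkativeness], made-up values
threshold = 2.5

excitement = sage_neuron([0.8, 0.2], weights, threshold)
print(f"excitement: {excitement:.0%}")
```

With these numbers the weighted sum falls slightly short of the threshold, so the answer lands a little below 50%: not a refusal, just “not all that excited.”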

By the way, the input facts of the neuron are called synapses, and the output judgment is called an axon. Connections with a positive weight are called excitatory, and those with a negative weight are called inhibitory. If the weight is zero, then it is considered that there is no connection (dead connection).

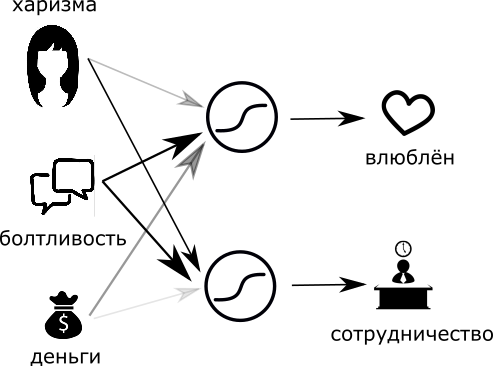

Let's go further. Let's make another assessment from the same two facts: how good would it be to work (cooperate) with such a girl? We proceed in exactly the same way: add a sage neuron and adjust the weights to our liking.

But judging a girl by two characteristics is very crude. Let's judge her by three! We add one more fact, money, which ranges from zero (absolutely poor) to one (Rockefeller's daughter). Let's see how our judgments change once money arrives...

For myself, I decided that money is not very important for charm, but a chic look can still affect me, so I will make the weight of money small but positive.

At work, I don't care at all how much money a girl has, so I'll make that weight zero.

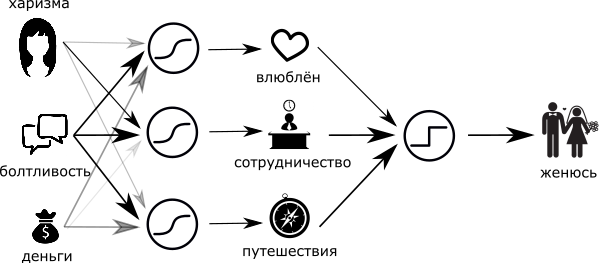

Judging a girl only on work and love is very silly. Let's add how pleasant it would be to travel with her:

- Charisma in this problem is neutral (zero or low weight).

- Talkativeness will help us (positive weight).

- Real travel, with real drive, begins when the money runs out, so I'll make the weight of money slightly negative.

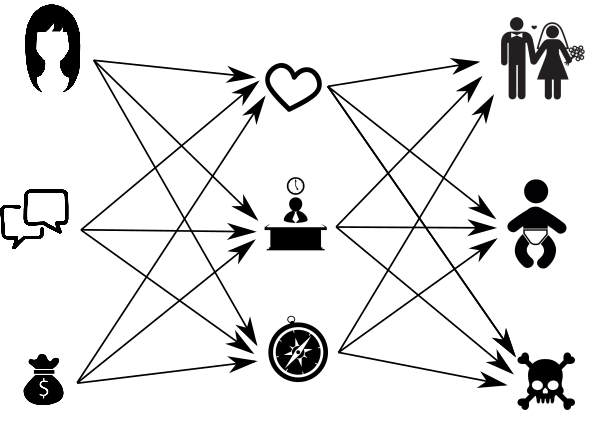

We combine all three schemes into one and find that we have moved to a deeper level of judgment: from charisma, money, and talkativeness to admiration, cooperation, and the comfort of traveling together. And note that these are also signals from zero to one. So now I can add a final “maximalist neuron” and let it answer unambiguously the question “marry or not?”

Well, of course, not everything is so simple (where women are concerned). Let's bring some drama and reality into our simple, rosy world. First, let's make the “marry or not” neuron a sage one. Doubts are inherent in everyone, one way or another. And let's also add an “I want children with her” neuron and, to be completely truthful, a “stay away from her” neuron.

I don't understand anything about women, and so my primitive network now looks like the picture at the beginning of the article.

The input judgments are called the “input layer,” the final ones the “output layer,” and the one hidden in the middle is called the “hidden layer.” The hidden layer is my judgments, half-finished products, thoughts that no one knows about. There may be several hidden layers, or none at all.
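The combined scheme can be sketched as a tiny two-layer network. Only the signs of the weights follow the text (money slightly positive for admiration, zero for cooperation, slightly negative for travel). Every concrete number, the sigmoid function, and the names `HIDDEN` and `marry` are assumptions made for this illustration.

```python
import math


def sage_neuron(inputs, weights, threshold):
    """Smooth neuron: output between 0 and 1 (sigmoid is an assumed choice)."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1.0 / (1.0 + math.exp(-(total - threshold)))


def maximalist_neuron(inputs, weights, threshold):
    """Hard neuron: 1 if the weighted sum exceeds the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


# Hidden layer: (weights for [charisma, talkativeness, money], threshold).
# All values are hypothetical; only their signs mirror the article.
HIDDEN = {
    "admiration":  ([3.0, 2.0, 0.5], 2.5),   # money: small but positive
    "cooperation": ([1.0, 3.0, 0.0], 2.0),   # money: does not matter
    "travel":      ([0.0, 3.0, -0.5], 1.5),  # money: slightly negative
}
OUTPUT_WEIGHTS = [1.0, 1.0, 1.0]
OUTPUT_THRESHOLD = 1.5


def marry(charisma, talkativeness, money):
    """Run the three facts through the hidden layer, then the final verdict."""
    facts = [charisma, talkativeness, money]
    hidden = [sage_neuron(facts, w, t) for w, t in HIDDEN.values()]
    return maximalist_neuron(hidden, OUTPUT_WEIGHTS, OUTPUT_THRESHOLD)


print(marry(1.0, 1.0, 0.5))  # charming and talkative
print(marry(0.0, 0.0, 0.5))  # neither
```

The three hidden outputs are themselves signals from zero to one, which is exactly why a further neuron can be stacked on top of them.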

Down with maximalism.

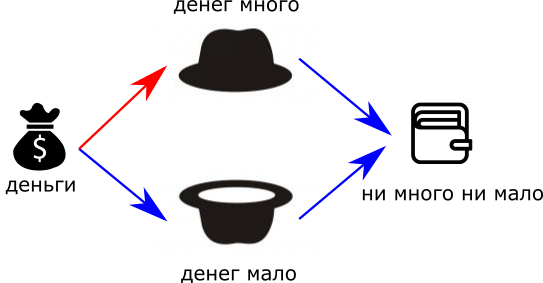

Remember how I talked about the negative influence of money on my desire to travel with someone? Well, I wasn't being entirely honest. The best travel companion is a person who has neither too little money nor too much. That's more interesting for me, and I won't explain why.

But here I am faced with a problem:

If I make the weight of money negative, then the less money, the better for traveling.

If positive, then the richer, the better.

If zero, then money doesn't matter at all.

With a single weight, I simply cannot make the neuron recognize the situation “not too much, not too little”!

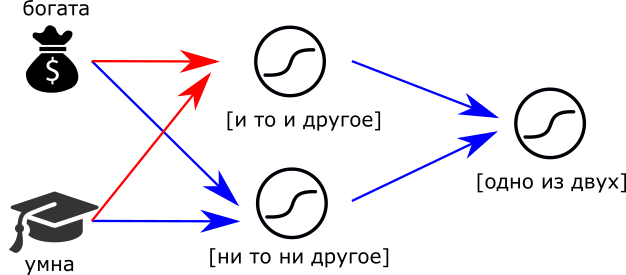

To get around this, I will make two neurons, “a lot of money” and “little money,” and feed both of them the lady's money as input.

Now I have two judgments: “a lot” and “little.” If both are weak, the result is literally “neither much nor little.” So we add one more neuron at the output, with negative weights:

“Neither much nor little.” Red arrows are positive connections, blue are negative.

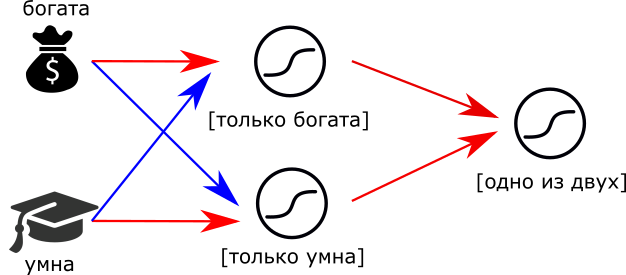
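The same trick can be sketched in code: two hidden neurons judge “a lot of money” and “little money,” and an output neuron with negative weights fires only when both stay quiet. The sigmoid function, all numeric values, and the name `moderate_money` are assumptions for illustration and are not read off the figure.

```python
import math


def sage_neuron(inputs, weights, threshold):
    """Smooth neuron: output between 0 and 1 (sigmoid is an assumed choice)."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1.0 / (1.0 + math.exp(-(total - threshold)))


def moderate_money(money):
    """Return a high value when money is neither close to 0 nor close to 1."""
    # Hidden layer: both neurons look at the same single input.
    a_lot = sage_neuron([money], [10.0], 7.0)      # positive weight: fires when rich
    little = sage_neuron([money], [-10.0], -3.0)   # negative weight: fires when poor
    # Output neuron: negative weights, so either hidden judgment suppresses it.
    return sage_neuron([a_lot, little], [-5.0, -5.0], -2.5)


for money in (0.0, 0.5, 1.0):
    print(money, round(moderate_money(money), 2))
```

A single neuron with one weight can only rise or only fall as money grows; the extra layer is what lets the output rise and then fall again.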

In general, this means that neurons are like the pieces of a construction set. Just as a processor is built from transistors, we can assemble a brain from neurons. For example, the judgment “either rich or smart” can be built like this:

“Either-or.” Red arrows are positive connections, blue are negative.

Or like this:

You can replace the “sage” neurons with “maximalists,” and then we get the logical XOR operator. The main thing is not to forget to set the excitation thresholds.

Unlike transistors and the uncompromising “if-then” logic of a typical programmer, a neural network can make balanced decisions. Its results change smoothly as the input parameters change smoothly. Now that is wisdom!

I would like to draw your attention to the fact that adding a layer of two neurons allowed the “neither much nor little” neuron to make a more complex and balanced judgment, to move to a new level of logic: from “a lot” or “little” to a compromise, to a judgment that is deeper from a philosophical point of view. And what if we add more hidden layers? We can wrap our minds around that simple network, but what about a network with 7 layers? Can we grasp the depth of its judgments? And what if each layer, including the input one, has about a thousand neurons? What do you think it is capable of?
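As a rough check, here is XOR assembled from three threshold neurons. This is one of several possible wirings and not necessarily the one drawn in the figures; the weights, thresholds, and the name `either_rich_or_smart` are chosen for this sketch.

```python
def maximalist_neuron(inputs, weights, threshold):
    """Hard neuron: 1 if the weighted sum exceeds the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def either_rich_or_smart(rich, smart):
    """XOR of two 0/1 inputs built from two hidden neurons and one output neuron."""
    # Hidden neuron 1: "rich but not smart" (positive and negative connection).
    only_rich = maximalist_neuron([rich, smart], [1, -1], 0.5)
    # Hidden neuron 2: "smart but not rich".
    only_smart = maximalist_neuron([rich, smart], [-1, 1], 0.5)
    # Output neuron: fires if either hidden neuron fired.
    return maximalist_neuron([only_rich, only_smart], [1, 1], 0.5)


for rich in (0, 1):
    for smart in (0, 1):
        print(rich, smart, "->", either_rich_or_smart(rich, smart))
```

Note that every neuron has a threshold of 0.5 here; with a threshold of 0 or below, the output neuron would fire even when both hidden neurons are silent.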

Imagine that I kept complicating my example of marriage and falling in love and arrived at such a network. Somewhere inside it all nine of our neurons are hidden, and this is already closer to the truth. However much I might want to, understanding all the dependencies and the depth of judgment of such a network is simply impossible. For me, the step from a 3x3 network to a 7x1000 one is comparable to grasping the scale of, if not the universe, then the galaxy relative to my own height. Simply put, I will not manage it. A decision of such a network, the firing of one of its output neurons, will be inexplicable by logic. This is what in everyday life we might call “intuition” (or at least “one of ..”). An incomprehensible wish of the system, or its hint.

But unlike our synthetic 3x3 example, where each hidden-layer neuron is quite clearly formalized, that is not necessarily so in such a network. In a well-tuned network whose size is not excessive for the problem, each neuron will detect some feature, but this by no means implies that our language has a word or sentence that can describe it. Projected onto a person, it is some trait of theirs that you sense but cannot put into words.

Training.

A few lines earlier I mentioned a well-tuned network, which probably provoked the unspoken question: “How can we tune a network consisting of several thousand neurons? How many man-years and ruined lives would that take?..” I am afraid to guess the answer to the last question. It is far better to automate the tuning process and make the network configure itself. This automated process is called training. To give a superficial idea of it, I will return to the original metaphor of the “very important question”:

We come into this world with a pure, innocent brain and a neural network that is absolutely not tuned to women. It needs to be tuned properly somehow, so that happiness and joy come to our home. For that we need experience, and there are several ways to get it:

1) Learning with a teacher, that is, supervised learning (for romantics). Watch plenty of Hollywood melodramas and read tearful novels. Or observe your parents and/or friends. Then, depending on the sample, go and test the knowledge you gained. After a failed attempt, repeat everything from the start, beginning with the novels.

2) Learning without a teacher, that is, unsupervised learning (for desperate experimenters). Try marrying a dozen or so women by trial and error. After each marriage, scratch your head in bewilderment. Repeat until you realize that you are tired and “already know how it goes.”

3) Learning without a teacher, option 2 (the path of desperate optimists). Forget about all this, get on with life, and one day find yourself married. After that, retune your network to fit the current reality so that everything suits you.

Logically, I should now describe all this in detail, but without mathematics it would be too philosophical. So I think I should stop here. Perhaps another time?

All of the above holds for an artificial neural network of the perceptron type. Other networks resemble it in basic principles but have their own nuances.

I wish you good weights and excellent training samples! And if you no longer need this, then tell someone else about it.

P.S.

The weights of my own neural network are not tuned, and I just cannot figure out which resource this article should belong to.


Source: https://habr.com/ru/post/369349/
