How big is the Internet?

We have grown used to the continuous, unchecked growth of information on the network. No one can stop or slow this process, and there would be no point in trying. Everyone knows the Internet is huge, both in the amount of data and in the number of sites. But how huge? Can we estimate, even roughly, how many petabytes travel along the cables that wrap around the planet? How many sites are waiting for visitors on hundreds of thousands of servers? Many people ask this question, including scientists who are trying to develop ways to measure the vast sea of information we call the Internet.

The World Wide Web is a very lively place. According to the Internet Live Stats service, every second Google receives more than 50,000 search queries, YouTube viewers watch 120,000 videos, and almost 2.5 million emails are sent. Impressive as these numbers are, they still do not give a full picture of the Internet's size. In September 2014 the total number of websites exceeded one billion, and today there are approximately 1.018 billion. This count does not include the so-called deep web, that is, the set of sites not indexed by search engines. As Wikipedia points out, the deep web is not a synonym for the dark web, which mainly consists of resources used for all sorts of illegal activity. Content in the deep web can be entirely harmless (for example, online databases) or entirely unsuitable for the law-abiding public (for example, black-market trading platforms accessible only through Tor). That said, Tor is used not only by dishonest people but also by perfectly law-abiding users who want anonymity online.

Of course, this estimate of the number of websites is approximate. Sites appear and disappear, and the size of the deep and dark webs is almost impossible to determine. So even a rough estimate of the network's size by this criterion is very difficult. But one thing is certain: the network keeps growing.


It's all about the data

If there are more than a billion websites, there are far more individual pages. For example, WorldWideWebSize estimates the size of the Internet by the number of pages. The calculation method was developed by Maurice de Kunder, who published it in February of this year. In brief, the system queries Google and Bing for a list of 50 common English words. The hit counts are then extrapolated using the known frequency of these words in printed sources, corrected, and adjusted for the overlap between the results of different search engines, which yields the final estimate. To date, this puts the size of the Internet at 4.58 billion individual web pages. Note that this covers only the English-language segment of the network; for comparison, the site also gives 225 million pages for the Dutch segment.
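The core of the extrapolation can be sketched in a few lines. This is a minimal illustration of the general idea, not de Kunder's actual implementation: it assumes that a word found in a known fraction of documents in a reference corpus appears in the same fraction of a search engine's index, so the index size is roughly the reported hit count divided by that fraction. The function names, the simple averaging over words, the overlap figure, and all numbers are hypothetical.

```python
# Hypothetical sketch of frequency-based index-size extrapolation.
# All numbers below are illustrative, not WorldWideWebSize data.


def estimate_index_size(hits: dict[str, float], doc_fraction: dict[str, float]) -> float:
    """Estimate how many documents a search engine indexes.

    hits: result count the engine reports for each query word.
    doc_fraction: share of documents in a reference corpus that contain the word.
    If a word appears in a fraction f of all documents and the engine reports
    h hits for it, the engine holds roughly h / f documents. The per-word
    estimates are averaged to smooth out noise (an assumption of this sketch).
    """
    estimates = [hits[word] / doc_fraction[word] for word in hits]
    return sum(estimates) / len(estimates)


def combined_size(size_a: float, size_b: float, overlap: float) -> float:
    """Size of the union of two indexes: both sizes minus the pages they share."""
    return size_a + size_b - overlap


# Illustrative inputs only.
fractions = {"the": 0.80, "house": 0.05}
google_hits = {"the": 3.2e9, "house": 2.0e8}
bing_hits = {"the": 1.6e9, "house": 1.0e8}

google_size = estimate_index_size(google_hits, fractions)  # about 4.0e9
bing_size = estimate_index_size(bing_hits, fractions)      # about 2.0e9
# Assume (hypothetically) that 1.5e9 pages are indexed by both engines.
total = combined_size(google_size, bing_size, overlap=1.5e9)  # about 4.5e9
print(f"{total:.2e} pages")
```

Averaging several words keeps any single word whose web usage differs from the reference corpus from dominating the estimate.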

But a web page is too abstract a unit of measurement. It is far more interesting to estimate the size of the Internet by the amount of information. Here, though, there are nuances. What kind of information should we count: transmitted or processed? If we are interested in transmitted information, even that can be measured in different ways: how much data can be transmitted per unit of time, or how much data actually is transmitted.

One way to assess the information circulating on the Internet is to measure traffic. According to Cisco, by the end of 2016 annual worldwide traffic will reach 1.1 zettabytes. By 2019 it will nearly double, reaching 2 zettabytes per year. That is a lot, but how can anyone picture $10^{21}$ bytes? Cisco's own infographic offers a hint: 1 zettabyte is equivalent to 36,000 years of HDTV video, and it would take 5 years to watch all the video transmitted around the world in a single second. Admittedly, that same infographic predicted we would cross the zettabyte threshold at the end of 2015; the forecast missed, but only slightly.
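For a sense of the pace implied by the Cisco forecast, the sketch below computes the constant annual growth rate that takes 1.1 ZB to 2 ZB. It assumes three years between the two figures (2016 to 2019); the function name is illustrative.

```python
# Implied compound annual growth of global IP traffic in the Cisco forecast
# (assumes three years between the 2016 and 2019 figures).
ZETTABYTE = 10**21  # bytes, decimal SI prefix


def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


rate = implied_annual_growth(1.1 * ZETTABYTE, 2.0 * ZETTABYTE, years=3)
print(f"implied growth: {rate:.0%} per year")  # implied growth: 22% per year
```

About 22% a year compounds to a factor of roughly 1.8 over the three years.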

In 2011 a study was published according to which, in 2007, humankind stored approximately $2.4 \times 10^{21}$ bits of information, that is, 0.3 zettabytes, on all its digital devices and media. The total computing power of the world's fleet of general-purpose computing devices reached $6.4 \times 10^{12}$ MIPS. Curiously, 25% of this total came from game consoles, 6% from mobile phones, and only 0.5% from supercomputers. Meanwhile, the total power of specialized computing devices was estimated at $1.9 \times 10^{14}$ MIPS (about 30 times more), and 97% of that came from... graphics cards. Of course, nine years have passed since then. But we can very roughly estimate the current state of affairs from the fact that over 2000–2007 the volume of stored information grew by an average of 26% per year, and computing power by 64%. Taking into account the development and falling cost of storage media, as well as the slowing growth in processor performance, let us assume that stored information grows by 30% per year and computing power by 60%. Then the amount of stored data in 2016 can be estimated at $1.96 \times 10^{22}$ bits = 2.45 zettabytes, and the computing power of personal computers, smartphones, tablets and set-top boxes at about $2.75 \times 10^{14}$ MIPS.
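The projection above is plain compound growth, sketched below with the article's own assumed rates (30% for storage, 60% for computing). Note that the quoted 2016 figures correspond to eight yearly growth steps from the 2007 baseline, not nine; nine steps would give roughly $2.5 \times 10^{22}$ bits (about 3.2 ZB) and about $4.4 \times 10^{14}$ MIPS. Constant and function names are illustrative.

```python
# Compound-growth projection from the 2007 baseline of the 2011 study.
STORED_BITS_2007 = 2.4e21   # bits stored on all digital media in 2007
GENERAL_MIPS_2007 = 6.4e12  # general-purpose computing capacity in 2007, MIPS
STORAGE_GROWTH = 0.30       # assumed annual growth of stored data
COMPUTE_GROWTH = 0.60       # assumed annual growth of computing power


def project(value: float, rate: float, years: int) -> float:
    """Grow `value` by `rate` per year, compounded over `years` years."""
    return value * (1 + rate) ** years


years = 8  # eight yearly steps reproduce the figures quoted in the text
bits = project(STORED_BITS_2007, STORAGE_GROWTH, years)
mips = project(GENERAL_MIPS_2007, COMPUTE_GROWTH, years)
zettabytes = bits / 8 / 1e21  # 8 bits per byte, 10**21 bytes per ZB

print(f"{bits:.3g} bits = {zettabytes:.3g} ZB")  # 1.96e+22 bits = 2.45 ZB
print(f"{mips:.3g} MIPS")                        # 2.75e+14 MIPS
```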

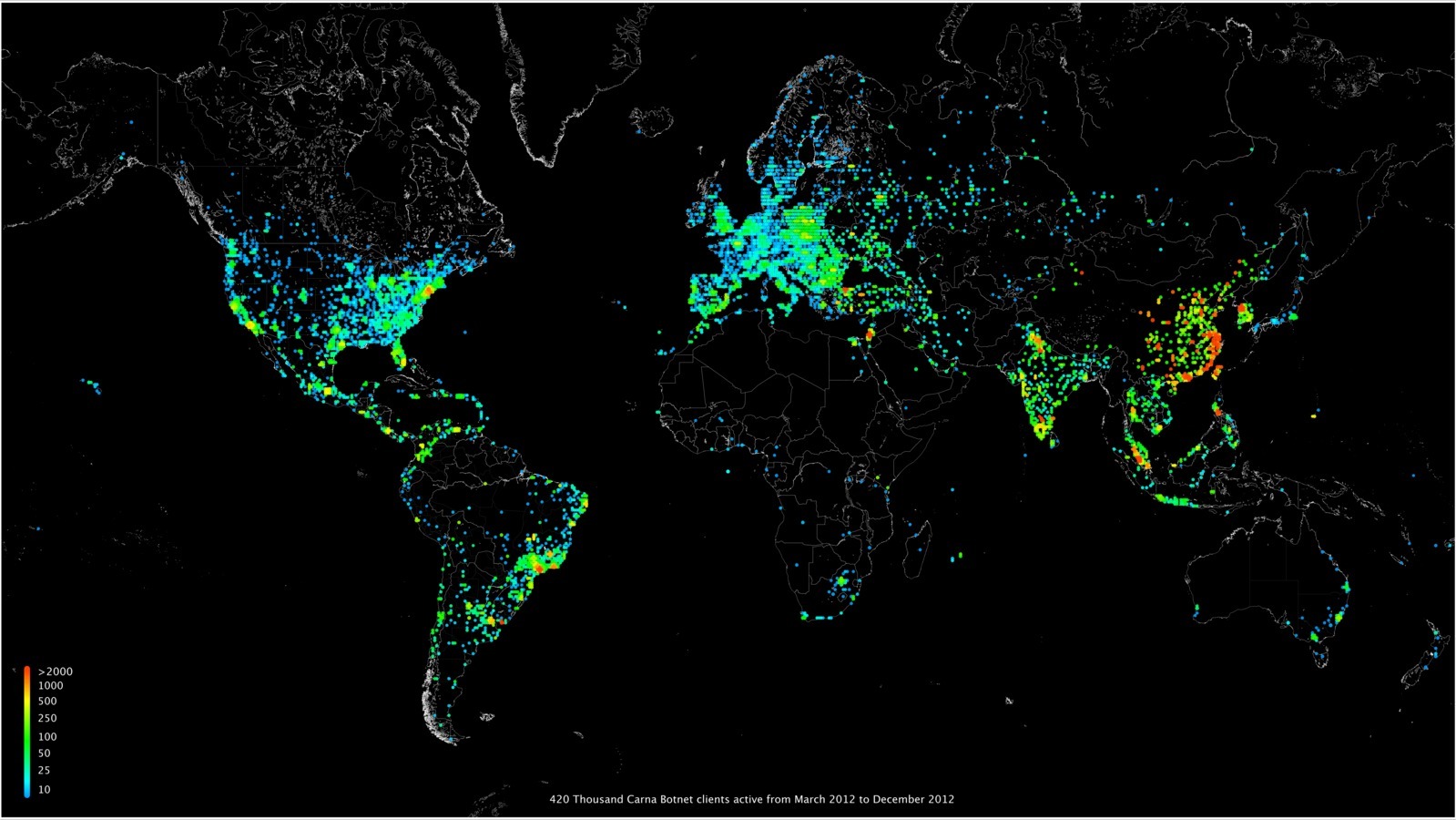

In 2012 an interesting study appeared that counted the IPv4 addresses in use at the time. Its most remarkable feature is that the data was gathered by scanning the entire Internet with a huge botnet of 420,000 hacked nodes.

After the data was collected and processed algorithmically, it turned out that about 1.3 billion IP addresses were active at the time, and another 2.3 billion were inactive.
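To put the 2012 figures in context, IPv4 has $2^{32}$ (about 4.29 billion) possible addresses. The short calculation below shows what share of that space each group represents; the variable names are illustrative.

```python
IPV4_SPACE = 2**32  # total number of possible IPv4 addresses

active = 1.3e9    # addresses found active in the 2012 scan
inactive = 2.3e9  # addresses found inactive

for label, count in (("active", active), ("inactive", inactive)):
    print(f"{label}: {count / IPV4_SPACE:.1%} of the IPv4 address space")

# Addresses that fall in neither group (the text does not say why).
remainder = IPV4_SPACE - active - inactive
print(f"in neither group: {remainder / 1e9:.2f} billion addresses")
```

Roughly 30% of the address space was active and about 54% inactive, leaving about 0.69 billion addresses in neither group.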

Physical embodiment

Despite the arrival of the digital age, bits and bytes remain somewhat abstract concepts for many of us. Memory used to be measured in megabytes; now it is measured in gigabytes. What if we try to imagine the size of the Internet in some physical form? In 2015, two scientists suggested using A4 sheets of paper for the estimate. Based on data from the WorldWideWebSize service mentioned above, they treated each web page as equivalent to 30 sheets of paper. That gives $4.54 \times 10^9 \times 30 = 1.36 \times 10^{11}$ A4 pages. But for human perception this is no better than bytes. So the paper was tied to... the Amazon rainforest. According to the authors, producing that much paper would take 8,011,765 trees, equivalent to 113 km² of jungle, or 0.002% of the total area of the Amazon rainforest. Later, however, the Washington Post suggested that 30 sheets per page was too many and that it would be more accurate to equate one web page to 6.5 A4 sheets. On that basis, the entire Internet could be printed on 305.5 billion sheets.
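The paper arithmetic can be checked directly. The sketch below reproduces the study's figure and shows that its tree count implies about 17,000 sheets per tree. It also shows that 4.54 billion web pages at 6.5 sheets each come to about 29.5 billion sheets, not 305.5 billion; dividing 305.5 billion by 6.5 gives 47 billion, so the larger figure presumably rests on a different web-page count that the text does not state. Variable names are illustrative.

```python
WEB_PAGES = 4.54e9             # WorldWideWebSize figure used in the 2015 study
SHEETS_PER_PAGE_STUDY = 30     # the study's assumption
SHEETS_PER_PAGE_WAPO = 6.5     # the Washington Post's suggestion
TREES = 8_011_765              # trees quoted by the study's authors

sheets_study = WEB_PAGES * SHEETS_PER_PAGE_STUDY  # about 1.36e11 sheets
sheets_per_tree = sheets_study / TREES            # about 17,000 sheets per tree
sheets_wapo = WEB_PAGES * SHEETS_PER_PAGE_WAPO    # about 2.95e10 sheets

# Working backwards from the 305.5 billion sheets quoted in the text:
implied_web_pages = 305.5e9 / SHEETS_PER_PAGE_WAPO  # about 4.7e10 web pages

print(f"{sheets_study:.3g} sheets, {sheets_per_tree:,.0f} sheets per tree")
print(f"{sheets_wapo:.3g} sheets at 6.5 per page; "
      f"305.5e9 sheets implies {implied_web_pages:.3g} web pages")
```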

But all this applies only to text, which is far from the largest share of the total data volume. According to Cisco, in 2015 video alone accounted for 27,500 petabytes per month, while the combined traffic of websites, email and “data” was 7,700 petabytes. File transfers accounted for slightly less, 6,100 petabytes. In case anyone has forgotten, a petabyte is a million gigabytes. So the Amazon rainforest cannot help us picture the volume of data on the Internet.
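The three Cisco categories quoted above can be compared as shares of their combined total, which makes the dominance of video concrete. This considers only those three categories (Cisco's full breakdown may include others), and the labels are paraphrased.

```python
# Monthly traffic in 2015 for the three categories quoted from Cisco, in PB.
monthly_pb = {
    "video": 27_500,
    "web, email and data": 7_700,
    "file transfer": 6_100,
}

total_pb = sum(monthly_pb.values())  # 41,300 PB per month across these three
shares = {name: pb / total_pb for name, pb in monthly_pb.items()}

for name, share in shares.items():
    print(f"{name}: {share:.0%}")

# 1 PB = 10**6 GB, so the video figure alone is 2.75e10 GB per month.
print(f"video: {monthly_pb['video'] * 10**6:.3g} GB per month")
```

Video makes up about two thirds of the three categories combined, while web, email and data together come to under a fifth.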

The 2011 study mentioned above proposed visualizing this volume as a stack of CDs. According to its authors, in 2007, 94% of all information was stored in digital form: 277.3 optimally compressed exabytes (a term meaning data compressed with the most efficient algorithms available in 2007). If we recorded all of it on DVDs instead (4.7 GB each), we would get 59,000,000,000 discs. Assuming each disc is 1.2 mm thick, the stack would be 70,800 km high. For comparison, the Earth's equator is 40,000 km long, and the total length of Russia's state border is 61,000 km. And that is the amount of data as of 2007! Now let us estimate, in the same way, the total traffic forecast for this year, 1.1 zettabytes. We get a stack of DVDs 280,850 km high. Time for space comparisons: the average distance to the Moon is 385,000 km.
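The DVD stack heights follow from dividing the data volume by disc capacity and multiplying by disc thickness. The sketch assumes decimal units (1 GB = $10^9$ bytes, 1 EB = $10^{18}$ bytes, 1 ZB = $10^{21}$ bytes), which reproduce the figures in the text; the function name `dvd_stack` is illustrative.

```python
DVD_CAPACITY = 4.7e9     # bytes per single-layer DVD (decimal GB)
DVD_THICKNESS_MM = 1.2   # thickness of one disc, in millimetres


def dvd_stack(total_bytes: float) -> tuple[float, float]:
    """Return (number of discs, stack height in km) needed for `total_bytes`."""
    discs = total_bytes / DVD_CAPACITY
    height_km = discs * DVD_THICKNESS_MM / 1e6  # 1 km = 1,000,000 mm
    return discs, height_km


discs_2007, km_2007 = dvd_stack(277.3e18)  # about 5.9e10 discs, 70,800 km
discs_2016, km_2016 = dvd_stack(1.1e21)    # about 2.34e11 discs, 280,850 km
print(f"2007: {discs_2007:.3g} discs, {km_2007:,.0f} km")
print(f"2016 traffic: {discs_2016:.3g} discs, {km_2016:,.0f} km")
```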

Another analogy: in 2007 the combined performance of all general-purpose computing devices reached $6.4 \times 10^{18}$ instructions per second. If we assume that the human brain has 100 billion neurons, each with 1,000 connections to neighboring neurons and sending up to 1,000 impulses per second, then the brain's maximum is $10^{17}$ neural impulses per second.
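The paragraph leaves the final comparison implicit. Dividing one figure by the other gives the ratio below; instructions and neural impulses are not equivalent units, so this is only a crude order-of-magnitude comparison using the brain parameters assumed in the text.

```python
COMPUTER_IPS_2007 = 6.4e18  # instructions per second, general-purpose devices

# Brain parameters as assumed in the text.
neurons = 100e9
connections_per_neuron = 1_000
pulses_per_second = 1_000
brain_pulses_per_second = neurons * connections_per_neuron * pulses_per_second

ratio = COMPUTER_IPS_2007 / brain_pulses_per_second
print(f"brain: {brain_pulses_per_second:.0e} impulses/s, computers: {ratio:.0f}x more")
```

On this count, the 2007 general-purpose fleet executed about 64 times more instructions per second than the brain's upper bound of impulses.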

Looking at all these powers of ten, one gets a persistent sense of an information flood. The one consolation is that our computing power is growing faster than the amount of stored information. So we can only hope to develop artificial intelligence systems capable of at least processing and analyzing these ever-growing volumes of data. After all, teaching a computer to analyze text is one thing, but what about images? Not to mention cognitive video processing. In the end, the world will belong to those who can extract the most from all the petabytes filling the worldwide network.

Source: https://habr.com/ru/post/368853/
