A childhood dream, or the search for alternatives to the Turing machine

Sometime around 1993 (I was in second grade at the time), after watching “Terminator 2: Judgment Day” on a VCR with an “underground” translation, I had a childhood dream: to build a robot that could not only fight, but also do my Russian-language homework for me, in my handwriting, so that the teacher would not notice (I wildly disliked that subject).

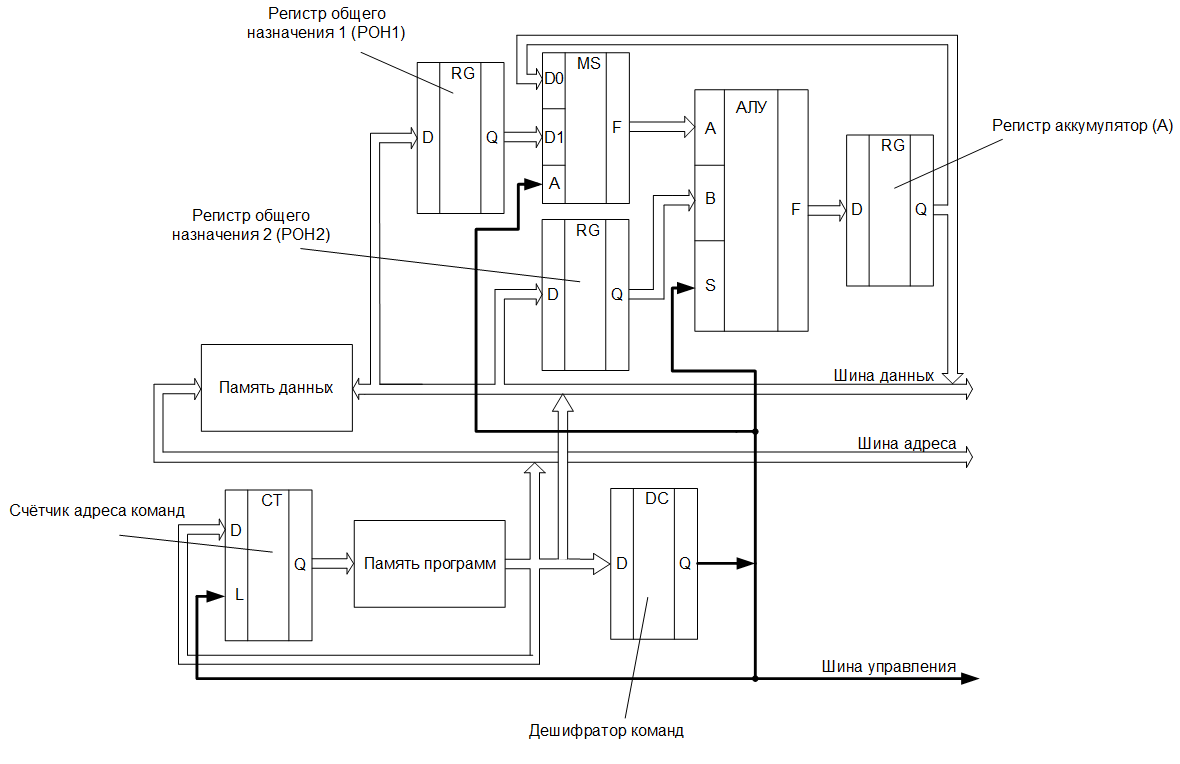

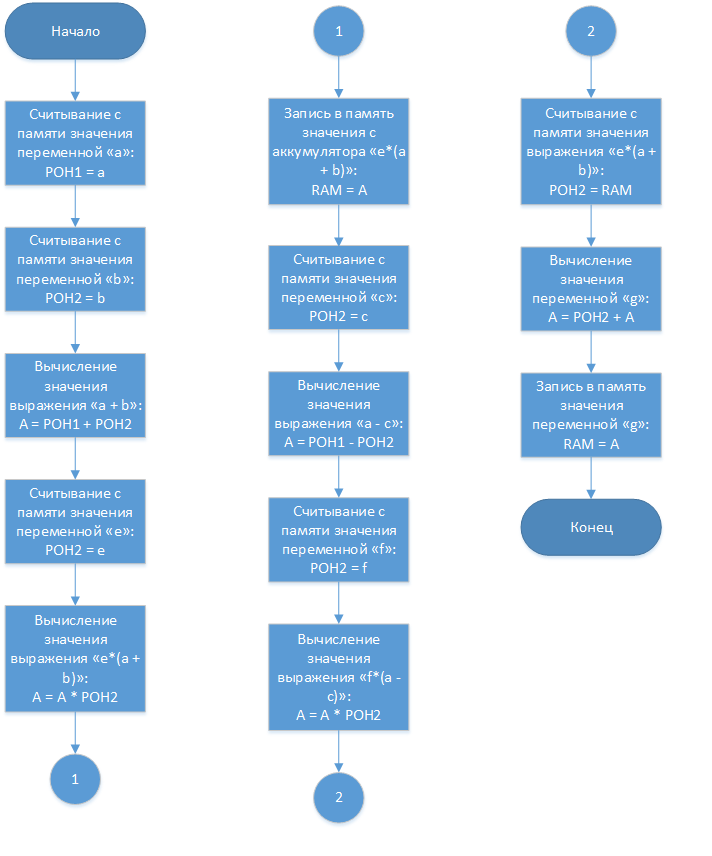

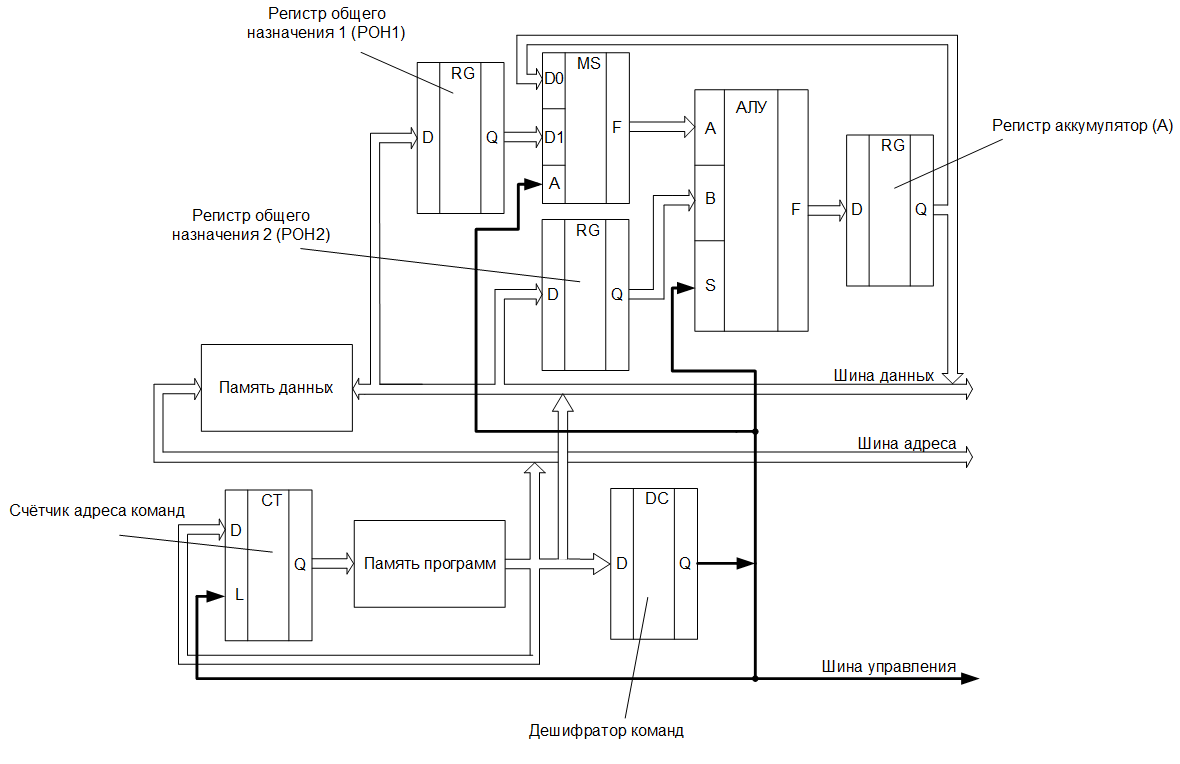

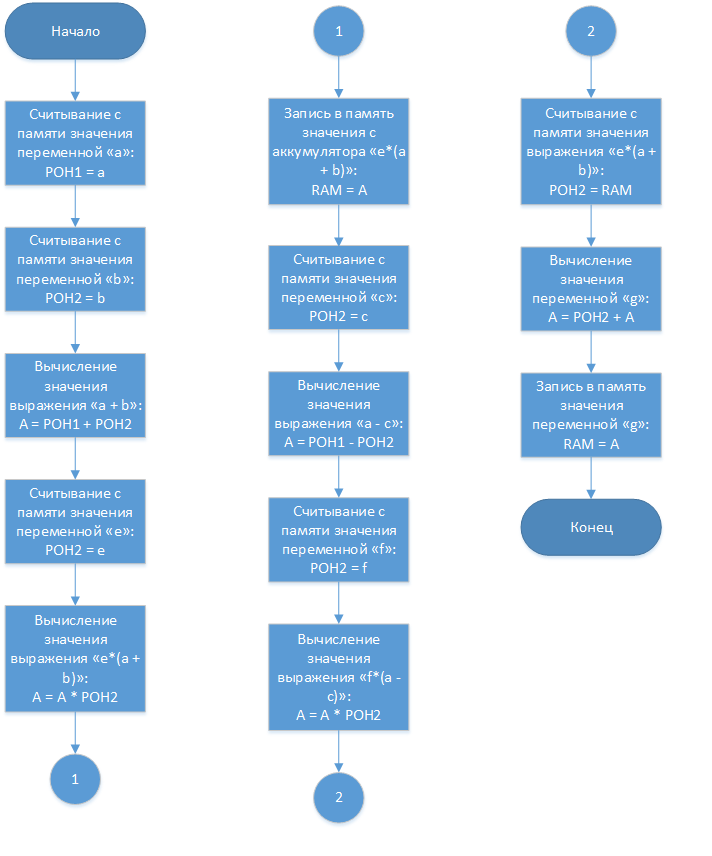

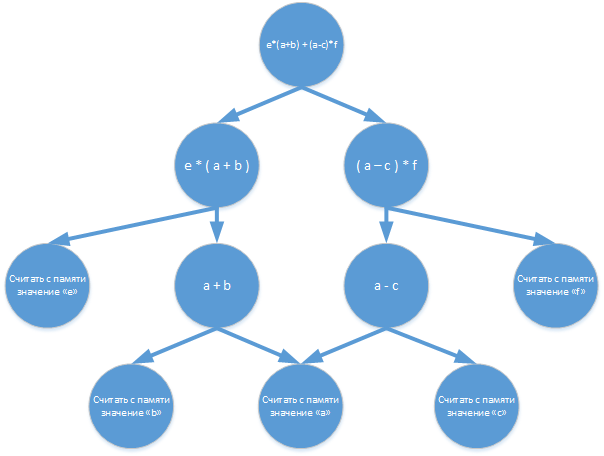

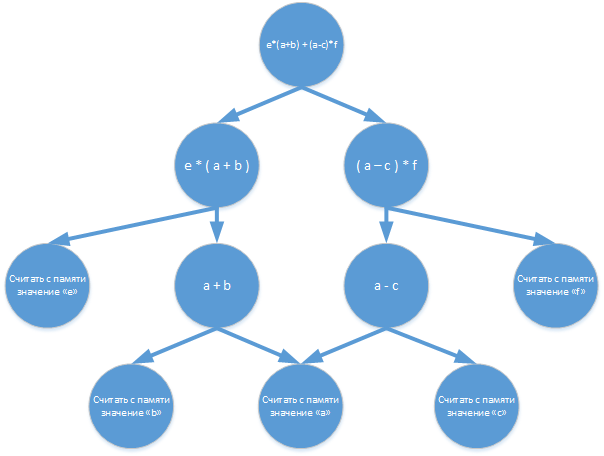

Time has passed, but even now it is no exaggeration to say that the artificial-intelligence capabilities built into the so-called neural-net processor of the Terminator robot, played by Arnold Schwarzenegger, remain fantastic. After all, before a task can be solved with computers, it must first be formalized. And since, to date, there is no single, complete formal description of artificial intelligence anywhere in the world, the question remains open. So far the very term “artificial intelligence” is rather subjective and applies only to individual tasks (this is my personal opinion; I may be wrong). And even if the processes in the human brain could be described with mathematical formulas, that is, even if a way to formalize artificial intelligence were found, modern computing hardware would hardly be able to implement it. The point is that, today, any formalized algorithm can be implemented in one of two ways:

- in software (running on microprocessors);

- in hardware (usually on programmable logic).

Nowadays, of course, neural processors (though not the same as the Terminator's) have already appeared (one of them is described here ). Yet an artificial neuron is in fact nothing more than a mathematical model, that very attempt to formalize how a biological neuron works, and it is, again, implemented either in software on processors or in hardware on programmable logic, or on an ASIC (roughly speaking, the same thing as an FPGA, except that in an FPGA the connections between logic gates are programmable, whereas in an ASIC they are fixed in hardware). As you can see, there is nothing fundamentally new here.
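To illustrate that an artificial neuron is just a mathematical model executed on ordinary hardware, here is a minimal sketch of the classic weighted-sum neuron in software. The logistic activation and the weights below are assumptions chosen for illustration; they are not taken from the neuroprocessor mentioned above.

```python
import math
from typing import Sequence


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through a logistic activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    s = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-s))


# Illustrative weights (an assumption): with them the neuron behaves roughly like an AND gate.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, round(neuron([a, b], [10.0, 10.0], -15.0), 3))
```

Whether this formula runs on a CPU, is synthesized into an FPGA, or is etched into an ASIC, it remains the same arithmetic, which is the author's point.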

Microprocessors and FPGAs are two entirely different topics; here we will talk about microprocessors.

I think it is no secret that almost all modern processors are, in one way or another, implementations of the Turing machine (a rather loose analogy, of course, but still). Their distinguishing feature is that the next instruction to execute is fetched from memory sequentially: its address is either generated by the program counter as the next one in order, or contained in certain fields of the previous instruction. Processors working on such principles are obviously as far from the Terminator's capabilities as walking to China, if not farther. Hence the question: “Is there an alternative way of implementing software algorithms that could take machine computing to a fundamentally new level without going beyond the capabilities of modern electronics?” In universities, programs related in one way or another to computer engineering include a lecture course on the organization of computers and systems, which, among other things, explains that all the mechanisms for executing software algorithms that exist today work in one of the following ways:

- the next instruction (or set of independent instructions) in the sequence is read from memory and executed once the previous instruction (or set of independent instructions) has been executed; as mentioned above, most modern processors work this way, implementing the principles of the Turing machine;

- an instruction is read from memory and executed when all of its operands are available (stream, or dataflow, computing);

- instructions are grouped into procedures, inside each of which instructions are executed as in the first case (that is, sequentially, following the principles of the Turing machine), while the procedures themselves are, apparently, executed as in the second case, that is, as soon as all the operands they need become available (macro-dataflow computing, a mixture of the first two options);

- an instruction is read from memory and executed when other instructions need its results (reduction computing).
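The third, mixed option is the least intuitive of the four. Here is a minimal sketch of it: each hypothetical procedure (`left_part`, `right_part`, `combine`) runs its statements strictly in order, while a scheduler starts a procedure only when all of its inputs are available. The procedure split, the names, and the input values are assumptions for illustration, using the example formula g = e * (a + b) + (a - c) * f discussed below.

```python
from typing import Callable, Dict, List, Tuple

Env = Dict[str, int]
# A procedure: input names, output names, and a body whose statements run in order.
Procedure = Tuple[List[str], List[str], Callable[[Env], Env]]


def left_part(x: Env) -> Env:
    s = x["a"] + x["b"]        # executed first...
    return {"t": x["e"] * s}   # ...then this, strictly in sequence


def right_part(x: Env) -> Env:
    d = x["a"] - x["c"]
    return {"u": d * x["f"]}


def combine(x: Env) -> Env:
    return {"g": x["t"] + x["u"]}


PROCEDURES: Dict[str, Procedure] = {
    "left": (["a", "b", "e"], ["t"], left_part),
    "right": (["a", "c", "f"], ["u"], right_part),
    "combine": (["t", "u"], ["g"], combine),
}


def run_macro_dataflow(procs: Dict[str, Procedure], initial: Env) -> Tuple[Env, List[List[str]]]:
    """Start procedures dataflow-style; return the final values and the waves of starts."""
    env = dict(initial)
    pending = dict(procs)
    waves: List[List[str]] = []
    while pending:
        # A procedure is ready when every one of its inputs already has a value.
        ready = [name for name, (ins, _, _) in pending.items() if all(v in env for v in ins)]
        if not ready:
            raise RuntimeError("no procedure has all of its inputs available")
        for name in ready:  # procedures in one wave do not need each other's outputs
            ins, outs, body = pending.pop(name)
            result = body({v: env[v] for v in ins})
            env.update({k: result[k] for k in outs})
        waves.append(ready)
    return env, waves


env, waves = run_macro_dataflow(PROCEDURES, {"a": 2, "b": 3, "c": 1, "e": 4, "f": 5})
print(env["g"], waves)
```

`left` and `right` start in the same wave because neither needs the other's output; `combine` has to wait for both.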

As stated in one of the publications on habrahabr.ru (the link is given later in the text), any computational algorithm is a set of mathematical formulas through which it is implemented. From the same publication, let us take its example formula, g = e * (a + b) + (a - c) * f, and draw up a flowchart for a classic processor with the following structure (simplified and highly schematic, but good enough for an example):

“Our” processor has a Harvard architecture and consists of two general-purpose registers, an arithmetic logic unit (ALU), an accumulator register, a program counter, and an instruction decoder. Input port "A" of the ALU can be fed either from a general-purpose register or from the accumulator; this is done with a multiplexer. The flowchart for this processor looks like this:

Each operation block in the flowchart is the analogue of a cell on the tape of a Turing machine, and the instruction address counter is the analogue of the read head. So, to evaluate the example formula, 13 cells have to be traversed (13 actions performed).
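The sequential model can be made concrete with a small simulator of a processor like “our” one: two general-purpose registers R0 and R1, an accumulator, a program counter, and separate program and data memories (Harvard). The instruction set (`LD`, `MOVA`, `ADD`, `SUB`, `MUL`, `ST`, `HALT`) and the input values are invented for this sketch and are assumptions. As a result, the program takes 15 steps, and this need not match the author's count of 13 actions.

```python
from typing import Dict, List, Tuple

Instr = Tuple[str, ...]


def run(program: List[Instr], data: Dict[str, int]) -> Tuple[Dict[str, int], int]:
    """Fetch-execute loop of a hypothetical accumulator machine.

    Harvard layout: `program` and `data` are separate memories.
    Returns the final data memory and the number of executed (non-HALT) steps.
    """
    regs = {"R0": 0, "R1": 0}  # two general-purpose registers
    acc = 0                    # accumulator
    pc = 0                     # program counter: plays the role of the read head
    steps = 0
    data = dict(data)          # do not mutate the caller's memory
    while True:
        op, *args = program[pc]  # fetch the instruction at address pc
        pc += 1                  # the next instruction is simply the next address
        if op == "HALT":
            return data, steps
        steps += 1
        if op == "LD":           # LD Rn, var: Rn <- data[var]
            regs[args[0]] = data[args[1]]
        elif op == "MOVA":       # ACC <- Rn (assumed: ALU port A switched to a register)
            acc = regs[args[0]]
        elif op == "ADD":        # ACC <- ACC + Rn (port A fed from the accumulator)
            acc += regs[args[0]]
        elif op == "SUB":        # ACC <- ACC - Rn
            acc -= regs[args[0]]
        elif op == "MUL":        # ACC <- ACC * Rn
            acc *= regs[args[0]]
        elif op == "ST":         # ST var: data[var] <- ACC
            data[args[0]] = acc
        else:
            raise ValueError(f"unknown opcode: {op}")


# g = e * (a + b) + (a - c) * f, with t as a temporary in data memory
PROGRAM: List[Instr] = [
    ("LD", "R0", "a"), ("LD", "R1", "b"), ("MOVA", "R0"), ("ADD", "R1"),
    ("LD", "R1", "e"), ("MUL", "R1"), ("ST", "t"),
    ("LD", "R1", "c"), ("MOVA", "R0"), ("SUB", "R1"),
    ("LD", "R1", "f"), ("MUL", "R1"),
    ("LD", "R1", "t"), ("ADD", "R1"), ("ST", "g"),
    ("HALT",),
]

memory, steps = run(PROGRAM, {"a": 2, "b": 3, "c": 1, "e": 4, "f": 5})
print(memory["g"], steps)
```

Every step, including the independent computations of a + b and a - c, waits its turn behind the program counter, just like cells read one at a time from a tape.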

As for stream (dataflow) computing, what it is and how it is implemented is explained here. For our example formula, the dataflow graph (the analogue of an algorithm flowchart) takes the following form:

For a dataflow processor with the following structure,

the computation, with its distribution of instructions, looks like this:
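The firing rule of dataflow computing can be sketched as follows. Each node of the graph for g = e * (a + b) + (a - c) * f fires as soon as both of its operands exist, and on each tick up to `cells` ready nodes fire in parallel. The node names (`s`, `d`, `t`, `u`), the `cells` parameter, and the input values are assumptions for illustration. The sketch is not a model of how the Multiklet processor actually dispatches instructions.

```python
import operator
from typing import Callable, Dict, List, Tuple

OPS: Dict[str, Callable[[int, int], int]] = {"+": operator.add, "-": operator.sub, "*": operator.mul}

# Each node: (destination, operator, left operand, right operand)
Node = Tuple[str, str, str, str]
GRAPH: List[Node] = [
    ("s", "+", "a", "b"),
    ("d", "-", "a", "c"),
    ("t", "*", "e", "s"),
    ("u", "*", "d", "f"),
    ("g", "+", "t", "u"),
]
INPUTS = {"a": 2, "b": 3, "c": 1, "e": 4, "f": 5}


def run_dataflow(graph: List[Node], inputs: Dict[str, int], cells: int) -> Tuple[Dict[str, int], List[List[str]]]:
    """Fire nodes whose operands are available, at most `cells` per tick."""
    env = dict(inputs)
    waiting = list(graph)
    schedule: List[List[str]] = []
    while waiting:
        ready = [n for n in waiting if n[2] in env and n[3] in env][:cells]
        if not ready:
            raise RuntimeError("no node has all of its operands")
        # Nodes of one tick fire in parallel: compute all results before publishing them.
        results = {dst: OPS[op](env[x], env[y]) for dst, op, x, y in ready}
        env.update(results)
        waiting = [n for n in waiting if n not in ready]
        schedule.append([n[0] for n in ready])
    return env, schedule


env, schedule = run_dataflow(GRAPH, INPUTS, cells=4)
print(env["g"], schedule)
```

With enough cells, the five operations finish in three ticks, because a + b and a - c (and then the two products) are independent. With `cells=1`, the same graph needs five ticks, one per operation.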

Relatively recently, a domestic company, OJSC Multiklet, announced that it had finished developing a processor with this architecture. In its design, each processor element is called a cell, hence the name “multicellular processor”. There are many publications about this processor on habrahabr.ru, for example , this one . This is the publication I promised earlier to link to, and the one from which I took the example formula and the dataflow graph.

Generally, when OJSC Multiklet announced this architecture, the news was presented in such a way that I thought a revolution in the computing-systems market was about to happen, but nothing of the sort occurred. Instead, publications on the shortcomings of the multicellular architecture began to appear on the Internet. Here is one of them. Nevertheless, the design turned out to be working and competitive.

Another interesting way of organizing computation, other than the Turing machine, is reduction computing. In the previous case, each instruction is executed as soon as all of its operands are available. With that approach, however, the result of an executed instruction may turn out not to be needed at all, in which case time and hardware resources have been wasted. Reduction computing overcomes this drawback: the execution of an instruction is initiated by a request for its result. The mathematical basis of such computation is the λ-calculus. To evaluate our formula, the whole process begins with a request for the result g, which in turn generates requests to perform the operations e * (a + b) and (a - c) * f, and these operations generate requests to compute a + b and a - c, and so on:
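The request-driven order just described can be sketched in a few lines. Asking for a value triggers requests for its operands, and a definition nobody asks for is never evaluated. The intermediate names (`t`, `u`, `s`, `d`), the extra definition `h`, and the input values are assumptions for illustration.

```python
import operator
from typing import Callable, Dict, List, Tuple

OPS: Dict[str, Callable[[int, int], int]] = {"+": operator.add, "-": operator.sub, "*": operator.mul}

# name -> (operator, left operand, right operand); leaves come from `inputs`.
DEFS: Dict[str, Tuple[str, str, str]] = {
    "g": ("+", "t", "u"),
    "t": ("*", "e", "s"),
    "u": ("*", "d", "f"),
    "s": ("+", "a", "b"),
    "d": ("-", "a", "c"),
    "h": ("*", "a", "a"),  # hypothetical definition that nobody requests
}


def demand(name: str, defs: Dict[str, Tuple[str, str, str]], inputs: Dict[str, int], log: List[str]) -> int:
    """Evaluate `name` only because it was requested; record every request."""
    log.append(name)
    if name in inputs:
        return inputs[name]
    op, left, right = defs[name]
    # Requesting this value generates requests for both of its operands.
    return OPS[op](demand(left, defs, inputs, log), demand(right, defs, inputs, log))


log: List[str] = []
g = demand("g", DEFS, {"a": 2, "b": 3, "c": 1, "e": 4, "f": 5}, log)
print(g, log)
```

Note that `a` is requested twice: this sketch does not share results between requests, whereas a real reduction machine would.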

Reduction computing itself consists of recognizing redexes (reducible expressions) and then replacing them with the results of the previously requested instructions, until the whole computation is reduced to the desired result. I found no descriptions of real processors with this architecture anywhere, neither in the literature nor on the Internet; perhaps I did not search well enough...
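The “recognize a redex, replace it with its result” loop can be sketched on an expression tree. Here a redex is taken to be an operator node whose operands are both numbers. The expression is written as nested tuples with assumed values a = 2, b = 3, c = 1, e = 4, f = 5 already substituted. The leftmost-redex strategy is a simplification chosen for this sketch. Real reduction machines (graph reduction of λ-terms) are considerably more involved.

```python
import operator
from typing import Callable, Dict, List, Tuple, Union

Expr = Union[int, Tuple]  # a number, or (operator, left, right)
OPS: Dict[str, Callable[[int, int], int]] = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def is_redex(expr: Expr) -> bool:
    """An operator node whose operands are both already numbers."""
    return isinstance(expr, tuple) and all(isinstance(x, int) for x in expr[1:])


def reduce_once(expr: Tuple) -> Expr:
    """Replace the leftmost redex in `expr` with its value."""
    if is_redex(expr):
        op, x, y = expr
        return OPS[op](x, y)
    op, left, right = expr
    if isinstance(left, tuple):  # the left subtree is guaranteed to contain a redex
        return (op, reduce_once(left), right)
    return (op, left, reduce_once(right))


def normalize(expr: Expr) -> Tuple[Expr, List[Expr]]:
    """Keep reducing until only a number is left; return it with the trace of steps."""
    trace = [expr]
    while isinstance(expr, tuple):
        expr = reduce_once(expr)
        trace.append(expr)
    return expr, trace


# g = e * (a + b) + (a - c) * f
G = ("+", ("*", 4, ("+", 2, 3)), ("*", ("-", 2, 1), 5))
result, trace = normalize(G)
for step in trace:
    print(step)
```

Each line of the trace is the previous expression with exactly one redex replaced by its value, until the expression has been reduced to the single number g.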

Research is already under way on computing systems based on fundamentally new principles, such as quantum computing, photonic computing, and so on. Computers built on such technologies will obviously surpass all modern ones and bring schoolchildren closer to the day when a piece of hardware can be made to do their Russian-language homework for them. But the question of a fundamentally new way to implement machine computing, without going beyond the framework of modern electronics, remains open.

Source: https://habr.com/ru/post/368499/