Should robots understand morality and ethics?

What should a robot nurse do if a cancer patient asks for a higher dose of morphine and the doctor cannot be reached? Should a robot driver stop a drunk person from taking the wheel himself when he is rushing his child to the hospital? Whose voice should a rescue robot searching the ruins after an earthquake respond to first: a child's or an adult's? And what if the adult is you, and the child is not yours?

For many years people have dreamed of an era of affordable robotics, when smart machines would relieve us of much of our labor. "How far progress has come: to wonders never seen before!" At the same time, the development of robots raises serious questions about how they should behave in difficult situations that involve acute moral and ethical problems.

Scientific research can clarify what “understanding morality” means for people. Based on this knowledge, we will be able to design robots with at least an elementary knowledge of morality and find out whether they can interact successfully with humans. First, we must understand how people make moral choices, drawing on their unique ability to perceive social, moral and ethical norms. Then we can study whether robots are able to recognize, understand, and comply with these norms.

The technologies needed to create artificial intelligence have been developing steadily for 50 years. But the ethical aspects of this progress and its possible consequences have only recently begun to attract widespread attention. Up to 2004, 41 academic articles devoted to “roboethics” had been published in the West. Between 2005 and 2009, this number more than doubled, to 88. From 2010 to 2014, it nearly doubled again, to 170 (search for “robot*” and “ethic*” in the EBSCOhost scientific databases). Conferences devoted to the growing number of ethical issues in fast-moving robotics are appearing.


Necessity of norms

Social norms make interaction with other people easier: they prescribe behavior (what should I do?), make prediction possible (what should happen?), and provide coordination (who should do this?). Norms were already necessary for the nomadic tribes that roamed territories from scorching Africa to cold Northern Europe. Rules governed life at campsites, cooperative hunting, food sharing, and seasonal migration.

A qualitative leap in moral life came with the transition to agriculture and settled communal life. Norms now had to regulate property relations (for example, the division of land), new forms of production (such as harvesting tools), and a huge number of social roles (from servant to king). Today, social norms govern countless culturally specific forms of behavior. It is plausible that people know more social and moral norms than words in their native language.

To appreciate how vast our system of norms is, try a small experiment: take ten random words from the dictionary and, for each word, count how many norms you can think of that involve it. Then think about how many variations each of these norms has, depending on the situation and the people involved.

People not only know a huge number of rules and adjust their actions to them; they also know when it is acceptable to depart from a rule. People easily cope with the way norms vary with context. We understand that a conversation may be appropriate in some situations but not in others, or that hitting another person is permissible only in certain circumstances. In addition, people understand (not necessarily consciously) that certain norms apply to a given space, object, person, situation or process. The right set of norms is activated by a variety of cues learned through repeated exposure to similar situations.

Given the complexity of human norms (and we have not even touched on cultural variation), can we assume that robots are, in principle, capable of learning these unspoken rules? Before tackling this problem, let us first answer another question: why do we want to teach robots to understand morality and ethics?

Why should machines be ethical?

If (or when) robots become part of our daily lives, these new members of society will have to adapt in order to interact with us within a complex human culture. And since we ourselves follow norms, shouldn't we give robots the same system of norms, that is, create machines that know morality?

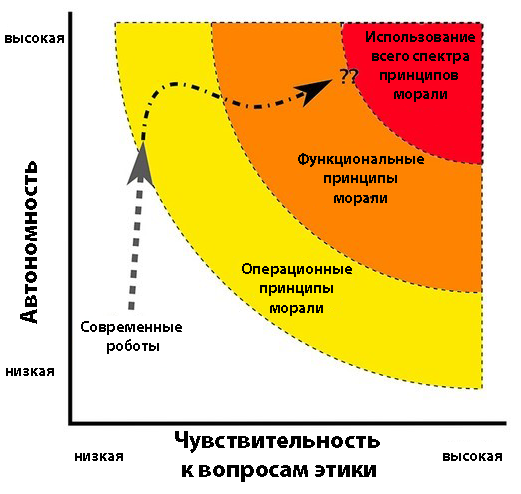

We have been using machines with rudiments of morality for some time, mainly in the form of safety features (for example, an iron that switches itself off automatically after a certain period of time). Such features exist to prevent harm, and preventing harm is one of the main functions of morality. Of course, robots must be safe and have a certain degree of protection. But this will not be enough if we want robots to make their own decisions in situations that go beyond what they were programmed for.

Why should robots be able to make decisions for themselves? Because this is the only way for them to cope with interacting with people. When robots cooperate with people or teach them, they cannot rely on preprogrammed behavior alone, because human behavior is too complex and changeable. Over thousands of years, people have developed the ability to adapt and create, which makes their behavior much harder to predict. A robot's responses to changeable and unpredictable human behavior cannot all be programmed in advance. The robot will have to monitor how people react to small changes in the environment and respond flexibly in turn, and this ability requires independent decision making.

Although human behavior is complex and diverse and may seem highly unpredictable to a robot, other people read it quite easily. Why is that?

- First, we make a number of fundamental assumptions about the individuals we interact with. We assume that other people have mental states (feelings, emotions, desires, and beliefs) and act in accordance with them. Because people quickly infer the mental states of others, our ability to predict and interpret each other's behavior is greatly enhanced.

- Second, as mentioned above, human behavior is most often shaped by the social and moral norms of a given society, and knowing these norms makes people much more predictable to one another.

The ability to mentally put oneself in another's place and to ascribe mental states and beliefs to other individuals, while understanding that those beliefs may be false and may differ from one's own, is often called "theory of mind."

Now let us put these two elements together: if a robot shared human assumptions about mind and behavior, could make correct guesses about people's mental states, and followed social and moral norms, then its ability to predict and understand human behavior would be greatly improved.

Of course, predicting human behavior is not an end in itself. It is a means to a more important goal: safe, effective and comfortable interaction between humans and robots. Only when robots can understand Homo sapiens and respond adequately to their behavior will people be able to trust machines to look after babies or care for elderly parents.

The demands of social interaction require robots to be cognitively flexible and adaptive, and therefore more autonomous. To achieve such autonomy, they must have both a system of norms and a theory of mind. Until recently, computational implementations of theory of mind were the main focus of robotics researchers. Now a new question has arisen: how can robots acquire a system of norms? To answer it, we need to look carefully at people.

Moral education of people and robots

Like robots, people begin life with no understanding of the complex demands of society. Infants and toddlers gradually learn the rules of social interaction within the family, then extend these rules to situations and relationships outside the home. By school age, children have learned a long list of norms, but they are still not ready for many situations in the adult world. Even adults constantly encounter new norms for unfamiliar tasks, contexts, roles, and relationships.

How do we absorb all these norms? The process is complex and poorly understood, but it involves two main mechanisms. The first appears at the very earliest stages of life, when babies discover statistical regularities in the physical and social world. They begin to grasp regularities in how people behave in different situations, especially when these patterns repeat as daily routines. Once they have an idea of the norm, children can detect deviations from standard patterns and observe how people react to those violations. By noting how strong the reaction is, they learn to distinguish strict rules (strong reaction) from desirable but optional preferences (moderate or no reaction).
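To make the first mechanism more concrete, here is a toy sketch in Python. It is not a method described in this article or used in any particular robot: the class `NormLearner`, its methods, the idea of scoring a reaction as a number between 0 and 1, and the 0.7 threshold are all hypothetical. The learner counts which action is typical in each context, treats the most frequent one as the expected behavior, and then grades that norm by the average strength of the reactions that deviations provoke.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical cut-off: reactions are scored from 0.0 (no reaction) to 1.0 (outrage).
STRICT_THRESHOLD = 0.7


class NormLearner:
    """Toy model of learning norms from regularities and reactions to violations."""

    def __init__(self):
        # context -> action -> how many times it was observed
        self.counts = defaultdict(lambda: defaultdict(int))
        # (context, expected action) -> strengths of reactions to deviations
        self.reactions = defaultdict(list)

    def observe(self, context, action):
        """Record ordinary behavior seen in a given context."""
        self.counts[context][action] += 1

    def expected_action(self, context):
        """The most frequently observed action is treated as the norm."""
        actions = self.counts.get(context)
        if not actions:
            return None
        return max(actions, key=actions.get)

    def observe_violation(self, context, reaction_strength):
        """Record how strongly people reacted when the expected action was not taken."""
        expected = self.expected_action(context)
        if expected is not None:
            self.reactions[(context, expected)].append(reaction_strength)

    def classify(self, context):
        """Strong reactions suggest a strict rule, weak ones a mere preference."""
        expected = self.expected_action(context)
        if expected is None:
            return "unknown"
        strengths = self.reactions.get((context, expected))
        if not strengths:
            return "pattern"  # a regularity, but no evidence yet about how binding it is
        return "strict rule" if mean(strengths) >= STRICT_THRESHOLD else "preference"


learner = NormLearner()
for _ in range(20):
    learner.observe("family dinner", "wait for everyone")
learner.observe_violation("family dinner", 0.9)
learner.observe_violation("family dinner", 0.8)
print(learner.classify("family dinner"))  # strict rule
```

The sketch mirrors the division of labor in the paragraph above: frequencies alone reveal what is typical, while the strength of reactions to deviations is what separates a strict rule from a mere preference. It simply assumes the reaction strength is already measured; perceiving it is the computationally hard part.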

But many social and moral norms cannot be acquired simply by tracking patterns, for example when the norm is the absence of some behavior, or when you have never been in a situation where the relevant rules apply. Such cases are handled by the second mechanism, which teaches rules through instruction of various kinds, ranging from demonstrations of intended behavior to explicit demands. Children (and adults) learn the rules and are ready to act “as they are told” because they depend heavily on the opinions of others and are sensitive to disapproval.

Can we implement these two mechanisms in robots so that they learn rules the way humans do? Computationally, behavioral patterns are easy to process; detecting strong reactions to deviations is a harder problem. A robot's “mind” must process not only still images but also video showing how people react to violations of a norm. Robots cannot be raised in families for years like children, so the only practical option is to feed them films and television shows. A robot watching such video could extract a large catalogue of behavioral patterns, deviations, and reactions to deviations. The choice of training material matters a great deal, though: obviously, action movies and trashy reality shows like “House 2” cannot be used to train robots.

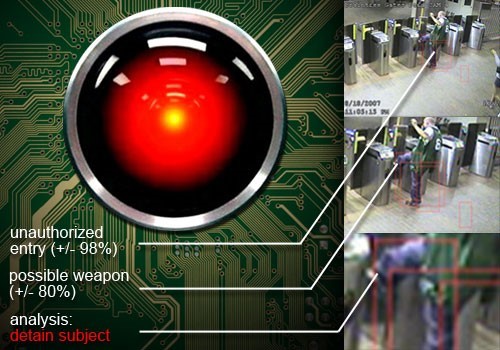

DARPA launched the "Mind's Eye" program to develop computers with "visual intelligence." The agency plans to build a “smart” surveillance camera that not only sees what is in front of it but also understands what is happening at the moment and can even predict what may happen next.

In principle, the second mechanism of learning norms is also easy to implement: teach the robot by demonstrating and explaining what may and may not be done. This approach requires flexibility in how knowledge is stored, because the robot must understand that many explicit rules apply to the situations in which they were stated and do not necessarily carry over to other contexts.
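The storage requirement described above can be illustrated with another hypothetical Python sketch: each explicitly taught rule is stored together with the context in which it was stated, and the robot declines to generalize it to contexts where nobody stated it. The `RuleBook` class, its methods, and the verdict strings are illustrative assumptions, not part of any existing robotics framework.

```python
class RuleBook:
    """Stores explicit instructions together with the context they were given in."""

    def __init__(self):
        # (action, context) -> True if permitted, False if forbidden
        self._rules = {}

    def teach(self, action, context, permitted):
        """Record a rule stated directly, e.g. through a demonstration or an instruction."""
        self._rules[(action, context)] = permitted

    def judge(self, action, context):
        """Apply a rule only in a context where it was actually stated."""
        verdict = self._rules.get((action, context))
        if verdict is None:
            # No rule was stated here: do not assume it carries over from elsewhere.
            return "unknown"
        return "permitted" if verdict else "forbidden"

    def contexts_for(self, action):
        """List the contexts in which the robot has been told something about an action."""
        return sorted(ctx for (act, ctx) in self._rules if act == action)


book = RuleBook()
book.teach("talk loudly", "stadium", permitted=True)
book.teach("talk loudly", "hospital ward", permitted=False)
print(book.judge("talk loudly", "hospital ward"))  # forbidden
print(book.judge("talk loudly", "library"))        # unknown
```

Returning "unknown" instead of guessing is a deliberately conservative choice for this sketch; a real robot would need some way to ask for guidance or to fall back on pattern-based learning in such cases.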

If a combination of these approaches lets the robot acquire an understanding of norms, and this system of norms is linked to the robot's actions, the machine will be able to tell normative from non-normative behavior and to “follow” the rules. Like people, the robot will learn how to behave in every situation it recognizes.

Can robots one day become more moral than humans?

Robots have great potential to become more moral than humans themselves.

- First, unlike humans, robots will not be selfish. If we do everything right, the robot's priority will be human interests rather than its own benefit. A robot can pursue its own goals while acting in accordance with social norms, without the two competing with each other.

- Second, robots will not be swayed by emotions such as anger, jealousy, or fear, which can systematically bias moral judgments and decisions. Some even argue that the consistent, logical behavior of robots would make them excellent soldiers who, unlike humans, would not violate international humanitarian law.

- Finally, the robots of the near future will have specific roles and will be used for a limited range of tasks. This greatly reduces the number of norms each robot must learn and eases the problem of situational variability that people constantly face. Give a robot some time to work with elderly people, and it will learn how to behave in a nursing home; it may have only a vague idea of how to act in a kindergarten, but that is not required of it.

The likelihood that robots will perform limited duties also helps answer another question: which norms should a robot know? The answer is the same as for a child: the rules of its own community. If robots are to be active participants in specific social communities, they will be designed and trained within the norms of those communities.

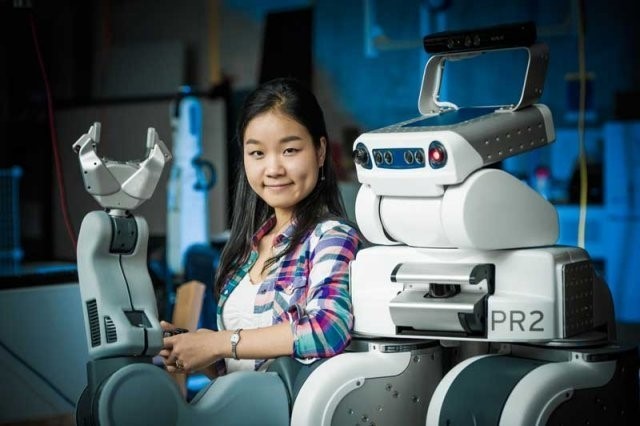

For example, AJung Moon, a Ph.D. in mechanical engineering from the University of British Columbia, is developing methods for identifying norms in groups with different value systems. Her research shows how complex even a seemingly simple matter can be, such as how a robot carrying a package should respond to people when entering an elevator. It turned out that there are no hard and fast rules. Like a human, the robot must assess the context of the situation and use whatever means of interaction are available to it. From a human point of view, it is unacceptable for the robot simply to do nothing and stand by the elevator.

Designing robots that can understand a situation and act in accordance with human moral norms is difficult, but not impossible. Fortunately, the limited capabilities of today's robots give us time to teach them to understand moral and ethical issues. The robots will not be perfect, of course, and cooperation between robot and human will rest on a symbiosis that lets each partner do what it does best.

Source: https://habr.com/ru/post/367251/
