NVIDIA GTX 970 - the practice of inferiority, part 2

Hello! This is the third and final part of our look at the performance of the NVIDIA GTX 970. In the first part we covered NVIDIA's chip generations, the internal structure of the GTX 970 and the reasons behind the uproar over "three and a half gigs". In the second part we looked at how much this affects performance, according to hardware publications. Now let's run our own tests and see what the GTX 970 is good at and what it is not so good at.

For the tests, I used my personal PC: Core i7-3930K (6 cores @ 4.2 GHz), 16 gigabytes of fairly rare memory Kingston HyperX Beast 2400 MHz in four-channel mode (it is difficult to find anything faster on DDR3), all the toys and benchmarks stood on PCI-Express SSD disk to minimize the impact of other components on test results). The whole thing is connected to a monitor with a resolution of 2560x1440 pixels. As you can see, there’s really nothing to rest on: toys with 64-bit architecture and binaries - the cat cried, and the rest, in theory, should be enough for everything. Of course, all updates, patches, drivers, hotfixes and all that were the freshest, right from the garden.

')

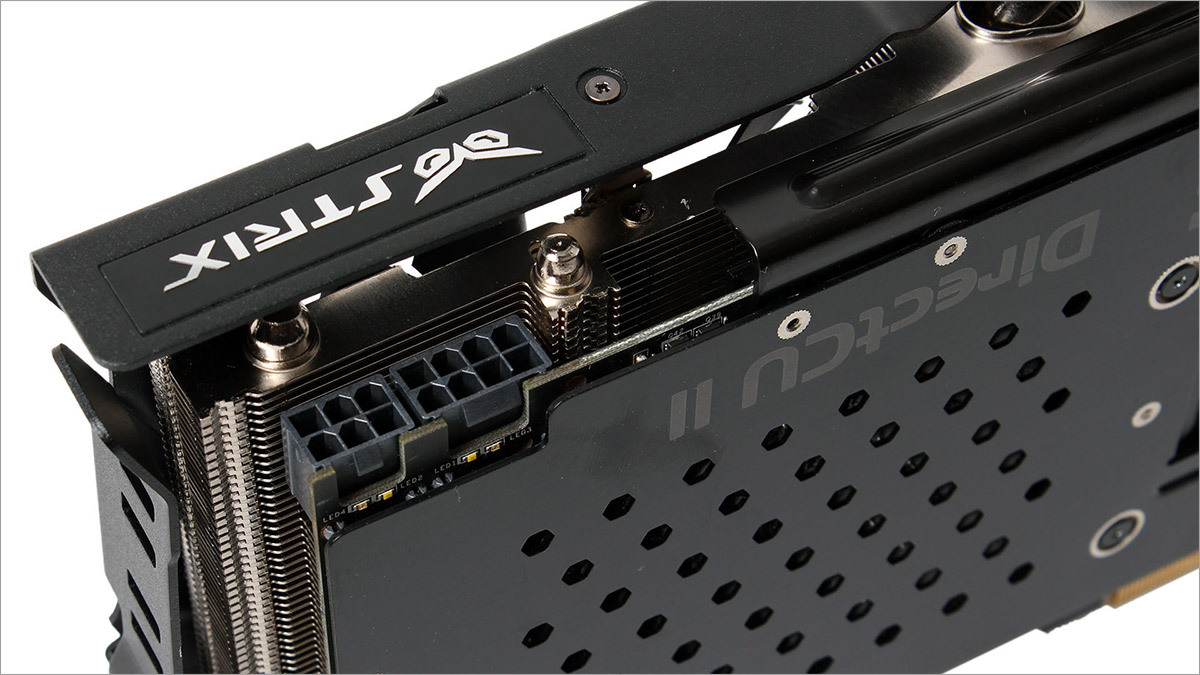

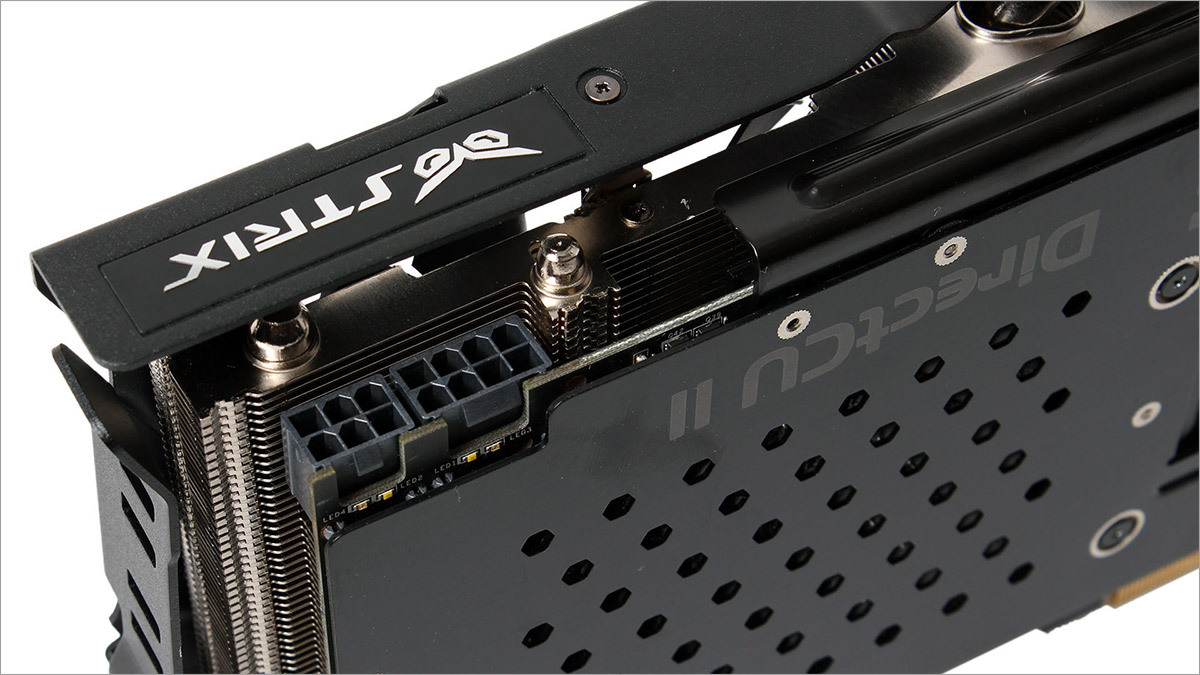

As a test subject, I used the ASUS STRIX GTX 970 : a video card with an incredibly quiet cooling system and excellent hardware.

What are young people playing here today? And then I heard that Battle City was a little out of fashion ... In general, the following games were chosen for the test:

In all cases, the maximum possible graphics settings with disabled vertical synchronization and active anti-aliasing x4 will be applied. Any Frame Limiters and other “smoothers” were turned off, after each test the video card cooled down to normal temperatures and the system rebooted.

A special guest is a game that does not differ in fantastic graphics or optimization, but can set the heat of many GPUs under certain conditions. We need it to show some of the bottlenecks of the Maxwell architecture. We will use Dark Souls 2 and perform downscale with a resolution of 3840x1260 to 2560x1440 and from 5220x2880 to 2560x1440.

Unfortunately, GTA V at the time of the tests and the writing of the article has not yet beendownloaded , but after that there was no time to restore the test environment, so forgive me, this time without it.

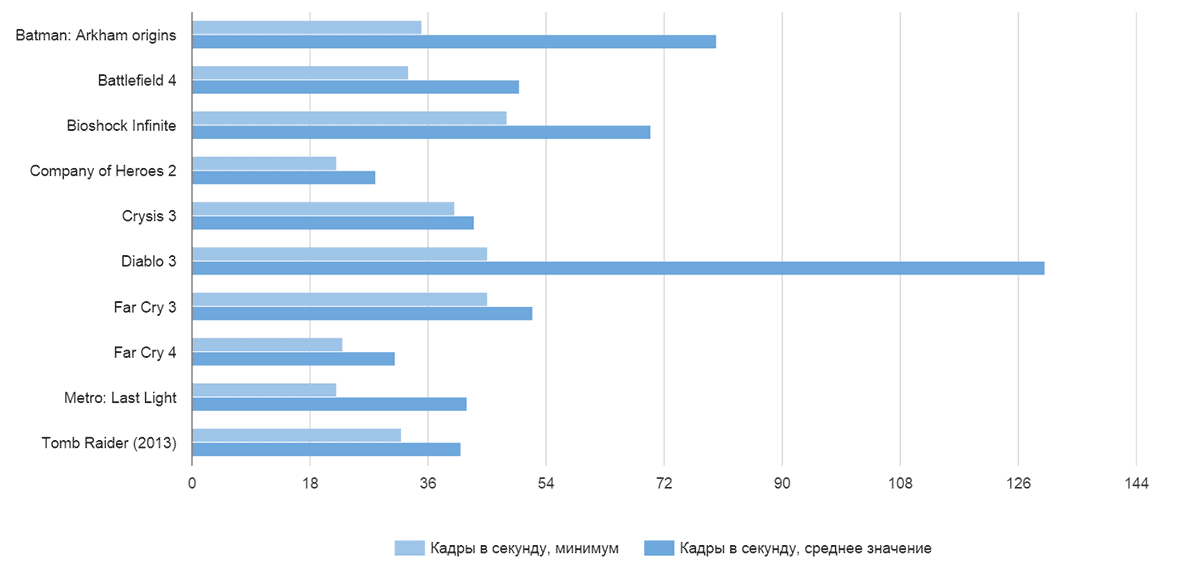

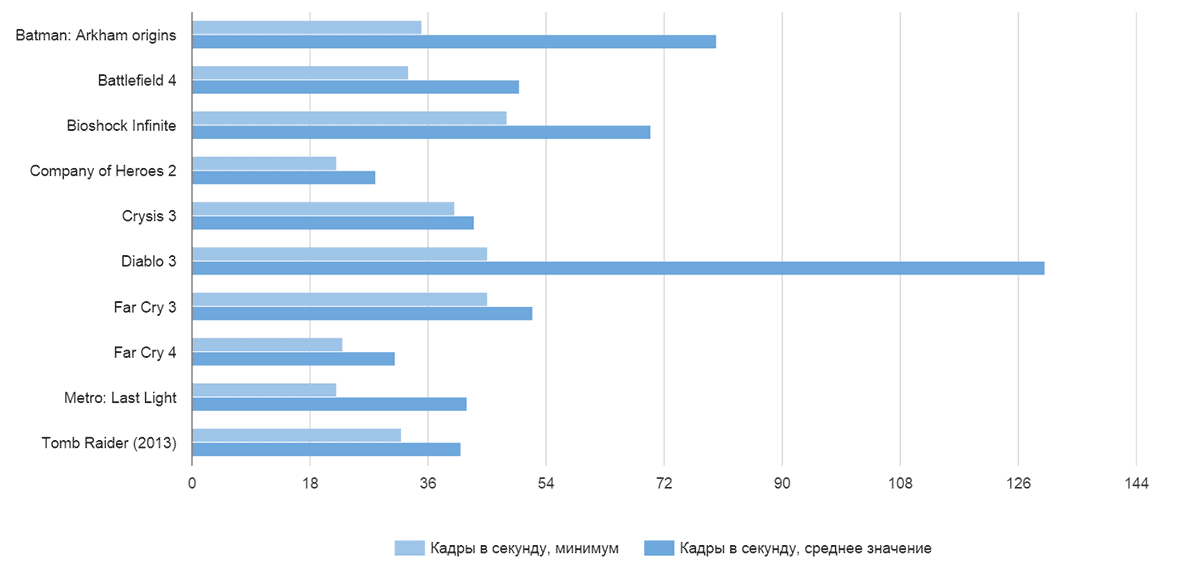

For a resolution of 2560x1440, it is quite difficult to achieve enough performance. Not so long ago (in the topic about GTA V) I found a person for whom “below 60 FPS are insane lags). Well, everyone has their own tastes, but I believe that 30 FPS at this resolution with a single video card is quite a playable option, especially if the number of frames per second in intense scenes does not sag below 25. Here are the results:

As you can see, almost all games on super settings and with quadruple smoothing show acceptable results, with the exception of frankly voracious monsters with so-called optimization: Metro Last Light and Company of Heroes 2 were expected to become outsiders of the charts.

As for memory consumption, it was possible to “get out” for three and a half gigabyte lines only in Battlefield 4 and Far Cry 4, (apparently, the magic of the number 4, no other way). Crysis and Metro didn't even come close: the most difficult scenes hardly occupied 3.2 GB of video memory.

Remember the very first post about the GTX 970? There, we looked at all the post-DX9 NVIDIA graphics cards and noted that for three generations, the company has been fighting over the energy efficiency of its chips. On the one hand, it is a noble cause, on the other hand, on desktops, especially with consumption it is possible not to bother: tea, we do not work on batteries. On the other hand, if you reduce energy consumption and heat dissipation, then within the framework of the same heat pack, you can squeeze more performance. And it was in this that Maxwell surpassed both past generations and red and white competitors.

The fact is that the energy efficient GM-204 chips have a heat pack of 165 watts. And they also have automatic overclocking technology: as long as the temperature allows, and the load matches, the core frequency rises within certain reasonable limits. Accordingly, the more reliable the power supply circuit, the better the cooling, the higher the base frequency of the chip and memory, the better the results can be achieved on the Maxwell architecture under load: thereby reducing the drawdown in FPS and increasing the average frame rate. A kind of Intel'ovskogo Turbo Boost.

The nominal frequencies of my video card were:

Kernel, Normal - 1114 MHz

Core, Boost - 1253 MHz

Memory: 7010 (1752.5 x4) MHz

After a bit of shamanism with software raising the voltage, checking for stability and other tweaks, we managed to get the following results:

Kernel, Normal: 1333 MHz

Core, Boost: 1490 MHz

Memory: 8000 (2000 x4) MHz

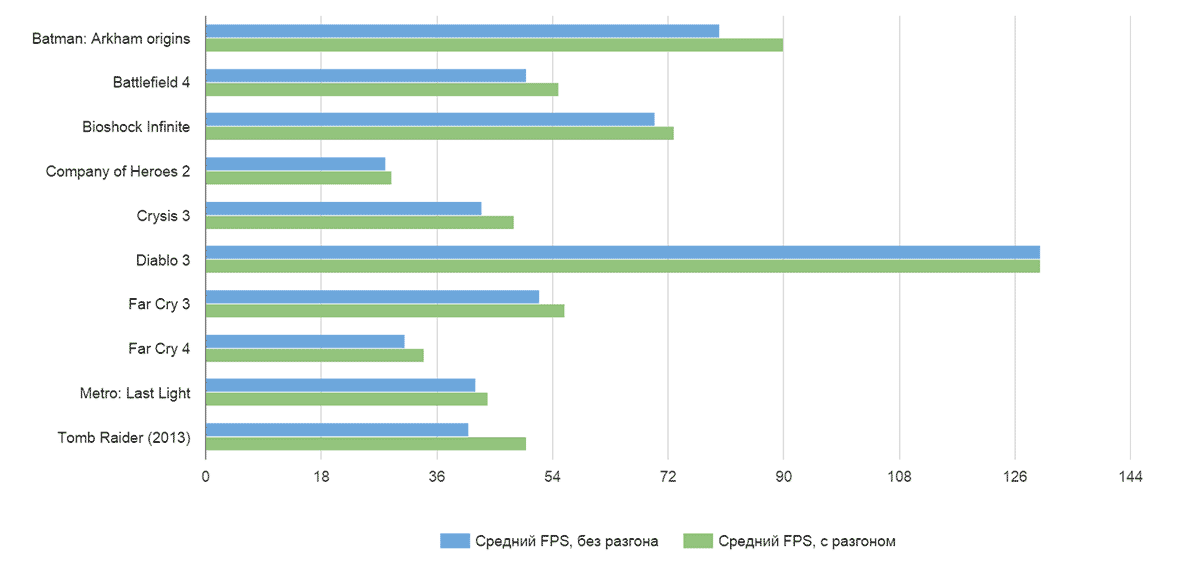

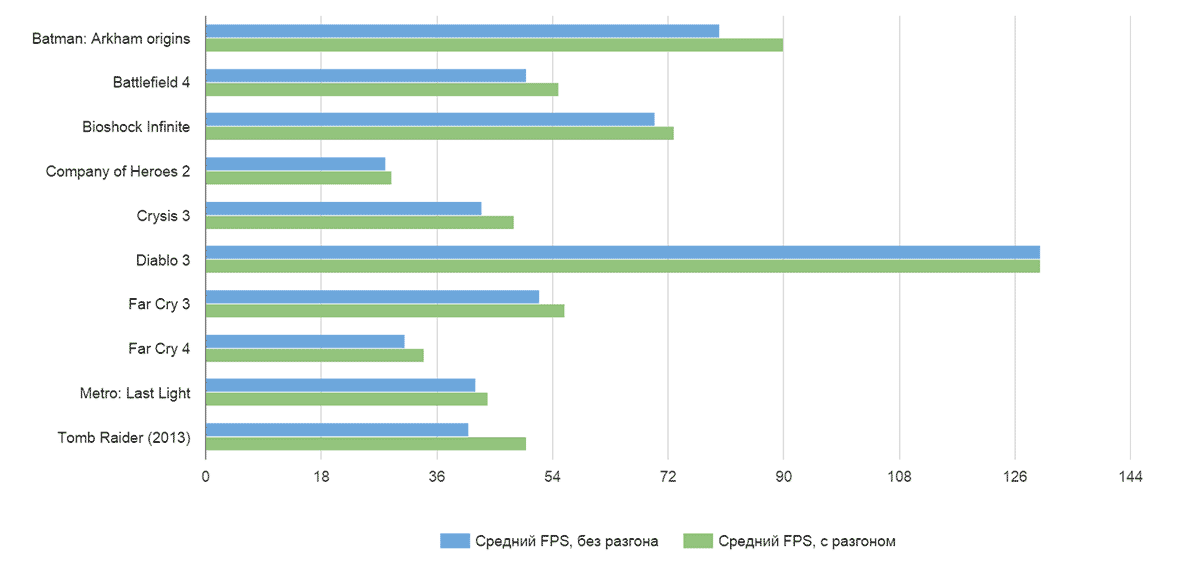

How did this affect performance? In the best way:

Almost everywhere, the graphics have crossed the "comfortable" 25 FPS abroad. It's funny, but overclocking didn’t have any impact on the performance of Diablo 3: apparently, either some kind of internal limiter still works, or the game rests on something else. A curve Company of Heroes 2, even overclocking did not save, as were the sad results, and remained.

Here is a comparison of the minimum frame rate:

Here, the increase is most important and noticeable: in the most intense scenes microfreezes and jams have disappeared, the whole picture has become smoother.

The average frame rate has also grown, but not so much, and overall, the performance gain is more typical for very busy scenes than for the general dynamics:

And now the most important thing. With overclocking, without - the video card is surprisingly quiet. No, of course, in an hour or another game in Crysis 3 with such settings there will be some background noise, but it doesn’t come to any comparison with the noise and heating of past-generation monsters like AMD HD7970, GTX 780 and even more so howl reference turbines. In this regard, ASUS did great work and made an excellent system that does not make noise and does its work for five plus.

In the past post separately complained about Far Cry 4, they say, if the game still crawls out for 3.5 GB, then all sorts of microfreezes, lags, frame drops begin, and the nightmare is going on at all (the second half of the video):

In a game of such beauty with dropped frames and smeared colored spots you will not see, there will just be a slight loss of smoothness of the picture. In the same Crysis 3, if it can be taken out of the limits of consumption of 3.5 GB of video memory in general, such problems are not observed and the performance drawdown is almost invisible, especially if you look at the sight and enjoy the gameplay, and not stare at the FPS-meter, catch a short-term drop in performance, take a screenshot and run to the forum, complain about how bad NVIDIA is. :)

For a resolution of 2560x1440 and 2560x1600, there is enough video card in all games: unless, of course, you are satisfied with an average of 30-40 FPS. Of course, there is not enough video card for 4K2K, and the point here is not in the memory, but in the computational capabilities of the video core. As a 4K2K test, I used Dark Souls 2 with a patch on the downscale graphics . Dark Souls itself does not shine with any graphics or system requirements. At medium-high settings, you can safely play in FullHD resolution with a mobile GT650M video card and 1 GB of video memory. The main load in this case falls on the GPU, and not on the video memory: since no super-complex special effects are applied, the post-processing is quite primitive, and the game itself was developed taking into account the capabilities of the previous generation consoles (the first version was used for tests Sin). With the downscale 3840x2160 to 2560x1440, the NVIDIA GTX 970 gives out confident 15-18 FPS, with overclocking - 16-19. The same exercise, but with a resolution of 5120x2880 does lead to a dismal 12-14 FPS.

For comparison, the old AMD HD7970 with 3 GB of video memory and working with a slight overclocking (1024 MHz core, 5600 (1400x4) MHz memory) surely gives out 25-30 FPS for a resolution of 3840x2160 pixels and 20-22 FPS for 5120x2880.

Firstly, the architecture of AMD and NVIDIA video accelerators is very different both in terms of the “common philosophy” and in some particular approaches.

The GeForce GTX 970 graphics card has a core frequency of 1050 MHz and 4 GB of GDDR5 memory, operating at a frequency of 1750x4 MHz, connected via a 256-bit bus, divided into 8 segments of 32 bits each. The total block of 3.5 GB is connected via a 224-bit segment, an additional 512 MB is connected via a 32-bit segment, which is the reason for the slowdown of the video card when applications get out of the limits of 3.5 GB of video memory.

The core of the Radeon R9 280X video card operates at a frequency of 1000 MHz and uses 1500x4 MHz memory connected via a 384-bit bus. Although it is divided into 6 dual-channel 64-bit blocks, none of them is turned off, so all the memory is available at full speed, even if it is only 3 GB.

Directly compare the characteristics (for example, the number of TMU - texture mapping units) does not make sense: different architecture, frequency of work and other features can not clearly demonstrate the superiority of a particular video card. If you give an abstract analogy: what will take more space - 20 boxes of some unknown volume or 30 boxes of a little different? Only by measuring the volume and multiplying by the number of boxes we can accurately answer this question.

So for the actual comparison of video cards, we better substitute the performance indicators of the R9 280X (aka the 7970 1GHZ Edition) and the GTX 970: they are already reduced to a common denominator and have the same dimensionality. In both cases, we will compare the reference values of standard accelerators, and not overclocked copies of different vendors.

As you can see, the main difference in performance in the area of pixel fill rate. It is responsible for turning the resulting calculations into a finished frame: the higher the pixel fill rate, the faster the video card can turn “magic” into two-dimensional images that can be sent to the monitor. It would be something to send, and this is the whole secret. If we run a toy at a low resolution, say, 1280x800, the load on the memory and computational cores is not high: the number of ready-made personnel is overwhelming and the video card, for example, can issue 100 or even 200 FPS in simple scenes, if it can turn everything that it counts in a two-dimensional image and send it to the monitor.

If we launch games in high resolution, then each frame becomes much more complicated in calculations, the frame frequency decreases, and on ROPs (video card modules that are responsible for transferring pictures to a flat frame) there is an additional load: there is a difference, count 1280x720 = 921,600 points or 2560x1440 = 3,686,400 points. The output frequency of finished frames drops, but the performance drop at all previous stages is stronger than the additional losses on ROPs, and therefore at low resolutions large pixel fill rate gives a huge increase in the results of benchmarks, and on any SuperUltraHD and 4K2K games rest on performance the video card itself, not the modules displaying the picture, and the results are aligned.

The AMD graphics card has a higher memory bandwidth (figures differ by almost 30%) and a smaller amount (3 GB versus 3.5 + 0.5 for the GTX 970), comparable core performance, slightly more shader processors. It was here that the red and white won against the black and green: the performance of the ROPs was enough, but the bonuses in the face of high memory bandwidth and more computational units did their job: at standard frequencies and high loads, HD 7970 or R9 280X could outrun GTX 970. Adjusted for the fact that the game did not require more than 3 GB of video memory. True, the price of this superiority is the monstrous power consumption of the Radeons, and, as a result, high heating, not the most pleasant acoustic mode of operation and overclocking.

The GTX 970 is a great video card that will allow you to play a couple of years at high settings in just about anything. Damaging her name language does not turn. 4K2K monitors are still few, optimization for them so-so, game engines are evolving ... In general, in a few years there will be benefits from all these innovations and architectural improvements. If you need a video card today ... Well, for a resolution of 1920x1080 and 2560x1440, the GTX 970 is great. It is quiet, well-driven, does not take up much space and does not require a super heaped power supply.

As for memory and disputes, 4 gigabytes or 3.5 - you know, if NVIDIA blocked this piece of memory at all and wrote 3.5 GB on a video card - it would be much worse. If I were in the place of high authorities, I would sell video cards with the declared 3.5 GB and actual 4, as they are now. As soon as it was revealed - the fans would carry a company on their hands, they say, they donate 512 memories. Let not fast. But give. For free! Well, performance ... honestly, in the heat of battlefield Battlefield or Far Cry, you will not see the difference between the "very high" texture settings and simply "high", and you will never see the overhead. And in all other games, you can safely put the maximum. :)

And for fans of bleeding edge technology, 4K2K and other delights of unlimited budgets, there are all sorts of Titans and other R9 295X2. Let them buy and rejoice. :)

Conclusions everyone is free to do on their own. As for me, the hysteria with the memory in the GTX 970 was far-fetched: it has almost no effect on performance, and the future driver updates and game patches will allow something to shuffle so that the owners of the GTX 970 do not feel any inconvenience at all.

As for the price-performance ratio, here, it seems to me, an option with the R9 280X ... where are you dragging me ...

Our reviews:

» Connect original gamepads to PC

» Razer Abyssus: the most affordable Razer

Nikon 1 S2: one-button mirrorless

» Lenovo Miix 3-1030 Review

»We understand the art-chaos of the company Wacom

» ASUS ZenFone 5, LG L90, HTC Desire 601 - a two-part war for the consumer, part 1

» ASUS Transformer Pad

» Razer Kraken headsets

PC Buyer's Guide Cycle:

» PC Buyer's guide: video card selection

» PC Buyer's Guide: Choosing a Power Supply

» PC Buyer's Guide: Cooling

» PC Buyer's Guide 2015: Motherboards, Chipsets, and Sockets

» Twist-twirl, I want to confuse. Understanding the HDD lines

Test equipment

For the tests I used my personal PC:

- a Core i7-3930K (6 cores @ 4.2 GHz);
- 16 GB of fairly rare Kingston HyperX Beast 2400 MHz memory in quad-channel mode (it is hard to find anything faster in DDR3);
- a PCI-Express SSD holding all games and benchmarks, to minimize the influence of other components on the results.

Everything is connected to a 2560x1440 monitor. As you can see, there is really nothing here to hold the card back. Games with 64-bit binaries are few and far between, and for everything else this configuration should, in theory, be more than enough. Of course, all updates, patches, drivers and hotfixes were the very latest, fresh out of the oven.


As the test subject I used the ASUS STRIX GTX 970: a card with an incredibly quiet cooling system and excellent components.

What are we testing?

What are the young folks playing these days? I hear Battle City has gone a bit out of fashion... Anyway, the following games were chosen for testing:

- Batman: Arkham Origins

- Battlefield 4

- BioShock Infinite

- Company of Heroes 2

- Crysis 3

- Diablo 3

- Far Cry 3

- Far Cry 4

- Metro: Last Light

- Tomb Raider (2013)

In all cases, maximum graphics settings were used, with vertical sync disabled and 4x anti-aliasing enabled. All frame limiters and other "smoothers" were turned off. After each test the card was allowed to cool down to normal temperature, and the system was rebooted.

The special guest is a game that boasts neither fantastic graphics nor good optimization, yet under certain conditions it can give many GPUs a hard time. We need it to expose some of the bottlenecks of the Maxwell architecture. We will use Dark Souls 2, rendering at 3840x2160 and at 5120x2880 and downscaling to 2560x1440 in both cases.
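The cost of this kind of downscaling is easy to estimate. Here is a small Python sketch that counts the pixels rendered per frame relative to the native 2560x1440 output. It assumes, as a simplification, that GPU load grows roughly with pixel count, which ignores geometry and other per-frame work.

```python
# Output resolution of the test monitor.
NATIVE = (2560, 1440)


def pixels(res: tuple[int, int]) -> int:
    """Pixel count of a (width, height) resolution."""
    width, height = res
    return width * height


def render_scale(render_res: tuple[int, int],
                 output_res: tuple[int, int] = NATIVE) -> float:
    """Rendered pixels per displayed pixel when downscaling render_res to output_res."""
    return pixels(render_res) / pixels(output_res)


for res in [(2560, 1440), (3840, 2160), (5120, 2880)]:
    print(f"{res[0]}x{res[1]}: {pixels(res):,} px, {render_scale(res):.2f}x native")
```

This prints 3,686,400 px (1.00x), 8,294,400 px (2.25x) and 14,745,600 px (4.00x). Rendering at 5120x2880 therefore means four times the pixel work of the native resolution for every displayed frame.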

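Unfortunately, GTA V had not finished downloading by the time of the tests and the writing of this article. Afterwards there was no time to restore the test environment, so forgive me: this time it is not included.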

Test results

At 2560x1440 it is quite difficult to achieve adequate performance. Not long ago, in a thread about GTA V, I came across someone for whom "anything below 60 FPS is insane lag". Well, to each their own. I believe that 30 FPS at this resolution on a single card is perfectly playable, especially if the frame rate in intense scenes does not sag below 25. Here are the results:
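Here are the author's thresholds in frame-time terms, as a minimal Python sketch. `is_playable` is a hypothetical helper that simply encodes the 30/25 FPS rule of thumb from this paragraph. It is not a standard metric.

```python
def frame_time_ms(fps: float) -> float:
    """Time budget for one frame, in milliseconds."""
    return 1000.0 / fps


def is_playable(avg_fps: float, min_fps: float,
                avg_target: float = 30.0, min_floor: float = 25.0) -> bool:
    """Rule of thumb from the text: ~30 FPS on average, never below 25 FPS."""
    return avg_fps >= avg_target and min_fps >= min_floor


for fps in (60, 30, 25):
    print(f"{fps} FPS = {frame_time_ms(fps):.1f} ms per frame")
# 60 FPS = 16.7 ms, 30 FPS = 33.3 ms, 25 FPS = 40.0 ms
```

In other words, the "insane lag" threshold of 60 FPS demands a frame every 16.7 ms. The author's floor of 25 FPS allows up to 40 ms.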

As you can see, almost all games at maximum settings with 4x anti-aliasing show acceptable results. The exceptions are a couple of frankly voracious monsters with their "so-called optimization": as expected, Metro: Last Light and Company of Heroes 2 ended up at the bottom of the charts.

As for memory consumption, only Battlefield 4 and Far Cry 4 managed to go past the 3.5 GB mark (apparently it is the magic of the number 4, nothing else). Crysis and Metro did not even come close: the heaviest scenes barely used 3.2 GB of video memory.

Maxwell bonuses

Remember the very first post about the GTX 970? There we went through all of NVIDIA's post-DX9 graphics cards. We noted that for three generations the company has been working hard on the energy efficiency of its chips.

On the one hand, that is a noble cause. On the other, on desktops you need not worry much about power consumption: we do not run on batteries, after all. Then again, if you reduce power consumption and heat output, you can squeeze more performance out of the same thermal envelope. And this is exactly where Maxwell outdid both previous generations and its red-and-white competitors.

The energy-efficient GM204 chip is rated at a TDP of 165 W in the GTX 980 and 145 W in the GTX 970. It also has automatic overclocking (NVIDIA calls it GPU Boost): as long as temperature and load allow, the core clock rises within certain reasonable limits.

Accordingly, Maxwell performs better under load the sturdier the power delivery, the better the cooling, and the higher the base clocks of the chip and memory. FPS dips become shallower and the average frame rate goes up. It is something like Intel's Turbo Boost.
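To illustrate the idea, here is a toy Python model of a boost controller of the kind described above. The clock steps up from base while temperature and power stay under their limits, and steps back down when either limit is reached.

This is not NVIDIA's actual GPU Boost algorithm. The step size, temperature target and power limit are assumptions chosen for illustration; only the base and boost clocks come from the card tested here.

```python
from dataclasses import dataclass


@dataclass
class BoostModel:
    """Toy model of opportunistic clock boosting; all limits are assumptions."""
    base_mhz: float = 1114.0       # stock base clock of the tested card
    max_mhz: float = 1253.0        # rated boost clock, used as the cap in this toy model
    step_mhz: float = 13.0         # assumed size of one clock step
    temp_limit_c: float = 80.0     # assumed temperature target
    power_limit_w: float = 145.0   # assumed board power limit

    def next_clock(self, clock_mhz: float, temp_c: float, power_w: float) -> float:
        """One control step: step up if there is headroom, step down otherwise."""
        if temp_c >= self.temp_limit_c or power_w >= self.power_limit_w:
            return max(self.base_mhz, clock_mhz - self.step_mhz)
        return min(self.max_mhz, clock_mhz + self.step_mhz)


def settle(model: BoostModel, temp_c: float, power_w: float, steps: int = 50) -> float:
    """Run the controller for a while under constant conditions."""
    clock = model.base_mhz
    for _ in range(steps):
        clock = model.next_clock(clock, temp_c, power_w)
    return clock


card = BoostModel()
print(settle(card, temp_c=65, power_w=120))  # good cooling, headroom left: 1253.0
print(settle(card, temp_c=85, power_w=120))  # hot card, stuck at base: 1114.0
```

With a cool card the model climbs to the boost cap. With a hot one it never leaves the base clock, which is the gist of why better cooling and power delivery pay off on this architecture.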

The stock clocks of my card were:

Core, base: 1114 MHz

Core, boost: 1253 MHz

Memory: 7010 MHz effective (1752.5 x 4)

After a bit of tinkering (raising the voltage in software, stability testing and other tweaks), I managed to reach the following clocks:

Core, base: 1333 MHz

Core, boost: 1490 MHz

Memory: 8000 MHz effective (2000 x 4)
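The overclock in relative terms, plus what the memory overclock means for peak bandwidth, as a small Python sketch. The bandwidth formula (bus width in bytes times effective data rate) gives the whole-bus peak. It deliberately ignores the GTX 970's 3.5 + 0.5 GB split.

```python
BUS_WIDTH_BITS = 256  # GTX 970 memory bus width


def pct_gain(stock: float, oc: float) -> float:
    """Percentage increase from the stock value to the overclocked one."""
    return (oc / stock - 1.0) * 100.0


def bandwidth_gbs(bus_bits: int, effective_mts: float) -> float:
    """Peak bandwidth in GB/s: bytes per transfer times megatransfers per second / 1000."""
    return bus_bits / 8 * effective_mts / 1000.0


clocks = {
    "core base": (1114, 1333),
    "core boost": (1253, 1490),
    "memory": (7010, 8000),
}
for name, (stock, oc) in clocks.items():
    print(f"{name}: +{pct_gain(stock, oc):.1f}%")
# core base: +19.7%, core boost: +18.9%, memory: +14.1%

print(f"bandwidth: {bandwidth_gbs(BUS_WIDTH_BITS, 7010):.1f} -> "
      f"{bandwidth_gbs(BUS_WIDTH_BITS, 8000):.1f} GB/s")
# bandwidth: 224.3 -> 256.0 GB/s
```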

How did this affect performance? In the best possible way:

Almost everywhere the frame rate crossed the "comfortable" 25 FPS mark. Funnily enough, overclocking had no effect whatsoever on Diablo 3. Apparently either some internal limiter is still at work, or the game is bottlenecked by something else. Not even overclocking could save the clumsily optimized Company of Heroes 2: its results were sad and stayed that way.

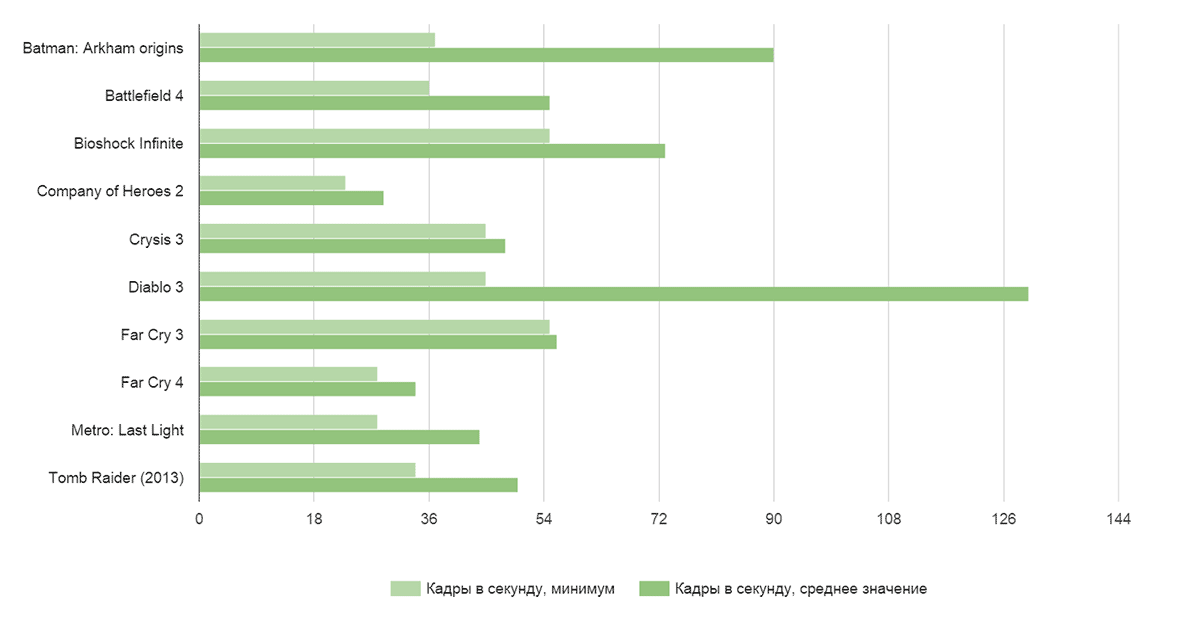

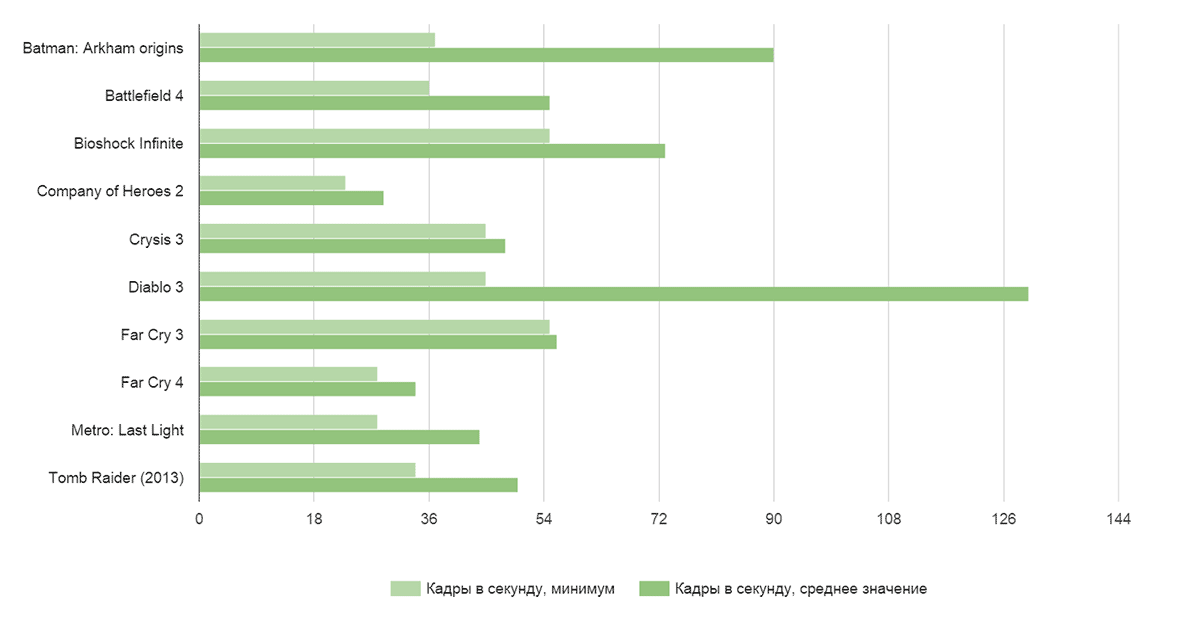

Here is a comparison of the minimum frame rate:

This is where the gain matters most and is most noticeable. In the most intense scenes the micro-freezes and stutters disappeared, and the whole picture became smoother.
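For readers who want to check such numbers themselves: average and minimum FPS are usually derived from a log of per-frame render times. Here is a minimal Python sketch under that assumption. The frame-time list is made up, and real tools (and this article's charts) may define "minimum" differently, for example as a percentile rather than the single worst frame.

```python
def fps_stats(frame_times_ms: list[float]) -> tuple[float, float]:
    """Average and minimum FPS from per-frame render times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    elapsed_s = sum(frame_times_ms) / 1000.0
    avg_fps = len(frame_times_ms) / elapsed_s   # frames divided by elapsed time
    min_fps = 1000.0 / max(frame_times_ms)      # the single slowest frame
    return avg_fps, min_fps


# Hypothetical capture: nine smooth 25 ms frames and one 75 ms hitch.
times = [25.0] * 9 + [75.0]
avg, low = fps_stats(times)
naive = sum(1000.0 / t for t in times) / len(times)  # mean of per-frame FPS
print(f"average {avg:.1f} FPS, minimum {low:.1f} FPS, naive mean {naive:.1f} FPS")
# average 33.3 FPS, minimum 13.3 FPS, naive mean 37.3 FPS
```

Dividing frame count by elapsed time gives the true average. Averaging per-frame FPS values overweights the fast frames, which is why the naive figure comes out higher. A single hitch barely moves the average but drags the minimum far down, and that dip is what the eye perceives as a loss of smoothness.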

The average frame rate also went up, though not by as much. Overall, the gain shows up in very busy scenes more than in general gameplay:

And now the most important thing. Overclocked or not, the card is surprisingly quiet. Of course, after an hour or so of Crysis 3 at these settings there will be some background noise. Still, it does not compare with the noise and heat of previous-generation monsters like the AMD HD 7970 or the GTX 780, let alone the howl of reference blower coolers. In this respect ASUS did a great job: the cooler stays quiet and does its work with top marks.

Personal opinion

In the previous post, readers specifically complained about Far Cry 4. Supposedly, once the game goes past 3.5 GB, all sorts of micro-freezes, lags and frame drops begin, and things turn into a complete nightmare (the second half of the video):

In the actual game you will not see such a spectacle of dropped frames and smeared colored blotches; there is only a slight loss of smoothness. The same goes for Crysis 3, if you manage to push it past 3.5 GB of video memory at all. No such problems appear, and the performance dip is almost invisible, especially if you are looking at the scenery and enjoying the gameplay. It is another matter if you stare at the FPS counter, catch a brief dip, take a screenshot and run to the forum to complain about how bad NVIDIA is. :)

At 2560x1440 and 2560x1600 the card is enough for every game, provided, of course, that you are happy with an average of 30-40 FPS. For 4K2K, of course, the card is not enough. The reason is not the memory but the computing power of the GPU core.

As the 4K2K test I used Dark Souls 2 with a downsampling patch. Dark Souls itself is remarkable for neither its graphics nor its system requirements: at medium-high settings you can comfortably play it in Full HD on a mobile GT 650M with 1 GB of video memory. In this test the main load falls on the GPU rather than on video memory. No highly complex effects are used, the post-processing is fairly primitive, and the game was designed around the capabilities of the previous console generation. The tests used the original release, not Scholar of the First Sin.

When downscaling from 3840x2160 to 2560x1440, the GTX 970 delivers a steady 15-18 FPS, or 16-19 FPS overclocked. The same exercise at 5120x2880 results in a dismal 12-14 FPS.

For comparison, the old AMD HD 7970 has 3 GB of video memory and runs with a slight overclock: core at 1024 MHz, memory at 5600 MHz effective (1400 x 4). It confidently delivers 25-30 FPS at 3840x2160 and 20-22 FPS at 5120x2880.

Why this happens

First, AMD and NVIDIA graphics architectures differ greatly, both in their overall philosophy and in many specific approaches.

The GeForce GTX 970 has a base core clock of 1050 MHz and 4 GB of GDDR5 memory running at 1750 x 4 MHz. The memory sits on a 256-bit bus divided into eight 32-bit segments. The main 3.5 GB block is served by a 224-bit segment (seven 32-bit channels), while the additional 512 MB sits on a single 32-bit segment. This is why the card slows down when applications go beyond 3.5 GB of video memory.
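The per-segment arithmetic makes the problem concrete. Here is a minimal Python sketch, assuming the 7010 MT/s data rate of the tested card. As a simplification, it also assumes the fast 3.5 GB pool fills before the slow 512 MB one is touched. It gives peak figures only and does not model how the two pools interact or what happens beyond 4 GB.

```python
EFFECTIVE_MTS = 7010      # GDDR5 data rate in MT/s (1752.5 MHz x 4)
SEGMENT_BITS = 32         # width of one memory segment
FAST_SEGMENTS = 7         # the 224-bit pool
SLOW_SEGMENTS = 1         # the 32-bit pool
FAST_POOL_MB = 3584       # 3.5 GB
SLOW_POOL_MB = 512


def pool_bandwidth_gbs(n_segments: int) -> float:
    """Peak bandwidth of n 32-bit segments in GB/s (bytes/transfer x MT/s / 1000)."""
    return n_segments * SEGMENT_BITS / 8 * EFFECTIVE_MTS / 1000.0


def split_usage(used_mb: int) -> tuple[int, int]:
    """Split a VRAM footprint into (fast MB, slow MB), assuming the fast pool fills first.

    Anything beyond 4 GB is simply not counted here.
    """
    fast = min(used_mb, FAST_POOL_MB)
    slow = min(max(used_mb - FAST_POOL_MB, 0), SLOW_POOL_MB)
    return fast, slow


print(f"3.5 GB pool: {pool_bandwidth_gbs(FAST_SEGMENTS):.1f} GB/s")  # 196.3 GB/s
print(f"0.5 GB pool: {pool_bandwidth_gbs(SLOW_SEGMENTS):.1f} GB/s")  # 28.0 GB/s
print(split_usage(3277), split_usage(3800))  # (3277, 0) (3584, 216)
```

A footprint of roughly 3.2 GB, like the heaviest Crysis and Metro scenes, stays entirely in the fast pool. Only games that go past 3.5 GB start placing data in the segment with about a seventh of the bandwidth.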

The Radeon R9 280X core runs at up to 1000 MHz and uses 1500 x 4 MHz memory on a 384-bit bus. That bus is also split, into six dual-channel 64-bit blocks. However, none of them is disabled, so all the memory is available at full speed, even though there is only 3 GB of it.

Comparing the specifications directly (for example, the number of TMUs, texture mapping units) makes no sense. Different architectures, clock speeds and other features mean that raw unit counts cannot show whether one card is actually faster than another. As an abstract analogy: what takes up more space, 20 boxes of unknown volume or 30 boxes of a slightly different unknown volume? Only by measuring the volume of a box and multiplying it by the number of boxes can we answer the question accurately.

So for a real comparison it is better to use the derived performance figures of the R9 280X (aka the HD 7970 GHz Edition) and the GTX 970. These are already reduced to a common denominator and expressed in the same units. In both cases we compare the reference values of stock cards, not factory-overclocked versions from various vendors.

| | R9 280X | GTX 970 |
|---|---|---|
| GPU performance, GFLOPS | 3,482 | 3,494 |
| Memory bandwidth, MB/s | 288,000 | 224,000 |
| Pixel fill rate, MPixel/s | 27,200 | 58,800 |
| Texture fill rate, MTexel/s | 108,800 | 109,200 |
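In the spirit of the box analogy ("volume times number of boxes"), the table figures can be reproduced as unit count times clock. The unit counts and clocks below are reference specifications not stated in the article:

- R9 280X: 2048 stream processors, 128 TMUs, 32 ROPs, 850 MHz base clock, 6000 MT/s memory.
- GTX 970: 1664 CUDA cores, 104 TMUs, 56 ROPs, 1050 MHz, 7000 MT/s nominal memory.

Plugging them in matches the table. This suggests the R9 280X column uses its 850 MHz base clock rather than the 1000 MHz boost clock. GFLOPS assume one fused multiply-add (2 FLOPs) per shader per clock, the usual convention for such figures.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    name: str
    shaders: int      # stream processors / CUDA cores
    tmus: int         # texture mapping units
    rops: int         # render output units
    clock_mhz: int    # clock used for the theoretical figures
    bus_bits: int     # memory bus width
    mem_mts: int      # effective memory data rate, MT/s

    def gflops(self) -> float:
        # One FMA (2 FLOPs) per shader per clock.
        return self.shaders * 2 * self.clock_mhz / 1000

    def pixel_fill_mps(self) -> int:
        return self.rops * self.clock_mhz

    def texel_fill_mtexs(self) -> int:
        return self.tmus * self.clock_mhz

    def bandwidth_mbs(self) -> int:
        return self.bus_bits // 8 * self.mem_mts


r9_280x = Gpu("R9 280X", 2048, 128, 32, 850, 384, 6000)
gtx_970 = Gpu("GTX 970", 1664, 104, 56, 1050, 256, 7000)

for g in (r9_280x, gtx_970):
    print(f"{g.name}: {g.gflops():,.0f} GFLOPS, {g.bandwidth_mbs():,} MB/s, "
          f"{g.pixel_fill_mps():,} MPixel/s, {g.texel_fill_mtexs():,} MTexel/s")
# R9 280X: 3,482 GFLOPS, 288,000 MB/s, 27,200 MPixel/s, 108,800 MTexel/s
# GTX 970: 3,494 GFLOPS, 224,000 MB/s, 58,800 MPixel/s, 109,200 MTexel/s

print(f"bandwidth ratio: {r9_280x.bandwidth_mbs() / gtx_970.bandwidth_mbs():.2f}")      # 1.29
print(f"pixel fill ratio: {gtx_970.pixel_fill_mps() / r9_280x.pixel_fill_mps():.2f}")  # 2.16
```

The two ratios are the crux of the comparison. The Radeon has about 29% more memory bandwidth, and the GeForce has more than twice the pixel fill rate, while compute and texturing are practically equal.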

As you can see, the main difference in performance lies in pixel fill rate. It governs how quickly the results of the calculations become a finished frame. The higher the pixel fill rate, the faster the card can turn its "magic" into a two-dimensional image for the monitor, provided there is something to send; that is the whole secret. If we run a game at a low resolution, say 1280x800, the load on the memory and compute cores is low. The card can then churn out a huge number of finished frames, for example 100 or even 200 FPS in simple scenes, as long as it can turn everything it computes into a two-dimensional image and send it to the monitor.

If we run games at a high resolution, each frame becomes much more expensive to compute and the frame rate falls. The ROPs (render output units, the blocks that write the final pixels into the frame) also get additional load. It makes a difference whether you process 1280x720 = 921,600 pixels or 2560x1440 = 3,686,400 pixels.

The rate of finished frames drops, but the slowdown in all the earlier stages is larger than the extra cost on the ROPs. That is why at low resolutions a high pixel fill rate gives a huge boost in benchmark results. At SuperUltraHD and 4K2K, by contrast, games are limited by the GPU's compute performance rather than by the output units, and the results even out.
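A rough upper bound shows why the ROPs stop being the limit at high resolution. This minimal Python sketch divides pixel fill rate by the number of pixel writes per frame. The `writes_per_pixel` factor of 16 is an arbitrary assumption standing in for overdraw, 4x MSAA and extra passes, not a measured value. The fill rates come from the comparison table.

```python
def fill_limited_fps(pixel_fill_mps: float, width: int, height: int,
                     writes_per_pixel: float = 1.0) -> float:
    """Frame-rate ceiling if ROP throughput were the only bottleneck.

    writes_per_pixel is a hypothetical knob lumping together overdraw,
    MSAA samples and extra passes; real values vary by game and scene.
    """
    return pixel_fill_mps * 1e6 / (width * height * writes_per_pixel)


CARDS = {"R9 280X": 27_200, "GTX 970": 58_800}  # MPixel/s

for width, height in [(1280, 720), (2560, 1440)]:
    for name, fill in CARDS.items():
        ceiling = fill_limited_fps(fill, width, height, writes_per_pixel=16)
        print(f"{width}x{height}, {name}: ROP ceiling ~{ceiling:,.0f} FPS")
```

Even with that generous factor, the ceilings sit far above the 30-40 FPS these cards actually deliver at 2560x1440. This is consistent with the argument above that at high resolutions the shader core, not the ROPs, sets the pace.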

The AMD card has higher memory bandwidth (the figures differ by almost 30%) but less memory (3 GB versus 3.5 + 0.5 GB on the GTX 970). It also has comparable core performance and more shader processors. This is where red-and-white beat black-and-green. ROP performance was sufficient, while the bonus of higher memory bandwidth and more compute units did its job. So at stock clocks and under heavy load, the HD 7970 or R9 280X could outrun the GTX 970, provided the game did not need more than 3 GB of video memory. The price of this advantage, admittedly, is the Radeons' monstrous power consumption. The result is high temperatures and unpleasant noise levels, both at stock and when overclocked.

Results

The GTX 970 is a great card that will let you play just about anything at high settings for a couple of years. I cannot bring myself to speak ill of it. 4K2K monitors are still rare, games are optimized for them only so-so, and engines keep evolving... In short, the payoff from all these innovations and architectural improvements will come in a few years.

If you need a card today... well, for 1920x1080 and 2560x1440 the GTX 970 is great. It is quiet, overclocks well, does not take up much space and does not need a monster power supply.

As for the debate over 4 GB versus 3.5: you know, if NVIDIA had disabled that chunk of memory entirely and printed 3.5 GB on the box, it would have been much worse. If I were in charge, I would advertise the cards as 3.5 GB while actually shipping 4, just as they are now. Once that came out, fans would carry the company on their shoulders: look, they throw in an extra 512 MB. Not fast, sure. But given. For free!

As for performance... honestly, in the heat of battle in Battlefield or Far Cry you will not see the difference between "very high" and plain "high" textures. At "high" you will never notice the overhead. And in all other games you can safely set everything to maximum. :)

And for fans of bleeding-edge technology, 4K2K and the other delights of unlimited budgets, there are Titans, R9 295X2s and the like. Let them buy those and be happy. :)

Findings

Everyone is free to draw their own conclusions. As for me, the hysteria over the GTX 970's memory was overblown: it has almost no effect on performance. Future driver updates and game patches should be able to shuffle things around so that GTX 970 owners feel no inconvenience at all.

As for price/performance, it seems to me that the R9 280X option... hey, where are you dragging me...

Our reviews:

» Connecting original gamepads to a PC

» Razer Abyssus: the most affordable Razer

» Nikon 1 S2: one-button mirrorless

» Lenovo Miix 3-1030 Review

» Making sense of Wacom's art chaos

» ASUS ZenFone 5, LG L90, HTC Desire 601 - a two-part war for the consumer, part 1

» ASUS Transformer Pad

» Razer Kraken headsets

PC Buyer's Guide series:

» PC Buyer's Guide: Choosing a Video Card

» PC Buyer's Guide: Choosing a Power Supply

» PC Buyer's Guide: Cooling

» PC Buyer's Guide 2015: Motherboards, Chipsets, and Sockets

» Spin and twirl, I want to confuse: making sense of HDD product lines

Source: https://habr.com/ru/post/366847/
