Conversational AI: how chatbots work and who makes them

Chatbots and artificial intelligence for natural language understanding (NLU) are a hot topic that has come up on Habr more than once. Nevertheless, high-level, structured reviews of these technologies and of the market as a whole are quite rare. In this article we will try to explain what drives the demand for these technologies, what a modern conversational platform for NLU looks like, and which companies and products are present in this market.

We at Just AI (and previously at i-Free) have been working in this segment since 2011, developing and improving our platform for understanding and processing natural language (NLU algorithms): we help companies automate call centers and support services, and we create business skills for Yandex's Alice. We also teach robots and smart devices to talk: in 2017 we released our children's robot Emelya, the first device on the Russian market that understands natural language (by the way, about 7,000 Emelyas have already found a home). This article is introductory. If the topic proves interesting, we will periodically write about the specifics of building conversational interfaces, including specific cases implemented for our clients, as well as about the features of various platforms, technologies and algorithms.

A bit of history

Although AI is a fairly broad field that includes machine vision, predictive analytics, machine translation and other areas, natural language understanding (NLU) and natural language generation (NLG) form a significant and fast-growing part of it. The first chatbots and the systems for developing them appeared quite a long time ago. Leaving aside the history that began in the 1950s with Alan Turing and continued with the ELIZA program in the 1960s, as well as the research in linguistics and machine learning of the 1990s, a significant event in recent history was the emergence of AIML (Artificial Intelligence Markup Language), developed in 2001 by Richard Wallace, and of the A.L.I.C.E. chatbot built on it.

Over the next ten years, approaches to writing chatbots largely consisted of refining or improving this methodology, known as the “rule-based approach” (an approach based on formal rules). Its essence lies in identifying the semantically significant elements of phrases, encoding them, and creating special formal scripting languages for describing dialogue scenarios. Most of the assistants we know today are built mainly on this approach. The latest development environments based on formal rules are complex, multi-component systems that include:

- hypothesis ranking systems,

- named entity extraction from text,

- morphological analysis of phrases,

- systems for managing the dialogue and preserving local and global context,

- integrations and external function calls.
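
As a rough illustration of the rule-based approach described above, the sketch below maps phrases to intents with hand-written patterns. It is only a minimal example: the rule set, the intent names and the `match_rules` helper are hypothetical and are not taken from AIML or from any particular platform.

```python
import re

# Hypothetical rules: each intent is described by a hand-written pattern.
# A named group marks a semantically significant element of the phrase.
RULES = [
    ("order_pizza", re.compile(r"\b(order|want|get)\b.*\bpizza\b")),
    ("call_taxi", re.compile(r"\b(call|order|get)\b.*\btaxi\b(?: to (?P<destination>.+))?")),
    ("greeting", re.compile(r"^(hi|hello|good (morning|evening))\b")),
]


def match_rules(phrase):
    """Return (intent, extracted elements) for the first matching rule, else None."""
    text = phrase.lower().strip()
    for intent, pattern in RULES:
        found = pattern.search(text)
        if found:
            # Keep only the named groups that actually captured something.
            elements = {k: v for k, v in found.groupdict().items() if v}
            return intent, elements
    return None


print(match_rules("Please call a taxi to the airport"))
print(match_rules("I want a large pizza"))
```

Even this toy version shows why the approach is labor-intensive: every new way of phrasing a request needs another pattern written by hand.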

However, most conversational solutions built on such systems are rather laborious to implement: making a chatbot that can converse on a wide range of topics, or that covers a specific area of knowledge deeply and completely, requires a large amount of human labor.

The situation has changed significantly in recent years with the development of algorithms for determining the semantic proximity of texts and of machine learning technologies in general, which have made text classification and the training of NLU systems much faster and more convenient. Dialogs in which chatbots need to access large arrays of external data, extract hundreds of thousands of named entities and integrate with external information systems still require a large amount of human labor, but the process of creating complex chatbots has become much simpler, and the accuracy of user intent recognition significantly higher. It was these technologies, together with notable advances in speech synthesis and recognition and the spread of instant messengers and web chats, that led to the rapid growth in the number of NLU deployments in 2015-2018.
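
To make the idea of classifying by semantic proximity more concrete, here is a deliberately simple sketch: a query is compared with example phrases using cosine similarity over bag-of-words vectors, and the intent of the closest example wins. Real systems use far richer text representations; the example phrases, intent names and the `threshold` value are assumptions made for illustration.

```python
import math
from collections import Counter

# Hypothetical example phrases per intent (not from the article).
EXAMPLES = {
    "check_balance": ["what is my account balance", "how much money do i have"],
    "block_card": ["block my card", "i lost my card please block it"],
}


def vectorize(text):
    """Bag-of-words vector: word -> count."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def classify(query, threshold=0.3):
    """Return (intent, score) of the closest example, or (None, score) below the threshold."""
    q = vectorize(query)
    best_intent, best_score = None, 0.0
    for intent, phrases in EXAMPLES.items():
        for phrase in phrases:
            score = cosine(q, vectorize(phrase))
            if score > best_score:
                best_intent, best_score = intent, score
    if best_score < threshold:
        return None, best_score
    return best_intent, best_score


print(classify("please block my card"))
print(classify("tell me a joke"))
```

Adding a new intent here means adding a few example phrases rather than writing patterns, which is the practical gain the paragraph above describes.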

Why have these technologies become so popular right now?

Today, there are several key drivers that ensure the market growth of NLU technologies.

1. Contact centers

This is the largest market for NLU algorithms (330 billion dollars a year, according to the Everest Group). Contact centers are used by hundreds of thousands of companies around the world, from banks and large retailers to small businesses that serve customers with two or three support managers. A huge number of routine operations are increasingly being handed over to artificial intelligence: chatbots can answer typical questions (on the FAQ principle, but with user requests in natural language), work in “call steering” mode to route the user to the right department through a smart IVR, and act as “prompters”, bots that give intelligent hints to call center operators. All this makes it possible to significantly reduce personnel costs and increase the capacity of the contact center without hiring more staff. The most effective option, however, is the combination of AI and a human, in which complex analytical questions are passed to an operator who can give the client enough time to really help and solve the problem.

2. Talking devices

Amazon Echo appeared three years ago, and the familiar world became a little more comfortable: the Alexa assistant can wake you up at a set time, play your favorite music, control a smart home, find and read out the news, order food to your home, call a taxi or order a pizza for delivery. It was the first mass-market device in the US with high-quality speech recognition and the ability to hear a request even in strong background noise. Google Home followed, and at the moment the two share the market in a ratio of roughly 3:1 in Amazon's favor. In the Chinese market the struggle is even tougher: by 2018 each of the Internet giants, Baidu, Xiaomi, Alibaba, Tencent and JD.com, had released its own smart speaker.

But this market is not limited to smart speakers: there are also robots, children's toys, in-car devices and smart household appliances, and 2018 still has many amazing discoveries in store. At Just AI alone we are currently working on 5 such projects.

3. Voice Assistants (IVA)

Alexa from Amazon, Google Assistant, Apple's Siri, Microsoft's Cortana and Alice from Yandex determine users' intents and execute commands. A significant share of their skills is created on third-party NLU platforms. Yandex is now building a whole ecosystem of skills around its assistant Alice by opening the beta version of Yandex.Dialogs to third-party developers. At the same time, virtual assistants are of interest not only for the end-user device market: they have every chance of taking a share of the business support automation market (Google Assistant already routes user requests to company contact centers).

In general, talking devices and assistants are the most interesting and promising area of application of conversational AI technologies. Plus, this is a direct point of contact between business and end user. The demand for such technologies is increasing every year, and Russia is no exception.

How do conversational AI technologies work?

Briefly, the interaction between a user and, for example, a chatbot can be represented as follows:

First, the user sends a request through any of the available channels. Behind the request is some intent, i.e. the desire to get an answer to a question, or to obtain a service, a product or some content, such as music or video. The channels can be smart devices, assistants built into devices or mobile phones, an ordinary call to a phone number, instant messengers, or web chats such as Livetex, Jivosite or Webim, which are popular in Russia.

Next, additional processing or conversion of the message format may be required. Conversational platforms always work with text, while a number of channels involve voice communication. ASR (speech recognition), TTS (speech synthesis) and telephony integration systems are responsible for this conversion. In some cases it may be necessary to recognize the speaker by voice, in which case biometric platforms are used. Some channels, for example instant messengers or the Alice assistant on a mobile phone, make it possible to combine visual interactive elements (for example, buttons or product cards that can be tapped) with natural language. Working with them requires integration with the relevant APIs.

The request, converted to text, enters the conversational platform. Its task is to understand the meaning of what was said, capture the user's intent and process it effectively, producing a result. To do this, dialogue platforms use a variety of technologies, such as text normalization, morphological analysis, analysis of the semantic proximity of what was said, hypothesis ranking, named entity extraction and, finally, the formation of a query in machine-readable form, sent through a set of APIs to external databases and information systems. Examples of such external systems include 1C, Bitrix24, SAP, CRM systems, content databases or services such as Deezer or Google Play Music. After receiving the data, the dialogue platform generates a response: a text, a voice message (using TTS), a content stream, or a notification that an action has been performed (for example, that an order has been placed in an online store). If the data in the initial request is not enough to decide on further action, the NLU platform initiates a clarifying dialog to obtain all the missing parameters and remove the uncertainty.

How is request processing organized in conversational platforms (using Just AI as an example)?

Here we want to say a little about the internal structure of the systems that process user requests in conversational platforms.

We describe the processing using our platform as an example, but it should be noted that at the top level the main features are the same, if not in all platforms, then at least in those we know of (here we mean platforms for business skills, not for “chit-chat”). The general scheme of our platform can be represented as follows:

The main client request processing cycle consists of the following events and actions:

- The client's request arrives at the dialog management module, DialogManager.

- DialogManager loads the dialog context from the database.

- The client's request (along with the context) is sent to the NLU module for processing, which determines the client's intent and its parameters. When non-text events (buttons, etc.) are processed, this step is skipped.

- Based on the dialogue script and the extracted data, DialogManager determines the next state (block, screen, dialog page) that most closely matches the client's statement.

- The business logic (scripts) is executed in accordance with the chatbot's script.

- External information systems are called, if such calls are programmed in the business logic.

- A text response is generated using macro substitutions and natural-language word agreement functions.

- The context and dialog parameters are saved in the Dialog State DB for processing subsequent requests.

- The response is sent to the client.
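
The cycle above can be sketched roughly as follows. This is an illustration under stated assumptions, not the Just AI implementation: only the name `DialogManager` comes from the article, while the `Context` class, the shape of the scenario table, the in-memory dictionary standing in for the Dialog State DB and the toy NLU function are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Per-client dialog context kept between requests."""
    state: str = "start"
    params: dict = field(default_factory=dict)


class DialogManager:
    def __init__(self, nlu, scenario, store=None):
        self.nlu = nlu            # callable: (text, context) -> (intent, params)
        self.scenario = scenario  # {(state, intent): (next_state, handler)}
        self.store = store if store is not None else {}  # stands in for the Dialog State DB

    def handle(self, client_id, text=None, event=None):
        context = self.store.get(client_id, Context())   # load the dialog context
        if event is not None:                             # non-text event: NLU is skipped
            intent, params = event, {}
        else:
            intent, params = self.nlu(text, context)      # intent and its parameters
        fallback = (context.state, lambda ctx: "Sorry, I did not understand.")
        next_state, handler = self.scenario.get((context.state, intent), fallback)
        context.state = next_state                        # move to the next state
        context.params.update(params)
        reply = handler(context)                          # business logic and response text
        self.store[client_id] = context                   # save the context
        return reply                                      # response for the client


def toy_nlu(text, context):
    """Hypothetical stand-in for the NLU module."""
    if "pizza" in text.lower():
        return "order_pizza", {"item": "pizza"}
    return "unknown", {}


scenario = {
    ("start", "order_pizza"): ("confirm", lambda ctx: f"Order one {ctx.params['item']}?"),
    ("confirm", "yes_button"): ("done", lambda ctx: f"Your {ctx.params['item']} is on its way."),
}

manager = DialogManager(toy_nlu, scenario)
print(manager.handle("client-1", text="I'd like a pizza"))
print(manager.handle("client-1", event="yes_button"))
```

In this sketch calls to external systems would live inside the handlers, and the second request shows a button event that bypasses NLU, as described in the list.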

An important part of the system's workflow is managing the course of the dialogue (DialogManager), which determines the overall context of what has been said and its relationship with previous and subsequent statements. Thanks to this, the same phrase will be interpreted differently depending on when it was said, who said it, and what additional data was passed to the system along with the request (for example, the user's location). In some systems DialogManager also controls filling the context of the phrase with the necessary data (slot filling), which can be obtained from the client's phrase, taken from the context of previous phrases, or explicitly requested from the client. In our system these functions are moved to the level of the dialogue “script”, so that this process is fully controlled by the bot developer.
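
Slot filling as described here can be illustrated with a small sketch: slots are taken from the current phrase, kept in the context between turns, and any slot that is still missing is requested explicitly. The slot names, patterns and questions below are hypothetical and chosen only for a taxi-ordering example.

```python
import re

# Hypothetical slots: name -> (extraction pattern, clarifying question).
SLOTS = {
    "destination": (re.compile(r"\bto (?:the )?(\w+)"), "Where are you going?"),
    "time": (re.compile(r"\bat (\d{1,2}(?::\d{2})?)"), "What time should the car arrive?"),
}


def fill_slots(phrase, context):
    """Update context with slots found in the phrase.

    Returns the next clarifying question, or None when every slot is filled.
    """
    text = phrase.lower()
    for name, (pattern, _question) in SLOTS.items():
        found = pattern.search(text)
        if found:
            context[name] = found.group(1)
    for name, (_pattern, question) in SLOTS.items():
        if name not in context:
            return question
    return None


context = {}
print(fill_slots("I need a taxi to the airport", context))  # asks for the missing time
print(fill_slots("at 9:30", context))                       # nothing left to ask
print(context)
```

Because the context dictionary survives between calls, the destination from the first phrase is still available when the second phrase supplies the time.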

The most complex stage in the work of a dialogue platform is analyzing the client's statements. This process is called NLU (Natural Language Understanding): understanding the meaning of the request.

In its most simplified form, the process of “understanding” a language consists of the following major steps:

- Preliminary text processing,

- Query classification, correlation with one of the classes known to the system,

- Retrieving query parameters.

And this is probably where the most significant differences between the platforms of various vendors lie. Some use deep neural networks, some get by with regular expressions or formal grammars, and some rely on third-party services.

The architecture of our system suggests the following approach to processing a request in natural language:

- Splitting text into words.

- Correction of typos (both versions of the text are saved).

- Enrichment of the text with morphological features: determination of the normal form (lemma) of words and their parts of speech (grammemes).

- Query expansion using synonym dictionaries.

- Expansion of the query with information about the “informational significance” (weights) of individual words.

- Expansion of the query with a syntactic parse tree.

- Expansion of the query with the results of coreference resolution (pronoun resolution).

- Definition of named entities.

- Query classification using two approaches (which can be used in parallel): a. based on example phrases and machine learning algorithms; b. based on formal rules (patterns).

- Ranking classification hypotheses according to the current conversation context.

- Filling information "slots" - the request parameters passed in the user's phrase.
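
One of the later steps in this list, ranking classification hypotheses according to the conversation context, can be illustrated with a minimal sketch. It assumes the classifiers have already produced a list of `(intent, score)` hypotheses; the additive `boost`, the state names and the table of expected intents are assumptions made for the example, not a description of our ranking algorithm.

```python
def rank_hypotheses(hypotheses, context_state, expected, boost=0.2):
    """Re-rank (intent, score) hypotheses, favoring intents expected in the current state."""
    expected_here = expected.get(context_state, set())
    rescored = [
        (intent, min(1.0, score + boost) if intent in expected_here else score)
        for intent, score in hypotheses
    ]
    return sorted(rescored, key=lambda h: h[1], reverse=True)


# Hypothetical classifier output for the phrase "yes": two close hypotheses.
hypotheses = [("greeting_reply", 0.55), ("confirm_order", 0.50)]
# Hypothetical scenario knowledge: which intents are likely in which state.
expected = {"awaiting_confirmation": {"confirm_order", "cancel_order"}}

print(rank_hypotheses(hypotheses, "awaiting_confirmation", expected)[0][0])
print(rank_hypotheses(hypotheses, "start", expected)[0][0])
```

The same phrase yields a different top hypothesis depending on the dialog state, which is the effect of context described earlier for DialogManager.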

A more detailed account of the work of the NLU module is the topic of a separate article that we plan to prepare in the near future.

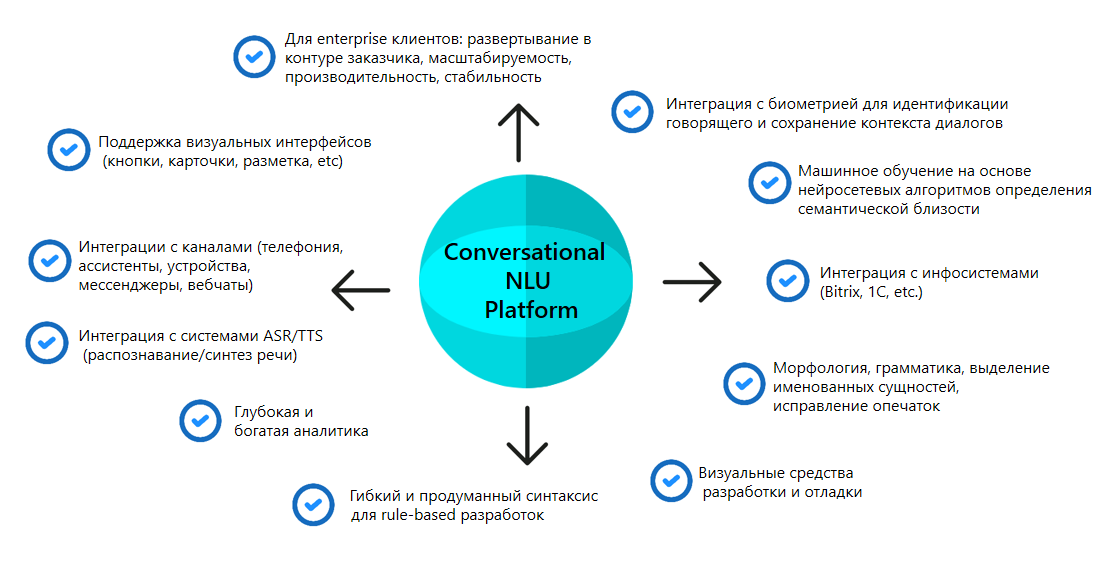

What should a conversational platform include?

A modern integrated dialogue platform, also called a Conversational Platform, should include many functions and technology modules. Schematically, they can be shown as follows:

The more integrations a platform has, the faster and easier it is to create a finished skill on top of it. A well-developed rule-based syntax can speed up chatbot development several times over. In addition, some dialogue management tasks cannot be implemented at all without formal rules. Classification and machine learning systems can speed up the creation of chatbots by orders of magnitude by analyzing, say, a huge number of log entries in a short time. Integrating all of this into a single system makes it possible to combine different methods within one project, depending on its goals.

Visual tools for building skills help speed up their creation, simplify debugging and visualize the flow of users' communication with the system. Emotion analysis, rich and deep analytics, special filters (for example, for profanity), language support, context storage, the accuracy of the neural network algorithms used, as well as performance, scalability and stability are all important features of dialogue platforms, though not always obvious from the outside.

And despite the large number of companies creating chatbots, only a few have fully functional NLU systems, and not all existing systems are equally suitable for different tasks and languages. There are well-known ones such as Lex from Amazon, Microsoft Bot Framework, IBM Watson and Wit.ai from Facebook, but not all of them support Russian, and some have insufficiently effective algorithms for the Russian language.

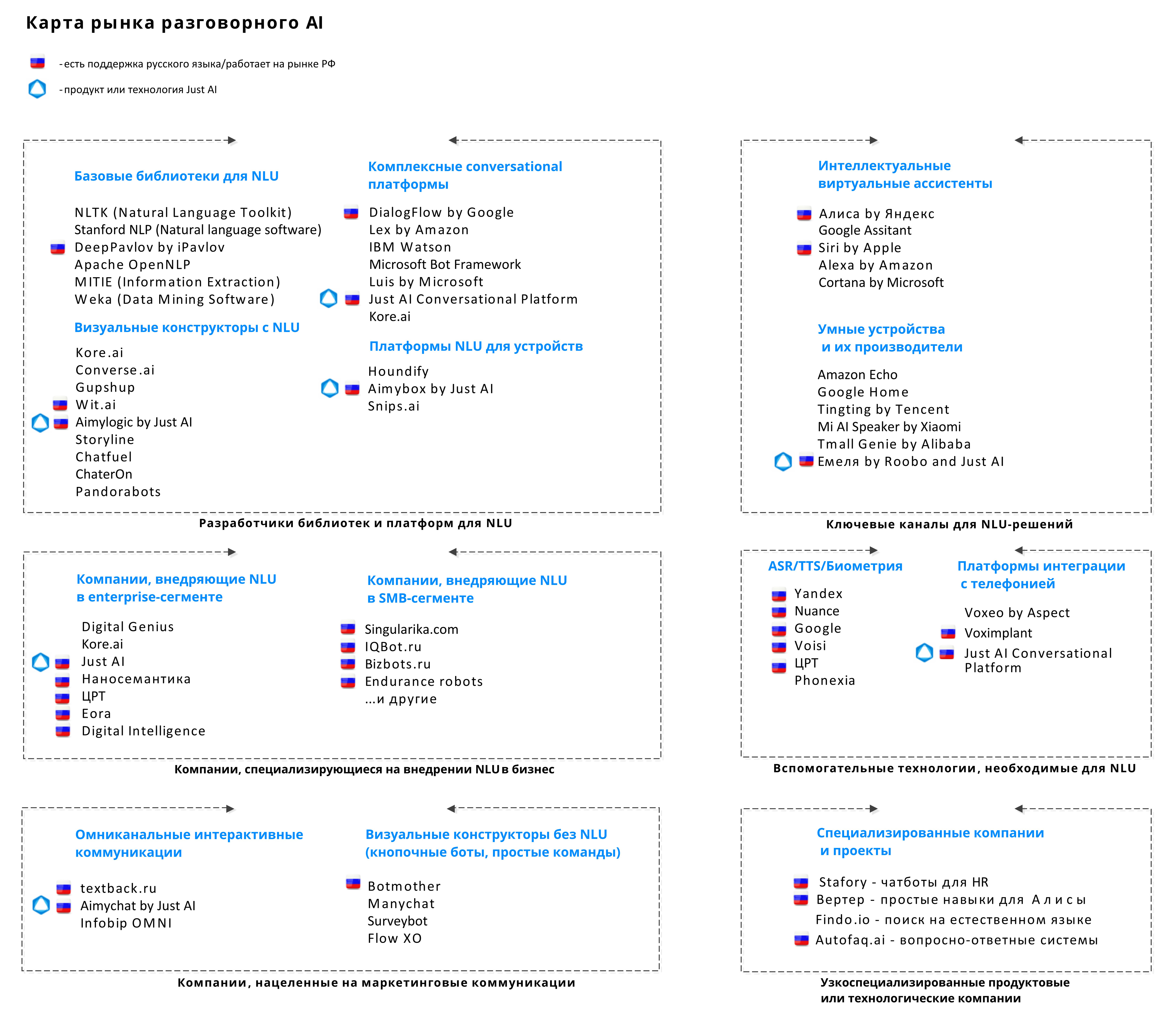

Who is involved in the creation of NLU technologies in Russia and on the international market?

Now it is interesting to see who works on NLU technologies in the international and Russian markets. The diagram below includes the main developers and solutions, including key players of the international market that do not work with the Russian language but are significant enough to be worth mentioning. Shown separately are Russian or international companies whose products are adapted to the Russian market and support the Russian language at the platform level.

Some companies focus on channels and user access interfaces; in terms of the value chain they are closest to the consumer and usually have their own assistants or devices. In Russia, the most significant channel is Yandex with its assistant “Alice”. There is a whole group of companies that create conversational solutions for end customers, i.e. providers of content, goods or services: they develop those very skills for assistants. Among them are specialized companies, as well as integrators and developers who create such solutions alongside other projects. All of them use one dialogue platform or another, or solutions for related technologies (speech synthesis and recognition), created either by specialized software development teams or by global corporations (Microsoft, IBM, Amazon). And of course, there are individual players focusing on specific, narrow niches, for example chatbots for HR, as well as companies collecting statistics and consulting companies in this area. The market is currently growing quite fast, and more and more players appear on it every month.

We try to track the emergence of new participants and technologies in this area and plan to regularly update this market map with new participants and categories. In addition, we will prepare an overview of conversational platforms and describe our own case studies of creating conversational skills. Of particular interest is a comparison of various algorithms for determining semantic proximity as applied to different subject areas, and of technologies for training conversational systems. We want to devote the Just AI team blog on Habr to all of this.

Source: https://habr.com/ru/post/364149/
