Moving is worse than a fire: how to transfer 3 TB of data from Dropbox to Google Drive and survive

It's hard to imagine what would happen to our company if we lost all our files, for example in a fire. At the very least, it is rather difficult to publish board games without their layouts, not to mention the tons of other materials the company needs in order to work.

That is why, five years ago, when we founded the “Gang of clever men” and were doing a test run, we already kept all our data in Dropbox, since back then it fit within the free tier. Since then the team has grown from 2 to 35 people, and this year we realized it was time to say goodbye to Dropbox and move to Google Drive. The decision led to a series of adventures we had not planned at all.

There can be many reasons to move from one cloud service to another: from the risk of being blocked (hello, Roskomnadzor) to the need for some fundamentally new functionality. In our case the reason was simple: we sat down, did the math, and realized that instead of 12 accounts with unlimited storage we could have 35 accounts (that is, one for everyone on the team) for exactly the same money.

But it's no accident that “no sooner said than done” happens only in fairy tales. It's not that we expected the whole transfer to happen with a snap of the fingers, but before we started we did imagine the move would be much easier. We were wrong.

Here are six important lessons we learned while changing cloud providers.

1. Bring order to the usual chaos

When 35 people work with the same data, unnecessary files inevitably appear: duplicates, or files that are no longer relevant at all. Over time they multiply and spread in a fairly even layer across the whole folder structure of the cloud drive.

It's not so bad if they have been thoughtfully placed in a folder called “Layouts 2011 archive”: then it is easier to tell what is needed and what is not. It is worse when the folder is “New folder (2)” and inside it is a file named “aaaaaaaaaaaaysyyv.psd”. Judging on your own how important a particular file is, is almost impossible: each time you have to find out who uses the document or folder and how important it is to keep and transfer. On top of that, even useful and relevant documents sometimes ended up in unexpected and non-obvious places.
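
The team sorted this out by hand, area by area. The article does not say they used a script. As a rough aid for that kind of review, here is a minimal Python sketch that finds byte-identical duplicates and flags names like “New folder (2)” or keyboard-mash file names. The naming heuristics are assumptions; tune them to your own team's habits.

```python
from __future__ import annotations

import hashlib
import re
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file's contents, read in 1 MiB chunks so large PSDs don't fill RAM."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(root: Path) -> list[list[Path]]:
    """Return groups (2+ files) under root whose contents are identical."""
    by_size: dict[int, list[Path]] = defaultdict(list)
    for p in root.rglob("*"):
        if p.is_file():
            by_size[p.stat().st_size].append(p)

    groups: list[list[Path]] = []
    for same_size in by_size.values():
        if len(same_size) < 2:
            continue  # a file with a unique size cannot have a duplicate
        by_hash: dict[str, list[Path]] = defaultdict(list)
        for p in same_size:
            by_hash[file_digest(p)].append(p)
        groups.extend(sorted(g) for g in by_hash.values() if len(g) > 1)
    return sorted(groups)


# Hypothetical heuristics for "meaningless" names.
DEFAULT_FOLDER = re.compile(r"new folder( \(\d+\))?")
KEYBOARD_MASH = re.compile(r"(.)\1{5,}")  # six or more identical characters in a row


def looks_unnamed(name: str) -> bool:
    stem = name.rsplit(".", 1)[0].lower()
    return bool(DEFAULT_FOLDER.fullmatch(stem) or KEYBOARD_MASH.search(stem))


def suspicious_paths(root: Path) -> list[Path]:
    """Files and folders under root whose names say nothing about their contents."""
    return sorted(p for p in root.rglob("*") if looks_unnamed(p.name))
```

Files are grouped by size first, so only files that could be duplicates get hashed. That matters when the folders hold multi-gigabyte layouts. The output is a list to review, not something to delete automatically: deciding what is still needed remains a human job.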

So we asked the team to “comb through” the existing data structure. Each person took their own area and organized it from general to specific, so that even someone who had never seen a given document could figure out where to find it, for example: Actual general information \ Logo and corporate style \ Logo ( and ENG).

After this there were far fewer unclear files and folders: they were either deleted or moved into the common structure. The library as a whole was restructured last, once each subsection was in order.

Keep in mind that tidying up is not quick. First we assigned areas of responsibility, then we checked the logic of the new structure, adjusted parts of it, and dealt with the “orphan” files. All of this happened alongside our regular work and took about three weeks.

As a result, we ended up with a far more transparent and logical folder structure and cut the total volume of our files by a quarter.

But the adventure was only beginning.

2. There is no automatic migration

With order restored, we had to transfer 3 terabytes of data from Dropbox to Google Drive. Our first thought was: “Surely there's some service that does this automatically, so you can press a button on Friday evening and go sunbathing.”

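At first we were very excited about services that migrate data from one cloud drive to another. But once we realized we were dealing with a complex structure of personal and team folders, used by 35 people with different access levels, the fun began. We ended up with a three-dimensional matrix:

- The list of users who work with the data;

- The access structure: which folders each user can access;

- The access level for each folder: viewing, commenting, editing, transferring rights, etc.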

In the end we concluded that none of the services we looked at could transfer 3 TB of data while preserving both the folder structure and the access levels. On top of that, the estimated migration time with such tools was several months, which was completely unacceptable for us.
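
To make the three dimensions concrete, here is a minimal Python sketch. It models the matrix as a mapping from (user, folder) to an access level and compares two snapshots, for example to see which permissions a migration lost and still have to be set by hand. This is an illustration, not something the team describes using. Treating the access levels as a strict ranking is a simplification, and any user and folder names are hypothetical.

```python
from __future__ import annotations

from enum import IntEnum
from typing import Dict, Tuple


class Access(IntEnum):
    """Access levels from the article, ordered so that a higher value means more rights."""
    VIEW = 1
    COMMENT = 2
    EDIT = 3
    OWNER = 4  # "transferring rights"


# One cell of the users x folders x access-level matrix: (user, folder) -> level.
Matrix = Dict[Tuple[str, str], Access]


def diff_permissions(before: Matrix, after: Matrix) -> dict[str, list[tuple[str, str]]]:
    """Compare two snapshots of the matrix, e.g. Dropbox before and Google Drive after."""
    report: dict[str, list[tuple[str, str]]] = {
        "missing": [],     # access that was lost entirely
        "downgraded": [],  # access that now allows less than before
        "upgraded": [],    # access that now allows more than before
        "extra": [],       # access that did not exist before
    }
    for key, level in before.items():
        if key not in after:
            report["missing"].append(key)
        elif after[key] < level:
            report["downgraded"].append(key)
        elif after[key] > level:
            report["upgraded"].append(key)
    report["extra"] = [key for key in after if key not in before]
    for keys in report.values():
        keys.sort()
    return report
```

Both snapshots have the same shape, so the diff can double as a checklist for the manual access setup after the transfer.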

3. Data transfer: through thorns to the stars

We rent storage in a data center, and it runs Windows Server 2012. It turned out that, unlike Dropbox, Google Drive simply doesn't support that operating system. So our “ingenious” plan to run the copy directly on the server was technically impossible with the software available.

So we asked the data center: “Guys, can you deploy a Windows 10 image on your server hardware?” Our partners were surprised; apparently this was not a common request. It took them a while to bring up Windows 10 in our resource pool. Once that was done, we installed two applications on it, Dropbox and Google Drive. We signed both in as one employee, whom we had made the owner of all the folders. Now the copying could begin.

The problem was that the data could not be transferred in one go (say, overnight): the process took at least several weeks. Suppose we had simply started the transfer, waited for it to finish, and relaxed. The new storage would then have been full of outdated information, because people kept working with the folders while they were being copied, editing existing files and creating new ones.
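
A back-of-the-envelope calculation shows why “overnight” was never realistic. This minimal Python sketch assumes decimal units and a steady link. The article doesn't state the data center's bandwidth, so the speeds and efficiency factor below are hypothetical. The optional daily cap models a per-account upload quota: Google documents a daily upload limit for Drive (750 GB per user at the time of writing), so check the current figure for your plan.

```python
from typing import Optional

SECONDS_PER_DAY = 86_400


def transfer_days(volume_tb: float, link_mbit_s: float, efficiency: float = 1.0,
                  daily_cap_gb: Optional[float] = None) -> float:
    """Days needed to move volume_tb terabytes (decimal units) over a link.

    efficiency: fraction of the nominal link speed actually achieved (0 < e <= 1).
    daily_cap_gb: optional per-day upload quota; whichever limit is slower wins.
    """
    if link_mbit_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    bits = volume_tb * 1e12 * 8
    days_by_link = bits / (link_mbit_s * 1e6 * efficiency) / SECONDS_PER_DAY
    if daily_cap_gb is None:
        return days_by_link
    days_by_cap = volume_tb * 1000 / daily_cap_gb
    return max(days_by_link, days_by_cap)
```

For example, `transfer_days(3, 100)` comes to about 2.8 days of uninterrupted transfer at a full 100 Mbit/s. At one fifth of that effective speed (`efficiency=0.2`) it is about two weeks. A 750 GB daily cap on its own puts the floor at four days.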

So on the first run we copied everything as it stood on the day copying began. After that we used the xStarter program to upload, every day, only the files that had been created or changed. The program spots new files and detects changes in the properties of previously uploaded ones, as if signalling: “File created: upload. File changed: re-upload.” Over time the daily uploads got smaller. Before the weekend we switched the whole Dropbox to read-only so no one could make changes, and started the final transfer. The final sync was 20 GB, and the whole procedure took a few hours. Compare that with 3 TB at the start.
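
The article doesn't show how xStarter was set up. Here is a minimal Python sketch of the same idea, not xStarter's own mechanism. After each run, it remembers every file's size and modification time. On the next run, it copies only files that are new or whose size or timestamp has changed. `src` and `dst` stand for hypothetical local sync folders of the two clients.

```python
from __future__ import annotations

import shutil
from pathlib import Path


def take_snapshot(root: Path) -> dict[str, tuple[int, int]]:
    """Map each file's relative path to (size in bytes, mtime in nanoseconds)."""
    snap: dict[str, tuple[int, int]] = {}
    for p in root.rglob("*"):
        if p.is_file():
            st = p.stat()
            snap[p.relative_to(root).as_posix()] = (st.st_size, st.st_mtime_ns)
    return snap


def plan_changes(previous: dict, current: dict) -> tuple[list[str], list[str]]:
    """Split files into 'created: upload' and 'changed: re-upload'."""
    created = sorted(rel for rel in current if rel not in previous)
    changed = sorted(rel for rel in current
                     if rel in previous and current[rel] != previous[rel])
    return created, changed


def sync_once(src: Path, dst: Path, previous: dict):
    """Copy new and changed files from src to dst; return the new snapshot and what was copied."""
    current = take_snapshot(src)
    created, changed = plan_changes(previous, current)
    for rel in created + changed:
        target = dst / rel
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src / rel, target)  # copy2 also preserves timestamps
    return current, created, changed
```

This sketch deliberately does not propagate deletions; a real job would also have to decide what to do with files removed from the source. Comparing size and mtime is cheap but can miss an edit that leaves both unchanged. That is one more reason for a final pass with the source frozen read-only, as the team did.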

Once all the data had been transferred, we reconfigured employee access to the various folders and finalized the structure. On Monday morning we had fully up-to-date, structured data on Google Drive.

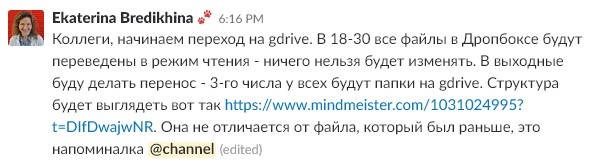

4. Briefing is everything

To help everyone get started quickly with the data in the new setup, we wrote and published detailed instructions.

Here are their main parts:

• The new folder structure, drawn as a map in Mindmeister. To find out what was stored where, there was no need to dig through every folder: a glance at the map was enough. The new structure itself was also as logical as we could make it. A simplified example looked like this:

1. Flight into space

1.1. Building rocket

1.1.1. Rocket archive

1.1.2. Current rocket scheme

1.2. Documentation archive

1.3. Actual launch schedule

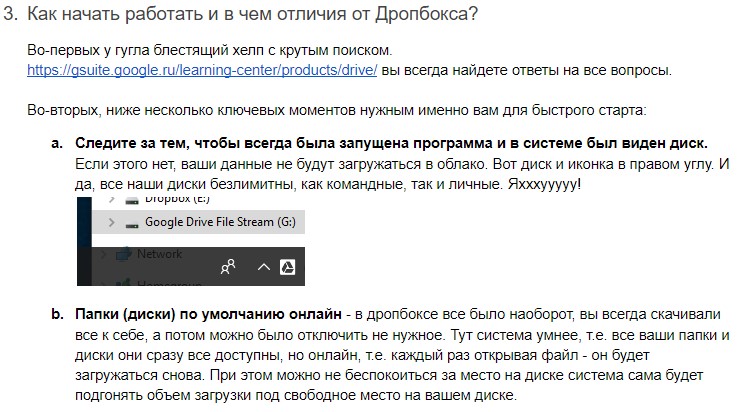

• Instructions for installing and using Google Drive.

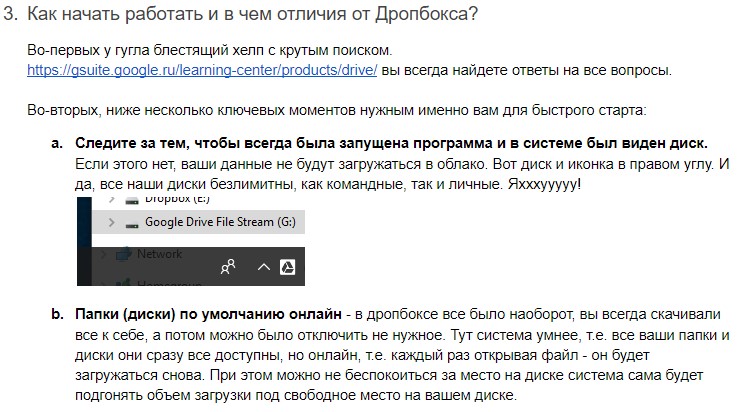

• The main differences between how Google Drive and Dropbox work, so that they wouldn't come as a surprise later.

• Rules for working with personal and shared folders.
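
The numbered outline in the first part of the instructions can also be generated from the folders themselves, which keeps the map from drifting away from the actual drive. The team drew their map in Mindmeister. This Python sketch, with hypothetical helper names, only reproduces the numbering scheme from the example above.

```python
from __future__ import annotations

from pathlib import Path


def outline(tree: list, prefix: str = "") -> list[str]:
    """Number a tree of (name, children) pairs the way the instructions do: 1., 1.1., 1.1.1."""
    lines: list[str] = []
    for i, (name, children) in enumerate(tree, start=1):
        number = f"{prefix}{i}."
        lines.append(f"{number} {name}")
        lines.extend(outline(children, number))
    return lines


def tree_from_dir(root: Path) -> list:
    """Build the same (name, children) structure from real folders, sorted by name."""
    return [(p.name, tree_from_dir(p)) for p in sorted(root.iterdir()) if p.is_dir()]


# The article's example structure, written out by hand.
SPACE = [
    ("Flight into space", [
        ("Building rocket", [("Rocket archive", []), ("Current rocket scheme", [])]),
        ("Documentation archive", []),
        ("Actual launch schedule", []),
    ]),
]

if __name__ == "__main__":
    print("\n".join(outline(SPACE)))
```

Pointing `tree_from_dir` at the root of a synced drive gives the same numbering for the real structure. Only folders are listed, as on the map.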

The instructions answered about 90% of the questions that came up and saved a lot of time, both for the people who would have had those questions and for the people who would have had to answer them.

So, happy ending? Not so fast!

5. If you don't control the data flow, you get a crush

On the very first day of working in Google Drive, it turned out we hadn't anticipated one thing: people needed some of the data offline, heavy layouts for example, rather than as links to files in the cloud. When the designers all started downloading their sources and layouts at the same time, our internet connection choked. Badly. Some of the company's processes simply stopped; even 1C stopped working. Looking back, here is what we should have done in advance:

A. Agree on a gentle download schedule with the team. The main rule: don't download everything at once. First only the files you are working on right now, then the next ones by priority, and so on. If possible, do it at night or on weekends.

B. Ask your sysadmin in advance to set up quotas on the network equipment so that everyone gets their own guaranteed bandwidth. This rules out a situation where three designers grab 90 Mbit/s of a 100 Mbit/s channel and the remaining 32 people have to share 10 Mbit/s.

C. Ask the same sysadmin to set up a schedule for downloads. For example, at night everyone can download from the drive without limits, and during the day only within their allotted bandwidth.

D. Configure traffic prioritization. For example, give VoIP and RDP connections the highest priority so that Skype and remote servers always work. An important detail: not all network equipment supports this. We chose MikroTik equipment for the job and set everything up without any problems.
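
Points B and D are configured on the router itself. The team used MikroTik, but the article doesn't show its configuration, so none is reproduced here. To illustrate what guaranteed shares buy you, here is a minimal Python sketch of max-min fair sharing ("water-filling"), a standard way to divide a link when total demand exceeds capacity. It is not how any particular router implements queuing. The demand figures are made up to match the 100 Mbit/s example in point B.

```python
from __future__ import annotations


def max_min_fair(capacity: float, demands: dict[str, float]) -> dict[str, float]:
    """Split capacity so that no one gets more than they ask for and whatever is
    left over is shared equally among those who still want more (water-filling)."""
    alloc = {user: 0.0 for user in demands}
    remaining = dict(demands)  # users whose demand is not yet met
    left = capacity
    while remaining and left > 0:
        share = left / len(remaining)
        satisfied = {u: d for u, d in remaining.items() if d <= share}
        if not satisfied:
            # Everyone left wants more than an equal share: give each exactly that.
            for user in remaining:
                alloc[user] = share
            break
        for user, demand in satisfied.items():
            alloc[user] = demand
            left -= demand
            del remaining[user]
    return alloc
```

Suppose 3 designers ask for 30 Mbit/s each and 32 colleagues need 2 Mbit/s each. `max_min_fair(100, demands)` then gives every colleague their full 2 Mbit/s and the designers 12 Mbit/s apiece. Without fair sharing, 32 people would squeeze into 10 Mbit/s, about 0.3 each.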

6. The inscrutable ways of Google Drive

In the first days another unpleasant quirk surfaced, and since discovering it we always double-check any software before rolling it out. When we enabled offline access to a folder or file in the Google Drive app, the entire cache was saved... only on drive C. The settings offered no choice of where to store the cache.

That doesn't matter for the 10 text documents a copywriter works on. Problems begin when you need offline access to layouts and video sources, each of which can weigh gigabytes. As a result, all of the designers' cached files landed on the small system SSD. With only 250 GB of capacity, it filled up quickly, while the 4 TB drive D sat unused.

Later it turned out that Google Drive had only recently added the ability to move the cache for offline files from drive C to drive D. Only an administrator can make this change, by editing a special value in the registry editor; an individual user still cannot.
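
The article doesn't name the exact registry setting. For Google Drive for desktop (formerly Drive File Stream), Google's admin documentation describes a `ContentCachePath` value under `HKEY_LOCAL_MACHINE\Software\Google\DriveFS`. Assuming that is the setting meant here, this Python sketch generates a .reg file that an administrator could review and import. Verify the key and value name against the documentation for your client version. The `D:\DriveFSCache` folder is a placeholder.

```python
from pathlib import Path

# Assumed location of Drive for desktop machine-wide settings (see lead-in).
DRIVEFS_KEY = r"HKEY_LOCAL_MACHINE\SOFTWARE\Google\DriveFS"


def drive_cache_reg(cache_dir: str) -> str:
    """Return the text of a .reg file that sets ContentCachePath to cache_dir."""
    # In .reg files, backslashes inside string values must be doubled.
    escaped = cache_dir.replace("\\", "\\\\")
    return (
        "Windows Registry Editor Version 5.00\r\n"
        "\r\n"
        f"[{DRIVEFS_KEY}]\r\n"
        f'"ContentCachePath"="{escaped}"\r\n'
    )


def write_reg(path: Path, content: str) -> None:
    """Save as UTF-16 LE with a BOM, the encoding regedit itself uses for exports."""
    path.write_bytes(b"\xff\xfe" + content.encode("utf-16-le"))
```

An administrator would generate the file with `drive_cache_reg(r"D:\DriveFSCache")`, review it, import it with regedit, and restart the Drive client. Because the key lives under HKEY_LOCAL_MACHINE, the setting applies to the whole machine, consistent with the article's point that an individual user cannot change it.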

The conclusion is simple: before deciding to roll out new software, test it carefully and repeatedly in every work scenario.

Three short takeaways

1. Choosing the right cloud is vital.

Weigh the pros and cons carefully: study the pricing, the software and the functionality. If you choose wrong and end up disappointed, you may be stuck with another move. And that, to put it mildly, is a non-trivial procedure that can freeze all work and cost a lot.

2. Always keep your files in order, and have a plan B just in case.

The need to move can arise unexpectedly: Roskomnadzor might block your provider, or the pricing might change; the possibilities are endless. Besides, a logical file structure is simply easier and more pleasant to work with.

3. Moving is not just about technology, it's also about the team.

All the work of transferring files and restoring order can end in complete failure if you don't keep the team informed and take a “they'll sort it out themselves” approach. Maybe they will, but without clear and considerate instructions the process will be much more laborious and exhausting.

Source: https://habr.com/ru/post/362097/