Yandex Blitz: machine learning

Not so long ago, we held Yandex. Blitz - a competition in algorithmic programming. The competition was successful: more than three hundred participants made it to the final, two of whom managed to solve all the proposed problems! Twenty finalists came to the Yandex office, met with the leaders of various services and learned more about the device of modern search engines.

However, in Yandex, developers solve a variety of tasks: from the development of high-loaded data processing systems to the construction of complex relevance models and mixing of search sources. Therefore, it seemed to us quite logical to continue the competition cycle from Yandex by the machine learning and data analysis competition.

Just like last time , we tell in advance on Habré what tasks can be met in the contest, and how they could be solved, so that potential participants have an idea of what awaits them.

The ML-blitz qualification will be held from June 11 to June 17, and the final will take place on June 23. The results of the competition will be announced on June 25th. To participate you must register in time!

Task # 1. Clustering

Clustering is a fairly common method of data processing in Yandex. For example, Yandex.News uses clustering methods to collect news messages into stories, and Search clusters user queries to understand which tasks users are solving and which ones are more frequent.

Often the clustering task is formulated in the following terms: given a set of objects , for some pairs of objects, the value of the proximity metric is known: . It is necessary to construct a partition of the set on a cluster, that is, find such non-intersecting subsets that their union coincides with and it is in some sense optimal.

In the first task, we suggest you solve the clustering problem for a fairly simple case. There is a set of points at -dimensional Euclidean space. It is known that this set of points can be divided into two subsets, and such that the distance between any two points from different subsets exceeds a certain amount . It is also known that a partition with the specified property is guaranteed to exist and is the only possible one. It is required to find it.

Decision

We construct the following clustering algorithm. Initially all points are separate clusters. Then if there are two clusters and such that there is at least one pair of points of them with a distance less than , these two clusters are combined into one:

This operation is repeated as long as there is at least one pair of clusters with the specified property. It is clear that after the end of the algorithm, only two clusters will remain - otherwise it would be possible to split the set of points in more than one way while observing the conditions of the problem.

Such an algorithm can be implemented using the data structures of a forest of non-intersecting sets and a KD-tree , or, for example, a VP-tree . At the first stage, we will construct a search tree (KD- or VP-tree) from all points of the original set; besides, we initialize a forest of disjoint sets of size . Then for each point of let's implement the following algorithm:

- Using the search tree, we define all points lying at a distance of no more than from .

- For each point found carry out the operation in a forest of non-intersecting sets for a pair of indices corresponding to objects and .

At the end of this algorithm, the forest of disjoint sets will contain a partition of the original set into two clusters.

Some ready-made implementations of clustering algorithms are also suitable for solving the problem. For example, the DBSCAN algorithm from the scikit-learn library for python is suitable.

It is worth noting that in solving this problem one can do without a forest of non-intersecting sets. You can use, for example, traversing an implicit graph in depth: at each step, determine the vertices adjacent to the current one, and recursively start a traversal from them.

Task # 2. Identify extra objects

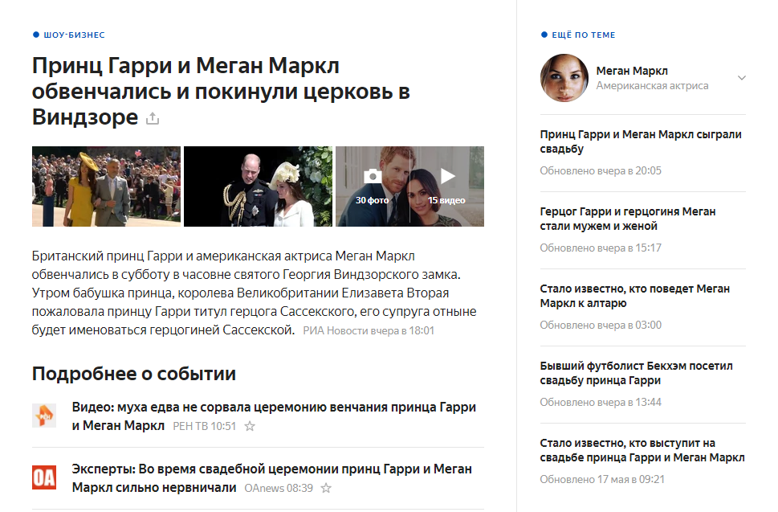

Yandex.News is a service that aggregates hundreds of thousands of news texts per day, processes them, including extracting named entities. It so happens that an irrelevant message gets into the plot, or in the text of a particular message by mistake it turns out to be a fragment unrelated to its main theme. In this case, an erroneous named entity (for example, a person) may become attached to the plot, and the user will perceive this as an error. When properly triggered, we can add to the story of the wedding of Prince Harry and Megan Markle, for example, a link with information about the bride:

In this task, you need to determine which of the objects is superfluous. We took one hundred thousand news messages, retrieved all the named entities from them, and renumbered them, building a set of numbers . Thus, each text is a certain set of numbers. If a - the set of all texts, then:

Then we added one random object to each text. It is clear that a random object with a high probability has nothing to do with the text of the news. Your task is to determine for each news item which object is superfluous.

Decision

This is an example of a task in which it is impossible to give an exact answer. In it, it is important to get as large a value as possible of a certain quality metric - in this case, the proportion of correctly guessed extra objects. Since the contest is limited in time, it is reasonable to start with a simple method that is easy to implement and at the same time guaranteeing a certain result.

In this case, you can use, for example, the following simple heuristics. If two objects are in some sense semantically related (for example, objects and are definitely related), they will sometimes be found in the same texts. On the other hand, there are extremely many objects, so a randomly selected couple is unlikely to meet together often.

Therefore, we calculate, for example, the function of the conditional probability of occurrence of objects:

In this way, - this is the probability to find an object in the text. provided that the object is found in the text . For practical purposes, this function should be smoothed - for example, to ensure that it never accepts values smaller than a certain small number, for example, .

If we use the naive assumption of independence of conditional probabilities, then using the Bayes formula, it is possible to determine for each object the probability of its occurrence, provided that all other objects are present in the text:

It follows that as an “extra” object one can take

Thus, you can get a simple solution that with some quality will cope with the task.

You can then build a more complex solution using real machine learning. Factors will be, for example, estimates akin to that obtained above. In order to formulate a binary classification problem, it will be necessary to choose “positive” and “negative” pairs of texts and objects. As positive, you can use pairs that are actually observed in the data, and as negative, you can use pairs in which an object is selected at random from the set of all objects . On such a sample can be trained, for example, CatBoost .

To predict an extra object for some text, now we need to apply the constructed model to all objects from this text. The object that will receive the smallest prediction of the classifier, and we will be considered randomly added.

In the competition you will be able to meet the tasks of both types: both those in which you need to get a concrete exact answer, and those in which it is a priori impossible and you need to achieve the best possible value of a certain quality metric.

Yandex. Blitz on machine learning will be held on the platform Yandex. Contest - in the same place where the last Yandex Blitz took place. To participate in the competition you need to register . We will definitely send you a reminder about the start of the rounds.

')

Source: https://habr.com/ru/post/359435/

All Articles