AI, practical course. Comparison of deep learning software

At some point in your AI project, you will have to decide which machine learning framework to use. For some tasks, traditional machine learning algorithms are enough. However, if you work with large amounts of text, image, video, or speech data, deep learning is the recommended approach.

So which deep learning environment to choose? This article is devoted to a comparative analysis of existing deep learning environments.

Deep learning environments simplify the development and training of deep learning models by providing the simplest high-level elements for complex and unreliable mathematical transformations, such as gradient descent, backward propagation, and inference.

Choosing the right environment is not easy, because this area is still poorly developed, and at the moment there is no absolutely winning option. Also, the choice of environment may depend on your goals, resources and team.

')

We will focus on environments that have versions optimized by Intel and that can work efficiently on new CPUs (for example, Intel Xeon Phi processors) optimized for matrix multiplication.

We evaluated media in two stages.

Every Wednesday we evaluated based on community activity and participants' ratings.

Github

Stack overflow

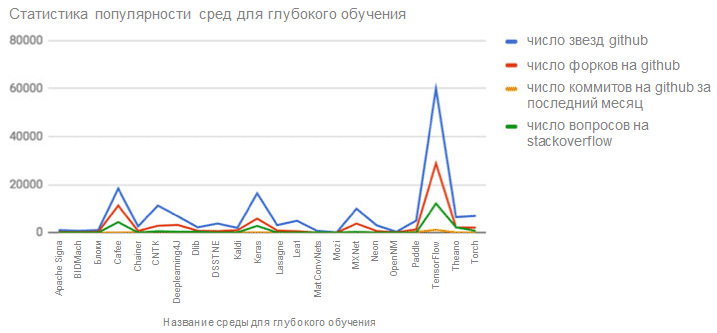

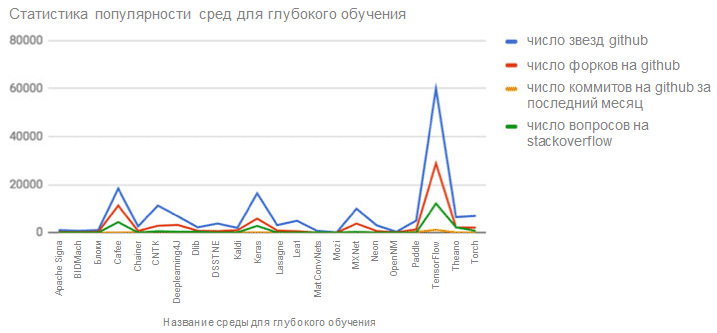

According to the results of our analysis of popular media, we have compiled the following table for evaluating each medium by popularity and activity.

The overall popularity and activity of different environments

According to these data, the most popular environments are TensorFlow, Caffe, Keras, Microsoft Cognitive Toolkit (formerly known as CNTK), MXNet, Torch, Deeplearning4j (DL4J) and Theano. The popularity of the neon environment is also growing. We will cover these environments in the next section.

In-depth learning environments vary in their level of functionality. Some of them, such as Theano and TensorFlow, allow you to define neural networks of arbitrary level of complexity using the most basic structural elements. Environments of this type can even be called languages. Other environments, such as Keras, are engines or shells designed to increase developer productivity, but are limited in functionality due to a higher level of abstraction.

When choosing a deep learning environment, you must first choose a low-level environment. A high-level shell may be a good addition, but it is not required. As ecosystems mature, more and more low-level environments will be complemented by higher-level satellites.

Caffe is a deep learning environment that is designed with expression, speed, and modularity in mind. It was created by Berkeley AI Research (BAIR) with the participation of community members. Yangqing Jia created a project during his doctoral thesis at the University of California at Berkeley. The Caffe Library is licensed under article 2 on the distribution of Berkeley University software.

Important features:

Microsoft Cognitive Toolkit (formerly called CNTK) is a unified set of tools for deep learning that presents neural networks as a series of computational actions through a directed graph. In the graph, leaves represent input values or network parameters, and other nodes represent matrix operations in response to input values. The toolkit makes it easy to implement and combine popular types of models, for example, pre-emptive deep neural networks, convolutional neural networks, recurrent neural networks (RNN) and networks with long short-term memory (LSTM). It implements learning on the principle of stochastic gradient descent (SGD, backward error propagation) with automatic differentiation and parallelization across several GPUs and servers. The library has been licensed for open source software since April 2015.

Important features.

Keras is a high-level neural network API written in Python. This library can be used in addition to TensorFlow or Theano. The Keras library is mainly intended to speed up experiments. An important condition for successful research is the possibility of transition from the idea to the result with the minimum possible delay.

Important features.

Deeplearning4j (DL4J) is the first distributed open source deep learning library for commercial use written for Java * and Scala *. The DL4J library is integrated with Hadoop * and Apache Spark * and is intended for use in corporate environments on distributed graphics processors (GPUs) and CPUs. DL4J is a state-of-the-art, ready-to-use library that is more standard-oriented than customizable and provides rapid prototyping for non-professional researchers. DL4J can be customized when scaled. The library is available under the Apache 2.0 license, and all derived products from DL4J belong to their creators. DL4J allows you to import neural network models from most of the core environments through Keras, including TensorFlow, Caffe, Torch and Theano, closing the gap between the Python ecosystem and the Java Virtual Machine (JVM) using a common set of tools designed for analysts, data engineers and developers. . As the DL4J application programming interface in Python, Keras is used.

Important features.

MXNet is a simple, versatile and ultra- scalable environment for deep learning. The environment supports modern models of deep learning, including convolutional neural networks and LSTM. The library originates in the scientific community and is the product of collaborative and individual work of researchers from several leading universities. The library, actively supported by Amazon, was developed with a special focus on machine vision, processing and understanding of speech and language, generating models, convolutional and recurrent neural networks. MXNet allows you to define, train and deploy networks in a wide variety of conditions: from powerful cloud infrastructures to mobile and connected devices. The library provides a universal environment with support for many common languages, making it possible to use both imperative and symbolic software constructs. The MXNet library also takes up little space. Because of this, it can be scaled efficiently on multiple graphics processors and machines, which is well suited for learning on large data sets in a cloud environment.

Important features.

With an open source Python-based language and a set of libraries for developing deep learning models, neon is a fast, powerful, and easy-to-use tool.

Important features.

TensorFlow is an open source software library for numerical calculations using data flow graphs. The nodes in the graph represent mathematical operations, and the branches of the graph represent multidimensional data arrays (tensors) that they exchange. Thanks to the universal computing architecture, you can deploy on one or more CPUs or graphics processors on a desktop computer, server, or mobile device with a single API. TensorFlow was originally developed by researchers and engineers who worked for the Google Brain team at Google’s Machine Intelligence unit for machine learning and neural networks, but because of its general nature, the system can also be used in other areas.

Theano is a Python library designed to define, optimize, and evaluate mathematical expressions, especially expressions with multidimensional arrays (numpy.ndarray). Theano allows you to achieve speeds of working with large amounts of data that can compete only with specially made programs in the SI language. Theano also surpasses the SI on the CPU many times due to the use of the latest versions of graphics processors. Theano combines aspects of the computer algebra system (SKA) with aspects of an optimizing compiler. With Theano, you can also create custom SI code for many mathematical operations. This combination of SKA with optimizing compilation is especially well suited for problems in which complex mathematical expressions are evaluated many times, therefore the speed of evaluation is important for them. In situations where many different expressions are evaluated only once, Theano helps reduce unproductive compilations and analyzes, while still providing symbolic functions, such as automatic differentiation.

Important features.

Torch is a scientific computing environment with broad support for machine learning algorithms, with an emphasis on graphics processors (GPUs). It is notable for its ease of use and speed due to the simple and fast scripting language, LuaJIT and the basic C / CUDA program.

Important features.

Most environments have common features: speed, portability, community and ecosystem, ease of development, compatibility, and scalability.

The inputs for the search process in the environment for our project to create an automatic video editing application must meet the following requirements.

Developer productivity is of great importance, especially at the stage of prototyping, so you need to choose an environment that matches the skills and knowledge of your team members. For this reason, we will exclude all environments based on alternative languages.

Most projects, including our training project, use only some existing neural network algorithm, such as AlexNet or LSTM. In our case, any deep learning environment should be suitable. However, if you are working on a research project (for example, developing a new algorithm, testing a new hypothesis or optimizing a library), high-level systems, such as Keras, will not work for you. Low-level environments are likely to require you to write a new algorithm in C ++.

Our list of (deep learning environments) remains the same.

Our training project involves the solution of two traditional problems of deep learning:

All environments support convolutional neural networks (used mainly for image processing) and recurrent neural networks (used for sequence modeling).

Our list remains the same:

Since we do not have a large data set (for example, 6000 annotated images) to train the model of our project, we must use previously trained models.

All selected environments support model parks. In addition, there are converters that allow the use of models that have been trained using another library. The Caffe library was the first to enter the model park and has the widest selection of models. There are tools for converting Caffe park models to virtually any other model:

All of these environments also have their own model parks.

Our list remains the same:

The model can be defined in two ways: using the configuration file (for example, using Caffe) or using scripts (in other environments). Configuration files are convenient from the point of view of model portability, but they are difficult to use when creating a complex neural network architecture (for example, try manually copying layers in ResNet-101). On the other hand, using scripts you can create complex neural networks with minimal code repetition, but the possibility of transferring such code to another environment will be questionable. Usually it is preferable to use scripts, because transitions from one environment to another within one project rarely happen.

Now our list will change as follows:

The Intel Math Kernel Library (Intel MKL) for vector and matrix multiplication has been refined for deep learning and now includes the integrated multi-core architecture of Intel Many Integrated Core Architecture. This is a processor architecture with extensive parallelization, which significantly speeds up the process of deep learning on the CPU. All current competing environments have already integrated Intel MKL and offer versions optimized by Intel.

It is also important to know whether distributed learning is supported by multiple CPUs. Based on these criteria, our modified list will be as follows:

TensorFlow has a dedicated tool called TensorFlow Serving. It takes a trained TensorFlow model as input and turns it into a web service that can score incoming requests. If you plan to use a trained model on a mobile device, TensorFlow Mobile supports model compression out of the box.

Read more about TensorFlow Serving

TensorFlow Mobile

MXNet supports deployment through amalgamation, in which the model and all the required bindings are packed into a single standalone file. That file can then be moved to another machine and called from other programming languages. For example, it can be used on a mobile device. More details.

The Microsoft Cognitive Toolkit (formerly CNTK) supports model deployment through the Azure machine learning cloud. Mobile devices are not supported yet; support is expected in the next version. More details.

Although we do not plan to deploy the finished application on a mobile device, this capability can be very useful in some situations. For example, imagine a video editing application that creates videos from photos you have just taken (Google Photos already offers this feature).

That leaves two contenders: TensorFlow and MXNet. In the end, the project team decided to use TensorFlow together with Keras, because it has a more active community, the team members have experience with these tools, and TensorFlow has more success stories. See the list of TensorFlow users.

In this article, we presented several popular deep learning frameworks and compared them against several criteria. Ease of prototyping, deployment, and model debugging, together with community size and scalability across multiple machines, are among the most important criteria to consider when choosing a deep learning framework. All modern frameworks now support convolutional and recurrent neural networks, have zoos of pretrained models, and offer versions optimized for modern Intel Xeon Phi processors. As our analysis showed, Keras on top of the Intel-optimized version of TensorFlow is an excellent choice for our project. The Intel-optimized MXNet library would be another good option.

Although TensorFlow came out ahead in this comparison, we did not take benchmarks into account, and they are of key importance. We plan to write a separate article that compares the inference and training time of TensorFlow and MXNet across a wide range of deep learning models in order to determine the final winner.

So which deep learning framework should you choose? This article compares the existing deep learning frameworks.

General information

Deep learning frameworks simplify the design and training of deep learning models by providing high-level building blocks for complex and error-prone mathematical transformations, such as gradient descent, backpropagation, and inference.

Choosing the right framework is not easy, because the field is still immature and there is currently no clear winner. The choice may also depend on your goals, resources, and team.

We focus on frameworks that have Intel-optimized versions and that run efficiently on recent CPUs optimized for matrix multiplication (for example, Intel Xeon Phi processors).

Criteria for evaluation

We evaluated the frameworks in two stages.

- First, we quickly screened them against the basic criterion of community activity.

- Then we analyzed each framework in more depth.

Preliminary evaluation

We rated each framework by community activity and user ratings.

GitHub

- Repository stars (users bookmarking projects they find interesting)

- Repository forks (users freely experimenting with changes)

- Number of commits in the last month (shows whether the project is active)

Stack Overflow

- Number of programming questions asked and answered

- Informal popularity rating based on mentions (web search results, articles, blogs, and so on)

Detailed analysis

Each framework was analyzed thoroughly against the following criteria:

- Availability of pretrained models.

- Licensing model.

- Affiliation with a research university or academia.

- Known large-scale deployments by reputable companies.

- Benchmarks:

- Availability of a dedicated cloud environment optimized for the library;

- Availability of debugging tools (for example, visualization and model checkpoints).

- Training;

- Engineering productivity;

- Compatibility (languages supported for writing applications);

- Open source;

- Supported operating systems and platforms;

- Implementation language of the framework.

- Supported families of deep learning algorithms and models

Deep learning frameworks

Based on our analysis of the popular frameworks, we compiled the following table, which rates each framework by popularity and activity.

Overall popularity and activity of the frameworks

| Deep learning framework | GitHub stars | GitHub forks | GitHub commits in the last month | Stack Overflow questions |
|---|---|---|---|---|
| TensorFlow | 60,030 | 28,808 | 1,127 | 12,118 |
| Caffe | 18,354 | 11,277 | 12 | 4,355 |
| Keras | 16,344 | 5,788 | 71 | 2,754 |
| Microsoft Cognitive Toolkit | 11,250 | 2,823 | 337 | 545 |
| MXNet | 9,951 | 3,730 | 230 | 289 |
| Torch | 6,963 | 2,062 | 7 | 722 |
| Deeplearning4j | 6,800 | 3,168 | 172 | 316 |
| Theano | 6,417 | 2,154 | 100 | 2,207 |
| neon | 3,043 | 665 | 38 | 0 |
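The article does not say how the four columns were combined into a single popularity judgment. One simple way to do it, shown here purely as an illustrative sketch, is to scale each column by its maximum and average the four ratios. The equal weighting and the helper names (`popularity_scores`, `ranked`) are assumptions made for this example, not the authors' method; the numbers are copied from the table above.

```python
# Values copied from the table above, in column order:
# (stars, forks, commits in the last month, Stack Overflow questions).
FRAMEWORKS = {
    "TensorFlow": (60030, 28808, 1127, 12118),
    "Caffe": (18354, 11277, 12, 4355),
    "Keras": (16344, 5788, 71, 2754),
    "Microsoft Cognitive Toolkit": (11250, 2823, 337, 545),
    "MXNet": (9951, 3730, 230, 289),
    "Torch": (6963, 2062, 7, 722),
    "Deeplearning4j": (6800, 3168, 172, 316),
    "Theano": (6417, 2154, 100, 2207),
    "neon": (3043, 665, 38, 0),
}


def popularity_scores(frameworks):
    """Scale every metric by its column maximum, then average the ratios.

    Equal weighting of the four metrics is an assumption of this sketch.
    """
    columns = len(next(iter(frameworks.values())))
    maxima = [max(row[i] for row in frameworks.values()) for i in range(columns)]
    return {
        name: sum(value / maxima[i] for i, value in enumerate(row)) / columns
        for name, row in frameworks.items()
    }


def ranked(frameworks):
    """Return framework names ordered from highest to lowest score."""
    scores = popularity_scores(frameworks)
    return sorted(scores, key=scores.get, reverse=True)


for name in ranked(FRAMEWORKS):
    print(f"{name}: {popularity_scores(FRAMEWORKS)[name]:.3f}")
```

A different weighting, for example one that emphasizes recent commits over stars, could reorder the middle of the list, so the result should be read as a rough screen rather than a measurement.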

According to these data, the most popular frameworks are TensorFlow, Caffe, Keras, Microsoft Cognitive Toolkit (formerly known as CNTK), MXNet, Torch, Deeplearning4j (DL4J), and Theano. The popularity of neon is also growing. We cover these frameworks in the next section.

More about the most popular frameworks

Deep learning frameworks differ in their level of functionality. Some, such as Theano and TensorFlow, let you define neural networks of arbitrary complexity from the most basic building blocks. Frameworks of this type can even be called languages. Others, such as Keras, are engines or wrappers designed to increase developer productivity, but their higher level of abstraction limits their functionality.

When choosing a deep learning framework, first choose a low-level framework. A high-level wrapper is a nice addition, but it is not required. As ecosystems mature, more and more low-level frameworks will be complemented by higher-level companions.

Caffe

Caffe is a deep learning framework designed with expression, speed, and modularity in mind. It was developed by Berkeley AI Research (BAIR) together with community contributors. Yangqing Jia created the project during his PhD at the University of California, Berkeley. Caffe is released under the BSD 2-Clause license.

Important features:

- Expressive architecture encourages application and innovation.

- Extensible code fosters active development.

- Speed: Caffe offers one of the fastest available implementations of convolutional neural networks (CNNs).

- Used in research projects, startup prototypes, and even large-scale production applications in vision, speech, and multimedia.

Microsoft Cognitive Toolkit

Microsoft Cognitive Toolkit (formerly called CNTK) is a unified deep learning toolkit that describes neural networks as a series of computational steps in a directed graph. In the graph, leaf nodes represent input values or network parameters, and the other nodes represent matrix operations on their inputs. The toolkit makes it easy to implement and combine popular model types, such as feed-forward deep neural networks, convolutional neural networks, recurrent neural networks (RNNs), and long short-term memory (LSTM) networks. It implements stochastic gradient descent (SGD, error backpropagation) learning with automatic differentiation and parallelization across multiple GPUs and servers. The toolkit has been available under an open source license since April 2015.

Important features.

- Speed and scalability: trains and evaluates deep learning algorithms faster than other available toolkits and scales efficiently across a range of environments.

- Commercial-grade quality.

- Compatibility: offers an expressive, easy-to-use architecture and works with familiar languages and networks, such as C++ and Python.
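To make the stochastic gradient descent mentioned above concrete, here is a minimal pure-Python sketch that fits a line y = w * x + b one sample at a time. It does not use the Microsoft Cognitive Toolkit API; the function name `sgd_fit_line`, the learning rate, the epoch count, and the synthetic data are all assumptions chosen for illustration, and the gradients are derived by hand rather than by automatic differentiation.

```python
import random


def sgd_fit_line(samples, learning_rate=0.05, epochs=200, seed=0):
    """Fit y = w * x + b by stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    data = list(samples)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # "stochastic": visit the samples in random order
        for x, y in data:
            error = (w * x + b) - y      # forward pass for one sample
            grad_w = 2.0 * error * x     # d(error**2)/dw
            grad_b = 2.0 * error         # d(error**2)/db
            w -= learning_rate * grad_w  # step against the gradient
            b -= learning_rate * grad_b
    return w, b


# Noise-free synthetic data generated from y = 3x + 1 on the interval [-1, 1].
samples = [(i / 10.0, 3.0 * (i / 10.0) + 1.0) for i in range(-10, 11)]
w, b = sgd_fit_line(samples)
print(f"w = {w:.4f}, b = {b:.4f}")
```

A real toolkit performs the same update for millions of parameters at once, computes the gradients automatically from the graph, and spreads the work across several GPUs or servers.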

Keras

Keras is a high-level neural network API written in Python. It runs on top of TensorFlow or Theano. Keras is designed mainly to speed up experimentation: being able to go from idea to result with the least possible delay is key to doing good research.

Important features.

- User-friendly: minimizes the number of user actions required for common use cases and gives clear, actionable feedback when the user makes an error.

- Modular design: a model is a sequence or graph of standalone, fully configurable modules that can be plugged together with as few restrictions as possible.

- Easy extensibility: new modules are easy to add as new classes and functions, and existing modules provide ample examples.
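The modular design idea above, a model as a sequence of standalone modules, can be sketched in a few lines of plain Python. This is not the Keras API: the classes `Scale`, `Shift`, `ReLU`, and `Sequence` are hypothetical names invented for this illustration, and the modules operate on plain lists of numbers instead of tensors.

```python
class Scale:
    """Multiply every input value by a fixed factor."""

    def __init__(self, factor):
        self.factor = factor

    def __call__(self, values):
        return [self.factor * v for v in values]


class Shift:
    """Add a fixed offset to every input value."""

    def __init__(self, offset):
        self.offset = offset

    def __call__(self, values):
        return [v + self.offset for v in values]


class ReLU:
    """Replace negative values with zero."""

    def __call__(self, values):
        return [max(0.0, v) for v in values]


class Sequence:
    """Chain independent modules; each one consumes the previous output."""

    def __init__(self, *modules):
        self.modules = list(modules)

    def add(self, module):
        self.modules.append(module)
        return self

    def __call__(self, values):
        for module in self.modules:
            values = module(values)
        return values


model = Sequence(Scale(2.0), Shift(-1.0)).add(ReLU())
output = model([0.0, 0.25, 1.0, 3.0])
print(output)
```

Adding a new kind of module only requires another small class with a `__call__` method, which is the kind of extensibility the feature list describes.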

Deeplearning4j

Deeplearning4j (DL4J) is the first commercial-grade, open source, distributed deep learning library written for Java and Scala. DL4J is integrated with Hadoop and Apache Spark and is intended for business environments running on distributed GPUs and CPUs. DL4J is designed to be cutting-edge and ready to use, favoring convention over configuration, which allows fast prototyping by non-researchers. DL4J is customizable at scale. The library is available under the Apache 2.0 license, and all derivative works of DL4J belong to their authors. Through Keras, DL4J can import neural network models from most major frameworks, including TensorFlow, Caffe, Torch, and Theano, bridging the gap between the Python ecosystem and the Java Virtual Machine (JVM) with a common toolkit for data scientists, data engineers, and developers. Keras serves as the Python API for DL4J.

Important features.

- Takes advantage of the latest distributed computing libraries.

- Open source libraries maintained by the developer community and the Skymind team.

- Written in Java and compatible with any JVM language, such as Scala, Clojure, and Kotlin.

MXNet

MXNet is a lightweight, flexible, and highly scalable deep learning framework. It supports modern deep learning models, including convolutional neural networks and LSTMs. The library has its roots in academia and is the product of collaborative and individual work by researchers at several leading universities. Actively supported by Amazon, it was developed with a particular focus on computer vision, speech and language processing and understanding, generative models, and convolutional and recurrent neural networks. MXNet lets you define, train, and deploy networks in a wide range of settings, from powerful cloud infrastructure to mobile and connected devices. It provides a flexible environment with support for many common languages and lets you use both imperative and symbolic programming constructs. MXNet is also lightweight, which lets it scale efficiently across multiple GPUs and machines and makes it well suited to training on large data sets in the cloud.

Important features.

- Programming convenience: the ability to mix imperative and symbolic programming styles.

- Compatibility: supports a wide range of front-end languages, including C++, JavaScript, Python, R, MATLAB, Julia, Scala, and Go.

- Portability across platforms: the ability to deploy models in a variety of settings, reaching the widest range of users.

- Scalability: built on a dynamic dependency scheduler that analyzes data dependencies in serial code and automatically parallelizes both declarative and imperative operations on the fly.

neon

neon is an open source, Python-based language and set of libraries for developing deep learning models. It is fast, powerful, and easy to use.

Important features.

- Aims for the best performance on any hardware, with assembly-level optimization, multi-GPU support, optimized data loading, and the Winograd algorithm for computing convolutions.

- Python-like syntax with object-oriented implementations of all deep learning components, including layers, learning rules, activations, optimizers, initializers, and cost functions.

- The Nviz utility produces bar charts and other visualizations that help you track and better understand the progress of training.

- Available as a free download under the open source Apache 2.0 license.

TensorFlow

TensorFlow is an open source software library for numerical computation using data flow graphs. The nodes of the graph represent mathematical operations, and the edges represent the multidimensional data arrays (tensors) passed between them. Thanks to its flexible architecture, you can deploy computation to one or more CPUs or GPUs on a desktop computer, server, or mobile device with a single API. TensorFlow was originally developed by researchers and engineers on the Google Brain team within Google's Machine Intelligence research organization for machine learning and neural network research, but the system is general enough to be applied in other domains as well.
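The data flow graph idea can be illustrated without any framework. The sketch below is plain Python, not TensorFlow code: the names `Node`, `constant`, `add`, `multiply`, and `evaluate` are hypothetical, and Python lists stand in for tensors. Each node holds an operation, and its inputs are the edges along which data arrives.

```python
class Node:
    """A graph node: either a leaf holding data or an operation on inputs."""

    def __init__(self, op=None, inputs=(), value=None):
        self.op = op
        self.inputs = tuple(inputs)
        self.value = value


def constant(value):
    """Leaf node that simply holds a list of numbers."""
    return Node(value=list(value))


def add(a, b):
    """Operation node: element-wise sum of two inputs."""
    return Node(op=lambda x, y: [p + q for p, q in zip(x, y)], inputs=(a, b))


def multiply(a, b):
    """Operation node: element-wise product of two inputs."""
    return Node(op=lambda x, y: [p * q for p, q in zip(x, y)], inputs=(a, b))


def evaluate(node, cache=None):
    """Evaluate a node by first evaluating the nodes it depends on.

    Results are cached so that a shared sub-graph is computed only once.
    """
    cache = {} if cache is None else cache
    key = id(node)
    if key not in cache:
        if node.op is None:
            cache[key] = node.value
        else:
            arguments = [evaluate(parent, cache) for parent in node.inputs]
            cache[key] = node.op(*arguments)
    return cache[key]


# Building the graph performs no arithmetic; evaluate() runs it.
x = constant([1.0, 2.0, 3.0])
w = constant([0.5, 0.5, 0.5])
b = constant([1.0, 1.0, 1.0])
y = add(multiply(x, w), b)
result = evaluate(y)
print(result)
```

Separating graph construction from execution is what allows a framework to place the same graph on a CPU, a GPU, or a mobile device.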

Theano

Theano is a Python library for defining, optimizing, and evaluating mathematical expressions, especially expressions involving multidimensional arrays (numpy.ndarray). On problems with large amounts of data, Theano can reach speeds that rival hand-written C implementations. It can also outperform C on a CPU many times over by taking advantage of recent GPUs. Theano combines aspects of a computer algebra system (CAS) with aspects of an optimizing compiler. It can also generate custom C code for many mathematical operations. This combination of a CAS with an optimizing compiler is particularly useful for tasks in which complicated mathematical expressions are evaluated repeatedly and evaluation speed is therefore critical. When many different expressions are each evaluated only once, Theano can minimize the compilation and analysis overhead while still providing symbolic features such as automatic differentiation.

Important features.

- Execution speed optimization: uses g++ or nvcc to compile parts of the expression graph into CPU or GPU instructions, which run much faster than pure Python.

- Symbolic differentiation: automatically builds symbolic graphs for computing gradients.

- Stability optimization: recognizes [some] numerically unstable expressions and computes them with more stable algorithms.
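Automatic differentiation is easier to picture with a small example. The sketch below is not Theano code and does not build a symbolic gradient graph as Theano does; it uses a simpler substitute technique, numeric reverse-mode accumulation over a recorded expression graph, to show how gradients can be derived mechanically from the structure of an expression. The class `Var` and the function `backward` are hypothetical names.

```python
class Var:
    """A scalar value that remembers how it was computed."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (input Var, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(
            self.value * other.value,
            ((self, other.value), (other, self.value)),
        )


def backward(output):
    """Accumulate d(output)/d(node) into node.grad for every node."""
    order, seen = [], set()

    def visit(node):
        # Post-order traversal: inputs are listed before the nodes using them.
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    for node in reversed(order):
        # Chain rule: pass this node's gradient on to each of its inputs.
        for parent, local_derivative in node.parents:
            parent.grad += local_derivative * node.grad


x = Var(3.0)
y = Var(4.0)
z = x * y + x * x  # dz/dx = y + 2x, dz/dy = x
backward(z)
print(z.value, x.grad, y.grad)
```

The gradients come out of the recorded graph alone, with no hand-written derivative of `z`, which is the convenience that frameworks with automatic differentiation provide.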

Torch

Torch is a scientific computing framework with broad support for machine learning algorithms and an emphasis on GPUs. It is easy to use and fast, thanks to a simple and fast scripting language, LuaJIT, and an underlying C/CUDA implementation.

Important features.

- Built on the highly optimized LuaJIT and gives access to low-level resources, for example, working with plain C pointers.

- Aims for maximum flexibility and speed in building scientific algorithms while keeping the process simple.

- Has a large ecosystem of community-driven packages in machine learning, computer vision, signal processing, parallel processing, image, video, audio, and networking, and also builds on the Lua community.

- Easy-to-use libraries for popular neural networks and optimization, with maximum flexibility for implementing complex neural network topologies.

- The whole framework (including Lua) is self-contained and can be ported to any platform without modification.

Detailed analysis

Most frameworks share the same strengths: speed, portability, community and ecosystem, ease of development, compatibility, and scalability.

The framework for our project, an automatic video editing application, must meet the following requirements.

- A good Python wrapper or library, because most data scientists, including those working through this course, are familiar with Python.

- A version optimized by Intel for the highly parallel Intel Xeon Phi product family.

- A wide range of deep learning algorithms suited to rapid prototyping, in particular for images (emotion recognition) and sequential data (audio and music).

- Pretrained models that make it possible to build high-quality models even when only a relatively small set of images (for emotion recognition) and musical pieces (for music generation) is available for training.

- The ability to sustain heavy loads in production on large projects, although we do not expect heavy loads in our project.

Developer productivity: Language

Developer productivity matters a great deal, especially at the prototyping stage, so you need to choose a framework that matches the skills and knowledge of your team. For this reason, we exclude all frameworks based on languages other than Python.

- Best options: Caffe, (Py)Torch, Theano, TensorFlow, MXNet, Microsoft Cognitive Toolkit, neon, and Keras.

- Acceptable: DL4J (great for Java/Scala; most of the big data libraries commonly used in enterprises, such as Spark, Hadoop, and Kafka, are written in Java/Scala).

New or existing deep learning algorithms and network architecture

Most projects, including our course project, only use an existing neural network algorithm, such as AlexNet or LSTM. In that case, any deep learning framework should be suitable. However, if you are working on a research project (for example, developing a new algorithm, testing a new hypothesis, or optimizing a library), high-level frameworks such as Keras will not be enough. With a low-level framework, you will probably have to write the new algorithm in C++.

Our list of deep learning frameworks remains the same.

- Best options: Caffe, (Py)Torch, Theano, TensorFlow, MXNet, Microsoft Cognitive Toolkit, neon, Keras

- Acceptable: none.

Supported Neural Network Architectures

Our course project involves two classic deep learning problems:

- image classification (usually done with convolutional neural networks, such as AlexNet, VGG, or ResNet);

- music generation (a typical sequence modeling task that recurrent neural networks, such as LSTMs, handle well).

All the frameworks support convolutional neural networks (used mainly for image processing) and recurrent neural networks (used for sequence modeling).

Our list remains the same:

- Best options: Caffe, (Py)Torch, Theano, TensorFlow, MXNet, Microsoft Cognitive Toolkit, neon, Keras

- Acceptable: none.

Availability of pre-trained models

Since we do not have a large data set (for example, 6000 annotated images) to train the model of our project, we must use previously trained models.

All selected environments support model parks. In addition, there are converters that allow the use of models that have been trained using another library. The Caffe library was the first to enter the model park and has the widest selection of models. There are tools for converting Caffe park models to virtually any other model:

- MXNet

- Torch

- TensorFlow

- neon

- Microsoft Cognitive Toolkit (thanks to the efforts of Microsoft, you can convert almost any model zoo model into a CNTK model)

- Theano (there is no actively maintained tool, but Keras ships with a selection of image processing models)

All of these environments also have their own model zoos.

Our list remains the same:

- Optimal options: Caffe, (Py)Torch, Theano, TensorFlow, MXNet, Microsoft Cognitive Toolkit, neon, Keras

- Acceptable: none.

Developer productivity: Easy model definition

A model can be defined in two ways: with a configuration file (as in Caffe) or with a script (as in the other environments). Configuration files make a model easy to port, but they are hard to use for a complex neural network architecture (for example, try copying the layers of ResNet-101 by hand). Scripts, on the other hand, let you build complex neural networks with minimal code repetition, but porting such code to another environment is questionable. Scripts are usually preferable, because switching from one environment to another within a single project rarely happens.
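To illustrate why scripts cut down on repetition, here is a minimal sketch in plain Python. It does not use any of the environments discussed here; the dictionary format and the function names are hypothetical and chosen only for illustration. It lists the weighted layers of ResNet-101 (one initial convolution, 3 + 4 + 23 + 3 bottleneck blocks of three convolutions each, and a final fully connected layer), leaving out pooling, normalization and shortcut connections.

```python
# Number of bottleneck blocks in each of the four ResNet-101 stages.
STAGE_BLOCKS = [3, 4, 23, 3]
# Base number of filters in each stage.
STAGE_WIDTHS = [64, 128, 256, 512]


def bottleneck(stage, block, width):
    """Return the three convolutions of one bottleneck block.

    The dict layout is a hypothetical, framework-neutral description.
    """
    prefix = f"stage{stage}_block{block}"
    return [
        {"name": f"{prefix}_conv1", "type": "conv", "kernel": 1, "filters": width},
        {"name": f"{prefix}_conv2", "type": "conv", "kernel": 3, "filters": width},
        {"name": f"{prefix}_conv3", "type": "conv", "kernel": 1, "filters": width * 4},
    ]


def build_resnet101_spec(num_classes=1000):
    """List the weighted layers of ResNet-101 (simplified: no pooling, no shortcuts)."""
    layers = [{"name": "conv1", "type": "conv", "kernel": 7, "filters": 64}]
    for stage, (blocks, width) in enumerate(zip(STAGE_BLOCKS, STAGE_WIDTHS), start=1):
        for block in range(1, blocks + 1):
            layers.extend(bottleneck(stage, block, width))
    layers.append({"name": "fc", "type": "dense", "units": num_classes})
    return layers


spec = build_resnet101_spec()
print(len(spec))  # 1 + 33 * 3 + 1 = 101 weighted layers
```

Two short loops produce all 101 entries, whereas a hand-written configuration file would have to spell out each of them separately.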

Now our list will change as follows:

- Optimal options: (Py)Torch, Theano, TensorFlow, MXNet, Microsoft Cognitive Toolkit, neon, Keras

- Acceptable: Caffe

Support for optimized CPUs and multi-CPU training of deep learning models

The Intel Math Kernel Library (Intel MKL) for vector and matrix multiplication has been tuned for deep learning and now supports the Intel Many Integrated Core Architecture (Intel MIC). This is a highly parallel processor architecture that significantly speeds up deep learning on the CPU. All the environments still in the running have already integrated Intel MKL and offer versions optimized by Intel.

It is also important to know whether distributed training across multiple CPUs is supported. Based on these criteria, our modified list is as follows:

- Optimal options:

  - TensorFlow

  - MXNet

  - Microsoft Cognitive Toolkit (in our opinion, working with MPI is a bit more complicated than with other libraries)

- Acceptable:

  - (Py)Torch (support for multiple CPUs is expected in the next major version, judging by a company representative's reply on the official forum)

  - Theano (multicore parallelization on one machine via OpenMP)

  - neon (not yet available)

Developer productivity: Deploying the model

TensorFlow comes with a dedicated tool called TensorFlow Serving. It takes a trained TensorFlow model as input and exposes it as a web service that scores incoming requests. If you plan to use a trained model on a mobile device, TensorFlow Mobile supports model compression out of the box.

Read more about TensorFlow Serving

TensorFlow Mobile
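As a rough illustration of what "scoring incoming requests" looks like from the client side, the sketch below builds a prediction request for the REST interface of TensorFlow Serving using only the Python standard library. The host, the port 8501 and the model name `my_model` are assumptions made for this example, and the helper names are hypothetical; the sketch also assumes a server that already exposes the model over REST.

```python
import json
import urllib.request


def build_predict_request(instances, model_name="my_model",
                          host="localhost", port=8501):
    """Build a POST request for the TensorFlow Serving REST predict endpoint.

    model_name, host and port are placeholder values for this sketch.
    """
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(instances, **kwargs):
    """Send the request and return the "predictions" field of the JSON reply.

    Requires a running server, so it is not called in this sketch.
    """
    request = build_predict_request(instances, **kwargs)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["predictions"]


# Build (but do not send) a request with one input row.
request = build_predict_request([[0.0, 1.0, 2.0]])
print(request.full_url)
```

Calling `predict([[0.0, 1.0, 2.0]])` would send the request; the shape of each input row has to match what the served model expects.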

MXNet supports amalgamation deployment, in which the model, along with all the necessary bindings, is packed into a single standalone file. Such a file can then be moved to another machine and called from other programming languages. For example, it can be used on a mobile device.

The Microsoft Cognitive Toolkit (formerly CNTK) provides model deployment through the Azure Machine Learning cloud environment. Mobile devices are not supported yet; their support is expected in the next version.

Although we are not going to deploy our application on a mobile device, in some situations this capability can be very useful. For example, imagine a video editing application that creates videos from photos you have just taken (this feature is already available in Google Photos).

Now we have two competitors left: TensorFlow and MXNet. In the end, the team working on this project decided to use TensorFlow together with Keras, because this combination has a more active community, the team members already have experience with these tools, and TensorFlow has more success stories. See the list of TensorFlow users.
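The elimination over the last three criteria can be read as a set intersection. The sketch below is our own summary of the ratings given in this section, not a tool used by the project team; in particular, treating mobile support as the deciding deployment criterion is an assumption on our part.

```python
# Environments rated "optimal" for each criterion, as listed in this section.
optimal_by_criterion = {
    "model definition via scripts": {
        "(Py)Torch", "Theano", "TensorFlow", "MXNet",
        "Microsoft Cognitive Toolkit", "neon", "Keras",
    },
    "multi-CPU training": {"TensorFlow", "MXNet", "Microsoft Cognitive Toolkit"},
    # Assumption: deployment is judged by mobile support, which the text
    # describes for TensorFlow and MXNet but not yet for Cognitive Toolkit.
    "mobile deployment": {"TensorFlow", "MXNet"},
}


def shortlist(ratings):
    """Return the environments rated optimal under every criterion."""
    candidate_sets = iter(ratings.values())
    remaining = set(next(candidate_sets))
    for candidates in candidate_sets:
        remaining &= candidates
    return sorted(remaining)


finalists = shortlist(optimal_by_criterion)
print(finalists)  # ['MXNet', 'TensorFlow']
```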

Conclusion

In this article, we presented several popular environments for deep learning and compared them according to several criteria. The ease of prototyping, deploying, and debugging a model, along with community size and scalability across multiple machines, are among the most important criteria that should guide the choice of a deep learning environment. All modern environments now support convolutional and recurrent neural networks, have zoos of pre-trained models, and offer versions optimized for modern Intel Xeon Phi processors. As our analysis showed, for the purposes of our project, Keras on top of the Intel-optimized version of TensorFlow would be an excellent choice. Another good option would be the Intel-optimized MXNet library.

Although TensorFlow won this comparative analysis, we did not take benchmarks into account, and they are of key importance. We plan to write a separate article in which we compare the inference and training time of TensorFlow and MXNet for a wide range of deep learning models in order to determine the final winner.

Source: https://habr.com/ru/post/359184/
