The test pyramid in practice

About the author: Ham Vocke is a software developer and consultant at ThoughtWorks in Germany. Tired of deploying software at three in the morning, he added continuous delivery and meticulous automation to his toolbox. Now he helps other teams build such systems so they can deliver software reliably and efficiently. That way, he saves companies the time those pesky humans would otherwise spend on their antics.

The “test pyramid” is a metaphor that tells us to group software tests into buckets of different granularity. It also gives an idea of how many tests we should have in each of these groups. Although the concept of the test pyramid has been around for a while, many teams still struggle to put it into practice properly. This article revisits the original concept of the test pyramid and shows how to put it into practice. It discusses which kinds of tests you should look for at the different levels of the pyramid and gives practical examples of how to implement them.

Contents

- The importance of automation (tests)

- Pyramid of tests

- What tools and libraries will we look at?

- Sample application

- Unit tests

- Integration tests

- Contract Tests

- UI tests

- End-to-end tests

- Acceptance tests - do your features work correctly?

- Exploratory testing

- The confusion about testing terminology

- Putting tests into your deployment pipeline

- Avoid duplicate tests

- Write clean code for tests

- Conclusion

The drastically shortened feedback loop fueled by automated tests goes hand in hand with agile development practices, continuous delivery and DevOps culture. An effective software testing approach allows teams to move fast and with confidence.

This article explores what a well-rounded test suite should look like to be responsive, reliable and maintainable, regardless of whether you are building a microservices architecture, mobile apps or IoT ecosystems. We will also get into the details of building effective and readable automated tests.

The importance of automation (tests)

Software has become an essential part of the world we live in. It has outgrown its early sole purpose of making businesses more efficient. Today, every company strives to become a first-class digital company. Every day, all of us use more and more software. The speed of innovation keeps increasing.

If you want to keep pace, you have to look for ways to deliver your software faster without sacrificing its quality. Continuous delivery can help here. It is a practice that automatically ensures that your software can be released into production at any time. With continuous delivery, you use a build pipeline to automatically test your software and deploy it to your testing and production environments.

Soon, building, testing and deploying an ever-increasing amount of software manually becomes impossible, unless you want to spend all your time on manual, repetitive work instead of delivering working software. The only way forward is to automate everything, from build to tests, deployment and infrastructure.

Fig. 1. Using build pipelines to get software into production automatically and reliably

Traditionally, software testing involved excessive manual work. You deployed your application to a test environment and then performed black-box-style tests, for example by clicking through the user interface to see whether anything broke. Often these tests were specified in test scripts to ensure that testers checked everything consistently.

Obviously, testing every change manually is time-consuming, repetitive and tedious. Repetition is boring, and boredom leads to mistakes.

Fortunately, there is a great tool for repetitive tasks: automation.

Automating your repetitive tests can be a big game changer in your life as a developer. Automate your tests and you no longer have to mindlessly follow click protocols to check whether your software still works correctly. Automate your tests and you can change your codebase without batting an eye. If you have ever tried a large-scale refactoring without a proper test suite, I bet you know what a horror it can turn into. How would you know whether you accidentally broke something along the way? Well, you would have to click through all your test cases manually, of course. But let's be honest: do you really enjoy that? What if, even after large-scale changes, any bugs revealed themselves within seconds while you sip your coffee? That sounds a lot nicer to me.

Pyramid of tests

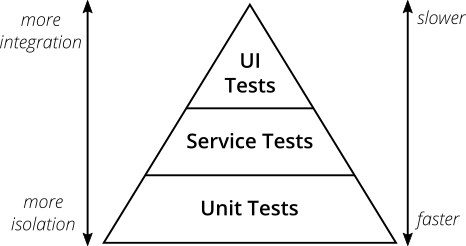

If you want to get serious about automated tests for your software, there is one key concept you should know about: the test pyramid. Mike Cohn introduced it in his book Succeeding with Agile: Software Development Using Scrum. It is a great visual metaphor that tells you to think about different layers of testing. It also tells you how much testing to do on each layer.

Fig. 2. Pyramid of tests

Mike Cohn's original test pyramid consists of three levels (bottom to top):

- Unit tests.

- Service tests.

- UI tests.

Unfortunately, the concept of the test pyramid falls a little short on closer inspection. Some argue that either the naming or some conceptual aspects of Mike Cohn's test pyramid are not ideal, and I have to agree. From a modern point of view, the test pyramid seems overly simplistic and can therefore be misleading.

Still, thanks to its simplicity, the essence of the test pyramid serves as a good rule of thumb when you establish your own test suite. Your best bet is to remember two things from Cohn's original pyramid:

- Write tests with different granularity.

- The higher the level, the fewer tests you should have.

Stick to the pyramid shape to come up with a healthy, fast and maintainable test suite. Write lots of small and fast unit tests. Write some more coarse-grained tests and very few high-level tests that test your application from end to end. Watch out that you don't end up with a test ice-cream cone, which will be a nightmare to maintain and will take way too long to run.

Don't become too attached to the names of the individual layers in Cohn's test pyramid. In fact, they can be quite misleading: “service test” is a term that is hard to grasp (Cohn himself observed that a lot of developers completely ignore this layer). In the days of single-page application frameworks like React, Angular, Ember.js and others, it becomes obvious that UI tests don't have to sit at the top of your pyramid: you can unit test your UI in all of these frameworks.

Given the shortcomings of the original names, it is totally okay to come up with other names for your test layers, as long as they are consistent with your codebase and with the terminology your team uses.

What tools and libraries will we look at?

- JUnit: our test runner

- Mockito: for mocking dependencies

- Wiremock: for stubbing out external services

- Pact: for writing CDC (consumer-driven contract) tests

- Selenium: for writing UI-driven end-to-end tests

- REST-assured: for writing REST API-driven end-to-end tests

Sample application

I've written a simple microservice that includes a test suite with tests for the different layers of the test pyramid.

It is an example of a typical microservice: it provides a REST interface, talks to a database and fetches information from a third-party REST service. It is implemented in Spring Boot and should be understandable even if you have never worked with Spring Boot before.

Make sure to check out the code on GitHub. The readme contains instructions for running the application and its automated tests on your machine.

Functionality

The application has simple functionality. It provides a REST interface with three endpoints:

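GET /hello

Returns "Hello World".

GET /hello/{lastname}

Looks up the person with the provided last name. If the person is known, returns "Hello {Firstname} {Lastname}".

GET /weather

Returns the current weather conditions.

High-level structure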

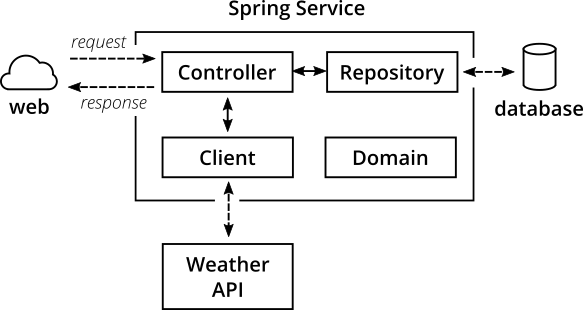

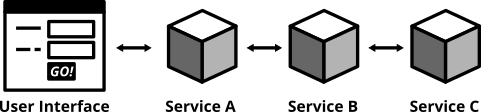

At a high level, the system has the following structure:

Fig. 3. High-level microservice structure

Our microservice provides a REST interface over HTTP. For some endpoints, the service receives information from the database. In other cases, it accesses the external API via HTTP to get and display the current weather.

Internal architecture

Internally, the Spring Service has a Spring-typical architecture:

Fig. 4. Internal structure of the microservice

- Controller classes provide REST endpoints and handle HTTP requests and responses.

- Repository classes interface with the database and take care of writing data to and reading data from persistent storage.

- Client classes talk to other APIs. In our case, they fetch JSON via HTTPS from the darksky.net weather API.

- Domain classes capture our domain model, including the domain logic (which, to be fair, is quite trivial in our case).

Experienced Spring developers might notice that a frequently used layer is missing here. Inspired by Domain-Driven Design, a lot of developers build a service layer consisting of service classes. I decided not to include a service layer in this application. One reason is that our application is simple enough that a service layer would be an unnecessary level of indirection. The other is that I think people overdo service layers. I often encounter codebases where the entire business logic lives in service classes. The domain model becomes merely a layer for data, not for behavior (an anemic domain model). For every non-trivial application, this wastes a lot of potential to keep your code well-structured and testable, and it does not make full use of object orientation.

Our repositories are straightforward and provide simple CRUD functionality. To keep the code simple, I used Spring Data. Spring Data gives us a simple and generic CRUD repository implementation that we can use instead of rolling our own. It also takes care of spinning up an in-memory database for our tests instead of using a real PostgreSQL database as it would in production.

Take a look at the codebase and make yourself familiar with the internal structure. It will be useful for our next step: testing the application!

Unit tests

The foundation of your test suite is made up of unit tests. Unit tests make sure that a certain unit (your subject under test) of your codebase works as intended. They have the narrowest scope of all the tests in your test suite. The number of unit tests in your test suite will far exceed the number of any other type of test.

Fig. 5. A unit test typically replaces external collaborators with test doubles.

What is a unit?

If you ask three different people what “unit” means in the context of unit tests, you'll probably get four different, slightly nuanced answers. To a certain extent, it is a matter of your own definition, and it's okay to have no canonical answer.

If you are working in a functional language, a unit will most likely be a single function. Your unit tests will call a function with different parameters and ensure that it returns the expected values. In an object-oriented language, a unit can range from a single method to an entire class.
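To make the functional case concrete, here is a minimal sketch in plain Java, with no test framework. The unit is a single pure function, and the test calls it with several inputs and compares each result with the expected value. The `Greeting.format` function and its table of cases are hypothetical illustrations and are not part of the sample application.

```java
// Hypothetical unit under test: a single pure function with no collaborators
final class Greeting {
    private Greeting() {
    }

    static String format(String firstName, String lastName) {
        if (lastName == null || lastName.trim().isEmpty()) {
            return "Hello World!";
        }
        String first = firstName == null ? "" : firstName.trim();
        String last = lastName.trim();
        if (first.isEmpty()) {
            return "Hello " + last + "!";
        }
        return "Hello " + first + " " + last + "!";
    }
}

// Table-driven unit test: each row is {firstName, lastName, expected result}
final class GreetingTest {
    static final String[][] CASES = {
            {"Peter", "Pan", "Hello Peter Pan!"},
            {null, "Pan", "Hello Pan!"},
            {"  Peter ", " Pan ", "Hello Peter Pan!"},
            {"Peter", "", "Hello World!"},
    };

    static void run() {
        for (String[] c : CASES) {
            String actual = Greeting.format(c[0], c[1]);
            if (!actual.equals(c[2])) {
                throw new AssertionError("format(" + c[0] + ", " + c[1] + ") returned '"
                        + actual + "', expected '" + c[2] + "'");
            }
        }
    }

    public static void main(String[] args) {
        run();
        System.out.println(CASES.length + " cases passed"); // prints "4 cases passed"
    }
}
```

Because the function has no collaborators, nothing needs to be stubbed. Adding an edge case only means adding a row to the table.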

Sociable and solitary tests

Some argue that all collaborators of your subject under test (for example, other classes it calls) should be substituted with mocks or stubs. The goal is perfect isolation, without side effects or a complicated test setup. Others argue that you should only stub or mock collaborators that are slow or have bigger side effects (for example, classes that access databases or make network calls).

Occasionally, people call these two sorts of tests solitary unit tests, when all collaborators are stubbed, and sociable unit tests, when tests are allowed to talk to real collaborators (Jay Fields coined these terms in his book Working Effectively with Unit Tests). If you have some spare time, you can go down the rabbit hole and read more about the pros and cons of the different schools of thought.

In the end, though, it doesn't matter which of the two you choose. What really matters is that your tests are automated. Personally, I use both approaches all the time. If it becomes awkward to use real collaborators, I use mocks and stubs generously. If I feel that involving the real collaborator gives me more confidence in a test, I only stub the outermost parts of the service.

Mocking and stubbing

Mocks and stubs are two different kinds of test doubles (there are more kinds than just these two). A lot of people use the terms interchangeably. I think it's better to be precise and keep their specific properties in mind. A test double replaces an object you would use in production with an implementation that helps you with testing.

In plain words, you replace a real thing (for example, a class, module or function) with a fake version of that thing. The fake version looks and acts like the real thing (it answers the same method calls), but it answers with canned responses that you define yourself at the beginning of your unit test.

Test doubles are not specific to unit testing. More elaborate test doubles can be used to simulate entire parts of your system in a controlled way. However, in unit testing you are most likely to encounter a lot of mocks and stubs (depending on whether you prefer sociable or solitary tests), simply because many modern languages and libraries make them easy and comfortable to set up.

Regardless of your technology choice, there is a good chance that either your language's standard library or some popular third-party library provides elegant ways to set up mocks. Even writing your own mocks from scratch is only a matter of writing a fake class, module or function with the same signature as the real one and setting up the fake in your test.
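The difference between a stub and a mock is easier to see without a framework. The sketch below is plain Java and uses a hypothetical `PersonLookup` interface as a stand-in for a repository. The stub only returns canned answers. The mock also records how it was called, so the test can verify the interaction afterwards. Libraries like Mockito generate both kinds of double for you, so this is only meant to show the idea.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical collaborator interface, standing in for a real repository
interface PersonLookup {
    Optional<String> firstNameFor(String lastName);
}

// Hypothetical subject under test that depends on the collaborator
final class Greeter {
    private final PersonLookup lookup;

    Greeter(PersonLookup lookup) {
        this.lookup = lookup;
    }

    String greet(String lastName) {
        return lookup.firstNameFor(lastName)
                .map(first -> "Hello " + first + " " + lastName + "!")
                .orElse("Who is this '" + lastName + "' you're talking about?");
    }
}

// Stub: same signature as the real thing, but only canned answers
final class StubPersonLookup implements PersonLookup {
    private final Map<String, String> cannedAnswers;

    StubPersonLookup(Map<String, String> cannedAnswers) {
        this.cannedAnswers = cannedAnswers;
    }

    @Override
    public Optional<String> firstNameFor(String lastName) {
        return Optional.ofNullable(cannedAnswers.get(lastName));
    }
}

// Mock: also records every call so the test can verify the interaction
final class MockPersonLookup implements PersonLookup {
    final List<String> receivedLastNames = new ArrayList<>();

    @Override
    public Optional<String> firstNameFor(String lastName) {
        receivedLastNames.add(lastName);
        return Optional.empty();
    }
}

final class TestDoubleDemo {
    public static void main(String[] args) {
        // Stub: the test checks the returned value (state verification)
        Greeter withStub = new Greeter(new StubPersonLookup(Map.of("Pan", "Peter")));
        System.out.println(withStub.greet("Pan")); // prints "Hello Peter Pan!"

        // Mock: the test checks the recorded call (behavior verification)
        MockPersonLookup mock = new MockPersonLookup();
        new Greeter(mock).greet("Hook");
        System.out.println(mock.receivedLastNames); // prints "[Hook]"
    }
}
```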

Your unit tests will run very fast. On a decent machine, you can expect to run thousands of unit tests within a few minutes. To keep them fast, test small pieces of your codebase in isolation and avoid hitting databases, the file system or firing HTTP requests (use mocks and stubs for these parts instead).

Once you get the hang of writing unit tests, you will become more and more fluent in writing them. Stub out external collaborators, set up some input data, call your subject under test and check that the returned value is what you expected. Look into test-driven development (TDD) and let your unit tests guide your development. If applied correctly, it can help you get into a great flow and come up with a good, maintainable design while automatically producing a comprehensive and fully automated test suite. Still, it is no silver bullet. Give it a real chance and see for yourself whether TDD is right for your particular case.

What to test?

The good thing about unit tests is that you can write them for all your production code classes, regardless of their functionality or which layer of your internal structure they belong to. You can unit test controllers just as you can unit test repositories, domain classes or file readers. Simply stick to the rule of thumb of one test class per production class.

A unit test should at least test the public interface of the class. Private methods can't be tested anyway, because you simply can't call them from a different test class. Protected or package-private methods are accessible from a test class (as long as the test class lives in the same package as the production class), but testing these methods may already go too far.

There is a fine line when it comes to writing unit tests. They should ensure that all your non-trivial code paths are tested, including the happy path and edge cases. At the same time, they shouldn't be tied to your implementation too closely.

Why is that?

Tests that are too attached to the production code quickly become annoying. As soon as you refactor the code (that is, change the internal structure of the code without changing the external behavior), the unit tests immediately break.

This way you lose one big benefit of unit tests: acting as a safety net for code changes. Instead, you get fed up with those stupid tests failing every time you refactor, causing more work than they save. Whose silly idea was this testing stuff anyway?

What do you do instead? Don't reflect your internal code structure in your unit tests. Test for observable behavior instead. For example, ask:

If I enter x and y values, will the result be z?

instead of this:

If I enter x and y, will the method first go to class A, then to class B, and then add the results from class A and class B?
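Here is a minimal plain-Java sketch of the first kind of question. It uses a hypothetical `PriceCalculator` that combines a net price and a tax rate through a private helper. The test only feeds in inputs and checks the output. It would keep passing if the internals were restructured, for example if the private helper were inlined or moved to another class.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// Hypothetical subject under test; how it computes the total is an internal detail
final class PriceCalculator {
    private final BigDecimal taxRate;

    PriceCalculator(BigDecimal taxRate) {
        this.taxRate = taxRate;
    }

    BigDecimal grossPrice(BigDecimal netPrice, int quantity) {
        BigDecimal net = netPrice.multiply(BigDecimal.valueOf(quantity));
        return addTax(net).setScale(2, RoundingMode.HALF_UP);
    }

    // Private helper: free to change or disappear during refactoring
    private BigDecimal addTax(BigDecimal net) {
        return net.add(net.multiply(taxRate));
    }
}

final class PriceCalculatorTest {
    // Observable behavior only: "if I enter x and y, is the result z?"
    static void grossPriceIncludesTax() {
        PriceCalculator calculator = new PriceCalculator(new BigDecimal("0.19"));

        BigDecimal result = calculator.grossPrice(new BigDecimal("10.00"), 3);

        if (result.compareTo(new BigDecimal("35.70")) != 0) {
            throw new AssertionError("expected 35.70 but got " + result);
        }
    }

    public static void main(String[] args) {
        grossPriceIncludesTax();
        System.out.println("behavior test passed");
    }
}
```

The test never checks whether or how `addTax` was called. That question belongs to the implementation-focused style that breaks on every refactoring.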

Private methods should generally be considered an implementation detail. That's why you shouldn't even feel the urge to test them.

I often hear opponents of unit testing (or TDD) argue that writing unit tests becomes pointless work when you have to test all your methods to get high test coverage. They often cite scenarios where an overly eager team lead forced them to write unit tests for getters and setters and all sorts of other trivial code in order to reach 100% test coverage.

This is completely wrong.

Yes, you should test the public interface. More importantly, however, you should not test trivial code. Don't worry, Kent Beck approves. You won't gain anything from testing simple getters or setters or other trivial implementations (for example, code without any conditional logic). Save the time: that's one more meeting you can attend, hooray!

But I really need to test this private method

If you ever find yourself in a situation where you really need to test a private method, you should take a step back and ask yourself why.

I'm pretty sure this is more of a design problem than a scoping problem. Most likely you feel the need to test a private method because it is complex, and testing it through the public interface of the class requires a lot of awkward setup.

Whenever I find myself in this situation, I usually conclude that the class I'm testing is already too complex. It does too much and violates the single responsibility principle, the S of the five SOLID principles.

The solution that often works for me is to split the original class into two classes. It often takes only a minute or two of thinking to find a good way to cut the one big class into two smaller classes with individual responsibilities. I move the private method (the one I urgently want to test) to the new class and let the old class call the new method. Voilà, my awkward-to-test private method is now public and can be tested easily. On top of that, I have improved the structure of my code by adhering to the single responsibility principle.
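A minimal sketch of the result of such a split follows, in plain Java. All names are hypothetical. Suppose an `InvoiceService` originally contained a complex private method for parsing discount codes. After the refactoring, the parsing lives in its own `DiscountCodeParser` class with a public method that can be unit tested directly, and `InvoiceService` simply delegates to it.

```java
import java.util.Optional;

// New class extracted from the old one: a single, focused responsibility
final class DiscountCodeParser {
    // Formerly a private method of InvoiceService; now public and testable on its own.
    // Accepts codes like "SAVE15" and returns the percentage (1..100).
    Optional<Integer> percentageOf(String code) {
        if (code == null || !code.matches("SAVE\\d{1,3}")) {
            return Optional.empty();
        }
        int percentage = Integer.parseInt(code.substring(4));
        if (percentage < 1 || percentage > 100) {
            return Optional.empty();
        }
        return Optional.of(percentage);
    }
}

// The old class keeps its public behavior and delegates to the new class
final class InvoiceService {
    private final DiscountCodeParser parser;

    InvoiceService(DiscountCodeParser parser) {
        this.parser = parser;
    }

    long totalInCents(long amountInCents, String discountCode) {
        int percentage = parser.percentageOf(discountCode).orElse(0);
        return amountInCents - amountInCents * percentage / 100;
    }
}

// The tricky parsing logic can now be tested directly, without invoice setup
final class DiscountCodeParserTest {
    public static void main(String[] args) {
        DiscountCodeParser parser = new DiscountCodeParser();
        check(parser.percentageOf("SAVE15").equals(Optional.of(15)), "valid code");
        check(parser.percentageOf("SAVE0").isEmpty(), "zero percent rejected");
        check(parser.percentageOf("FREE").isEmpty(), "unknown prefix rejected");
        System.out.println("parser tests passed");
    }

    private static void check(boolean condition, String description) {
        if (!condition) {
            throw new AssertionError(description);
        }
    }
}
```

Passing the parser into the constructor also lets `InvoiceService` tests use a stub parser if that ever becomes convenient.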

Test structure

A good structure for all your tests (not only unit tests) is this one:

- Set up the test data.

- Call your method under test.

- Assert that the expected results are returned.

There is a nice mnemonic for remembering this structure: “three A” (Arrange, Act, Assert). Another one takes inspiration from BDD (behavior-driven development). It is the “given”, “when”, “then” triad, where “given” reflects the setup, “when” the method call and “then” the assertion.

This pattern can be applied to other, higher-level tests as well. In every case, it ensures that your tests remain easy and consistent to read. On top of that, tests written with this structure in mind tend to be shorter and more expressive.
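Here is a minimal sketch of the structure in plain Java. It uses a hypothetical `ShoppingCart` class so that the three phases stand out. The comments use the given/when/then vocabulary, which maps one-to-one onto arrange/act/assert.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical subject under test
final class ShoppingCart {
    private final List<Long> itemPricesInCents = new ArrayList<>();

    void add(long priceInCents) {
        if (priceInCents < 0) {
            throw new IllegalArgumentException("price must not be negative");
        }
        itemPricesInCents.add(priceInCents);
    }

    long totalInCents() {
        return itemPricesInCents.stream().mapToLong(Long::longValue).sum();
    }
}

final class ShoppingCartTest {
    static void shouldSumUpAllItems() {
        // given (arrange): a cart with two items
        ShoppingCart cart = new ShoppingCart();
        cart.add(250);
        cart.add(1_000);

        // when (act): call the method under test
        long total = cart.totalInCents();

        // then (assert): the observable result matches the expectation
        if (total != 1_250) {
            throw new AssertionError("expected 1250 but got " + total);
        }
    }

    static void shouldRejectNegativePrices() {
        // given
        ShoppingCart cart = new ShoppingCart();

        // when / then: the exception is the expected outcome
        try {
            cart.add(-1);
            throw new AssertionError("expected an IllegalArgumentException");
        } catch (IllegalArgumentException expected) {
            // the test passes
        }
    }

    public static void main(String[] args) {
        shouldSumUpAllItems();
        shouldRejectNegativePrices();
        System.out.println("all tests passed");
    }
}
```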

Unit test implementation

Now we know what to test and how to structure unit tests. It's time to look at a real example.

Take a simplified version of the ExampleController class:

```java
import java.util.Optional;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class ExampleController {

    private final PersonRepository personRepo;

    @Autowired
    public ExampleController(final PersonRepository personRepo) {
        this.personRepo = personRepo;
    }

    // Greets a known person by full name, otherwise asks who they are
    @GetMapping("/hello/{lastName}")
    public String hello(@PathVariable final String lastName) {
        Optional<Person> foundPerson = personRepo.findByLastName(lastName);

        return foundPerson
                .map(person -> String.format("Hello %s %s!",
                        person.getFirstName(),
                        person.getLastName()))
                .orElse(String.format("Who is this '%s' you're talking about?",
                        lastName));
    }
}
```

A unit test for the hello(lastName) method could look like this:

```java
import static org.hamcrest.CoreMatchers.is;
import static org.hamcrest.MatcherAssert.assertThat;
import static org.mockito.ArgumentMatchers.anyString;
import static org.mockito.BDDMockito.given;
import static org.mockito.MockitoAnnotations.initMocks;

import java.util.Optional;

import org.junit.Before;
import org.junit.Test;
import org.mockito.Mock;

public class ExampleControllerTest {

    private ExampleController subject;

    @Mock
    private PersonRepository personRepo;

    @Before
    public void setUp() throws Exception {
        // Create the Mockito stub and hand it to the subject under test
        initMocks(this);
        subject = new ExampleController(personRepo);
    }

    @Test
    public void shouldReturnFullNameOfAPerson() throws Exception {
        // arrange: the stubbed repository knows Peter Pan
        Person peter = new Person("Peter", "Pan");
        given(personRepo.findByLastName("Pan"))
                .willReturn(Optional.of(peter));

        // act
        String greeting = subject.hello("Pan");

        // assert
        assertThat(greeting, is("Hello Peter Pan!"));
    }

    @Test
    public void shouldTellIfPersonIsUnknown() throws Exception {
        // arrange: the stubbed repository knows nobody
        given(personRepo.findByLastName(anyString()))
                .willReturn(Optional.empty());

        // act
        String greeting = subject.hello("Pan");

        // assert
        assertThat(greeting, is("Who is this 'Pan' you're talking about?"));
    }
}
```

We write the unit tests with JUnit, the de facto standard testing framework for Java. We use Mockito to replace the real PersonRepository class with a stub for our test. The stub lets us define canned responses that the stubbed method returns in this test. Stubbing makes our test simpler and more predictable, and it lets us set up test data easily.

Following the “three A” structure, we write two unit tests: a positive case and a case where the searched person can't be found. The positive test case creates a new person object and tells the mocked repository to return this object when it is called with "Pan" as the value of the lastName parameter. The test then calls the method under test. Finally, it asserts that the response equals the expected response.

The second test works similarly, but it tests the scenario where the method under test does not find a person for the given parameter.

Specialized Test Helpers

It's great that you can write unit tests for your entire codebase, regardless of which layer of your application's architecture you're on. The example above shows a simple unit test for a controller. Unfortunately, this approach has a downside when it comes to Spring controllers. Spring MVC controllers make heavy use of annotations to declare which paths they listen on, which HTTP verbs they use, which parameters they parse from the URL path or query, and so on. Simply calling a controller method in a unit test won't test any of these crucial things. Luckily, the Spring community came up with a nice test helper you can use to write better controller tests: make sure to check out MockMvc. It gives you a nice DSL for firing fake requests against your controller and checking that everything works as expected. I've included an example in the sample codebase. A lot of frameworks offer test helpers to make testing certain aspects of your code more pleasant. Check the documentation of your framework of choice and see whether it offers any useful helpers for your automated tests.

Integration tests

All non-trivial applications integrate with some other parts (databases, file systems, network calls to other applications). In unit tests, you usually mock these parts out for better isolation and faster tests. However, your application does interact with these parts, and this needs to be tested. That's what integration tests are for. They test the integration of your application with all the parts that live outside of your application.

For your automated tests, this means you need to run not only your own application but also the component you're integrating with. If you're testing the integration with a database, you need to run a database when running your tests. To test that you can read files from a disk, you need to save a file to your disk and load it in your integration test.
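For the file case, a narrow integration test can be sketched with nothing but the JDK. The test writes a real file into a temporary directory, lets the code under test read it back through the actual file system, and checks the parsed result. `CsvPersonReader` and its two-column `firstName,lastName` format are hypothetical.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Hypothetical code under test: reads "firstName,lastName" lines from disk
final class CsvPersonReader {
    List<String> readFullNames(Path file) throws IOException {
        List<String> fullNames = new ArrayList<>();
        for (String line : Files.readAllLines(file, StandardCharsets.UTF_8)) {
            if (line.isBlank()) {
                continue; // tolerate empty lines
            }
            String[] parts = line.split(",", -1);
            if (parts.length != 2) {
                throw new IOException("malformed line: " + line);
            }
            fullNames.add(parts[0].trim() + " " + parts[1].trim());
        }
        return fullNames;
    }
}

final class CsvPersonReaderIntegrationTest {
    static void shouldReadPersonsFromDisk() throws IOException {
        // arrange: put a real file on the real file system
        Path dir = Files.createTempDirectory("persons-test");
        Path file = dir.resolve("persons.csv");
        Files.writeString(file, "Peter,Pan\n\nWendy,Darling\n", StandardCharsets.UTF_8);

        try {
            // act: exercise the code path that actually touches the disk
            List<String> names = new CsvPersonReader().readFullNames(file);

            // assert
            if (!names.equals(List.of("Peter Pan", "Wendy Darling"))) {
                throw new AssertionError("unexpected result: " + names);
            }
        } finally {
            // clean up so repeated runs start from a known state
            Files.deleteIfExists(file);
            Files.deleteIfExists(dir);
        }
    }

    public static void main(String[] args) throws IOException {
        shouldReadPersonsFromDisk();
        System.out.println("file integration test passed");
    }
}
```

Unlike a unit test with a stubbed file reader, this test would catch problems such as a wrong charset or a line-splitting bug that only shows up with real file contents.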

I mentioned before that “unit test” is a vague term. This is even more true for “integration test”. For some people, integration testing means testing through the entire stack of your application connected to other applications within your system. I prefer a narrower definition: test one integration point at a time, and replace separate services and databases with test doubles. Combined with contract testing, where contract tests run against both the test doubles and the real implementations, this gives you integration tests that are faster, more independent and usually easier to reason about.

Narrow integration tests live at the boundary of your service. Conceptually, they are always about triggering an action that leads to integrating with the outside part (file system, database, separate service). A database integration test would look like this:

Fig. 6. Database Integration Test integrates your code with a real database.

- Start a database.

- Connect your application to the database.

- Trigger a function within your code that writes data to the database.

- Check that the expected data has been written to the database by reading it back from the database.

Another example: an integration test between your service and a separate service via a REST API could look like this:

Fig. 7. This kind of integration test verifies that the application is able to properly interact with individual services.

- Start your application.

- Start an instance of the separate service (or a test double with the same interface).

- Trigger a function within your code that reads from the separate service's API.

- Check that your application parses the response correctly.
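These steps can be sketched without any third-party library. The JDK ships a small HTTP server (`com.sun.net.httpserver`, in the `jdk.httpserver` module) that can serve canned responses, and `java.net.http.HttpClient` (Java 11+) can call it. `SummaryClient`, the `/weather` path and the JSON shape are hypothetical. The naive regex parsing stands in for a real JSON library. In the sample application, Wiremock plays the role of the fake service.

```java
import com.sun.net.httpserver.HttpServer;

import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical client under test: the base URL is injected, so a test can
// point it at a fake server instead of the real weather API
final class SummaryClient {
    private static final Pattern SUMMARY = Pattern.compile("\"summary\"\\s*:\\s*\"([^\"]*)\"");

    private final HttpClient http =
            HttpClient.newBuilder().version(HttpClient.Version.HTTP_1_1).build();
    private final String baseUrl;

    SummaryClient(String baseUrl) {
        this.baseUrl = baseUrl;
    }

    Optional<String> fetchSummary() throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/weather")).GET().build();
        HttpResponse<String> response = http.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            return Optional.empty();
        }
        // Naive extraction for illustration; real code would use a JSON library
        Matcher matcher = SUMMARY.matcher(response.body());
        if (!matcher.find()) {
            return Optional.empty();
        }
        return Optional.of(matcher.group(1));
    }
}

final class SummaryClientIntegrationTest {
    static void shouldParseResponseFromFakeService() throws Exception {
        // Start a fake of the separate service on a free local port
        HttpServer fake = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        fake.createContext("/weather", exchange -> {
            byte[] body = "{\"currently\": {\"summary\": \"Rain\"}}".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().add("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        fake.start();
        try {
            // Point the code under test at the fake and trigger the API call
            String baseUrl = "http://127.0.0.1:" + fake.getAddress().getPort();
            Optional<String> summary = new SummaryClient(baseUrl).fetchSummary();

            // Check that the response was parsed correctly
            if (!summary.equals(Optional.of("Rain"))) {
                throw new AssertionError("unexpected result: " + summary);
            }
        } finally {
            fake.stop(0);
        }
    }

    public static void main(String[] args) throws Exception {
        shouldParseResponseFromFakeService();
        System.out.println("HTTP integration test passed");
    }
}
```

Binding to port 0 lets the operating system pick a free port, which avoids clashes when several test runs share a machine. The fixed-port approach used with Wiremock later in this article works too, as long as the configured URL matches.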

Like unit tests, your integration tests can be fairly white-box. Some frameworks allow you to start your application while still mocking out some of its parts, so that you can check that the correct interactions have happened.

Write integration tests for all pieces of code where you either serialize or deserialize data. This happens more often than you might think. Think about:

- Calls to your services' REST API.

- Reading from and writing to databases.

- Calling other applications' APIs.

- Reading from and writing to queues.

- Writing to the file system.

Writing integration tests around these boundaries ensures that writing data to and reading data from these external collaborators works fine.

When writing narrow integration tests, you should aim to run your external dependencies locally: spin up a local MySQL database, test against a local ext4 file system. If you're integrating with a separate service, either run an instance of that service locally or build and run a fake version that mimics the behavior of the real service.

If there is no way to run a third-party service locally, run a dedicated test instance and point your integration tests at it. Avoid integrating with the real production system in your automated tests. Blasting thousands of test requests against a production system is a sure way to annoy people, because you clutter their logs (in the best case) or even DoS their service (in the worst case). Integrating with a service over the network is a typical characteristic of a broad integration test, and it usually makes your tests harder to write and slower to run.

With regard to the test pyramid, integration tests sit at a higher level than unit tests. Integrating slow parts like file systems and databases tends to be much slower than running unit tests with these parts stubbed out. Integration tests are also harder to write than small, isolated unit tests. After all, you have to take care of spinning up an external part as part of your tests. Still, they have the advantage of giving you confidence that your application works correctly with all the external parts it needs to talk to. Unit tests can't help you with that.

Database Integration

PersonRepository is the only repository class in the codebase. It relies on Spring Data and has no actual implementation. It just extends the CrudRepository interface and declares a single method header. The rest is Spring magic.

```java
import java.util.Optional;

import org.springframework.data.repository.CrudRepository;

public interface PersonRepository extends CrudRepository<Person, String> {
    // Derived query: Spring Data generates the lookup from the method name
    Optional<Person> findByLastName(String lastName);
}
```

By extending the CrudRepository interface, Spring Boot offers a fully functional CRUD repository with methods such as findOne (findById since Spring Data 2.0), findAll, save and delete. The save method also covers updates. Our custom method definition (findByLastName()) extends this basic functionality and gives us a way to fetch Person objects by their last name. Spring Data analyzes the return type and the name of the method and checks the name against a naming convention to figure out what it should do.

Although Spring Data does the heavy lifting of implementing database repositories, I still wrote a database integration test. You might argue that this is testing the framework, something we should avoid because it's not our code we're testing. Still, I believe that having at least one integration test here is crucial. First, it tests that our custom findByLastName method actually behaves as expected. Second, it proves that our repository uses Spring's wiring correctly and can connect to the database.

To make it easier for you to run the tests on your machine (without having to install a PostgreSQL database), our test connects to an in-memory H2 database.

I've defined H2 as a test dependency in the build.gradle file. The application.properties file in the test directory doesn't define any spring.datasource properties. This tells Spring Data to use an in-memory database. Since it finds H2 on the classpath, it simply uses H2 when running our tests.

When you run the real application with the int profile (for example, by setting SPRING_PROFILES_ACTIVE=int as an environment variable), it connects to a PostgreSQL database as defined in application-int.properties.

I know, that's an awful lot of Spring specifics to know and understand. To get there, you'll have to sift through a lot of documentation. The resulting code is easy on the eye but hard to understand if you don't know the fine details of Spring.

On top of that, going with an in-memory database is risky business. After all, our integration tests run against a different type of database than the one we use in production. Decide for yourself whether you prefer Spring magic and simple code or an explicit but more verbose implementation.

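Enough explanation already. Here is a simple integration test that saves a Person to the database and finds it by its last name:

```java
import static org.hamcrest.CoreMatchers.is;
import static org.hamcrest.MatcherAssert.assertThat;

import java.util.Optional;

import org.junit.After;
import org.junit.Test;
import org.junit.runner.RunWith;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.autoconfigure.orm.jpa.DataJpaTest;
import org.springframework.test.context.junit4.SpringRunner;

@RunWith(SpringRunner.class)
@DataJpaTest
public class PersonRepositoryIntegrationTest {

    @Autowired
    private PersonRepository subject;

    // Remove saved data so tests don't see each other's records
    @After
    public void tearDown() throws Exception {
        subject.deleteAll();
    }

    @Test
    public void shouldSaveAndFetchPerson() throws Exception {
        // arrange
        Person peter = new Person("Peter", "Pan");
        subject.save(peter);

        // act
        Optional<Person> maybePeter = subject.findByLastName("Pan");

        // assert
        assertThat(maybePeter, is(Optional.of(peter)));
    }
}
```

As you can see, our integration test follows the same “three A” structure as the unit tests. Told you that this was a universal concept!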

Integration with individual services

Our microservice fetches weather data from darksky.net via a REST API. Of course, we want to ensure that our service sends requests and parses the responses correctly.

We want to avoid hitting the real darksky servers when running automated tests. The quota limits of our free plan are only part of the reason. The real reason is decoupling. Our tests should run independently of whatever the lovely people at darksky.net are doing, even when our machine can't reach the darksky servers or the servers are down for maintenance.

To avoid hitting the real darksky servers, we run our own fake darksky server during our integration tests. This might sound like a huge task, but tools like Wiremock make it easy. See for yourself:

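```java
import static com.github.tomakehurst.wiremock.client.WireMock.aResponse;
import static com.github.tomakehurst.wiremock.client.WireMock.get;
import static com.github.tomakehurst.wiremock.client.WireMock.urlPathEqualTo;
import static org.hamcrest.MatcherAssert.assertThat;
import static org.hamcrest.Matchers.is;
import static org.springframework.http.HttpHeaders.CONTENT_TYPE;

import com.github.tomakehurst.wiremock.junit.WireMockRule;
import java.util.Optional;
import org.junit.Rule;
import org.junit.Test;
import org.junit.runner.RunWith;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.http.MediaType;
import org.springframework.test.context.junit4.SpringRunner;

@RunWith(SpringRunner.class)
@SpringBootTest
public class WeatherClientIntegrationTest {
    @Autowired
    private WeatherClient subject;

    // starts a WireMock server on port 8089 before each test and stops it afterwards
    @Rule
    public WireMockRule wireMockRule = new WireMockRule(8089);

    @Test
    public void shouldCallWeatherService() throws Exception {
        // FileLoader is assumed to be a small helper of the sample project that reads a classpath resource
        wireMockRule.stubFor(get(urlPathEqualTo("/some-test-api-key/53.5511,9.9937"))
                .willReturn(aResponse()
                        .withBody(FileLoader.read("classpath:weatherApiResponse.json"))
                        .withHeader(CONTENT_TYPE, MediaType.APPLICATION_JSON_VALUE)
                        .withStatus(200)));

        Optional<WeatherResponse> weatherResponse = subject.fetchWeather();

        Optional<WeatherResponse> expectedResponse = Optional.of(new WeatherResponse("Rain"));
        assertThat(weatherResponse, is(expectedResponse));
    }
}
```

For WireMock, we instantiate a `WireMockRule` on a fixed port (8089). Using the DSL, we set up the WireMock server, define the endpoints it should listen on and the canned responses it should return. Next, we call the method we want to test (the one that calls the third-party service) and check that the result is parsed correctly.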
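To make the mechanism less magical, here is a library-free sketch of what WireMock does for us in this test, assuming Java 11+ for `java.net.http`. It starts the JDK's built-in `com.sun.net.httpserver.HttpServer` on a free local port, answers one path with a canned JSON body and points a small client at it. `SimpleWeatherClient`, its regex-based parsing and the `{"currently":{"summary":...}}` body are hypothetical stand-ins, not the sample project's `WeatherClient` and not darksky's documented response format. The client receives its base URL through the constructor, which is what makes redirecting it to a fake server possible at all.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class FakeWeatherServerSketch {

    // Hypothetical client: the base URL is a constructor argument, so a test can
    // point it at a fake server instead of the real weather API.
    static class SimpleWeatherClient {
        private static final Pattern SUMMARY = Pattern.compile("\"summary\"\\s*:\\s*\"([^\"]*)\"");
        private final HttpClient http = HttpClient.newBuilder()
                .version(HttpClient.Version.HTTP_1_1)
                .build();
        private final String baseUrl;
        private final String apiKey;

        SimpleWeatherClient(String baseUrl, String apiKey) {
            this.baseUrl = baseUrl;
            this.apiKey = apiKey;
        }

        Optional<String> fetchSummary() {
            HttpRequest request = HttpRequest.newBuilder(
                    URI.create(baseUrl + "/" + apiKey + "/53.5511,9.9937")).GET().build();
            try {
                HttpResponse<String> response = http.send(request, HttpResponse.BodyHandlers.ofString());
                if (response.statusCode() != 200) {
                    return Optional.empty();
                }
                // Deliberately naive parsing: take the first "summary" string value.
                Matcher matcher = SUMMARY.matcher(response.body());
                return matcher.find() ? Optional.of(matcher.group(1)) : Optional.empty();
            } catch (IOException e) {
                return Optional.empty();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return Optional.empty();
            }
        }
    }

    // Starts a fake server on a free local port that answers one path with a canned JSON body.
    static HttpServer startFakeServer(String path, String cannedJson) {
        try {
            HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            server.createContext(path, exchange -> {
                byte[] body = cannedJson.getBytes(StandardCharsets.UTF_8);
                exchange.getResponseHeaders().add("Content-Type", "application/json");
                exchange.sendResponseHeaders(200, body.length);
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            });
            server.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Arrange: stub the endpoint. Act: call the client. The caller asserts on the result.
    static Optional<String> fetchFromFakeServer(String cannedJson) {
        HttpServer server = startFakeServer("/some-test-api-key/53.5511,9.9937", cannedJson);
        try {
            String baseUrl = "http://127.0.0.1:" + server.getAddress().getPort();
            return new SimpleWeatherClient(baseUrl, "some-test-api-key").fetchSummary();
        } finally {
            server.stop(0);
        }
    }

    public static void main(String[] args) {
        System.out.println(fetchFromFakeServer("{\"currently\":{\"summary\":\"Rain\"}}")); // Optional[Rain]
    }
}
```

Binding to port 0 lets the operating system pick a free port, which avoids clashes between parallel test runs. The WireMock test above uses the fixed port 8089 instead, so that port has to be repeated in the test configuration.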

It is important to understand how the test knows that it should call the fake WireMock server instead of the real darksky API. The secret is in our `application.properties` file located in `src/test/resources`. This is the properties file Spring loads when running tests. In this file we override configuration like API keys and URLs with values suitable for testing, including pointing the client at the fake WireMock server instead of the real one:

```properties
weather.url = http://localhost:8089
```

Note that the port defined here has to be the same one we specified when creating the `WireMockRule` instance in our test. Replacing the real API's URL with a fake one is possible because we inject the URL into the constructor of our `WeatherClient` class:

```java
@Autowired
public WeatherClient(final RestTemplate restTemplate,
                     @Value("${weather.url}") final String weatherServiceUrl,
                     @Value("${weather.api_key}") final String weatherServiceApiKey) {
    this.restTemplate = restTemplate;
    this.weatherServiceUrl = weatherServiceUrl;
    this.weatherServiceApiKey = weatherServiceApiKey;
}
```

This way we tell our `WeatherClient` to read the value of its `weatherServiceUrl` parameter from the `weather.url` property we define in our application properties.

With tools like WireMock, writing narrow integration tests for a separate service is quite easy. Unfortunately, this approach has a downside: how can we ensure that the fake server we set up behaves like the real server? With the current implementation, the separate service could change its API and our tests would still pass as if nothing had happened. Right now we are merely testing that our `WeatherClient` can parse the responses the fake server sends. That's a start, but it's very brittle. Using end-to-end tests and running them against a test instance of the real service instead of a fake would solve this problem, but would make us dependent on that service's availability. Fortunately, there's a better solution to this dilemma: running contract tests against both the fake and the real server ensures that the fake we use in our integration tests is a faithful test double. Let's see how this works.

Contract Tests

Modern software development organizations have found ways to scale their development efforts by spreading the work across different teams. The teams build individual, loosely coupled services without stepping on each other's toes and integrate these services into a big, cohesive system. The recent buzz around microservices is about exactly this.

Splitting your system into many small services often means that these services need to communicate with each other via certain (hopefully well-defined, sometimes accidentally grown) interfaces.

Interfaces between different applications can come in different shapes and technologies. Common ones are:

- REST and JSON over HTTPS;

- RPC using something like gRPC;

- an event-driven architecture using queues.

Each interface involves two parties: the provider and the consumer. The provider serves data to consumers. The consumer processes the data obtained from the provider. In a REST world, the provider builds a REST API with all the required endpoints, and the consumer calls this REST API to fetch data or trigger changes in the other service. In an asynchronous, event-driven world, the provider (often called the publisher) publishes data to a queue; the consumer (often called the subscriber) subscribes to these queues, then reads and processes the data.

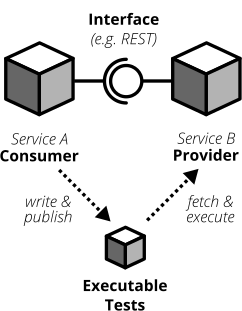

Fig. 8. Each interface involves a provider (or publisher) and a consumer (or subscriber). Interface specification can be considered a contract.
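For the asynchronous case, here is a minimal in-memory sketch of the two roles, with a `LinkedBlockingQueue` standing in for a real message broker (an assumption for illustration only). The `"<type>:<payload>"` event format and the `person-created` type are hypothetical; they play the part of the interface contract both sides silently depend on.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class QueueInterfaceSketch {

    // The implicit contract between both sides: events look like "<type>:<payload>".
    static final String PERSON_CREATED = "person-created";

    // Provider (publisher): writes events to the queue without knowing who reads them.
    static class Publisher {
        private final BlockingQueue<String> queue;

        Publisher(BlockingQueue<String> queue) {
            this.queue = queue;
        }

        void personCreated(String fullName) {
            queue.add(PERSON_CREATED + ":" + fullName);
        }
    }

    // Consumer (subscriber): reads events and keeps the payloads of the type it understands.
    static class Subscriber {
        private final BlockingQueue<String> queue;

        Subscriber(BlockingQueue<String> queue) {
            this.queue = queue;
        }

        List<String> drainCreatedPersons() {
            List<String> names = new ArrayList<>();
            String event;
            // poll() returns null as soon as the queue is empty.
            while ((event = queue.poll()) != null) {
                int separator = event.indexOf(':');
                if (separator > 0 && event.substring(0, separator).equals(PERSON_CREATED)) {
                    names.add(event.substring(separator + 1));
                }
            }
            return names;
        }
    }

    static List<String> publishAndConsume(String... fullNames) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        Publisher publisher = new Publisher(queue);
        for (String name : fullNames) {
            publisher.personCreated(name);
        }
        return new Subscriber(queue).drainCreatedPersons();
    }

    public static void main(String[] args) {
        System.out.println(publishAndConsume("Peter Pan", "Wendy Darling")); // [Peter Pan, Wendy Darling]
    }
}
```

If the publisher ever renamed `person-created`, the subscriber in this sketch would silently drop every event and nothing would fail, which is exactly the kind of breakage contract tests are meant to catch.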

Since provider and consumer services are often spread across different teams, you find yourself in a situation where you have to clearly specify the interface between them (the so-called contract). Traditionally, companies approach this problem as follows:

- Write a long and detailed interface specification (the contract).

- Implement the providing service according to the defined contract.

- Hand the interface specification over to the consuming team.

- Wait until they implement their part of the interface.

- Run a large-scale manual system test to check that everything works.

- Hope that both teams stick to the interface definition forever and don't screw up.

More modern software development teams have replaced steps 5 and 6 with automated contract tests, which check that the implementations on the consumer and provider side still adhere to the defined contract. They serve as a good regression test suite and make sure that deviations from the contract are noticed early.

In a more agile organization you should take the more efficient and less wasteful route. Your applications are built within the same organization, so it shouldn't be a problem to talk to the developers of the other services directly instead of throwing overly detailed documentation over the fence. After all, they're your coworkers, not a third-party vendor you could only reach through customer support or legally bulletproof contracts.

Consumer-driven contract tests (CDC tests) let the consumers drive the implementation of a contract. With CDC, consumers write tests that check the interface for all the data they need from it. The consuming team then publishes these tests so that the providing team can easily fetch and run them. The providing team can now develop their API while running the CDC tests. Once all tests pass, they know they have implemented everything the consuming team needs.

Fig. 9. Contract tests ensure that the provider and all consumers of an interface stick to the defined interface contract. With CDC tests, consumers of an interface publish their requirements in the form of automated tests; providers fetch and execute these tests continuously.

This approach allows the providing team to implement only what's really necessary (keeping things simple, YAGNI and all that). The providing team should fetch and run these CDC tests continuously (in their build pipeline) to spot any breaking changes immediately. If they break the interface, their CDC tests fail, which keeps the breaking change from going live. As long as the tests stay green, the team can make any changes they like without having to worry about other teams. The consumer-driven contract approach leaves you with a process that looks like this:

- The consuming team writes automated tests with all of its expectations as a consumer.

- They publish the tests for the providing team.

- The providing team runs the CDC tests continuously and keeps them green.

- Both teams talk to each other as soon as the CDC tests break.
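The four steps can be sketched without any library. In this minimal sketch the consumer's expectations are plain data (an endpoint path plus the response fields the consumer reads), the provider is modeled as a function from path to response fields, and "publishing" is reduced to sharing a constant. All names, including the `/forecast/hamburg` path, are hypothetical; tools like Pact record much richer expectations (status codes, headers, body matchers) in their own file format.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ConsumerDrivenContractSketch {

    // Step 1: the consumer team writes down what it needs from one endpoint.
    static class Expectation {
        final String path;
        final List<String> requiredFields;

        Expectation(String path, String... requiredFields) {
            this.path = path;
            this.requiredFields = Arrays.asList(requiredFields);
        }
    }

    // The provider modeled as "path in, flat map of response fields out" (hypothetical;
    // a real provider speaks HTTP and returns JSON).
    interface Provider {
        Map<String, String> handle(String path);
    }

    // Step 2: the published contract. Here it is simply a shared constant.
    static final List<Expectation> WEATHER_CONTRACT = Arrays.asList(
            new Expectation("/forecast/hamburg", "summary"));

    // Step 3: the provider team runs the contract; an empty result means it is satisfied.
    static List<String> verify(List<Expectation> contract, Provider provider) {
        List<String> violations = new ArrayList<>();
        for (Expectation expectation : contract) {
            Map<String, String> response = provider.handle(expectation.path);
            for (String field : expectation.requiredFields) {
                if (response == null || !response.containsKey(field)) {
                    violations.add(expectation.path + " is missing '" + field + "'");
                }
            }
        }
        return violations;
    }

    static Map<String, String> fields(String... keysAndValues) {
        Map<String, String> map = new HashMap<>();
        for (int i = 0; i + 1 < keysAndValues.length; i += 2) {
            map.put(keysAndValues[i], keysAndValues[i + 1]);
        }
        return map;
    }

    // Two versions of the provider: the current one and one that renamed "summary".
    static final Provider CURRENT_PROVIDER = path -> fields("summary", "Rain", "temperature", "12");
    static final Provider RENAMED_PROVIDER = path -> fields("description", "Rain", "temperature", "12");

    public static void main(String[] args) {
        System.out.println(verify(WEATHER_CONTRACT, CURRENT_PROVIDER)); // []
        // Step 4: a violation like this is the cue for both teams to talk.
        System.out.println(verify(WEATHER_CONTRACT, RENAMED_PROVIDER)); // [/forecast/hamburg is missing 'summary']
    }
}
```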

If your organization adopts a microservices approach, CDC tests are a big step towards establishing autonomous teams. CDC tests are an automated way to foster team communication. They ensure that the interfaces between teams are working at any time. A failing CDC test is a good reason to walk over to the affected team, chat about upcoming API changes and figure out how to move forward.

A naive implementation of CDC tests can be as simple as firing requests against an API and checking that the responses contain everything you need. You then package these tests as an executable (.gem, .jar, .sh) and upload it somewhere the other team can fetch it (e.g. an artifact repository like Artifactory).
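A sketch of such a naive check, written as a standalone Java program (Java 11+ for `java.net.http`) that the consuming team could package as a `.jar` and the providing team could run against a deployed instance. The `/forecast/hamburg` endpoint and the required `summary` and `temperature` keys are hypothetical, and the check is a plain substring search rather than real JSON parsing, which is exactly what makes it naive.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical naive CDC check, run by the provider team with the provider's base URL.
class NaiveCdcCheck {

    // The fields our consumer reads from the response (hypothetical contract).
    static final List<String> REQUIRED_KEYS = Arrays.asList("summary", "temperature");

    // Returns the required JSON keys that do not appear in the body.
    // A plain text search is deliberately naive; a real check would parse the JSON.
    static List<String> missingKeys(String body, List<String> requiredKeys) {
        List<String> missing = new ArrayList<>();
        for (String key : requiredKeys) {
            if (!body.contains("\"" + key + "\"")) {
                missing.add(key);
            }
        }
        return missing;
    }

    public static void main(String[] args) throws Exception {
        if (args.length != 1) {
            System.err.println("usage: NaiveCdcCheck <provider-base-url>");
            return;
        }
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(URI.create(args[0] + "/forecast/hamburg")).GET().build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());

        List<String> missing = missingKeys(response.body(), REQUIRED_KEYS);
        if (response.statusCode() != 200 || !missing.isEmpty()) {
            System.err.println("Contract broken: status=" + response.statusCode() + ", missing=" + missing);
            System.exit(1); // a non-zero exit code fails the provider's pipeline step
        }
        System.out.println("Contract satisfied");
    }
}
```

Packaged as a jar, it would be run with the provider's base URL as its only argument; the non-zero exit code on a broken contract is what makes the provider's pipeline step fail.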

In recent years, the CDC approach has become more popular and several tools have been created to simplify the writing and exchange of tests.

Pact is probably the most prominent of these tools. It offers a sophisticated approach to writing tests for the consumer and the provider side, gives you stubs for separate services out of the box and lets you exchange CDC tests with other teams. Pact has been ported to many platforms and can be used with JVM languages, Ruby, .NET, JavaScript and many more.

Pact is a sensible choice for getting started with CDC. The documentation can be overwhelming at first, but be patient and work through it. It helps you build a firm understanding of CDC, which in turn makes it easier to advocate CDC when working with other teams.

Consumer-driven contract tests can be a real game changer for establishing autonomous teams that can move fast and with confidence. Do yourself a favor: read up on the concept and give it a try. A solid suite of CDC tests is invaluable for moving fast without breaking other services and frustrating other teams.

Consumer test (our team)

Our microservice consumes the weather API. So it's our responsibility to write a consumer test that defines our expectations for the contract (the API) between our microservice and the weather service.

First, we include a library for writing Pact consumer tests in our `build.gradle`:

```groovy
testCompile('au.com.dius:pact-jvm-consumer-junit_2.11:3.5.5')
```

Thanks to this library, we can implement a consumer test and use Pact's mock services:

```java
@RunWith(SpringRunner.class)
@SpringBootTest
public class WeatherClientConsumerTest {

    @Autowired
    private WeatherClient weatherClient;

    // Pact mock server that stands in for the weather provider on localhost:8089
    @Rule
    public PactProviderRuleMk2 weatherProvider =
            new PactProviderRuleMk2("weather_provider", "localhost", 8089, this);

    // defines the interaction (request and expected response) that ends up in the pact file
    @Pact(consumer="test_consumer")
    public RequestResponsePact createPact(PactDslWithProvider builder) throws IOException {
        return builder
                .given("weather forecast data")
                .uponReceiving("a request for a weather request for Hamburg")
                    .path("/some-test-api-key/53.5511,9.9937")
                    .method("GET")
                .willRespondWith()
                    .status(200)
                    .body(FileLoader.read("classpath:weatherApiResponse.json"),
                            ContentType.APPLICATION_JSON)
                .toPact();
    }

    @Test
    @PactVerification("weather_provider")
    public void shouldFetchWeatherInformation() throws Exception {
        Optional<WeatherResponse> weatherResponse = weatherClient.fetchWeather();
        assertThat(weatherResponse.isPresent(), is(true));
        assertThat(weatherResponse.get().getSummary(), is("Rain"));
    }
}
```

If you look closely, you'll see that `WeatherClientConsumerTest` is very similar to `WeatherClientIntegrationTest`. Instead of WireMock, this time we use Pact for the server stub. The consumer test works exactly like the integration test: we replace the real third-party server with a stub, define the expected response and check that our client can parse it correctly. In this sense, `WeatherClientConsumerTest` is a narrow integration test itself. The advantage over the WireMock-based test is that this test generates a pact file (found in `target/pacts/<pact-name>.json`) every time it runs. This pact file describes our expectations for the contract in a special JSON format. It can then be used to verify that our stub server behaves like the real server. We can hand the pact file to the team providing the interface. They take it and write a provider test using the expectations defined in there. This way they check whether their API fulfills all our expectations.

This is where the consumer-driven part of CDC comes from. The consumer drives the implementation of the interface by describing their expectations. The provider has to make sure that it fulfills all of them, and that's it. No superfluous specification, YAGNI and all that.

Getting the pact file to the providing team can happen in several ways. A simple one is to check it into version control and tell the providing team to always fetch the latest version of the pact file. A more advanced one is to use an artifact repository, a service like Amazon S3, or the Pact Broker. Start simple and grow as you need.

In a real-world application you don't need both an integration test and a consumer test for a client class. The sample codebase contains both to show you how to use either one. If you want to write CDC tests using Pact, I recommend sticking with the latter. The advantage is that you automatically get a pact file with contract expectations that other teams can use to easily implement their provider tests. Of course, this only makes sense if you can convince the other team to use Pact as well. If that doesn't work, an integration test with WireMock is a decent alternative.

Provider test (other team)

Provider tests have to be implemented by the people providing the weather API. We're consuming a public API provided by darksky.net. In theory, the darksky team would implement a provider test on their end to check that they're not breaking the contract between their application and our service.

Obviously they don't care about our modest sample application and won't implement a CDC test for us. That's the big difference between a public-facing API and an organization adopting microservices. A public-facing API can't take the needs of every single consumer into account, or it would become impossible to evolve. Within your own organization, you can and should. Your app will most likely serve a handful, maybe a couple of dozen consumers at most. Nothing stops you from writing provider tests for these interfaces to keep the system stable.

The providing team gets the pact file and runs it against their providing service. To do so, they implement a provider test that reads the pact file, stubs out some test data and runs the expectations defined in the pact file against their service.
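What such a provider test does can be sketched without Pact. In this minimal sketch each recorded interaction names a provider state; the provider registers a setup callback per state name (playing the role of Pact's `@State` methods), and the verifier replays every interaction against the provider's endpoint and compares status and body. The `Interaction` shape, the `ForecastService` stand-in and the `/forecast/hamburg` endpoint are hypothetical and are neither Pact's file format nor its API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ProviderVerificationSketch {

    // One interaction as recorded by a consumer (hypothetical shape, not Pact's file format).
    static class Interaction {
        final String providerState;
        final String path;
        final int expectedStatus;
        final String expectedBodyFragment;

        Interaction(String providerState, String path, int expectedStatus, String expectedBodyFragment) {
            this.providerState = providerState;
            this.path = path;
            this.expectedStatus = expectedStatus;
            this.expectedBodyFragment = expectedBodyFragment;
        }
    }

    static class Response {
        final int status;
        final String body;

        Response(int status, String body) {
            this.status = status;
            this.body = body;
        }
    }

    // Hypothetical downstream dependency of the provider; each state stubs it differently.
    static class ForecastService {
        String summary;
    }

    // Hypothetical provider endpoint.
    static Response handle(ForecastService service, String path) {
        if (!path.startsWith("/forecast/") || service.summary == null) {
            return new Response(404, "{}");
        }
        return new Response(200, "{\"summary\":\"" + service.summary + "\"}");
    }

    // Replays each interaction: set up its named state, call the endpoint, compare.
    static List<String> verify(List<Interaction> interactions, Map<String, Runnable> stateSetups,
                               ForecastService service) {
        List<String> failures = new ArrayList<>();
        for (Interaction interaction : interactions) {
            Runnable setup = stateSetups.get(interaction.providerState);
            if (setup == null) {
                failures.add("no setup for provider state '" + interaction.providerState + "'");
                continue;
            }
            setup.run();
            Response response = handle(service, interaction.path);
            if (response.status != interaction.expectedStatus
                    || !response.body.contains(interaction.expectedBodyFragment)) {
                failures.add(interaction.path + " returned " + response.status + " " + response.body);
            }
        }
        return failures;
    }

    // Verifies the consumer's interaction against a provider that knows one state name.
    static List<String> verifyWithState(String knownStateName) {
        ForecastService service = new ForecastService();
        Map<String, Runnable> stateSetups = new HashMap<>();
        // Comparable to a method annotated with @State("...") in a Pact provider test.
        stateSetups.put(knownStateName, () -> service.summary = "Rain");
        List<Interaction> pact = Arrays.asList(
                new Interaction("weather forecast data", "/forecast/hamburg", 200, "\"summary\":\"Rain\""));
        return verify(pact, stateSetups, service);
    }

    public static void main(String[] args) {
        System.out.println(verifyWithState("weather forecast data")); // []
        System.out.println(verifyWithState("forecast data")); // [no setup for provider state 'weather forecast data']
    }
}
```

The second call in `main` shows why the state names matter: if the provider registers its setup under a different name than the one the consumer used in `given(...)`, verification fails before any request is made.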

The Pact community has written several libraries for implementing provider tests. The main GitHub repository gives a good overview of the available consumer and provider libraries. Pick the one that best matches your tech stack.

For simplicity, let's assume the darksky API is implemented in Spring Boot as well. In this case, they could use the Spring Pact provider library, which hooks nicely into Spring's MockMvc mechanisms. A hypothetical provider test that the darksky.net team could implement looks like this:

```java
@RunWith(RestPactRunner.class)
@Provider("weather_provider") // same as the "provider_name" in our clientConsumerTest
@PactFolder("target/pacts") // tells pact where to load the pact files from
public class WeatherProviderTest {
    @InjectMocks
    private ForecastController forecastController = new ForecastController();

    @Mock
    private ForecastService forecastService;

    @TestTarget
    public final MockMvcTarget target = new MockMvcTarget();

    @Before
    public void before() {
        initMocks(this);
        target.setControllers(forecastController);
    }

    @State("weather forecast data") // same as the "given()" in our clientConsumerTest
    public void weatherForecastData() {
        // weatherForecast(...) is a test-data helper that is not shown here
        when(forecastService.fetchForecastFor(any(String.class), any(String.class)))
                .thenReturn(weatherForecast("Rain"));
    }
}
```

As you can see, all the provider test has to do is load a pact file (e.g. by using the `@PactFolder` annotation to load previously downloaded pact files) and then define how test data for predefined states should be provided (e.g. using Mockito mocks). There's no custom test to implement; everything is derived from the pact file. It's important that the provider test has counterparts matching the provider name and state declared in the consumer test.

Provider test (our team)

We've seen how to test the contract between our service and the weather provider. With this interface, our service acts as the consumer and the weather service acts as the provider. Thinking a bit further, we'll see that our service also acts as a provider for others: we provide a REST API with a couple of endpoints that other consumers use.

Since we've just learned how valuable contract tests are, we'll of course write a contract test for this contract, too. Luckily, our contracts are consumer-driven, so all the consuming teams send us their pact files, which we can use to implement the provider tests for our REST API.

First, we add the Pact provider library for Spring to our project:

```groovy
testCompile('au.com.dius:pact-jvm-provider-spring_2.12:3.5.5')
```

Implementing the provider test follows the same pattern as described before. For the sake of simplicity, I check the pact file from our simple consumer into our service's repository. That's easier for our purpose; in a real-life scenario you'll probably need a more sophisticated mechanism to distribute your pact files.

```java
@RunWith(RestPactRunner.class)
@Provider("person_provider") // same as in the "provider_name" part in our pact file
@PactFolder("target/pacts") // tells pact where to load the pact files from
public class ExampleProviderTest {

    @Mock
    private PersonRepository personRepository;

    @Mock
    private WeatherClient weatherClient;

    private ExampleController exampleController;

    @TestTarget
    public final MockMvcTarget target = new MockMvcTarget();

    @Before
    public void before() {
        initMocks(this);
        exampleController = new ExampleController(personRepository, weatherClient);
        target.setControllers(exampleController);
    }

    @State("person data") // same as the "given()" part in our consumer test
    public void personData() {
        Person peterPan = new Person("Peter", "Pan");
        when(personRepository.findByLastName("Pan")).thenReturn(Optional.of(peterPan));
    }
}
```

The `ExampleProviderTest` only needs to provide state according to the pact file we're given; that's it. When we run the provider test, Pact picks up the pact file and fires requests against our service, which then responds according to the state we've set up.

UI tests

Most applications have some sort of user interface. Typically we're talking about a web interface in the context of web applications. People often forget that a REST API or a command line interface is as much of a user interface as a fancy web UI.

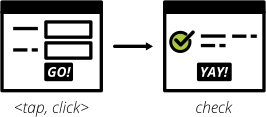

UI tests check that the user interface of your application works correctly. User input should trigger the right actions, data should be presented to the user, and the UI state should change as expected.

UI tests and end-to-end tests are sometimes said to be the same thing (as Mike Cohn does). For me, this conflates two concepts that are fairly orthogonal.

Yes, testing the application from start to finish often means passing through the user interface. But the opposite is not true.

Testing your user interface doesn't have to be done end-to-end. Depending on the technology you use, testing your UI can be as simple as writing some unit tests for your JavaScript frontend with the backend stubbed out.
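The paragraph talks about JavaScript frontends; to keep all examples in one language, here is the same idea as a Java sketch. A hypothetical `GreetingViewModel` holds the state a view would render, user actions are plain method calls, and the backend is an interface that the test stubs with a lambda, so no server or browser is involved. The greeting texts mirror the sample service's `ExampleController`; everything else is assumed for illustration.

```java
import java.util.Optional;

class UiUnitTestSketch {

    // The backend as the UI sees it; in a test it is stubbed instead of calling a real server.
    interface GreetingBackend {
        Optional<String> firstNameFor(String lastName);
    }

    // Hypothetical presentation model: holds the state the view renders.
    static class GreetingViewModel {
        private final GreetingBackend backend;
        String inputText = "";
        String message = "";
        boolean submitEnabled = false;

        GreetingViewModel(GreetingBackend backend) {
            this.backend = backend;
        }

        // The user types into the input field.
        void onInput(String text) {
            inputText = text;
            submitEnabled = !text.trim().isEmpty();
        }

        // The user clicks the "greet" button.
        void onSubmit() {
            if (!submitEnabled) {
                return;
            }
            String lastName = inputText.trim();
            message = backend.firstNameFor(lastName)
                    .map(firstName -> "Hello " + firstName + " " + lastName + "!")
                    .orElse("Who is this '" + lastName + "' you're talking about?");
        }
    }

    // A view model wired to a stubbed backend that knows exactly one person.
    static GreetingViewModel withStubbedBackend() {
        return new GreetingViewModel(last -> "Pan".equals(last) ? Optional.of("Peter") : Optional.empty());
    }

    public static void main(String[] args) {
        GreetingViewModel viewModel = withStubbedBackend();
        viewModel.onInput("   ");
        System.out.println(viewModel.submitEnabled); // false
        viewModel.onInput("Pan");
        viewModel.onSubmit();
        System.out.println(viewModel.message); // Hello Peter Pan!
    }
}
```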

Traditional web applications can be tested through their UI with tools like Selenium. If you consider a REST API to be your user interface, proper integration tests around your API should give you everything you need.

In web interfaces, it is advisable to check several aspects of the UI, including behavior, layout, usability, adherence to corporate identity, etc.

Fortunately, testing the behavior of your UI is quite simple. You click here, enter data there and check that the state of the UI changes accordingly. Modern single-page application frameworks (React, Vue.js, Angular and the like) often come with their own tools and helpers that let you thoroughly test these interactions at a fairly low level (in unit tests). Even if you roll your own frontend implementation in vanilla JavaScript, you can use regular testing tools like Jasmine or Mocha. For a more traditional, server-side rendered application, Selenium-based tests will be your best choice.

The integrity of the web application layout is a little more difficult to verify. Depending on the application and user needs, it may be necessary to make sure that code changes do not accidentally violate the layout of the site.

But computers do not do well with checking that everything “looks fine” (perhaps in the future some kind of clever machine learning algorithm will change this).

Some tools try to check a web application's design automatically in your build pipeline. Most of them use Selenium to open your web application in different browsers and screen sizes, take screenshots and compare them with previously taken screenshots. If the old and new screenshots differ in an unexpected way, the tool raises a signal.
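At the core of these tools is an image comparison. Here is a minimal sketch using `java.awt.image.BufferedImage` from the JDK: count the pixels that differ between a baseline and a new screenshot and signal a change when the differing fraction exceeds a tolerance. Capturing the screenshots and loading them (for example with `javax.imageio.ImageIO.read`) is left out, the synthetic test pages are hypothetical, and the tolerance is an assumption you would tune; real tools such as Galen or jlineup do considerably more than this.

```java
import java.awt.image.BufferedImage;

class ScreenshotDiffSketch {

    // Fraction of pixels (0.0 to 1.0) that differ between two screenshots.
    // Screenshots with different dimensions count as a complete mismatch.
    static double diffRatio(BufferedImage before, BufferedImage after) {
        if (before.getWidth() != after.getWidth() || before.getHeight() != after.getHeight()) {
            return 1.0;
        }
        long differing = 0;
        for (int y = 0; y < before.getHeight(); y++) {
            for (int x = 0; x < before.getWidth(); x++) {
                if (before.getRGB(x, y) != after.getRGB(x, y)) {
                    differing++;
                }
            }
        }
        return (double) differing / ((long) before.getWidth() * before.getHeight());
    }

    // Signals a layout change when more than 'tolerance' of the pixels changed.
    static boolean layoutChanged(BufferedImage before, BufferedImage after, double tolerance) {
        return diffRatio(before, after) > tolerance;
    }

    // Hypothetical test page: white background with a black square at (blockX, blockY).
    static BufferedImage syntheticPage(int width, int height, int blockX, int blockY, int blockSize) {
        BufferedImage image = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                boolean inBlock = x >= blockX && x < blockX + blockSize
                        && y >= blockY && y < blockY + blockSize;
                image.setRGB(x, y, inBlock ? 0x000000 : 0xFFFFFF);
            }
        }
        return image;
    }

    public static void main(String[] args) {
        BufferedImage baseline = syntheticPage(100, 100, 10, 10, 20);
        BufferedImage shifted = syntheticPage(100, 100, 30, 10, 20); // the block moved 20 px to the right
        System.out.println(diffRatio(baseline, shifted)); // 0.08
        System.out.println(layoutChanged(baseline, shifted, 0.01)); // true
    }
}
```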

Galen is one of these tools. Some teams use lineup and its Java-based sibling jlineup to achieve a similar result. Both tools take the same Selenium-based approach.

As soon as you want to test for usability and a pleasing design, you leave the realm of automated testing. This is where you have to rely on exploratory testing and usability testing (which can be as simple as hallway testing with random people). You'll have to show your product to users and see whether they like using it and can use all features without getting frustrated or annoyed.

End-to-end tests

Testing your deployed application through its user interface is the most end-to-end way you can test it. The WebDriver-driven UI tests described above are a good example of end-to-end tests.

Fig. 11. End-to-end tests verify a fully integrated system as a whole.

End-to-end tests (also called broad stack tests) give you the most confidence about whether your software works or not. Selenium and the WebDriver protocol let you automate your tests by driving a (headless) browser against your deployed services, performing clicks, entering data and checking the state of your UI. You can use Selenium directly or use tools built on top of it, such as Nightwatch.

End-to-end tests come with their own kind of problems. They are notoriously flaky and often fail for unexpected and unforeseeable reasons. Quite often these failures are false positives. The more sophisticated your UI, the flakier the tests tend to become. Browser quirks, timing issues, animations and unexpected pop-up dialogs are only some of the reasons I've spent more time debugging than I'd like to admit.
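One common remedy for timing issues is to stop sleeping for a fixed amount of time and instead poll for the condition you actually care about, with a timeout. Selenium ships explicit waits for this (`WebDriverWait`); the following library-free sketch only shows the underlying idea. `appearsAfter` is a hypothetical stand-in for "the element is visible now".

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

class ExplicitWaitSketch {

    // Polls 'condition' every 'pollInterval' until it is true or 'timeout' elapses.
    // Returns true if the condition became true in time.
    static boolean waitUntil(BooleanSupplier condition, Duration timeout, Duration pollInterval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() - deadline >= 0) {
                return false;
            }
            try {
                Thread.sleep(pollInterval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
    }

    // Simulates an element that only "appears" some time after the page has loaded.
    static BooleanSupplier appearsAfter(Duration delay) {
        long visibleAt = System.nanoTime() + delay.toNanos();
        return () -> System.nanoTime() - visibleAt >= 0;
    }

    public static void main(String[] args) {
        // The element shows up after 200 ms; we are willing to wait up to 2 s.
        System.out.println(waitUntil(appearsAfter(Duration.ofMillis(200)),
                Duration.ofSeconds(2), Duration.ofMillis(50))); // true
        // The element shows up after 5 s; we give up after 300 ms.
        System.out.println(waitUntil(appearsAfter(Duration.ofSeconds(5)),
                Duration.ofMillis(300), Duration.ofMillis(50))); // false
    }
}
```

A test written this way finishes as soon as the UI is ready and only fails when the condition really never holds, instead of depending on a guessed sleep duration.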

In the world of microservices it is also unclear who is responsible for writing these tests. Since they cover several services (the entire system), there is no one specific team responsible for writing end-to-end tests.

If you have a centralized quality assurance team, they look like a good fit. Then again, having a centralized QA team is strongly discouraged and shouldn't have a place in a DevOps world where your teams are meant to be truly cross-functional. There's no easy answer to who should own end-to-end tests. Maybe your organization has a community of practice or a quality guild that can take care of them. Finding the right answer depends heavily on your organization.

Furthermore, end-to-end tests require a lot of maintenance and run pretty slowly. In a landscape with lots of microservices, you often can't even run your end-to-end tests locally, because that would require starting all your microservices locally as well. Good luck spinning up hundreds of applications on your development machine without running out of RAM.

Due to high maintenance costs, the number of end-to-end tests should be kept to an absolute minimum.

Think about the most valuable interactions users have with your application. Come up with the core user journeys through your product and automate the most important steps of these journeys in end-to-end tests.

If you're building an online store, your most valuable journey could be a user searching for a product, putting it in the shopping basket and placing an order. That's it. As long as this journey still works, you shouldn't be in too much trouble. Maybe you'll find one or two more crucial journeys worth turning into end-to-end tests. Anything beyond that is likely to be more painful than helpful.

Remember: your test pyramid has lots of lower-level tests where you've already covered all sorts of edge cases and integrations with other parts of the system. There's no need to repeat these tests at a higher level. High maintenance effort and lots of false positives will slow you down and, sooner or later, make you lose trust in your tests altogether.

UI end-to-end tests

For end-to-end tests, many developers choose Selenium and the WebDriver protocol. With Selenium, you can pick a browser you like and let it automatically call your website, click here and there, enter data and check that things change in the UI.

Selenium needs a browser that it can start and use for running its tests. There are multiple so-called "drivers" for different browsers. Pick one (or several) and add it to your `build.gradle`. Whatever browser you choose, you need to make sure that all developers on your team and your CI server have the correct version of the browser installed. Keeping this in sync can be pretty painful. For Java, there's a small library called webdrivermanager that automates downloading and setting up the correct version of the browser driver. Add these two dependencies to your `build.gradle`:

```groovy
testCompile('org.seleniumhq.selenium:selenium-chrome-driver:2.53.1')
testCompile('io.github.bonigarcia:webdrivermanager:1.7.2')
```

Running a fully fledged browser in your test suite can be a hassle, especially when the continuous delivery server running your pipeline can't start a browser with a UI (e.g. because no X server is available). In this case, you can run a virtual X server like xvfb.

A more recent approach is to use a headless browser (i.e. a browser without a user interface) to run your WebDriver tests. Until recently, PhantomJS was the most popular choice for browser automation. Since Chromium and Firefox introduced a headless mode out of the box, PhantomJS has suddenly become obsolete. After all, it's better to test your website with a browser your users actually use (like Firefox and Chrome) than with an artificial browser just because it's convenient for you as a developer.

Both headless Firefox and headless Chrome are brand new and not yet widely adopted for WebDriver tests. We want to keep things simple. Instead of fiddling with the new headless modes, let's stick to the classic way of using Selenium with a regular browser. A simple end-to-end test that starts Chrome, navigates to our service and checks the content of the website looks like this:

```java
@RunWith(SpringRunner.class)
@SpringBootTest(webEnvironment = SpringBootTest.WebEnvironment.RANDOM_PORT)
public class HelloE2ESeleniumTest {

    private WebDriver driver;

    @LocalServerPort
    private int port;

    @BeforeClass
    public static void setUpClass() throws Exception {
        // downloads and configures a matching chromedriver binary
        ChromeDriverManager.getInstance().setup();
    }

    @Before
    public void setUp() throws Exception {
        driver = new ChromeDriver();
    }

    @After
    public void tearDown() {
        // quit() ends the WebDriver session and shuts the browser down;
        // close() would only close the current window
        driver.quit();
    }

    @Test
    public void helloPageHasTextHelloWorld() {
        driver.get(String.format("http://127.0.0.1:%s/hello", port));

        assertThat(driver.findElement(By.tagName("body")).getText(), containsString("Hello World!"));
    }
}
```

Note that this test only runs on a machine that has Chrome installed (your local machine, your CI server).

The test is straightforward. It spins up the entire Spring application on a random port using `@SpringBootTest`. We then instantiate a new Chrome WebDriver, tell it to navigate to the `/hello` endpoint of our microservice and check that it prints "Hello World!" in the browser window. Cool stuff!

REST API end-to-end test

Avoiding the graphical user interface when testing your application can be a good way to write tests that are less flaky than full end-to-end tests while still covering a broad part of your application's stack. This comes in handy when testing through the web interface of your application is particularly hard. Maybe you don't even have a web UI and serve a REST API instead (because a single-page application talks to that API somewhere, or simply because you despise everything that's nice and shiny). Either way, a subcutaneous test, which tests everything just beneath the GUI, fits the situation. If you serve a REST API, as we do in our example, this is the right kind of test:

```java
@RestController
public class ExampleController {
    private final PersonRepository personRepository;

    // shortened for clarity

    @GetMapping("/hello/{lastName}")
    public String hello(@PathVariable final String lastName) {
        Optional<Person> foundPerson = personRepository.findByLastName(lastName);

        return foundPerson
                .map(person -> String.format("Hello %s %s!",
                        person.getFirstName(),
                        person.getLastName()))
                .orElse(String.format("Who is this '%s' you're talking about?",
                        lastName));
    }
}
```

Let me show you one more library that comes in handy when testing a service that provides a REST API. REST-assured gives you a nice DSL for firing real HTTP requests against an API and evaluating the responses you receive.

First things first: add the dependency to your `build.gradle`.

```groovy
testCompile('io.rest-assured:rest-assured:3.0.3')
```

With this library at hand, we can implement an end-to-end test for our REST API:

```java
@RunWith(SpringRunner.class)
@SpringBootTest(webEnvironment = SpringBootTest.WebEnvironment.RANDOM_PORT)
public class HelloE2ERestTest {

    @Autowired
    private PersonRepository personRepository;

    @LocalServerPort
    private int port;

    @After
    public void tearDown() throws Exception {
        personRepository.deleteAll();
    }

    @Test
    public void shouldReturnGreeting() throws Exception {
        Person peter = new Person("Peter", "Pan");
        personRepository.save(peter);

        // when() is REST-assured's static entry point (io.restassured.RestAssured.when)
        when()
                .get(String.format("http://localhost:%s/hello/Pan", port))
        .then()
                .statusCode(is(200))
                .body(containsString("Hello Peter Pan!"));
    }
}
```

Again, we start the entire Spring application using `@SpringBootTest`. This time we `@Autowire` the `PersonRepository` so that we can easily write test data to our database. When we now ask the REST API to say "hello" to our friend "Mr Pan", we're greeted nicely. Amazing! And more than enough for an end-to-end test if you don't even have a web interface.

Acceptance tests - do your features work correctly?

The higher you move up in your test pyramid, the more likely you are to ask whether your features work correctly from the user's point of view. You can treat your application as a black box and shift the focus of your tests from

when I enter x and y, the return value should be z

to:

given there is a logged-in user

and there is a “bicycle” product

when the user goes to the “bicycle” product description page

and presses the “Add to basket” button,

then the “bicycle” product should be in his basket

Sometimes you'll hear these kinds of tests called functional tests or acceptance tests. Some people will tell you that functional and acceptance tests are different things. Sometimes the terms are conflated. Sometimes people argue endlessly about wording and definitions. Often these discussions only add to the confusion.

Here's the thing: at some point you should make sure that your software works correctly from your user's point of view, not just from a technical point of view. What you call these tests is really not that important. Having these tests, however, is. Pick a term, stick to it, and write those tests.

This is also the moment to mention BDD (behavior-driven development) and the tools that support it. BDD and a BDD-style way of writing tests can be a nice trick to shift your mindset from implementation details towards the users' needs. Don't be afraid to give it a try.

You don't even need to adopt full-blown BDD tools like Cucumber (though you can). Some assertion libraries (like chai.js) let you write assertions with `should`-style keywords that make your tests read more BDD-like. And even if you don't use a library that provides this notation, clever and well-factored code will let you write tests that focus on user behavior. A few helper methods or functions can get you a long way:

```python
# a sample acceptance test in Python
# (a_user_with_empty_basket, article and article_page are helpers
#  assumed to exist elsewhere in the test suite)
def test_add_to_basket():
    # given
    user = a_user_with_empty_basket()
    user.login()
    bicycle = article(name="bicycle", price=100)

    # when
    article_page.add_to_basket(bicycle)

    # then
    assert user.basket.contains(bicycle)
```

Acceptance tests can come in different levels of granularity. Most of the time they will be rather high-level and test your service through the user interface. However, it's good to understand that there's technically no need to write acceptance tests at the highest level of your test pyramid. If your application design and the scenario at hand allow you to write an acceptance test at a lower level, go for it. Having a low-level test is better than having a high-level test. The concept of acceptance tests (proving that your features work correctly for the user) is completely orthogonal to your test pyramid.
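Here is the same scenario in Java, pushed down to the service level as the paragraph suggests. This is a minimal sketch with a hypothetical in-memory domain (`User`, `Article`, `ShopService`); the point is the shape of the test: helper methods such as `aLoggedInUserWithEmptyBasket()` keep the given/when/then steps in the language of the user story, and no UI is involved.

```java
import java.util.ArrayList;
import java.util.List;

class BasketAcceptanceSketch {

    // Minimal hypothetical domain, just enough to express the scenario.
    static class Article {
        final String name;
        final int price;

        Article(String name, int price) {
            this.name = name;
            this.price = price;
        }
    }

    static class User {
        final List<Article> basket = new ArrayList<>();
        boolean loggedIn;

        boolean basketContains(Article article) {
            return basket.contains(article);
        }
    }

    static class ShopService {
        void addToBasket(User user, Article article) {
            if (!user.loggedIn) {
                throw new IllegalStateException("only logged-in users can add to the basket");
            }
            user.basket.add(article);
        }
    }

    // Helpers that keep the test in the language of the scenario.
    static User aLoggedInUserWithEmptyBasket() {
        User user = new User();
        user.loggedIn = true;
        return user;
    }

    static Article article(String name, int price) {
        return new Article(name, price);
    }

    // Acceptance test for "add to basket", written against the service instead of the UI.
    static boolean addToBasketScenario() {
        // given a logged-in user and a "bicycle" article
        User user = aLoggedInUserWithEmptyBasket();
        Article bicycle = article("bicycle", 100);

        // when the user adds the bicycle to the basket
        new ShopService().addToBasket(user, bicycle);

        // then the bicycle is in the user's basket
        return user.basketContains(bicycle);
    }

    public static void main(String[] args) {
        System.out.println(addToBasketScenario()); // true
    }
}
```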

Exploratory testing

Even the most diligent test automation efforts are not perfect. Sometimes you miss certain edge cases in your automated tests. Sometimes it's simply impossible to detect a particular bug by writing a unit test. Certain quality issues don't even become apparent within your automated tests (think about design or usability). Despite your best intentions regarding test automation, manual testing is still irreplaceable in some respects.

Fig. 12. Exploratory testing will spot quality issues that your build pipeline didn't notice.

Include exploratory testing in your testing approach. It is a manual testing method that emphasizes the tester's freedom and creativity to spot quality issues in a running system. Simply set aside some time in your schedule, roll up your sleeves and try to make the application fail. Adopt a destructive mindset and come up with ways to provoke problems and errors in the program. Document everything you find. Look out for bugs, design issues, slow response times, missing or misleading error messages, and anything else that would annoy you as a user.

The good news is that you can easily automate tests for most of your findings. Writing automated tests for the bugs you find ensures that those bugs won't regress in the future. It also helps you narrow down the root cause of the problem while fixing it.
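As a hedged illustration, suppose an exploratory session revealed two problems in a hypothetical `parse_quantity` helper of an order form: a negative quantity such as "-2" was accepted, and typing "abc" showed Python's raw error text to the user. After fixing the function, each finding is pinned down by a small test using only the standard `unittest` module. The function, the messages and the scenario are invented for the example.

```python
import unittest


def parse_quantity(raw: str) -> int:
    """Hypothetical input parser for an order form.

    In this made-up scenario, exploratory testing showed that "-2" was
    accepted and "abc" surfaced Python's raw error text. The fixed version
    rejects both with a clear message.
    """
    try:
        quantity = int(raw)
    except ValueError:
        raise ValueError(f"Quantity must be a whole number, got {raw!r}") from None
    if quantity < 1:
        raise ValueError(f"Quantity must be at least 1, got {quantity}")
    return quantity


class ParseQuantityRegressionTest(unittest.TestCase):
    """Regression tests for the findings of the exploratory session."""

    def test_rejects_negative_quantity(self):
        with self.assertRaises(ValueError):
            parse_quantity("-2")

    def test_reports_readable_message_for_non_numbers(self):
        with self.assertRaisesRegex(ValueError, "whole number"):
            parse_quantity("abc")

    def test_still_accepts_valid_quantity(self):
        self.assertEqual(parse_quantity("3"), 3)
```

Saved as, say, `test_quantity.py`, the tests run with `python -m unittest test_quantity`. The third test is there on purpose: a regression test should also confirm the fix didn't break the normal path.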

During exploratory testing you will spot problems that slipped through your build pipeline unnoticed. Don't be frustrated. This is great feedback for improving your pipeline. As with any feedback, make sure to act on it: think about what you can do to avoid this kind of problem in the future. Maybe you're missing a certain set of automated tests. Maybe you were sloppy with your automated tests this time and need to test more thoroughly from now on. Maybe there is a shiny new tool or approach you could use in your pipeline to avoid such problems. Make sure to act on it, so that your pipeline and your entire software delivery process get better with each step.

Confusion with terminology in testing

Talking about different test classifications is always difficult. What I mean by unit tests may be slightly different from your understanding. With integration tests it's even worse. For some people, integration testing is a very broad activity that tests many different parts of the entire system. For me, it's a rather narrow thing: testing the integration with only one external part at a time. Some call these integration tests, some call them component tests, others prefer the term service test. Some will even argue that these are three completely different things. There is no right or wrong definition; the software development community simply hasn't settled on well-defined testing terms.

Don't get hung up on ambiguous terms. It doesn't matter whether you call it an end-to-end test, a broad-stack test or a functional test. It doesn't matter if your integration tests mean something different to you than to the people at another company. Yes, it would be really nice if our industry could define these terms clearly and everyone stuck to them. Unfortunately, that hasn't happened yet. And since there are many nuances in testing, we are really dealing with a spectrum of tests rather than a set of discrete buckets, which makes consistent terminology even harder.

The important takeaway is that you should find terms that work for you and your team. Be clear about the different types of tests you want to write. Agree on the naming within your team and reach a consensus on the scope of each type of test. If you use these terms consistently within your team (or even across your organization), that's all you need to care about. Simon Stewart summed this up nicely when he described the approach used at Google. I think it is a great demonstration of why you shouldn't get too hung up on names and naming conventions.

Deploying tests into the deployment pipeline

If you practice continuous integration or continuous delivery, your deployment pipeline runs automated tests every time you make a change to the software. Usually the pipeline is split into several stages that gradually give you more confidence that your software is ready to be deployed to production. Having heard about all these different kinds of tests, you may be wondering how to place them in your deployment pipeline. To answer this, just think about one of the most fundamental values of continuous delivery (indeed one of the core values of extreme programming and agile development): fast feedback.

A good build pipeline tells you as quickly as possible that something is broken. You don't want to wait a whole hour just to find out that your latest change broke some simple unit tests. If your pipeline is that slow, you could have gone home already by the time the feedback arrives. Fast tests in the early stages of the pipeline should give you feedback within seconds or a few minutes. Conversely, slower tests, usually those with a broader scope, go into later stages so they don't hold back the feedback from the fast ones. As you can see, the stages of your deployment pipeline are defined not by the types of tests but by their speed and scope. So it can be very reasonable to put some of the narrowest and fastest integration tests in the same stage as your unit tests, simply because they give you faster feedback. There is no need to draw a strict line based on the formal type of your tests.
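The idea that stages follow speed and scope rather than test type can be sketched in a few lines. Everything here is hypothetical: the `SuiteInfo` records, their runtimes, the suite names and the 5-minute budget for the first stage are made-up numbers, and a real pipeline would express this in its CI configuration rather than in Python. The function deliberately ignores the formal `kind` of each suite.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuiteInfo:
    name: str
    kind: str        # formal type, e.g. "unit", "integration", "e2e" (ignored below)
    seconds: float   # typical runtime, e.g. measured on the CI server
    narrow: bool     # True if it exercises only one component or one integration


# Hypothetical budget: the first stage should report back within ~5 minutes.
FAST_STAGE_BUDGET = 5 * 60


def plan_stages(suites):
    """Split suites into an early, fast stage and a later, slower stage.

    Placement depends only on speed and scope; `kind` is not consulted.
    """
    fast, slow, used = [], [], 0.0
    # Cheapest suites first, so the early stage packs in as much feedback as possible.
    for suite in sorted(suites, key=lambda s: s.seconds):
        if suite.narrow and used + suite.seconds <= FAST_STAGE_BUDGET:
            fast.append(suite.name)
            used += suite.seconds
        else:
            slow.append(suite.name)
    return {"commit stage": fast, "later stage": slow}


suites = [
    SuiteInfo("price calculation", "unit", 4, True),
    SuiteInfo("basket controller", "unit", 6, True),
    SuiteInfo("weather client vs. stub", "integration", 20, True),
    SuiteInfo("database repository", "integration", 45, True),
    SuiteInfo("checkout through the browser", "e2e", 900, False),
]

print(plan_stages(suites))
```

With these made-up numbers, both narrow integration suites land in the commit stage next to the unit tests, while the browser-based checkout suite goes to the later stage.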

Avoid duplicate tests

There is another trap to avoid: duplicating tests across the different levels of the pyramid. Your gut feeling might say that there is no such thing as too many tests, but let me assure you: there is. Every test in your test suite is additional baggage and doesn't come for free. Writing and maintaining tests takes time. Reading and understanding other people's tests takes time. And, of course, running tests takes time.

As with production code, you should strive for simplicity and avoid duplication. When it comes to implementing your test pyramid, keep two rules of thumb in mind:

- If a higher-level test spots an error and no lower-level test fails, you need to write a lower-level test.

- Push your tests as far down the test pyramid as you can.

The first rule is important because lower-level tests let you narrow down the problem and reproduce the error in isolation. They run faster and are less bloated, which helps when debugging the issue at hand, and they will serve as a good regression test in the future. The second rule is important for keeping your test suite fast. If you have already tested all conditions confidently in lower-level tests, there is no need to keep a higher-level test in your suite: it simply doesn't add any confidence that everything works. Redundant tests become a burden and start to annoy you in your day-to-day work. Your test suite gets slower, and you need to change more tests whenever the behavior of your code changes.

To put it differently: if a higher-level test gives you more confidence that your application works correctly, you should have it. Writing a unit test for a controller class helps you verify the logic inside the controller itself. However, it won't tell you whether the REST endpoint that this controller provides actually responds to HTTP requests. So you move up the test pyramid and add a test that checks exactly that, but nothing more. In that higher-level test you don't test all the conditional logic and edge cases that lower-level unit tests already cover. Make sure the higher-level test focuses only on what the lower-level tests don't cover.
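Here is a small, hypothetical sketch of that division of labour, using only Python's standard library. The `greeting_for` function stands in for the controller logic and gets unit tests for its edge cases; a single higher-level test then starts a real `http.server` on a free port and only checks that the endpoint answers an HTTP request. The function, the `/hello` path and the response format are assumptions made for the example, and for brevity the handler answers every path the same way.

```python
import threading
import unittest
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse


def greeting_for(name):
    """Controller logic: all the conditional behaviour lives here."""
    name = (name or "").strip()
    if not name:
        return "Hello, stranger!"
    return f"Hello, {name.title()}!"


class HelloHandler(BaseHTTPRequestHandler):
    """Thin HTTP layer that only delegates to greeting_for()."""

    def do_GET(self):
        query = parse_qs(urlparse(self.path).query)
        body = greeting_for(query.get("name", [""])[0]).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


class GreetingLogicTest(unittest.TestCase):
    """Lower level: the edge cases of the controller logic."""

    def test_missing_name_greets_stranger(self):
        self.assertEqual(greeting_for(""), "Hello, stranger!")

    def test_blank_name_greets_stranger(self):
        self.assertEqual(greeting_for("   "), "Hello, stranger!")

    def test_name_is_normalized(self):
        self.assertEqual(greeting_for("  ada "), "Hello, Ada!")


class HelloEndpointTest(unittest.TestCase):
    """Higher level: only checks that the endpoint answers HTTP requests."""

    def setUp(self):
        # Port 0 asks the OS for any free port.
        self.server = HTTPServer(("127.0.0.1", 0), HelloHandler)
        threading.Thread(target=self.server.serve_forever, daemon=True).start()

    def tearDown(self):
        self.server.shutdown()
        self.server.server_close()

    def test_endpoint_responds_to_get(self):
        conn = HTTPConnection("127.0.0.1", self.server.server_address[1], timeout=5)
        try:
            conn.request("GET", "/hello?name=ada")
            response = conn.getresponse()
            response.read()
            self.assertEqual(response.status, 200)
        finally:
            conn.close()
```

Note that the endpoint test doesn't repeat the blank-name or normalization cases; those stay at the cheaper unit level.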

I'm rigorous about eliminating tests that provide no value. I delete high-level tests that are already covered at a lower level (given they don't provide extra value), and I replace higher-level tests with lower-level ones where possible. Removing a redundant test is sometimes hard, especially if coming up with it took real effort. But you risk falling for the sunk cost fallacy, so don't hesitate to hit Delete. There is no reason to waste precious time on a test that has stopped being useful.

Write clean code for tests

As with production code, you should take care to write good, clean test code. Here are some more hints for writing maintainable test code before you go ahead and build your automated test suite:

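- Test code is as important as production code. Give it the same level of care and attention. "This is only test code" is not a valid excuse for sloppy code.

- Test one condition per test. This helps you keep your tests short and easy to reason about.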

- "Arrange, act, assert" or "given, when, then" are good mnemonics for keeping your tests well-structured.

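- Readability matters. Don't try to be overly DRY ("don't repeat yourself"). Duplication is okay if it improves readability. Try to find a balance between DRY and DAMP code (DAMP: Descriptive And Meaningful Phrases).

- When in doubt, use the Rule of Three to decide when to refactor. Use before reuse.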
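A short, hypothetical illustration of these hints with Python's `unittest`: each test checks one condition, follows the arrange/act/assert (given/when/then) structure, and one descriptive helper removes noise without hiding what matters. The `Order` type, the `discount_for` rule and the helper name are invented for the example.

```python
import unittest
from dataclasses import dataclass


@dataclass
class Order:
    total: float
    is_first_order: bool


def discount_for(order: Order) -> float:
    """Hypothetical rule: 10% off a first order whose total exceeds 50."""
    if order.is_first_order and order.total > 50:
        return round(order.total * 0.10, 2)
    return 0.0


def a_first_order_worth(total: float) -> Order:
    # Descriptive helper (DAMP): the name says what matters for the test.
    return Order(total=total, is_first_order=True)


class DiscountTest(unittest.TestCase):
    def test_first_order_above_threshold_gets_ten_percent(self):
        # arrange / given
        order = a_first_order_worth(80)
        # act / when
        discount = discount_for(order)
        # assert / then
        self.assertEqual(discount, 8.0)

    def test_first_order_at_threshold_gets_no_discount(self):
        order = a_first_order_worth(50)
        discount = discount_for(order)
        self.assertEqual(discount, 0.0)

    def test_repeat_order_gets_no_discount(self):
        order = Order(total=80, is_first_order=False)
        discount = discount_for(order)
        self.assertEqual(discount, 0.0)
```

The arrange/act/assert lines are repeated across the tests on purpose: each test reads top to bottom on its own, which is the DAMP trade-off described above.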

Conclusion

That's it! I know this was a long and tough read explaining why and how you should test your software. The great news is that this information is fairly timeless and doesn't depend on what kind of software you're building. Whether you're working on microservices, IoT devices, mobile apps or web applications, the lessons from this article apply to all of them.

I hope there's something useful in this article for you. Now go ahead, study the sample code and apply the concepts you've learned to your own test suite. Building a solid test suite takes some effort, but it will pay off in the long run and make your life as a developer more relaxed, trust me.

See also:

"Software testing anti-patterns"

Source: https://habr.com/ru/post/358950/
