How to make mobile search twice as fast. Yandex lecture

On phones, web pages often take longer to load than on desktops. Developer Ivan Khvatov explains the reasons for the lag and how to deal with it. The lecture has three parts: the first covers the main stages of page loading on mobile devices, the second the techniques we use to speed loading up, and the third our method of adapting the layout to different connection speeds.

- Hello everyone, my name is Ivan Khvatov, and I work in search infrastructure. Lately I have been working on speeding up the loading of search results, together with the layout, backend, and traffic delivery teams. Today I will tell you how we sped up mobile search and which techniques we used, both successful and unsuccessful. They are not unique to us, so you may be able to try some of them yourself. I will also talk about our failures, what we learned from them, and how we came to adapt the layout to the connection speed.

If we look at the time to first render of the search results page for desktop and mobile users in the Runet, we see that mobile lags by a factor of 1.5 to 2, depending on the connection speed.


It's no secret that speed directly affects user happiness. People like fast services that let them solve their problems quickly. For some queries, users expect the mobile Internet to work, if not faster, then at least as fast as on the desktop. That is why we have recently been working seriously on optimizing for mobile.

To understand the reasons for the lag, let's look at what makes the mobile web different.

Mobile Internet is almost 100% wireless: cellular or Wi-Fi. Any connection has two key characteristics. The first is latency: the time it takes one packet to travel to the server and back, which is what the ping command measures. The second is bandwidth: how much data the connection can carry per unit of time.

Both characteristics are very poor on the mobile Internet, over Wi-Fi and cellular networks alike. Latency is high, and on some network technologies the bandwidth is very narrow. Even worse, these characteristics are unstable: they depend on how loaded the cell tower is, how far away it is, whether we have just entered a tunnel. The classical algorithms in operating systems were tuned on the assumption that these characteristics are stable, and when they are unstable, connection speed degrades sharply.

The second distinguishing feature is hardware. Phones, despite their rapid progress, still lag behind desktops in processor, memory, and flash storage. Even when resources come from the cache, loading is much slower than on the desktop.

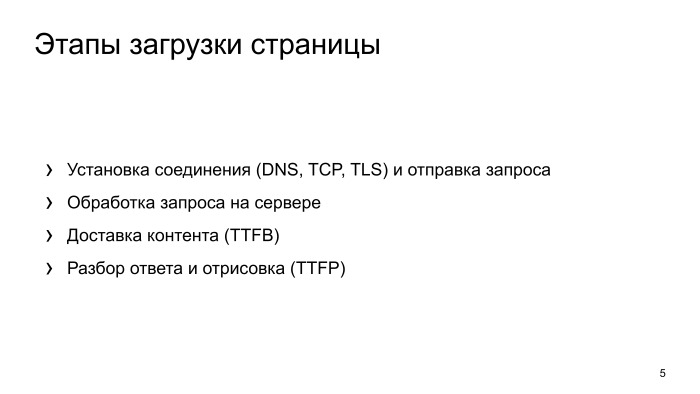

How do these features affect page loading? What happens when the browser makes a request and tries to render the page? First there is network activity: a connection is established. The server receives the request and spends some time processing it. Then the response is delivered. Finally, the browser parses the response and renders the results page.

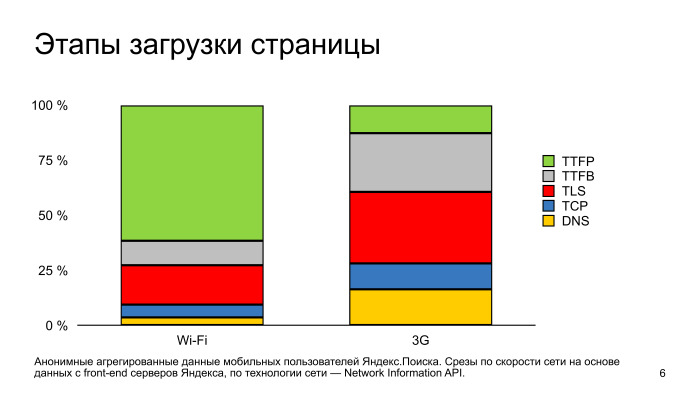

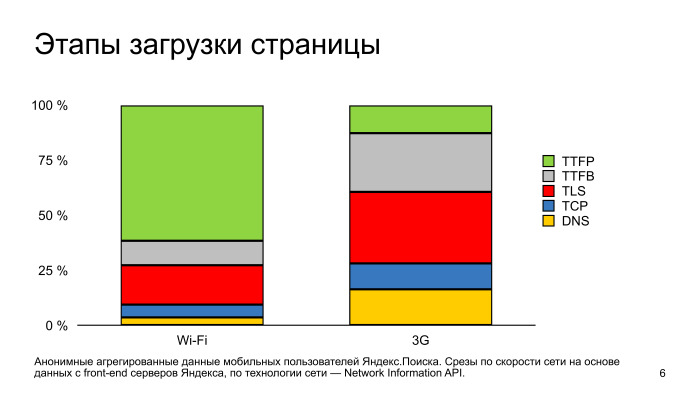

If we compare how long these stages take relative to each other on fast and slow phone connections, we see that on fast networks the bottleneck in displaying the page is parsing and rendering.

I'll note separately that setting up the secure connection also takes significant time; it is shown as the red zone.

On 3G networks, most of the time goes to the network part, to establishing the connection, and the secure connection setup stands out there as well. If we want to optimize for mobile so that users spend less time looking at a white page, we need to focus on connection setup, and on secure connection setup in particular.

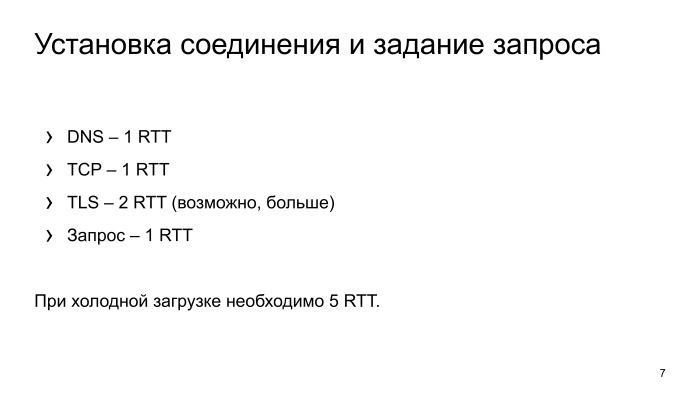

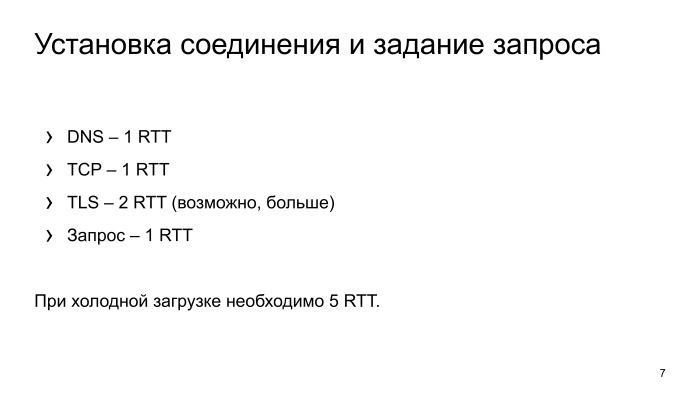

What happens during connection setup? In the worst case, the browser resolves DNS to get an IP address, which is one round trip, makes another round trip to establish a TCP connection, and then establishes a secure connection. That part is usually a bit more complicated: it takes at least two more round trips, which I will cover in more detail later. Only then is the request itself sent.

As a result, just getting the first data takes five round trips. Let's look at how long one round trip takes on the real mobile Internet in Russia.

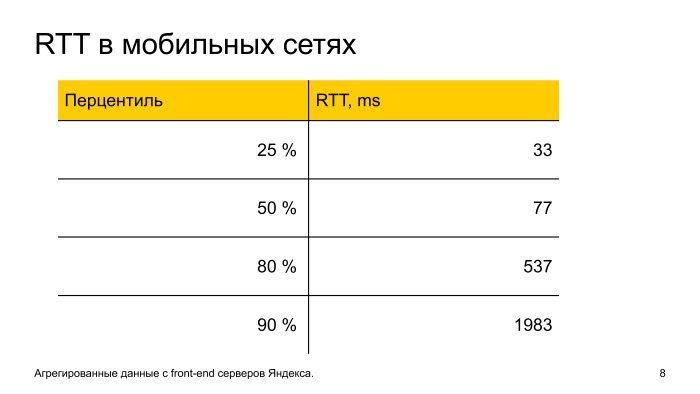

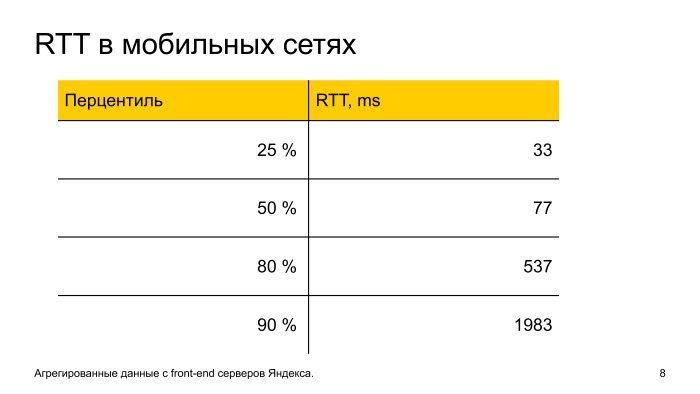

We see that for 50% of Runet users latency is basically fine: at 77 ms per round trip, the user barely notices five of them. But for the other half, latency grows sharply. For the slowest 10%, one round trip takes almost 2 s. That means 10% of Yandex users wait almost 10 seconds just for the connection to be fully established, which is a very long time.
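The arithmetic behind these figures can be sketched in a few lines of Python. The count of five round trips and the two latency values come from the talk; the function name is illustrative, and 2.0 s is used as an upper bound for "almost 2 s".

```python
# Worst case from the talk: DNS + TCP + two TLS round trips + the request itself.
ROUND_TRIPS_BEFORE_FIRST_DATA = 5


def time_to_first_data(round_trip_seconds: float,
                       round_trips: int = ROUND_TRIPS_BEFORE_FIRST_DATA) -> float:
    """Return the network wait before the first response data, in seconds."""
    return round_trip_seconds * round_trips


if __name__ == "__main__":
    # Latency figures quoted in the talk: the median user and the slowest 10%.
    for label, rtt in [("median", 0.077), ("slowest 10%", 2.0)]:
        print(f"{label}: {time_to_first_data(rtt):.3f} s")
```

The model ignores server processing and transfer time, so it is only a lower bound on what the user actually waits.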

Why is there such a tail? Why does latency grow so much?

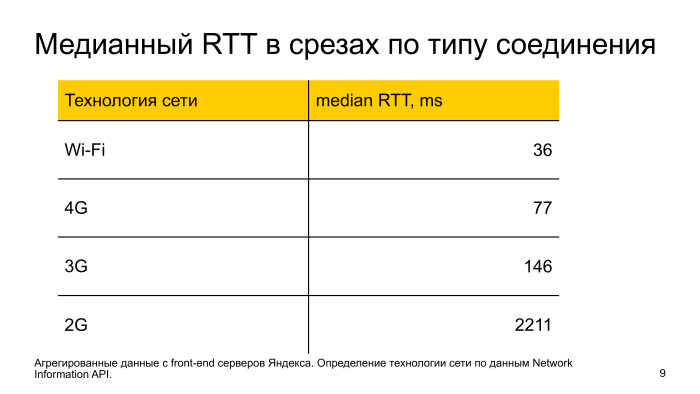

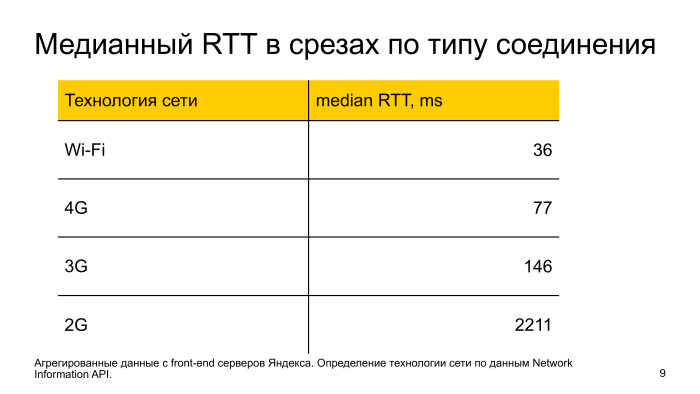

Two reasons. The first is network technology. If we look at median latency by network type for 2G and 3G, taking not the spec-sheet numbers but the real ones we measure, latency is very bad. With Wi-Fi and 4G, things are more or less fine, but there are nuances.

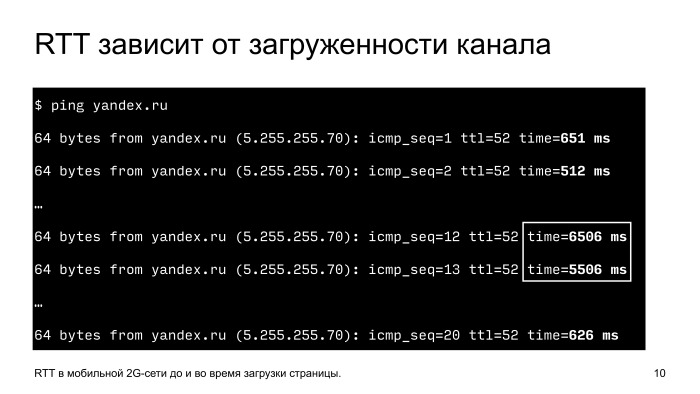

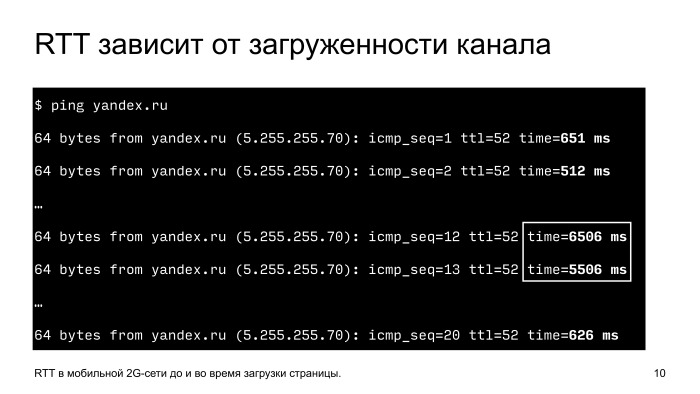

The second is congestion, unstable signal, and physical interference. Here is a simple example: take a phone and measure latency from it to Yandex by running the ping command. In the normal situation we see about 0.5 s per round trip. Then we start loading the channel by simply downloading a page, and latency grows roughly tenfold, up to 6 seconds. Clearly, if we make blocking calls needed for rendering while the channel is this loaded, the user will never wait them out. Five round trips take 25 seconds, and nobody will wait that long.

What can we do about it? Let's optimize.

There are several approaches. The simplest and most effective is not to establish a connection at all. The next important point is redirects: we try to avoid them too. After that we will dig into secure connection setup and what can be done there.

How do you avoid establishing a connection? It seems simple: look at keepalive on the server. But there are nuances. When an application finds itself on a bad network, connections often start breaking on timeouts. Under normal conditions we don't notice this, but on slow networks it becomes a problem: the connection is dropped on a timeout and the browser has to set it up again. So we analyze the timeouts at the application level and configure them correctly.

The second technique is preconnect: set up the connection before it is needed. When the time comes and the user requests a page, the connection is already there and the user does not have to wait.

Preconnect is a standard directive that can be specified directly in HTML, and browsers understand it. Most of them, anyway.
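As a rough illustration, a preconnect hint is a `<link rel="preconnect">` element in the page head, or an equivalent `Link` response header. The sketch below builds both as strings; the helper names and the `static.example.com` origin are made up for the example and do not come from the talk.

```python
from html import escape


def preconnect_tag(origin: str, crossorigin: bool = False) -> str:
    """Build a <link rel="preconnect"> tag for the page <head>."""
    attrs = f'rel="preconnect" href="{escape(origin, quote=True)}"'
    if crossorigin:
        # Needed when the later requests to this origin are made without credentials.
        attrs += " crossorigin"
    return f"<link {attrs}>"


def preconnect_header(origin: str) -> str:
    """Build the value of the equivalent HTTP Link response header."""
    return f"<{origin}>; rel=preconnect"


if __name__ == "__main__":
    origin = "https://static.example.com"  # hypothetical origin
    print(preconnect_tag(origin))
    print("Link:", preconnect_header(origin))
```

It is worth hinting only at origins the page will certainly use soon, since every opened connection costs the client something.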

At Yandex we analyzed how our requests flow and found a service that shows search suggestions. The team realized that at the very start of a session, when there are no established connections yet, its requests go over a cold connection. They changed the architecture slightly so that the service reuses a connection from another service, and the number of impressions of this service grew by almost 5%.

Redirects are a great evil in terms of speed. First the browser goes through all the stages of connection setup while the user waits, and then the server says that the browser has come to the wrong place and should go elsewhere. The browser then sets up a connection all over again, going through the same stages.

What can be done about this? We analyze the logs, see where we issue redirects, and change the links on websites and in applications so that visits always go directly to the right address.

The second thing we saw in our logs was a lot of redirects from HTTP to HTTPS. The user goes to the HTTP address, we redirect to HTTPS, and the user waits again while a secure connection is established.

To prevent this there is HSTS: the server can state once in its response that the site works only over HTTPS, and the next time a request is about to go over HTTP, the browser performs the redirect internally and sends the request straight to HTTPS.
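For illustration, HSTS is switched on with a single `Strict-Transport-Security` response header sent over HTTPS. The helper below is a minimal sketch that builds this header; the function name and the one-year lifetime are assumptions for the example, not values from the talk.

```python
from typing import Tuple


def hsts_header(max_age_seconds: int, include_subdomains: bool = False) -> Tuple[str, str]:
    """Return the (name, value) pair of an HSTS response header."""
    if max_age_seconds < 0:
        raise ValueError("max-age must be non-negative")
    value = f"max-age={max_age_seconds}"
    if include_subdomains:
        # Extends the HTTPS-only policy to every subdomain of the host.
        value += "; includeSubDomains"
    return ("Strict-Transport-Security", value)


if __name__ == "__main__":
    one_year = 365 * 24 * 60 * 60  # illustrative policy lifetime
    name, value = hsts_header(one_year, include_subdomains=True)
    print(f"{name}: {value}")
```

The browser remembers the policy for `max-age` seconds, so the very first visit over HTTP still pays for one redirect.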

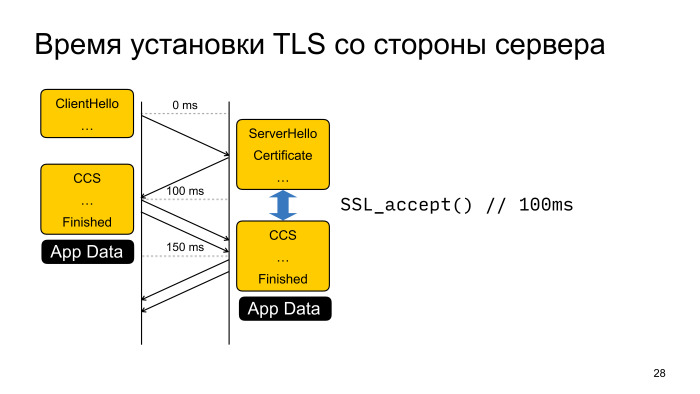

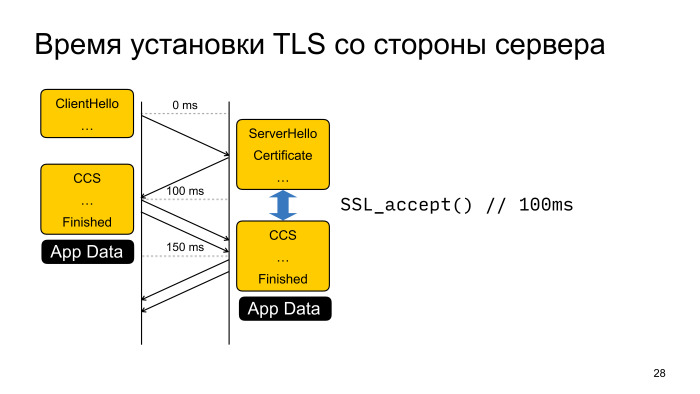

Now TLS, the secure connection setup. Let's consider the scheme in terms of speed rather than security. How is a TLS connection established? In two stages. In the first, the client and server exchange encryption settings: which algorithms they will use. In the second, they exchange more detailed information: the keys and how the data will be encrypted.

Besides the two round trips, the client and server also spend CPU time on the computations. That is slow too.

What can be done here? There is a more or less standard optimization called TLS session tickets. The idea is this: after a connection has gone through all the stages, the server can hand the client a ticket and say, in effect, next time you set up a connection, bring this ticket and we will resume the session. This works well: first, we save one round trip, and second, we skip the expensive cryptography.

But there are nuances. For security reasons, these tickets cannot be stored for long. The browser is also often evicted from memory and loses the keys, so the next time it has to go through both stages again.

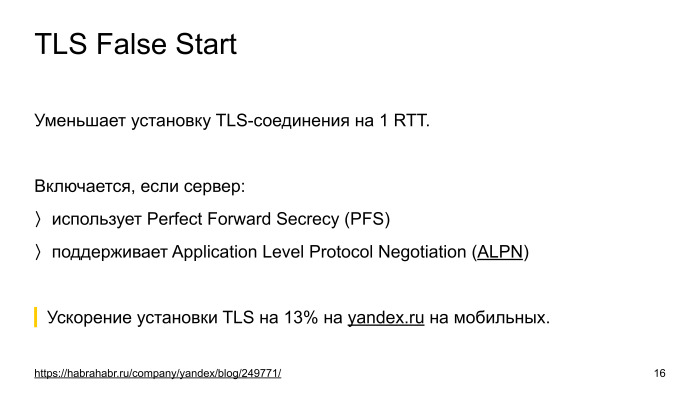

Therefore, a second optimization comes to the rescue, called TLS False Start.

It also saves one round trip. The idea is that, if we look at the scheme, once the client has received the first response from the server, it already has everything it needs to encrypt the user's request and to send the keys to the server. At that point the connection is in a half-open state.

In principle nothing prevents this, and the technique is called TLS False Start: as soon as the client receives the first data from the server, it encrypts the request and sends it along with the rest of the connection setup.
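The effect of either optimization on the round-trip budget can be modelled very simply. The sketch below only counts round trips as described in the talk (one each for DNS, TCP, and the request, two for a full TLS handshake, one when a session ticket or False Start applies); the class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionSetup:
    """Round trips needed before the first response data arrives."""
    dns: int = 1
    tcp: int = 1
    tls: int = 2      # full handshake, as described in the talk
    request: int = 1

    def round_trips(self) -> int:
        return self.dns + self.tcp + self.tls + self.request


FULL_HANDSHAKE = ConnectionSetup()
# With a session ticket or with False Start, one TLS round trip is saved.
SHORTENED_HANDSHAKE = ConnectionSetup(tls=1)


def seconds_saved(round_trip_seconds: float) -> float:
    """Time saved per new connection at a given round-trip latency."""
    saved = FULL_HANDSHAKE.round_trips() - SHORTENED_HANDSHAKE.round_trips()
    return saved * round_trip_seconds


if __name__ == "__main__":
    print(FULL_HANDSHAKE.round_trips(), SHORTENED_HANDSHAKE.round_trips())
    print(seconds_saved(2.0))
```

One saved round trip is barely visible at median latency but is worth up to two seconds for the slowest tenth of users, which is why the tail benefits most.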

But there is a nuance. This is the Internet, there are many TLS server implementations, and some of them break on this. The IE browser tried enabling the optimization by default and ran into sites that simply stopped working, so it had to maintain a list of broken sites, and so on.

The other browsers took a different route. They keep the optimization off by default but look at how the TLS server is configured. If it uses modern encryption algorithms and supports the ALPN extension, which they can tell from the server's first response, they enable the optimization and everything works.

You can read more about how to configure an HTTPS service in our security team's post on Habr; it covers everything.

Session tickets had been enabled for a long time; once we also turned on TLS False Start, secure connection setup on mobile got faster by 13% on average. It is a very effective optimization.

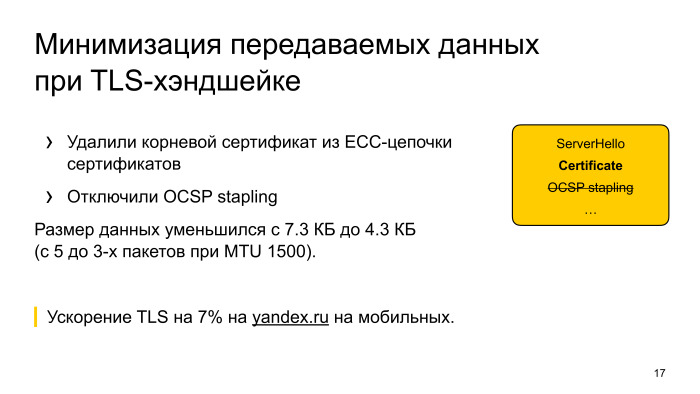

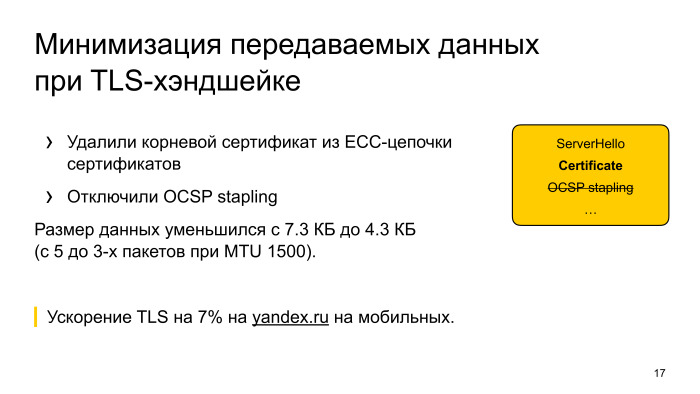

What's next? We rolled out all the changes and saw that secure connection setup still took a lot of time. We started thinking and noticed that, besides the two handshake round trips, which we had more or less dealt with, a lot of data was being transmitted. So we looked at how to cut the amount of data sent from the server to the client.

We found a certificate in our chain that was there for backward compatibility and was no longer needed, so we removed it. We also set our sights on OCSP stapling, a technology invented for checking certificate revocation, so that if a certificate has been revoked, the browser can refuse the secure connection or at least warn the user.

But time has moved on, and the industry is now at a point where, for ordinary certificates, OCSP is by default considered not to help security. So almost nobody uses it; instead, browsers maintain lists of revoked certificates that are periodically updated in the background.

We tried turning off OCSP stapling and trimmed the certificate chain, ran an experiment, and saw that our TLS handshake got faster by another 7%. We decided to keep this optimization in production. We did notice that connection setup degraded in Opera, which does look at the OCSP response. Without a stapled response from our service, Opera makes a blocking request to a separate service to check whether the certificate for yandex.ru has been revoked. If it has not, the response is cached in the browser for a while, and the next time there is no such extra trip.

Once this cache had filled up, we saw that even in Opera connections were being established faster. Since we lose nothing in security, we turned this piece off and cut the amount of data, and on slow networks, where bandwidth is the bottleneck, we got a speedup.

So, the connection is established; next we load the content. The server has received a request, in our case a search query, and search does not work instantly. There are many servers, many network round trips, ranking, and it all takes time. We are actively working on optimizing that too, but it still takes time. How can we get around this?

We have a search bar, which does not depend on the search results. We can start sending it to the user even before the search results are ready: we use chunked transfer encoding in HTTP and send the search bar first. First, the user sees that the page is loading instead of staring at a white screen. Second, it speeds things up: sending the search bar warms up the TCP connection, so when the search results are ready, they arrive faster. On top of that, the search bar can use JavaScript to start loading something and keep the connection busy.
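To make the idea concrete, here is a minimal sketch of HTTP/1.1 chunked transfer encoding in Python: the static top of the page goes out as its own chunk before the slow part is computed. The markup and the `fetch_results` callback are hypothetical stand-ins, not Yandex's code.

```python
from typing import Callable, Iterable, Iterator


def chunked_body(parts: Iterable[bytes]) -> Iterator[bytes]:
    """Encode body parts using HTTP/1.1 chunked transfer encoding."""
    for part in parts:
        if not part:
            continue  # a zero-length chunk would terminate the body early
        # Each chunk is: size in hex, CRLF, data, CRLF.
        yield f"{len(part):X}".encode("ascii") + b"\r\n" + part + b"\r\n"
    yield b"0\r\n\r\n"  # terminating chunk


def render_page(fetch_results: Callable[[], bytes]) -> Iterator[bytes]:
    """Yield the static top of the page first, then the slow search results."""
    yield b"<html><body><form><input name='text'></form>"  # hypothetical markup
    yield fetch_results()  # the slow part: called only after the first chunk is out
    yield b"</body></html>"


if __name__ == "__main__":
    for chunk in chunked_body(render_page(lambda: b"<ol><li>result</li></ol>")):
        print(chunk)
```

Because `render_page` is a generator, the first chunk can be written to the socket before `fetch_results` is even called, provided the server flushes each chunk as it is produced.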

Once the search results are ready, we send them. The obvious optimization here is to shrink the data as much as possible, so we use Brotli wherever possible and fall back to Zopfli. We also optimize images: if the browser supports WebP, we use it, and where SVG is possible, we use SVG.
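One way to picture the "Brotli where possible, WebP if the browser can" rule is as content negotiation on the request headers. The sketch below is an assumption about how such a choice could be made, not the talk's implementation: it ignores `q` weights for brevity, and it relies on the fact that Zopfli produces ordinary gzip-compatible output, so the fallback is served as `gzip`.

```python
def _offered(header_value: str) -> set:
    """Split a comma-separated Accept-style header into lowercase tokens."""
    # Simplification: parameters such as ";q=0.8" are dropped, not evaluated.
    return {item.split(";")[0].strip().lower() for item in header_value.split(",")}


def pick_content_encoding(accept_encoding: str) -> str:
    """Prefer Brotli, fall back to gzip (which Zopfli can produce)."""
    offered = _offered(accept_encoding)
    if "br" in offered:
        return "br"
    if "gzip" in offered:
        return "gzip"
    return "identity"


def pick_image_format(accept: str, fallback: str = "jpeg") -> str:
    """Serve WebP only to clients that advertise support for it."""
    return "webp" if "image/webp" in _offered(accept) else fallback


if __name__ == "__main__":
    print(pick_content_encoding("gzip, deflate, br"))
    print(pick_image_format("image/avif,image/webp,*/*;q=0.8"))
```

When the response varies by these headers, caches need to key on them too, for example via `Vary: Accept-Encoding, Accept`.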

Be sure not to block on external resources: inline the styles and put everything in the HTML, so that the browser can render the results as soon as the HTML arrives.
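As a toy illustration of inlining, the function below replaces stylesheet links with `<style>` blocks at build time. It is deliberately naive: the regular expression only matches one exact attribute order, and the `css_by_href` mapping is a hypothetical stand-in for reading the files from disk.

```python
import re
from typing import Dict

# Matches only the simple form <link rel="stylesheet" href="...">.
_STYLESHEET_LINK = re.compile(r'<link\s+rel="stylesheet"\s+href="([^"]+)"\s*/?>')


def inline_styles(html: str, css_by_href: Dict[str, str]) -> str:
    """Replace known stylesheet links with inline <style> blocks."""
    def replace(match: "re.Match[str]") -> str:
        css = css_by_href.get(match.group(1))
        # Leave links to unknown stylesheets untouched.
        return match.group(0) if css is None else f"<style>{css}</style>"

    return _STYLESHEET_LINK.sub(replace, html)


if __name__ == "__main__":
    page = '<head><link rel="stylesheet" href="/main.css"></head>'
    print(inline_styles(page, {"/main.css": "body{margin:0}"}))
```

Inlining trades cacheability for fewer round trips, so it pays off mainly for the small amount of CSS needed for the first render.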

A separate technique is warming up static resources. When a user visits, say, our main page and the connection is idle, we can download some of the resources used by the search results page. Then, when the user enters a query and goes to the search results, the cache is already hot and nothing needs to be downloaded.

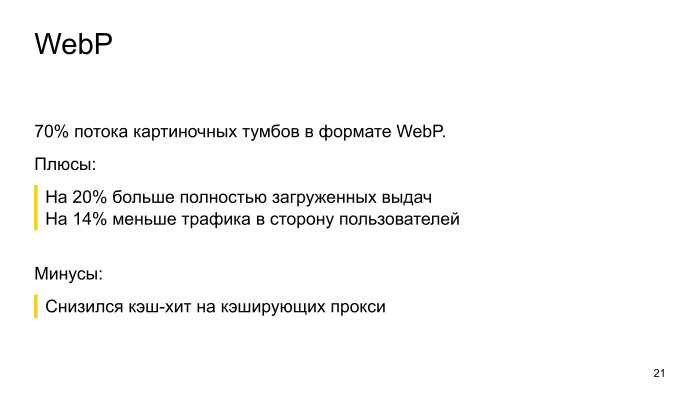

I want to mention WebP separately. It looks like a very simple optimization, just switch it on and it works, but it gives a very large gain, a big speedup.

When we enabled WebP on our image search service, the number of full page loads grew by 20%: users started waiting until the page had loaded completely. We also cut traffic by 14%.

On the downside, our cache hit rate went down. We added servers and solved that problem.

The main thing here is not to overdo it. When we squeezed the HTML and shrank everything we could, we ran into the following situation. The optimization worked, but when we ran an online experiment, we noticed that rendering had slowed down. We realized that for users on slow networks we had made things better and the page loaded faster, but where the network was not the bottleneck, we got a slowdown.

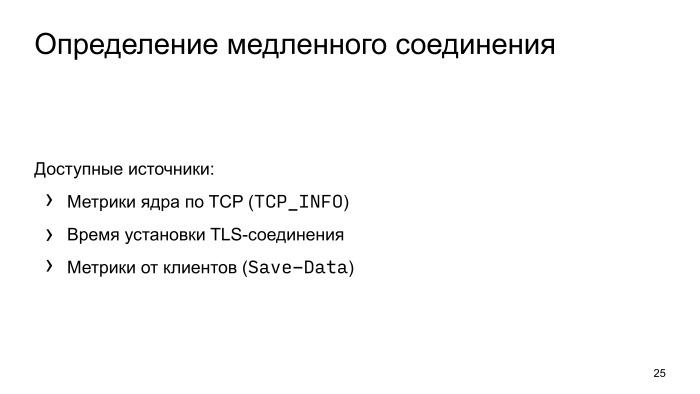

We thought about how to deal with this and came to the following conclusion: the output has to adapt to the connection speed. How? We decided to make a special light version of the results page for users on slow networks. First, that requires learning to determine the connection speed on the server.

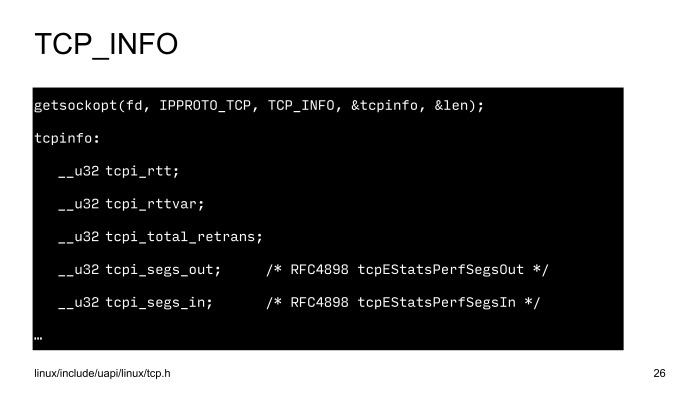

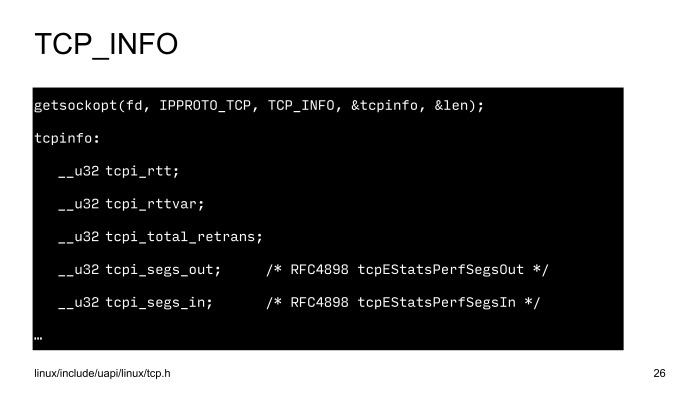

Second, it requires a super-layout that works on any network and any device. There are several ways to determine the connection speed on the server. We use Linux kernel data from TCP_INFO, we look at the TLS connection setup time, and if clients are smart enough to tell us themselves that they are on a bad network, we use that data too.

Conveniently, TCP_INFO data is available directly in nginx: one line in the config enables it, and the speed metrics are passed to the backend.

For SSL, we measure the time between sending a packet and receiving a packet back from the user. We treat that interval as the time of one round trip.

All these factors are used to determine the connection speed.
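A backend could combine these signals along the following lines. This is only a sketch under stated assumptions: the talk does not say how the signals are weighted, so the 500 ms threshold, the rule of taking the worst measurement, and all names are hypothetical. The TCP estimate might come from nginx, which exposes kernel TCP_INFO values through variables such as `$tcpinfo_rtt`.

```python
from typing import Optional

SLOW_ROUND_TRIP_MS = 500.0  # hypothetical threshold, not a figure from the talk


def is_slow_connection(tcp_rtt_ms: Optional[float],
                       tls_round_trip_ms: Optional[float],
                       client_says_slow: bool = False) -> bool:
    """Combine the three signals from the talk into a single decision."""
    if client_says_slow:
        return True  # trust a client that reports a bad network
    samples = [v for v in (tcp_rtt_ms, tls_round_trip_ms) if v is not None]
    if not samples:
        return False  # no evidence either way: assume a normal connection
    # Assumption: be pessimistic and judge by the worst measurement.
    return max(samples) >= SLOW_ROUND_TRIP_MS


def choose_layout(tcp_rtt_ms: Optional[float],
                  tls_round_trip_ms: Optional[float],
                  client_says_slow: bool = False) -> str:
    """Pick the light results page for slow connections, the full one otherwise."""
    slow = is_slow_connection(tcp_rtt_ms, tls_round_trip_ms, client_says_slow)
    return "light" if slow else "full"


if __name__ == "__main__":
    print(choose_layout(77.0, 90.0))
    print(choose_layout(2000.0, None))
```

In practice the threshold would have to be tuned by experiment, since the talk itself reports that serving the trimmed page to users on fast networks caused a slowdown.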

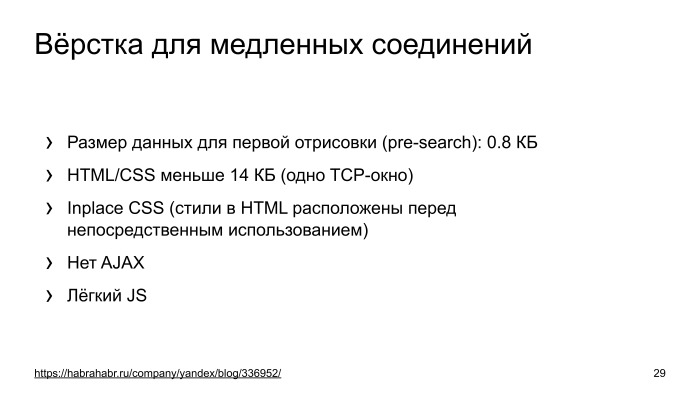

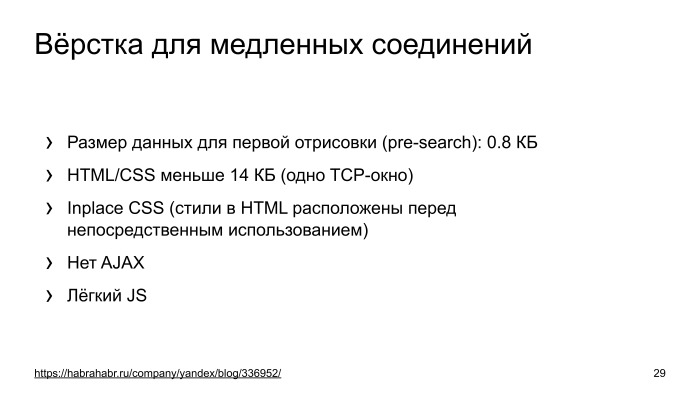

Next, the layout. We have a separate post on Habr about the layout from its lead developer. If you are into front-end development, give it a read, it is very interesting. In short, it is heavily trimmed in size but has no visible differences: an untrained eye cannot tell the full layout from the light one. Of course, some elements of the results page may be missing, and so on. We use the same techniques: inline CSS, reduced size, a very small search bar, no Ajax, and very light JS, so that even the slowest device can render it quickly.

After we launched the experiment on Yandex, we saw that the number of search hits, that is, of results pages served, grew by 2.2%. That is a very big result: it means that 2.2% more of our users on slow networks were able to solve their problems.

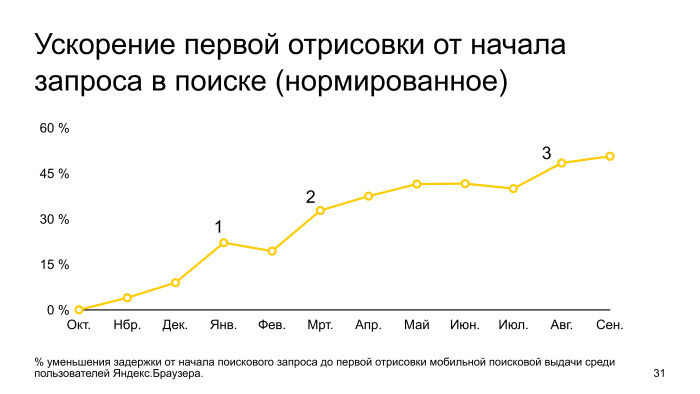

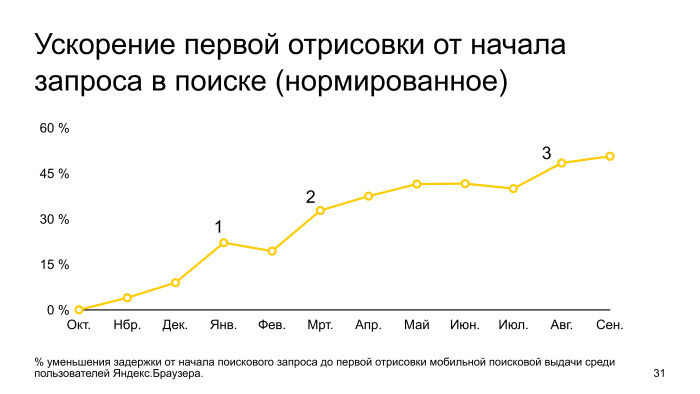

Let's look at the graph of results page rendering speed over the year, normalized to October. The graph is rising steadily, meaning optimizations are being rolled out all the time; many teams at Yandex are working on this. Three stages stand out: in the first, we introduced the light search results page; in the second, we improved the algorithm for detecting slow connections; in the third, we rolled out the TLS connection optimizations. As we can see, over the year we managed to make the first render almost twice as fast.

That's all I wanted to say. I hope these optimizations will be useful to you. Be sure to test them, because the same optimization behaves differently on different sites: you need to collect metrics and watch how it all works. Thank you.

- Hello everyone, my name is Ivan Khvatov, I work in the search infrastructure. Recently, I am working on accelerating the download of search results. I work with layout, backend teams and traffic delivery. Today I will tell you how we accelerated the mobile search, which techniques we used, successful and unsuccessful. They are not unique to us. Something you may be able to try yourself. We will tell about our failures, what we learned from them and how we came to adapt the layout depending on the connection speed.

If we look at the time of the first rendering of the output in the desktop and mobile cutouts in runet, we will see that the mobile ones lag 1.5-2 times depending on the connection speed.

')

It's no secret that speed directly affects the happiness of users. The user likes to use fast services, quickly solve their problems. For some questions, users expect from the mobile Internet that it will work, if not faster, then at least as a desktop. Therefore, we have recently been seriously engaged in the optimization of the mobile Internet.

To understand the reasons, let's take a look at the mobile web feature.

Mobile Internet is almost 100% wireless: cellular or Wi-Fi. Any connection has the following characteristics: delay, time of passing one packet to the server and back, ping command. And - channel width: how much data per unit time passes the connection.

These characteristics are very bad for mobile Internet, for Wi-Fi and cellular networks. Big delays. There are network technologies where the channel is very narrow. But even worse, these characteristics are unstable, depend on various conditions, on the loading of the tower, on the distance from the tower, on whether we stopped at the tunnel. And classical algorithms in operating systems used to be tuned up, tuned to the fact that these characteristics are stable, and with unstable characteristics, they dramatically degrade the connection speed.

The second distinguishing feature is iron. Phones, despite their rapid growth, still lag behind the desktops on the processor, memory and flash. Even if we take resources from the cache, it will be much slower than on the desktop.

How can these features affect page loading? What happens when the browser makes a request and tries to render the page? Some kind of network activity occurs, a connection is established. Connection is received on the server, the server processes it, some time is spent. Then the traffic is delivered. The next step - the browser parses the answer and renders the issue.

If we look at how these stages look in time relative to each other in terms of fast and slow connections in phones, then we note that in fast networks, the bottleneck in the page display is precisely the parsing of the page and its rendering.

Separately, I note that the installation of a secure connection also takes significant time, this is a red zone.

For 3G networks, most of the time is spent on the network part, to establish a connection. And it also highlights the secure connection setup. If we want to optimize mobiles so that the user sees fewer white pages, we need to pay attention to setting up a connection and separately pay attention to setting up a secure connection.

What happens with the connection setup? In the worst case, the browser resolves DNS, gets an IP address, makes another network input, establishes a TCP connection, then establishes a secure connection. With him, as a rule, a little more complicated. At least two requests should be made. We will tell about it in more detail, two network entries. And ask the request.

As a result, to just get the first data, you need to perform five network entries. Let's look at the real mobile Internet in Russia, which is equal to one network entry in time.

We see that in 50% of users in runet, in principle, everything is fine with latency: 77 ms, five network entries, the user almost does not notice this moment. But the second part of users latency is growing strongly. And by the 10th slowest one network entry equals almost 2 s. That is, 10% of Yandex users will expect a full connection to be installed in almost 10 seconds, which is a very long time.

Why such a tail, why does latency grow so much?

Two reasons. First, network technologies, if we look at 2G and 3G, then by the type of network technology, median latency, they don’t have a passport, not a technology, but real numbers that we remove, latency is very bad. With Wi-Fi and 4G, everything is more or less normal. But there are nuances.

Overload, unstable signals, physical interference. Here is a simple example: phone, remove latency from it to Yandex, run the ping command and see what it is equal to. We see that in the usual situation 0.5 with one network entry. We start at this time to download the channel, just download the page, latency grows almost 10 times, up to 6 seconds. It is clear that at this time, when the channel is loaded, we will start to make some blocking calls necessary for rendering, the user will never wait for them. Five network entries, 25 seconds, no one will wait so much.

What to do with it? Let's optimize.

There are several approaches. The simplest and most effective - in principle, do not establish a connection. Then an important point - we look at redirects, we also try not to do them. and further delve into the installation of a secure connection, what can be done there.

How can I make a connection? This is a seemingly simple moment. We look to keepalive on the server. But there are nuances. If the application is placed in a bad network, it often begins to break connection on timeout. Under normal conditions, we do not notice this, on slow networks it becomes a problem, the connection breaks due to a timeout, and the browser needs to re-create the connection. Therefore, we analyze the time-outs, which are at the application level, and configure them correctly.

The second is the preconnect. Make the connection setup before you need this connection. When the time comes and the user requests a page, we will already have a ready connection, and he will not have to wait long.

Preconnect is a standard directive, it can be specified directly in HTML, the browser understands it. Most.

We at Yandex analyzed how our requests go, we found a service that shows search tips. They realized that at the very beginning of work, when there are no ready-made connections, it does so through a cold connection. They slightly changed the architecture, made it so that it reused the connection from another service, and the number of impressions of this service grew by almost 5%.

Redirects. This is a great evil in terms of speed. First, the browser goes through all the stages of establishing a connection, the user is waiting, then the server says that it has come to the wrong place, go the other way. And the browser re-creates the connections again, goes through all these stages.

What can be done with this? We analyze logs, we look, where we do redirects, we change links on the websites in applications that always there were direct visits.

The second feature in our logs was a lot of redirects from HTTP to HTTPS. The user switches to HTTP, we redirect him to HTTPS, he waits again, and still establishes a secure connection.

To prevent this from happening, there is HSTS technology - you can say once in the server’s response that our site works only on HTTPS, and the next time we make a call on HTTP, the browser will automatically make internal redirects, immediately make a request for HTTPS.

TLS, setting up a secure connection. Let's consider such a scheme in terms of speed, not in terms of security. How is the installation of TLS? Two stages. At the first stage, the client and the server exchange the encryption settings, which algorithms they will use. On the second more detailed information, keys, how to encrypt data.

In addition to the fact that there are two network entries, the client and the server spend some more CPU time on the calculation. This is also bad and slow too.

What can be done here? There is a more or less standard optimization TLS Session Ticket, what is its essence? After the connection has completely passed all stages, the server can transfer the ticket to the client and say that the next time you set up the connection, come with this ticket, and we will restore the session. Everything works great, we save one network entry, and secondly, we do not do complex cryptography.

But there are nuances. These tickets can not be stored for long security reasons. The browser is often pushed out of memory, loses these keys, and the next time it has to go through it in two stages.

Therefore, a second optimization comes to the rescue, called TLS False Start.

( Link - Ed.)

It also reduces one network entry. All its essence is that if we look at the scheme, when we first received a response from the server, we have all the data to encrypt the request to the user and send all the keys to the server. Our connection occurs in a semi-open state.

In principle, nothing interferes, and this technique is called TLS False Start, when the client receives the first data from the server, it immediately encrypts it, and together with the connection setup it sends a request.

But there is a nuance. Since this is the Internet, there are a lot of implementations of TLS servers, some implementations break down from this. There was an attempt by the IE browser to enable such optimization by default, but they got the problem that some sites simply did not work. They maintained a list of broken sites, and so on.

The rest of the browsers went the other way. They have turned off this optimization by default, but look at other features, on how the TLS server is configured. If he uses modern encryption algorithms and supports the ALPN extension, which they understand from the first response from the server, then they include this optimization and everything is fine.

In more detail how to configure the HTTPS service, you can read in the post of our security service on Habré, there everything is there.

After we turned on TLS False Start optimization, the ticket has long been turned on, and we accelerated the installation of a secure connection by an average of 13% on mobile. She is very effective.

What's next? We rolled out all the changes, we looked, the installation time for a secure connection still takes a lot of time. We started to think, we saw that besides the fact that there are two head-heads, they seem to have been corrected, but a lot of data is being transmitted. We thought how this amount of data that is transmitted from the server to the client can be cut.

We found that we have a certificate in the chain for backward compatibility, which is not needed now, it was removed. And an eye was placed on OCSP stapling - this is the technology that was invented in order to check the certificate revocation, so that if the certificate was revoked, the browser established a secure connection and somehow warned.

But time goes on, now the industry is at such a stage that by default for ordinary certificates it is considered that OCSP does not help for security, therefore it is not used, almost everyone, but maintain a list of revoked certificates that are periodically updated in the background.

We tried to turn off the OSCP, clipped the certificate, started the experiment and saw that our TLS handshake is still accelerating by 7%. We decided to leave this optimization in production. We noticed that the connection setup in Opera, which looks at the OCSP response, has degraded, but after some time, when the cache is heated in the browser, the OSCP response is cached there, which Opera does not get OCSP from the response of the service, but goes to a separate service that blocks and checks to see if the certificate has been revoked for yandex.ru. If not withdrawn, the response is cached for some time, and the next time there is no such walking.

When this cache was typed, we even noticed in Opera that the connection started to be established faster. Since we do not lose a bit of security, we turned off this piece, cut the size of the data, and for slow networks, where the channel width is a bottleneck, we received acceleration.

Well, established the connection, then download the content. The server received a request, in our case it is a search request, the search does not work instantly. There are many servers, many network entries, rankings, it all takes time, we are actively working on it, we are also optimizing, but nonetheless. How can you beat this situation?

We have a search arrow, which does not depend on the search results. We can start this search arrow to send to the user even before we received the issue as a result of the search. Put Chunked-Encoding in HTTP, send the search arrow. First, the user sees that his page is loading, he is not just looking at the white screen. Secondly, we are also accelerating. When we pass this search arrow, we warm up the TCP connection, and the next time when we have the search results ready, they come faster. Plus, this search arrow can JavaScript'om something to load and take the connection.

Received search results, then upload them. Here is a banal optimization that we need to shrink the data as much as possible, so we use Brotli, Zopfli. Brotli - where possible. Fulbatch on Zopfli for images, optimize images, if the browser can WebP, then use it. Can SVG - use SVG.

Be sure not to block on external resources: inline the styles, put everything in the HTML, so that the results can be rendered as soon as the HTML arrives.

A separate feature is that we try to warm up static resources. When a user visits, for example, our main page and the connection is idle, we can download some resources that are used for the search results. Then, after the user enters a query and goes to the search results, the cache is already hot and those resources do not need to be downloaded.
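One common way to warm the cache is the standard `<link rel="prefetch">` hint, which asks the browser to fetch a resource at low priority while it is idle. The talk does not say which mechanism is used, so treat this as one possible approach. The helper name and the URLs in the usage note are made up for the example.

```python
from html import escape
from typing import Iterable


def prefetch_links(urls: Iterable[str]) -> str:
    """Build <link rel="prefetch"> tags for resources the next page will need."""
    seen = set()
    tags = []
    for url in urls:
        if url in seen:  # emit each resource only once
            continue
        seen.add(url)
        # Escape the URL so it is safe inside an HTML attribute.
        tags.append(f'<link rel="prefetch" href="{escape(url, quote=True)}">')
    return "\n".join(tags)
```

Calling `prefetch_links(["/static/serp.css", "/static/serp.js"])` on the main page would produce two tags to place in its `<head>`, and the search results page would then find both files in the browser cache.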

I want to mention WebP separately. The optimization seems very simple: turn it on and it works. But it gives a very big gain, a great speedup.

When we enabled WebP on the image search service, we noticed that the number of full page loads increased by 20%: users started waiting until the page was fully loaded. We cut traffic by 14%.

On the downside, our cache hit rate dropped. We added servers and solved this problem.

The main thing here is not to overdo it. When we had compressed and minified the HTML and shrunk everything, we ran into the following situation. We had managed to reduce the size, but when we ran an online experiment, we noticed a slowdown in rendering. We realized that users on slow networks got a speedup and the page loaded better for them, but where the network was not the bottleneck, we got a slowdown.

We thought about how to deal with this and came to the following conclusion: the results page has to adapt to the connection speed. How? We decided to make a special light version of the results page for users on slow networks. To do this, first, you need to learn how to determine the connection speed on the server.

Second, you need a super layout that will work on any network and any device. There are several ways to determine the connection speed on the server. We use Linux kernel data (TCP_INFO), we look at the setup time of the TLS connection, and if clients are smart enough to tell us themselves that they are on a bad network, we use that data too.

It's great that TCP_INFO data can be used directly in nginx: you enable it with a single line in the config, and the speed metrics are passed to the backend.

For TLS, we measure the time between sending a packet and receiving the reply packet from the user. This interval is taken as the time of one network round trip.

All these factors are used to determine the connection speed.
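To make this concrete, here is a minimal sketch of a backend combining the three signals. Everything specific in it is an assumption for illustration: the header names `X-Tcp-Rtt-Us` and `X-Tls-Handshake-Ms`, the thresholds, and the use of the `Save-Data` request header as the client's own signal. In nginx the kernel value is exposed as the `$tcpinfo_rtt` variable, so a line such as `proxy_set_header X-Tcp-Rtt-Us $tcpinfo_rtt;` could forward it. The value is assumed here to be in microseconds, as in the Linux `tcp_info` structure.

```python
from typing import Mapping, Optional

# Hypothetical thresholds; real values would have to come from experiments.
SLOW_RTT_MS = 300.0
SLOW_TLS_HANDSHAKE_MS = 1000.0


def _to_float(value: Optional[str]) -> Optional[float]:
    """Parse a header value, returning None if it is missing or malformed."""
    try:
        return float(value) if value is not None else None
    except ValueError:
        return None


def is_slow_connection(headers: Mapping[str, str]) -> bool:
    """Decide whether the request came over a slow connection.

    Header names are matched exactly as written here (hypothetical names).
    """
    # 1. The client told us itself (assumed signal: Save-Data: on).
    if headers.get("Save-Data", "").strip().lower() == "on":
        return True
    # 2. Kernel round-trip time from TCP_INFO, forwarded by the proxy.
    rtt_us = _to_float(headers.get("X-Tcp-Rtt-Us"))
    if rtt_us is not None and rtt_us / 1000.0 >= SLOW_RTT_MS:
        return True
    # 3. Time it took to set up the TLS connection.
    tls_ms = _to_float(headers.get("X-Tls-Handshake-Ms"))
    if tls_ms is not None and tls_ms >= SLOW_TLS_HANDSHAKE_MS:
        return True
    return False


def choose_layout(headers: Mapping[str, str]) -> str:
    """Pick the light layout for slow connections, the full one otherwise."""
    return "light" if is_slow_connection(headers) else "full"
```

With these assumed thresholds, a request carrying `X-Tcp-Rtt-Us: 450000` would get the light layout, while one with a 40 ms round trip and a 120 ms handshake would get the full one. Missing or malformed values are ignored, so the full layout is the default.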

Next, the layout. There is a separate post on Habr about the layout from its main developer. If you enjoy front-end work, read it, it is very interesting. In short, the layout is heavily trimmed in size but has no visible differences: an untrained eye cannot tell the full layout from the light one. Of course, some results or other elements may be missing. We use the same techniques: inline CSS, reduce the size, make the search arrow very small, drop Ajax, and use very light JS so that even the slowest device can render it quickly.

After we started the experiment on Yandex, we saw that the number of search results pages delivered increased by 2.2%. This is a very big result. It means that 2.2% more of our users on slow networks were able to solve their problems.

Let's look at the graph of results rendering speed over the year, normalized to October. The graph keeps rising, that is, optimizations are being rolled out all the time, and many teams at Yandex are working on this. But three stages stand out: in the first, we introduced the light search results (the initial launch); in the second, we improved the algorithm for detecting slow connections; in the third, we rolled out the optimizations for TLS connections. As we can see, over the year we managed to speed up the first rendering almost twofold.

That's all I wanted to say. I hope these optimizations will be useful to you. Be sure to test them, because different sites respond differently to the same optimizations: you need to collect metrics and look at how it all works. Thank you.

Source: https://habr.com/ru/post/358944/
