Computer vision and machine learning in PHP using the opencv library

Hello. This is my anniversary article on Habré. For almost 7 years I have written 10 articles (including this one), 8 of them are technical. The total number of views of all articles is about half a million.

I made the main contribution to two hubs: PHP and Server Administration. I like to work at the junction of these two areas, but the scope of my interests is much broader.

Like many developers, I often use the results of other people's work (articles on Habré, code on githubs, ...), so I am always happy to share my results with the community in response. Writing articles is not only a return of debt to the community, but also allows you to find like-minded people, get comments from professionals in a narrow field and further deepen your knowledge in the field of study.

Actually this article is about one of these moments. In it, I will describe what I did almost all my free time in the last six months. Except for those moments when I went swimming in the sea across the road , watching TV shows or playing games.

')

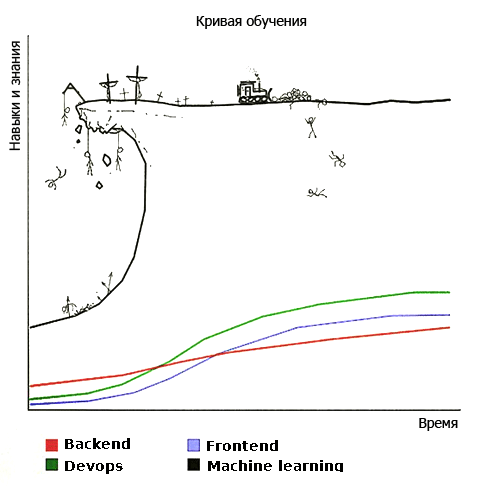

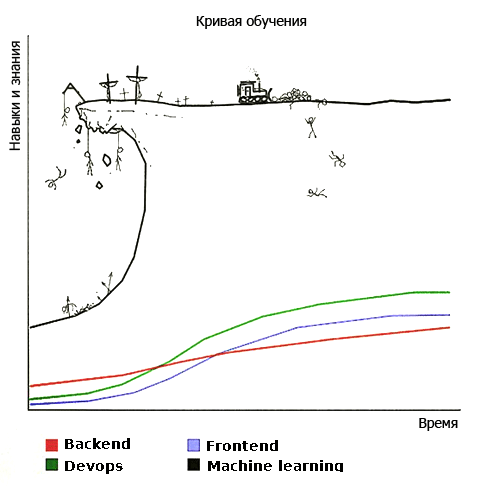

Now “Machine learning” is developing very strongly, many articles have already been written on it, including on Habré and almost every developer would like to take and start using it in his work tasks and home projects, but where to start and what to use is not always understandable. Most of the articles for beginners offer a lot of literature, for the reading of which life is not enough, English-language courses (and not all of us can learn the material in English as effectively as in Russian), “low-cost” Russian-language courses, etc.

New articles are regularly published, which describe new approaches to solving a particular problem. On github you can find the implementation of the approach described in the articles. The most commonly used programming languages are c / c ++, python 2/3, lua and matlab, and the frameworks are caffe, tensorflow, torch. Everyone writes - who is ready for anything. Large segmentation by programming languages and frameworks greatly complicates the search for what you need and the integration of this into the project. In addition, recently a lot of source code with comments in Chinese.

In order to somehow reduce all this chaos in opencv, we added the dnn module , which allows us to use models that have been trained in basic frameworks. For my part, I will show how this module can be used from php.

Jeremy Howard (the creator of the free practical course “machine learning for coders” ) believes that now there is a big threshold between learning machine learning and putting it into practice.

Howard says that one year of programming experience is enough to start learning machine learning. I completely agree with him and I hope that my article will help reduce the threshold for entering opencv for php-developers who have little knowledge of machine learning and are not yet sure whether they want to do it at all or not, and also try to describe all the points I spent hours and days so that it took you no more than a minute.

So, what did I do besides the logo?

(I hope that opencv will not sue me for plagiarism)

I considered the possibility of writing a php-opencv module myself using SWIG and spent a lot of time on it, but did not achieve anything. Everything was complicated by the fact that I did not know c / c ++ and did not write extensions for php 7. Unfortunately, most of the materials on the Internet on php extensions were written for php 5, so I had to collect information bit by bit, and solve the problems myself.

Then I found the php-opencv library in the githab open spaces, it is a module for php7 that makes calls to opencv methods. It took me several evenings to compile, install and run the examples. I started to try various features of this module, but I lacked some methods, I added them myself, created a pullrequest, and the author of the library accepted. Later I added even more features.

Perhaps the reader at this point will ask himself the question: why did the author need such problems at all, why was it just not to start using python and tensorflow?

In general, while it suits me to work with opencv on php.

Here is the image loading:

For comparison, on python it looks like this:

When reading an image in php (as well as in c ++), the information is stored in a Mat object (matrix). In php, its counterpart is a multidimensional array, but unlike a multidimensional array, this object allows various fast manipulations, for example, dividing all the elements into a number. In python, the numpy object is returned when the image is loaded.

Carefully, Legacy! It so happened that imread (in php, c ++ and pyton) loads an image not in RGB format, but in BGR. Therefore, in the examples with opencv, you can often see the procedure for converting BGR-> RGB and vice versa.

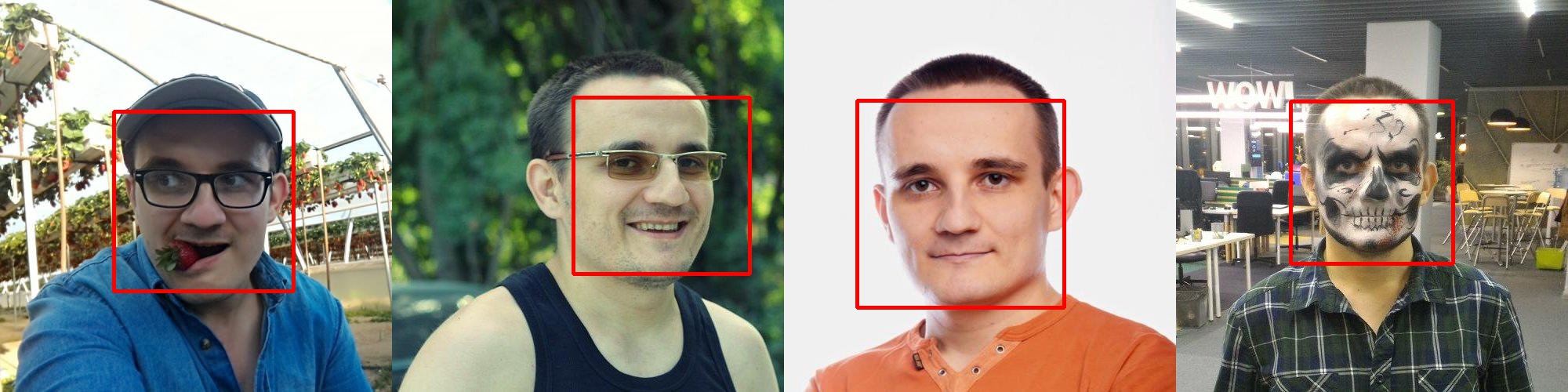

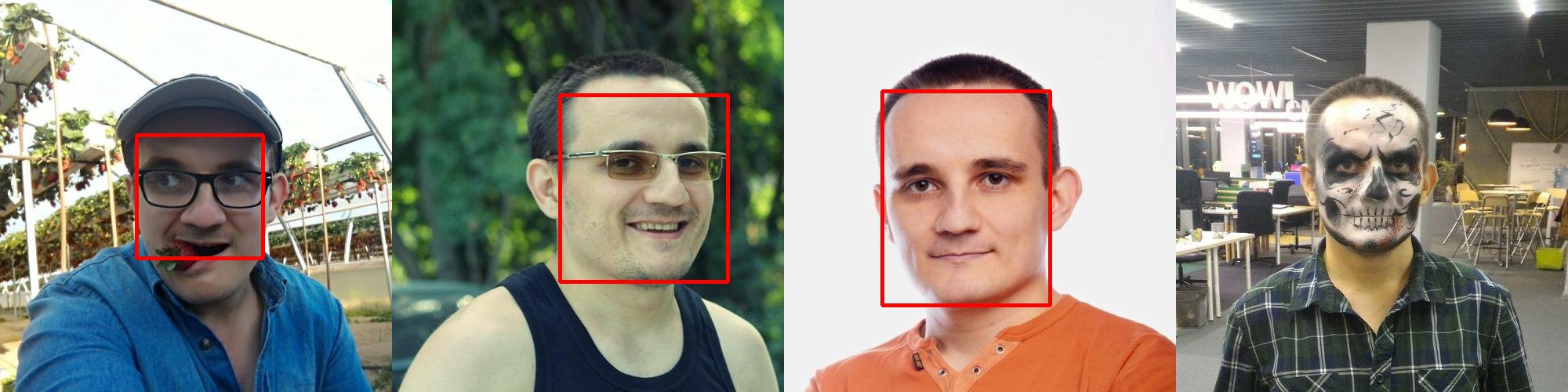

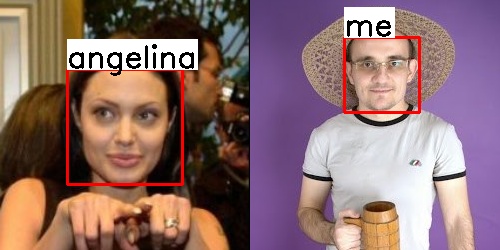

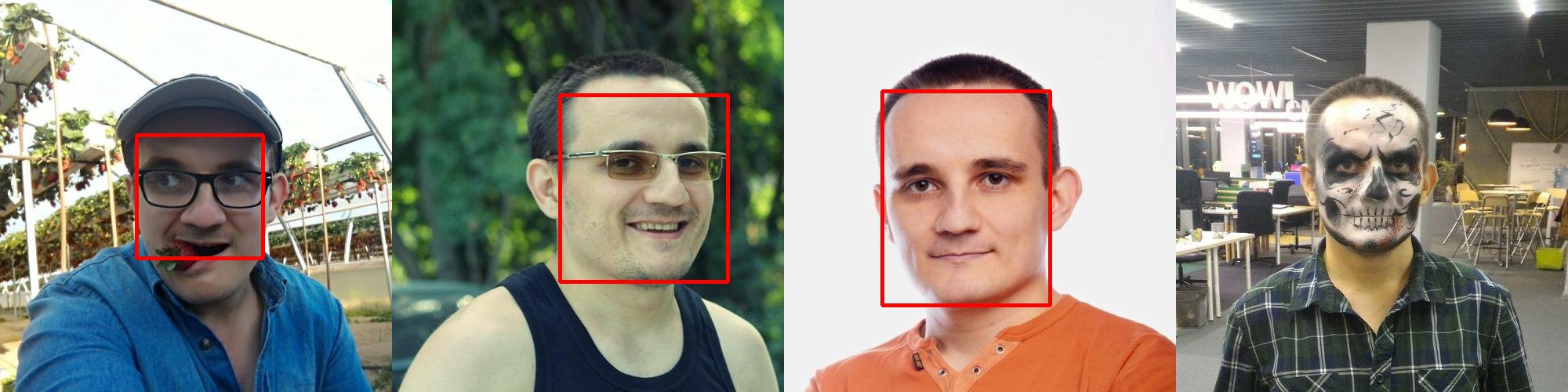

First I tried this feature. For it, opencv has a class CascadeClassifier , which can use a pre-trained model in xml format. Before finding a face, it is recommended to convert the image to black and white.

→ Full sample code

Result:

As you can see from the example, it is no problem to find a face even in the photo in a zombie makeup. Points also do not interfere with finding a person.

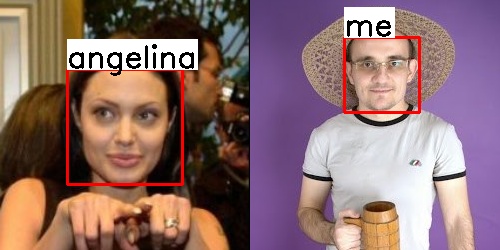

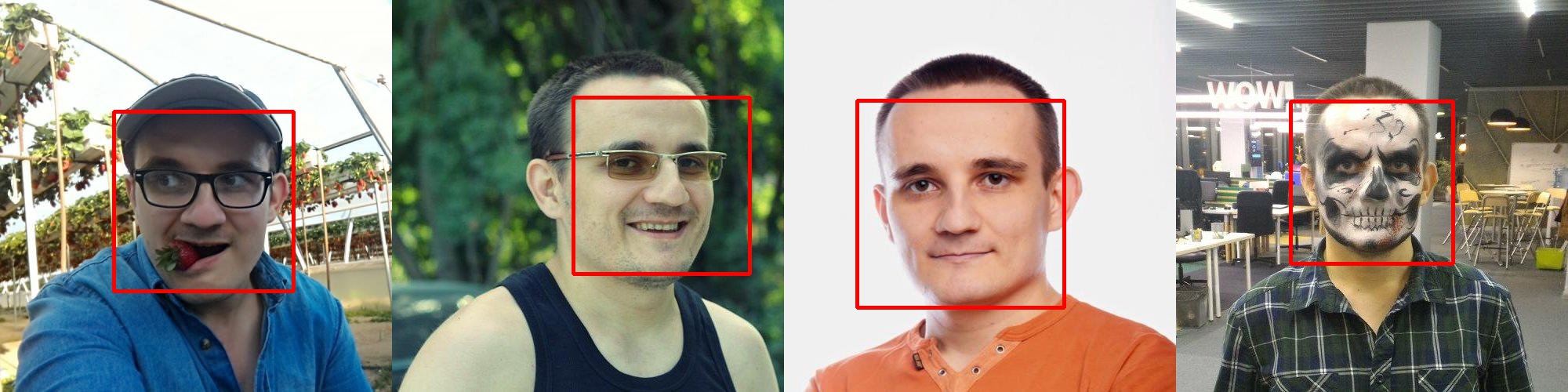

To do this, opencv has a class LBPHFaceRecognizer and methods train / predict.

If we want to find out who is in the photo, then we first need to train the model using the train method, it takes two parameters: an array of face images and an array of numeric labels for these images. Then you can call the predict method on the test image (face) and get a numeric label, which it corresponds to.

→ Full sample code

Person sets:

Result:

When I started working with LBPHFaceRecognizer, he did not have the ability to save / load / retrain the finished model. Actually, my first pullrequest added these methods: write / read / update.

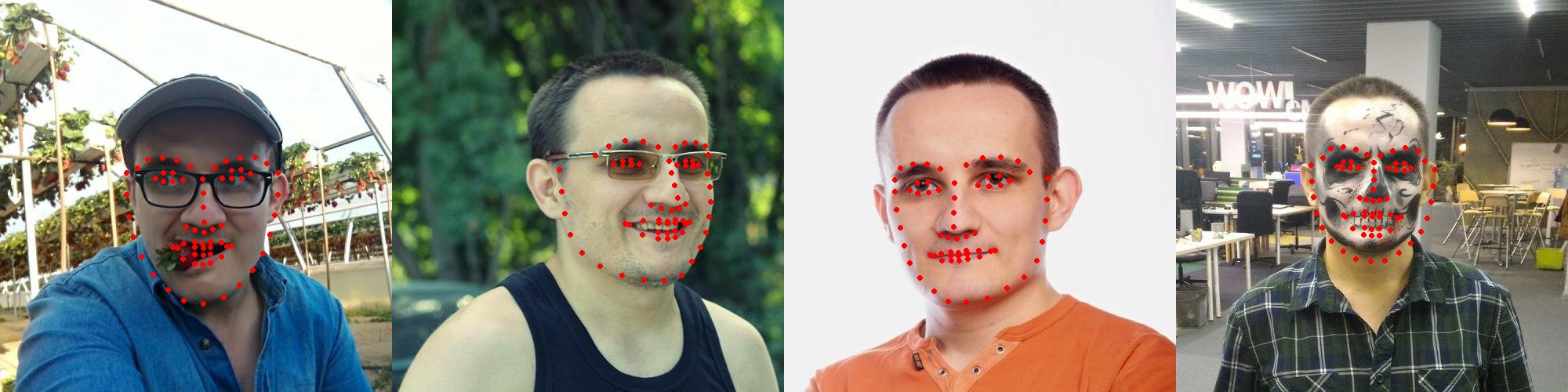

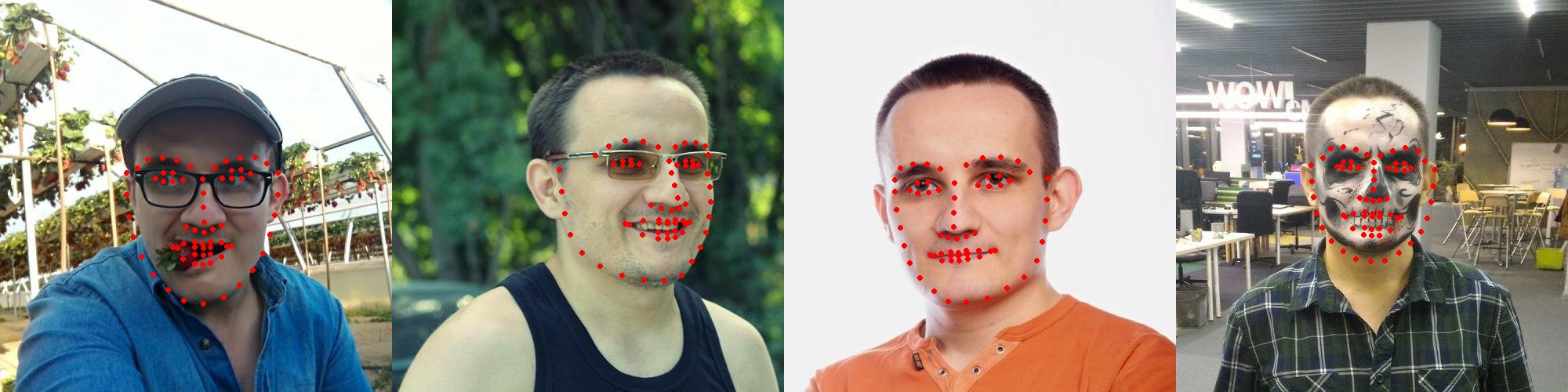

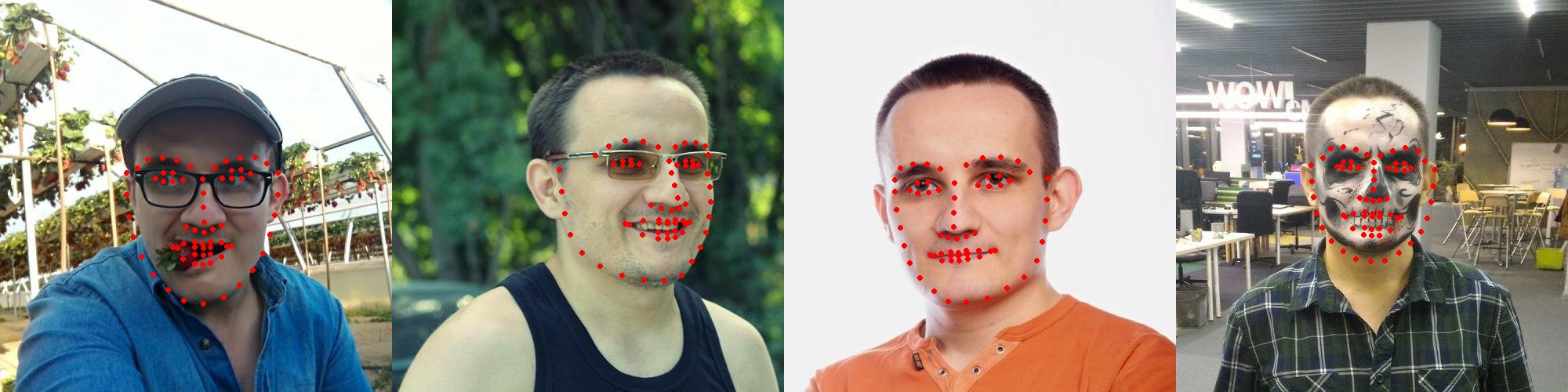

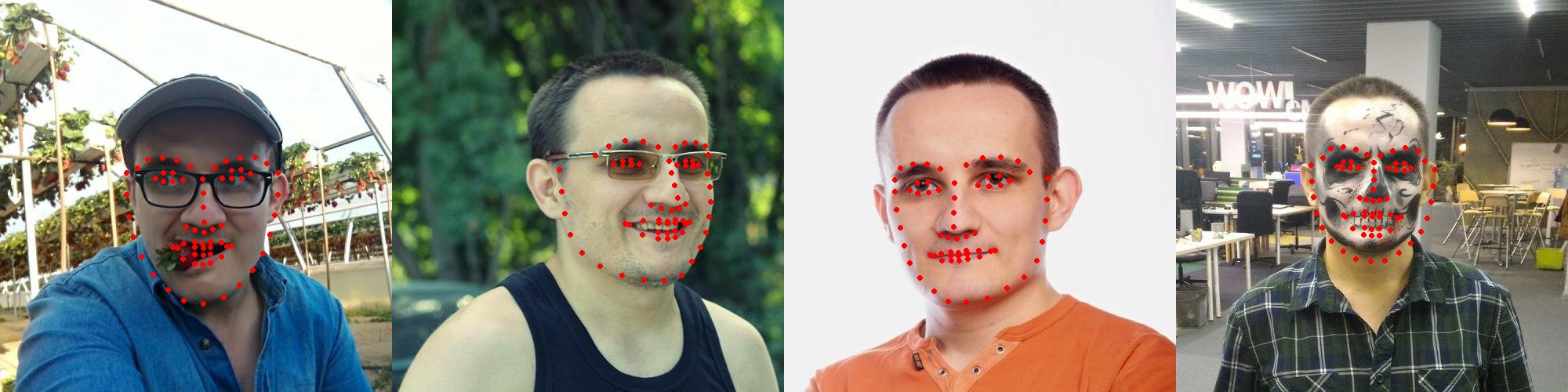

When I started to get acquainted with opencv, I often came across photos of people with dots, eyes, nose, lips, etc. marked with dots. I wanted to repeat this experiment myself, but in the opencv version for python this was not implemented. I spent the evening to add support for FacemarkLBF in php and send the second pullrequest. Everything works simply, load the pre-trained model, feed the array of faces, get an array of points for each face.

→ Full sample code

Result:

As you can see from the example, zombie makeup can make it harder to find pivot points on the face. Glasses can also interfere with finding a face. The flare is also affected. In this case, foreign objects in the mouth (strawberries, cigarettes, etc.) may not interfere.

After my first Pulrequest, I was inspired and began to look at what can be done with opencv and came across a Deep Learning article , now in OpenCV . Without hesitation, I decided to add to php-opencv the possibility of using pre-trained models, which are full on the Internet. It turned out to be not very difficult to load caffe models, though later it took me a lot of time to get to learn how to work with multidimensional matrices, half of which went to the c ++ study and studying opencv internals, and the second to python and working with caffe / torch models / tensorflow without using opencv.

So, opencv allows you to load pre-trained models in Caffe using the readNetFromCaffe function. It takes two parameters - the paths to the files .prototxt and .caffemodel. In the prototxt file there is a description of the model, and in the caffemodel - the weights calculated during the training of the model.

Here is an example of starting the prototxt file :

This piece of the file describes that a 4-dimensional 1x3x300x300 matrix is expected at the input. In the description of the models they usually write what is expected in such a format, but more often this means that an RGB image (3 channels) with a size of 300x300 is expected at the input.

By loading a 300x300 RGB image using the imread function, we get a 300x300x3 matrix.

To bring the 300x300x3 matrix to 1x3x300x300 in opencv, there is a blobFromImage function.

After that, we just have to submit blob to the network input using the setInput method and call the forward method, which will return the finished result.

In this case, the result is a 1x1x200x7 matrix, i.e. 200 arrays of 7 elements each. In the photo with four faces, the network found 200 candidates for us. Each of which looks like this [,, $ confidence, $ startX, $ startY, $ endX, $ endY]. The $ confidence element is responsible for “confidence”, i.e. the fact that the probability of prediction is successful, for example, 0.75. The following elements are responsible for the coordinates of the rectangle with the face. In this example, only 3 individuals were found with more than 50% confidence, and the remaining 197 candidates of individuals are less than 15% confident.

Model size 10 MB, full code example .

Result:

As you can see from the example, the neural network does not always produce good results when using it head-on. The fourth person was not found, while if the fourth photo is cut and sent to the network separately, the face will be found.

I have long heard about the waifu2x library, which allows you to eliminate noise and increase the size of icons / photos. The library itself is written in lua, and under the hood uses several models (for increasing icons, eliminating photo noise, etc.) trained in torch. The author of the library exported these models to caffe and helped me use them from opencv. As a result, an example was written in php to increase the resolution of the icons.

Model size 2 MB, full code example .

Original:

Result:

Enlarging pictures without using a neural network:

The MobileNet neural network, trained on the ImageNet data set, allows you to classify an image. In total, it can define 1000 classes , which in my opinion is not enough.

Model size 16 MB, full code example .

Original:

Result:

87% - Egyptian cat, 4% - tabby, tabby cat, 2% - tiger cat

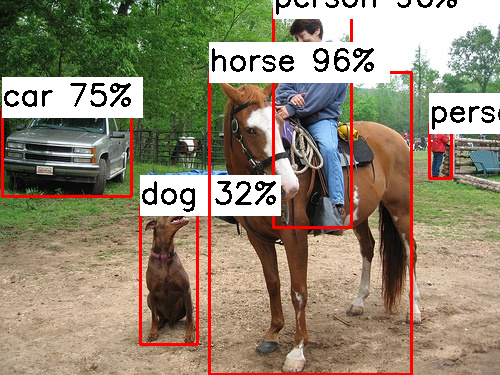

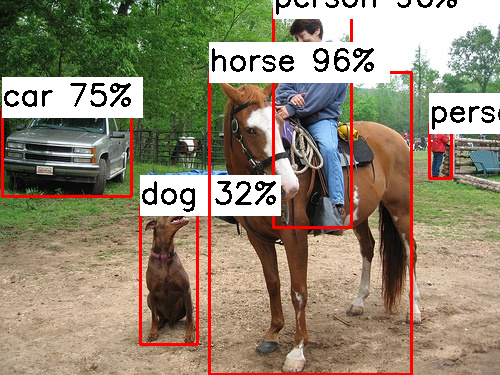

The MobileNet SSD (Single Shot MultiBox Detector) neural network, trained in Tensorflow on a COCO dataset, can not only classify an image, but also return regions, although it can only determine 182 classes .

Model size 19 MB, full code example .

Original:

Result:

In the repository with examples, I also added the file phpdoc.php . Thanks to it, Phpstorm highlights the syntax of functions, classes and their methods, as well as code autocompletion. This file does not need to be included in your code (otherwise there will be an error), it is enough to put it in your project. Personally, it simplifies my life. This file describes most of the features of opencv, but not all, so pullrequests are welcome.

The dnn module appeared in opencv only in version 3.4 (before that it was in opencv-contrib).

In ubuntu 18.04, the latest version of opencv is 3.2 . It takes about half an hour to build opencv from source, so I compiled a package for ubuntu 18.04 (works for 17.10, size 25MB), and also built php-opencv packages for php 7.2 (ubuntu 18.04) and php 7.1 (ubuntu 17.10) (size 100KB).

I registered ppa: php-opencv, but have not yet mastered the fill there and have not found anything better than just pouring the packages onto the githab . I also created an application to create an account in pecl, but after a few months I did not receive an answer.

So now the installation for ubuntu 18.04 looks like this:

Installation of this option takes about 1 minute. All installation options on ubuntu .

I also collected a 168 MB docker image .

Download:

Run:

I ask all interested parties to respond to polls after the article, well, subscribe to not miss my next articles, put likes to motivate me to write them and write questions in the commentary, suggest options for new experiments / articles.

Traditionally, I warn you that I do not advise and help through personal messages of Habr and the social network.

You can always ask questions by creating the Issue on the githab (in Russian).

Thanks:

dkurt for quick answers on github.

arrybn for the article "Deep Learning, now in OpenCV"

References:

→ php-opencv-examples - all examples from the article

→ php-opencv / php-opencv - my fork with dnn module support

→ hihozhou / php-opencv - the original repository, without the support of the dnn module (I created a pullrequest, but it has not yet been adopted).

→ Translation of the article into English - I heard that the British and Americans are very patient with those who make mistakes in English, but it seems to me that everything has a redistribution and I crossed this line :) in general, like, who does not mind. The same on reddit .

I made the main contribution to two hubs: PHP and Server Administration. I like to work at the junction of these two areas, but the scope of my interests is much broader.

Like many developers, I often use the results of other people's work (articles on Habré, code on githubs, ...), so I am always happy to share my results with the community in response. Writing articles is not only a return of debt to the community, but also allows you to find like-minded people, get comments from professionals in a narrow field and further deepen your knowledge in the field of study.

Actually this article is about one of these moments. In it, I will describe what I did almost all my free time in the last six months. Except for those moments when I went swimming in the sea across the road , watching TV shows or playing games.

')

Now “Machine learning” is developing very strongly, many articles have already been written on it, including on Habré and almost every developer would like to take and start using it in his work tasks and home projects, but where to start and what to use is not always understandable. Most of the articles for beginners offer a lot of literature, for the reading of which life is not enough, English-language courses (and not all of us can learn the material in English as effectively as in Russian), “low-cost” Russian-language courses, etc.

New articles are regularly published, which describe new approaches to solving a particular problem. On github you can find the implementation of the approach described in the articles. The most commonly used programming languages are c / c ++, python 2/3, lua and matlab, and the frameworks are caffe, tensorflow, torch. Everyone writes - who is ready for anything. Large segmentation by programming languages and frameworks greatly complicates the search for what you need and the integration of this into the project. In addition, recently a lot of source code with comments in Chinese.

In order to somehow reduce all this chaos in opencv, we added the dnn module , which allows us to use models that have been trained in basic frameworks. For my part, I will show how this module can be used from php.

Like_multiply_standards.jpg

Probably an attentive reader immediately thought about this picture and it will be partially right.

Jeremy Howard (the creator of the free practical course “machine learning for coders” ) believes that now there is a big threshold between learning machine learning and putting it into practice.

Howard says that one year of programming experience is enough to start learning machine learning. I completely agree with him and I hope that my article will help reduce the threshold for entering opencv for php-developers who have little knowledge of machine learning and are not yet sure whether they want to do it at all or not, and also try to describe all the points I spent hours and days so that it took you no more than a minute.

So, what did I do besides the logo?

(I hope that opencv will not sue me for plagiarism)

I considered the possibility of writing a php-opencv module myself using SWIG and spent a lot of time on it, but did not achieve anything. Everything was complicated by the fact that I did not know c / c ++ and did not write extensions for php 7. Unfortunately, most of the materials on the Internet on php extensions were written for php 5, so I had to collect information bit by bit, and solve the problems myself.

Then I found the php-opencv library in the githab open spaces, it is a module for php7 that makes calls to opencv methods. It took me several evenings to compile, install and run the examples. I started to try various features of this module, but I lacked some methods, I added them myself, created a pullrequest, and the author of the library accepted. Later I added even more features.

Perhaps the reader at this point will ask himself the question: why did the author need such problems at all, why was it just not to start using python and tensorflow?

Answer. Caution, tediousness and excuses!

The fact is that I am not a professional machine learning specialist, I cannot at this stage develop my own approach to solving a particular narrow problem, in which I will achieve results a couple percent better than other researchers, and then get more This is a patent case. For example, five Chinese guys with advanced degrees, who developed mtcnn and wrote the implementation on matlab and caffe, did this. Then the other three Chinese guys transferred this code to C ++ & caffe, Python & mxnet, Python & caffe. As you may have guessed, knowing only python and tensorflow will not go far. We will have to constantly deal with the code in different languages using different frameworks and comments in Chinese.

Another example, I wanted to use facemark from opencv, but unfortunately the authors did not add support for this module when working from python. At the same time, in order to add facemark bindings in php, it took me one evening.

I also tried to compile opencv to work with nodejs, according to several instructions, but I had various errors and failed to achieve the result.

For the most part, I was interested in doing this despite all the difficulties.

Another example, I wanted to use facemark from opencv, but unfortunately the authors did not add support for this module when working from python. At the same time, in order to add facemark bindings in php, it took me one evening.

I also tried to compile opencv to work with nodejs, according to several instructions, but I had various errors and failed to achieve the result.

For the most part, I was interested in doing this despite all the difficulties.

In general, while it suits me to work with opencv on php.

Here is the image loading:

$image = cv\imread("images/faces.jpg"); For comparison, on python it looks like this:

image = cv2.imread("images/faces.jpg") When reading an image in php (as well as in c ++), the information is stored in a Mat object (matrix). In php, its counterpart is a multidimensional array, but unlike a multidimensional array, this object allows various fast manipulations, for example, dividing all the elements into a number. In python, the numpy object is returned when the image is loaded.

Carefully, Legacy! It so happened that imread (in php, c ++ and pyton) loads an image not in RGB format, but in BGR. Therefore, in the examples with opencv, you can often see the procedure for converting BGR-> RGB and vice versa.

Search for faces in the photo

First I tried this feature. For it, opencv has a class CascadeClassifier , which can use a pre-trained model in xml format. Before finding a face, it is recommended to convert the image to black and white.

$src = imread("images/faces.jpg"); $gray = cvtColor($src, COLOR_BGR2GRAY); $faceClassifier = new CascadeClassifier(); $faceClassifier->load('models/lbpcascades/lbpcascade_frontalface.xml'); $faceClassifier->detectMultiScale($gray, $faces); → Full sample code

Result:

As you can see from the example, it is no problem to find a face even in the photo in a zombie makeup. Points also do not interfere with finding a person.

Recognition (recognition) of persons in the photo

To do this, opencv has a class LBPHFaceRecognizer and methods train / predict.

If we want to find out who is in the photo, then we first need to train the model using the train method, it takes two parameters: an array of face images and an array of numeric labels for these images. Then you can call the predict method on the test image (face) and get a numeric label, which it corresponds to.

$faceRecognizer = LBPHFaceRecognizer::create(); $faceRecognizer->train($myFaces, $myLabels = [1,1,1,1]); // 4 $faceRecognizer->update($angelinaFaces, $angelinaLabels = [2,2,2,2]); // 4 $label = $faceRecognizer->predict($faceImage, $confidence); // label (1 2) $confidence () → Full sample code

Person sets:

Result:

When I started working with LBPHFaceRecognizer, he did not have the ability to save / load / retrain the finished model. Actually, my first pullrequest added these methods: write / read / update.

Finding marks on faces

When I started to get acquainted with opencv, I often came across photos of people with dots, eyes, nose, lips, etc. marked with dots. I wanted to repeat this experiment myself, but in the opencv version for python this was not implemented. I spent the evening to add support for FacemarkLBF in php and send the second pullrequest. Everything works simply, load the pre-trained model, feed the array of faces, get an array of points for each face.

$facemark = FacemarkLBF::create(); $facemark->loadModel('models/opencv-facemark-lbf/lbfmodel.yaml'); $facemark->fit($src, $faces, $landmarks); → Full sample code

Result:

As you can see from the example, zombie makeup can make it harder to find pivot points on the face. Glasses can also interfere with finding a face. The flare is also affected. In this case, foreign objects in the mouth (strawberries, cigarettes, etc.) may not interfere.

After my first Pulrequest, I was inspired and began to look at what can be done with opencv and came across a Deep Learning article , now in OpenCV . Without hesitation, I decided to add to php-opencv the possibility of using pre-trained models, which are full on the Internet. It turned out to be not very difficult to load caffe models, though later it took me a lot of time to get to learn how to work with multidimensional matrices, half of which went to the c ++ study and studying opencv internals, and the second to python and working with caffe / torch models / tensorflow without using opencv.

Search for faces in the photo using the dnn module

So, opencv allows you to load pre-trained models in Caffe using the readNetFromCaffe function. It takes two parameters - the paths to the files .prototxt and .caffemodel. In the prototxt file there is a description of the model, and in the caffemodel - the weights calculated during the training of the model.

Here is an example of starting the prototxt file :

input: "data" input_shape { dim: 1 dim: 3 dim: 300 dim: 300 } This piece of the file describes that a 4-dimensional 1x3x300x300 matrix is expected at the input. In the description of the models they usually write what is expected in such a format, but more often this means that an RGB image (3 channels) with a size of 300x300 is expected at the input.

By loading a 300x300 RGB image using the imread function, we get a 300x300x3 matrix.

To bring the 300x300x3 matrix to 1x3x300x300 in opencv, there is a blobFromImage function.

After that, we just have to submit blob to the network input using the setInput method and call the forward method, which will return the finished result.

$src = imread("images/faces.jpg"); $net = \CV\DNN\readNetFromCaffe('models/ssd/res10_300x300_ssd_deploy.prototxt', 'models/ssd/res10_300x300_ssd_iter_140000.caffemodel'); $blob = \CV\DNN\blobFromImage($src, $scalefactor = 1.0, $size = new Size(300, 300), $mean = new Scalar(104, 177, 123), $swapRB = true, $crop = false); $net->setInput($blob, ""); $result = $net->forward(); In this case, the result is a 1x1x200x7 matrix, i.e. 200 arrays of 7 elements each. In the photo with four faces, the network found 200 candidates for us. Each of which looks like this [,, $ confidence, $ startX, $ startY, $ endX, $ endY]. The $ confidence element is responsible for “confidence”, i.e. the fact that the probability of prediction is successful, for example, 0.75. The following elements are responsible for the coordinates of the rectangle with the face. In this example, only 3 individuals were found with more than 50% confidence, and the remaining 197 candidates of individuals are less than 15% confident.

Model size 10 MB, full code example .

Result:

As you can see from the example, the neural network does not always produce good results when using it head-on. The fourth person was not found, while if the fourth photo is cut and sent to the network separately, the face will be found.

Image Enhancement with Neural Network

I have long heard about the waifu2x library, which allows you to eliminate noise and increase the size of icons / photos. The library itself is written in lua, and under the hood uses several models (for increasing icons, eliminating photo noise, etc.) trained in torch. The author of the library exported these models to caffe and helped me use them from opencv. As a result, an example was written in php to increase the resolution of the icons.

Model size 2 MB, full code example .

Original:

Result:

Enlarging pictures without using a neural network:

Image classification

The MobileNet neural network, trained on the ImageNet data set, allows you to classify an image. In total, it can define 1000 classes , which in my opinion is not enough.

Model size 16 MB, full code example .

Original:

Result:

87% - Egyptian cat, 4% - tabby, tabby cat, 2% - tiger cat

Tensorflow Object Detection API

The MobileNet SSD (Single Shot MultiBox Detector) neural network, trained in Tensorflow on a COCO dataset, can not only classify an image, but also return regions, although it can only determine 182 classes .

Model size 19 MB, full code example .

Original:

Result:

Syntax Highlighting and Code Autocompletion

In the repository with examples, I also added the file phpdoc.php . Thanks to it, Phpstorm highlights the syntax of functions, classes and their methods, as well as code autocompletion. This file does not need to be included in your code (otherwise there will be an error), it is enough to put it in your project. Personally, it simplifies my life. This file describes most of the features of opencv, but not all, so pullrequests are welcome.

Installation

The dnn module appeared in opencv only in version 3.4 (before that it was in opencv-contrib).

In ubuntu 18.04, the latest version of opencv is 3.2 . It takes about half an hour to build opencv from source, so I compiled a package for ubuntu 18.04 (works for 17.10, size 25MB), and also built php-opencv packages for php 7.2 (ubuntu 18.04) and php 7.1 (ubuntu 17.10) (size 100KB).

I registered ppa: php-opencv, but have not yet mastered the fill there and have not found anything better than just pouring the packages onto the githab . I also created an application to create an account in pecl, but after a few months I did not receive an answer.

So now the installation for ubuntu 18.04 looks like this:

apt update && apt install -y wget && \ wget https://raw.githubusercontent.com/php-opencv/php-opencv-packages/master/opencv_3.4_amd64.deb && dpkg -i opencv_3.4_amd64.deb && rm opencv_3.4_amd64.deb && \ wget https://raw.githubusercontent.com/php-opencv/php-opencv-packages/master/php-opencv_7.2-3.4_amd64.deb && dpkg -i php-opencv_7.2-3.4_amd64.deb && rm php-opencv_7.2-3.4_amd64.deb && \ echo "extension=opencv.so" > /etc/php/7.2/cli/conf.d/opencv.ini Installation of this option takes about 1 minute. All installation options on ubuntu .

I also collected a 168 MB docker image .

Using examples

Download:

git clone https://github.com/php-opencv/php-opencv-examples.git && cd php-opencv-examples Run:

php detect_face_by_dnn_ssd.php PS

I ask all interested parties to respond to polls after the article, well, subscribe to not miss my next articles, put likes to motivate me to write them and write questions in the commentary, suggest options for new experiments / articles.

Traditionally, I warn you that I do not advise and help through personal messages of Habr and the social network.

You can always ask questions by creating the Issue on the githab (in Russian).

Thanks:

dkurt for quick answers on github.

arrybn for the article "Deep Learning, now in OpenCV"

References:

→ php-opencv-examples - all examples from the article

→ php-opencv / php-opencv - my fork with dnn module support

→ hihozhou / php-opencv - the original repository, without the support of the dnn module (I created a pullrequest, but it has not yet been adopted).

→ Translation of the article into English - I heard that the British and Americans are very patient with those who make mistakes in English, but it seems to me that everything has a redistribution and I crossed this line :) in general, like, who does not mind. The same on reddit .

Source: https://habr.com/ru/post/358902/

All Articles