Kubernetes NodePort vs LoadBalancer vs Ingress? When should I use what?

I was recently asked what the difference is between NodePorts, LoadBalancers and Ingress. These are all different ways to get external traffic into a cluster. Let's see how they differ, and when to use each of them.

Note: everything here applies to Google Kubernetes Engine. If you are running on another cloud, on your own servers, on Minikube, or on something else, there will be differences. I don't go into deep technical detail; for that, refer to the official documentation.

ClusterIP

ClusterIP is the default Kubernetes service type. It gives you a service inside the cluster that other applications in the same cluster can reach. There is no external access.

The YAML for the ClusterIP service looks like this:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-internal-service
    spec:
      type: ClusterIP
      # Route traffic to pods labeled app=my-app
      selector:
        app: my-app
      ports:
      - name: http
        port: 80        # port exposed by the service
        targetPort: 80  # port the pods listen on
        protocol: TCP

Why am I talking about the ClusterIP service if it cannot be accessed from the internet? It turns out there is a way: the Kubernetes proxy!

Start the Kubernetes proxy:

    $ kubectl proxy --port=8080

Now you can navigate through the Kubernetes API to access this service using this scheme:

    http://localhost:8080/api/v1/namespaces/<NAMESPACE>/services/<SERVICE-NAME>:<PORT-NAME>/proxy/

For the service defined above, assuming it was created in the default namespace, the address is:

    http://localhost:8080/api/v1/namespaces/default/services/my-internal-service:http/proxy/

When to use?

There are several scenarios for using the Kubernetes proxy server to access services.

Debugging services or connecting to them directly from a laptop for other purposes.

Allowing internal traffic, displaying internal dashboards, etc.

Since this method requires kubectl to run as an authenticated user, it should not be used to provide access to a service on the Internet or for production services.

NodePort

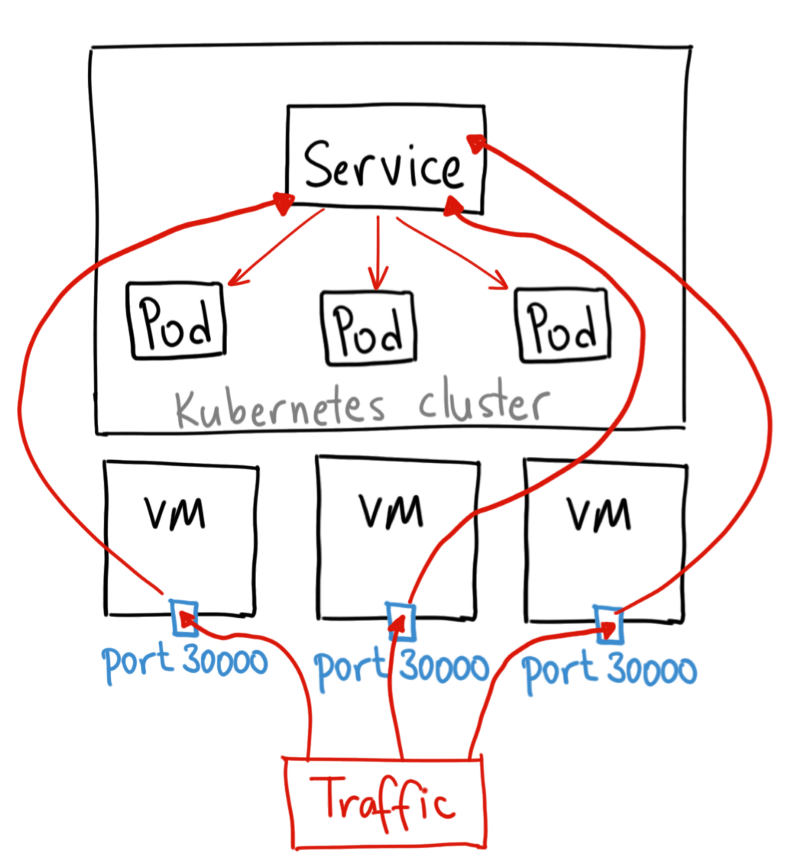

A NodePort service is the most primitive way to get external traffic directly to a service. As the name implies, NodePort opens a specific port on every node (the virtual machines), and any traffic sent to that port is forwarded to the service.

YAML for the NodePort service looks like this:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-nodeport-service
    spec:
      type: NodePort
      # Route traffic to pods labeled app=my-app
      selector:
        app: my-app
      ports:
      - name: http
        port: 80
        targetPort: 80
        nodePort: 30036  # port opened on every node
        protocol: TCP

A NodePort service differs from a regular ClusterIP service in two ways. First, the type is NodePort. Second, there is an additional port, called nodePort, that specifies which port to open on the nodes. If you don't specify it, Kubernetes picks a random port. In most cases you should let Kubernetes choose; as thockin says, choosing a port yourself comes with many caveats.
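To illustrate letting Kubernetes choose the port, here is a minimal sketch of the same service with the nodePort field left out. It reuses the names from the manifest above; nothing else is assumed.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nodeport-service
spec:
  type: NodePort
  selector:
    app: my-app
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
    # nodePort is omitted on purpose: Kubernetes assigns a free port
    # from the node port range (30000-32767 by default)
```

After the service is created, the assigned port appears in the PORT(S) column of `kubectl get service my-nodeport-service`.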

When to use?

The method has many disadvantages:

You can have only one service per port.

Only ports 30000–32767 are available by default.

If the IP address of a node / virtual machine changes, you have to deal with that yourself.

For these reasons, I do not recommend using this method in production to expose a service directly. But if the service does not have to be always available, or if you are very cost sensitive, this method will work for you. A good example is a demo app or something temporary.

LoadBalancer

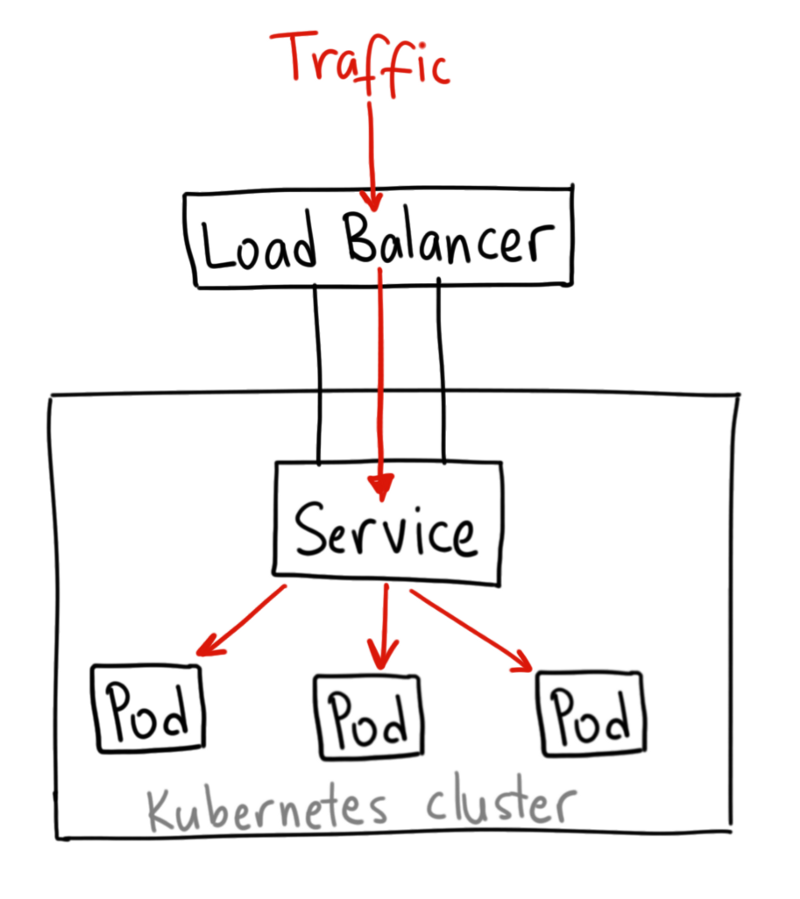

A LoadBalancer service is the standard way to expose a service to the internet. On GKE, it spins up a Network Load Balancer that gives you a single IP address, and that address forwards all traffic to your service.
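For comparison with the ClusterIP and NodePort manifests, a LoadBalancer service could be declared roughly like this. This is a sketch, not taken from the original article: the name my-loadbalancer-service is hypothetical, and the selector and ports simply mirror the earlier examples.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-loadbalancer-service  # hypothetical name, chosen for illustration
spec:
  type: LoadBalancer             # asks the cloud provider for an external load balancer
  selector:
    app: my-app                  # same pod label as in the earlier examples
  ports:
  - name: http
    port: 80                     # port exposed on the load balancer IP
    targetPort: 80               # port the pods listen on
    protocol: TCP
```

Once the cloud provider has provisioned the load balancer, its address shows up in the EXTERNAL-IP column of `kubectl get service`.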

When to use?

If you want to expose a service directly, this is the default method. All traffic on the port you specify is forwarded to the service. There is no filtering, no routing, and so on. This means you can send almost any kind of traffic to it: HTTP, TCP, UDP, WebSockets, gRPC, and the like.

But there is one big drawback. Each service you expose with a LoadBalancer gets its own IP address, and you pay for a load balancer per exposed service, which can get expensive.

Ingress

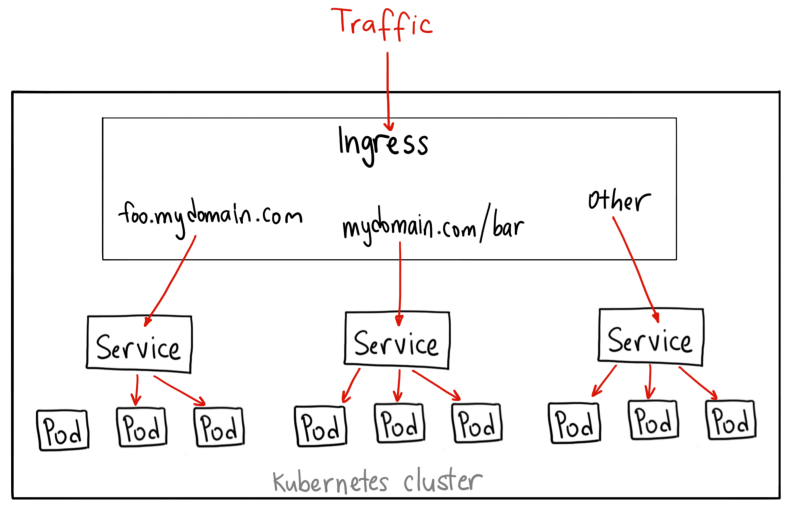

Unlike all the examples above, Ingress is not a type of service. Instead, it sits in front of multiple services and acts as a “smart router” or entry point into the cluster.

You can do many different things with an Ingress, and there are many types of Ingress controllers with different capabilities.

The default GKE Ingress controller spins up an HTTP(S) Load Balancer. This lets you route to backend services based on both paths and subdomains. For example, everything on foo.yourdomain.com can be sent to the foo service, and everything under the yourdomain.com/bar/ path to the bar service.

The YAML for the Ingress object on GKE with the L7 HTTP Load Balancer looks like this:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: my-ingress
    spec:
      backend:
        serviceName: other
        servicePort: 8080
      rules:
      - host: foo.mydomain.com
        http:
          paths:
          - backend:
              serviceName: foo
              servicePort: 8080
      - host: mydomain.com
        http:
          paths:
          - path: /bar/*
            backend:
              serviceName: bar
              servicePort: 8080

When to use?

Ingress is probably the most powerful way to expose your services, but it can also be the most complicated. There are many Ingress controllers: the Google Cloud Load Balancer, Nginx, Contour, Istio, and others. There are also plugins for Ingress controllers, such as cert-manager, which can automatically provision SSL certificates for your services.

Ingress is most useful when you want to expose multiple services under one IP address and all of them use the same L7 protocol (usually HTTP). With the native GCP integration you pay for only one load balancer. And since Ingress is “smart”, you get a lot of features out of the box, for example SSL, auth, and routing.
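The Ingress manifest in this section uses the extensions/v1beta1 API, which was removed in Kubernetes 1.22. On newer clusters the same routing could be sketched with networking.k8s.io/v1 as shown here. Two parts are my assumptions rather than anything from the original: the foo rule gets an explicit `/` prefix path to mean "match everything on this host", and `/bar/*` is kept with `pathType: ImplementationSpecific` on the assumption that the GKE controller interprets the wildcard.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
spec:
  # Fallback for requests that match no rule (was spec.backend)
  defaultBackend:
    service:
      name: other
      port:
        number: 8080
  rules:
  - host: foo.mydomain.com
    http:
      paths:
      - path: /                 # assumption: match every path on this host
        pathType: Prefix
        backend:
          service:
            name: foo
            port:
              number: 8080
  - host: mydomain.com
    http:
      paths:
      - path: /bar/*            # assumption: wildcard handled by the GKE controller
        pathType: ImplementationSpecific
        backend:
          service:
            name: bar
            port:
              number: 8080
```

Other controllers may treat wildcard paths differently, so check the controller's documentation before relying on `/bar/*`.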

Thanks to Ahmet Alp Balkan for the diagrams.

The NodePort diagram is not the most technically accurate, but it illustrates well how a NodePort works.

Original: Kubernetes NodePort vs LoadBalancer vs Ingress? When should I use what?


Source: https://habr.com/ru/post/358824/
