Correction of typos, side view

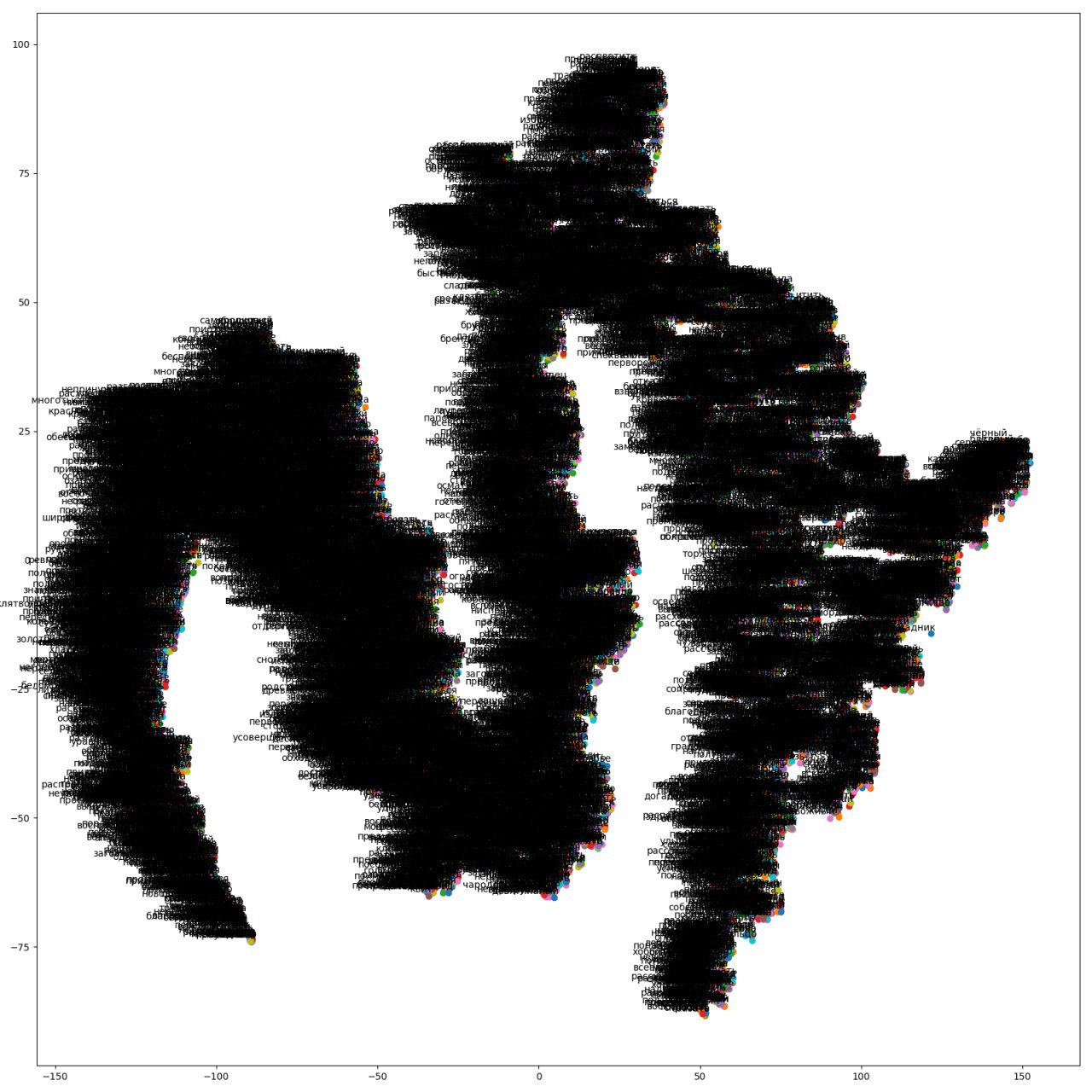

Visualization of the Word2Vec model obtained by the student. She studied at the "Lord of the Rings." Obviously something in the black dialect.

Formulation of the problem

Given : Dictionary (many words).

Required : For an input word with a typo (which may not be in the dictionary), find the nearest words from the dictionary based on a predefined metric. These words are found and are options for correcting the input word.

Theoretical educational program

For our task, we need Word Embedding, or a vector representation of words (the Russian-speaking community still has doubts about the translation of the term), and again there is a wonderful article in Habré, which tells about it well. In order not to repeat, but also not to chase the reader on the links - a brief excursion into the topic.

')

Word embedding is a vector representation of words, that is, one word is associated with a vector of fixed dimension, for example, for the conditional word “home” -

[0.1, 0.3, ..., 0.7] . Important note: usually the dimension of the vector is much smaller than the dimension of the dictionary, otherwise it degenerates into one-hot encoding .The number of vector elements depends on many conditions: the chosen method, the requirements of the problem, the weather in Krasnoyarsk and much more. In current SotA implementations, this is 100-500 values (usually 300).

Vectors are obtained in the process of learning, for this, some text or texts are fed to the algorithm (from works of art to the wikipedia dump), and a vector is calculated for each word iteratively.

There are various methods for obtaining these vectors, the most popular now are Word2Vec , GloVe , WordRank and FastText .

In short, the idea is based on: words whose contexts are similar - most likely have similar meanings. This implies how the typos are corrected in the first example given. That is, we have two sentences: “to find tours with preferences” and “to find tours with preferences”, the contexts are similar, therefore, the words “adventures” and “adventures” are similar in meaning (this is a rough approximation, but the meaning is ).

Such an approach to correcting typos, besides the obvious merits, has one important drawback - all variants of errors that we can correct should be in the text in which we learn. That is, we cannot get a vector for a word that we have never met before.

All (known to the author) modern methods for obtaining word vectors, except FastText, operate on words as indivisible entities (the word is replaced by an integer index, and then the index is processed). In FastText (if even more precisely, in its supplement ) an interesting sentence was added: let's consider these vectors not for whole words, but for n-grams of characters. For example, the word "table" (with the added characters of the beginning and end of a word, like "<table>") is converted to the following list: 3-grams: <St, hundred, tol, ol>; 4 grams: <one hundred, table, tol>. The authors propose to use n-grams from 3 to 6 inclusive, we will not argue with them. Then the resultant vector of the word is equal to the sum of the vectors of the n-grams composing it:

Where

- the set of all n-gram words,

- the vector of the corresponding n-gram,

- word vector.

What important changes does this approach hold for us?

First, the authors introduced this in order to work better in languages with a rich morphology (which is Russian). And indeed, morphological changes now have less effect on the distance between words; here is a tablet for different languages from the same article as proofs:

| Tongue | Dataset | Sg | CBOW | sisg- | sisg |

|---|---|---|---|---|---|

| Ar | WS353 | 51 | 52 | 54 | 55 |

| De | Gur350 | 61 | 62 | 64 | 70 |

| De | Gur65 | 78 | 78 | 81 | 81 |

| De | ZG222 | 35 | 38 | 41 | 44 |

| En | Rw | 43 | 43 | 46 | 47 |

| En | WS353 | 72 | 73 | 71 | 71 |

| Es | WS353 | 57 | 58 | 58 | 59 |

| Fr | RG65 | 70 | 69 | 75 | 75 |

| Ro | WS353 | 48 | 52 | 51 | 54 |

| Ru | HJ | 59 | 60 | 60 | 66 |

Correlation between expert evaluation and method evaluation. SG and CBOW - skip-gram and continious bag of words, respectively, are Word2Vec variants, sisg- when unknown words are replaced with a zero vector, and sisg - when unknown words are replaced by the sum of their n-grams.

Secondly, it is a little step back, in Word2Vec we departed from the literal representation of the word, trying to bring together words like "king" and "king", "mother" and "son", now we return to the "letter affinity", which for The semantic task may not be very good , but for our version (I remind you - correcting typos), this is what you need.

This is the theoretical part, let's move on to practice.

Practical training ground

We introduce some preconditions:

- For the tests, we take a relatively small text, namely, some artistic work, for example, “The Quiet Don” by Sholokhov. Why is that? It will be easier to repeat to the interested reader and, being aware of the context of the work, we will be able to explain the behavior of our method. In general, vector representations of words are trained on large bodies of language, such as Wikipedia dumps.

- Normalize the words before learning, that is, we bring them into normal form (for example, for nouns this is the only number and nominative case). This is a strong simplification in order not to tinker with the endings and increase the frequency of occurrence of words for more adequate vectors (for this purpose, it is primarily taught on large bodies to get as many uses as possible for each word).

Implementation

The test code is quite simple (thanks, gensim ), the whole script is here , directly learning the model in one line:

model = gensim.models.FastText(sentences, size=300, window=3, min_count=2, sg=1, iter=35) Small explanations:

sentences is a list of lists, each element is a sentence, each element of a sentence is a word;

size - the size of the output vectors;

window - window size, the words within the window we consider the context for the word in the center;

min_count — consider only words that occur at least 2 times;

sg - use skip-gram option, not CBOW;

iter is the number of iterations.

In addition, there are two parameters that are left by default, their value was discussed above, but you can play with them: min_n and max_n - lower and upper thresholds, which n-grams to take (default is 3 to 6 characters)

Measure of similarity

As a metric, we take the measure of similarity between the Cosine similarity vectors, which has already become classical in this problem, which takes values from 0 to 1, where 0 - the vectors are completely different, 1 - the vectors are the same:

Where and - components of the corresponding vectors.

Experiments

So, we figured out what we have, and what we want, just in case again:

- The hypothesis is that based on the vector representation of words, we can correct typos.

- We have a trained FastText model in just one work, the words in it are normalized, we can also get a vector for unknown words.

- The method of comparing words, or rather their vectors, is defined in the previous paragraph.

Now let's see what we can do, for the tests we take the following pairs - a word with a typo and the word in parentheses:

man (person), chair (student), student (student), chilovennost (humanity), participate (participate), tactics (tactics), in general (generally), simpotichny (cute), create (make), watch (watch), algaritm (algorithm), lay down (put).

Summary table with the results:

| A typo word | Conceived word | No. in the list of the nearest | Metric value |

|---|---|---|---|

| man | person | one | 0.88227 |

| stool | student | ten | 0.67878 |

| student's | student | one | 0.85078 |

| chilovennost | humanity | one | 0.75012 |

| take part | participate | 6 | 0.87767 |

| tact | tactics | 2 | 0.73543 |

| in general | at all | (one) | 0.96243 |

| simpotichny | pretty | (one) | 0.92399 |

| create one | to make | 2 | 0.91553 |

| smtret | watch | one | 0.80055 |

| algaritm | algorithm | (one) | 0.86162 |

| lay down | put | ten | 0.81719 |

Remarks:

- If the number is indicated in brackets, it means that the word is not in the dictionary, but it could be in this place (based on the metric).

- It’s actually not so bad with a couple of lay-put, since the words above are “put”, “put aside”, “lay out”, etc. (see spoiler).

- Sometimes in the top of similar words there are words that are very different from queries (chair - driver), presumably this is due to a kind of collision of vectors - when approximately identical vectors are obtained for different n-grams.

man 0.87035

chelba 0.80893

human 0.77607

check 0.74867

century 0.71127

shuttle 0.68631

human 0.63725

humanity 0.63615

granddaughters 0.59655

bee 0.59173

stool:

chair 0.73342

chair 0.70797

tarpaulin 0.67196

knocker 0.64903

tool 0.64340

foundation 0.61881

driver 0.60767

bridge 0.60279

sheepskin 0.60249

turbo 0.59757

student 0.58953

student's:

student 0.85685

youthful 0.75904

weighty 0.72052

chemical 0.71119

adolescent 0.70076

hysterical 0.69888

physical 0.69580

boyish 0.68713

phosphoric 0.68312

full 0.68136

chileiness:

humanity 0.75012

candor 0.74727

worth of 0.72961

proximity 0.72873

boundedness 0.72581

the value of 0.72350

cowardice 0.72186

ambiguity 0.72128

pregnancy 0.72121

significance 0.72100

participate:

health 0.93609

rampage 0.89396

persist 0.89216

to live in distress 0.88620

gloat 0.87975

participate 0.87767

rampage 0.87446

get drunk 0.86810

feel 0.86627

sympathize with 0.86622

tactic:

tact 0.87537

tactic 0.73542

stool 0.66532

label 0.65750

scab 0.65702

brush 0.64602

Annie 0.64291

grid 0.62549

taka 0.62321

convolution 0.61241

in general:

Post 0.57405

armament 0.51535

insignificant 0.50341

armed with 0.49465

unarmed 0.48958

communication 0.48076

dormitory 0.48069

journey 0.47493

low value 0.46655

report 0.46373

simpotichny:

personal 0.86102

street 0.84662

energetic 0.83907

cynical 0.82305

excellent 0.81775

typical 0.80783

factory 0.80701

egg 0.80283

secondary 0.79368

0.7946 howitzers

to create:

to make 0.93598

make 0.91553

do 0.90678

make 0.87672

to finish 0.87297

trim 0.85775

close up 0.84235

wish for 0.82316

do 0.78098

do 0.77232

see:

see 0.80055

see 0.78115

look at 0.77926

third 0.73819

view 0.716420

view 0.71075

weathered 0.68950

peer 0.68513

motley 0.65353

look 0.65069

algaritm:

rhythm 0.85291

demi-season 0.69376

leggings 0.66552

cynicism 0.66278

stupid 0.65656

Shorin 0.65496

baritone 0.64623

frasenbruder 0.64395

harness 0.63321

trellis 0.63161

lay down:

attach 0.89386

put 0.89129

impose 0.87222

lay out 0.87199

set aside 0.84127

put down 0.83720

lay 0.83572

add 0.83549

apply 0.82764

put 0.81718

Conclusion

Using a vector representation can undoubtedly help in the task of correcting typos / errors, but using it alone is quite dangerous, as sometimes (though rarely) strong errors occur.

In fact, this is another metric comparing two lines for similarity, but already a level higher than, for example, the same Damerau-Levenshteyn distance . Using FastText as a supplement to other methods may well improve the quality of typo correction.

Source: https://habr.com/ru/post/358664/

All Articles