Custdev from Support

Hello again!

We continue to expand the topics taught by us. Now we have developed and developed the course “Product Owner” . The author of the course, Ekaterina Marchuk, invites you to get acquainted with her author’s article and invites you to an open lesson.

How do we interpret feedback?

')

On customer development or development of users recently all speak to everyone. And everyone who is familiar with the concept, realizes how important it is, and speaking directly, it is vital for the successful launch of the product.

Customer develoment helps to understand the value of the product, to reveal the hidden motives of consumers, their real problems and needs. And most importantly, custdev allows us to test hypotheses. Without testing hypotheses, it is difficult to lead a project in the right direction, because, as practice shows, bare figures, alas, are not representative.

But, as usual, on a purely theoretical understanding, everything ends. We do not have enough time for anything, including - and for such important tasks.

Almost everyone has a bad habit that one wants to get rid of. However, thinking about a bad habit and dealing with it are completely different things. So here: we seem to be engaged in the development of users - we collect a huge amount of feedback through call centers, social networks, thematic platforms, technical service centers and ... we are not doing anything useful. Instead of controlled collection and analysis of user feedback, we get a chaotic stream of unstructured information and do not receive user development.

When we talk about collecting feedback, it is important to understand that each channel of contact with users has its own audience and its own specifics. Our main task is not just to collect feedback, but to be able to correctly interpret the information received.

In pursuit of quantity

or why it is not necessary to run MVP without checks

Let's talk about the life cycle of user feedback, that is, reviews, applications and downloads. Why do you think the feedback handling mechanism often fails?

A simple example. Let's face it: we all love KPI. And if you look at the Support Service, one of the key components of KPI will be the number of closed applications.

At first glance, everything is logical. The more applications are processed, the higher the loyalty will be. The higher the loyalty, the longer the user retention period. So, not so: the CSI index (Customer Satisfaction Index, customer satisfaction index) does not grow in proportion to the number of requests closed by the Support Service. Why?

It's pretty simple. In the quantitative approach, the received applications are not validated properly, that is, no customer development is applied. This is tantamount to trying to realize an idea, a function or a whole product without testing key hypotheses: why are we doing all this? What need, task or pain are we trying to satisfy? What benefits do we want to bring? How this attempt will end, I think, is obvious to everyone.

That is why it is incredibly important to build a transparent system for processing user requests and requests. What we talk about today.

How did we get to life like that?

or about regular blockages in support after product launch

Take the standard script. The owner of the product, Timofey, always relies on his experience and sense of beauty. It turned out that when creating the MVP terms were burning, the stakeholders lyutovali, and Timofey decided that there was no time for deep consumer research, one can do without them. Everyone knows: an experienced team is able to create a good and popular product on their own. Real needs research is not needed! Especially when there is no time.

No time! Therefore, we forgot about the A / B test before launch. A soft launch did not hold, because there is no time. You remember, yes?

The result is natural: the flow of angry appeals after the release. What does Timofey do? Of course, it starts to urgently fix the problems - all at once.

Metrics, of course, we did not have. In a hurry forgot to write and screw. And Timofey decides to make amends for the last serious miss by adding new functionality. And finally, we really came out in release! Hooray? Grateful users bring us money?

Not at all! Instead of thanks, users bring even more applications to the Support Service. There are more and more applications, even more, and now this avalanche falls on our backlog ... No wonder, the support service works well, not like our Timofey. He now rake descended avalanche and save the product. Do not be like Timothy!

In our scenario, the Support Service is the most effective. Judge for yourself: a huge number of applications were processed. And what will happen next? There will be new applications, and then another - and the cycle will be repeated. The more cycles passed, the more difficult it is to control the situation. Baclog becomes unmanageable, it is impossible to update it, as well as put down priorities for everything that goes there.

And when the number of requests and applications for the new functionality finally goes off scale, it comes to understand that it is impossible to live this way. And how to live?

How to start life from scratch?

or Return to the sources - we introduce metrics!

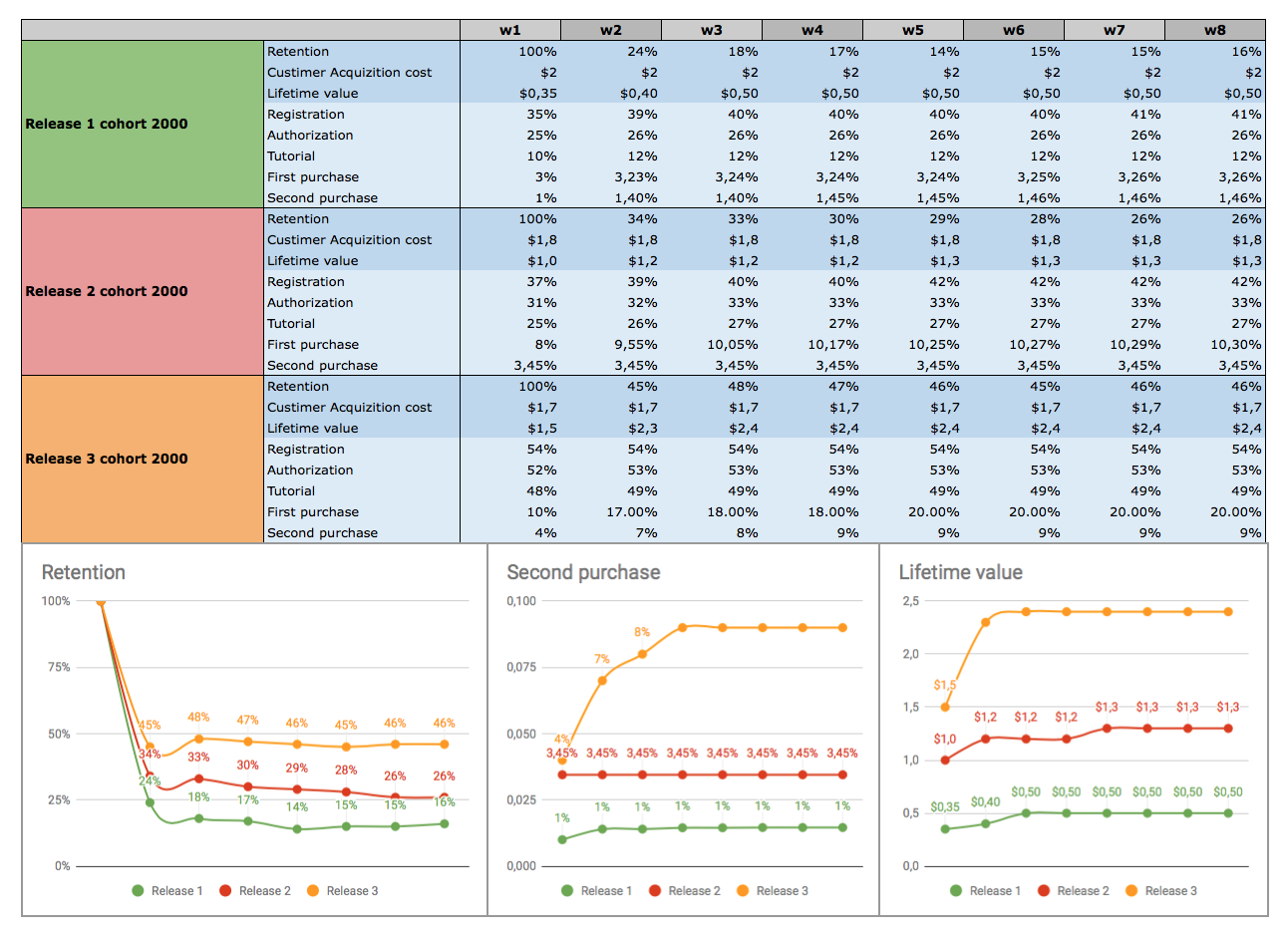

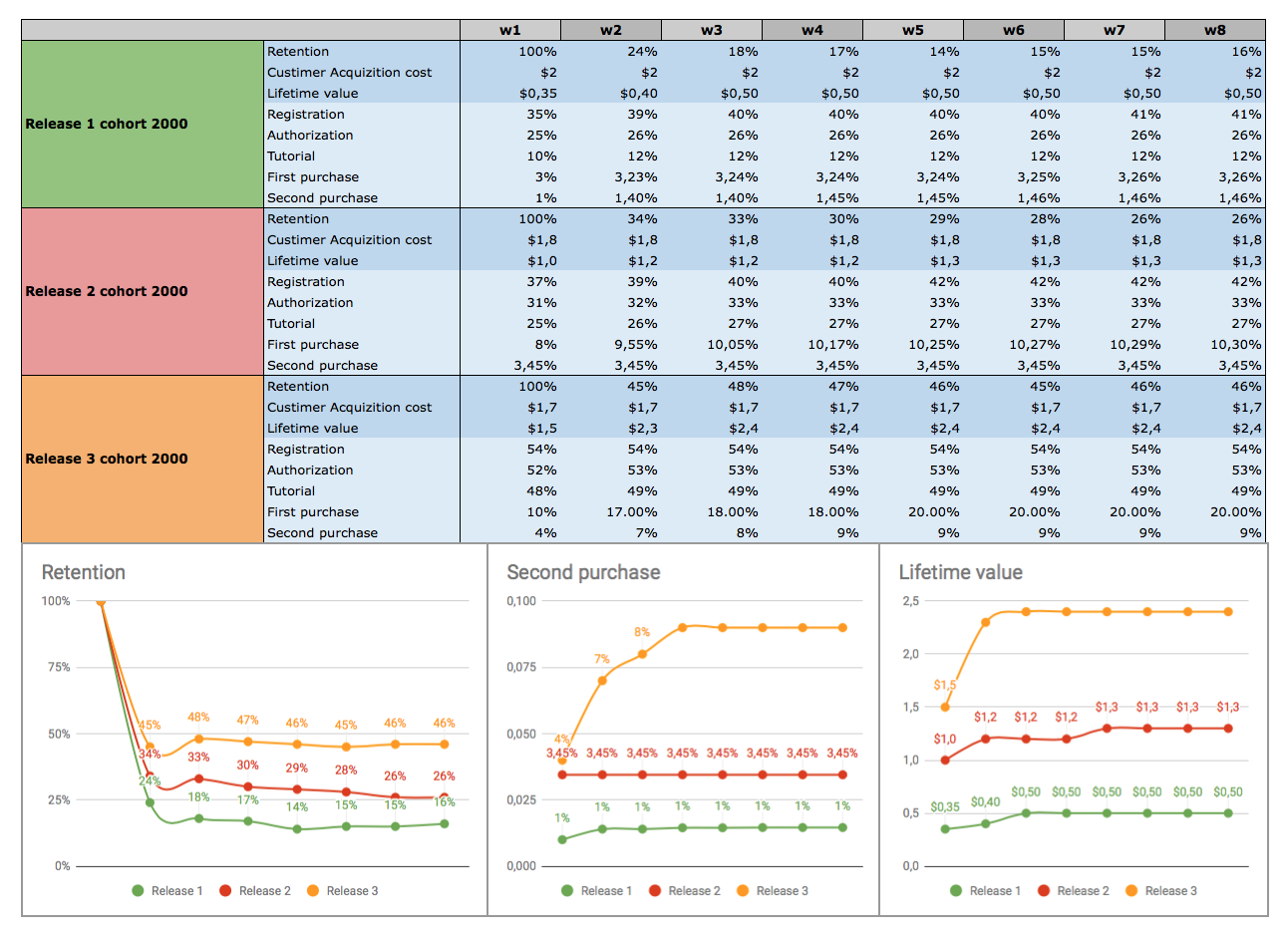

You need to start by creating simple metrics. Be sure to release the first MVP!

When you compile the first MVP, you build a value proposition model for user profiles. You yourself have to pre-assemble and form the profiles.

If for some improbable reason nothing has been done, go to the very beginning and act in order: create profiles, test hypotheses, build our proposal and only then the business model! Otherwise, all your efforts will go only to heat the environment and you will not get any result.

In profiles you describe needs, benefits, tasks. Each of them has its own priority, which is reflected in the MVP. We have included in the MVP all the most valuable, and the rest is postponed until later. When we have a good metrics system, you can check how important a function is, by itself or relative to another function.

It may happen that the function is important, but it is not used due to poor implementation. And we make mistakes and can not understand the needs of users. Then some functions will not be used, because they are not needed. All this we will definitely see in the metrics that we have. After all, we have them, right?

Good. What to do if you overslept all the polymers and the product saw the light without proper preparatory work? Do not put a cross on the product! Take up the creation of metrics and communication with users. Smoothly go to the next cycle: skimming the metrics -> processing feedback -> implementing features according to assigned priorities -> release. And may the Force be with you!

Fine, you say. And ask this question: why not just conduct A / B tests before release? To look at their metrics, check everything, correct errors and go on release?

Very good question. And you are certainly right ... if we are talking about a product that has already entered the market. Then you clearly understand the target audience, and cohort A / B tests will probably be one of the best assessment tools.

But if you create an innovative product and you do not have users, it remains only to guess who they are and what they need. It happens that nothing. Then you will have bad things, but this is a completely different story ... We will not be better off about this.

So again. The goal of each of your metrics is not just to show the numbers, but to describe what is good and what is bad, why we have come up with the metric and how we will use it. If one metric is related to another, we need to see what their connection is, how the metrics affect each other and how we can apply them together.

Just do not think that with an increase in the number of metrics, the overall picture will definitely become clearer and it will be easier for you to correct mistakes. Adopt a few rules to help structure your metrics:

The metrics should have a clear structure and order. Avoid metrics that do not have specific goals and those that you cannot adequately interpret. Work with such metrics threatens with wrong conclusions.

When we have dealt with the metrics and our MVP is already ready to see the light, click on the “Release!” Button and go nervously to smoke on the sidelines. Not for long.

We get the first reviews

or the sixth feat of Hercules

To simplify the life of yourself and the team, and at the same time prevent panic on the ship in the period immediately after release, when you are covered with an avalanche of reviews, requests and requests from users, you need to think in advance about the rules of aggregation and accumulation of incoming data. Why do you need it? In order to prevent mirroring of all received feedback in backlog under loud calls urgently fix all the problems and immediately roll out the updated solution.

If you have read this far, most likely you have come across the wrong prioritization of bugs. By its nature and consequences, the incoming avalanche of non-prioritized requests is about the same.

Wait, you say, because this is the task of the support service - to bring you everything on a saucer in the best possible way.

Formally, everything is so. But if you look deeper, it’s the product owner who will work with the information and clean up the consequences. Therefore, it is in your interest to take care of everything personally and prepare in advance a reliable springboard for quick and effective problem solving. Believe me, it will be easier for you.

Formulate in advance simple and understandable criteria for how similar treatment will be grouped. Do not forget to add the counter of these hits to your BTS (Bug tracking system). Ideally, if you can automatically receive numbers from CRM from already aggregated calls.

Set thresholds for the counters and assign appropriate actions to take upon reaching each threshold. For example: while the number of requests for one problem is less than 5 - we do not record it in backlog, except for cases when the problem blocks our main scenario. After 5 hits, we are backing up and tracking. We achieve the value of 20 hits - we begin a detailed study, communicate with users, try to understand the reason. Reached 50 - we transfer to the category of critical, prioritize and take the next sprint to work out. Your numbers may be different, and the rules will depend on the specifics of the product, the number of customers and other factors. But you understood the principle.

An important point: everything described above does not mean that we immediately do what the user wants. First you need to understand what is his real pain.

So, the key steps to prioritize:

The mechanism of the system should be understood not only to you as the Product Owner, but also to the Support Service, and all members of the product team.

After completing all key steps, be sure to roll in the system. Make sure all roles understand how it works. Communicate to each team member the importance and usefulness of this information. This work is enough to do once - and it will pay off many times. You will save a lot of time and nerves in the future. Take a word.

How not to stay with a long release

or don't try to grasp the immensity

The most severe and at the same time classic mistake at the stage of forming a release plan and release cycle is to try to include as many new features and fixed bugs as possible in each release.

Usually this story develops like this: at the stage of forming a plan, we include 10 features, after two weeks we have 12, then 15, and the release goes as long as 25. The time before the release stretches, there is no intermediate feedback, and after the release we get that avalanche of reviews. Beauty!

And how does this threaten us? Here’s what:

Wash off. Repeat. The result is obvious.

How to be? What to do? Release as often as possible. You say: laudable intention, but something does not come out! I agree. Getting to the frequent release cycle is not easy. Here you also need to understand that these are not just frequent releases, they are frequent releases with well-developed feedback.

Everything always depends on the specifics of the product. But there are some general points: a week after the release, you usually have a clear picture of both the metrics and the appeals received. From this information you can understand where there are problems.

The main and fatal mistake is usually that we do not dig deep into to understand the real reason. Most often we try to correct only the consequences.

The trouble is that the correction of the consequences only works in one case - when we missed the bugs in the release. If we are talking about usability and user desires, we need research and clarification of requirements. Need a dialogue with the client.

Before you start ramming up the following sprints to the back of the new features and Wishlist, a list of which you got from the analysis of metrics and different counters, find out a few things:

How you study customers is up to you. Interviews, observations, other research methodologies are at your discretion.

You must be prepared that none of the selected approaches will bring instant results. However, this will change the paradigm of attitudes towards the product. Step by step, the product will begin to transform. You are likely to receive:

When you learn to study clients deeper and more qualitatively, the desire to embrace the immensity will gradually disappear. And knowing the priorities and values of each feature, you will be able to build the right release cycle, and the feedback will work for you, not against you.

What is left over?

One of the most difficult stages during product development is the first weeks after the MVP release. At this time, there is a stream of feedback and information from the metrics. The better prepared you are, the sooner you will begin to improve performance. Go through several iterations and verify the built-in process of collecting and processing information about user behavior.

To proceed to the next stage, you will need to honestly answer a few questions:

We will definitely answer the answers to these important questions in the following articles. Without them, our story remains unfinished.

And a small, but important afterword. The first pancake almost always comes out lumpy. To be honest, on the first attempt it is almost impossible to select well-defined and effective metrics, ideally setting up a mechanism for collecting and processing feedback. So that later did not have to make clarifications and again communicate with users. Yes, for the first time there can be complete nonsense, there is nothing to hide. We must try again, experiment, redo and repeat again. Only by your own trial and error you will receive invaluable experience in the development of consumers and products, you will begin to create a personal work philosophy that will certainly lead to success!

THE END

We are waiting for comments and a question here, or you can ask Catherine directly at an open lesson , which will be dedicated to this topic.

We keep expanding the range of topics we teach. We have now developed the “Product Owner” course. Its author, Ekaterina Marchuk, invites you to read her article and to attend an open lesson.

How do we interpret feedback?


Lately everyone has been talking about customer development. And everyone familiar with the concept realizes how important it is; to put it bluntly, it is vital for a successful product launch.

Customer development helps you understand the value of the product and reveal consumers' hidden motives, real problems, and needs. Most importantly, custdev lets us test hypotheses. Without hypothesis testing it is hard to steer a project in the right direction because, as practice shows, bare figures alone are, alas, not representative.

But, as usual, it all ends at a purely theoretical understanding. We never have enough time for anything, including tasks as important as this one.

Almost everyone has a bad habit they would like to get rid of. However, thinking about a bad habit and actually dealing with it are completely different things. It is the same here: we seem to be doing customer development, collecting a huge amount of feedback through call centers, social networks, topical platforms, and technical service centers, and ... we do nothing useful with it. Instead of controlled collection and analysis of user feedback, we get a chaotic stream of unstructured information and no customer development at all.

When we talk about collecting feedback, it is important to understand that each channel of contact with users has its own audience and its own specifics. Our main task is not just to collect feedback, but to be able to correctly interpret the information received.

In pursuit of quantity

or why you should not launch an MVP without checks

Let's talk about the life cycle of user feedback, that is, reviews, tickets, and other user requests. Why do you think the feedback handling mechanism so often fails?

A simple example. Let's face it: we all love KPIs. And if you look at the Support Service, one of the key components of its KPIs will be the number of closed tickets.

At first glance, everything is logical. The more tickets are processed, the higher the loyalty; the higher the loyalty, the longer users are retained. But that is not how it works: the CSI (Customer Satisfaction Index) does not grow in proportion to the number of requests closed by the Support Service. Why?

It's pretty simple. With a purely quantitative approach, incoming tickets are not properly validated; that is, no customer development is applied. This is tantamount to trying to build an idea, a feature, or a whole product without testing the key hypotheses: why are we doing all this? What need, task, or pain are we trying to address? What benefits do we want to bring? How such an attempt ends is, I think, obvious to everyone.

That is why it is incredibly important to build a transparent system for processing user tickets and requests. That is what we will talk about today.

How did we end up like this?

or about the regular logjams in support after a product launch

Take the standard scenario. The product owner, Timofey, always relies on his experience and sense of beauty. As it happened, while the MVP was being built the deadlines were burning and the stakeholders were raging, so Timofey decided there was no time for in-depth consumer research and the team could do without it. Everyone knows that an experienced team can create a good, popular product on its own. Research into real needs is not needed! Especially when there is no time.

No time! So we forgot about the A/B test before launch. There was no soft launch either, because there was no time. You remember, right?

The result is predictable: a flood of angry tickets after the release. What does Timofey do? Of course, he starts urgently fixing the problems, all at once.

Metrics, of course, we did not have. In the rush we forgot to define them and wire them in. And Timofey decides to make up for the last serious miss by adding new functionality. And at last we have a real release! Hooray? Are grateful users bringing us money?

Not at all! Instead of thanks, users bring even more tickets to the Support Service. There are more and more of them, and now this avalanche lands on our backlog ... No wonder: the Support Service works well, unlike our Timofey. Now he has to dig out from under the avalanche and save the product. Do not be like Timofey!

In our scenario, the Support Service is the most efficient unit. Judge for yourself: a huge number of tickets were processed. And what happens next? New tickets will arrive, and then more, and the cycle will repeat. The more cycles pass, the harder it is to control the situation. The backlog becomes unmanageable: it is impossible to keep it up to date or to prioritize everything that lands there.

And when the number of tickets and requests for new functionality finally goes off the scale, it becomes clear that it is impossible to live this way. So how should we live?

How to start life from scratch?

or back to basics: we introduce metrics!

You need to start by creating simple metrics. Be sure to do it before releasing the first MVP!

When you put together the first MVP, you build a value proposition model for user profiles. You have to assemble and define these profiles yourself beforehand.

If for some improbable reason none of this has been done, go back to the very beginning and proceed in order: create profiles, test hypotheses, build the value proposition, and only then the business model! Otherwise all your effort will go into heating the environment and you will get no result.

In the profiles you describe needs, benefits, and tasks. Each has its own priority, which is reflected in the MVP: everything most valuable goes into the MVP, and the rest is postponed. With a good metrics system in place, you can check how important a feature is, on its own or relative to another feature.

It may turn out that a feature is important but goes unused because of poor implementation. We can also make mistakes and misunderstand users' needs; then some features will go unused because nobody needs them. We will definitely see all of this in our metrics. After all, we do have them, right?

Good. But what if you let everything slip and the product saw the light of day without proper preparatory work? Do not write the product off! Start building metrics and talking to users. Move smoothly into the next cycle: collecting metrics -> processing feedback -> implementing features according to assigned priorities -> release. And may the Force be with you!

Fine, you say, and ask: why not simply run A/B tests before release, look at their metrics, check everything, fix the errors, and go to release?

A very good question. And you are certainly right ... if we are talking about a product that is already on the market. Then you clearly understand the target audience, and cohort A/B tests will probably be one of the best assessment tools.

But if you are creating an innovative product and have no users yet, you can only guess who they are and what they need. Sometimes it turns out they need nothing. Then things will go badly for you, but that is a completely different story ... Better not to talk about it.

So, once again. The purpose of each metric is not just to show numbers but to describe what is good and what is bad, why we came up with the metric, and how we will use it. If one metric is related to another, we need to see what the connection is, how the metrics affect each other, and how we can apply them together.

Just do not assume that as the number of metrics grows, the overall picture will necessarily become clearer and mistakes easier to correct. Adopt a few rules to help structure your metrics:

- collect requirements from the teams, involving representatives of different levels and competencies;

- sketch out metrics, and do not be afraid to write down everything that comes to mind;

- break the metrics into distinct categories, from business aspects to the interface;

- drop from the list any metrics you do not know how to interpret;

- after the first round of collecting metrics, conduct an audit: discard those you cannot interpret and add what you missed.

Metrics should have a clear structure and order. Avoid metrics that have no specific goal and those you cannot adequately interpret. Working with such metrics leads to wrong conclusions.
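As a rough illustration of these rules, each metric can be written down together with its purpose and its interpretation, and an audit can then set aside the ones that lack either. This is only a sketch: the `MetricSpec` record, its field names, and the sample metrics are hypothetical and do not come from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class MetricSpec:
    """Hypothetical description of one metric; all field names are illustrative."""
    name: str
    category: str            # e.g. "business" or "interface"
    purpose: str             # why the metric was introduced
    good_when: str           # how to read a "good" value
    bad_when: str            # how to read a "bad" value
    related_to: tuple = ()   # names of metrics it should be read together with


def audit(metrics):
    """Split metrics into those we can interpret and those to discard."""
    keep, discard = [], []
    for metric in metrics:
        fields = (metric.purpose, metric.good_when, metric.bad_when)
        interpretable = all(text.strip() for text in fields)
        (keep if interpretable else discard).append(metric)
    return keep, discard


# Made-up sample data, only to show the audit step.
metrics = [
    MetricSpec(
        name="signup_conversion",
        category="business",
        purpose="check that the landing page explains the value",
        good_when="rises after a release",
        bad_when="falls after a release",
    ),
    MetricSpec(name="clicks_total", category="interface",
               purpose="", good_when="", bad_when=""),
]

keep, discard = audit(metrics)
print("keep:", [m.name for m in keep])
print("discard:", [m.name for m in discard])
```

A metric with no stated purpose or no way to tell good from bad ends up in the discard list, which mirrors the audit rule above.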

Once we have sorted out the metrics and our MVP is ready to see the light of day, press the “Release!” button and step aside for a nervous smoke. Not for long.

We get the first reviews

or the sixth labor of Hercules

To make life easier for yourself and the team, and to prevent panic on board right after the release, when you are buried under an avalanche of reviews, tickets, and requests from users, think in advance about the rules for aggregating and accumulating incoming data. Why? So that all incoming feedback is not simply mirrored into the backlog amid loud calls to urgently fix every problem and immediately roll out an updated solution.

If you have read this far, you have most likely encountered badly prioritized bugs. In its nature and consequences, an incoming avalanche of unprioritized requests is about the same.

Wait, you say, isn't it the Support Service's job to serve you everything on a silver platter in the best possible form?

Formally, yes. But if you look deeper, it is the product owner who will work with this information and clean up the consequences. So it is in your interest to take care of everything personally and prepare in advance a reliable foundation for solving problems quickly and effectively. Believe me, it will be easier for you.

Formulate in advance simple, clear criteria for grouping similar requests. Do not forget to add a counter of such requests to your BTS (bug tracking system). Ideally, you would receive the numbers automatically from your CRM, based on already aggregated requests.
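A minimal sketch of such grouping and counting is shown here, assuming the simplest possible criterion: keyword matching on the request text. The rule table, the problem keys, and the sample requests are all hypothetical; a real setup would take its criteria and data from the BTS or CRM.

```python
from collections import Counter

# Hypothetical grouping criteria: a problem key and the keywords that map to it.
GROUPING_RULES = {
    "login_failure": ("log in", "login", "password"),
    "payment_error": ("payment", "card", "charge"),
}


def classify(text):
    """Return the first problem key whose keyword occurs in the request text."""
    lowered = text.lower()
    for key, keywords in GROUPING_RULES.items():
        if any(word in lowered for word in keywords):
            return key
    return "ungrouped"


def count_requests(requests):
    """Count incoming requests per problem group."""
    return Counter(classify(text) for text in requests)


# Made-up requests, only to show the counters at work.
requests = [
    "Cannot log in after the update",
    "My card was charged twice",
    "Password reset email never arrives",
    "The app icon looks odd",
]
counts = count_requests(requests)
for problem, hits in counts.most_common():
    print(problem, hits)
```

Requests that match no rule land in an "ungrouped" bucket, which is worth reviewing by hand from time to time so that new problem types get their own criteria.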

Set thresholds for the counters and assign actions to take when each threshold is reached. For example: while the number of requests about one problem is below 5, we do not record it in the backlog, unless the problem blocks our main scenario. After 5 requests, we add it to the backlog and track it. At 20 requests, we start a detailed study: we talk to users and try to understand the cause. At 50, we move it to the critical category, prioritize it, and take it into the next sprint. Your numbers may differ, and the rules will depend on the specifics of the product, the number of customers, and other factors. But you get the principle.
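The example thresholds can be sketched as a small lookup. The numbers 5, 20, and 50 come from the example above; the function name, the action labels, and the reading of "after 5" as "5 or more" are assumptions made for illustration.

```python
# Thresholds from the example in the text, highest first; adjust to your product.
THRESHOLDS = [
    (50, "critical"),     # prioritize and take into the next sprint
    (20, "investigate"),  # talk to users, look for the cause
    (5, "backlog"),       # record in the backlog and track
]


def action_for(count, blocks_main_scenario=False):
    """Map a request counter to the action assigned to its threshold."""
    for threshold, action in THRESHOLDS:
        if count >= threshold:
            return action
    # Below the lowest threshold we only record the problem if it blocks
    # the main scenario; otherwise we keep counting.
    return "backlog" if blocks_main_scenario else "wait"


for hits in (3, 5, 20, 50):
    print(hits, action_for(hits))
print(3, action_for(3, blocks_main_scenario=True))
```

Keeping the thresholds in one table makes it easy to show the rules to the Support Service and to change the numbers without touching the logic.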

An important point: none of the above means that we immediately do what the user asks for. First you need to understand what their real pain is.

So, the key steps for prioritization:

- establish prioritization criteria;

- add counters for requests of the same type;

- identify dependencies;

- add thresholds;

- define the specific actions to take when each threshold is reached;

- write detailed instructions on how the system should work.

How the system works should be clear not only to you as the Product Owner, but also to the Support Service and every member of the product team.

After completing all the key steps, be sure to run the system in. Make sure all roles understand how it works. Convey to each team member why this information is important and useful. This work only needs to be done once, and it will pay off many times over. You will save a lot of time and nerves in the future. Take my word for it.

How not to get stuck with a long release

or don't try to embrace the immensity

The most serious and at the same time most classic mistake when forming a release plan and release cycle is trying to include as many new features and bug fixes as possible in each release.

The story usually goes like this: when forming the plan we include 10 features, two weeks later we have 12, then 15, and the release ships with as many as 25. The time to release stretches out, there is no intermediate feedback, and after the release we get that same avalanche of reviews. Lovely!

And what does this threaten us with? Here is what:

- feature delivery deadlines are missed;

- we cannot retain customers / users;

- we cannot attract new customers, so the sales plan fails;

- market share shrinks;

- stakeholders are unhappy;

- the whole team is demotivated.

Rinse. Repeat. The result is obvious.

What to do? Release as often as possible. You will say: a laudable intention, but somehow it does not work out! I agree. Getting to a frequent release cycle is not easy. You also need to understand that these are not just frequent releases; they are frequent releases with a well-established feedback process.

Everything always depends on the specifics of the product. But there are some general points: a week after the release, you usually have a clear picture of both the metrics and the requests received. From this information you can see where the problems are.

The main, fatal mistake is usually that we do not dig deep enough to understand the real cause. Most often we try to fix only the consequences.

The trouble is that fixing the consequences works in only one case: when we let bugs slip into the release. When it comes to usability and user wishes, we need research and clarification of requirements. We need a dialogue with the client.

Before you start cramming the upcoming sprints full of new features and wishlist items drawn from your analysis of metrics and the various counters, find out a few things:

- Do you understand the problem / task / wish?

- Is the priority assigned correctly?

- Is there something more important and significant that you don’t know about?

- Have you calculated the value of implementing a particular feature?

How you study customers is up to you. Interviews, observation, and other research methods are at your discretion.

Be prepared for the fact that none of the chosen approaches will bring instant results. However, they will change the attitude toward the product. Step by step, the product will begin to transform. You are likely to get:

- clear priorities for each feature;

- sprint goals;

- assessment of the value of each release.

When you learn to study clients more deeply and thoroughly, the desire to embrace the immensity will gradually disappear. And knowing the priority and value of each feature, you will be able to build the right release cycle, and feedback will work for you, not against you.

What is the bottom line?

One of the most difficult stages of product development is the first weeks after the MVP release. During this time, feedback and metric data come streaming in. The better prepared you are, the sooner you will begin to improve your numbers. Go through several iterations and verify the process you have built for collecting and processing information about user behavior.

To proceed to the next stage, you will need to honestly answer a few questions:

- How do you evaluate the numbers you obtained: are they good or bad? Did the hypothesis hold up or not?

- Which metrics need to be fixed first?

- How do you prioritize features?

We will definitely answer these important questions in upcoming articles. Without them, our story remains unfinished.

And a small but important afterword. The first pancake almost always comes out lumpy. To be honest, it is almost impossible on the first attempt to choose well-defined, effective metrics and set up a perfect mechanism for collecting and processing feedback, so that you never have to make refinements or go back to users later. Yes, the first time the result may be complete nonsense; there is no hiding it. You have to try again, experiment, redo, and repeat. Only through your own trial and error will you gain invaluable experience in customer and product development and begin to form a personal work philosophy that will certainly lead to success!

THE END

We are waiting for your comments and questions here, or you can ask Ekaterina directly at the open lesson dedicated to this topic.

Source: https://habr.com/ru/post/358644/
