AI, practical course. Project planning

This article will focus on:

- developing an idea into a real project using various analysis methods and appropriate project management tools;

- using the CRISP-DM methodology (Cross-Industry Standard Process for Data Mining);

- defining standard tasks for any AI project.

Some tasks are presented with group work in mind. If you are working alone, you can skip these sections or complete the corresponding tasks yourself, taking on several roles.

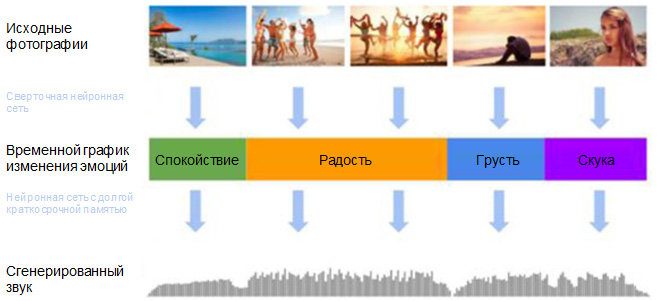

As the educational project for this series of articles, we chose an application that recognizes emotions in uploaded images using an image processing algorithm (emotion recognition), creates musical accompaniment that fits the recognized emotions, and then edits a video that combines the images with the music.

Designing the project

Project design begins with defining the scope of the project, that is, what you will create and how. This removes ambiguity later in development.

For example:

- How many pictures can I upload?

- What is meant by the recognition of emotions?

- How will we represent the emotion on the computer?

- What emotions will be supported?

- How are we going to assign an emotion to a set of images?

- How will we train the music making model?

- How will the emotion recognition component and the music production component be connected?

- How will the music creation process work?

- How do we ensure that the melody created by the computer conveys the emotion?

- How will the final video, with smooth transitions between frames, be assembled?

You need to answer each of these questions before moving on to the implementation phase. You also need a methodology that helps you formulate your own questions about the future application so that you do not miss any important aspects.

Project Analysis Methods

We recommend using these three simple but effective techniques for analyzing a project and formulating tasks.

- Hierarchical decomposition. This technique is based on the “divide and conquer” principle: tasks are iteratively decomposed into subtasks until a single subtask takes no more than about two hours to complete, or until the time required for further decomposition becomes comparable to the time needed to complete the task. At the top of the hierarchy is a block that represents the entire system. The next level contains blocks for logically autonomous system components, which together deliver the result the user needs, and so on down the hierarchy. Because you start with the system as a whole and then break it down, level by level, into mutually exclusive components, you are unlikely to miss a task.

- Analysis of possible situations. With this method, you keep asking yourself “What if?” to uncover hidden nuances.

- User journey modeling. This technique is used by many user interface developers and product managers. It involves creating a set of tasks and scenarios that your application must support. Imagine that you are using the application yourself, and walk through the typical scenarios a user can trigger. If you can mentally go from beginning to end without a hitch, there is no need to add further tasks: this is your stopping criterion. At the very beginning, however, you will most likely run into problems at every step of the user's path. In that case, just write down the essence of the problem and create a task to solve it later.
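Hierarchical decomposition can be tracked with a very small tree structure. The sketch below is only an illustration: the `Task` class, the hour estimates, and the subtask names are assumptions made for the example, and the two-hour threshold is the one mentioned in the list above.

```python
from __future__ import annotations

from dataclasses import dataclass, field

MAX_LEAF_HOURS = 2.0  # stop splitting once a leaf task fits into about two hours


@dataclass
class Task:
    """One block in the decomposition hierarchy."""
    name: str
    hours: float = 0.0  # own estimate; only used when the task has no subtasks
    subtasks: list[Task] = field(default_factory=list)

    def total_hours(self) -> float:
        """Own estimate for a leaf, otherwise the sum over all subtasks."""
        if not self.subtasks:
            return self.hours
        return sum(t.total_hours() for t in self.subtasks)

    def needs_split(self) -> list[str]:
        """Names of leaf tasks that are still larger than the threshold."""
        if not self.subtasks:
            return [self.name] if self.hours > MAX_LEAF_HOURS else []
        names: list[str] = []
        for t in self.subtasks:
            names.extend(t.needs_split())
        return names


# Hypothetical estimates, for illustration only.
app = Task("Video editing application", subtasks=[
    Task("User interface", hours=6.0),
    Task("Emotion recognition", subtasks=[
        Task("Define input and output data", hours=1.0),
        Task("Find or create a dataset", hours=2.0),
        Task("Train the model", hours=8.0),
    ]),
    Task("Music creation", hours=10.0),
])

print(app.total_hours())
print(app.needs_split())
```

Every name returned by `needs_split()` is a candidate for another round of decomposition; when the list is empty, the two-hour criterion is met.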

Analyzing the video editing application

Applying hierarchical decomposition to our project, we decide that the video editing application, like any other application that involves user interaction, can be divided into:

- the front end (the interface part), i.e. the component that interacts directly with the user;

- the back end (the software and hardware part), i.e. the component where the interesting AI processing takes place and which, in turn, can be divided into:

  - the emotion recognition component;

  - the music creation component.

Across the front end and back end there are three main components: the user interface (blue), emotion recognition (orange), and the creation of musical accompaniment (green), as shown in Fig. 1.

Fig. 1. Structure of the video editing application.

User interface

The user interacts with this component, using buttons to upload images, start the video editing process, and share or download the result. Although the user interface is an important element, this series focuses on intelligent data processing in the back end using new technologies from Intel.

Experience the benefits of an AI-based video editing app.

Step 1. Recognizing emotions

Step 2. Video editing

Step 3. Sharing

Emotion recognition in the back end (image processing)

This component is responsible for assigning emotions to images. Each intelligent component that processes data has two processes:

- the learning (data mining) process, i.e. the training phase;

- the application process, i.e. the application and testing phase.

Each of these processes is associated with a number of standard AI tasks.

During the training phase, you supply the component with data together with the expected responses and teach the machine the "rule" for assigning the appropriate responses to input data from the same population or distribution. The tasks of the training phase include:

- define input and output data for each stage of data processing;

- find or create a machine learning dataset that matches specific input and output data;

- train a machine learning model;

- evaluate the machine learning model.

During the application and testing phase, the trained model is used to predict responses for previously unseen objects from the same population or distribution. Tasks include:

- define input and output data for each stage of data processing;

- deploy a machine learning model.

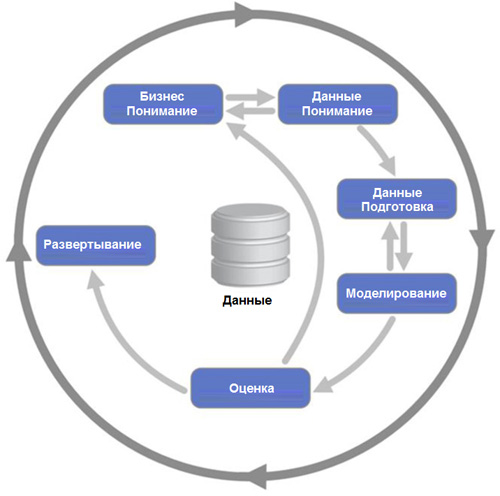

In a broader context, the tasks and steps typical of almost every AI project can be defined using the CRISP-DM methodology. Within this methodology, a data mining or AI project is represented as a cycle of six phases, as shown in Fig. 2. Each phase includes various tasks and subtasks, for example, data labeling, model evaluation, and feature engineering. The process is a cycle because any real intelligent system can always be improved.

Figure 2. Relationships between different phases of CRISP-DM.

Moving from theory to practice, let's formulate the input and output data for training and testing the emotion recognition model.

We have six different emotions: anxiety, sadness, joy, calm, determination, and fear.

- Each emotion is represented in the computer as a code from 1 to 6, in accordance with the encoding scheme used.

- Each image is represented as a three-dimensional tensor (three-dimensional array), where the three dimensions are height, width, and color channel (for example, RGB).

Training

- Input data: a four-dimensional tensor (a set of three-dimensional images) with labels, i.e. images and their emotion classes.

- Output data: a trained machine learning model.

Testing

- Input data: a three-dimensional tensor (an image without a label) and a trained model.

- Output data: a multinomial probability distribution over the codes (classes).
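The input and output formats above can be made concrete with plain Python lists. This is a minimal sketch under stated assumptions: the order in which the emotions receive codes 1 to 6 is chosen arbitrarily here, and the raw scores are made-up numbers standing in for the output of a trained model.

```python
from __future__ import annotations

import math

# The six emotions from the text. Assigning codes 1..6 in list order is an
# assumption of this sketch.
EMOTIONS = ["anxiety", "sadness", "joy", "calm", "determination", "fear"]


def emotion_code(name: str) -> int:
    """Map an emotion name to its integer code (1-based)."""
    return EMOTIONS.index(name) + 1


def shape(tensor) -> tuple:
    """Shape of a regular nested list, e.g. (height, width, channels)."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into a probability distribution over the classes."""
    peak = max(scores)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# One 4x4 RGB image: a three-dimensional tensor (height, width, channel).
image = [[[0, 0, 0] for _ in range(4)] for _ in range(4)]

# Training input: a set of images (four-dimensional) plus one label per image.
batch = [image, image]
labels = [emotion_code("joy"), emotion_code("fear")]

# Testing output: a distribution over the six codes (scores are made up).
distribution = softmax([0.1, 0.3, 2.5, 0.9, 0.2, 0.1])
predicted_code = distribution.index(max(distribution)) + 1

print(shape(image), shape(batch), labels, predicted_code)
```

Keeping the full distribution, rather than only the most likely code, leaves room to combine the predictions for several images later.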

Next, you need to see how the project fits into the big picture, and apply system analysis methods to eliminate inconsistencies:

- The user will upload multiple images. Therefore, we must decide how to derive a single overall mood for the entire video from the emotions recognized in the individual images.

- Images will be accompanied by music. Therefore, we must understand how a melody will be created on the basis of a particular emotion.

- The content of the images must be synchronized with the music, and the transitions between the images must be smooth so that the user can enjoy watching.

To begin, we will create music for a single image. We will return to multiple images after we have examined the music creation component and understood how to integrate it with the emotion recognition component.

Creation of musical accompaniment in the back end

This component produces a melody in response to an emotion. To connect the music creation component with the image processing component, we need a link between the emotion code and the audio signal:

- Take a random song from a previously prepared database of well-known songs.

- Adjust the tempo, scale, and rhythm of the melody to match the emotion using a simple script.

- Start the machine-learning-based music creation process, using the base melody tuned to the emotion as input.
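The modulation step in the list above could look roughly like the following sketch. It is not the script used in the project: the `Note` type, the `MODULATION` table, and all numeric values are hypothetical, and only tempo and key (transposition) are handled, while scale and rhythm changes are left out for brevity.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    pitch: int    # MIDI note number, 60 = middle C
    beats: float  # duration in beats


# Hypothetical settings, for illustration only:
# emotion -> (tempo multiplier, shift in semitones).
MODULATION = {
    "joy": (1.2, 2),
    "calm": (0.8, 0),
    "fear": (1.1, -3),
}


def modulate(melody: list[Note], tempo_bpm: float, emotion: str) -> tuple[list[Note], float]:
    """Return a transposed copy of the melody and the adjusted tempo."""
    tempo_factor, shift = MODULATION[emotion]
    shifted = [Note(n.pitch + shift, n.beats) for n in melody]
    return shifted, tempo_bpm * tempo_factor


base = [Note(64, 1.0), Note(64, 1.0), Note(64, 2.0)]  # three repeated notes
joyful, joyful_tempo = modulate(base, tempo_bpm=100.0, emotion="joy")

print([n.pitch for n in joyful], joyful_tempo)
```

Because `modulate` returns a new list, the same base melody can be reused for every emotion without being altered.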

The music creation process should complete the base melody tuned to the emotion by choosing the most natural-sounding next note, which it has learned to do from a large corpus of songs.

As with the emotion recognition component, we need to define the input and output data for training and testing the music creation model.

Training

- Input data: a collection of songs.

- Output data: a trained machine learning model for creating musical accompaniment (for example, one that predicts the next note given a specific note).

Testing

- Input data: a trained machine learning model for creating musical accompaniment and a prepared base melody (a series of notes).

- Output data: a sequence of notes that completes the base melody.
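To illustrate this training and testing interface, the sketch below uses a first-order Markov chain (a table of note-to-next-note counts) as a deliberately simple stand-in for the machine learning model. It is not the model used in the project, and the note names and toy songs are invented for the example.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train(songs: list[list[str]]) -> dict[str, Counter]:
    """Training: count, for every note, which notes follow it in the songs."""
    transitions: dict[str, Counter] = defaultdict(Counter)
    for song in songs:
        for current, following in zip(song, song[1:]):
            transitions[current][following] += 1
    return transitions


def continue_melody(model: dict[str, Counter], melody: list[str], extra_notes: int) -> list[str]:
    """Testing: extend the base melody with the most frequent next note each time."""
    result = list(melody)
    for _ in range(extra_notes):
        followers = model.get(result[-1])
        if not followers:  # note never seen during training: stop early
            break
        result.append(followers.most_common(1)[0][0])
    return result


# Toy collection of songs, invented for illustration.
songs = [["C", "D", "E", "C"], ["C", "D", "E", "F"], ["E", "F", "G"]]
model = train(songs)

print(continue_melody(model, ["C", "D"], extra_notes=3))
```

The two functions mirror the two phases: `train` takes a collection of songs and returns a model, and `continue_melody` takes that model plus a base melody and returns the completed note sequence.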

Given these fixed APIs for the music creation component, we need to implement the emotion-dependent modulation script and complete all the AI tasks related to creating the musical accompaniment, including finding a dataset to train the music creation model and finding base melodies. We will discuss these aspects later in the series. For our educational project we will use the following:

- Base melodies are taken from an open collection of musical compositions (works created before 1922).

- The music creation model is trained on Bach chorales, i.e. symbolic note representations of Bach's works, which were prepared as part of the BachBot project.

- Source files are taken from the music21 project.

Connecting the components

The options for combining images and melodies are listed below, with their advantages and disadvantages.

Option A: One base tune is modulated according to the prevailing emotion for all images.

- Benefits: Smooth transitions between images.

- Disadvantages: The user may experience discomfort if the musical accompaniment stays the same when a joyful image changes to a sad one.

Option B: One base tune is modulated separately for each emotion found in the images.

- Benefits: Smooth transitions between images and a clear correspondence between the emotion in the image and musical accompaniment.

- Disadvantages: None.

Option C: Different base melodies are modulated separately for each emotion found in the images.

- Benefits: A clear match between the emotion in the image and music.

- Disadvantages: Non-smooth transitions between images, because music can change dramatically when emotions change.

For example, suppose we have three images with different emotions (joy, calm, and fear). We use “Jingle Bells” as the base song and BachBot to create the music. As a result, we get three songs based on three versions of “Jingle Bells” (the first for joy, the second for calm, and the third for fear). Each incoming image is processed separately by calling the emotion recognition API, while a single base song is shared by all images. The base song is modulated for each emotion, and each image is then paired with the version of the song modulated to fit its emotion.
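The flow of this example can be sketched as follows. The `modulate` function here is only a placeholder that returns a label instead of audio, and all function names are hypothetical; `prevailing_emotion` is included to show the single emotion that Option A would need.

```python
from __future__ import annotations

from collections import Counter


def modulate(base_song: str, emotion: str) -> str:
    """Placeholder for modulation plus music creation; returns a label only."""
    return f"{base_song} ({emotion} version)"


def soundtrack_option_b(image_emotions: list[str], base_song: str) -> list[str]:
    """Option B: modulate one base song once per distinct emotion, then assign per image."""
    versions = {e: modulate(base_song, e) for e in set(image_emotions)}
    return [versions[e] for e in image_emotions]


def prevailing_emotion(image_emotions: list[str]) -> str:
    """Option A: reduce all images to the single most frequent emotion."""
    return Counter(image_emotions).most_common(1)[0][0]


emotions = ["joy", "calm", "fear"]  # one recognized emotion per image
tracks = soundtrack_option_b(emotions, "Jingle Bells")

for emotion, track in zip(emotions, tracks):
    print(emotion, "->", track)
```

Modulating once per distinct emotion, rather than once per image, means that two images with the same emotion share the same version of the song.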

General recommendations for project management

To decompose a project, do the following:

- determine the top-level structure (up to three levels of decomposition);

- talk to each team member separately (this is usually done by the system architect) in order to:

  - learn about the capabilities and requirements of the respective components;

  - define formal API requirements;

- refine the project decomposition hierarchy. This way, even the smallest integration details are revealed at an early stage of the project, which reduces the amount of work and rework at the integration stage.

Below are general guidelines that will help you plan your project.

- One specialist from your project team (usually the system architect) is responsible for the decomposition, and all other team members should be actively involved in the process, providing input and feedback on the technical specifications. This ensures conceptual integrity, the key property of a well-designed system.

- To find the right abstraction (input and output) for each component, you may need to consider several components at the same time. In this case, determine all possible input and output formats for each system, and then select a configuration suitable for both components.

- Since tasks are repeated from project to project, after defining AI tasks you can save time by creating a set of standard AI tasks as a subtree in the decomposition hierarchy.

- Start with a simple case (for example, one image, one emotion, or one melody) and build out from it, instead of trying to create a component integration model for the whole application from scratch.

In subsequent articles, we will consider in detail all the tasks for our educational project.

Checklist of decomposition items and resources for an AI project

Below is a list of typical tasks for AI projects. Use it as a template for your project.

- Formulate a business problem by defining input and output data, for example, objects and labels or target variables.

- Analyze the data.

- Make a selection of data.

- Perform exploratory data analysis.

- Clean the data.

- Delete duplicate, extraneous data, etc.

- Normalize feature values.

- Create a test setup for the evaluation methodology.

- Develop a machine learning model.

- Prepare a machine learning dataset.

- Collect baseline data.

- Find a suitable data set.

- Configure the storage infrastructure.

- If labels are missing, annotate the source data.

- Write annotation guidelines.

- Perform the annotation process.

- Check the quality of the annotation.

- Train a machine learning model.

- Select a library.

- Perform a comparative analysis of existing libraries.

- Install and configure the most appropriate library.

- Select and configure the infrastructure for machine learning.

- Perform a comparative analysis of private and public cloud environments and performance technologies.

- Perform resource planning to achieve your machine learning goals.

- Select an algorithm.

- Create a prototype of the algorithm.

- Refine your model by tuning hyperparameters and adding domain-specific improvements.

- Select a library.

- Perform a model evaluation.

- Deploy the model.

- Determine the nature of service level agreements (SLAs) for machine learning APIs.

- Wrap the machine learning model in an API (put it in a container or reimplement it in a more efficient programming language).

- Test the API under load to see if it complies with the terms of service level agreements (SLA).
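As an illustration of the last item, the check below compares measured response times with a latency limit. It is only a sketch: the article does not define the SLA, so using the 95th percentile as the criterion and the function name `meets_sla` are assumptions made for the example.

```python
from __future__ import annotations

import statistics


def meets_sla(latencies_ms: list[float], p95_limit_ms: float) -> bool:
    """True if the 95th-percentile latency of the measured requests is within the limit."""
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(latencies_ms, n=20)[-1]
    return p95 <= p95_limit_ms


# Made-up measurements from a load test, in milliseconds.
measured = [100.0] * 90 + [900.0] * 10

print(meets_sla(measured, p95_limit_ms=500.0))
```

A percentile is used instead of the mean so that a small share of very slow requests cannot hide behind many fast ones.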

Conclusion

In this article, we looked at three popular systems analysis methods that were applied to the project to create a video editing application. Having performed a hierarchical decomposition, we identified three main components of the application: the user interface, emotion recognition, and the creation of musical accompaniment. We performed a detailed analysis of the AI components for emotion recognition and musical accompaniment creation, including the definition of input and output data, training and testing, as well as the integration of components. Finally, we gave recommendations on project planning, and also shared a set of standard AI tasks based on the CRISP-DM methodology, suitable for any AI project.

Source: https://habr.com/ru/post/358506/