Chip aging is accelerating

As chips built on advanced process nodes make their way into cars, and as usage patterns change in data centers, new questions are arising about their reliability.

Reliability is becoming a key differentiator for new chips entering markets such as automotive, cloud computing and the industrial “Internet of Things”, but actually proving that a chip will work as intended over a long period is becoming harder.

In the past, reliability was usually considered the fab's problem. Chips designed for computers and phones were expected to run at peak performance for an average of two to four years of normal use. After that their functionality began to degrade, and users upgraded to the next revision of the product, which boasted new features, more speed and longer battery life. But as chips are developed for new markets, or for markets that used less sophisticated electronics in the past (cars, machine learning, the Internet of Things, the industrial Internet of Things, virtual and augmented reality, home automation, the cloud, cryptocurrency mining), reliability is no longer just one item on a long checklist.

Each of these target markets has unique needs and characteristics that determine how, and under what conditions, chips are used. That in turn seriously affects aging, safety and other factors. Consider the following:

- Reliability is no longer measured in years. Usage patterns are changing dramatically. A car today may sit idle 90-95% of the time, while an autonomous vehicle may be idle only 5-10% of the time. This affects both how the electronics are designed and the underlying business model for developing the technology.

- The definitions of “functional” and “good enough” are changing as advanced electronics grow more complex. In the past, a cracked or dirty camera on a drone or robot was simply replaced. With more sophisticated electronics in the device, the effect of a cracked lens can be compensated for so that the device keeps working within functionally adequate limits. On the other hand, what was acceptable in less complex systems may now be unacceptable because system tolerances have tightened.

- Modeling degradation and quality now depends on far more factors than before, some of which may not even be obvious when the chip is designed. For example, a chip with a good track record may behave differently when combined with other chips or devices on a printed circuit board.
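To see why the shift in usage patterns matters, it helps to turn idle percentages into powered-on hours. The sketch below is only back-of-the-envelope arithmetic: it uses the idle fractions from the first point above and the 15-year lifetime quoted later in the article, and the function name `powered_hours` is a hypothetical helper, not something from the original text.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, leap years ignored


def powered_hours(service_years: float, idle_fraction: float) -> float:
    """Hours a device spends switched on over its service life."""
    if not 0.0 <= idle_fraction <= 1.0:
        raise ValueError("idle_fraction must be between 0 and 1")
    return service_years * HOURS_PER_YEAR * (1.0 - idle_fraction)


# Assumed scenario: the same 15-year service life, two very different duty cycles.
mostly_idle = powered_hours(15, 0.95)  # idle 95% of the time
mostly_busy = powered_hours(15, 0.05)  # idle 5% of the time

print(f"idle 95% of the time: {mostly_idle:,.0f} powered-on hours")
print(f"idle  5% of the time: {mostly_busy:,.0f} powered-on hours")
print(f"ratio: {mostly_busy / mostly_idle:.0f}x")
```

Under these assumptions the busy device accumulates roughly nineteen times as many operating hours over the same calendar lifetime, which is the gap an aging model built for occasional use has to absorb.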

The way electronics are used is changing. This is true even in data centers, which have historically been the most conservative in adopting new technologies and methodologies.


“Aging depends on clock speed and power, but in the past servers were switched on to do a job and then spent most of their time idle,” said Simon Segars, CEO of ARM. “With the move to the cloud, the design criteria have to change, because the cloud is built on continuous use. That raises a lot of questions about how to design a chip properly for long-term operation.”

At the beginning of the millennium, average server utilization was around 5-15%, a trend that had held since the 1990s, because IT staff, fearing equipment failures, were reluctant to run more than one or two applications on a single server. Two things changed that. First, energy costs began to rise. Second, and more important, companies reorganized so that IT departments rather than facilities departments became responsible for the cost of the electricity used. Both factors drove sales of virtualization software, which raises server utilization and in turn reduces the number of servers that have to be powered and cooled.

Cloud technology takes operational efficiency to a new level. The goal is to maximize utilization by balancing computing tasks across the entire data center. That makes it possible to raise utilization for all servers, not just those in one rack, and to quickly power down servers that are not needed at the moment. This approach is energy-efficient, but it has a serious effect on the degradation and aging of electronic circuits.

“We are seeing aging accelerate, all the way to complete failure of the chip,” said Magdi Abadir, vice president of marketing at Helic. “Chips start to miss clock cycles, or jitter increases. Or dielectric breakdown occurs. And every time something breaks, there is a whole avalanche of things that have to be dealt with. Many aging models were developed at a time when electronics were used only occasionally. Now the chips run constantly. Blocks inside the chip heat up, which accelerates aging. That can produce all sorts of strange effects. Many companies have not updated their aging models. They assumed their devices would last three or four years, but failure can come sooner. Deviations from the original design may be small at the outset, but aging magnifies them.”
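The link between heat and accelerated aging is commonly approximated with the Arrhenius relation, in which the rate of a thermally activated failure mechanism grows exponentially with absolute temperature. The sketch below is a minimal illustration of that textbook relation, not the model any company quoted here uses. The activation energy of 0.7 eV and the two temperatures are assumptions chosen for the example; real values depend on the failure mechanism and the process.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_acceleration(t_ref_c: float, t_hot_c: float,
                           activation_energy_ev: float = 0.7) -> float:
    """How many times faster a thermally activated mechanism runs at t_hot_c
    than at t_ref_c. The 0.7 eV default is an assumed, illustrative value."""
    t_ref_k = t_ref_c + 273.15
    t_hot_k = t_hot_c + 273.15
    exponent = (activation_energy_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_ref_k - 1.0 / t_hot_k)
    return math.exp(exponent)


# Assumed example: a block that runs at 85 °C instead of 55 °C.
factor = arrhenius_acceleration(55.0, 85.0)
print(f"aging at 85 °C runs about {factor:.1f}x faster than at 55 °C")
```

The same relation is what makes oven-based stress testing useful: a few weeks at elevated temperature can stand in for years in the field, provided the assumed activation energy actually matches the mechanism being exercised.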

The trend toward higher utilization is spreading to cars, and it will continue until fully autonomous vehicles replace human drivers. Self-driving cars process more and more information, some of it streaming from sensors such as radar, LIDAR and cameras. All of this data has to be processed faster than before and with greater accuracy, which puts a heavy load on the electronics.

“The minimum reliability requirement for ADAS [advanced driver assistance systems] is 15 years, far more than the 2-5 years for earlier modules,” said Norman Chang, chief technologist at ANSYS. “Aging is not just a matter of operating time. There is also NBTI [negative-bias temperature instability, a shift in threshold voltage], electromigration, which can also be temperature-related, ESD [electrostatic discharge] and thermal coupling.”
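Electromigration's dependence on temperature is usually described with Black's equation, in which the median time to failure of an interconnect scales as J^(-n) · exp(Ea / kT), where J is current density and T is absolute temperature. The sketch below compares two operating points using that textbook form. The exponent n = 2 and activation energy of 0.9 eV are assumed, illustrative values, and the function name is hypothetical; foundry reliability data would supply the real parameters.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def em_lifetime_ratio(j_ref: float, j_new: float,
                      t_ref_c: float, t_new_c: float,
                      current_exponent: float = 2.0,
                      activation_energy_ev: float = 0.9) -> float:
    """Ratio MTTF_new / MTTF_ref from Black's equation.

    The prefactor cancels in the ratio, so only the relative current density
    and the two temperatures matter. Parameter defaults are assumptions.
    """
    t_ref_k = t_ref_c + 273.15
    t_new_k = t_new_c + 273.15
    current_term = (j_new / j_ref) ** (-current_exponent)
    thermal_term = math.exp(
        (activation_energy_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_new_k - 1.0 / t_ref_k)
    )
    return current_term * thermal_term


# Assumed example: same wire, 20% more current density and 20 °C hotter.
ratio = em_lifetime_ratio(j_ref=1.0, j_new=1.2, t_ref_c=105.0, t_new_c=125.0)
print(f"expected electromigration lifetime shrinks to {ratio:.0%} of the reference")
```

Because both terms multiply, a modest rise in current density combined with a modest rise in temperature can cut the expected lifetime by far more than either change alone.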

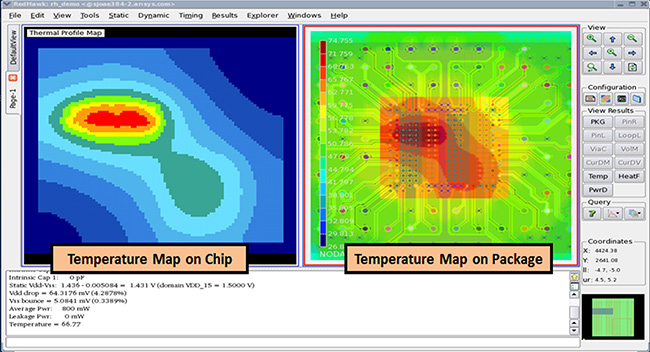

Thermal modeling of the chip and package

Although many automotive suppliers already make chips that withstand extreme temperatures, mechanical vibration and various kinds of noise, CMOS chips built on advanced process nodes have never been subjected to such stresses for long periods. Many industry sources confirm that automakers are developing 10/7 nm chips to process all of this data so that their designs do not become outdated too quickly, since a design is often developed for several successive vehicle generations. The problem is a lack of real-world data on how reliable these devices are after long exposure to the environment.

“We have to design differently,” said Segars. “One view is that in the end we will need fewer cars, because they will hardly ever sit idle. But there is another: an autonomous car will work more and wear out faster. Everything wears out in the end. The challenge is to make sure the electronics do not wear out before the mechanics, and that requires a different design. You have to consider everything from handling noise more carefully to minimizing voltage spikes.”

Thinner insulation, thinner substrate

One of the ironies of improving reliability is that it runs counter to five decades of progress aimed, for economic reasons, at shrinking the features of a chip every two years. That usually means thinner dielectrics and wires, as well as higher dynamic power. Increasingly it also means a thinner substrate. At the most advanced nodes the result has been more current leakage, noise, electromigration and other effects.

“From a circuit standpoint, you have to cope with process variation somehow,” said Andre Lang, manager for quality and reliability at Fraunhofer EAS. “But from a design standpoint, you have to consider how the system will cope with the defects it knows about. Take autonomous cars: they have a central processor that has to decide which information from which sensor to use. One of the sensors may get dirty or fail.”

This makes degradation harder to model, because the modeling has to be done in the context of the entire system. “Most parts of the system contribute to the degradation of electronic circuits, whether through NBTI, a rising defect density or process variation,” said Lang. He noted that another big problem is finding the cause of a defect without processing all the available data, because the volume may be excessive.

An example of what could go wrong

Different approaches

Process variation increases with each new node. Over the past decade smartphones set the pace (the iPhone appeared in 2007). Today the biggest users of advanced nodes are servers for data mining, machine learning, AI and cloud services.

The relationship between process variation and reliability is well documented, but variation makes it harder to model aging effects accurately. As a result, several different approaches have emerged, from complex statistical modeling and simulation to placing sensors on the chip or inside the package.

“You have to track the temperature rise at the heat source using an approach called a ‘random walk’, which works both locally and globally,” said Ralph Iverson, chief engineer in the R&D department at Synopsys. “With a random walk, the voltage is averaged, so the delta is zero.”

That helps build models, but resistivity at 5 nm and below does not always stay constant, Iverson said. Surface effects play a role, and the data does not always represent a copper contact, so more localized data is required. This is where a hybrid approach starts to come into play, since this level of uncertainty is hard to capture in the abstract.
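The article does not describe how the random-walk approach Iverson mentions is implemented. As a rough illustration of the general idea, the sketch below uses one textbook form of the technique: estimating a steady-state temperature at a point by releasing many random walkers from it and averaging the boundary temperature each one first reaches. The grid, the boundary temperatures and the function names are all assumptions made for the example.

```python
import random


def estimate_temperature(grid_size, start, boundary_temp, walks=2000, seed=0):
    """Monte Carlo estimate of the steady-state temperature at an interior point.

    Each walker steps to a random neighbouring grid node until it reaches the
    edge of the grid; the estimate is the mean boundary temperature it hits.
    `start` must be an interior node of the square grid.
    """
    rng = random.Random(seed)
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    last = grid_size - 1
    total = 0.0
    for _ in range(walks):
        x, y = start
        while 0 < x < last and 0 < y < last:  # still inside the grid
            dx, dy = rng.choice(steps)
            x, y = x + dx, y + dy
        total += boundary_temp(x, y)
    return total / walks


def hot_left_edge(x, y):
    """Assumed boundary condition: left edge at 100 degrees, other edges at 0."""
    return 100.0 if x == 0 else 0.0


center = estimate_temperature(11, (5, 5), hot_left_edge)
print(f"estimated temperature at the centre: {center:.1f}")
```

By symmetry the exact answer at the centre of this grid is 25, so the estimate should land close to that. The appeal of the method is that it answers the question for one point of interest without solving for the temperature everywhere else.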

“In the automotive world everything works quite well at the BiCMOS level, but now there are requests for advanced CMOS,” said Mick Tegethoff, marketing director at Mentor, a Siemens Business. “We are seeing growing interest from manufacturers, and electronic design automation companies are already simulating chip aging under stress. Is that enough? Any model is only an attempt to approximate the real world. You run the simulation and do everything you can to create a chip that has to work for a long time, but then you have to go back to physical testing and, for example, put the chips in an oven to stress them physically. We are seeing more and more electronics subjected to this kind of testing.”

Analog vs. digital

So far, aging modeling has focused on digital circuits. Analog circuits add a whole new dimension to aging.

“Companies have a good understanding of aging and variation in chips located close to the engine compartment, so they are not working blind,” said Oliver King, technical director of Moortec. “But analog circuits are much more variable. A digital chip will simply stop working. An analog one may start to work a little worse, a little less accurately, so you have to adapt to it. Analog designers have traditionally not pushed geometries as hard as digital designers. Electromigration is still a problem, as is current density. But the aging effects are not as severe. Even so, chips need to be designed more proactively, taking into account their state of health and whether some action needs to be taken.”

Frank Ferro, senior director of product management at Rambus, agrees. “The main problem for PHYs is ambient temperature. As it rises, the speed starts to drift, so recalibration is required. On the consumer side there is the so-called ‘Christmas test’. That is when a PlayStation or some other piece of electronics has been stored in a cold garage, and then you switch it on on Christmas morning and the device has to go from cold to working mode instantly. The same applies to memory systems in cars and base stations. Aging affects these systems, and they have to be recalibrated to cancel out the negative impact.”
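The recalibration Ferro describes can be pictured as a simple policy: recalibrate at start-up, then again whenever the temperature has moved far enough from the point of the last calibration. The sketch below is a hypothetical illustration of such a policy, not how any particular PHY works; the class name `DriftMonitor` and the 10-degree threshold are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DriftMonitor:
    """Decides when an interface should be recalibrated (illustrative only)."""

    max_drift_c: float = 10.0  # assumed threshold, in degrees Celsius
    last_calibration_c: Optional[float] = None

    def needs_recalibration(self, temperature_c: float) -> bool:
        # Never calibrated yet: always calibrate, e.g. on a cold start.
        if self.last_calibration_c is None:
            return True
        return abs(temperature_c - self.last_calibration_c) > self.max_drift_c

    def mark_calibrated(self, temperature_c: float) -> None:
        self.last_calibration_c = temperature_c


# Assumed "Christmas test" scenario: power-on in a cold garage, then warm-up.
monitor = DriftMonitor()
events = []
for temperature_c in (-10.0, -5.0, 20.0, 25.0, 45.0):
    if monitor.needs_recalibration(temperature_c):
        monitor.mark_calibrated(temperature_c)
        events.append(temperature_c)

print("recalibrated at:", events)
```

A real design would also have to account for aging, which shifts the calibration point over years rather than minutes, but the trigger-and-recalibrate structure is the same.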

Ferro said PHYs go through the same checks as digital components, including forced-failure tests and tests under voltage and temperature variation. But PHYs are designed to adapt to those variations, which is quite hard to build into digital circuits, especially at advanced nodes where variation affects power and speed.

Analog circuits are often designed around so-called mission profiles. A particular function of an autonomous car serves as the mission profile for an integrated circuit designed specifically for autonomous cars.

“One of the major problems we have faced is that these devices can be used in different ways,” said Art Schaldenbrand, chief marketing officer at IC and PCB Group. “A device can fail in many ways. We pick various stresses designed to make it fail. Temperature instability can cause 10% of devices to fail, but that is the worst-case scenario. We need better ways to express how chips degrade. For finFETs the stresses differ from those for planar transistors, so various phenomena have to be simulated.”

Packaging and other unknowns

As Moore’s Law slows, more and more companies are turning to advanced packaging to improve speed and design flexibility. It is not yet clear how to simulate advanced packaging to determine stress and aging. Part of the difficulty is the sheer number of packaging options, with no one knowing which will win. The relative newness of some of these technologies also plays a part, and only time will show what happens inside the packages.

“Package layers may be too close to other components or to loads on the other side,” said Abadir from Helic. “All of that has to be modeled. And even before wear-out sets in, aging has to be modeled, because the number of factors affecting operation is growing. So placement becomes important. If you start moving components around the layout, you change the resonant frequency. There are no simple rules for this. You have to analyze the whole design, and when you hit a problem you may have to move something.”

In complex designs there are also other anomalies that can eventually affect reliability. Some usage patterns switch a circuit on and off more often than others, which stresses it more.

“If something sits in standby for too long, it will not age the way other circuits do,” said Jushan Xie, chief software architect at Cadence. “And the smaller the device, the stronger the aging effect. Stress will be higher, and aging will be faster.”

It is not entirely clear how to tackle all of the problems described. Some of them will evidently require new materials and technologies.

“Power electronics are moving from silicon devices to SiC and GaN, which can operate at higher switching frequencies and more efficiently at higher temperatures,” said John Perry, director of industrial electronics marketing at Mentor. “In some cases this will allow the power electronics to sit closer to the motor, that is, in a hotter environment. In other cases, using semiconductors that can withstand higher temperatures means less need for cooling. However, the semiconductors have to be packaged, and the package must also withstand high temperatures. A lot of money is being poured into new technologies, such as sintered silver as a die-attach material and clips instead of wire bonds, so the packaging of power devices like IGBTs is changing dramatically in terms of materials, processing and design.”

Conclusion

Attitudes are shifting as aging, stress and other effects cause more and more problems, both in the move to advanced process nodes and in extended use in markets where device safety matters.

“The starting point is that customers are asking us questions today,” said Lang. “Their starting points differ from customer to customer, but the questions come up quite often. Many are only just beginning to address the issue. They are dealing with increased voltage or temperature, and some experiments are under way to extrapolate the effect of overstress. But understanding how degradation will affect the whole circuit is harder. For complex chips, much more needs to be done.”

But as attitudes change, so does the contribution people make to solving these problems. Chip designers are only beginning to think about modeling degradation and aging. As happened with power electronics a decade ago, all of this will soon change.

Source: https://habr.com/ru/post/358342/
