Software testing: automation, evaluation and... utopia

Last time we explained how to convince all project participants that testing is worthwhile. We hope the arguments were persuasive. Now we can talk about how to approach creating and planning tests, and how to classify and evaluate them.

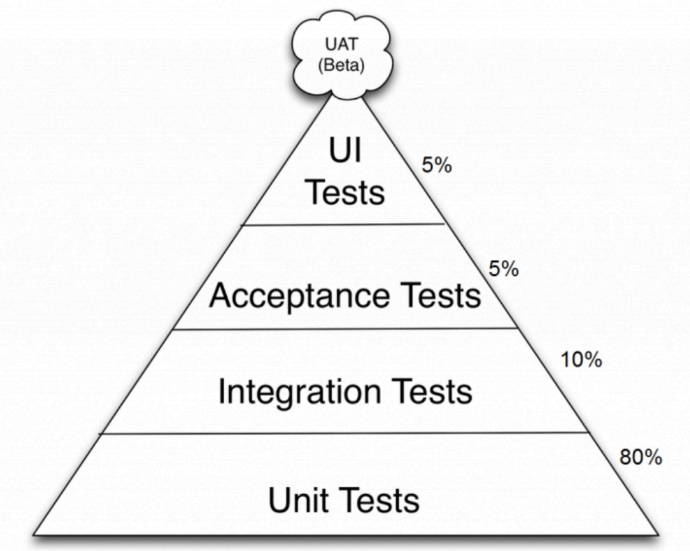

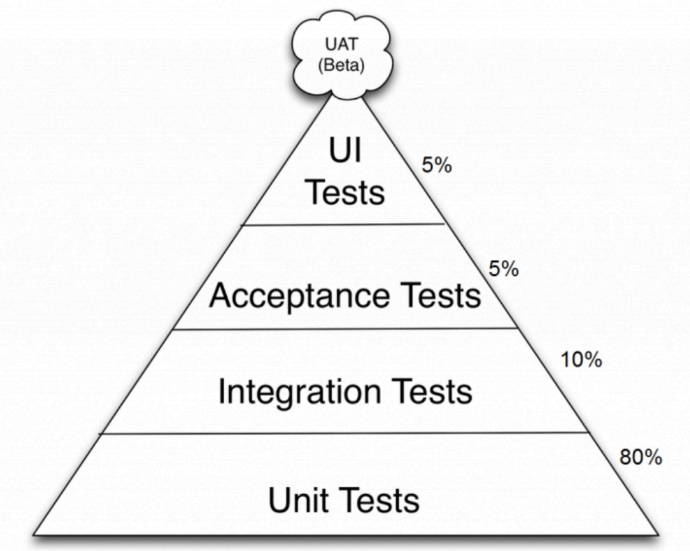


Testing pyramid

The effective distribution of tests in terms of their automation can be represented as a pyramid.

Most of the software's functionality is covered by simple unit tests, and more complex tests are created for the remaining functionality. If we turn this pyramid upside down, we get a funnel with many different bugs at the entrance and a small number of heisenbugs at the exit. Let's look at each group in more detail.


- Unit testing (also called module testing) works with individual functions, classes, or methods in isolation from the rest of the system. Because of this, when a bug occurs, you can check each unit in turn and determine where the error is. Behavioral testing is singled out as a subgroup here: in essence it resembles unit testing, but it imposes strict rules for writing test code, governed by a set of keywords (such as Given, When, Then).

- Integration testing is more complex than unit testing, as it verifies how application code interacts with external systems. For example, EarlGrey UI tests track how an application interacts with server API responses.

- Acceptance testing verifies that the functions described in the requirements specification work correctly. It consists of sets of large integration test cases that are extended or narrowed depending on the test environment and specific launch conditions.

- UI testing checks whether the application's interface matches designers' specifications or user requirements. A subgroup here is snapshot testing, in which a screenshot of the application is compared pixel by pixel with a reference image. This method cannot be used to cover the entire application, since comparing two images is resource-intensive and loads the test servers.

- Manual or regression testing covers whatever remains after all other scenarios have been automated. These cases are performed by QA specialists when there is no time to write UI tests or when an intermittent (flaky) error needs to be caught.
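
To illustrate the unit and behavioral testing items above, here is a minimal sketch of a unit test written in the Given/When/Then style used in behavior-driven development. It assumes Python's standard `unittest` module rather than a dedicated BDD framework, and the `ShoppingCart` class is hypothetical, invented only for this example.

```python
import unittest


class ShoppingCart:
    """Hypothetical unit under test: a cart that sums item prices in cents."""

    def __init__(self):
        self._items = []

    def add(self, name, price_cents, quantity=1):
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        self._items.append((name, price_cents, quantity))

    def total_cents(self):
        return sum(price * qty for _, price, qty in self._items)


class ShoppingCartBehaviour(unittest.TestCase):
    def test_total_includes_every_added_item(self):
        # Given an empty cart
        cart = ShoppingCart()
        # When two items are added, one of them twice
        cart.add("apple", 50, quantity=2)
        cart.add("pear", 70)
        # Then the total is the sum of price * quantity
        self.assertEqual(cart.total_cents(), 170)

    def test_rejects_non_positive_quantity(self):
        # Given an empty cart
        cart = ShoppingCart()
        # When / Then adding zero items is an error
        with self.assertRaises(ValueError):
            cart.add("apple", 50, quantity=0)
```

The tests can be run with a standard runner such as `python -m unittest`. Here the Given/When/Then keywords are only comments; Gherkin-based BDD tools turn them into enforced syntax.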

At the start of a project, and in young teams, manual tests take a lot of time and effort, while automated tests are hardly used at all. This makes testing less effective, and the situation needs to be corrected: move away from manual tests and increase the number of unit tests.

Unit vs UI

Compared to UI tests, unit tests run faster and take less time to develop. They can run locally, without powerful device farms or dedicated devices. Feedback from unit tests arrives several times faster than from UI tests. When the API or an XML file changes, UI tests start failing en masse and have to be rewritten each time. Against this background, an important advantage of unit tests is their greater stability.

In terms of code coverage, unit tests are more efficient, but they cannot cover all testing on their own. You need to constantly maintain a balance in which unit tests cover the basic cases and UI tests handle more complex scenarios.

It is important to understand that a failure in a high-level test signals a gap in the lower-level tests. So when an error is found, for example in production code, you should immediately write the corresponding unit test. A bug that comes back is far more frustrating than one detected for the first time.
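
The "write a unit test as soon as a bug is found" rule can be sketched as follows. This is a minimal example under an assumed bug history: the hypothetical `parse_price` function once parsed "12.5" as 1205 cents instead of 1250. The regression test pins the fixed behavior so the bug cannot quietly come back.

```python
import unittest


def parse_price(text):
    """Hypothetical production function: parse a price such as "12.50" into cents.

    Assumed bug history: an earlier version computed int(fraction or 0), so
    "12.5" was parsed as 1205 cents instead of 1250. This is the fixed version.
    """
    whole, _, fraction = text.strip().partition(".")
    # Pad with zeros, then keep exactly two digits ("5" -> "50", "" -> "00").
    cents = (fraction + "00")[:2]
    return int(whole) * 100 + int(cents)


class ParsePriceRegressionTest(unittest.TestCase):
    def test_single_fraction_digit_means_tens_of_cents(self):
        # Regression test written right after the bug was found: it fails on
        # the old implementation and keeps the bug from coming back.
        self.assertEqual(parse_price("12.5"), 1250)

    def test_previously_working_inputs_still_work(self):
        self.assertEqual(parse_price("12.50"), 1250)
        self.assertEqual(parse_price("3"), 300)
```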

Test Code Requirements

In addition to the well-known SOLID principles, which determine the quality and elegance of code, test code has to meet two more requirements: speed and reliability. Both can be achieved by following five basic principles summarized by the acronym FIRST.

- F - Fast. Good tests give feedback as quickly as possible. Running the test itself, setting up its environment, and cleaning up resources afterwards should take a few milliseconds.

- I - Isolated. Tests should be isolated. Each test runs independently: all the data it needs comes from its own environment, and it does not depend on other tests or test modules. A nice bonus of following this principle is that the order of tests does not matter and they can be run in parallel.

- R - Repeatable. Tests should behave predictably. A test should give the same unambiguous result regardless of how many times it is repeated or which environment it runs in.

- S - Self-verifying. A test should decide on its own whether it passed or failed, and the result should be clearly visible, for example in a log.

- T - Thorough / Timely. Tests should be written alongside the code, at least within the same pull request. This ensures that the production code is covered, keeps the tests themselves relevant, and means they will not need further rework.

To simplify greatly: tests should be fast and reliable. For this, it is important that they run synchronously and do not wait for external responses or rely on internal timeouts.
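
One common way to keep a test synchronous and free of internal timeouts is to inject time as a dependency. Below is a minimal sketch, assuming a hypothetical `Session` class that expires after a timeout: the test replaces the real clock with a manually advanced `FakeClock` (also hypothetical), so it finishes without waiting, gives the same result on every run, and builds fresh state in `setUp` so test order does not matter.

```python
import time
import unittest


class Session:
    """Hypothetical unit under test: a session that expires after ttl seconds.

    The clock is injected so tests do not depend on real time.
    """

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._clock = clock
        self._ttl = ttl_seconds
        self._started = clock()

    def is_expired(self):
        return self._clock() - self._started >= self._ttl


class FakeClock:
    """Manually advanced clock: tests control time instead of sleeping."""

    def __init__(self, start=0.0):
        self.now = start

    def __call__(self):
        return self.now

    def advance(self, seconds):
        self.now += seconds


class SessionTest(unittest.TestCase):
    def setUp(self):
        # Fresh objects for every test: no shared state between tests.
        self.clock = FakeClock()
        self.session = Session(ttl_seconds=30, clock=self.clock)

    def test_not_expired_before_ttl(self):
        self.clock.advance(29)
        self.assertFalse(self.session.is_expired())

    def test_expired_at_ttl(self):
        # No time.sleep(30): the test is synchronous and returns immediately.
        self.clock.advance(30)
        self.assertTrue(self.session.is_expired())
```

Passing the clock through the constructor is only one option; the same idea applies to any network or timer dependency that a test would otherwise have to wait for.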

What tests should cover

Within TDD (test-driven development), there are two schools of testing today. The Chicago school emphasizes the results that the classes and methods under test produce. The London school focuses on behavior and relies on mock objects.

Suppose we have a class that multiplies two numbers, for example, 7 and 5. The Chicago school simply checks that the result is 35, regardless of how it was obtained. The London school says that you need to mock the internal calculator our class uses, check that it is called with the correct methods and data, and make sure that it returns the mocked data. The Chicago school tests the class as a black box, the London school as a white box. It makes sense to use both approaches in different tests.
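
The two schools can be contrasted on the multiplication example above. This is a minimal sketch using Python's `unittest` and `unittest.mock`; the `Multiplier` and `Calculator` classes are hypothetical stand-ins for "our class" and its internal calculator.

```python
import unittest
from unittest import mock


class Calculator:
    """Hypothetical internal collaborator that does the actual arithmetic."""

    def multiply(self, a, b):
        return a * b


class Multiplier:
    """Hypothetical class under test that delegates to an internal calculator."""

    def __init__(self, calculator=None):
        self._calculator = calculator if calculator is not None else Calculator()

    def multiply(self, a, b):
        return self._calculator.multiply(a, b)


class ChicagoStyleTest(unittest.TestCase):
    # Black box: only the observable result matters.
    def test_multiplies(self):
        self.assertEqual(Multiplier().multiply(7, 5), 35)


class LondonStyleTest(unittest.TestCase):
    # White box: replace the collaborator with a mock and verify the interaction.
    def test_delegates_to_calculator(self):
        calculator = mock.Mock(spec=Calculator)
        calculator.multiply.return_value = 35  # the "mocked data"
        result = Multiplier(calculator).multiply(7, 5)
        calculator.multiply.assert_called_once_with(7, 5)
        self.assertEqual(result, 35)
```

The London-style test would still pass if `Calculator.multiply` itself were broken, because the mock returns 35 regardless; the Chicago-style test would catch that. This is one reason the two styles complement each other.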

Why 100% test coverage is impossible

Even if tests cover 100% of the code, that still does not guarantee the absence of bugs. Moreover, the desire to reach 100% coverage only gets in the way: developers start chasing the number and writing tests for the sake of the metric. Test quality is indicated not by the coverage level but by simpler facts: few bugs reach the final product, and fixing a found bug does not introduce new ones. The coverage level only signals what it is supposed to by definition: whether there are parts of the code that no test covers.
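
A minimal sketch of why full coverage does not mean the absence of bugs: the single test below executes every line of the hypothetical `average` function, so a line-coverage tool would report 100%, yet `average([])` still raises `ZeroDivisionError`.

```python
import unittest


def average(values):
    """Hypothetical function: arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


class AverageTest(unittest.TestCase):
    def test_average_of_two_numbers(self):
        # This single test executes every line of average(): 100% line coverage,
        # but the empty-list case is never exercised.
        self.assertEqual(average([2, 4]), 3)
```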

In the next article of our testing series, we will move on to test design and look at various testing notations, types of test objects, and quality requirements for test code.

Source: https://habr.com/ru/post/358224/
