Notification Center: Taming 200+ Mailings

Hello! In this article we describe how we built the Notification Center, a system that improves the quality of communication with users within a large, constantly evolving system that is ten years old.

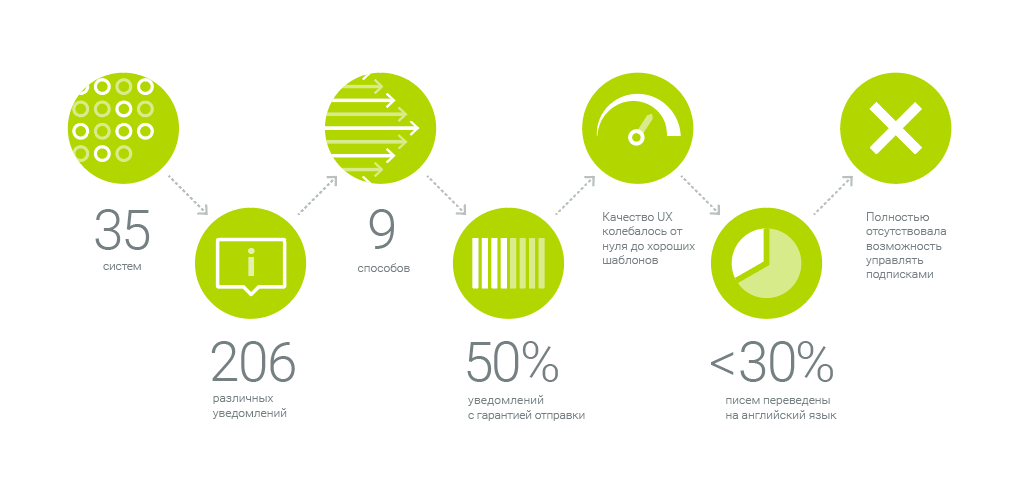

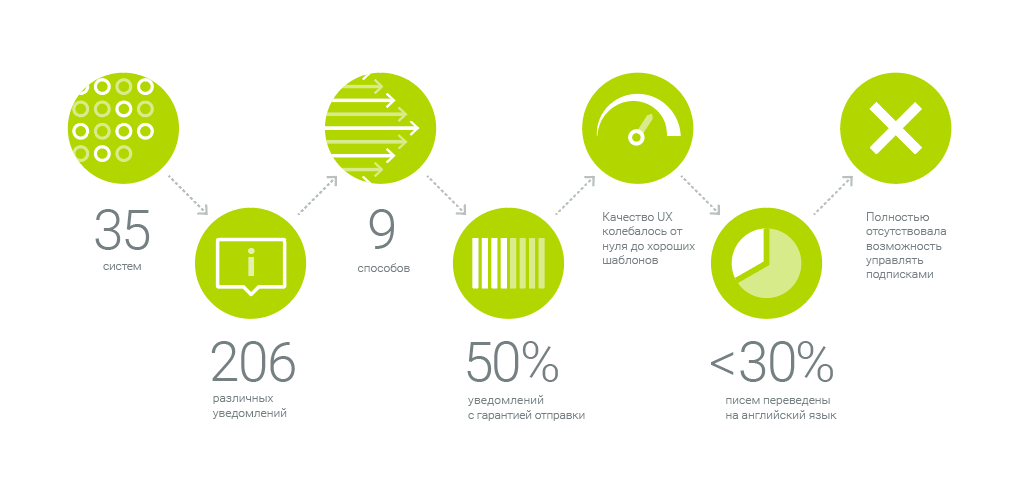

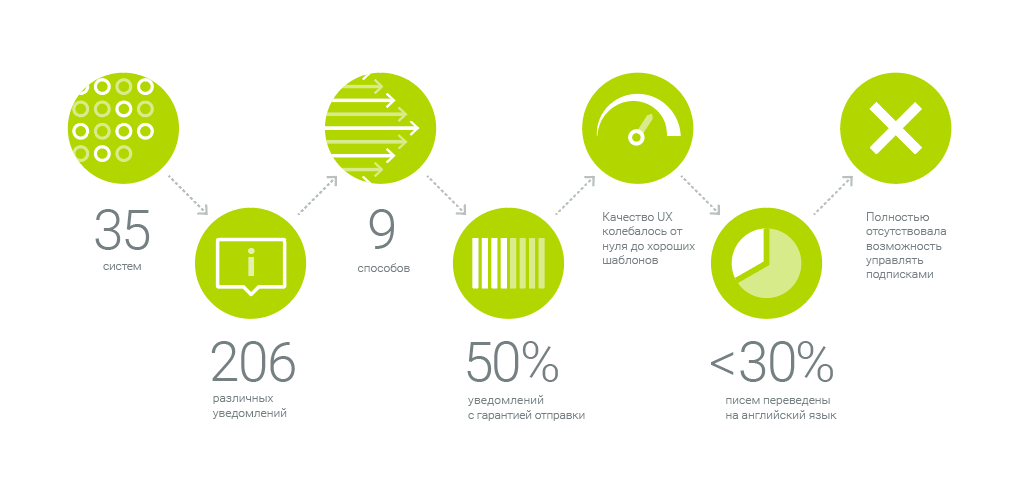

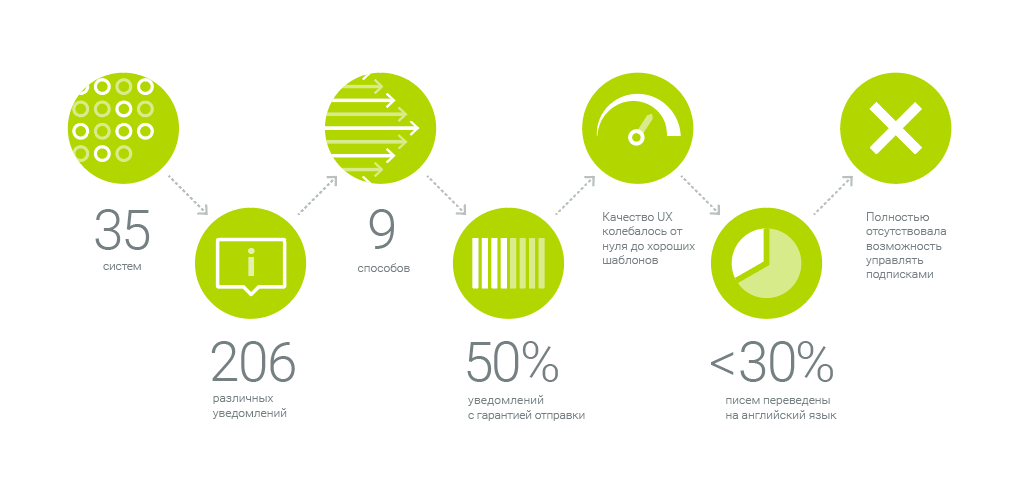

Our system sends 206 different notifications from 35 systems through nine channels. That is quite a front of work. Below we describe how we tamed this colossus by building a single communication platform: the Notification Center.


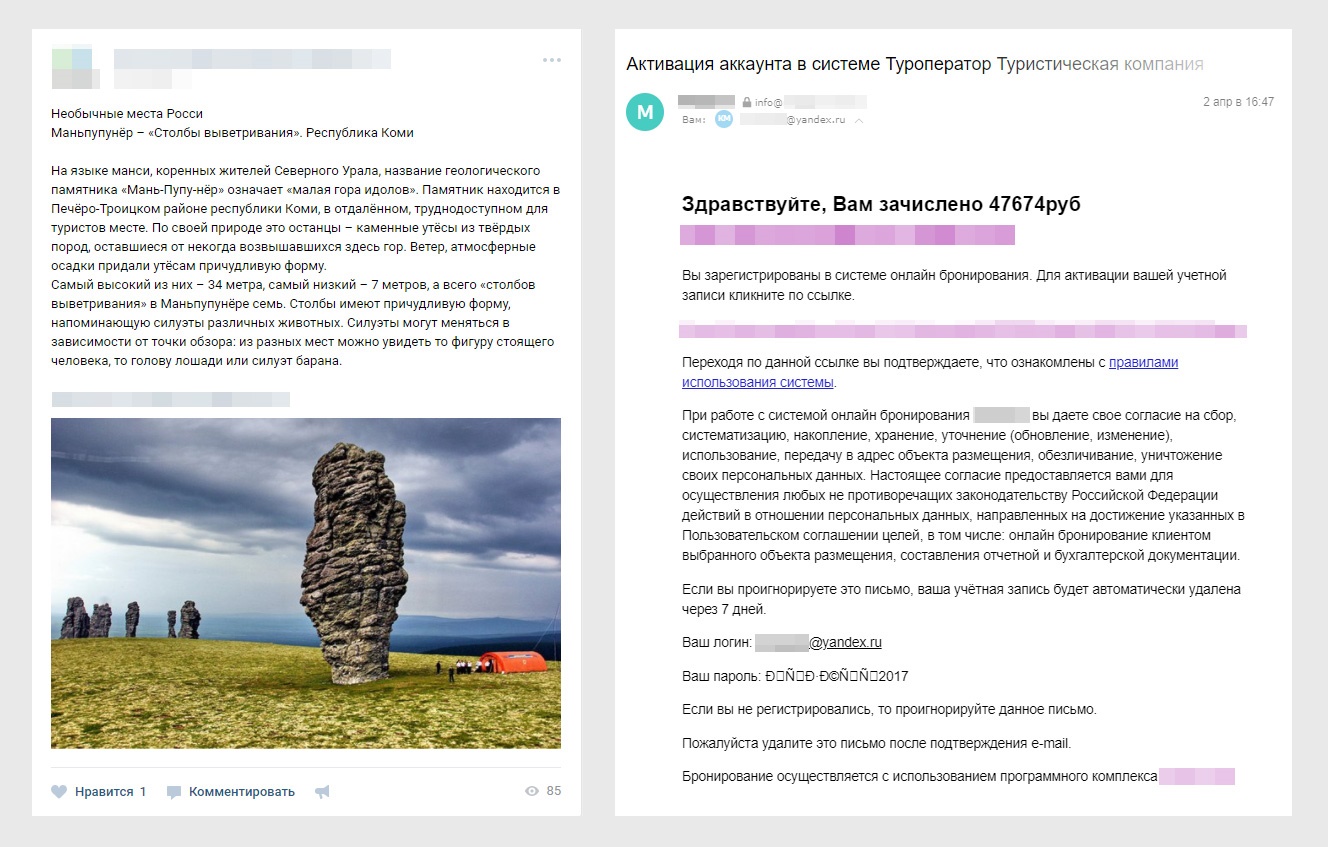

Every information system grows and starts contacting its users more and more often. Think of any online store where you recently placed an order. It sends emails about the order, newsletters and push notifications about new promotions, and SMS, and it is also active on social networks. You have probably noticed that these messages are composed differently, and how much their tone and quality can vary: neat texts and hashtags on social networks, and an email you want to send to spam without reading.

Understanding the task

First of all, we analyzed all these messages using the following parameters:

• who receives these messages,

• the dispatch strategy (immediate, scheduled, aggregated),

• whether the message has a template,

• how reliably it is sent,

• whether it fits the corporate style,

• whether it supports multiple languages.

In other words, we wanted to know what we send and to whom. Here is what we found.

We realized that we want a single notification pipeline in which messages are delivered efficiently and keep their meaning.

We want to relieve business systems of the burden and responsibility of sending notifications and of dealing with concepts such as "subscriptions", "localization", and so on.

In our concept, the Notification Center should become a centralized mini editorial office with a smart pipeline that works out, from the subject or type of a message, how, when, to whom, and through which channels it should be distributed, and then distributes it.

More specifically, here are our main goals:

1. Agree on the rules of the game and bring all notifications to a single level of quality. Analyze all notifications and eliminate cases where five systems sent the user the same information, each in its own design. Relieve business-system developers of responsibility for the UI and wording of notifications, for guaranteed delivery, and for delivery monitoring. Instead, move to a centralized system that stores all notification templates with a single design and functionality, with a dedicated team that handles these issues.

2. Ensure timely dispatch. Be able to configure how often information is sent and which type of notification is used. Be able to add a new notification quickly when the business asks for it. For example, information about an order cancellation must be delivered as quickly as possible, while sales and new collections can be announced once a week.

3. Guarantee delivery. Send important messages through the channels people check most promptly, such as SMS and instant messengers, or through all of them at once. Monitor delivery and resend through the same or other channels if there is no response.

4. Maintain a single communication style. Create a communication system (templates for emails and messages) and stick to it. This means a single UI and a single editorial policy.

5. Personalize communication. Distinguish whether we are communicating with an individual or a legal entity, and take the recipient's time zone into account.

6. Keep watch: set up monitoring and security. Instead of attaching files directly to emails, send links; when a link is opened, security checks run and download statistics are collected. Guarantee delivery and keep track of what was sent to whom.

What about ready-made solutions?

There are packaged solutions on the market that cover part of what we need. For example, you can embed event notifications in BizTalk and set up dedicated pipelines that eventually compose the email. Dynamics CRM and SharePoint have settings that react to certain events. As a rule, it all comes down to someone somewhere pressing a button and an email going out as a result. More complex features, such as aggregation policies and a polished UI, are out of the question.

Azure and AWS offer many services that can take ready-made content and send it out to a mailing list. But all of that content has to be prepared separately, and preparing the content is exactly what the Notification Center is for.

So we concluded that in our case we should not rely on an out-of-the-box solution where we configure a UI and the system connects somewhere by itself and pulls out everything it needs. Such things do not work out of the box; they have to be built by hand. Boxed solutions for this kind of task rely on a meta-language and configuration, which eventually turns into a configuration problem of its own. Instead, we got a solution built for the specific needs of our business, one that is easy to set up and extend with new tasks.

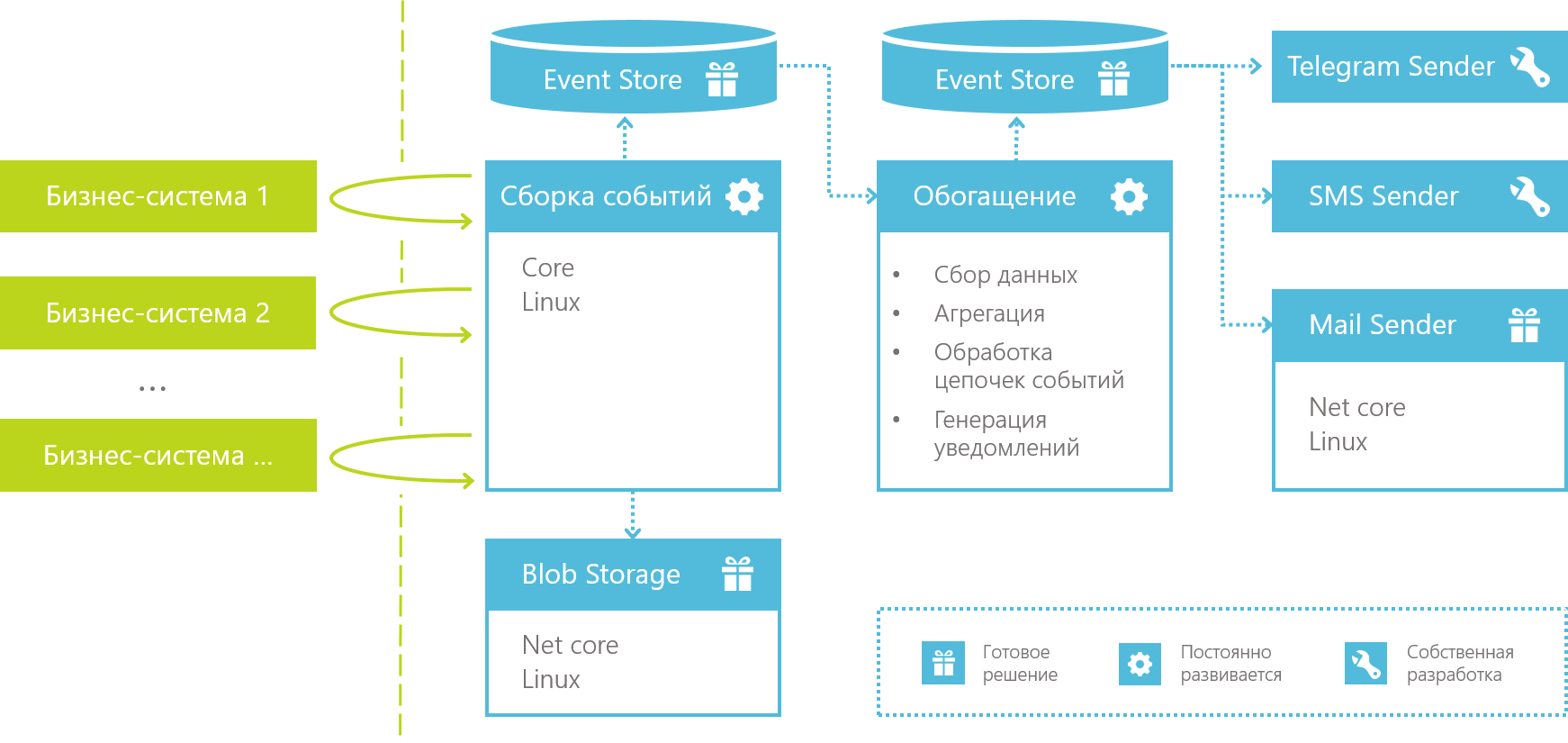

We assemble the pipeline: the architecture of our Notification Center

We have business systems, the systems that handle the core tasks of the business. They work separately from the Notification Center and should not deal with communicating with users.

Ideally, such systems simply generate the business events they need for their own work and for interacting with other systems. The Notification Center is added alongside as one more listener of these events and treats them as signals to act, each carrying the minimum required set of input data. The Notification Center then supplements the events with the necessary information and processes them, and the output is one or more notifications ready to be sent, each personally addressed to an interested user in the right language and with all the necessary information.

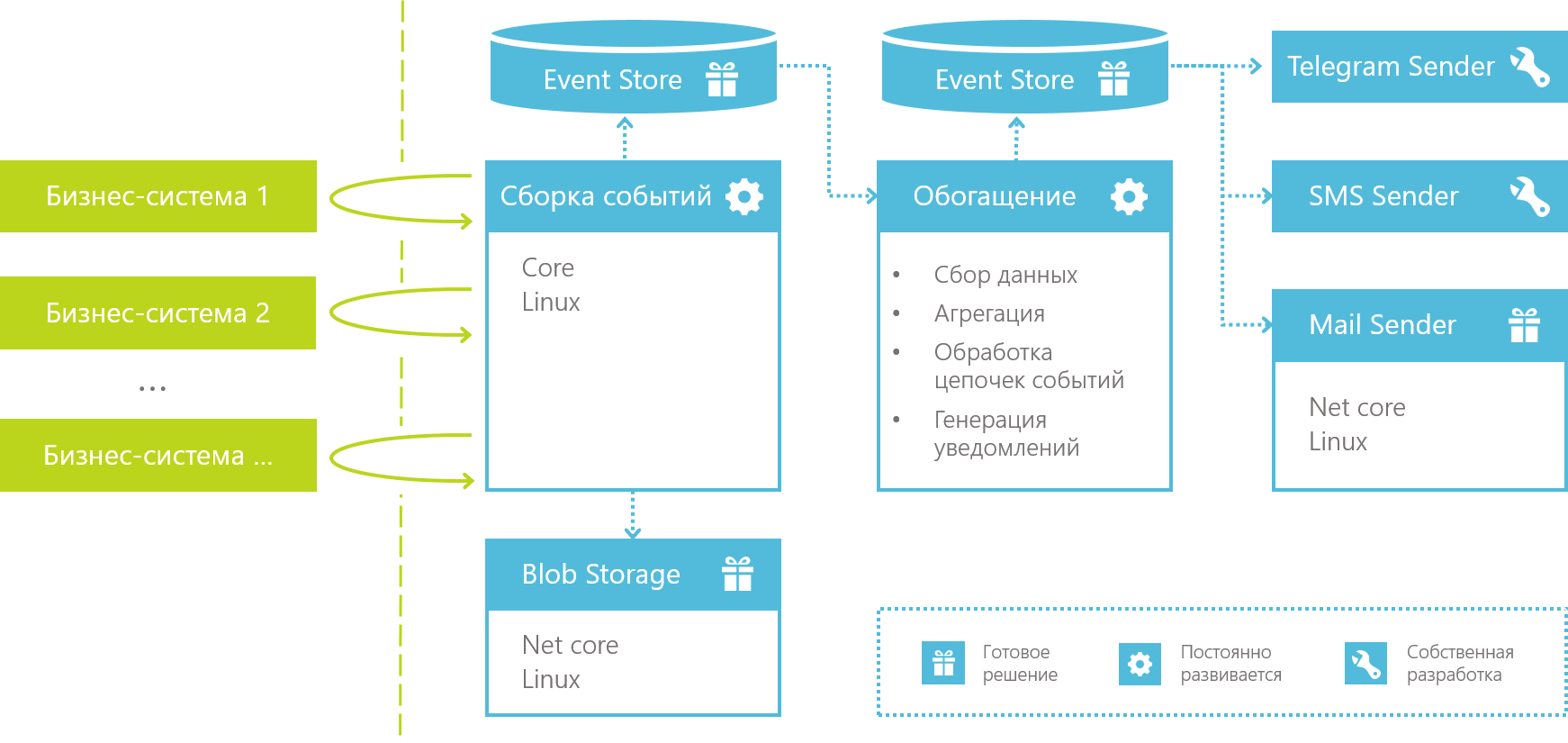

Technically, all of this breaks down into an information processing pipeline with the following stages.

1. Event collection

It all starts with event collection: collectors intercept or deliberately pull data from business systems and store it as a "raw" event stream for further processing.

From a development standpoint, the event collector is a host designed so that we can add handling for a new type of event very quickly.

From the very beginning, we rejected the idea of a data-collection megasystem with its own meta-language and wonder-UI settings that could connect to anything and collect anything. Instead, we built the system on the most flexible tool available to us, a programming language: handling a new business event means writing one small class that knows where to pick up the event and what to turn it into. This class is then dropped into the data-collection host and simply starts working as one more element of the pipeline.
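
A minimal Python sketch of this plug-in idea, assuming a simple in-process registry: each small collector class registers itself under a message kind, and the host routes incoming business messages to it. The names `register`, `CollectorHost`, `OrderCancelledCollector`, and the `orderId` field are hypothetical and only illustrate the pattern; the article does not describe the host's actual interface.

```python
from typing import Any, Dict, List, Type

Message = Dict[str, Any]


class Collector:
    """Base class: one small subclass per kind of business event."""

    def collect(self, message: Message) -> Message:
        raise NotImplementedError


# Hypothetical registry filled by the decorator below.
COLLECTORS: Dict[str, Type[Collector]] = {}


def register(kind: str):
    """Class decorator that plugs a collector into the host."""
    def wrap(cls: Type[Collector]) -> Type[Collector]:
        COLLECTORS[kind] = cls
        return cls
    return wrap


@register("order_cancelled")
class OrderCancelledCollector(Collector):
    # Knows where the data is in the source message and what to turn it into.
    def collect(self, message: Message) -> Message:
        return {"type": "OrderCancelled", "order_id": message["orderId"]}


class CollectorHost:
    def __init__(self) -> None:
        self.stream: List[Message] = []  # stands in for the raw event stream

    def handle(self, kind: str, message: Message) -> bool:
        collector_cls = COLLECTORS.get(kind)
        if collector_cls is None:
            return False  # nothing registered for this kind yet
        self.stream.append(collector_cls().collect(message))
        return True
```

Adding a new event type then means writing one more decorated class; the host itself does not change.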

During collection, events are reduced to a "canonical form": each event receives a unique identifier (or reuses the CorrelationId already assigned to it in the business system), an event date (DateTime.Now or a date taken from the incoming business data, depending on the case), an event type, and so on, and all events are saved to a common event stream for further processing. The collector is not directly interested in the event data; figuratively speaking, it just puts the data in an envelope. The envelope's form is canonical, while its contents vary.
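
The canonical envelope can be sketched as a small immutable record. This is a Python illustration under our own assumptions: the field names (`event_id`, `event_type`, `occurred_at`, `payload`) and the `wrap` helper are hypothetical, and a Python UUID plays the role of the generated identifier.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, Optional


@dataclass(frozen=True)
class Envelope:
    """Canonical wrapper: fixed shape outside, arbitrary business payload inside."""
    event_id: str
    event_type: str
    occurred_at: datetime
    payload: Dict[str, Any] = field(default_factory=dict)


def wrap(event_type: str, payload: Dict[str, Any],
         correlation_id: Optional[str] = None,
         occurred_at: Optional[datetime] = None) -> Envelope:
    # Reuse the business system's CorrelationId if there is one, else mint a new id.
    event_id = correlation_id or str(uuid.uuid4())
    # Use the business timestamp when the source provides one, else "now".
    when = occurred_at or datetime.now(timezone.utc)
    # The collector does not interpret the payload; it only wraps a copy of it.
    return Envelope(event_id, event_type, when, dict(payload))
```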

2. Where to store data? EventStore

As mentioned above, collected events are stored in a common stream for further processing. When deciding how to store the data, we chose EventStore, a product widely known in narrow circles. Incidentally, one of the minds behind EventStore is Greg Young, whose name is inextricably linked with concepts such as CQRS and Event Sourcing.

According to the definition on its home page, EventStore is "a functional database with complex event processing in JavaScript". In free form, we would describe EventStore as a very interesting hybrid of a database and a message broker.

From message brokers it takes the ability to build a reactive system: EventStore notifies listeners about new events, guarantees message delivery, supports competing consumers, keeps track of which subscriber has already read which events, and so on.

But unlike message brokers, EventStore does not delete data after the recipient has read it. This allows not only reactive work with individual events but also work with historical data sets, as in a database. One of EventStore's most advanced features is the ability to build temporal queries that analyze sequences of events over time, which is usually hard to do with "traditional" databases.
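
The broker-plus-database behaviour described above can be illustrated with a toy in-memory stream in Python. This is not the EventStore client API; the `EventStream` class and its methods are hypothetical and only show the two access modes: per-subscriber reading of new events via a stored checkpoint, and reading the full history, which is never deleted.

```python
from typing import Any, Dict, List


class EventStream:
    """Toy append-only stream: events are never deleted after being read."""

    def __init__(self) -> None:
        self._events: List[Dict[str, Any]] = []
        self._checkpoints: Dict[str, int] = {}  # subscriber name -> next position

    def append(self, event: Dict[str, Any]) -> int:
        self._events.append(event)
        return len(self._events) - 1  # position of the new event

    def poll(self, subscriber: str) -> List[Dict[str, Any]]:
        """Broker-like: return only events this subscriber has not seen yet."""
        start = self._checkpoints.get(subscriber, 0)
        new = self._events[start:]
        self._checkpoints[subscriber] = len(self._events)
        return new

    def read_all(self) -> List[Dict[str, Any]]:
        """Database-like: the full history stays available for analysis."""
        return list(self._events)
```

Each subscriber advances its own checkpoint independently, so a new consumer added later can still replay everything from position zero.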

In the Notification Center, both sides of EventStore turned out to be useful. We used the reactive subscription model to generate instant notifications and to enrich event data (more on this below). With projections, we set up sequence analysis of events, which lets us:

a. generate aggregated events in the spirit of "Summary of ... for the week / month / year",

b. analyze sequences of different event types, find interesting patterns between them, track activity peaks, and so on.
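
EventStore projections themselves are written in JavaScript; the Python function below only sketches the kind of fold such a projection performs for item a, grouping events by ISO week and counting them by type. The `(date, event_type)` input shape and the `weekly_summary` name are assumptions made for illustration.

```python
from collections import Counter
from datetime import date
from typing import Dict, Iterable, Tuple


def weekly_summary(events: Iterable[Tuple[date, str]]) -> Dict[Tuple[int, int], Counter]:
    """Fold (date, event_type) pairs into per-ISO-week counts by event type."""
    summary: Dict[Tuple[int, int], Counter] = {}
    for day, event_type in events:
        iso_year, iso_week, _ = day.isocalendar()
        summary.setdefault((iso_year, iso_week), Counter())[event_type] += 1
    return summary
```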

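3. Blob Storage

Another storage-related issue that came up while developing the Notification Center was working with files. We often need to send the user a file in a notification.

The simplest example is sending a report by email. The simplest thing to do is attach the file to the email. But if we think the scenario through, we immediately run into several limitations of this approach:

- After sending, we know nothing about what happens to the file: whether it was opened at all, how many times, and by whom.

- Email is easy, but you cannot put a file into an SMS notification.

- Sending files as attachments puts additional load on the mail server.

- The same file may be of interest to many users.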

The simplest solution to all of these problems is to send a link instead of the file. That way we do not load the mail server, we can monitor what happens to the file, and we can even run security checks and verify that the user is actually allowed to see it.

There is also a purely practical reason for this. A file can be bound to an event from the moment the event occurs. For example, the business event itself may be "an exchange file for a specific date has been received from the air travel booking system". Alternatively, a file can be generated as a result of processing a batch of events, say, for the last month.

In general, files can exist from the very beginning of an event's life, and there is no point in dragging heavy content through the entire Notification Center pipeline just to send it at the end. Instead, the file can be saved to a dedicated service at the very beginning, with only its identifier stored in the event. The file can then be fetched wherever it is needed at the moment the notification is sent.

This is how another very simple service was born: Blob Storage. You save a file with a POST request and get a Guid in response. You send a GET request with that Guid and get the file back. All security checks, statistics gathering, and so on are internal matters of the Blob Storage service.
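
A minimal in-memory sketch of the Blob Storage contract, assuming Python's `uuid.uuid4()` stands in for the Guid and that access is checked against a per-file set of allowed users. The `BlobStorage` class, its `put`/`get` methods, and the reader list are hypothetical; the real service exposes the same idea over HTTP POST and GET.

```python
import uuid
from typing import Dict, Optional, Set


class BlobStorage:
    """In-memory stand-in for the service: put -> Guid, get(Guid) -> bytes."""

    def __init__(self) -> None:
        self._blobs: Dict[uuid.UUID, bytes] = {}
        self._readers: Dict[uuid.UUID, Set[str]] = {}  # hypothetical access list
        self.downloads: Dict[uuid.UUID, int] = {}      # simple download statistics

    def put(self, content: bytes, readers: Set[str]) -> uuid.UUID:
        blob_id = uuid.uuid4()
        self._blobs[blob_id] = content
        self._readers[blob_id] = set(readers)
        self.downloads[blob_id] = 0
        return blob_id

    def get(self, blob_id: uuid.UUID, user: str) -> Optional[bytes]:
        # Security checks and statistics stay internal to the service.
        if blob_id not in self._blobs or user not in self._readers[blob_id]:
            return None
        self.downloads[blob_id] += 1
        return self._blobs[blob_id]
```

The event then carries only `str(blob_id)`, and the sender resolves it when the notification goes out.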

4. Data enrichment

So far we have covered event collection and how we store information about events and files. Let us move on to the stage where the actual work with the data begins: enrichment.

The input to this stage is the "canonical" events collected earlier. The output is zero to N ready notifications, each of which already has a recipient, message text in a specific language, and a known channel (email, Telegram, SMS, etc.) through which it must be sent.

At this stage, the original event may undergo the following manipulations.

Supplementing the initial data set

This operation is the main reason the whole stage is called "enrichment". The simplest example: the "canonical" event contains a user Id. At this stage, we use that Id to look up the user's name so that the notification text can address the user by name, and we add the information found to the original event.

Each enricher is a separate small class that, like the event collectors, is written quickly and dropped into a common pipeline; the pipeline supplies it with all the necessary input and saves the result where it belongs.
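
A sketch of a single enricher and the loop that runs enrichers over an event, in Python. The `applies_to`/`enrich` method pair, the `USER_NAMES` dictionary standing in for the user directory, and the `customer` fallback are all hypothetical.

```python
from typing import Any, Dict, List

Event = Dict[str, Any]

# Hypothetical lookup standing in for the user directory the enricher queries.
USER_NAMES = {17: "Anna"}


class UserNameEnricher:
    """Adds the user's name so templates can address the recipient personally."""

    def applies_to(self, event: Event) -> bool:
        return "user_id" in event

    def enrich(self, event: Event) -> Event:
        return {**event, "user_name": USER_NAMES.get(event["user_id"], "customer")}


def run_enrichers(event: Event, enrichers: List[Any]) -> Event:
    # The pipeline feeds each enricher its input and keeps the result;
    # enrichers return new dictionaries instead of mutating the event.
    for enricher in enrichers:
        if enricher.applies_to(event):
            event = enricher.enrich(event)
    return event
```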

Aggregation, event sequencing, time analysis

Everything is quite simple here. The approach is the same as for enrichment, but aggregator classes run on a schedule instead of reacting to new events in the stream. In general, such a handler produces a new event of a different type, which goes into the same canonical stream whose data it was built from. This new event can then be enriched, aggregated, and so on in the same way.
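
A scheduled aggregator can be sketched the same way. In this hypothetical Python example, `WeeklyOrdersAggregator` counts `OrderCreated` events from the last seven days and appends a `WeeklyOrdersSummary` event back into the same stream; the event type names and the `at` timestamp field are assumptions, and the scheduler that calls `run` (cron, a timer, and so on) is left out.

```python
from datetime import datetime, timedelta
from typing import Any, Dict, List

Event = Dict[str, Any]


class WeeklyOrdersAggregator:
    """Runs on a schedule (not per event) and emits a new event of its own type."""

    source_type = "OrderCreated"
    output_type = "WeeklyOrdersSummary"  # hypothetical type name

    def run(self, stream: List[Event], now: datetime) -> None:
        since = now - timedelta(days=7)
        orders = [e for e in stream
                  if e["type"] == self.source_type and e["at"] >= since]
        # The summary goes back into the same canonical stream, so it can be
        # enriched, aggregated further, or turned into notifications later.
        stream.append({"type": self.output_type, "at": now, "count": len(orders)})
```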

After enrichment is complete, the last stage of event processing begins:

5. Notification generation

A notification differs from an event in that it is not just JSON data but a concrete message with a known recipient, a delivery channel (email, SMS, etc.), and the notification text.

Notification generation starts immediately after all the necessary enrichments have been applied.

At its core, this is a simple join of the incoming event with users' subscriptions to that event type, followed by running the original JSON data through a template engine to generate the message text for the channel specified in the subscription. As a result, one event yields 0 to N notifications.
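
The join and templating step can be sketched in Python with the standard `string.Template` engine standing in for whichever template engine is actually used. The `Subscription` and `Notification` shapes, the `TEMPLATES` table keyed by event type and channel, and the event's `data` field are hypothetical.

```python
from dataclasses import dataclass
from string import Template
from typing import Any, Dict, List


@dataclass
class Subscription:
    user: str
    event_type: str
    channel: str   # e.g. "email", "sms", "telegram"
    address: str


@dataclass
class Notification:
    recipient: str
    channel: str
    text: str


# Hypothetical templates, one per (event type, channel) pair.
TEMPLATES = {
    ("OrderCancelled", "email"): Template("Dear $user_name, order $order_id was cancelled."),
    ("OrderCancelled", "sms"): Template("Order $order_id cancelled"),
}


def generate(event: Dict[str, Any], subs: List[Subscription]) -> List[Notification]:
    """Join one event with matching subscriptions: yields 0..N notifications."""
    result = []
    for sub in subs:
        if sub.event_type != event["type"]:
            continue
        template = TEMPLATES.get((event["type"], sub.channel))
        if template is None:
            continue  # no template for this channel yet
        text = template.safe_substitute(event["data"])
        result.append(Notification(sub.address, sub.channel, text))
    return result
```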

After that, the rest is easy: push the resulting notifications to the corresponding streams, from which they are read by the microservices that send emails, SMS messages, and so on. These are obvious and not very interesting details, so we will not spend the reader's attention on them.
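
For completeness, the routing step reduces to grouping notifications by channel. In this hypothetical Python sketch each channel gets its own list standing in for a stream named `outbox-<channel>`; the naming scheme is an assumption, and each such stream would be consumed by one sender microservice.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Notification(NamedTuple):
    recipient: str
    channel: str
    text: str


def route(notifications: List[Notification]) -> Dict[str, List[Notification]]:
    """Push each notification to the stream of its channel."""
    streams: Dict[str, List[Notification]] = defaultdict(list)
    for n in notifications:
        streams[f"outbox-{n.channel}"].append(n)  # hypothetical stream naming
    return dict(streams)
```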

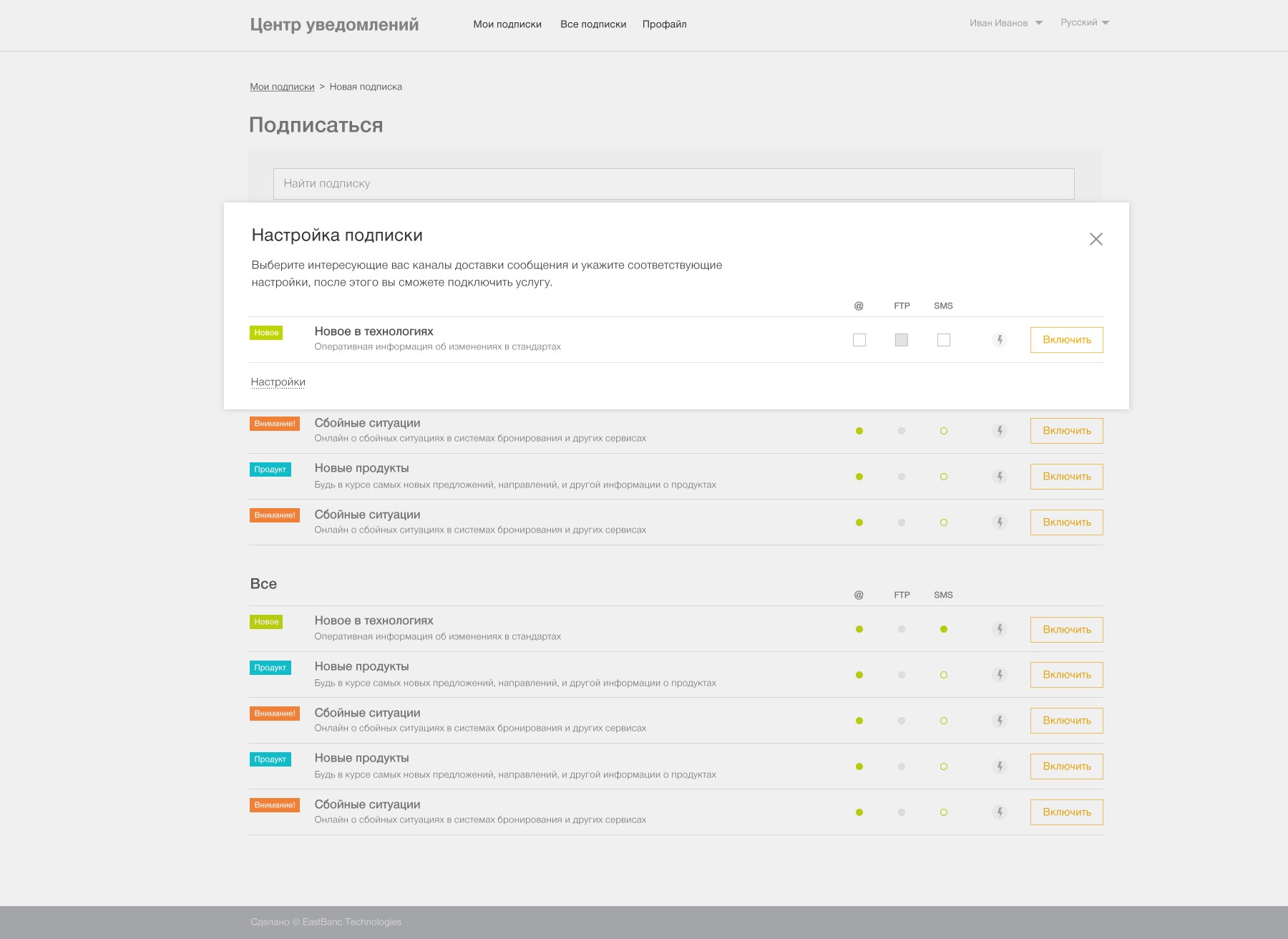

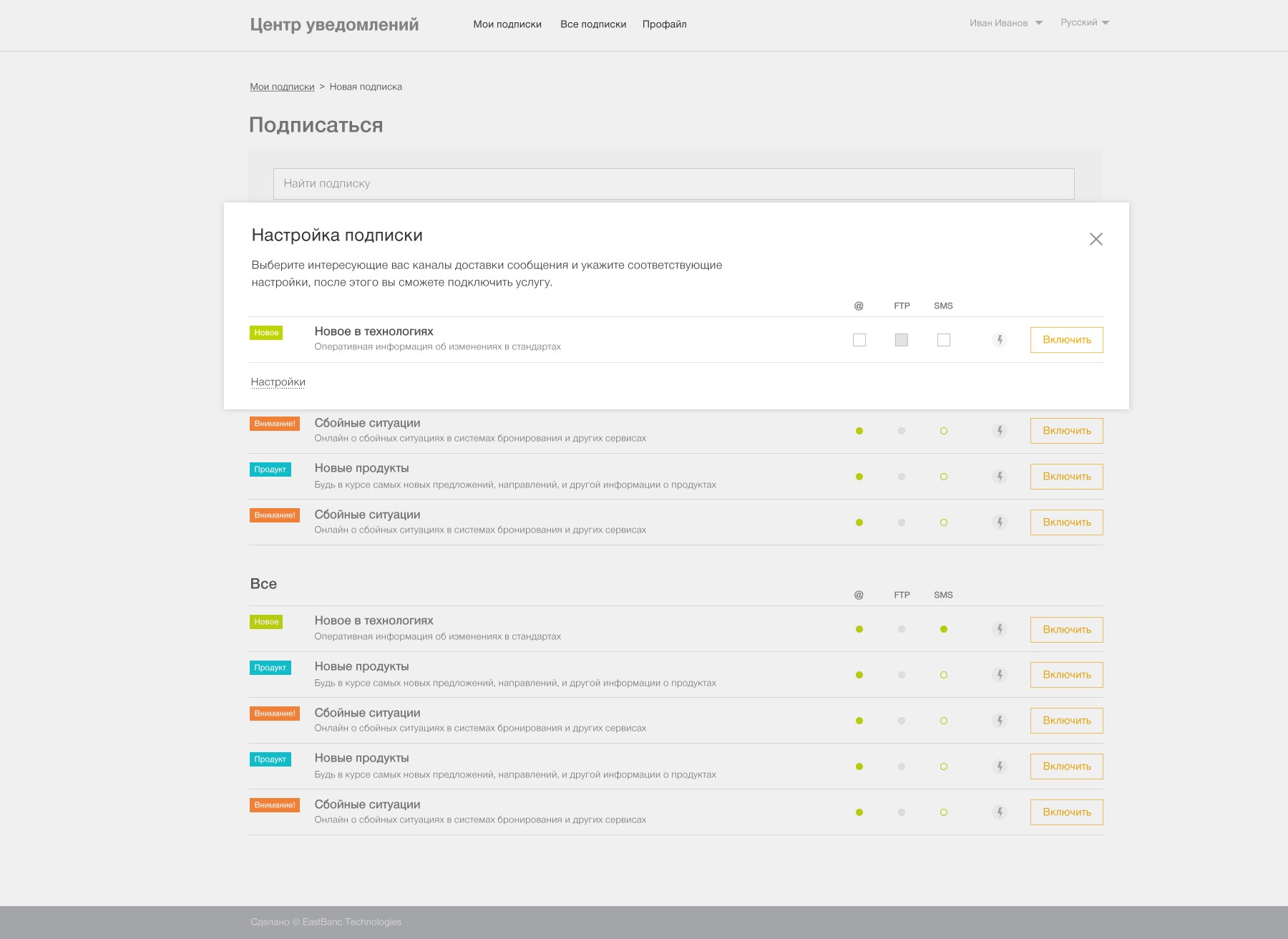

6. Subscriptions

The last module we will look at is the module where users manage their subscriptions.

The idea is simple: a database that stores who is interested in which types of notifications, plus a UI for configuring it.

When a user wants to subscribe, they choose a notification type of interest and open the settings window. Each notification type offers one or more delivery channels. Here the user enters a minimal set of settings (an email address for email or a phone number for Telegram) and, if desired, sends a test message to make sure everything is configured correctly.
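
A minimal sketch of the subscription module's storage side, in Python. `SubscriptionStore`, its injected `send` callback, and the test-message text are hypothetical; a real implementation would sit behind a database and the settings UI.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Subscription:
    user: str
    event_type: str
    channel: str   # "email", "telegram", ...
    address: str   # email address, phone number, etc.


class SubscriptionStore:
    """Hypothetical store: who wants which notification type, via which channel."""

    def __init__(self, send: Callable[[str, str, str], None]) -> None:
        self._subs: List[Subscription] = []
        self._send = send  # injected sender: (channel, address, text)

    def subscribe(self, sub: Subscription, send_test: bool = False) -> None:
        self._subs.append(sub)
        if send_test:
            # Lets the user confirm the channel is configured correctly.
            self._send(sub.channel, sub.address, f"Test message for {sub.event_type}")

    def for_event_type(self, event_type: str) -> List[Subscription]:
        return [s for s in self._subs if s.event_type == event_type]
```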

That's all. Now we have a user subscription of a specific type, and an event of the same type in the pipeline. We join the event with the subscription, and the output is a ready notification.

7. Access control

Another point we have not covered yet is access control. Let us explain with an example. Suppose we have a notification that it is time for a counterparty to pay for goods. This notification concerns a specific counterparty and should be received by that counterparty only, not by all of them. So the notification type described above is not enough on its own to determine who exactly the event is intended for.

The simplest, but also the worst, solution is to introduce the concept of a counterparty with, say, a code into the Notification Center's logic, and to filter recipients for an event not only by event type but also by counterparty code.

Why is that bad? The Notification Center was created as a separate system to relieve business systems of the task of sending notifications. By bringing such details into the Notification Center, over time we would fill it with a lot of knowledge that belongs to business systems.

The right solution in our case was still very simple, yet flexible.

For authorization in our systems we use ADFS, that is, claims-based authorization. A claim is essentially just a key-value pair. There is a predefined set of claims: the user name, a set of claims with the user's roles, and so on. The keys of these claim types are known in advance and are the same for all applications. We also use custom claim providers to assign various specific attributes to users during authorization.

For example, the counterparty code mentioned above, if the user is a counterparty, can be stored as a claim with its own key. So can the code of the region where the user is located, or whether the user owns an iPhone or an Android device. Any meaningful marker can be stored this way.

This already solves half the problem: we have a set of claims for each user, and therefore for each subscription.

If we also add claims to events, we can match events and subscriptions not only by type but also by checking that claims with the same keys have the same values on both the event and the subscription.
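
A sketch of the matching rule in Python. One interpretation choice is ours: we treat a claim that the event carries but the subscriber lacks as a mismatch, so an event scoped to a counterparty never reaches unscoped subscribers. The claim key `urn:example:counterparty-code` and the `is_recipient` function are hypothetical.

```python
from typing import Dict

Claims = Dict[str, str]

# Hypothetical custom claim key; real keys would come from the claim provider.
COUNTERPARTY = "urn:example:counterparty-code"


def is_recipient(event_type: str, event_claims: Claims,
                 sub_type: str, sub_claims: Claims) -> bool:
    """Match by type, then require every event claim to be matched by the subscriber."""
    if event_type != sub_type:
        return False
    # Assumption: a claim on the event that the subscriber lacks is a mismatch;
    # extra claims on the subscriber side are ignored.
    return all(sub_claims.get(key) == value for key, value in event_claims.items())
```

Note that the function never mentions counterparties as such: it only compares opaque keys and values, which is what keeps business knowledge out of the Notification Center.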

Notice that "an event with claim X whose value is Y" is a much more abstract concept than "an event intended for counterparty Y". We do not need to bring any business-system specifics into the Notification Center.

The remaining question is where to attach these claims to events. Since deciding on claims is still business-specific logic, in the spirit of "a payment-due event must carry a counterparty claim", we placed this logic at the data collection stage. The collector at least knows where the event comes from and what its structure is, so it already has some business context.

In the payment-due example, the counterparty code is usually found in the contract of the payment-due business event that the collector intercepted. The collector can therefore take the counterparty code from the appropriate contract field and abstract it into "a claim with key X and value Y" before sending the event down the Notification Center pipeline.
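
The collector side can be sketched as follows. The contract field names (`counterpartyCode`, `amount`, `dueDate`), the `PaymentDue` type, the `collect_payment_due` function, and the claim key are hypothetical; the point is that only the collector knows which business field becomes which claim.

```python
from typing import Any, Dict

# Hypothetical claim key agreed with the claim provider used at sign-in.
COUNTERPARTY_CLAIM = "urn:example:counterparty-code"


def collect_payment_due(contract: Dict[str, Any]) -> Dict[str, Any]:
    """Collector for a 'payment due' business event (field names are assumed).

    The business-specific knowledge (that counterpartyCode identifies the
    recipient) stays here; downstream stages only see an opaque claim.
    """
    return {
        "type": "PaymentDue",
        "claims": {COUNTERPARTY_CLAIM: str(contract["counterpartyCode"])},
        "data": {"amount": contract["amount"], "due": contract["dueDate"]},
    }
```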

This is how we unified the process of informing different groups of users about different events that occur in different modules of the system. The pipeline is single, communication is omnichannel, delivery is guaranteed and secure, and the platform itself remains open to further development.

Our system sends 206 different notifications from 35 systems in nine ways. Here is a front of work. As for this colossus, we created a single communication platform - the center of notifications - we tell under the cut.

')

Any information systems are developing and are beginning to contact with the user more and more. Think of any online store where you recently made an order. He sends letters with information about the order, mailings and push-notifications about new promotions, SMS, and is still active on social networks. And you have probably noticed that these messages are composed differently. And how different can be the tone and quality - in nets neat texts and hashtags, and I want to send a letter to spam without reading.

Awareness of the task

First of all, we analyzed all these messages by the following parameters:

• who are the recipients of these messages,

• which dispatch strategy (immediately, according to the schedule, aggregation),

• is there a template for the message,

• reliability of sending,

• Does it fit the corporate style?

• is there any support for multilingualism?

In other words, we wanted to know what we send and to whom. And here are the results we got.

We realized that we want to come to a single pipeline of notifications, where messages are delivered efficiently and retain meaning.

We want to remove the burden and responsibility for sending notifications and understanding such entities as “subscriptions”, “localization”, etc. from business systems.

In our concept, the information system should become a centralized mini-editorial with a smart pipeline that recognizes how, when, to whom and through which channels it should be distributed by subject / type of message. And spreads.

More specifically, here are our main goals:

1. Agree on the rules of the game and bring all the notifications to a single quality. Analyze all notifications and remove situations when 5 systems sent the same information to the user, but in a different design. We remove from the developers of business systems responsibility for the UI and the literacy of notifications, the guarantee of sending notifications, the monitoring of sending notifications. Instead, we come to a centralized system where all notification templates with a single design and functionality and a separate team that deals with these issues are stored.

2. Ensure timely dispatch. Have the ability to configure how often to send information and what type of notification to send. Be able to quickly add a new notification for a business request. Say, order cancellation information needs to be delivered as quickly as possible, and you can talk about sales and new collections once a week.

3. Ensure the guaranteed sending of information. An important message to send the most operatively viewed channels - this is SMS, instant messengers. Or all at once. Monitor and duplicate through the same or other channels if there is no reaction.

4. Maintain a single style of communication. Create a communication system - templates for letters and messages - and stick to it. (This is a single UI and redpolitik ).

5. Personalize communication. Distinguish when we communicate with an individual, and when with a legal person, in what time zone he lives.

6. Watch out, that is, set up monitoring and security. Send links to attachments that, when opened, perform security checks and download statistics are collected, instead of sending attachments directly in letters. Provide guaranteed shipment, monitor what was sent to whom.

What with ready-made solutions?

There are packaged solutions on the market that perform part of the functions we need. For example, you can embed event notifications in BizTalk and set up special pipes that ultimately make up this letter. In Dynamics CRM and SharePoint there are settings that respond to some events. As a rule, it comes down to the fact that someone somewhere pressed a button, and as a result - the email went. About more complex things, such as aggregation policy and a beautiful UI, there is no question.

Azure and AWS have many services that are able to take ready-made content and send a mailing list. It turns out that all the content for it must be prepared manually. And the purpose of the notification center is to prepare the content.

So we made sure that in our case we should not rely on a solution out of the box, where we will have to configure the UI, and the system will connect itself somewhere and pull out everything that is necessary. Such things do not work out of the box, they need to be done by hand. Box solutions in such cases involve the use of meta-language and configuration, which ultimately turn into a configuration problem. We also got a solution made for the specific needs of the business. It is easy to set up and add new tasks.

We collect the conveyor. The architecture of our notification center

We have business systems - these are systems that solve the main tasks of a business. They work separately from the notification center and should not deal with communication with users.

Ideally, such systems simply generate the business events necessary for their work and interaction with other systems, and the Notification Center is simply added alongside and becomes another listener of these business events, and reacts to them as to signals for action with the minimum required input set information. Next, the Notification Center supplements the events with the necessary information, processes it, and at the output we receive one or several notifications ready for sending, each of which personally addresses the interested user in the right language and with all the necessary information.

Technically, all this is decomposed into an information processing pipeline, which looks like this:

1. Build events

It all starts with a collection of events that intercepts or purposefully collects data from business systems and stores them as a “raw” stream of events for further processing.

From the development point of view, the event collector is a host system designed with the aim that we can very quickly add here the processing of the next type of events.

From the very beginning, we abandoned the idea of a megasystem for data assembly, which will have its own meta-language and wonder-UI-settings, with the help of which it can be connected to anything and collect anything. Instead, we based the system on the most flexible tool available to us — a programming language, and focused on handling the next business event to write one little classic who knows where to pick up the event and what to turn it into. Then this classic is thrown into the host system of data assembly and just starts working as another element of the pipeline.

At the time of data collection, they are reduced to a “canonical form” - each event receives a unique identifier (or a CorrelationId is taken, which has already been assigned to an event in the business system), the event date is determined (DateTime.Now or the date from the business data received depends on the case ), event type, etc., and all events are saved to the general event stream for further processing. The collector is not directly interested in the event data, he, figuratively speaking, wraps them in an envelope. The envelope form is canonical, and the contents are different.

2. Where to save data? Eventstore

As we wrote above, at the time of collecting events, they are stored in the general stream for further processing. In deciding how to store data, we chose a widely known product in narrow circles called EventStore . By the way, one of the ideologists of EventStore is Greg Young , whose name is inextricably linked with concepts such as CQRS and Event Sourcing.

According to the definition on the main page, EventStore is “a functional database with complex event handling in JavaScript”. If we try to formulate in free form what the EventStore is, then we would say that this is a very interesting hybrid of a database and a message broker.

The advantage taken from brokers is the ability to build a reactive system in which EventStore takes on notification of listeners about new events, guaranteeing message delivery, competitive processing, tracking which of which subscribers have already been read, etc.

But, unlike message brokers, data is not deleted from the EventStore after it has been read by the recipient. This allows not only reactive work with atomic events, but also work with arrays of historical data, as in the database. By the way, one of the most advanced features of the Event Store is the ability to build temporal queries that allow analyzing sequences of events over time, which is usually difficult to do with the use of “traditional” databases.

In the case of the Notification Center, both the EventStore features were in demand. Using the reactive subscription model, we implemented the generation of instant notifications and enrichment of event data (about it below). And with the help of projections, we organized a sequence analysis of events, with the help of which you can:

but. generate aggregated events in the spirit of "Summary of ... for the week / month / year",

b. analyze sequences of different types of events and find interesting patterns between them, track peaks of current events and in general.

3. Blob Storage

Another issue related to data storage and arising in the development of the Notification Center was the work with files. Often we need to send a file to the user in the notification.

The simplest example: sending a report by mail. And the simplest thing you can do is attach a file to the letter as an attachment. But if we develop the scenario, we will immediately notice several limitations in this decision.

- After sending, we do not know anything about the further fate of the file. Whether it was opened at all, how many times who opened.

- With mail, everything is easy, but you will not invest the file in the SMS notification.

- Additional load on the mail server when sending files as attachments.

- The same file may be of interest to many users.

The simplest solution to all these questions is to send a link instead of a file. So we don’t load the mail server either, and we can monitor what is happening with the file, and we can even conduct security checks and check that the user can actually see this file.

On the other hand, there is a purely practical sense in this. The file can be bound to the event from the moment the event occurred. For example, when the essence of a business event - received an exchange file from the booking system in air travel for a specific date. Or a file can be generated as a result of processing a batch of events, say, for the last month.

In general, files can exist from the very beginning of an event’s life, and there’s no point in “dragging” heavy content through the entire pipeline of the Notification Center only to send it at the end. You can save the file to a special service at the very beginning, and save its identifier in the event. And you can get the file where it will be needed already when sending a notification.

This is how one more very simple service was born - Blob Storage. You can save the file through a POST request and receive a Guid in reply. You can also send a GET request to the service with the received Guid, and in return it will return the file. And all security checks, statistics gathering and more are internal affairs of the Blob Storage service.

4. Data enrichment

So, at the moment we have considered the event assembly stage and how we store information about events and files. Let us proceed to the stage at which work begins with the data - the stage of enrichment.

The “canonical” events collected earlier fall at the entrance to this stage. At the output, we receive from zero to N ready-made notifications that the recipient already has, the text of the message in a specific language, and it is already clear which channel (email, telegram, sms, etc.) will need to be sent to the notification.

At this stage, the original event may be subjected to the following manipulations.

Addition of the initial data set

Mainly due to this operation, the whole stage was called “enrichment”. The simplest example of adding data is in the "canonical" event, we received a user Id. At this stage, we, by the user's Id, find his name in order to be able to contact him by his name in the notification text, and supplement the original event with the information found.

Each “dresser” is a separate small class, which (by analogy with event collectors) is quickly written and “thrown” into a common pipeline, which will already give all the necessary information to the input and save the result where it is needed.

Aggregation, event sequencing, time analysis

Everything is quite simple here. The approach is the same as in enrichment, but the aggregator classes run on a schedule, rather than reacting to new events in the stream. The result of executing such handlers in the general case is a new event with a different type, which falls into the same canonical stream based on the data from which it was born. Further, this new event can likewise be enriched, aggregated, etc.

After the enrichment is completed, the last stage of work with the event begins:

5. Notification generation

The notification differs from the event in that it is not just JSON-data, but a completely specific message with a well-known recipient, the sending channel (email, sms, etc.), and the notification text.

Notification generation starts immediately after all necessary enrichments have been applied.

At its core, this is a simple join of an incoming event with subscriptions of users for a given type of event and a run of the original JSON data through one or another template engine for generating message text for the specified channel in the subscription. As a result, from one event we receive from 0 to N notifications.

The rest is easy: the resulting notifications are pushed to the corresponding streams, from which they are read by the microservices responsible for sending email, SMS messages, etc. These are all obvious and uninteresting details, so we will not spend the reader's attention on them.

6. Subscriptions

The last module we will consider is the one that lets users configure their subscriptions.

The idea is simple: it is a database that stores who is interested in which types of notifications, plus a UI for configuring this.

When users want to subscribe, they choose the type of notification they are interested in and open the settings window. Each notification type offers one or more sending channels. The user enters a minimum of settings, such as an email address for email or a phone number for Telegram, and can optionally send a test message to make sure everything is configured correctly.
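A subscription record and its store could be modelled roughly like this. It is a minimal in-memory sketch, not the actual schema: `Subscription`, `SubscriptionStore`, and the rule that a user has at most one subscription per type and channel are assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Subscription:
    user_id: str
    event_type: str
    channel: str   # one of the channels offered for this notification type
    address: str   # the one setting the user enters: email address, phone number, ...


class SubscriptionStore:
    """Hypothetical in-memory stand-in for the subscriptions database."""

    def __init__(self, channels_by_type: dict[str, set[str]]) -> None:
        # Which sending channels each notification type supports.
        self._channels = channels_by_type
        # event type -> {(user_id, channel): Subscription}
        self._by_type: dict[str, dict[tuple[str, str], Subscription]] = defaultdict(dict)

    def subscribe(self, sub: Subscription) -> None:
        if sub.channel not in self._channels.get(sub.event_type, set()):
            raise ValueError(f"{sub.channel!r} is not available for {sub.event_type!r}")
        # Subscribing again to the same type and channel just updates the address.
        self._by_type[sub.event_type][(sub.user_id, sub.channel)] = sub

    def for_event_type(self, event_type: str) -> list[Subscription]:
        """The set of subscriptions an incoming event of this type is joined with."""
        return list(self._by_type.get(event_type, {}).values())
```

Keying by event type keeps the pipeline's lookup trivial: an event of a given type only needs the subscriptions stored under that type.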

That's all. We now have a user subscription with a specific type, and an event with the same type in the pipeline. We match the event against the subscription, and the output is a ready notification.

7. Access control

Another point we have not yet covered is access control. Consider an example: a notification that it is time for a counterparty to pay for goods. This notification concerns one specific counterparty and should be received only by that counterparty, not by all of them. So the notification type alone, as described above, is not enough to determine whom the event is actually intended for.

The simplest, but also the least fortunate, solution is to introduce the concept of a counterparty (identified, say, by a code) into the Notification Center's logic and, when determining an event's recipients, filter not only by event type but also by counterparty code.

Why is that bad? The Notification Center was created as a separate system to relieve business systems of the task of sending notifications. But if we bring such details into it, over time the Notification Center will fill up with knowledge that belongs to the business systems.

In our case, the right solution turned out to be just as simple, yet flexible.

For authorization in our systems we use ADFS, that is, claims-based authorization. A claim is essentially just a key-value pair. There is a predefined set of claims: user name, a set of claims with the user's roles, etc. The keys of these claim types are known in advance and are standard across all applications. We also use custom claim providers to assign various specific attributes to users during authorization.

For example, the counterparty code mentioned above, if the user is a counterparty, can be stored as a claim with its own key. So can the code of the region where the user is located, or which device the user owns, iPhone or Android. Any meaningful marker can be stored this way.

This is already half the solution: we have a set of claims for each user, and therefore for each of their subscriptions.

If we also add claims to our events, we can match events and subscriptions not only by type, but also by checking that claims with the same key have the same value on both the event and the subscription.
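Claim matching could be sketched as below, assuming claims are single-valued string pairs (real role claims can be multi-valued). The text only requires that claims sharing a key have equal values; this sketch assumes a stricter reading, where a subscription lacking a claim that the event carries does not match, since that seems the safer default for access control. All names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    event_type: str
    claims: dict[str, str] = field(default_factory=dict)


@dataclass
class Subscription:
    user_id: str
    event_type: str
    claims: dict[str, str] = field(default_factory=dict)  # taken from the user's claims


def matches(event: Event, sub: Subscription) -> bool:
    """Same type, and every claim on the event is present on the subscription
    with the same value. An event without claims matches every subscriber of its type."""
    if event.event_type != sub.event_type:
        return False
    return all(sub.claims.get(key) == value for key, value in event.claims.items())
```

Nothing in `matches` knows what a counterparty is: it only compares keys and values, which is exactly what keeps business knowledge out of the Notification Center.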

Note that “an event with claim X set to value Y” is a much more abstract concept than “an event intended for counterparty Y”. We do not need to bring any business-system specifics into the Notification Center.

The remaining question is where to populate these claims on events. Deciding which claims an event carries is still business-specific logic, along the lines of “a payment-due event must carry a counterparty claim”, so we placed it in the data collection stage. The collector at least knows where the event comes from and what its structure is; in other words, it already has some business context.

In the payment-due example, the counterparty code is usually present in the contract of the payment-due business event that the collector intercepted. The collector can therefore take the counterparty code from the corresponding contract field and turn it into an abstract “claim with key X and value Y” before sending the event into the Notification Center pipeline.
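A collector that performs this translation might look like the following sketch. The business message field names (`CounterpartyCode`, `Amount`, `DueDate`), the event type `PaymentDue` and the claim key URI are hypothetical; the point is that only the collector knows which field holds the counterparty code.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical claim key; in practice it would be the key the custom claim
# provider uses when it issues the counterparty claim to users.
COUNTERPARTY_CLAIM = "http://example.com/claims/counterparty"


@dataclass
class CanonicalEvent:
    event_type: str
    data: dict[str, Any] = field(default_factory=dict)
    claims: dict[str, str] = field(default_factory=dict)


def collect_payment_due(business_message: dict[str, Any]) -> CanonicalEvent:
    """Hypothetical collector for a 'payment due' business event.

    The collector knows this message's contract, so it is the one place that
    knows which field holds the counterparty code. It turns that field into an
    abstract claim; later stages only see "claim key X has value Y".
    """
    return CanonicalEvent(
        event_type="PaymentDue",
        data={"amount": business_message["Amount"], "dueDate": business_message["DueDate"]},
        claims={COUNTERPARTY_CLAIM: business_message["CounterpartyCode"]},
    )
```

If the contract of the business event changes, only this collector needs updating; the claim key that downstream matching relies on stays the same.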

Summary

This is how we unified the process of informing different groups of users about different events occurring in different modules of the system. There is a single pipeline, delivery is omnichannel, sending is guaranteed and secure, and the platform itself is open to further development.

Source: https://habr.com/ru/post/358198/
