Improvements in Chrome and Firefox accelerated page reloading by 28-50%

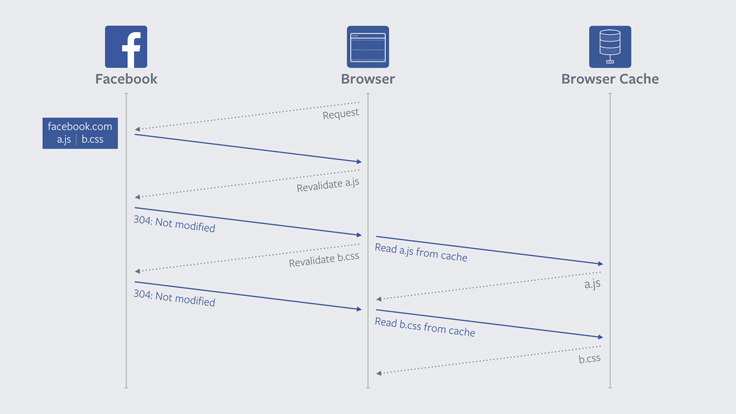

Traditional Resource Validation Model

Google developers yesterday announced the results of a browser optimization project carried out together with Facebook and Mozilla and modeled on Firefox. As a result, page reloads in the mobile and desktop versions of Chrome have become significantly faster. According to Google, the latest version of Chrome reloads pages 28% faster on average.

Usually, when a page is reloaded, the browser performs validation: it sends hundreds of network requests just to check that the images and other resources in its cache are still valid and can be reused. This reload mechanism has remained unchanged for many years, despite all the changes in websites and web development technologies. For small resources, a validation request that ends in an HTTP 304 response takes roughly as long as a regular request that downloads the resource.
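The validation round trip described above can be sketched from the server's side. This is only an illustration using the Python standard library, not code from any browser or web server; the function name `validate` and its return shape are assumptions made for the example.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def validate(if_modified_since, last_modified):
    """Return (status, send_body) for a conditional GET.

    if_modified_since: raw request header value, or None if absent.
    last_modified: timezone-aware datetime of the resource's last change.
    """
    if if_modified_since is not None:
        try:
            if last_modified <= parsedate_to_datetime(if_modified_since):
                return 304, False  # cached copy is still valid, no body sent
        except (TypeError, ValueError):
            pass  # unparseable header: fall through to a full response
    return 200, True  # nothing cached, or the cached copy is outdated


# Hypothetical resource last changed on 17 Oct 2016.
last_modified = datetime(2016, 10, 17, tzinfo=timezone.utc)
print(validate("Mon, 17 Oct 2016 00:00:00 GMT", last_modified))  # (304, False)
```

Even in the 304 case the client still pays for a full network round trip, which is why the cost is comparable to a regular request for small resources.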


It's time to improve browsers and sites.

Google developer Takashi Toyoshima explains that validation used to cause little trouble, but as web technology has developed, a typical page now makes hundreds of network requests to dozens of different domains. This is especially painful on mobile devices, where latency to servers is higher than on desktop and the connection is unstable. High latency and unstable connections cause serious problems for mobile browser performance and page load speed.

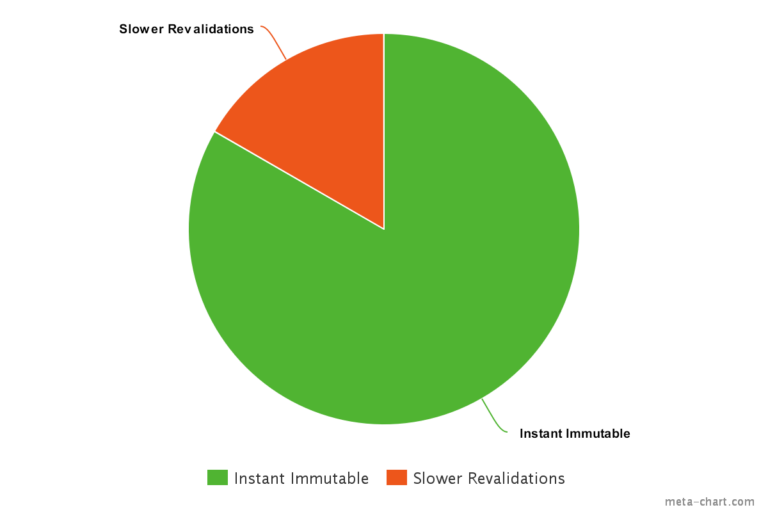

Mozilla was the first to think about improving the browser. Last year, Firefox 49 introduced support for the Cache-Control: immutable directive. With it, the server can mark resources that never change, and such resources are never requested again. Examples include fonts, scripts, and style sheets, as well as the dozens of images that make up the page design. Any resource at all can be optimized this way. If a resource changes, it is published under a different name (Facebook has a system in which resource names correspond to hashes of their contents).
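Naming resources after a hash of their contents can be sketched as follows. The article does not describe Facebook's actual scheme, so the hash function (SHA-256), the truncation to 12 characters, and the `name.<hash>.ext` pattern are all assumptions chosen for illustration.

```python
import hashlib
from pathlib import PurePosixPath


def hashed_name(path, content, digest_chars=12):
    """Return a resource path whose file name embeds a hash of the content.

    For example, 'static/app.js' becomes 'static/app.<hash>.js'.
    The naming pattern is hypothetical, not any site's real scheme.
    """
    digest = hashlib.sha256(content).hexdigest()[:digest_chars]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


# Changing the content changes the name, so the old URL can stay cached forever.
print(hashed_name("static/app.js", b"console.log('v1');"))
print(hashed_name("static/app.js", b"console.log('v2');"))
```

Because a changed file always gets a new URL, the old URL can safely be marked as immutable: nothing will ever be served under it other than the original bytes.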

In the traditional model, cache-control sets a limited lifetime for a resource.

    $ curl https://example.com/foo.png
    > GET /foo.png

    < 200 OK
    < last-modified: Mon, 17 Oct 2016 00:00:00 GMT
    < cache-control: max-age=3600
    <image data>

After this period, the browser checks the validity of the resource.

    $ curl https://example.com/foo.png -H 'if-modified-since: Mon, 17 Oct 2016 00:00:00 GMT'
    > GET /foo.png
    > if-modified-since: Mon, 17 Oct 2016 00:00:00 GMT

If the image was not modified:

    < 304 Not Modified
    < last-modified: Mon, 17 Oct 2016 00:00:00 GMT
    < cache-control: max-age=3600

If the image was modified:

    < 200 OK
    < last-modified: Tue, 18 Oct 2016 00:00:00 GMT
    < cache-control: max-age=3600
    <image data>

The immutable parameter removes the obligation from the browser to check the validity of the resource.

    $ curl https://example.com/foo.png
    > GET /foo.png

    < 200 OK
    < last-modified: Mon, 17 Oct 2016 00:00:00 GMT
    < cache-control: max-age=3600, immutable
    <image data>

Almost at the same time as the feature appeared in Firefox, Facebook began to modify its server infrastructure to support it. Mozilla is pleased to note that other websites are gradually adopting the feature, just like Facebook.
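To make the effect of immutable concrete, here is a minimal sketch of the decision a browser cache might make. It is a deliberately simplified model, not Firefox's or Chrome's implementation: it only looks at max-age and immutable, and the function names are hypothetical.

```python
def parse_cache_control(header):
    """Parse a Cache-Control value into a dict of lowercase directives."""
    directives = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, _, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') or None
    return directives


def needs_validation(header, age_seconds, is_reload):
    """Decide whether a cached response must be revalidated (simplified model)."""
    directives = parse_cache_control(header)
    try:
        max_age = int(directives.get("max-age") or 0)
    except ValueError:
        max_age = 0  # malformed max-age: treat the response as already stale
    if age_seconds >= max_age:
        return True  # lifetime expired: always revalidate
    # Still fresh: a traditional reload revalidates anyway, unless immutable.
    return is_reload and "immutable" not in directives


print(needs_validation("max-age=3600", 60, is_reload=True))             # True
print(needs_validation("max-age=3600, immutable", 60, is_reload=True))  # False
```

Note that in this model immutable only matters while the response is still within its lifetime, so it is typically paired with a long max-age.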

Chrome developers also followed the example of Firefox. The latest version of Chrome makes 60% fewer network requests to validate cached resources, which speeds up page reloads by an average of 28%.

Firefox tests confirm these results. Instead of an average of 150 requests per page, Firefox sends only 25, and in some cases pages load twice as fast.

Why do users reload a page? Usually for one of two reasons: either the page loaded incorrectly, or its content seems outdated. The reload mechanism, designed a decade ago, took only the first scenario into account. Back then, no one could have imagined that static web pages might change every minute. As a result, the old mechanism handled the second scenario inefficiently, sending validation requests for the same resources on every reload. The developers believe such checks are unnecessary.

Now Chrome and Firefox reload pages in a simplified mode: only the main resource is validated, and the rest of the page then loads as usual. This optimization is good in every respect: pages load faster, network traffic is reduced, and battery power on mobile devices is saved. Facebook reported that the number of requests for static resources on its servers dropped by 60%.
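The difference between the old and the simplified reload can be illustrated with a small model. This is a sketch under assumptions, not Chrome's or Firefox's code: the `CachedResource` type, its fields, and the sample page are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class CachedResource:
    url: str
    is_main: bool  # True for the top-level document
    fresh: bool    # True while the cached copy is within its lifetime


def validation_requests(resources, simplified):
    """Return the URLs a reload would revalidate.

    simplified=False: every cached resource is revalidated (old behavior).
    simplified=True: the main resource is always revalidated; subresources
    only if their cached copy is no longer fresh.
    """
    if not simplified:
        return [r.url for r in resources]
    return [r.url for r in resources if r.is_main or not r.fresh]


# Hypothetical page with one document and three cached subresources.
page = [
    CachedResource("/", is_main=True, fresh=True),
    CachedResource("/a.css", is_main=False, fresh=True),
    CachedResource("/b.png", is_main=False, fresh=True),
    CachedResource("/c.js", is_main=False, fresh=False),
]
print(validation_requests(page, simplified=False))  # ['/', '/a.css', '/b.png', '/c.js']
print(validation_requests(page, simplified=True))   # ['/', '/c.js']
```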

The video shows a typical Amazon page being reloaded on a smartphone connected to a US cellular network. The reload time drops from 20 seconds to about 13 seconds, and in the new version of Chrome the page updates on screen almost instantly once the server response arrives, rather than loading piece by piece.

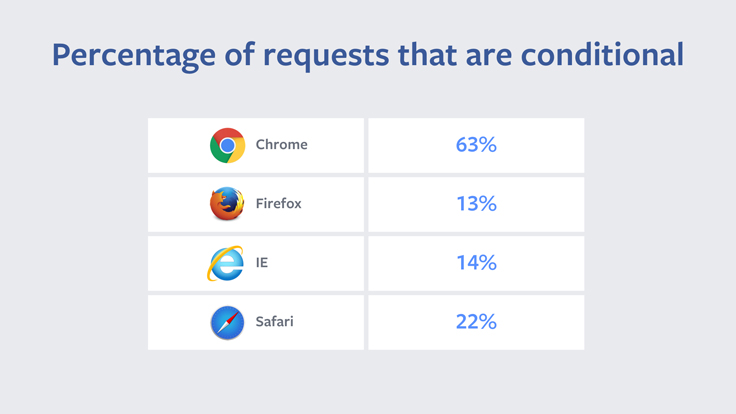

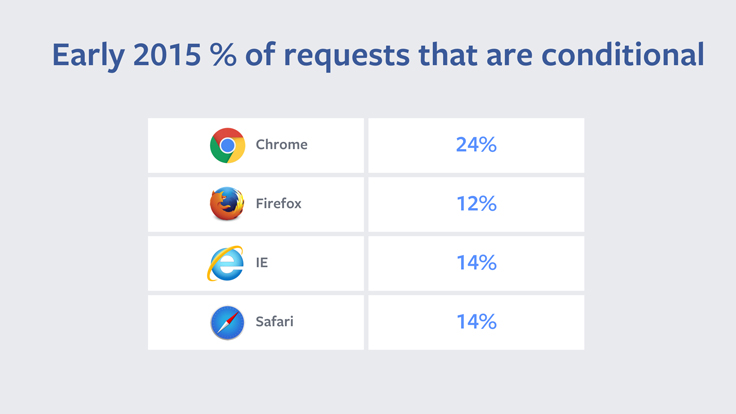

Colleagues from Facebook, who have the greatest interest in improving browsers, helped with the development. Interestingly, in 2014 Facebook engineers conducted a study and found that Chrome sent more requests resulting in HTTP 304 responses than any other browser.

The cause was a completely unnecessary piece of code, unique to Chrome, that triggered revalidation of resources very often. After the extra revalidation was eliminated in 2015, the situation improved.

That work has now continued, and with the arrival of "eternal" resources, such checks will happen even less often. Chrome developers say the change required relatively small modifications to the code. This is a good example of how small optimizations can produce excellent results.

Along with support for immutable resources, Facebook was one of the first sites to implement brotli compression. It is used to compress dynamic markup, which cannot be cached. Compared to the older gzip, brotli saves about 20% of traffic.

Server-side support for immutable resources benefits both users and the server owner, since both traffic and CPU load are reduced.

Source: https://habr.com/ru/post/357676/
