SmartMailHack. Solution of the 1st place in the problem of logo classification

Two weeks ago, the hackathon for SmartMailHack students that took place in the Mail.Ru Group office ended. The hackathon was offered a choice of three tasks; article from the winners in the second task is already on Habré , but I want to describe the solution of our team that won the first task. All code examples will be in Python & Keras (a popular framework for deep learning).

Task Description

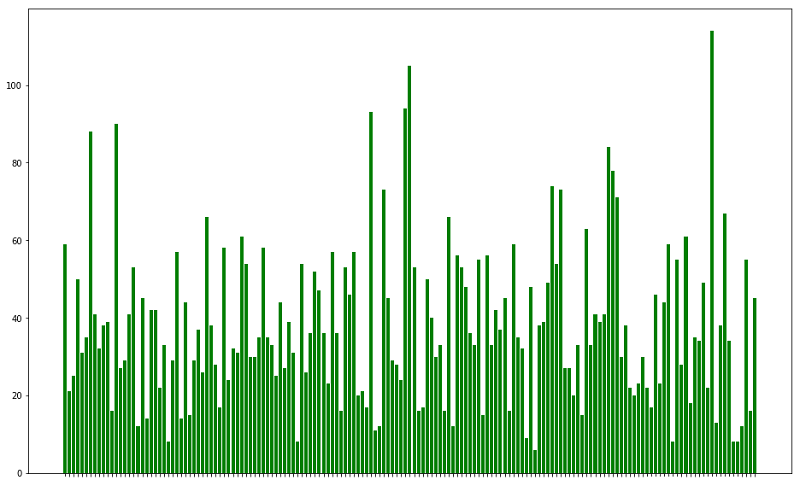

The task was to classify the logos of various companies. The training dataset consisted of 6139 images, marked up for 161 classes (160 different companies + label "other")

The distribution of the number of teaching examples by class

There were two main problems with the data: first, besides the usual .jpeg and .png files, there were both .svg, and .ico, and even .gif files in dataset:

Since OpenCV reads only jpeg & png, and there was no time to deal with other libraries in the framework of the hackathon, we went "head on" - with the help of ImageMagick we converted everything to jpeg, and only the first frame was left of the gifs.

The second problem - a large scatter of images in size - was solved by the line cv2.rescale (): also clearly not the best option, but fast and working.

def _load_sample(self, sample_path): # try to load all files with opencv image = cv2.imread(sample_path) if image is not None: shape = image.shape # normal 3-channel image if shape[-1] == 3: image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) # grayscale image -> RGB if len(shape) == 2 or shape[-1] == 1: image = cv2.cvtColor(image, cv2.COLOR_GRAY2RGB) else: tqdm.write(f"Failed to load {sample_path}") return image def _prepare_sample(self, image): image = cv2.resize(image, (RESCALE_SIZE, RESCALE_SIZE)) return image Methods from the ImageLoader class that are responsible for loading and preparing

The target metric by which the quality of the model was evaluated is F2-measure (it differs from the usual F1-measure by a factor over precision).

Models and transfer learning

For the classification of images of the last 6 years, the "classical" tool is deep convolutional neural networks. It was immediately clear that it did not make sense to experiment with something else, so there was only one question left: to teach any convolutional architecture from scratch, or to use transfer learning? We chose the latter, using the zoo of keras.applications pre-trained on ImageNet models. *

The main idea of transfer learning is to take a neural network pre-trained on some large dataset (in our case, ImageNet, 1.2 million images, 1000 classes), replace the “head” (fully connected classifier after convolutional layers), and then retrain the model already target dataset. Often, higher-quality models are obtained in this way than when learning from scratch, especially if the datasets (on which the pretrain and target were performed) are more or less similar.

class PretrainedCLF: def __init__(self, clf_name, n_class): self.clf_name = clf_name self.n_class = n_class self.module_ = CLF2MODULE[clf_name] self.class_ = CLF2CLASS[clf_name] self.backbone = getattr(globals()[self.module_], self.class_) i = self._input() print(f"Using {self.class_} as backbone") backbone = self.backbone( include_top=False, weights='imagenet', pooling='max' ) x = backbone(i) out = self._top_classifier(x) self.model = Model(i, out) for layer in self.model.get_layer(self.clf_name).layers: layer.trainable = False @staticmethod def _input(): input_ = Input((RESCALE_SIZE, RESCALE_SIZE, 3)) return input_ def _top_classifier(self, x): x = Dense(512, activation='elu')(x) x = Dropout(0.3)(x) x = Dense(256, activation='elu')(x) x = Dropout(0.2)(x) out = Dense(self.n_class, activation='softmax')(x) return out Class collecting classifier based on pre-trained network

Such a model can be retrained in different ways, but we used the standard approach: first, the pre-trained part is “frozen” (the weight in it will not change during the training) and only a fully connected classifier is trained for several epochs, after which all layers are unfrozen and the full model is trained to convergence . The intuition behind such a scheme is that at the very beginning large gradients flowing from a randomly initialized “head” into the main part of the network can greatly change the weights in the convolutional part and negate the effect of pre-learning.

Hackathon

A feature of this hackathon (unlike, for example, Kaggle competitions) was the absence of a public leaderboard - the entire test set was private, and only two hours before the hackathon final was given. It was possible to make two submissions - the first within an hour after issuing the dataset, after which the leaderboard appeared and another submission could try to improve its result.

In order to somehow assess the quality of our models during the hackathon, we have broken down the available dataset into three parts in the proportion of 70/10/20: train, validation and test, respectively. From the very beginning, the goal was to train more different networks, then to average their predictions - from Kaggle's experience, I know that ensembles usually show themselves better than single models.

A separate important point in the training of convolution networks is augmentation: the generation of new teaching examples based on available, by random rotations of images, adding noise, color changes, etc. I do not know how right we were, but we decided to abandon turns and flips, using as a result only Gaussian blur and gamma changes.

def _augment_sample(self, image): # gamma if np.random.rand() < 0.5: gamma = np.random.choice([0.5, 0.8, 1.2, 1.5]) image = adjust_gamma(image, gamma) # blur if np.random.rand() < 0.5: image = cv2.GaussianBlur(image, (3, 3), 0) return image Method that implements augmentation of learning examples

We studied networks on several machines: I removed the instance from the Google Cloud with the Tesla P100, another team member had access to a computer in the lab with Titan X on board, and the rest used Google Collaboratory (free of charge from Tesla K80 from Google). By the time the test data was issued, we had about 15 saved models (trained with different parameters ResNet-50, Xception, DesneNet-169, InceptionResNet-v2, and several models were saved from each launch - a model from the last era + a model with the best accuracy on validation) with an average of F2 on our personal 20% test at ~ 0.8. It all looked pretty good, but the time for the inference was limited, and it was more difficult to generate and collect all the predictions into a single submit than we thought.

Inference issues

The test dataset was almost the same size as the training — 6875 files for which the class label had to be predicted. We thought to consistently drive the pictures through all the models and engage in blending (averaging the results of the predictions), but the problems started at the very first step - converting to jpeg. If our dataset learning script was quietly converted, then for some reason everything broke on the test: some files were broken after conversion, which caused the generating script to crash while loading data. While we were dealing with this, we managed to pass about 45 minutes from the initial hour, by the end of which it was necessary to provide the first submit, and during that time we did not solve the problem of conversion. Since it was necessary to send at least something, I inserted a crutch into the data download, clogging all unreadable examples with zeros, in the hope that there will be not so many broken files and they will not greatly affect the result:

def _load_sample(self, sample_path): # try to load all files with opencv image = cv2.imread(sample_path) if image is not None: shape = image.shape # normal 3-channel image if shape[-1] == 3: image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) # grayscale image -> RGB if len(shape) == 2 or shape[-1] == 1: image = cv2.cvtColor(image, cv2.COLOR_GRAY2RGB) else: image = np.zeros((RESCALE_SIZE, RESCALE_SIZE, 3)) return image After this time, there was absolutely no time left, so I quickly received predictions from our last trained model, InceptionResNet-v2 (which we didn’t even have time to check on our personal test) and, despite the result, collected the submit and sent it to the organizers, barely to the already delayed deadline. Seeing a few minutes later that we were in the first place, and with a large margin (0.77 vs. 0.673 in second place), we relaxed a bit and decided that we would accurately solve technical problems in the remaining 50 minutes and embrace everything that we had time to train.

At this point, it seemed that the problems with conversion were solved (although I didn’t remove the crutch from the code), and we started to load the saved models and generate separate submissions. On the second network, it became clear that we would not have time to get rid of everything precisely - taking into account the loading of the model from the file, the prediction stage and output to the file on one model took about ~ 5-7 minutes (keras oh-oh-oh for a long time loads saved in .h5 models, as I later learned, on stack overflow, it is recommended to keep the model structure and weight separately, so loading will be faster), so the final submission included predictions of only two InceptionResNet-v2, which we believed after the first submission, and three Xception, which had the best on our test quality. Somewhere 10 minutes before the hackathon final, the models worked and we received five separate csv files with predictions that we wanted to mix by the majority voice (for each file, the label that most models voted for is selected). We open jupyter, load csv-shki ... and I understand that somewhere in submit.py there was an error: only the predicted tags were saved in the files, without indicating which file this tag belongs to. I had to, hoping that everything inside our code is always sorted and the order of processing files does not change from launch to launch, find our previous submit, pick up a column with file names from there and quickly average new tags exactly at 16:05 (the organizers gave an additional five minutes) by sending the final submit. As it turned out later, this coding in the jupyter-laptop did not really need speed - our result improved by ~ 0.04%, to 0.7739. The team from the second place added as much as 4% - up to 0.7137, but we still had a large margin in the first place.

Total

It was an interesting weekend at the Mail.Ru office: in parallel with the hackathon, there were still two interesting lectures on machine learning (and a lot of coffee and cookies, of course). We also gained valuable experience that in the conditions of the hackathon, steps that seem obvious can cause many problems.

From what we wanted to have time to try in the task, but did not have time, the most serious idea was instead of mindlessly scaling images to the input size of the models, to study at random. In the middle of the hackathon, I tried to implement it, but in this configuration the networks stopped converging altogether, and there was no time to search for errors. Perhaps, if implemented correctly, this would improve the quality of the models: my colleague from the team was able to train Xception on sprinkles in its implementation, but we did not have time to use this network for the final submission.

All our code from the hackathon is open and available in the github repository.

Team "MADGAN":

- Dmitry Senyushkin, Department of Physics, Moscow State University

- Yan Budakyan, Department of Physics, MSU

- Karim El Haj Dau, Department of Physics, Moscow State University

- Alexander Sidorenko, FIFT MIPT

')

Source: https://habr.com/ru/post/354976/

All Articles