We optimize the web with Vitaly Friedman, - compression, images, fonts, HTTP / 2 features and Resource Hints

We offer you a selection of all kinds of life hacking and tricks to optimize the amount of downloadable code and files, as well as the overall acceleration of loading web pages.

The article is based on the transcript of a speech by Vitaly Friedman from Smashing Magazine at the December conference of Holy JS 2017 Moscow.

So that we would not be bored with you, I decided to submit this story in the format of a small game, calling it Responsive Adventures.

')

The game will have five levels, and we start with a simple level - compression.

Compression is compression, and for example, images, text, fonts, and so on can be compressed into a frontend. If there is a need to optimize the page as much as possible in terms of text, then in practice they usually use the library to compress gzip data. The most common gzip implementation is zlib, which uses a combination of LZ77 and Huffman encoding algorithms.

Usually we are interested in how much the library should compress, because the better it does, the more time this process takes. Usually we choose either fast compression, or good, because at the same time fast and good compression is impossible to achieve. But as developers, we take care of two aspects: the size of files and the speed of compression / decompression - for static and dynamic web content.

There are data compression algorithms Brotli and Zopfli. Zopfli can be considered as more efficient, but at the same time slower version of gzip. Brotli is a new lossless compression and decompression format.

In the future we will be able to use Brotli. But now not all browsers support it, and we need full support.

The strategy is as follows:

But what will we do with the images?

Let's imagine you have a good landing page with fonts and images. It is necessary that the page loads very quickly. And we are talking about the extreme level of image optimization. This is a problem, and it is not contrived. We prefer not to talk about it, because, unlike JS, images do not block rendering of the page. In fact, this is a big problem, because the size of images increases over time. Now there are already 4K screens running, soon there will be 8K.

In general, 90% of users see 5.4 MB of images on a page - that’s a lot. This is a problem that needs to be resolved.

We specify the problem. What if you have a big picture with a transparent shadow, as in the example below.

How to squeeze it? After all, png is hard enough, and after compression the shadow will not look very good. What format to choose? JPEG? Shadow will also not look good enough. What can be done?

One of the best options is to divide the images into two components. The basis of the image is placed in jpeg, and the shadow in png. Next, connect the two pictures in svg.

Why is it good? Because the image, which weighed 1.5 MB, now takes 270 KB. This is a big difference.

But there are a couple more tricks. Here is one of them.

Take two images that are visually displayed on a website with the same proportions.

The first is with very poor quality, it has a real and visual size of 600 x 400 px, and below it is the same, but visually reduced to 300 x 200 px.

Let's compare this image with an image that has a real size of 300 x 200 px, but saved at 80% quality.

Most users are not able to distinguish these images, but the picture on the left weighs 21 KB, and on the right, 7 KB.

There are two problems:

An interesting test that used this technique was conducted by the Swedish online journal Aftonbladet. The initial image quality setting was set at 30%.

As a result, their main page with 40 images using this technique took 450 KB. Impressive!

Here is another good technique.

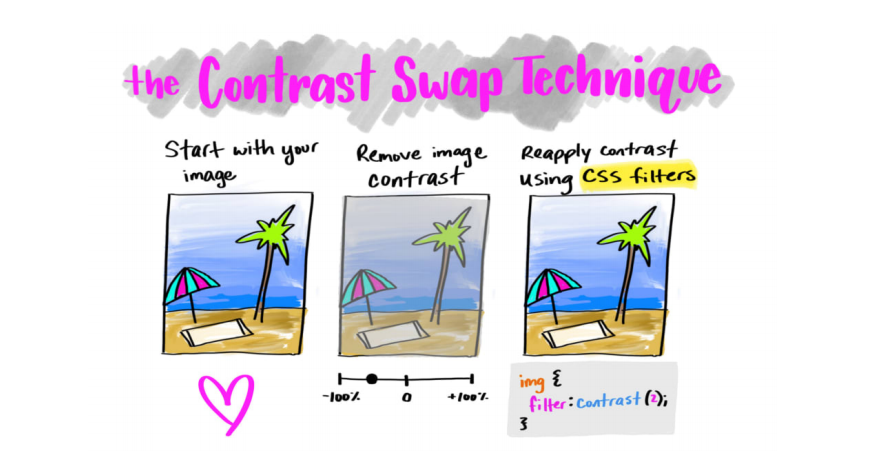

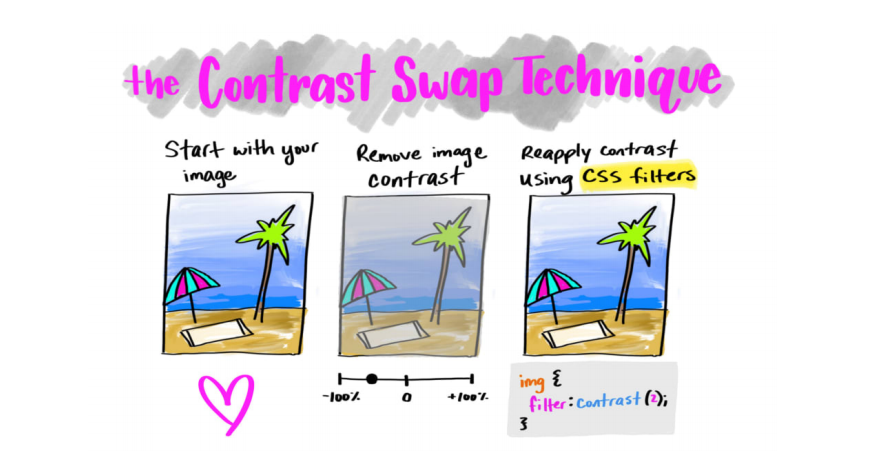

We have a picture, and we need to reduce its size. Due to what it will be better to shrink? Contrast! What if you remove or reduce it significantly, and then return it with the help of CSS filters? But again, the one who wants to download this picture will face poor quality.

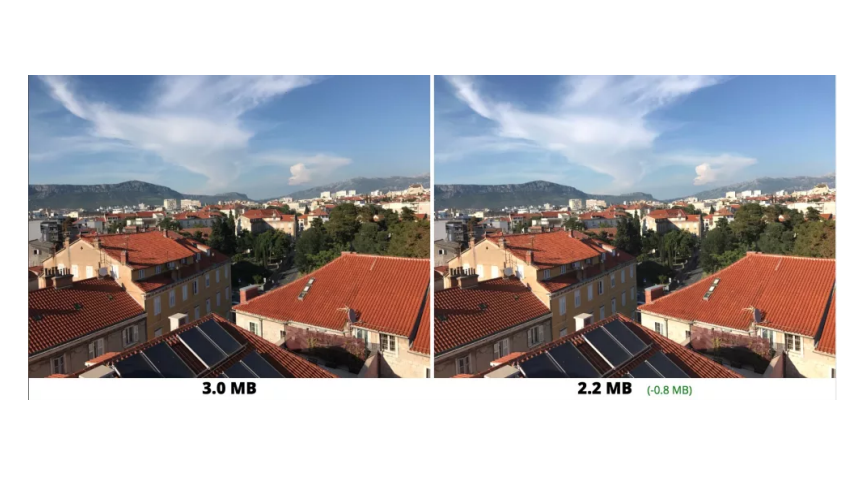

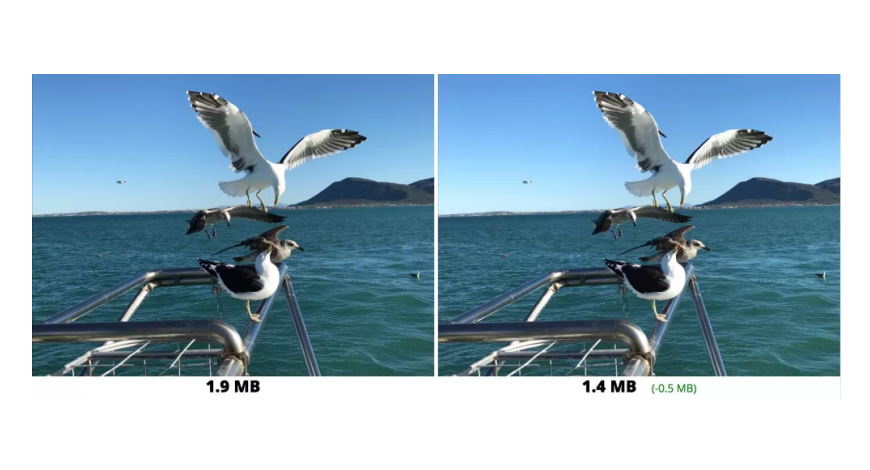

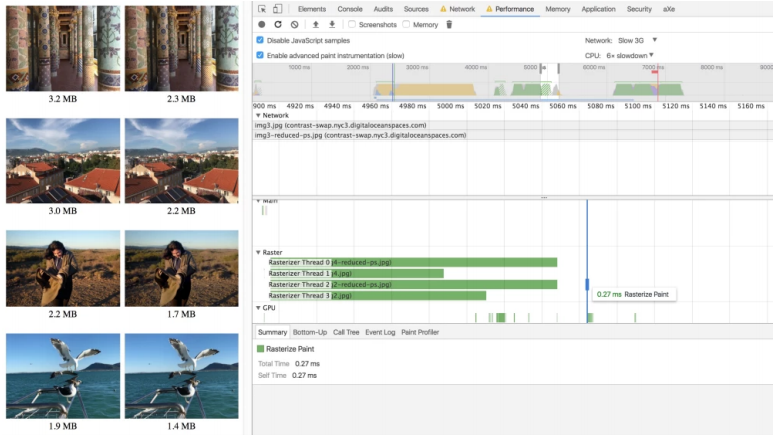

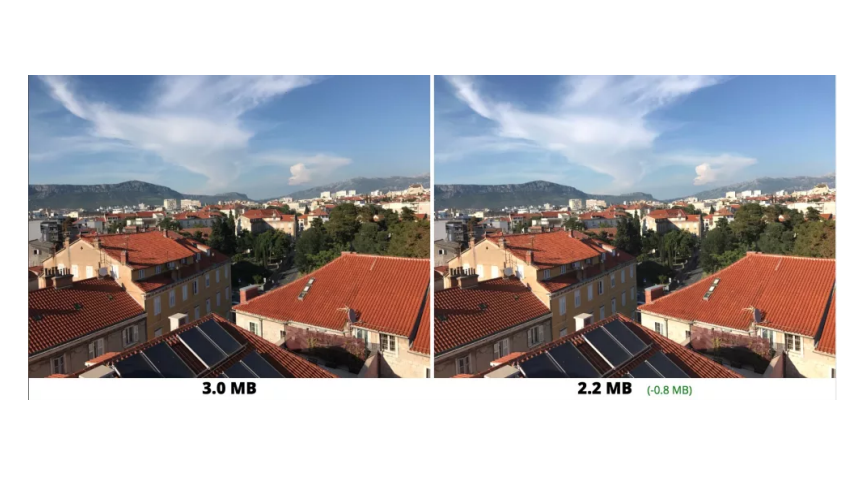

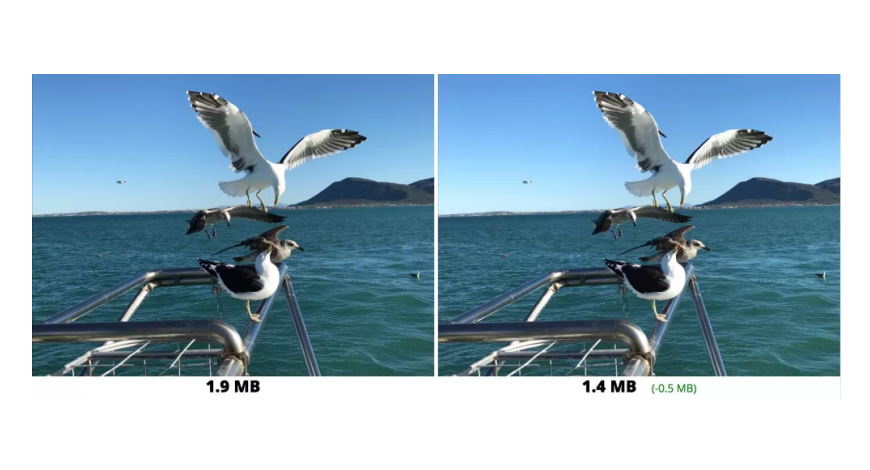

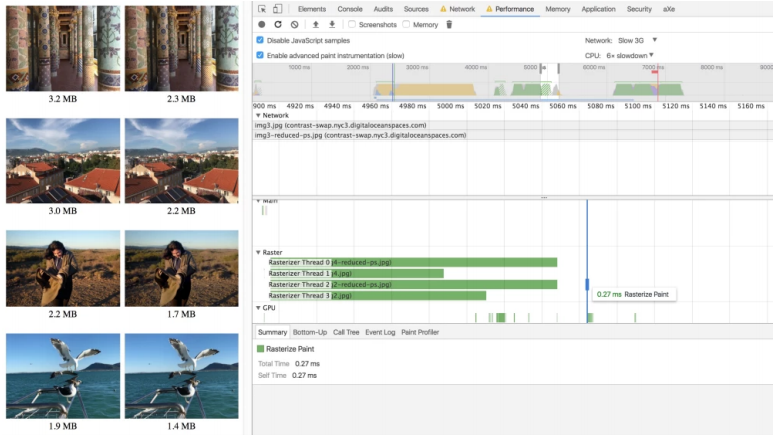

This technique can achieve great results. Here are some examples:

Everything would be fine, but what about the additional rendering delays? After all, the browser has to apply filters to the image. But here everything is quite positive: 27 ms versus 23 ms without the use of filters - the difference is insignificant.

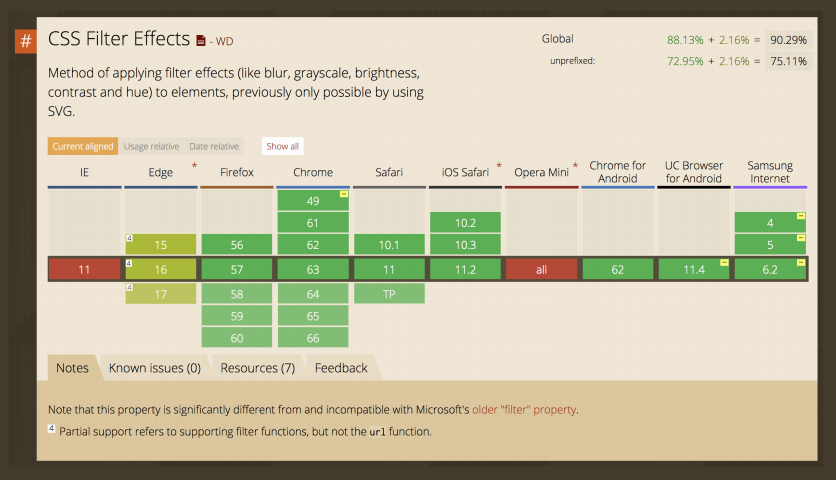

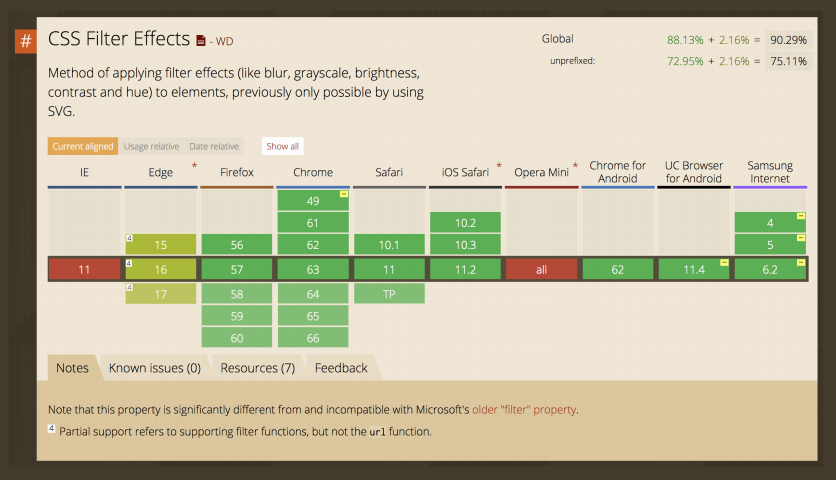

Filters are supported everywhere except IE.

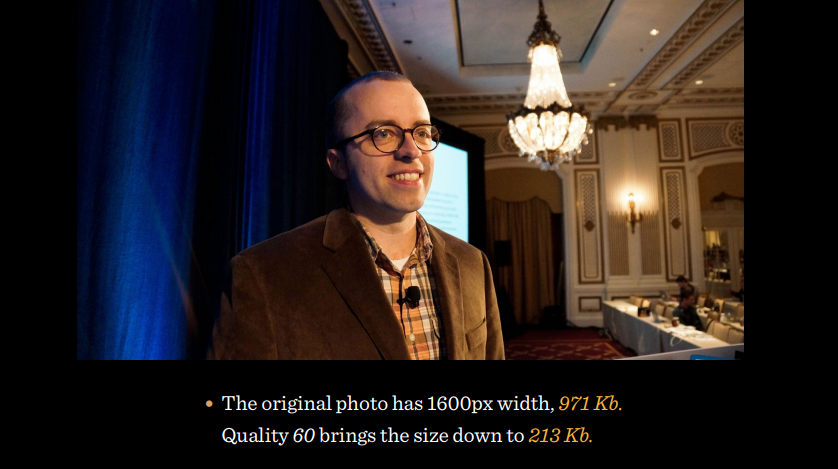

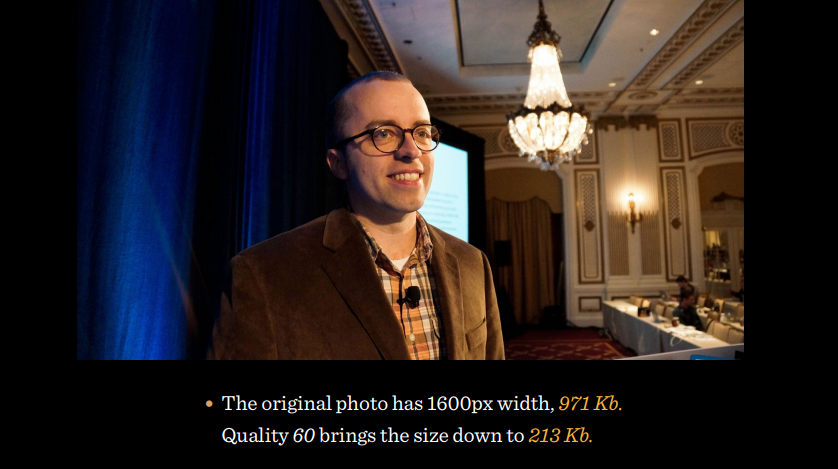

What other tricks are there? Compare two photos:

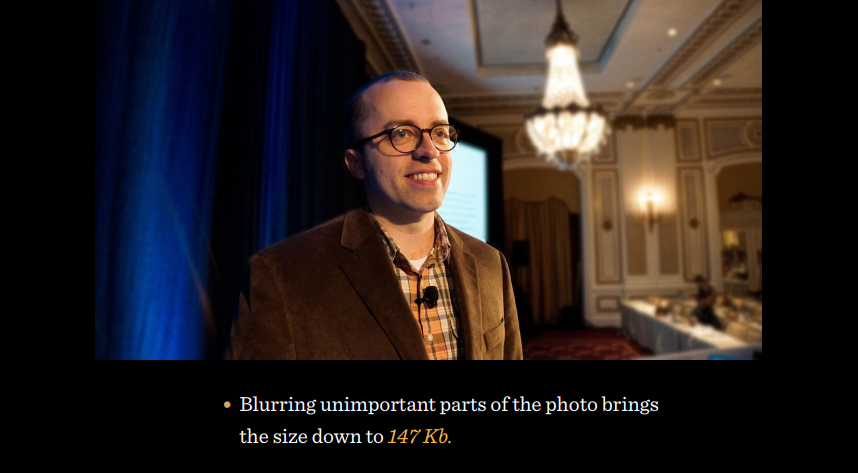

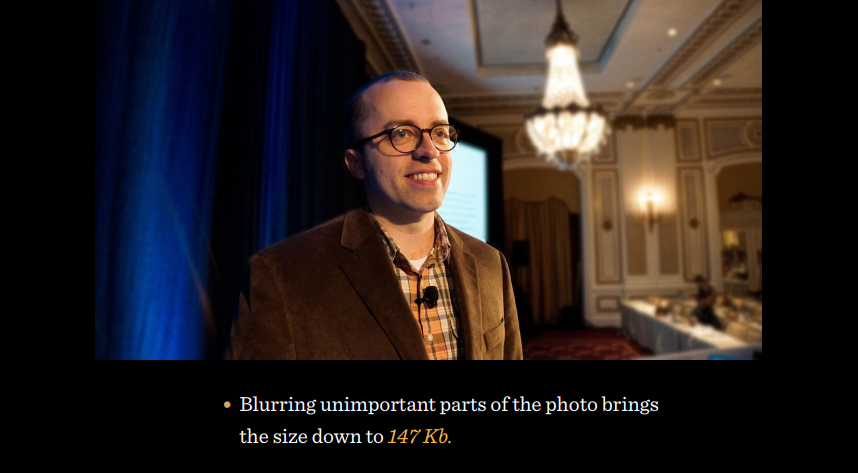

The difference is the blurring of irrelevant details of the photo, which allows you to reduce the size to 147 KB. But this is not enough! Let's go into JPEG encoding. Suppose you have a consistent and progressive jpeg.

Sequential JPEG is loaded on the page line by line, progressive - at first in poor quality all at once, and then the quality gradually improves.

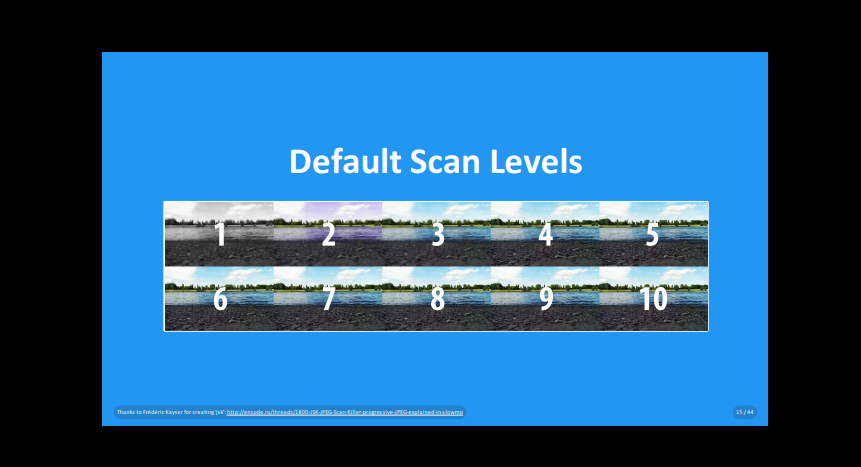

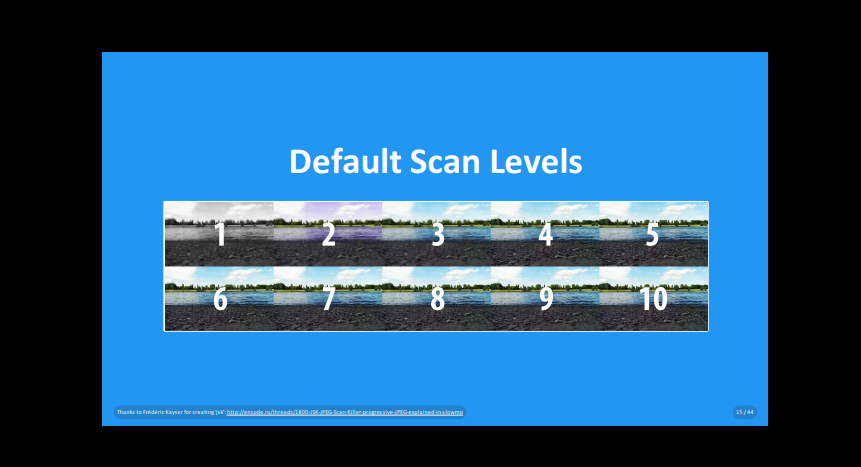

If you look at how encoders work, you can see several levels of scanning.

Many different levels of scanning are in this file. Our goal, as a developer, is to show immediately detailed information about this picture. Then you can make sure that Ships Fast and Shows Soon are with some kind of coefficients that can fit the picture better, and then at the first level we will see the structure, and not just something blurry. And on the second - almost everything.

There are libraries and utilities that allow you to do these tricks: Adept, mozjpeg or Guetzli.

I remember seven or ten years ago - I wanted fonts, added font-face and was done. And now, no, you need to think what I want to do and how to download. So, what is the best method to choose for downloading fonts?

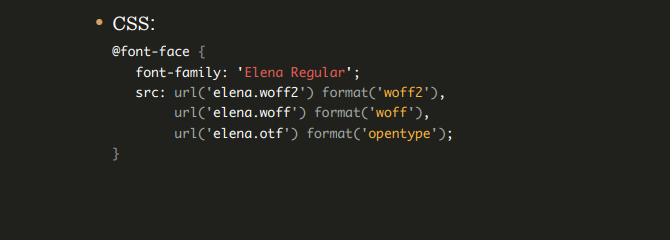

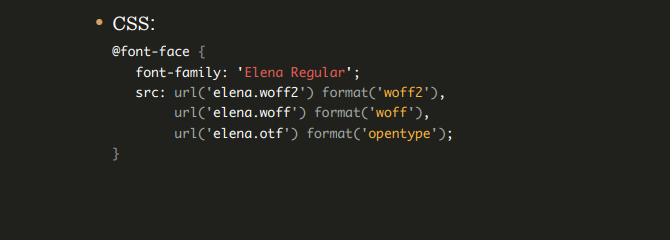

We can use font-face syntax to avoid common pitfalls along the way:

If we want to support only more or less normal browsers, then we can write even shorter:

What happens when we have this font-face in css? Browsers see if there is an indication of the font in the body or somewhere else, and if so, then the browser starts loading it. And we have to wait.

If the fonts are not yet cached, they will be queried, loaded, and applied, moving the rendering back.

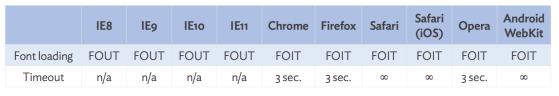

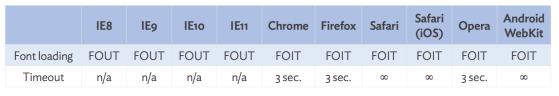

But different browsers act differently. There are FOUT and FOIT mapping approaches.

FOIT (Flash of Invisible Text) - nothing is displayed until the fonts are loaded.

FOUT (Flash Unstyled Text) - the content is displayed immediately with default fonts, and then the necessary fonts are loaded.

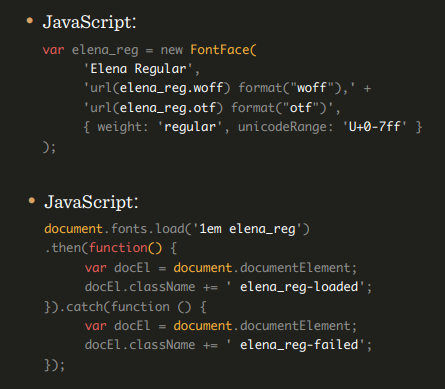

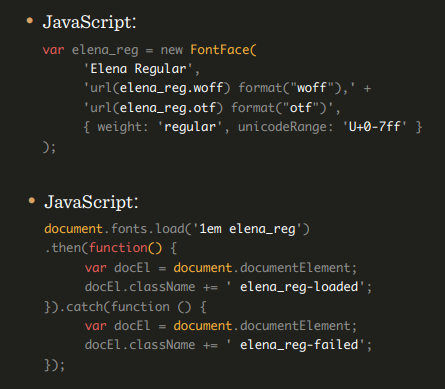

Usually browsers wait for font downloads for three seconds, and if they do not have time to load, default fonts are substituted. There are browsers that do not wait. But the most annoying thing is that there are browsers that wait until it stops. Will not work! There are many different options for how to get around this. One of them is CSS Font Loading API. Create a new font-face in JS. If the fonts are loaded, then we hang them in the appropriate places. If not loaded, hang the standard.

We can also use new properties in CSS, for example, font-rendering, which allows us to emulate either FOIT or FOUT, but in fact we don’t even need them, because there is a Font Rendering Optional.

There is another way - critical FOFT with a Data URI. Instead of loading via the JavaScript API, a web font is embedded directly in the markup as an embedded Data URI.

Two-step rendering: first roman font, and then the rest:

This method will block the initial display, but since we embed only a small subset of the plain font, this is a small price to eliminate FOUT. However, this method has the fastest strategy for downloading fonts to date.

I thought that you could do even better. Instead of using sessionStorage, we embed a web font in the markup and use Service Workers.

For example, we have some kind of font, but we don’t need it all. And we are not doing subsetting, but choosing what is needed for this page. For example, we take italic, reduce it, first load it, display it on the page, and it will look like normal, bold be like normal, everything will be like normal. Then everything is loaded as needed. Next, do subsetting and send it to the Service Workers.

Then, when the user comes to the page for the first time, we check the availability of the font, if not, then we immediately display the text, asynchronously load this font and add it to the Service Workers, for short. When the user enters the second time, the font for the idea should already be in Service Workers. Next, we check if it is there and if so, then we immediately take it from there, and if not, then all these actions occur again.

There is a caching problem here. What is the probability that someone comes to your site and has all the files that should be in the cache are present in it?

The image above demonstrates the results of a 2007 survey that says that 40-60% of users have an empty cache, and 20% of all page views occur with an empty cache. Why is that? Because browsers do not know how to cache? No, we just visit a large number of sites and if everything is cached, the drive of a PC or smartphone would fill up very quickly.

Browsers delete from the cache what they consider no longer necessary.

Let's look at the example of Chrome, what happens in it when we try to open any page on the web. If you look at the fonts line, you can see that the fonts are in memory cache or HTTP cache at best in 70% of cases. In fact, these are unpleasant numbers. If the fonts are downloaded again each time, each time the user comes to the site and watches the change of font style. From the point of view of the UX is not very good.

Care must be taken to ensure that the fonts actually remain in the cache. We used to rely on local storage, but now it is more reasonable to rely on Service Workers. Because if I put something in Service Workers, then it will be there.

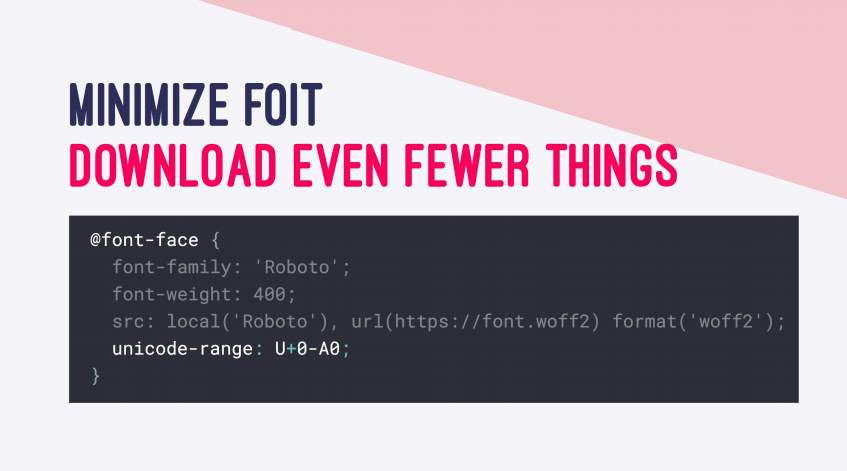

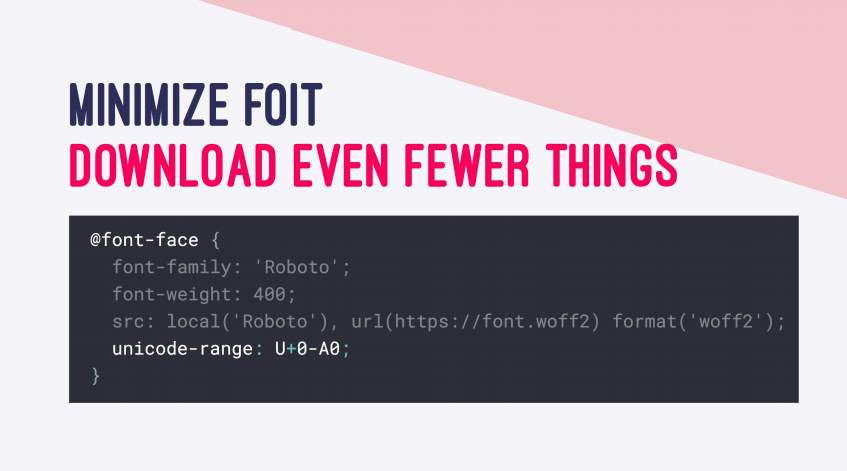

What else can you do? You can use unicode-range. Many people think that there is a dynamic subsetting, that is, we have a font, it is dynamically parsed, and only the specified part in the unicode-range is loaded. In fact, this is not the case, and the entire font is loaded.

Indeed, this is useful when we have unicode-range, for example, for Cyrillic and for English text. Instead of downloading a font that has English and Russian texts, we can break it into several parts and load Russian, if we have Russian text on the page, and do the same with English.

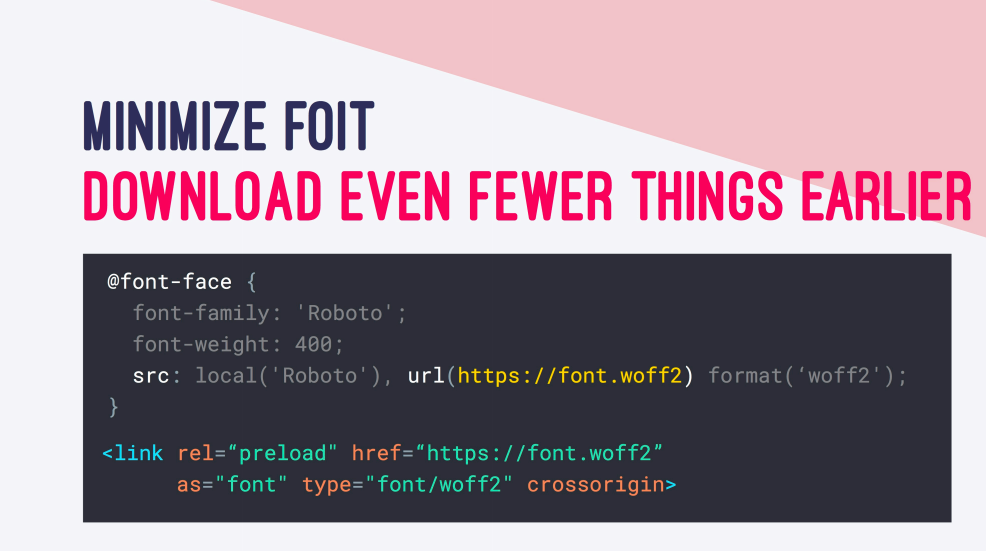

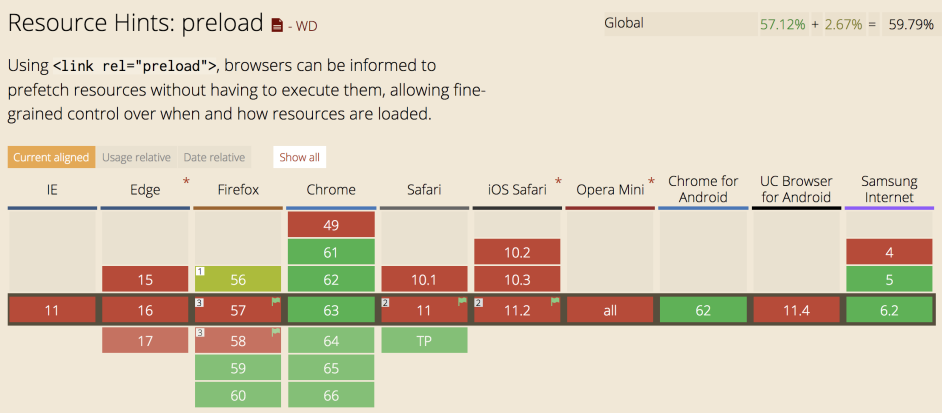

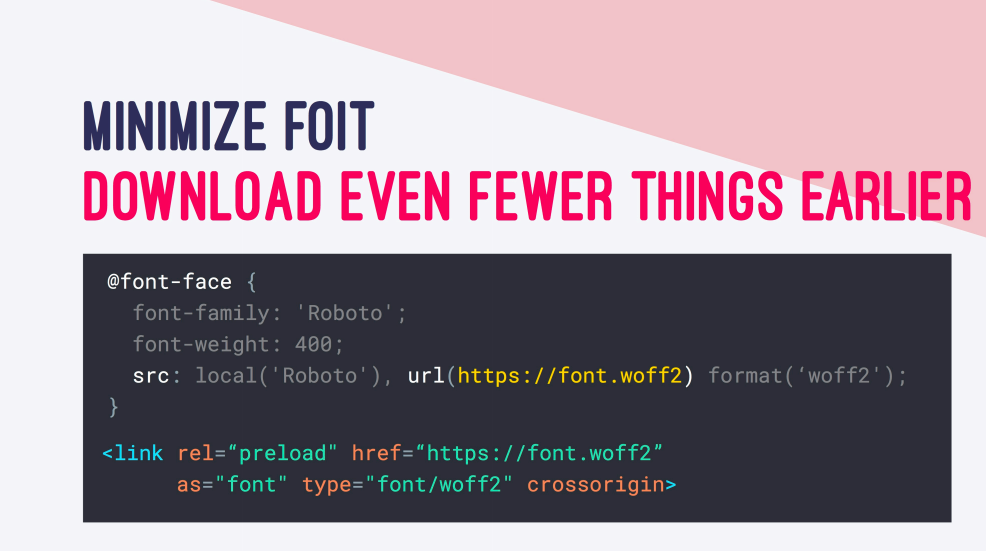

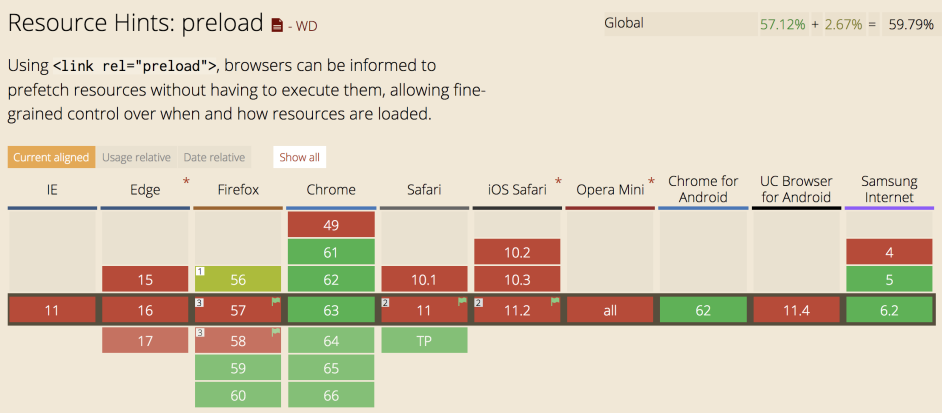

What else can you do? There is a cool thing that you need to use always and everywhere - preload.

Preload allows you to load resources at the beginning of the page load, which in turn makes it less likely to block rendering of the page. This approach improves performance.

We can also use font-display: optional. This is a new property in css. How does it work?

Font-display has several meanings. Let's start with the block. This property sets the font lock for three seconds, during which the font loads, then the font is replaced and then directly displayed.

The swap property works much the same, but with some exceptions. The browser immediately renders the text with a spare font, and when the specified is loaded, it will be replaced.

Fallback sets a small blocking period of 100 ms, the replacement period will be 3 seconds, after which the font will be replaced. If during this time the font is not loaded, the browser will draw the text with a spare font.

And finally we come to optional. The blocking period is 100 ms, if during this time the font has not loaded, the text is displayed immediately. If you have a slow connection, the browser may stop loading the font. When the font is loaded, you will still see the default font. To see the prescribed font, you must reload the page.

There are many techniques that we used before the advent of http / 2, for example, concatenation, sprites, etc. But with the advent of http / 2, the need to use them has disappeared, because unlike http / 1.1, the new version loads almost everything at once, which is great, because you can use many additional features.

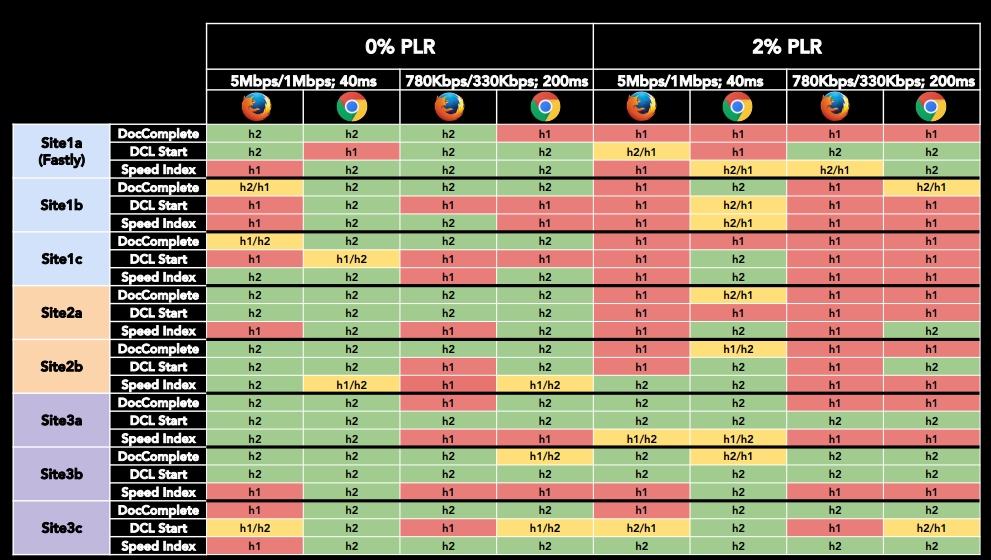

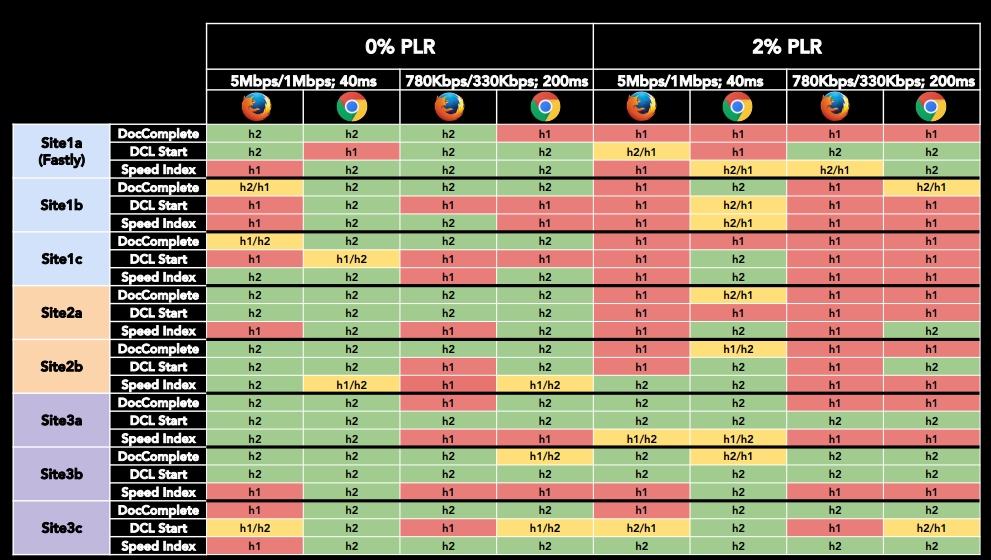

In theory, the transition to http / 2 promises us 64% (23% on mobile) faster loading pages. But in practice, everything works more slowly.

If a large part of your target audience constantly comes to the resource, being in a bus, car, etc., then it is quite possible that http / 1.1 will be in a better position.

Look at the test results below. It shows that in some situations http / 1.1 is faster.

There are wonderful features for http / 2, for example, HPACK, which should be used always and everywhere, and also server push. But there is a small problem. It occurs depending on the browser and server. Suppose we load the page, we have no server push.

If the page is reloaded, then everything is in the cache.

But if we make server push, then our css will reach the user much faster.

But it also means that even if the css is in the cache, they will still be sent.

That is, if you push a lot of files from the server, they will be loaded many times.

Go ahead. There are some recommended time frames for loading pages. For example, for an average device on android it is five seconds. This is not so much, considering that, for example, we have 3G.

If you look at the recommended size limit for uploads that is required to start rendering, which Google mentions, it is 170 KB.

Therefore, when it comes to frameworks, we need to think about parsing, compiling, network quality, runtime costs, etc.

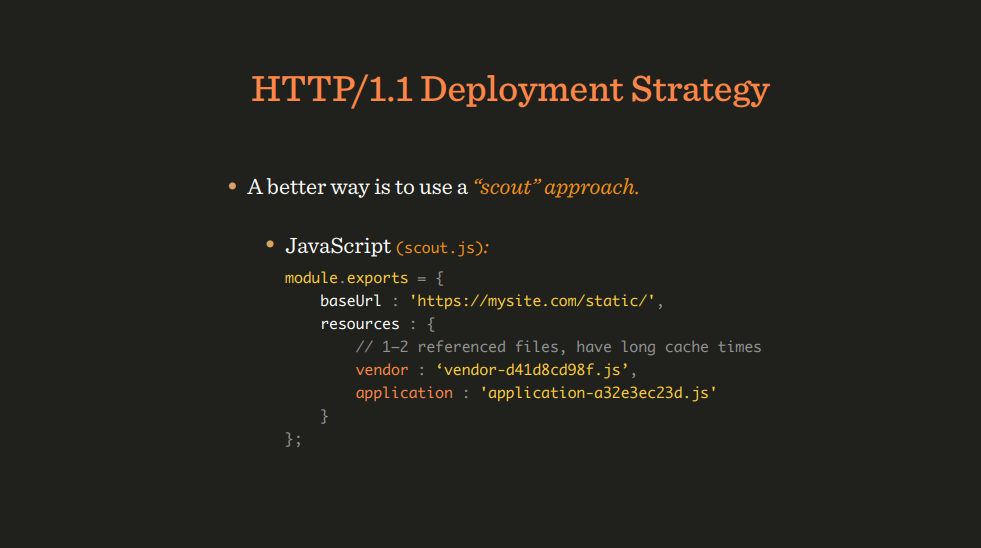

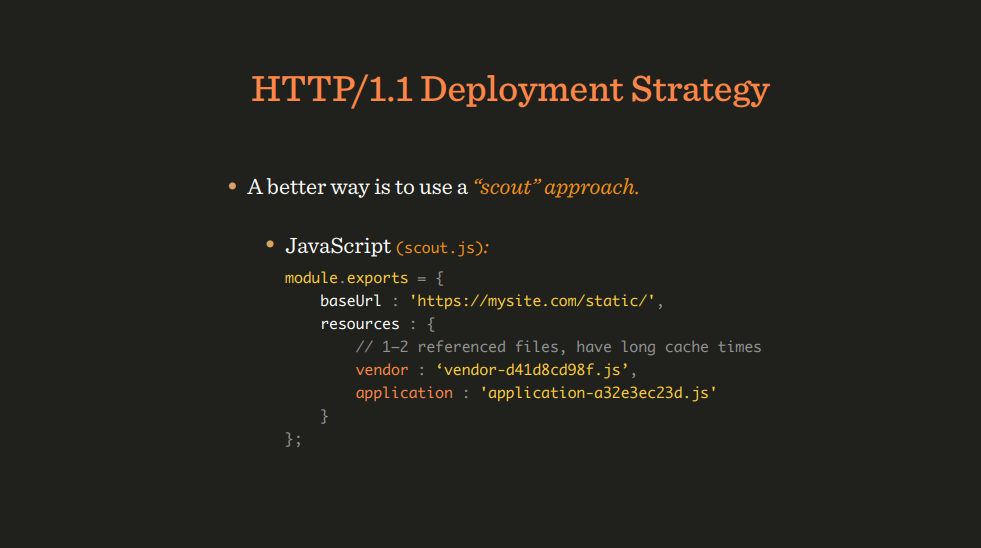

There are various ways to upload files, for example, the classic way, which is a bit outdated - scout. We get the file scout.js, it is in html, we load it. Its task is to make the rest of the environment as cacheable as possible and at the same time timely report on changes in it.

This means that this file needs a little time to store in the cache, and if something changes in the environment, then scout immediately initiates an update. This is an effective way, because every time we do not need to load and replace html.

What to do with http / 2? After all, we know that we can send as many files as we like and there is no need to combine them into packets. Let's load 140 modules then, why not? Actually, this is a very bad idea. First, if we have a lot of files, and we do not use a library, for example gzip for compression, then the files will be larger. Secondly, browsers are not yet optimized for such workflows. As a result, we began to experiment and look for the right amount, and it turned out that it is optimal to send about 10 packets.

Packages are best packaged, based on the frequency of file updates: often updated in some packages, and rarely updated in others, in order to avoid unnecessary downloads. For example, libraries to pack with utilities, etc. Nothing special. And what to do with css, how to download it? Server push is not suitable here.

In the beginning, we all downloaded as minimized files, then we thought that a part should be loaded into critical css, because we only have 14 KB, and they need to be loaded as quickly as possible. Began to do loadCSS, write logic, then add display: none.

But it all looked somehow bad. In http / 2, they thought that it was necessary to split, minify and load all files. It turned out that the best option was the one in the image below.

Unusually! This option works well in Chrome, poorly in IE, in Firefox the work slowed down a bit as they changed the rendering. Thus, we have improved the speed of work for 120 ms.

If you look at work with progressive css and without. Then with progressive css everything loads faster, but in parts, but without using it more slowly, because The css is located in the header and blocks the page as js.

And the last level, which I can not tell you - Resource Hints. This is a great feature that allows you to do many useful things. Lets go through some of them.

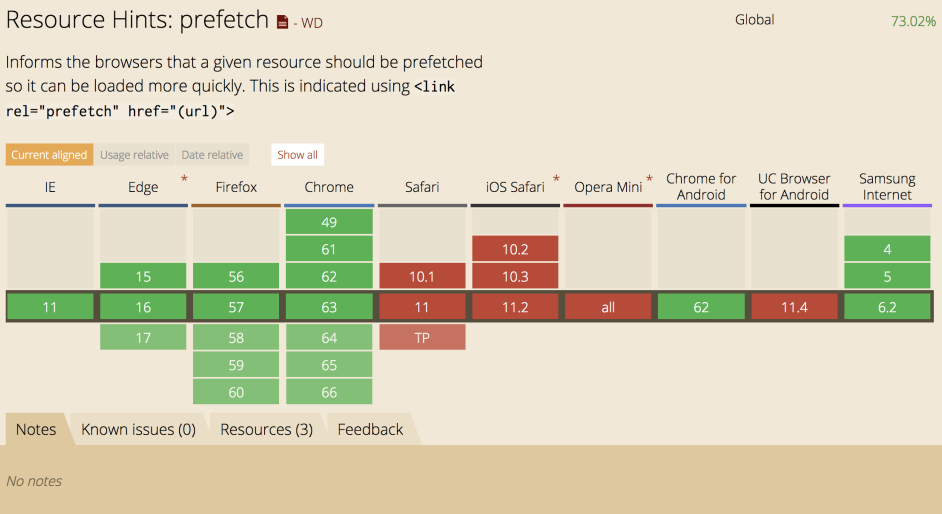

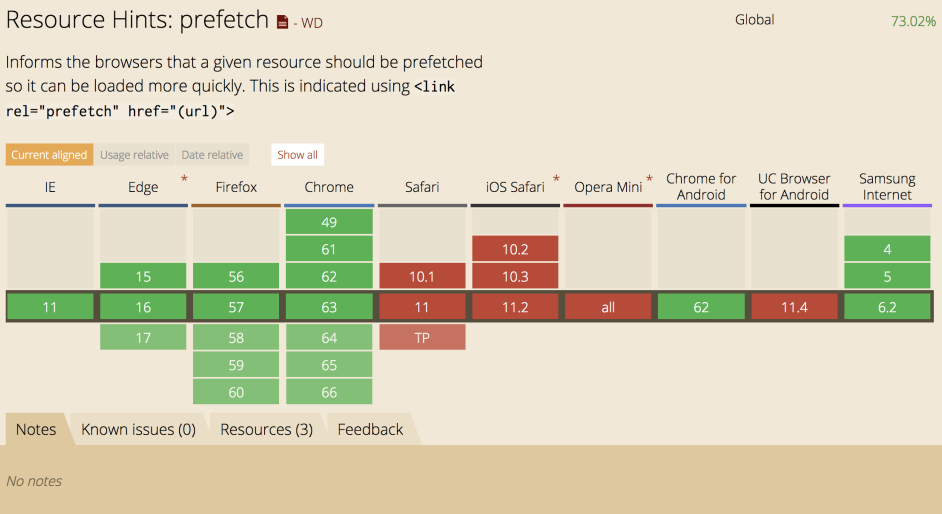

Prefetch - indicates to the browser that we will need one or another file soon, and the browser loads it with a low priority.

Prerender - this function no longer exists, but it helped make the page prerender earlier. Perhaps she will have an alternative ...

Dns-prefetch also speeds up the page loading process. Using dns-prefetch assumes that the browser pre-loads the server address of the specified domain name.

Preconnect allows you to do a preliminary handshake with specified servers.

Preload - tells the browser which resources need to be preloaded with high priority. Preload can be used for scripts and fonts.

I remember in 2009 I read the article " Gmail for Mobile HTML5 Series: Reducing Startup Latency ", and she changed my views on the classic rules. See for yourself! We have a JS code, but we don’t need it all right now. So why don't we comment out most of the JS code, and then, when necessary, uncomment and execute it in eval?

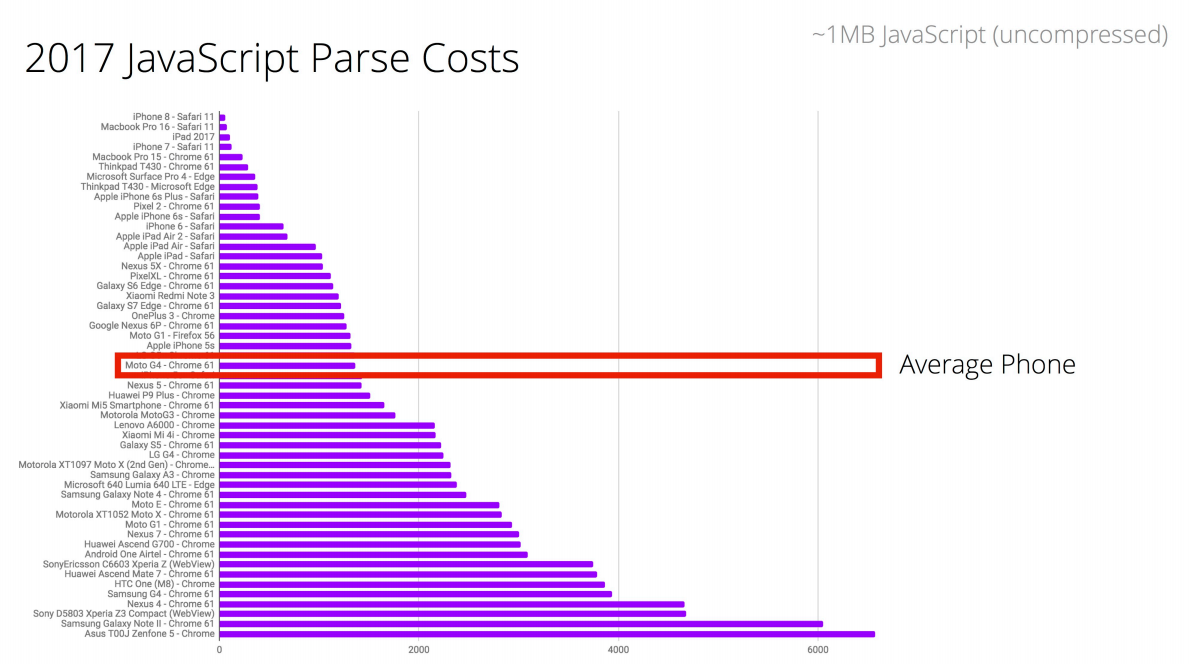

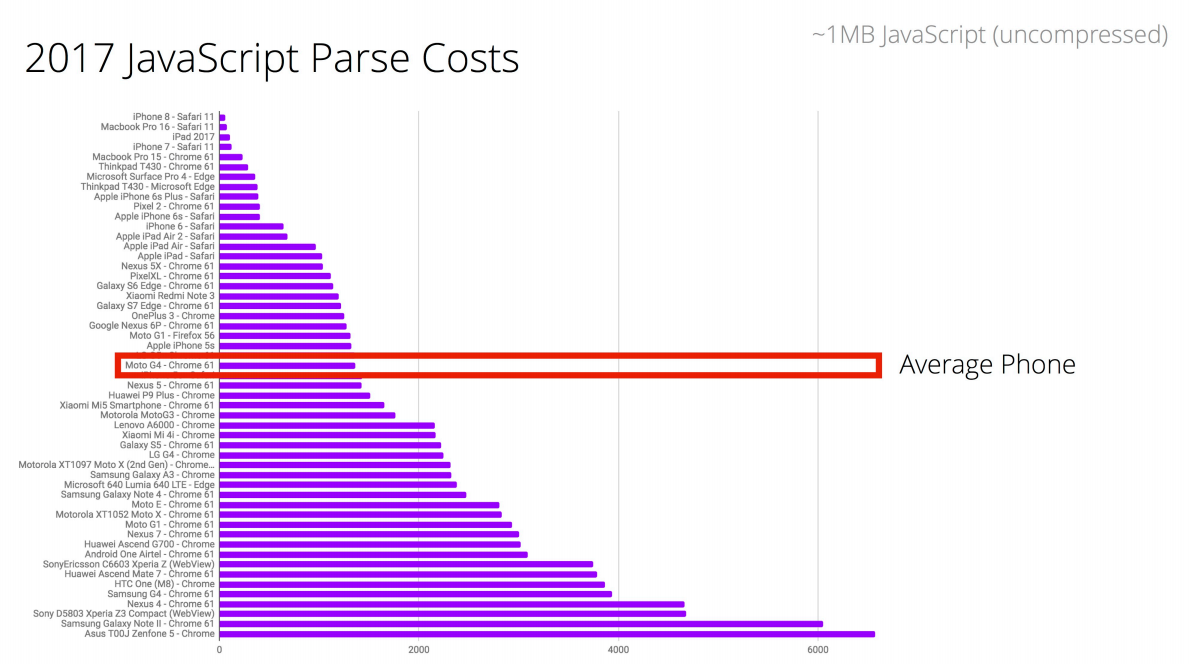

And the reason why they did this is because the average smartphone parsing time is 8-9 times longer than the last iPhone.

Let's turn to statistics. To parse a 1 MB code on an average phone, you need 4 seconds.

This is a lot! But we don't need 1 MB right away. If you again refer to the statistics, it turns out that the sites use only 40% of the JS-code from what they downloaded.

And we can use preload instead of eval for the same situations.

That is, we store the file in the cache, and then, when necessary, we add it to the page.

So, this is only half of what Vitaly Friedman planned to share. The remaining pieces and lifehacks will be in deciphering his second performance at HolyJS 2017 Moscow, which we will also prepare and post in our blog.

And if you like the wrong side of JS just as we do, you will surely be interested in these reports at our May conference HolyJS 2018 Piter , the key ones of which we again wrote Vitaly Friedman's story:

The article is based on the transcript of a speech by Vitaly Friedman from Smashing Magazine at the December conference of Holy JS 2017 Moscow.

So that we would not be bored with you, I decided to submit this story in the format of a small game, calling it Responsive Adventures.

')

The game will have five levels, and we start with a simple level - compression.

Level 1 - Compression

Compression is compression, and for example, images, text, fonts, and so on can be compressed into a frontend. If there is a need to optimize the page as much as possible in terms of text, then in practice they usually use the library to compress gzip data. The most common gzip implementation is zlib, which uses a combination of LZ77 and Huffman encoding algorithms.

Usually we are interested in how much the library should compress, because the better it does, the more time this process takes. Usually we choose either fast compression, or good, because at the same time fast and good compression is impossible to achieve. But as developers, we take care of two aspects: the size of files and the speed of compression / decompression - for static and dynamic web content.

There are data compression algorithms Brotli and Zopfli. Zopfli can be considered as more efficient, but at the same time slower version of gzip. Brotli is a new lossless compression and decompression format.

In the future we will be able to use Brotli. But now not all browsers support it, and we need full support.

Brotli and Zopfli

- Brotli is much slower in data compression compared to gzip, but provides much better compression.

- Brotli is an open source lossless compression format.

- Brotli decompression is fast - comparable to zlib.

- Brotli gives an advantage when working with large files with slow connections.

- Brotli squeezes more efficiently by 14-39%.

- Ideal for HTML, CSS, JavaScript, SVG and all text.

- Brotli support is limited to HTTPS connections.

- Zopfli is often used for on-the-fly compression, but it is a good alternative for compressing static content once.

Brotli / Zopfli compression strategy

The strategy is as follows:

- Precompression of static resources using Brotli + Gzip.

- Compress with Brotli HTML on the fly with a compression level of 1-4.

- Check Brotli support on CDN (KeyCDN, CDN77, Fastly).

- Use Zopfli if it is not possible to install / maintain Brotli on the server.

Level 2 - Images

But what will we do with the images?

Let's imagine you have a good landing page with fonts and images. It is necessary that the page loads very quickly. And we are talking about the extreme level of image optimization. This is a problem, and it is not contrived. We prefer not to talk about it, because, unlike JS, images do not block rendering of the page. In fact, this is a big problem, because the size of images increases over time. Now there are already 4K screens running, soon there will be 8K.

In general, 90% of users see 5.4 MB of images on a page - that’s a lot. This is a problem that needs to be resolved.

We specify the problem. What if you have a big picture with a transparent shadow, as in the example below.

How to squeeze it? After all, png is hard enough, and after compression the shadow will not look very good. What format to choose? JPEG? Shadow will also not look good enough. What can be done?

One of the best options is to divide the images into two components. The basis of the image is placed in jpeg, and the shadow in png. Next, connect the two pictures in svg.

Why is it good? Because the image, which weighed 1.5 MB, now takes 270 KB. This is a big difference.

But there are a couple more tricks. Here is one of them.

Take two images that are visually displayed on a website with the same proportions.

The first is with very poor quality, it has a real and visual size of 600 x 400 px, and below it is the same, but visually reduced to 300 x 200 px.

Let's compare this image with an image that has a real size of 300 x 200 px, but saved at 80% quality.

Most users are not able to distinguish these images, but the picture on the left weighs 21 KB, and on the right, 7 KB.

There are two problems:

- the one who decides to save the picture will keep it in bad quality

- the browser will have to zoom in or out

An interesting test that used this technique was conducted by the Swedish online journal Aftonbladet. The initial image quality setting was set at 30%.

As a result, their main page with 40 images using this technique took 450 KB. Impressive!

Here is another good technique.

We have a picture, and we need to reduce its size. Due to what it will be better to shrink? Contrast! What if you remove or reduce it significantly, and then return it with the help of CSS filters? But again, the one who wants to download this picture will face poor quality.

This technique can achieve great results. Here are some examples:

Everything would be fine, but what about the additional rendering delays? After all, the browser has to apply filters to the image. But here everything is quite positive: 27 ms versus 23 ms without the use of filters - the difference is insignificant.

Filters are supported everywhere except IE.

What other tricks are there? Compare two photos:

The difference is the blurring of irrelevant details of the photo, which allows you to reduce the size to 147 KB. But this is not enough! Let's go into JPEG encoding. Suppose you have a consistent and progressive jpeg.

Sequential JPEG is loaded on the page line by line, progressive - at first in poor quality all at once, and then the quality gradually improves.

If you look at how encoders work, you can see several levels of scanning.

Many different levels of scanning are in this file. Our goal, as a developer, is to show immediately detailed information about this picture. Then you can make sure that Ships Fast and Shows Soon are with some kind of coefficients that can fit the picture better, and then at the first level we will see the structure, and not just something blurry. And on the second - almost everything.

There are libraries and utilities that allow you to do these tricks: Adept, mozjpeg or Guetzli.

Level 3

I remember seven or ten years ago - I wanted fonts, added font-face and was done. And now, no, you need to think what I want to do and how to download. So, what is the best method to choose for downloading fonts?

We can use font-face syntax to avoid common pitfalls along the way:

If we want to support only more or less normal browsers, then we can write even shorter:

What happens when we have this font-face in css? Browsers see if there is an indication of the font in the body or somewhere else, and if so, then the browser starts loading it. And we have to wait.

If the fonts are not yet cached, they will be queried, loaded, and applied, moving the rendering back.

But different browsers act differently. There are FOUT and FOIT mapping approaches.

FOIT (Flash of Invisible Text) - nothing is displayed until the fonts are loaded.

FOUT (Flash Unstyled Text) - the content is displayed immediately with default fonts, and then the necessary fonts are loaded.

Usually browsers wait for font downloads for three seconds, and if they do not have time to load, default fonts are substituted. There are browsers that do not wait. But the most annoying thing is that there are browsers that wait until it stops. Will not work! There are many different options for how to get around this. One of them is CSS Font Loading API. Create a new font-face in JS. If the fonts are loaded, then we hang them in the appropriate places. If not loaded, hang the standard.

We can also use new properties in CSS, for example, font-rendering, which allows us to emulate either FOIT or FOUT, but in fact we don’t even need them, because there is a Font Rendering Optional.

There is another way - critical FOFT with a Data URI. Instead of loading via the JavaScript API, a web font is embedded directly in the markup as an embedded Data URI.

Two-step rendering: first roman font, and then the rest:

- Download full fonts with all weights and styles

- Minimum subset of fonts (AZ, 0-9, punctuation)

- Use sessionStorage for back visits

- Download the Roman Subscript first.

This method will block the initial display, but since we embed only a small subset of the plain font, this is a small price to eliminate FOUT. However, this method has the fastest strategy for downloading fonts to date.

I thought that you could do even better. Instead of using sessionStorage, we embed a web font in the markup and use Service Workers.

For example, we have some kind of font, but we don’t need it all. And we are not doing subsetting, but choosing what is needed for this page. For example, we take italic, reduce it, first load it, display it on the page, and it will look like normal, bold be like normal, everything will be like normal. Then everything is loaded as needed. Next, do subsetting and send it to the Service Workers.

Then, when the user comes to the page for the first time, we check the availability of the font, if not, then we immediately display the text, asynchronously load this font and add it to the Service Workers, for short. When the user enters the second time, the font for the idea should already be in Service Workers. Next, we check if it is there and if so, then we immediately take it from there, and if not, then all these actions occur again.

There is a caching problem here. What is the probability that someone comes to your site and has all the files that should be in the cache are present in it?

The image above demonstrates the results of a 2007 survey that says that 40-60% of users have an empty cache, and 20% of all page views occur with an empty cache. Why is that? Because browsers do not know how to cache? No, we just visit a large number of sites and if everything is cached, the drive of a PC or smartphone would fill up very quickly.

Browsers delete from the cache what they consider no longer necessary.

Let's look at the example of Chrome, what happens in it when we try to open any page on the web. If you look at the fonts line, you can see that the fonts are in memory cache or HTTP cache at best in 70% of cases. In fact, these are unpleasant numbers. If the fonts are downloaded again each time, each time the user comes to the site and watches the change of font style. From the point of view of the UX is not very good.

Care must be taken to ensure that the fonts actually remain in the cache. We used to rely on local storage, but now it is more reasonable to rely on Service Workers. Because if I put something in Service Workers, then it will be there.

What else can you do? You can use unicode-range. Many people think that there is a dynamic subsetting, that is, we have a font, it is dynamically parsed, and only the specified part in the unicode-range is loaded. In fact, this is not the case, and the entire font is loaded.

Indeed, this is useful when we have unicode-range, for example, for Cyrillic and for English text. Instead of downloading a font that has English and Russian texts, we can break it into several parts and load Russian, if we have Russian text on the page, and do the same with English.

What else can you do? There is a cool thing that you need to use always and everywhere - preload.

Preload allows you to load resources at the beginning of the page load, which in turn makes it less likely to block rendering of the page. This approach improves performance.

We can also use font-display: optional. This is a new property in css. How does it work?

Font-display has several meanings. Let's start with the block. This property sets the font lock for three seconds, during which the font loads, then the font is replaced and then directly displayed.

The swap property works much the same, but with some exceptions. The browser immediately renders the text with a spare font, and when the specified is loaded, it will be replaced.

Fallback sets a small blocking period of 100 ms, the replacement period will be 3 seconds, after which the font will be replaced. If during this time the font is not loaded, the browser will draw the text with a spare font.

And finally we come to optional. The blocking period is 100 ms, if during this time the font has not loaded, the text is displayed immediately. If you have a slow connection, the browser may stop loading the font. When the font is loaded, you will still see the default font. To see the prescribed font, you must reload the page.

Level 4

There are many techniques that we used before the advent of http / 2, for example, concatenation, sprites, etc. But with the advent of http / 2, the need to use them has disappeared, because unlike http / 1.1, the new version loads almost everything at once, which is great, because you can use many additional features.

In theory, the transition to http / 2 promises us 64% (23% on mobile) faster loading pages. But in practice, everything works more slowly.

If a large part of your target audience constantly comes to the resource, being in a bus, car, etc., then it is quite possible that http / 1.1 will be in a better position.

Look at the test results below. It shows that in some situations http / 1.1 is faster.

There are wonderful features for http / 2, for example, HPACK, which should be used always and everywhere, and also server push. But there is a small problem. It occurs depending on the browser and server. Suppose we load the page, we have no server push.

If the page is reloaded, then everything is in the cache.

But if we make server push, then our css will reach the user much faster.

But it also means that even if the css is in the cache, they will still be sent.

That is, if you push a lot of files from the server, they will be loaded many times.

Go ahead. There are some recommended time frames for loading pages. For example, for an average device on android it is five seconds. This is not so much, considering that, for example, we have 3G.

If you look at the recommended size limit for uploads that is required to start rendering, which Google mentions, it is 170 KB.

Therefore, when it comes to frameworks, we need to think about parsing, compiling, network quality, runtime costs, etc.

There are various ways to upload files, for example, the classic way, which is a bit outdated - scout. We get the file scout.js, it is in html, we load it. Its task is to make the rest of the environment as cacheable as possible and at the same time timely report on changes in it.

This means that this file needs a little time to store in the cache, and if something changes in the environment, then scout immediately initiates an update. This is an effective way, because every time we do not need to load and replace html.

What to do with http / 2? After all, we know that we can send as many files as we like and there is no need to combine them into packets. Let's load 140 modules then, why not? Actually, this is a very bad idea. First, if we have a lot of files, and we do not use a library, for example gzip for compression, then the files will be larger. Secondly, browsers are not yet optimized for such workflows. As a result, we began to experiment and look for the right amount, and it turned out that it is optimal to send about 10 packets.

Packages are best packaged, based on the frequency of file updates: often updated in some packages, and rarely updated in others, in order to avoid unnecessary downloads. For example, libraries to pack with utilities, etc. Nothing special. And what to do with css, how to download it? Server push is not suitable here.

In the beginning, we all downloaded as minimized files, then we thought that a part should be loaded into critical css, because we only have 14 KB, and they need to be loaded as quickly as possible. Began to do loadCSS, write logic, then add display: none.

But it all looked somehow bad. In http / 2, they thought that it was necessary to split, minify and load all files. It turned out that the best option was the one in the image below.

Unusually! This option works well in Chrome, poorly in IE, in Firefox the work slowed down a bit as they changed the rendering. Thus, we have improved the speed of work for 120 ms.

If you look at work with progressive css and without. Then with progressive css everything loads faster, but in parts, but without using it more slowly, because The css is located in the header and blocks the page as js.

Level 5

And the last level, which I can not tell you - Resource Hints. This is a great feature that allows you to do many useful things. Lets go through some of them.

Prefetch

Prefetch - indicates to the browser that we will need one or another file soon, and the browser loads it with a low priority.

<link rel="prefetch" href="(url)">

Prerender

Prerender - this function no longer exists, but it helped make the page prerender earlier. Perhaps she will have an alternative ...

<link rel="prerender" href="(url)">

Dns-prefetch

Dns-prefetch also speeds up the page loading process. Using dns-prefetch assumes that the browser pre-loads the server address of the specified domain name.

<link rel="dns-prefetch" href="(url)">

Preconnect

Preconnect allows you to do a preliminary handshake with specified servers.

<link rel="preconnect" href="(url)">

Preload

Preload - tells the browser which resources need to be preloaded with high priority. Preload can be used for scripts and fonts.

<link rel="preload" href="(url)" as="(type)">

I remember in 2009 I read the article " Gmail for Mobile HTML5 Series: Reducing Startup Latency ", and she changed my views on the classic rules. See for yourself! We have a JS code, but we don’t need it all right now. So why don't we comment out most of the JS code, and then, when necessary, uncomment and execute it in eval?

And the reason why they did this is because the average smartphone parsing time is 8-9 times longer than the last iPhone.

Let's turn to statistics. To parse a 1 MB code on an average phone, you need 4 seconds.

This is a lot! But we don't need 1 MB right away. If you again refer to the statistics, it turns out that the sites use only 40% of the JS-code from what they downloaded.

And we can use preload instead of eval for the same situations.

var preload = document.createElement("link"); link.href= "myscript.js" ; link.rel= "preload"; link.as= "script"; document.head.appendChild(link); That is, we store the file in the cache, and then, when necessary, we add it to the page.

So, this is only half of what Vitaly Friedman planned to share. The remaining pieces and lifehacks will be in deciphering his second performance at HolyJS 2017 Moscow, which we will also prepare and post in our blog.

And if you like the wrong side of JS just as we do, you will surely be interested in these reports at our May conference HolyJS 2018 Piter , the key ones of which we again wrote Vitaly Friedman's story:

- New Advenures In Front-End, Season 2 (Vitaly Friedman, Smashing Magazine)

- RxJS: Performance and memory leaks in a large application (Evgeny Pozdnyakov Exadel)

- Meinim crypt in the browser: WebWorkers, GPU, WebAssembly and other good things (Denis Radin, Evolution Gaming)

- JavaScript debugging using Chrome DevTools (Alexey Kozyatinsky, Google)

- What to expect from JavaScript in 2018? (Mikhail Poluboyarinov, Health Samurai)

Source: https://habr.com/ru/post/354890/

All Articles