The science of emotion: how smart technologies learn to understand people

Valentina Evtyukhina, author of the Digital Eva channel, and specialists from the project company and R&D laboratory Neurodata Lab prepared this article for the Netology blog on how emotion recognition technologies are developing.

The science of emotion became popular not so long ago, and mainly thanks to Paul Ekman, an American psychologist, author of the book Psychology of Lies and consultant to the popular TV show Lie to Me, which is based on the materials of the book.

Paul Ekman and Tim Roth - starring in the TV series "Lie to Me", whose character is written off from the very Ekman

')

The series started in 2009, and at the same time public interest in the topic of emotion recognition has grown significantly. The boom in the startup environment occurred in 2015–2016, when two technology giants — Microsoft and Google — were immediately available to ordinary users for their pilot projects for working with the science of emotions.

Service for the recognition of emotions Emotion Recognition, launched by Microsoft

in 2015

This was the impetus for the creation of a variety of applications and algorithms based on the technology of recognition of emotions. For example, Text Analytics API is one of the Microsoft Cognitive Services package services that allow developers to embed ready-made smart algorithms into their products. Among other services of the package: image recognition, face recognition, speech, and many others. Now, emotions can be identified by text, voice, photo and even video.

He is, but he is young, he still has everything ahead.

Thus, according to the influential agency MarketsandMarkets, the global volume of the emotions market in 2016 amounted to $ 6.72 billion, and it is assumed that by the mid-2020s. it will increase to $ 36.07 billion. The market of emotional technologies is not monopolized. There is a place for corporations, for laboratories, and for startups. Moreover, normal market practices: corporations integrate smaller companies into their solutions.

Emotional and behavioral technologies are in demand in various fields, including medical.

Turning to foreign experience, we recall how Empatica, under the leadership of Rosalind Picard, was the first in the world to obtain permission from US supervisors responsible for clinical trials (FDA clearing) a few weeks ago to use their Embrace wristband, which not only captures physiological data about the state of the owner, but also evaluates his emotional background and predicts the likelihood of the occurrence of difficult situations for the organism. It can help people with autism spectrum disorders, depression and in difficult cases in neurology and medicine.

The Israeli company Beyond Verbal together with the Mayo Clinic is looking for vocal biomarkers in a human voice, which determine not only emotions, but also lays the possibility of predicting coronary coronary diseases, Parkinson's and Alzheimer's diseases, which already bring emotional issues to the topic of gerontology and the search for ways to slow aging.

If we talk about the applicability of technology, then B2B sphere is mainly involved in sectors like intelligent transport, retail, advertising, HR, IoT, gaming.

But there is also a demand in B2C: EaaS (Emotion as a Service) or a cloud-based analytical solution (Human data analytics) will allow anyone to upload a video file and get all the emotional and behavioral statistics for each record fragment.

If we are talking about the election debate for the presidency (whether it be Russia or the United States), then hardly anything is going to disappear from the algorithm. Moreover, in a couple of years, the technology for recognizing emotions will be in every smartphone.

AI boom was predicted for 2025-2027.

The trend will be the creation of smart interfaces for recognizing human emotions - the software will allow you to determine the user's state at an arbitrary point in time using a regular webcam.

This is a promising niche, since the definition of human emotions can be used for commercial purposes: from analyzing the perception of video and audio content to investigating criminal cases.

On the other hand, these are endless possibilities of the entertainment industry. For example, the new iPhone X has Face ID, a face recognition technology that not only unlocks the phone, but also can create emoji with your facial expressions:

The bulk of new products in the field of emotional science is based on seven basic emotions and micro-expression of the face, which reflects our emotions at a level beyond the control of the brain. Consciously, we can hold back a smile, but the slight twitching of the corners of the lips will remain, and this will be a signal for emotion recognition technology.

There is also a block of technologies specializing in the analysis of speech, voice and gaze. The use of these methods in psychiatry or criminal proceedings will allow you to learn the maximum about the emotional state of a person and his true mood thanks to information about the smallest changes in facial expressions and body movements.

Now companies and individual teams can use open scientific data on the recognition of emotions and use them in a stack with technologies, forming the field of emotional computing (affective computing).

The FAANG top five ( Facebook , Apple , Amazon , Netflix , Google ) and technology giants like IBM made a huge contribution to the development of the market of emotional technologies.

There are no direct legal barriers to emotional technologies, and the industry itself is regulated rather weakly and precisely. There are expected barriers and concerns: first of all, it is a problem of privacy and personal data protection.

At the same time, universal digitalization, distribution of gadgets and devices of any kind, universal access to images and videos (several billion videos hit the network daily), publicity on social networks allows you to efficiently extract emotional data from the general stream and use them for analyzing a person - as a consumer of goods and services, and as a user. And all this should take place in the legal field, correctly and ethically.

New European regulations on personal data protection (GDPR) imply a number of limitations: the data for learning and training machine learning algorithms can be used freely if they are:

How the story will unfold with the regulatory framework in Russia, time will tell.

The health industry is actively introducing the most modern methods of collecting and analyzing data about patients or users, as computer algorithms determine symptoms using hundreds and thousands of similar cases.

There are already mobile applications that analyze the psycho-emotional state of the photo and text , and the more a person communicates with the program, the better she learns, “understands” it and gives accurate predictions of treatment.

It’s one thing when a device simply picks up, “understands” at its level your mood and according to it switches on music, adjusts light or makes coffee. The other is when it evaluates the degree of fatigue or determines any deviations from the norm according to your appearance. Or disease. For example, Alzheimer's or Parkinson’s.

Long before its manifestation, the disease begins to affect the muscles of the face, the speed of eye movement, the seemingly insensible changes in the voice and micromovements.

The series "Lie to Me" was released in 2009 and immediately gained worldwide popularity. The protagonist Dr. Lightman can read the truth on face micromimics. This is his “superpowers”, which helps to find the killer and uncover a network of convoluted crimes.

Neural interfaces can all be the same, only better, better and faster. You can rent a person in the interrogation room and then put a special program on the video that predicts the percentage of emotions on his face - anger, fear, bitterness, resentment, and so on. These data will help the investigation to understand at what point a person could cheat or under-commit something.

It is believed that the Internet does not convey emotions, but it is not. From a series of tweets or posts on Facebook, you can determine with high accuracy what kind of mood and state the user was at the time he wrote it.

The simplest example of determining the psycho-emotional state of the text's style is a well-known situation where a person begins to put an end to the end of a message, and his interlocutor perceives this as a signal that something went wrong in the conversation.

Globally, with machine learning, you can create a system that will track outbursts of anger, requests for help, or fear in messages and respond to them — for example, send a signal to rescue services.

Already, global retail networks integrate online into offline as much as possible, trying to find out what the buyer wants and what he is most likely to buy. When neural interfaces reach the level of accurate, highly sensitive emotion recognition, advertising in the storefront of a shopping center will adjust to the mood of people passing by in a fraction of seconds. Such technology is shown in science fiction films, for example, “Minority Report” and “Blade Runner 2049”.

Shot from the movie “Blade Runner 2049”, where holographic advertising

ginoid reacts to the emotions on the face of the protagonist.

About a year ago, in April 2017, a San Francisco research team taught the LSTM neural network to more accurately recognize the emotional component of the text. Now the machine almost correctly identifies the mood in the customer reviews on Amazon and movie reviews of Rotten Tomatoes, which helps to improve the service and predict the popularity of the product among users.

When the first model of Google Glass glasses came out, it was assumed that gesture control would reach a new level - in order to read the text on the inside of the lens, it was enough to hold your eyes from top to bottom so that the system would understand that you had already read this paragraph and you can show the following . Despite the fact that the gadget itself did not go beyond the scope of the prototype, the story of the study of eye movements has moved into a new field - the game.

It is very important for game developers to understand how and at what point the player feels, how special effects and game obstacles affect him. The company-developer of emotion recognition technology Affectiva helped create the game Nevermind, in which the complexity depends on the level of stress playing, and the plot adjusts to the state of stress or the calmness of the player.

After the beginning of 2016, the Envirtue Capital fund team came to the conclusion that in many aspects the existing venture capital market of Russia in terms of emotion recognition technology does not meet investors' expectations, it was decided to develop projects within its R & D laboratory, fully autonomous and funded from its own sources. Thus was born the company Neurodata Lab LLC.

“Since September 2016, our team began to form, including today both research workers - specialists in natural and cognitive sciences, and technical experts with competences and background in the field of computer vision, machine learning, data science. The interdisciplinary nature of the research of emotions predetermined our choice in favor of a mixed team, which allows us to think about solving problems from different points of view, to combine in one contour both a purely technical part, and the views and ideas of biology, psychophysiology and neurolinguistics. ”

George Pliev

Managing Partner of Neurodata Lab

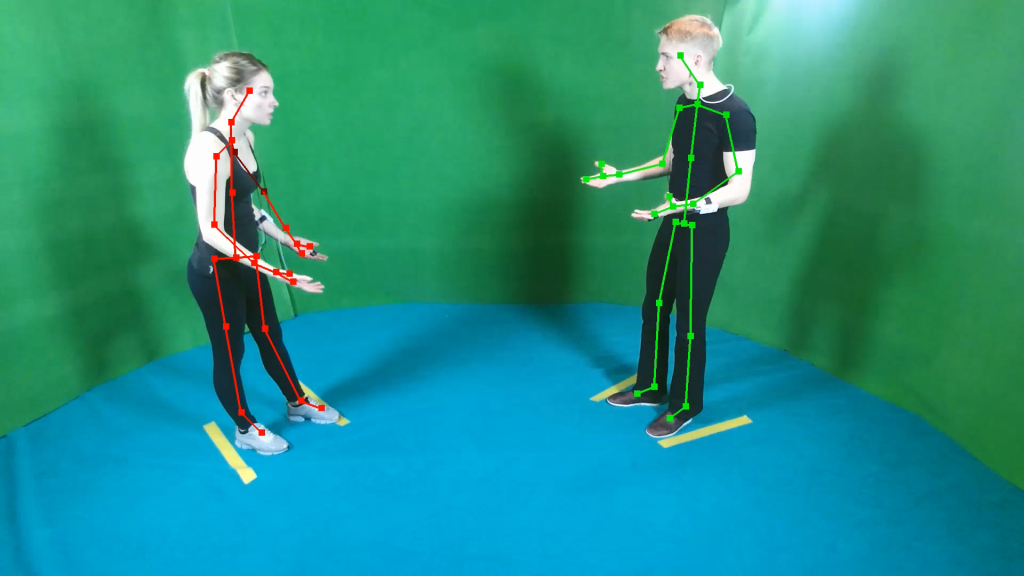

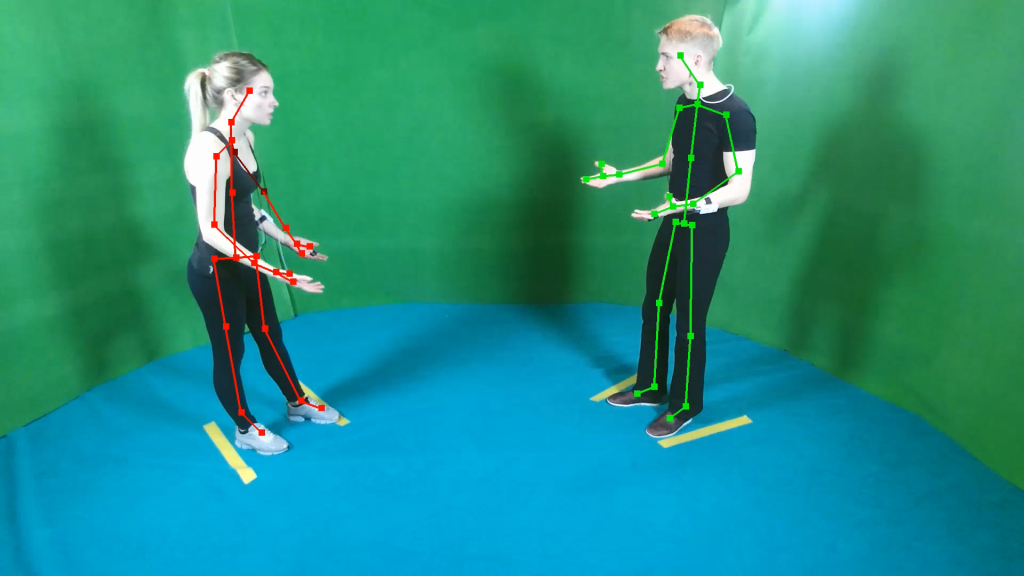

Neurodata Lab develops solutions that cover a wide range of areas in the field of emotion research and their recognition by audio and video, including technologies for voice separation, layer-by-layer analysis and voice identification of the speaker in the audio stream, integrated tracking of body and arm movements, as well as detection and recognition of key points and movements of the muscles of the face in the video stream in real time.

One of these projects is the development of a prototype of the EyeCatcher software that allows you to extract eye and head movement data from video files recorded on a regular camera. This technology opens up new horizons in the study of human eye movements in natural, not laboratory, conditions and significantly expands research capabilities - you can now find out how a person views pictures, reacts to sound, color, taste, what eye movement is when he is happy or surprised . This data will be used as a basis for creating a more advanced technology for recognizing human emotions.

“Our goal is to design a flexible platform and develop technologies that will be in demand by private and corporate clients from various industries, including niche ones. When detecting and recognizing emotions, it is important to take into account that human emotions are a very variable, “elusive” entity, which often changes from person to person, from society to society; There are ethnic, age, gender, socio-cultural differences. To identify patterns, you need to train the algorithms on very large samples of qualitative data. This is the phase on which the team of our laboratory is now focused. ”

George Pliev

Managing Partner of Neurodata Lab

One of the main difficulties that research groups encounter when studying emotions is the limited and “noisy” data to work with emotions in a natural setting or the need to use uncomfortable wearable instruments to track the emotional state of a participant in an experiment that distorts perception. Therefore, as one of its first projects, the Neurodala Lab team collected the Russian-language multimodal dataset RAMAS (The Russian Acted Multimodal Affective Set) - a comprehensive set of data about the emotions experienced, including parallel recording of 12 channels: audio, video, eytreker, wearable motion sensors, etc. about each of the situations of interpersonal interaction. Actors from VGIK who recreate various situations of everyday communication took part in the creation of the dataset. Today, access to the multi-modal RAMAS database is provided free of charge to academic institutions, universities and laboratories.

The presence of a broad database is one of the key factors of high-quality research work with emotions, but it is impossible to accumulate such a database in laboratory conditions and game simulations. To solve this well-known problem, Neurodata Lab specialists developed and launched their own Emotion Miner platform for collecting, marking, analyzing and processing emotional data, which collected more than 20,000 annotator markers, marking data from more than 30 countries. To date, Emotion Miner Data Corpus is one of the world's largest tagged multimodal emotional video data.

Since the establishment of the Neurodata Lab, laboratory staff have collaborated with academic institutions, universities, laboratories and specialized centers of competence in the United States, Europe and Russia, and actively participate in major international conferences, including Interspeech and ECEM, publish academic articles. The company participated in a summit on emotional artificial intelligence promoted jointly by MIT and Affectiva, and in March 2018 organized the first Russian conference “Emotion AI: New Challenges for Science and Education, New Business Opportunities” with NRU ITMO. The plans are to create the Russian Association for Emotion AI, to consolidate the community of scientific experts, laboratories and startups.

“When emotion recognition technology reaches maturity, it will have a significant impact on the entire ecosystem, on the whole technosphere, allowing people to better, deeper and more fully communicate with each other using gadgets and with the world of rapidly“ smarter machines ”with a human-computer interface. The technology carries the potential for the development of mutual understanding and empathy, will allow to solve the problems of people with disabilities (for example, with autism) and will find the keys to alleviate socially critical diseases. However, not only technology is important, but also how people use it. We fully share the ethical imperative and proceed from the fact that the system of checks and balances, including legislative ones, will not transform emotion recognition technology into total control technology. Her mission is to help a person without restricting his freedom, his rights, his personal space. Of course, separate aberrations are inevitable, but are eliminated. ”

George Pliev

Managing Partner of Neurodata Lab

Courses "Netology" on the topic:

The science of emotion became popular relatively recently, largely thanks to Paul Ekman, an American psychologist, author of the book Psychology of Lies, and consultant to the popular TV show Lie to Me, which is based on the book.

Paul Ekman and Tim Roth, the star of the TV series "Lie to Me", whose character is based on Ekman himself

The series premiered in 2009, and public interest in emotion recognition grew significantly at the same time. The startup boom came in 2015–2016, when two technology giants, Microsoft and Google, opened their pilot emotion-science projects to ordinary users.

Emotion Recognition, the emotion recognition service launched by Microsoft in 2015

This spurred the creation of a variety of applications and algorithms based on emotion recognition technology. For example, the Text Analytics API is one of the services in the Microsoft Cognitive Services package, which lets developers embed ready-made smart algorithms into their products. Other services in the package include image recognition, face recognition, speech recognition, and many others. Emotions can now be identified from text, voice, photos, and even video.

Gartner claims that by 2021-2022 our smartphones will know us better than our friends and relatives do and will interact with us on a subtle emotional level.

The market for emotion recognition technology: how is it doing?

It exists, but it is young, and everything is still ahead of it.

The emotion detection market is now booming: according to Western experts, by 2021 it will reach, by various estimates, between $19 billion and $37 billion.

Thus, according to the influential agency MarketsandMarkets, the global emotion technology market amounted to $6.72 billion in 2016 and is expected to grow to $36.07 billion by the mid-2020s. The market is not monopolized: there is room for corporations, laboratories, and startups. It also follows normal market practice, with corporations integrating smaller companies into their solutions.
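To get a feel for what these two figures imply, the growth can be expressed as a compound annual rate. The sketch below is only illustrative arithmetic: the source gives no exact forecast year, so the end years tried here (2024 to 2026, as a reading of "mid-2020s") are an assumption, and the function name `cagr` is our own.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two market sizes."""
    if years <= 0 or start_value <= 0:
        raise ValueError("years and start_value must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in billions of US dollars.
MARKET_2016 = 6.72
MARKET_FORECAST = 36.07

# Assumption: "mid-2020s" is read as one of these years.
for end_year in (2024, 2025, 2026):
    rate = cagr(MARKET_2016, MARKET_FORECAST, end_year - 2016)
    print(f"{end_year}: {rate:.1%} per year")
```

The later the assumed end year, the lower the implied annual rate, so the result should be read as a range rather than a single number.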

Emotional and behavioral technologies are in demand in various fields, including medicine.

Turning to experience abroad: a few weeks ago Empatica, led by Rosalind Picard, became the first in the world to receive clearance from the US regulator responsible for clinical trials (FDA clearance) for its Embrace wristband, which not only captures physiological data about the wearer's condition but also evaluates their emotional state and predicts the likelihood of situations that are dangerous for the body. It can help people with autism spectrum disorders and depression, as well as in complex neurological and other medical cases.

The Israeli company Beyond Verbal, together with the Mayo Clinic, is searching for vocal biomarkers in the human voice that not only indicate emotions but also open up the possibility of predicting coronary disease, Parkinson's, and Alzheimer's, which brings emotion research into gerontology and the search for ways to slow aging.

As for applicability, the B2B segment is mainly active in sectors such as intelligent transport, retail, advertising, HR, IoT, and gaming.

But there is also demand in B2C: EaaS (Emotion as a Service), a cloud-based analytical solution (human data analytics), will allow anyone to upload a video file and get full emotional and behavioral statistics for each fragment of the recording.

In a presidential election debate, for example (whether in Russia or the United States), hardly anything will escape the algorithm. Moreover, in a couple of years emotion recognition technology will be in every smartphone.

Technology and science stack

An AI boom is predicted for 2025-2027.

The trend will be smart interfaces for recognizing human emotions: software that determines the user's state at any moment using an ordinary webcam.

This is a promising niche, since detecting human emotions can be used commercially, from analyzing how video and audio content is perceived to investigating criminal cases.

On the other hand, it opens endless possibilities for the entertainment industry. For example, the new iPhone X has Face ID, a face recognition technology that not only unlocks the phone but can also create emoji with your facial expressions.

Most new products in the field of emotion science are based on the seven basic emotions and on facial micro-expressions, which reflect our emotions at a level beyond conscious control. We can consciously hold back a smile, but a slight twitch at the corners of the lips remains, and that is a signal for emotion recognition technology.

There is also a group of technologies specializing in the analysis of speech, voice, and gaze. Used in psychiatry or criminal proceedings, these methods reveal as much as possible about a person's emotional state and true mood from the smallest changes in facial expression and body movement.

Companies and individual teams can now use open scientific data on emotion recognition and combine it with their own technology stack, shaping the field of affective computing (emotional computing).

The FAANG five (Facebook, Apple, Amazon, Netflix, Google) and technology giants such as IBM have made a huge contribution to the development of the emotion technology market.

Emotion Recognition and Law

There are no direct legal barriers to emotion technologies, and the industry itself is regulated rather weakly and only in isolated areas. There are, however, expected barriers and concerns, first of all privacy and personal data protection.

Emotions are private, quite personal data about a person: their states, sensations, responses to stimuli, people, and the environment, and their thoughts and intentions, sometimes not fully realized rationally.

At the same time, universal digitalization, the spread of gadgets and devices of every kind, universal access to images and videos (several billion videos hit the network daily), and publicity on social networks make it possible to efficiently extract emotional data from the general stream and use it to analyze a person, both as a consumer of goods and services and as a user. All of this should happen within the law, correctly and ethically.

The new European regulation on personal data protection (GDPR) implies a number of limitations. Data can be used freely for training machine learning algorithms if:

- it remains depersonalized, that is, biosensor data is separated from biometrics (identification of people);

- the group format is respected (analysis of a crowd, of many people, rather than of individual subjects);

- when a photograph is taken, the person is aware of it and consents to it; otherwise it is a violation of the regulation and entails liability.

How the story will unfold with the regulatory framework in Russia, time will tell.

Where emotion recognition will be needed in the coming years

Health and Health tech

The health industry is actively adopting the most modern methods of collecting and analyzing data about patients or users, as computer algorithms identify symptoms by drawing on hundreds and thousands of similar cases.

There are already mobile applications that analyze a person's psycho-emotional state from photos and text, and the more a person communicates with the program, the better it learns, “understands” them, and gives accurate treatment predictions.

It is one thing when a device simply picks up and “understands”, at its own level, your mood and accordingly switches on music, adjusts the light, or makes coffee. It is another when it evaluates your degree of fatigue or detects deviations from the norm, or a disease such as Alzheimer's or Parkinson's, from your appearance.

Long before it manifests, the disease begins to affect the facial muscles, the speed of eye movement, micromovements, and, in seemingly imperceptible ways, the voice.

Forensics

The series "Lie to Me" was released in 2009 and immediately gained worldwide popularity. The protagonist, Dr. Lightman, can read the truth from facial micro-expressions. This is his “superpower”, which helps him find killers and unravel tangled networks of crimes.

Neural interfaces can do the same, only better and faster. You can film a person in the interrogation room and then run a special program over the video that estimates the percentage of each emotion on their face: anger, fear, bitterness, resentment, and so on. This data helps investigators understand at what point a person may have lied or left something unsaid.
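How such a "percentage of emotions" might be produced can be sketched with a softmax, a common way to turn a classifier's raw per-class scores into shares that sum to 100%. This is a minimal illustration, not the method of any product named in this article: the function `to_percentages` and the example scores are hypothetical.

```python
import math


def to_percentages(scores: dict[str, float]) -> dict[str, float]:
    """Convert raw per-emotion scores into percentages via softmax."""
    peak = max(scores.values())  # subtract the maximum for numerical stability
    exps = {name: math.exp(value - peak) for name, value in scores.items()}
    total = sum(exps.values())
    return {name: 100 * value / total for name, value in exps.items()}


# Hypothetical raw scores a face model might emit for one video frame.
frame_scores = {"anger": 2.0, "fear": 0.5, "bitterness": 0.1, "resentment": -1.0}
shares = to_percentages(frame_scores)

for emotion, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{emotion}: {share:.1f}%")
```

Running this per frame and plotting the shares over time would give the kind of timeline an investigator could scan for sudden changes.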

Monitoring social activities

It is commonly believed that the Internet does not convey emotions, but this is not so. From a series of tweets or Facebook posts, you can determine with high accuracy what mood and state the user was in when writing them.

The simplest example of reading a psycho-emotional state from writing style is the well-known situation where a person starts putting a period at the end of their messages, and the other person takes it as a signal that something has gone wrong in the conversation.

More broadly, machine learning makes it possible to build a system that tracks outbursts of anger, requests for help, or fear in messages and responds to them, for example by sending a signal to rescue services.
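The shape of such a monitoring system can be shown with a deliberately simple stand-in. A real system would use a trained model; the sketch below swaps in a tiny hand-written keyword lexicon (`SIGNALS`, entirely hypothetical) just to show the flow from message to detected signal to alert decision.

```python
import re

# Hypothetical cue words per signal; a trained classifier would replace this.
SIGNALS = {
    "anger": {"hate", "furious", "angry"},
    "help": {"help", "sos", "emergency"},
    "fear": {"afraid", "scared", "terrified"},
}


def detect_signals(message: str) -> set[str]:
    """Return the set of signals whose cue words occur in the message."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    return {label for label, cues in SIGNALS.items() if words & cues}


def should_alert(message: str) -> bool:
    """Assumed policy: alert only on requests for help or expressions of fear."""
    return bool(detect_signals(message) & {"help", "fear"})


for text in ("Please HELP, I am scared!", "I am so angry", "See you tomorrow"):
    print(text, "->", sorted(detect_signals(text)), "alert:", should_alert(text))
```

The alert policy is a separate function on purpose: what counts as worth escalating is a product and ethics decision, not a property of the detector.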

Advertising

Global retail chains are already integrating online with offline as much as possible, trying to find out what the buyer wants and is most likely to buy. When neural interfaces reach the level of accurate, highly sensitive emotion recognition, advertising in a shopping center storefront will adapt to the mood of passers-by in a fraction of a second. Such technology is shown in science fiction films such as “Minority Report” and “Blade Runner 2049”.

A still from “Blade Runner 2049”, in which a holographic advertising gynoid reacts to the emotions on the protagonist's face.

About a year ago, in April 2017, a research team in San Francisco trained an LSTM neural network to recognize the emotional component of text more accurately. The machine now identifies the sentiment of Amazon customer reviews and Rotten Tomatoes movie reviews almost without error, which helps improve service and predict a product's popularity among users.

Game industry

When the first model of Google Glass came out, gesture control was expected to reach a new level: to read text on the inside of the lens, it was enough to move your eyes from top to bottom, and the system would understand that you had finished the paragraph and could show the next one. Although the gadget itself never got past the prototype stage, the study of eye movements moved into a new field: gaming.

It is very important for game developers to understand what the player feels and when, and how special effects and in-game obstacles affect them. Affectiva, a developer of emotion recognition technology, helped create the game Nevermind, in which the difficulty depends on the player's stress level and the plot adapts to whether the player is stressed or calm.
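The idea of difficulty that follows the player's stress can be sketched as a simple feedback rule. This is not how Nevermind is implemented (the source does not say); it is a minimal illustration that assumes stress arrives as a number between 0 and 1 and that difficulty drifts toward it by a tunable `gain`.

```python
def clamp(value: float, low: float = 0.0, high: float = 1.0) -> float:
    """Keep a value inside the [low, high] range."""
    return max(low, min(high, value))


def next_difficulty(current: float, stress: float, gain: float = 0.5) -> float:
    """Move difficulty part of the way toward the measured stress level.

    Assumption: both values are normalized to [0, 1]; a stressed player
    makes the game harder, a calm player makes it easier.
    """
    stress = clamp(stress)
    return clamp(current + gain * (stress - current))


difficulty = 0.2
for stress in (0.8, 0.9, 0.3):  # hypothetical readings from an emotion sensor
    difficulty = next_difficulty(difficulty, stress)
    print(f"stress={stress:.1f} -> difficulty={difficulty:.2f}")
```

A smaller `gain` would make the game react more slowly, which smooths out noisy sensor readings at the cost of responsiveness.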

And what about Russia? Neurodata Lab Experience

In early 2016, the team of the Envirtue Capital fund concluded that, in many respects, Russia's existing venture capital market for emotion recognition technology did not meet investors' expectations, and decided to develop projects within its own R&D laboratory, fully autonomous and funded from its own sources. Thus the company Neurodata Lab LLC was born.

“Our team began to form in September 2016 and today includes both researchers, specialists in the natural and cognitive sciences, and technical experts with competence and background in computer vision, machine learning, and data science. The interdisciplinary nature of emotion research determined our choice of a mixed team, which lets us approach problems from different points of view and combine, in a single framework, both the purely technical side and the views and ideas of biology, psychophysiology, and neurolinguistics.”

George Pliev

Managing Partner of Neurodata Lab

Neurodata Lab develops solutions covering a wide range of areas in emotion research and recognition from audio and video, including technologies for voice separation, layer-by-layer analysis and speaker identification in the audio stream, integrated tracking of body and hand movements, and real-time detection and recognition of facial key points and facial muscle movements in the video stream.

One of these projects is a prototype of the EyeCatcher software, which extracts eye and head movement data from video files recorded on an ordinary camera. This technology opens new horizons in the study of human eye movements in natural rather than laboratory conditions and significantly expands research capabilities: it is now possible to find out how a person looks at pictures, reacts to sound, color, and taste, and how their eyes move when they are happy or surprised. This data will serve as a basis for more advanced emotion recognition technology.

“Our goal is to design a flexible platform and develop technologies that will be in demand among private and corporate clients from various industries, including niche ones. When detecting and recognizing emotions, it is important to take into account that human emotions are a highly variable, “elusive” entity that often differs from person to person and from society to society; there are ethnic, age, gender, and socio-cultural differences. To identify patterns, algorithms must be trained on very large samples of high-quality data. This is the phase our laboratory's team is now focused on.”

George Pliev

Managing Partner of Neurodata Lab

One of the main difficulties research groups face when studying emotions is the limited and “noisy” data available on emotions in natural settings, or the need to use uncomfortable wearable instruments to track a participant's emotional state, which distorts perception. Therefore, as one of its first projects, the Neurodata Lab team collected the Russian-language multimodal dataset RAMAS (the Russian Acted Multimodal Affective Set), a comprehensive set of data on experienced emotions that includes parallel recording of 12 channels (audio, video, eye tracker, wearable motion sensors, and others) for each situation of interpersonal interaction. Actors from VGIK took part in creating the dataset, recreating various situations of everyday communication. Today, access to the multimodal RAMAS database is provided free of charge to academic institutions, universities, and laboratories.

A broad database is one of the key factors in high-quality emotion research, but such a database cannot be accumulated in laboratory conditions or game simulations. To solve this well-known problem, Neurodata Lab specialists developed and launched their own platform, Emotion Miner, for collecting, labeling, analyzing, and processing emotional data; it has brought together more than 20,000 annotators from more than 30 countries to label the data. To date, the Emotion Miner Data Corpus is one of the world's largest labeled multimodal emotional video datasets.
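When many annotators label the same video fragment, their answers have to be merged into one label. The source does not describe how Emotion Miner does this; the sketch below shows one common and simple approach, majority voting with an agreement threshold, using a hypothetical function `aggregate_labels`.

```python
from collections import Counter
from typing import Optional


def aggregate_labels(labels: list[str], min_agreement: float = 0.5) -> Optional[str]:
    """Return the majority label, or None if agreement is too low.

    A label wins only if strictly more than `min_agreement` of the
    annotators chose it; otherwise the fragment is left unlabeled.
    """
    if not labels:
        return None
    label, votes = Counter(labels).most_common(1)[0]
    return label if votes / len(labels) > min_agreement else None


# Hypothetical annotations for three video fragments.
print(aggregate_labels(["joy", "joy", "anger"]))      # clear majority
print(aggregate_labels(["joy", "anger"]))             # tie, no majority
print(aggregate_labels(["fear", "fear", "fear"]))     # unanimous
```

Fragments that come back as `None` are the interesting ones for emotion research: disagreement between annotators often reflects the cultural and individual variability of emotional expression rather than labeling error.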

Since Neurodata Lab was founded, its staff have collaborated with academic institutions, universities, laboratories, and specialized centers of competence in the United States, Europe, and Russia; they actively participate in major international conferences, including Interspeech and ECEM, and publish academic articles. The company took part in a summit on emotional artificial intelligence organized jointly by MIT and Affectiva, and in March 2018 it organized, together with NRU ITMO, the first Russian conference, “Emotion AI: New Challenges for Science and Education, New Business Opportunities”. Plans include creating a Russian Association for Emotion AI to consolidate the community of scientific experts, laboratories, and startups.

“When emotion recognition technology reaches maturity, it will have a significant impact on the entire ecosystem and the whole technosphere, allowing people to communicate better, more deeply, and more fully with each other through gadgets and, via human-computer interfaces, with the world of rapidly “smartening” machines. The technology carries the potential to foster mutual understanding and empathy, will help solve the problems of people with disabilities (for example, autism), and will help find the keys to alleviating socially critical diseases. However, it is not only the technology that matters but also how people use it. We fully share the ethical imperative and proceed from the premise that a system of checks and balances, including legislative ones, will keep emotion recognition technology from turning into a technology of total control. Its mission is to help people without restricting their freedom, their rights, or their personal space. Of course, individual aberrations are inevitable, but they can be eliminated.”

George Pliev

Managing Partner of Neurodata Lab

From the Editor

Netology courses on the topic:

- full-time course "Data Scientist", Moscow;

- full-time course "Analytics for Managers", Moscow;

- online program "Big Data: Basics of working with large data arrays";

- online profession "Python-developer".

Source: https://habr.com/ru/post/354828/