Protection against lightweight DDoS attacks

An advertising article recently appeared on Habr about fighting application-level DDoS attacks. I had a similar experience searching for the optimal algorithm for countering such attacks, and it may be useful to someone. When a person faces a DDoS attack on their site for the first time, it comes as a shock, so it helps to know in advance that things are not so terrible.

DDoS - distributed attack to failure - roughly speaking, there are several types. Network level DDoS - IP-TCP-HTTP, application-level DDoS - when the request flow severely reduces server performance or makes it impossible to work, and I would add a hoster-level DDoS - when the site is working, but the server load exceeds the quota set by the hoster, As a result, the site owner also has problems.

If you are at the network level, then you can be congratulated that your business has risen to such heights, and you yourself probably know what to do in this case. We will look at two other types of ddos.

Any CMS can be filled, decorated and tuned so that even one request per second will give an unacceptable load even on the Hezner VDS. Therefore, in the general case, the task is to filter out, if possible, all unnecessary requests to the site. At the same time, a person should be guaranteed to get on the site and not be inconvenienced by the presence of DDoS protection.

')

Bots are of several types. Useful (necessary search), useless (unnecessary search and spiders) and harmful (those that harm). The first two types are respectable bots that tell the truth about themselves in their User-Agent. Useless bots are filtered out in .htaccess, useful ones are passed to the site, and we’ll catch harmful ones. The case when a harmful bot is represented by a Yandex bot, for example, we omit it for simplicity (there is also a solution for it - Yandex by ip gives you the opportunity to find out if they are a bot or not, and you can immediately ban them).

By not allowing harmful bots to the backend, we obtain the necessary load reduction on the server.

Harmful bots can be divided into two types: smart (which understand cookie and javascript) and stupid (which do not understand). There is an opinion that DDoS bots who understand javascript do not exist at all, but this is for serious network DDoS attacks. Under our conditions, even an overly active anonymous spider formally becomes a DDo-bot, which must be neutralized.

For now let's do some stupid bots.

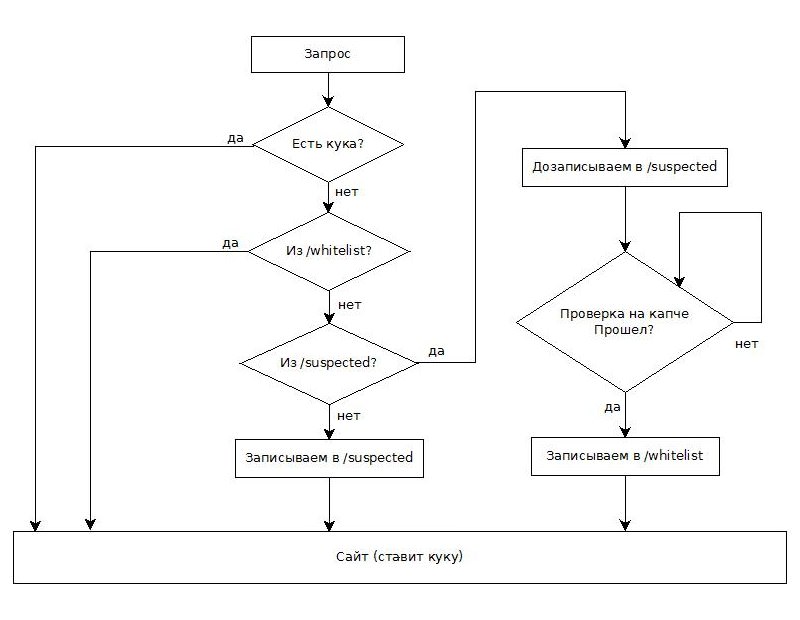

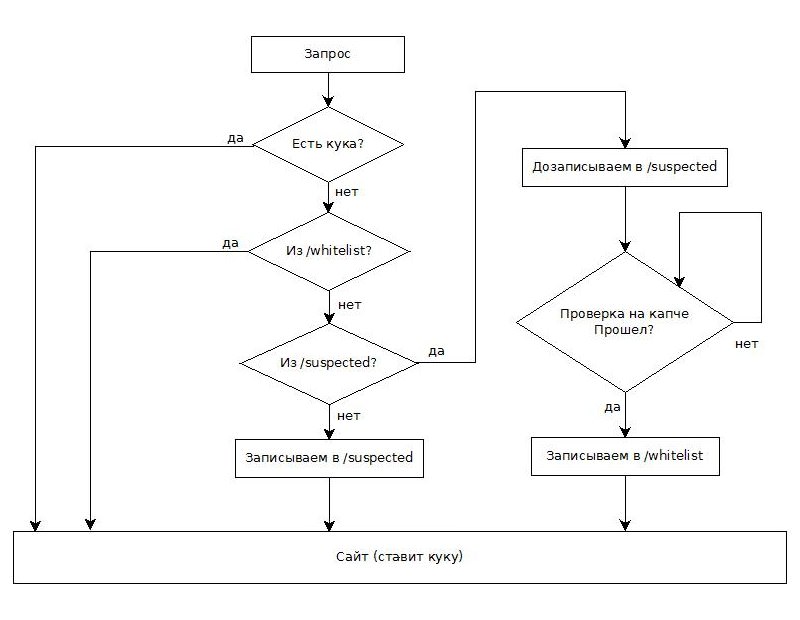

The protection code will not be given, it is simple - a few dozen lines in php; much shorter and simpler than this article. We describe the logic. We will write a cookie to the client (the cookie check method is used even when protecting against powerful DDoS attacks ). With any name and content, you can use a cookie already installed by the site.

For simplicity, we believe that there is a single entry point to the site, we embed our ddos-shield there. Immediately check our cookie request: if it is, we definitely skip to the site. If not, then write a pair of ip and user-agent as a separate file in a separate directory / suspected. The file is named ip-ua.txt, where ua is dechex (crc32 ($ _ SERVER ["HTTP_USER_AGENT"])) is just a short user-agent hash.

In the file itself, we write by separating the line with the request time, the request page (url), User-Agent, and you can also use Sypex Geo or register at maxmind.com and have free access to their geoip base for five days - by ip they give out their geographical location , its also in this file.

If a file with the same name ip-ua.txt already exists, then add all this information to the new request at the end of the file.

One more thing - AJAX requests from our site. If they are, then they, too, must be unconditionally skipped, identifying by their labels. The likelihood that the bots will also be beaten on them is minimal.

Now the missed step - before we write or append ip-ua.txt, we check that the request from this ip has already arrived, and we do not pay attention to the User-Agent:

The point is that we give each ip one chance to get cookies. If it comes a second time without it, then this inequality works, and we redirect the client to a separate check-human.php page, where he will take a Turing test with showcases and vehicles using Google Recaptcha. If it didn’t work out - bye (again, re-captcha), if it’s done - we create the file ip-ua.txt in another special directory / whitelist. And at the very beginning, along with checking the cookies, we check for the hit of the ip-ua pair to our / whitelist - these, too, are definitely missing. With this tactic, we can give the opportunity to work on the site to those people who have cookies disabled in the browser and javascript is enabled, or vice versa - javascript is disabled but cookies work.

In principle, that's all. Stupid bots filtered, now smart. For smart and the approach is already intellectual - open the directory / suspected, sort the files by size. Above the biggest - tens and hundreds of kilobytes of persistent attempts to get through to us. Open and look, make sure that this is really a bot - by ip, by location, by request time, by request periods, by request pages, by user agent changes - this is usually clearly seen, everything is before your eyes. In principle, you can select all files with, for example, 10+ unsuccessful attempts, and send their ip to the ban via .htaccess. But this is not good, it is better to send them to the captcha - after all, it happens that several people go online through one ip.

This is a very good solution to the issue of smart bots. Why? Because if you were ordered, then, most likely, to one ddaser. And they, too, are smart and stupid. And stupid people usually use one botnet for their smart and stupid bots, with some ip. Thus, you will block those bots that accept cookies and execute javascript. In addition, smart bots are much smaller, they cost more and work slower, so this method is effective for dealing with the absolute majority of the attacks under consideration.

According to my observations, according to this scheme, about 1% -2% of the real users of the site had to go through the captcha, the rest did not notice anything at all - which is quite user friendly. In addition, even those who come for the first time do not see any “stubs” (as opposed to using the link at the beginning of the post), but calmly work with the site.

Certain inconveniences are possible in people using a special browser-based anonymization software - dynamically changing ip and the user-agent of the client, erasing cookies, but we do not consider this option.

In my case, the server load dropped immediately. The bots have been dug for some time; a couple of days later, I noticed that they were gone - ddosers also do not like to waste resources on idle. Removed protection.

You can vary the protection logic - add a check for javascript (it will also put a cookie, for example); You can monitor those who were redirected to the captcha and did not pass it in order to prevent the case of "bad" behavior towards a person; you can make personalized cookies, keep track of the number of customer visits and, if you exceed the limit, send it to the captcha as well; can implement a system of tokens; bots can be launched along the redirect chain with temporary delays to slow them down; ip bots can be analyzed and banned with whole grids, like Roskomnadzor - but this is as needed. By law 20-80, the simplest necessary solutions decide simply everything that is needed.

The main thing is to quickly isolating and banning obviously malicious ip from / suspected, you immediately significantly reduce the server load and get time to prepare further actions to repel the attack.

This is the simple way to punish unscrupulous competitors for money.

Disclaimer: this article is written only for lightweight application-level DDoS attacks, mainly for sites on shared hosting, the limit of which resources are available.

DDoS, a distributed denial-of-service attack, comes in several types, roughly speaking. There is network-level DDoS (IP, TCP, HTTP); application-level DDoS, where the flow of requests severely degrades server performance or makes the site unusable; and I would add hoster-level DDoS, where the site keeps working but the server load exceeds the quota set by the hosting provider, so the site owner ends up with problems as well.

If you are being attacked at the network level, congratulations: your business has risen to such heights that you probably already know what to do. We will look at the other two types of DDoS.

Bots

Any CMS can be filled, decorated and tuned to the point where even one request per second produces an unacceptable load, even on a Hetzner VDS. So in the general case the task is to filter out as many unnecessary requests to the site as possible. At the same time, a human visitor must be guaranteed to reach the site and should not be inconvenienced by the DDoS protection.

Bots come in several types: useful (search engines you need), useless (search engines and spiders you do not need) and harmful (those that do damage). The first two types are respectable bots that tell the truth about themselves in their User-Agent. Useless bots are filtered out in .htaccess, useful ones are let through to the site, and the harmful ones are what we will catch. For simplicity we leave out the case where a harmful bot presents itself as, say, the Yandex bot (there is a solution for that too: Yandex lets you check by IP whether a visitor really is its bot, so impostors can be banned immediately).
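The Yandex check mentioned in parentheses is usually done with a reverse DNS lookup followed by a forward lookup. Here is a minimal sketch of that idea, not code from the original setup. It assumes that genuine Yandex crawler hosts end in yandex.ru, yandex.net or yandex.com; the function name isGenuineYandexBot and the two injectable resolver parameters are hypothetical, added so the logic can be tried without real DNS.

```php
<?php
/**
 * Sketch: verify that an IP claiming to be a Yandex bot really belongs to Yandex.
 * Assumption: genuine crawler hosts end in yandex.ru, yandex.net or yandex.com.
 * $reverse and $forward are hypothetical hooks; by default they use real DNS.
 */
function isGenuineYandexBot(string $ip, ?callable $reverse = null, ?callable $forward = null): bool
{
    $reverse = $reverse ?? 'gethostbyaddr';   // IP -> host name
    $forward = $forward ?? 'gethostbynamel';  // host name -> list of IPv4 addresses

    $host = $reverse($ip);
    // gethostbyaddr() returns false on malformed input and the IP itself on failure.
    if (!is_string($host) || $host === $ip) {
        return false;
    }
    if (!preg_match('/\.yandex\.(ru|net|com)$/i', $host)) {
        return false;
    }
    // The forward lookup must lead back to the same IP; otherwise the PTR record is forged.
    $ips = $forward($host);
    return is_array($ips) && in_array($ip, $ips, true);
}
```

With the default resolvers each call costs two DNS queries, so it probably makes sense to run it only for requests whose User-Agent claims to be Yandex and to cache the result per IP.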

By keeping harmful bots away from the backend, we get the load reduction we need.

Harmful bots can be divided into two types: smart ones (which understand cookies and JavaScript) and stupid ones (which do not). There is an opinion that DDoS bots that understand JavaScript do not exist at all, but that applies to serious network-level DDoS attacks. In our conditions, even an overly active anonymous spider formally becomes a DDoS bot that must be neutralized.

For now, let's deal with the stupid bots.

Protection

I will not give the protection code here: it is simple, a few dozen lines of PHP, much shorter and simpler than this article. Let's describe the logic instead. We will set a cookie on the client (the cookie check is used even in protection against powerful DDoS attacks). The cookie can have any name and content; you can even use a cookie the site already sets.

For simplicity, assume the site has a single entry point; that is where we embed our DDoS shield. First we check the request for our cookie: if it is present, we let the request through to the site unconditionally. If not, we record the IP and User-Agent pair as a separate file in a separate directory, /suspected. The file is named ip-ua.txt, where ua is dechex(crc32($_SERVER["HTTP_USER_AGENT"])), simply a short hash of the User-Agent.
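The naming scheme can be sketched as a small helper. The dechex(crc32(...)) part is the hash named above; the function name suspectFileName and the character filter applied to the IP are my own assumptions, added so that the value is safe to use in a path.

```php
<?php
/**
 * Sketch: build the ip-ua.txt file name.
 * The helper name and the character filter are assumptions for illustration.
 */
function suspectFileName(string $ip, string $userAgent): string
{
    // Keep only characters that can occur in IPv4/IPv6 addresses, so the
    // value is safe to use in a file name and in a glob() pattern.
    $safeIp = preg_replace('/[^0-9a-fA-F.:]/', '', $ip);
    // Short User-Agent hash: dechex(crc32(...)).
    $uaHash = dechex(crc32($userAgent));
    return $safeIp . '-' . $uaHash . '.txt';
}
```

At the entry point this would be called as suspectFileName($_SERVER['REMOTE_ADDR'], $_SERVER['HTTP_USER_AGENT'] ?? ''); the fallback to an empty string covers bots that send no User-Agent at all.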

In the file itself we write one line per request with the request time, the requested page (URL) and the User-Agent. You can also use Sypex Geo, or register at maxmind.com and get free access to their GeoIP database for five days: both return a geographical location for an IP, which goes into the file as well.

If a file named ip-ua.txt already exists, we append the information about the new request to the end of the file.
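Writing and appending can be handled by one call. This is a sketch under my own assumptions: the tab-separated layout, the function name appendSuspectRecord and the optional $geo field are illustrative, and the geolocation lookup itself is left out.

```php
<?php
/**
 * Sketch: append one request record to a suspect file, creating it if needed.
 * The tab-separated layout and the function name are assumptions.
 */
function appendSuspectRecord(string $file, string $url, string $userAgent, string $geo = ''): bool
{
    // Replace control characters (including tabs and newlines) so that
    // one request always stays on one line with a fixed number of fields.
    $clean = function (string $s): string {
        return preg_replace('/[\x00-\x1F\x7F]+/', ' ', $s);
    };
    $line = implode("\t", [date('Y-m-d H:i:s'), $clean($url), $clean($userAgent), $clean($geo)]) . "\n";
    // FILE_APPEND adds to the end of an existing file; LOCK_EX keeps
    // concurrent requests from interleaving their writes.
    return file_put_contents($file, $line, FILE_APPEND | LOCK_EX) !== false;
}
```

Keeping one line per request means the file size and the line count both grow with the number of attempts, which is what the later manual review relies on.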

One more thing: AJAX requests from our own site. If the site makes them, they must also be let through unconditionally, identified by their own markers. The likelihood that the bots will hammer these as well is minimal.
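What counts as a marker depends on the site. As one possible sketch, assume the front end sends the X-Requested-With: XMLHttpRequest header, as jQuery does by default; the function name isOwnAjaxRequest is hypothetical, and a site may well use its own header or parameter instead.

```php
<?php
/**
 * Sketch: recognise the site's own AJAX requests.
 * Assumption: the front end sends "X-Requested-With: XMLHttpRequest";
 * a site may use a different marker of its own.
 */
function isOwnAjaxRequest(array $server): bool
{
    // PHP exposes request headers in $_SERVER as HTTP_* keys.
    $marker = $server['HTTP_X_REQUESTED_WITH'] ?? '';
    return strcasecmp($marker, 'XMLHttpRequest') === 0;
}
```

It would be called as isOwnAjaxRequest($_SERVER). A bot can forge this header, of course; the scheme simply bets that lightweight bots do not bother.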

Now the step we skipped: before we write or append to ip-ua.txt, we check whether a request from this IP has already arrived, ignoring the User-Agent:

    count(glob(__DIR__ . "/suspected/$ip-*.txt")) > 0

The point is that we give each IP one chance to get the cookie. If it comes a second time without it, this condition is true, and we redirect the client to a separate check-human.php page, where they take a Turing test with storefronts and vehicles using Google reCAPTCHA. If they fail, goodbye (that is, the reCAPTCHA again); if they pass, we create the file ip-ua.txt in another special directory, /whitelist. At the very start, along with the cookie check, we also check whether the ip-ua pair is in /whitelist; those requests are let through unconditionally too. With this tactic, people who have cookies disabled but JavaScript enabled, or the other way round, can still use the site.
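The order of the checks can be put together as a single decision function. This is a sketch of the logic as described, not the original code: the name shieldDecision, its parameters and the 'pass'/'captcha' return values are hypothetical, and the cookie and AJAX checks are passed in as booleans so the function only deals with the two directories.

```php
<?php
/**
 * Sketch of the decision order. All names are hypothetical.
 * Returns 'pass' (let the request through) or 'captcha' (redirect to check-human.php).
 * $uaHash is the short User-Agent hash, dechex(crc32(...)).
 */
function shieldDecision(string $ip, string $uaHash, bool $hasCookie, bool $isAjax, string $baseDir): string
{
    // 1. Our cookie is present, or this ip-ua pair has already passed the captcha.
    if ($hasCookie || is_file("{$baseDir}/whitelist/{$ip}-{$uaHash}.txt")) {
        return 'pass';
    }
    // 2. The site's own AJAX requests are let through unconditionally.
    if ($isAjax) {
        return 'pass';
    }
    // 3. Has this IP, with any User-Agent, been seen before without a cookie?
    //    glob() may return false on error, hence the fallback to an empty array.
    if (count(glob("{$baseDir}/suspected/{$ip}-*.txt") ?: []) > 0) {
        return 'captcha';
    }
    // 4. First visit without a cookie: one chance. The caller records the
    //    request in /suspected and sets the cookie.
    return 'pass';
}
```

On 'captcha' the caller would send a Location: /check-human.php header and exit. Whether that request is also appended to the log is a design choice; appending it is what lets persistent clients accumulate large files.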

Botnet

In principle, that is all. The stupid bots are filtered out; now for the smart ones. For smart bots the approach is an intellectual one as well: open the /suspected directory and sort the files by size. At the top are the biggest ones, tens and hundreds of kilobytes of persistent attempts to get through to us. Open them and make sure it really is a bot: by IP, location, request times, request intervals, requested pages and User-Agent changes. It is usually obvious; everything is right before your eyes. In principle, you can select all files with, say, 10+ unsuccessful attempts and ban their IPs via .htaccess. But that is not ideal; it is better to send them to the captcha, since several people can be online through one IP.
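The manual review can be helped along by a short listing script. This sketch assumes one line per logged request in each file; the function name rankSuspects, the returned array shape and the default threshold of 10 (taken from the "10+ attempts" example) are illustrative.

```php
<?php
/**
 * Sketch: list suspect files, largest first, with the number of logged requests.
 * Assumes one line per request; names and array layout are hypothetical.
 */
function rankSuspects(string $suspectedDir, int $minAttempts = 10): array
{
    $rows = [];
    foreach (glob($suspectedDir . '/*.txt') ?: [] as $file) {
        // Count non-empty lines, i.e. logged requests.
        $attempts = count(file($file, FILE_SKIP_EMPTY_LINES | FILE_IGNORE_NEW_LINES) ?: []);
        if ($attempts >= $minAttempts) {
            $rows[] = ['file' => basename($file), 'bytes' => filesize($file), 'attempts' => $attempts];
        }
    }
    // Biggest files first: these are the most persistent clients.
    usort($rows, function (array $a, array $b): int {
        return $b['bytes'] <=> $a['bytes'];
    });
    return $rows;
}
```

The result is only a shortlist for a human to look through; the IP is the part of each file name before the last hyphen.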

This is a very good solution to the problem of smart bots. Why? Because if an attack on you was ordered, it was most likely ordered from a single DDoSer. DDoSers also come in smart and stupid varieties, and the stupid ones usually run both their smart and their stupid bots from one botnet, on the same IPs. So you will also block the bots that accept cookies and execute JavaScript. In addition, smart bots are far fewer in number, cost more and work more slowly, so this method is effective against the vast majority of the attacks considered here.

In my observation, with this scheme about 1-2% of the site's real users had to go through the captcha; the rest noticed nothing at all, which is quite user-friendly. Moreover, even first-time visitors see no "stub" pages (unlike the approach in the article mentioned at the beginning of this post) and simply work with the site.

Some inconvenience is possible for people using special browser anonymization software that dynamically changes the client's IP and User-Agent and erases cookies, but we do not consider that case.

In my case, the server load dropped immediately. The bots kept hammering for a while; a couple of days later I noticed they were gone. DDoSers do not like wasting resources for nothing either. I then removed the protection.

Logic development

You can vary the protection logic: add a JavaScript check (which can also set the cookie, for example); monitor those who were redirected to the captcha and did not pass it, to catch cases where a real person was treated "badly"; issue personalized cookies, track the number of visits per client and send the client to the captcha when a limit is exceeded; implement a token system; send bots through a chain of redirects with time delays to slow them down; analyze bot IPs and ban them by whole subnets, Roskomnadzor-style. But all of that is only as needed. By the 80/20 rule, the simplest necessary measures solve nearly everything that needs solving.
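As one illustration of the personalized cookie idea, the cookie value can be tied to the client's IP and signed so that a bot cannot invent it. This is only a sketch under my own assumptions: the function names, the payload layout (IP and issue time) and the one-day lifetime are hypothetical, and $secret stands for a key stored on the server.

```php
<?php
/**
 * Sketch: a personalised cookie value tied to the client's IP and signed with HMAC.
 * $secret is a hypothetical server-side key; the value layout is an assumption.
 */
function makeShieldToken(string $ip, string $secret, int $issuedAt): string
{
    $payload = $ip . '|' . $issuedAt;
    return base64_encode($payload) . '.' . hash_hmac('sha256', $payload, $secret);
}

function isValidShieldToken(string $token, string $ip, string $secret, int $now, int $maxAge = 86400): bool
{
    $parts = explode('.', $token, 2);
    if (count($parts) !== 2) {
        return false;
    }
    $payload = base64_decode($parts[0], true);
    if ($payload === false) {
        return false;
    }
    // hash_equals() compares in constant time, so timing does not leak the signature.
    if (!hash_equals(hash_hmac('sha256', $payload, $secret), $parts[1])) {
        return false;
    }
    $fields = explode('|', $payload);
    if (count($fields) !== 2 || $fields[0] !== $ip) {
        return false;
    }
    // Reject tokens from the future and tokens older than $maxAge seconds.
    $age = $now - (int) $fields[1];
    return $age >= 0 && $age <= $maxAge;
}
```

The value from makeShieldToken($ip, $secret, time()) would be sent with setcookie() and checked on later requests. Binding the cookie to the IP means clients whose address changes will be asked to prove themselves again.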

The main thing is that by quickly isolating and banning the obviously malicious IPs from /suspected, you immediately and significantly reduce the server load and buy time to prepare further steps to repel the attack.

That is a simple way to make unscrupulous competitors waste their money.

Disclaimer: this article covers only lightweight application-level DDoS attacks, mainly against sites on shared hosting, where the available resources are limited.

Source: https://habr.com/ru/post/354744/
