Who scans the Internet and does Australia exist

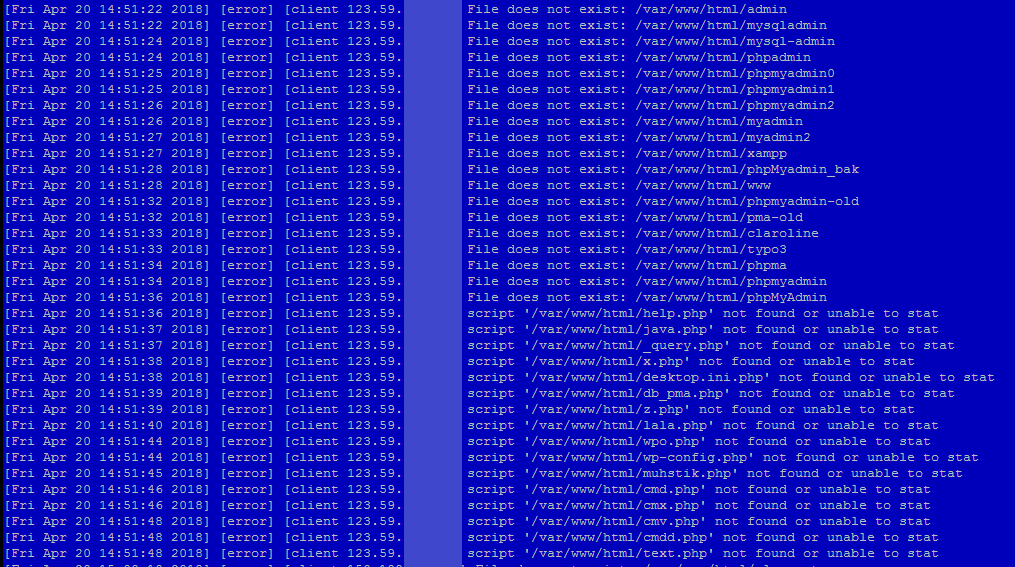

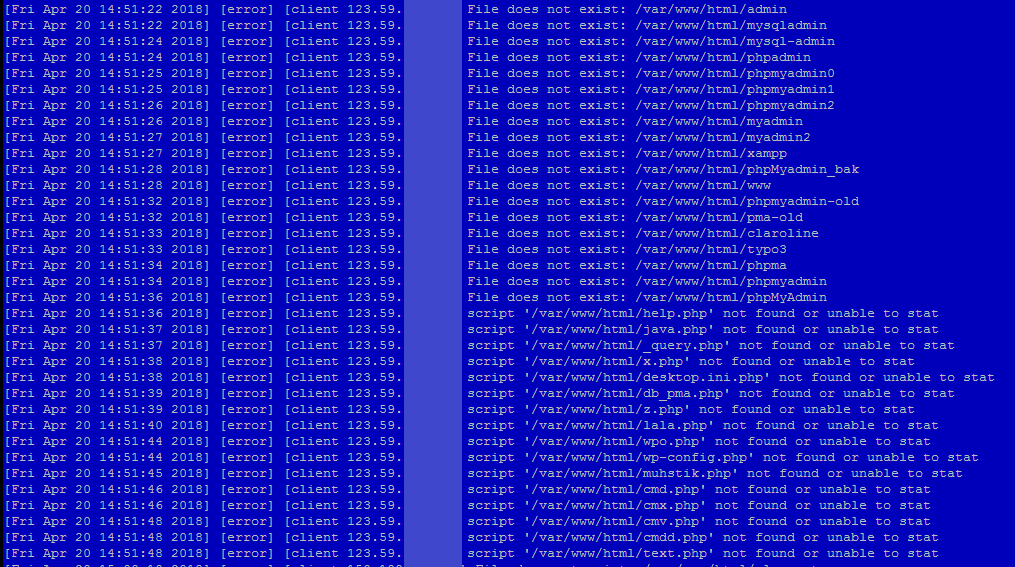

Anyone who has put up sites knows that it’s worth starting a web server, as requests begin to arrive. The DNS also doesn’t really know about it, but the httpd error log file already contains a lot of records like this:

It became interesting to me, and I decided to study this question more deeply. As soon as time appeared, I wrote a web server log parser. Since I like clarity, I put the results on the map.

And here is the picture:

')

On the map, markers indicate locations identified by the IP addresses of scan sources. For information, a free offline library SxGeo was used, and for display - 2GIS API. Of course, due to the free of charge library SxGeo does not have high accuracy, and some addresses fall into the ocean. However, over time (at the moment I have statistics for 2 months), the picture acquires quite clear outlines.

Surely some of the addresses are proxies. At the same time, scan sources almost evenly cover the most developed in terms of IT regions of the world.

However, as our beloved Mikhail Sergeyevich Boyarsky would say - “Thousand devils”, what is wrong with Australia?

In addition to distributing scan sources, I was also interested in collecting target paths that scanners scan. Those turned out to be quite a lot. Below I give only a small part of the target for an example:

Good, but what to do with it? The information we receive, like any other, can be used in different ways.

For example:

And another way to use "cool hackers" for their own purposes is to increase site traffic. This is a joke and no.

It really works if, on the basis of the dictionary of captured targets, to create files on your site, and to place arbitrary text and counter code inside. Of course, this will only work if the scanner can execute JS (addition from apapacy ).

And this is a joke, because there are not so many really unique IP addresses of scanners. In 2 months, 275 got used to me. How many “smart” bots and browsers are not counted among them. Maybe not at all.

It should be noted that with some addresses the scan was observed once, while with others it is performed daily. But this process is ongoing. I wonder how much of the traffic on the Web is scan traffic. Apparently not very big time no one is struggling with this.

Question: Do I need to pay attention to scanning and what should I do to avoid becoming a prey for scanners?

PS

The phenomenon of scanning, though man-made, but it seems has already become a natural process for the Internet. It has been and will be as long as the Network exists. This is a harmful factor, from which you can protect yourself like glasses from solar ultraviolet radiation.

I will summarize some of the discussion:

1. Australia does exist, but the Internet is expensive there and there is little of it. The same with the NZ. But there is the ocean, beaches and kangaroos.

2. Scanning web resources is a special case of scanning real addresses on the Internet in general. Practically all standard ports and standard software serve as targets, and the geography of scanning sources coincides with the geography of having “fast” Internet access.

3. Scanners work for a reason. Scanning is the first stage of hacking. Scanners search for possible vulnerabilities. The presence of such vulnerabilities can be used to infect servers with malware or / and to gain access to personal information.

4. The comments discuss ways of elementary protection against scanners. The main directions are: changing the standard software settings, defining and blocking scanners according to a ban list.

All of the above are obvious things. But the knowledge and understanding of such things precisely helps to create conditions for the safe operation of your resource.

Thank you all.

List of sources:

It became interesting to me, and I decided to study this question more deeply. As soon as time appeared, I wrote a web server log parser. Since I like clarity, I put the results on the map.

And here is the picture:

')

On the map, markers indicate locations identified by the IP addresses of scan sources. For information, a free offline library SxGeo was used, and for display - 2GIS API. Of course, due to the free of charge library SxGeo does not have high accuracy, and some addresses fall into the ocean. However, over time (at the moment I have statistics for 2 months), the picture acquires quite clear outlines.

Surely some of the addresses are proxies. At the same time, scan sources almost evenly cover the most developed in terms of IT regions of the world.

However, as our beloved Mikhail Sergeyevich Boyarsky would say - “Thousand devils”, what is wrong with Australia?

In addition to distributing scan sources, I was also interested in collecting target paths that scanners scan. Those turned out to be quite a lot. Below I give only a small part of the target for an example:

var / www / html / RPC2Often there is a game with the register:

/ var / www / html / SQLite

/ var / www / html / SQLiteManager

/var/www/html/SQLiteManager-1.2.4

/ var / www / html / SQlite

/ var / www / html / Snom

/var/www/html/Version.html

/ var / www / html / Yealink

/ var / www / html / \ xd1 \ x86 \ xd1 \ x80 \ xd1 \ x89 \ xd1 \ x8b \ xd1 \ x81 \ xd1 \ x84 \ xd1 \ x82 \ xd1 \ x8c \ xd1 \ x83

/ var / www / html / _PHPMYADMIN

/ var / www / html / _pHpMyAdMiN

/ var / www / html / _phpMyAdmin

/ var / www / html / _phpmyadmin

/var/www/html/_query.php

/var/www/html/_whatsnew.html

/ var / www / html / adm

/ var / www / html / admin

/var/www/html/admin.php

/ var / www / html / admin888

/ var / www / html / admin_area

/ var / www / html / admin_manage

/ var / www / html / admin_manage_access

/ var / www / html / admindb

/ var / www / html / administrator

/var/www/html/administrator.php

/ var / www / html / adminzone

/ var / www / html / _PHPMYADMINThere are also exotic targets that definitely have no place to take from me:

/ var / www / html / _pHpMyAdMiN

/ var / www / html / _phpMyAdmin

/ var / www / html / _phpmyadmin

/var/www/html/w00tw00t.at.blackhats.romanian.anti-secYou can pick up the full list of targets by the link in the basement. The catching system is now working in automatic mode and therefore it is constantly being replenished.

/ var / www / html / nmaplowercheck1523152976

/var/www/html/elastix_warning_authentication.php

Good, but what to do with it? The information we receive, like any other, can be used in different ways.

For example:

- When creating websites, especially on the basis of standard CMS and using standard DBMS, it is desirable to change the standard names of directories and files. Do not leave installation directories after installation. Do not place configuration files in the root directory or folder named "configuration", "config", etc.

- It can be blocked at the level of iptables addresses that are being scanned. Of course, this is an extreme case, but there is a possibility and it can be automated.

- Using the dictionary of captured targets, you can check the structure of the site for matches. This is done using the simplest script.

And another way to use "cool hackers" for their own purposes is to increase site traffic. This is a joke and no.

It really works if, on the basis of the dictionary of captured targets, to create files on your site, and to place arbitrary text and counter code inside. Of course, this will only work if the scanner can execute JS (addition from apapacy ).

And this is a joke, because there are not so many really unique IP addresses of scanners. In 2 months, 275 got used to me. How many “smart” bots and browsers are not counted among them. Maybe not at all.

It should be noted that with some addresses the scan was observed once, while with others it is performed daily. But this process is ongoing. I wonder how much of the traffic on the Web is scan traffic. Apparently not very big time no one is struggling with this.

Question: Do I need to pay attention to scanning and what should I do to avoid becoming a prey for scanners?

PS

The phenomenon of scanning, though man-made, but it seems has already become a natural process for the Internet. It has been and will be as long as the Network exists. This is a harmful factor, from which you can protect yourself like glasses from solar ultraviolet radiation.

I will summarize some of the discussion:

1. Australia does exist, but the Internet is expensive there and there is little of it. The same with the NZ. But there is the ocean, beaches and kangaroos.

2. Scanning web resources is a special case of scanning real addresses on the Internet in general. Practically all standard ports and standard software serve as targets, and the geography of scanning sources coincides with the geography of having “fast” Internet access.

3. Scanners work for a reason. Scanning is the first stage of hacking. Scanners search for possible vulnerabilities. The presence of such vulnerabilities can be used to infect servers with malware or / and to gain access to personal information.

4. The comments discuss ways of elementary protection against scanners. The main directions are: changing the standard software settings, defining and blocking scanners according to a ban list.

All of the above are obvious things. But the knowledge and understanding of such things precisely helps to create conditions for the safe operation of your resource.

Thank you all.

List of sources:

Source: https://habr.com/ru/post/354726/

All Articles