Two-node Hyper-V cluster without external storage, or DIY hyperconvergence

A long time ago, in a galaxy far, far away..., I was tasked with connecting a new branch office to the central office. The branch had two servers, and I thought it would be nice to build a Hyper-V failover cluster from them. However, this was long ago, before Windows Server 2012 was released. A cluster required external storage, so building one from just two servers was simply impossible. Recently, however, I came across an article by Romain Serre that solves exactly this problem using Windows Server 2016 and its new feature, Storage Spaces Direct (S2D). I borrowed the picture from that article because it seemed very fitting.

Storage Spaces Direct has been covered on Habr more than once. Somehow, though, I passed it by and did not think it could be put to practical use. Yet this is the technology that lets you build a cluster of two nodes while creating a single shared storage across the servers: in effect, one RAID array made of disks located in different servers. Moreover, the failure of one of the disks, or even of an entire server, should not lead to data loss.


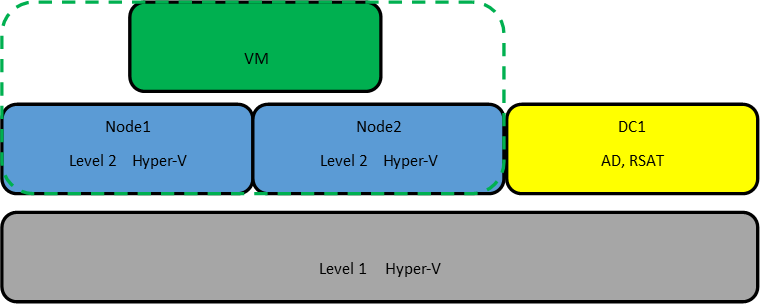

It sounds tempting, and I was curious to see how it works. However, I don't have two test servers, so I decided to build the cluster in a virtual environment. Fortunately, nested virtualization has recently appeared in Hyper-V.

For my experiments, I created 3 virtual machines. On the first one, I installed Server 2016 with the GUI, set up an AD domain controller on it, and installed the Remote Server Administration Tools (RSAT). On the virtual machines that serve as cluster nodes, I installed Server 2016 in Server Core mode. This month the mysterious Project Honolulu was released as Windows Admin Center, and I was also curious to see how convenient it is for administering Server Core. The fourth virtual machine will run inside the Hyper-V cluster, at the second level of virtualization.

Windows Server 2016 Datacenter is required for a cluster with Storage Spaces Direct. Pay separate attention to the hardware requirements for Storage Spaces Direct. The network adapters between cluster nodes must run at 10 Gbit/s or faster and support remote direct memory access (RDMA). The pool needs at least 4 disks (not counting the operating system disks). NVMe, SATA, and SAS drives are supported. Disks attached through RAID controllers are not supported. The requirements are described in more detail on docs.microsoft.com.
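To make the checklist above concrete, here is a minimal Python sketch that checks a description of one node against the requirements listed in that paragraph. The `NodeSpec` record and the `s2d_problems` function are hypothetical illustrations, not part of any Microsoft tool, and the disk count is simply checked against whatever disks you pass in; on real servers, `Test-Cluster` with the "Storage Spaces Direct" category does the actual validation.

```python
from dataclasses import dataclass, field

# Hypothetical inventory record for one cluster node; the field names are
# illustrative and do not come from any Microsoft API.
@dataclass
class NodeSpec:
    edition: str                     # e.g. "Windows Server 2016 Datacenter"
    nic_gbps: float                  # speed of the inter-node adapter, Gbit/s
    nic_rdma: bool                   # does that adapter support RDMA?
    pool_disk_buses: list = field(default_factory=list)  # bus type of each non-OS disk
    disks_behind_raid: bool = False  # are the pool disks presented by a RAID controller?

SUPPORTED_BUSES = {"NVMe", "SATA", "SAS"}

def s2d_problems(node: NodeSpec) -> list:
    """Return every requirement from the article that this node fails."""
    problems = []
    if "Datacenter" not in node.edition:
        problems.append("Datacenter edition required")
    if node.nic_gbps < 10 or not node.nic_rdma:
        problems.append("10 Gbit/s+ RDMA adapter required")
    if len(node.pool_disk_buses) < 4:
        problems.append("at least 4 non-OS disks required for the pool")
    unsupported = [b for b in node.pool_disk_buses if b not in SUPPORTED_BUSES]
    if unsupported:
        problems.append("unsupported disk bus: " + ", ".join(unsupported))
    if node.disks_behind_raid:
        problems.append("disks behind a RAID controller are not supported")
    return problems

if __name__ == "__main__":
    node = NodeSpec("Windows Server 2016 Datacenter", 10, True,
                    ["SAS", "SAS", "SATA", "SATA"])
    print(s2d_problems(node))  # []
```

The function collects all failed checks instead of stopping at the first one, so a single run shows every gap in the hardware.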

If, like me, you have never worked with nested virtualization in Hyper-V, there are a few nuances. First, it is disabled by default on new virtual machines: if you try to enable the Hyper-V role inside a virtual machine, you will get an error stating that the hardware does not support this role. Second, a nested virtual machine (at the second level of virtualization) will not have network access. To provide it, you must either configure NAT or enable MAC address spoofing on the network adapter. Third, you cannot use dynamic memory on a VM that will become a cluster node. More details are available at the link.

So I created two virtual machines, node1 and node2, and immediately disabled dynamic memory on them. Next, enable support for nested virtualization:

Set-VMProcessor -VMName node1,node2 -ExposeVirtualizationExtensions $true

Then enable MAC address spoofing on the VMs' network adapters:

Get-VMNetworkAdapter -VMName node1,node2 | Set-VMNetworkAdapter -MacAddressSpoofing On

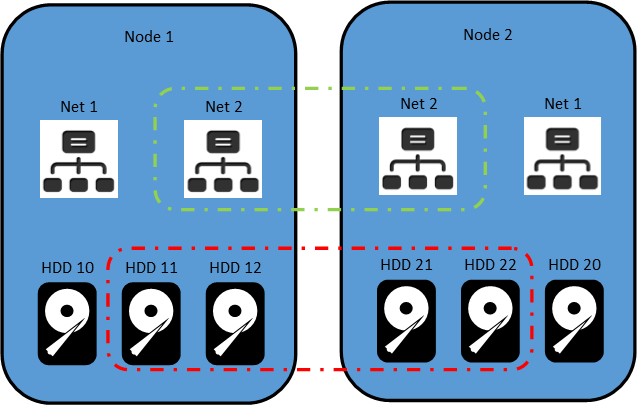

I used HDD10 and HDD20 as the system disks of the nodes. I attached the remaining disks for the shared storage and left them unpartitioned.

The Net1 network interface is connected to the external network and is used to reach the domain controller. The Net2 interface serves the internal network, used only between the cluster nodes.

To keep things short, I will skip the steps for joining the nodes to the domain and configuring the network interfaces; with the sconfig console utility this is not a big deal. I will only mention that I installed Windows Admin Center with a script:

msiexec /i "C:\WindowsAdminCenter1804.msi" /qn /L*v log.txt SME_PORT=6515 SSL_CERTIFICATE_OPTION=generate

Installing Admin Center from a shared network folder failed, so I had to enable the File Server role service and copy the installer to each server, as Microsoft recommends.

Once the preparation is done, and before creating the cluster, I recommend updating the nodes: without the April updates, Windows Admin Center will not be able to manage the cluster.

Let's create the cluster. As a reminder, all the necessary consoles are installed on my domain controller, so I connect to it and run PowerShell ISE as administrator. Then I install the roles required for the cluster on the nodes with this script:

$Servers = "node1","node2"
$ServerRoles = "Data-Center-Bridging","Failover-Clustering","Hyper-V","RSAT-Clustering-PowerShell","Hyper-V-PowerShell","FS-FileServer"
foreach ($server in $Servers) {
    Install-WindowsFeature -ComputerName $server -Name $ServerRoles
}

After the installation, I restart the servers.

Next, run the validation test to check that the nodes are ready:

Test-Cluster -Node "node1","node2" -Include "Storage Spaces Direct","Inventory","Network","System Configuration"

The HTML report was saved to C:\Users\Administrator\AppData\Local\Temp. The utility displays the path to the report only if there are errors.

Finally, we create a cluster named hpvcl with the shared IP address 192.168.1.100.

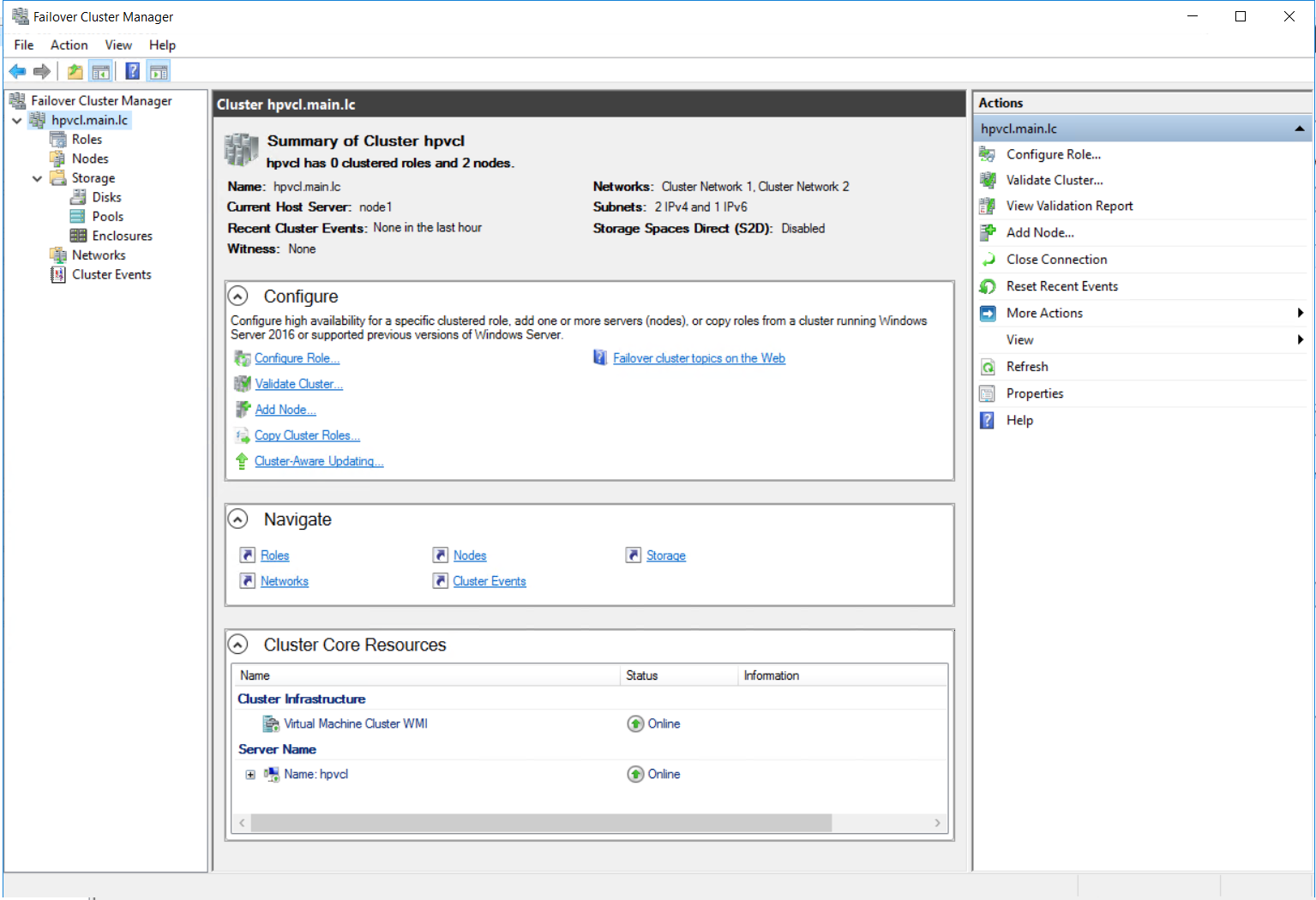

New-Cluster -Name hpvcl -Node "node1","node2" -NoStorage -StaticAddress 192.168.1.100

After that, we get a warning that the cluster has no shared storage for fault tolerance. Launch Failover Cluster Manager and check what we have.

Now enable S2D:

Enable-ClusterStorageSpacesDirect -CimSession hpvcl

We get a notification that no disks suitable for the cache were found. Since the test environment runs on my SSD rather than on HDDs, we need not worry about this.

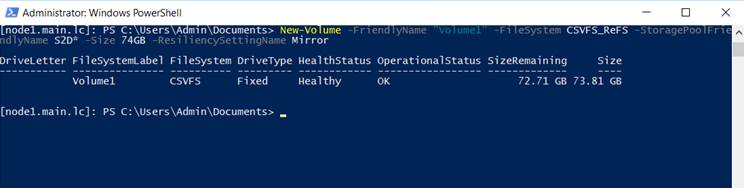

Then we connect to one of the nodes in a PowerShell console and create a new volume. Note that out of 4 disks of 40 GB each, about 74 GB is available for a mirrored volume.

New-Volume -FriendlyName "Volume1" -FileSystem CSVFS_ReFS -StoragePoolFriendlyName S2D* -Size 74GB -ResiliencySettingName Mirror
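The 74 GB figure follows from how a two-way mirror works: every piece of data is stored twice, on different nodes, so at most half of the raw capacity can be used. The Python sketch below does that arithmetic. The `overhead_gb` parameter is an assumption standing in for pool space that is not handed out to volumes; the roughly 6 GB gap is simply backed out of the author's observed 74 GB, not taken from documentation.

```python
def mirror_usable_gb(disk_count: int, disk_size_gb: float,
                     copies: int = 2, overhead_gb: float = 0.0) -> float:
    """Estimate the largest mirrored volume a pool can hold.

    copies=2 models a two-way mirror (50% efficiency). overhead_gb is an
    assumed allowance for space the pool keeps for itself.
    """
    if disk_count < 1 or disk_size_gb <= 0 or copies < 1:
        raise ValueError("disk_count, disk_size_gb and copies must be positive")
    raw_gb = disk_count * disk_size_gb      # 4 x 40 = 160 GB in the lab
    return max(raw_gb / copies - overhead_gb, 0.0)

if __name__ == "__main__":
    ceiling = mirror_usable_gb(4, 40)       # theoretical maximum: 80.0
    observed = 74                           # roughly what was available in the article
    print(ceiling, ceiling - observed)      # 80.0 6.0
```

With two nodes, only a two-way mirror is possible, which is why the sketch defaults to `copies=2`.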

Each node now has the shared volume C:\ClusterStorage\Volume1.

The cluster with shared storage is ready. Now let's create a virtual machine named VM on one of the nodes and place it on the shared storage.

For the virtual machine's networking, connect with the Hyper-V Manager console and create a virtual switch with external access on each node, giving it the same name on both. After that, I had to restart the cluster service on one of the nodes to get rid of a network interface error in the Failover Cluster Manager console.
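The switch name has to match because a VM's network adapter refers to its virtual switch by name, so after a failover or live migration the destination node needs a switch with the same name. As a hypothetical pre-flight check, this Python sketch takes the switch names found on each node (collected, for example, from Hyper-V Manager or `Get-VMSwitch`) and reports which names are missing where. The function name and the sample switch names are illustrative only.

```python
def missing_switches(switches_by_node: dict) -> dict:
    """For each node, list switch names present elsewhere but absent there.

    switches_by_node maps a node name to the set of virtual switch names on it.
    Nodes that already have every switch are left out of the result.
    """
    all_names = set().union(*switches_by_node.values()) if switches_by_node else set()
    result = {}
    for node, names in switches_by_node.items():
        missing = all_names - set(names)
        if missing:
            result[node] = sorted(missing)
    return result

if __name__ == "__main__":
    # Hypothetical switch names; use whatever you created on your nodes.
    nodes = {"node1": {"External"}, "node2": {"External", "Internal"}}
    print(missing_switches(nodes))  # {'node1': ['Internal']}
```

An empty result means every node offers the same set of switches, so a VM can land on either node without losing its network connection.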

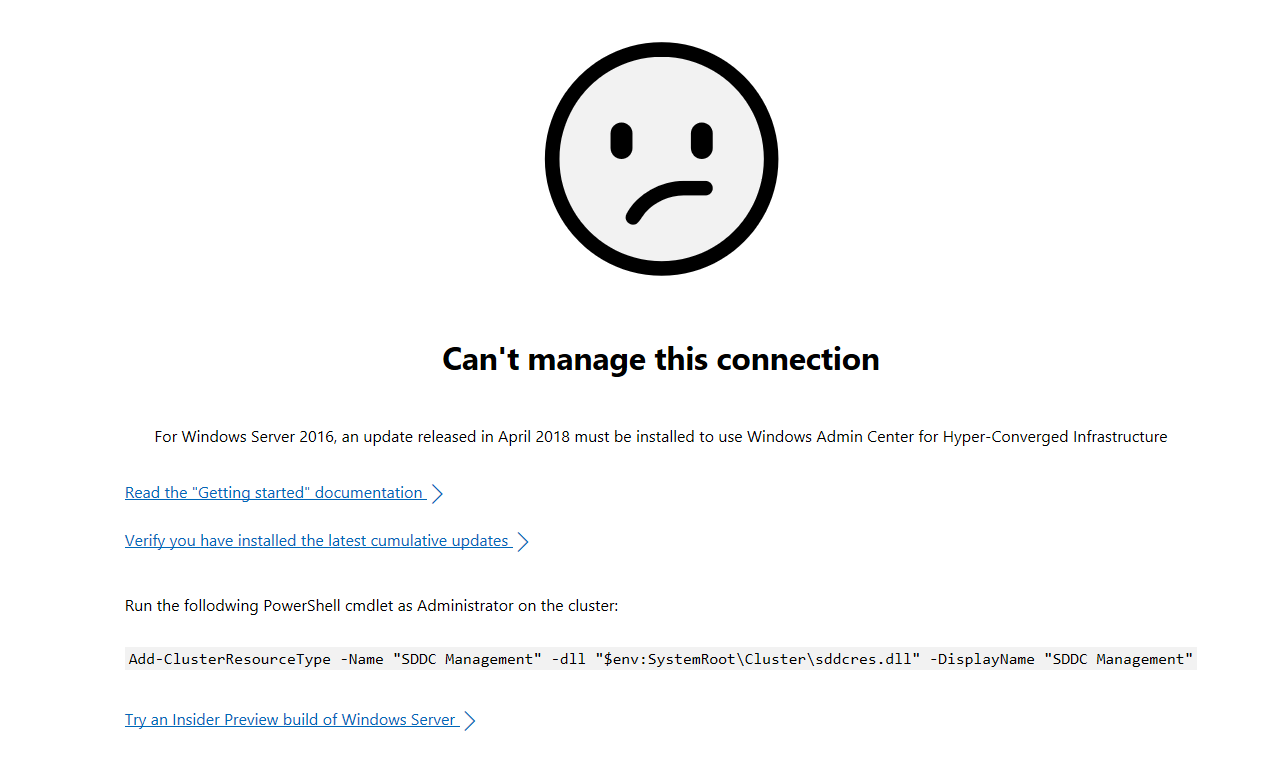

While the OS is being installed in the virtual machine, let's try connecting to Windows Admin Center. We add our hyperconverged cluster to it and get a sad smiley.

To fix this, connect to one of the nodes and run the script:

Add-ClusterResourceType -Name "SDDC Management" -dll "$env:SystemRoot\Cluster\sddcres.dll" -DisplayName "SDDC Management"

Back in Admin Center, this time we get nice-looking charts.

After installing the OS on the VM inside the cluster, the first thing I checked was live migration, moving the VM to the second node. During the migration, I pinged the machine to see how quickly the migration happened. Only 2 ping requests were lost, which can be considered a very good result.

And here it is worth adding a few spoonfuls of tar to this hyperconverged barrel of honey. In a test virtual environment everything works well, but how it works on real hardware is an open question. It is worth returning to the hardware requirements. 10 Gbit/s network adapters with RDMA cost around $500, which, together with a Windows Server Datacenter license, makes the solution far from cheap. Of course, it is still cheaper than dedicated storage, but the limitation is significant.

The second, and main, spoonful of tar is the news that S2D will be removed from the next build of Server 2016. I hope the Microsoft employees who read Habr will comment on this.

In conclusion, a few words about my impressions. Getting to know new technologies was a very interesting experience for me. I cannot call it useful yet, because I am not sure I will be able to put these skills into practice. So I have questions for the community: are you ready to consider moving to hyperconverged solutions in the future? How do you feel about placing virtual domain controllers on the cluster nodes themselves?

Source: https://habr.com/ru/post/354228/
