Another way to generate image thumbnails with AWS Lambda & golang + nodejs + nginx

Hello, dear Habr users!

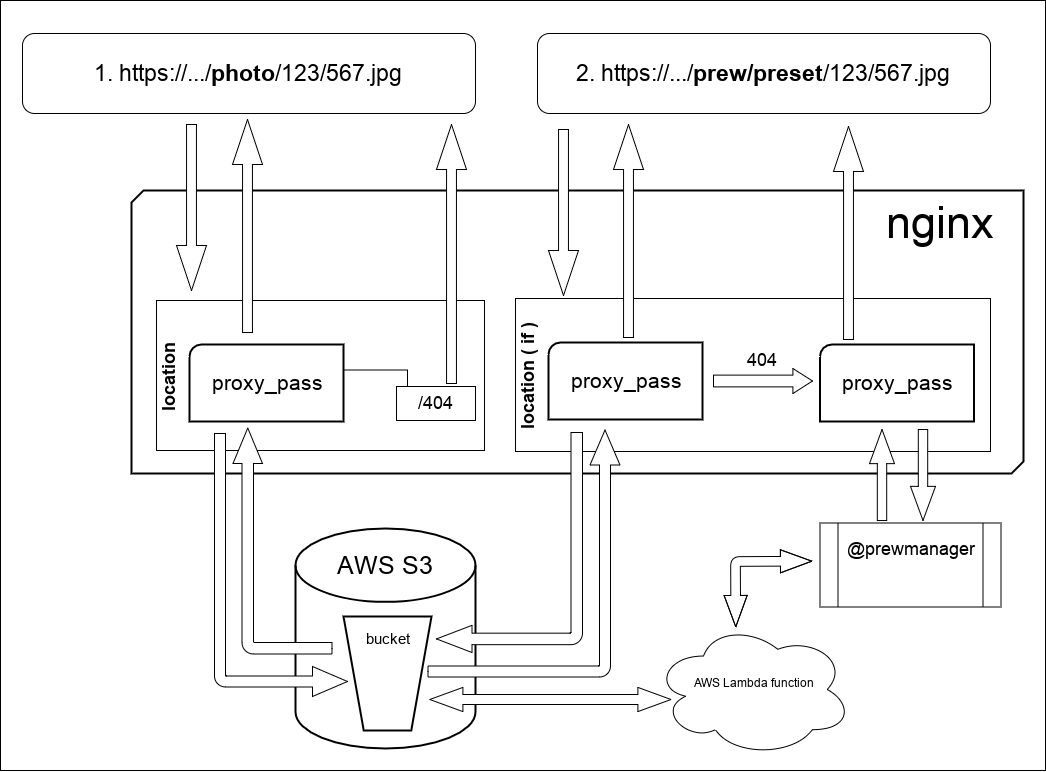

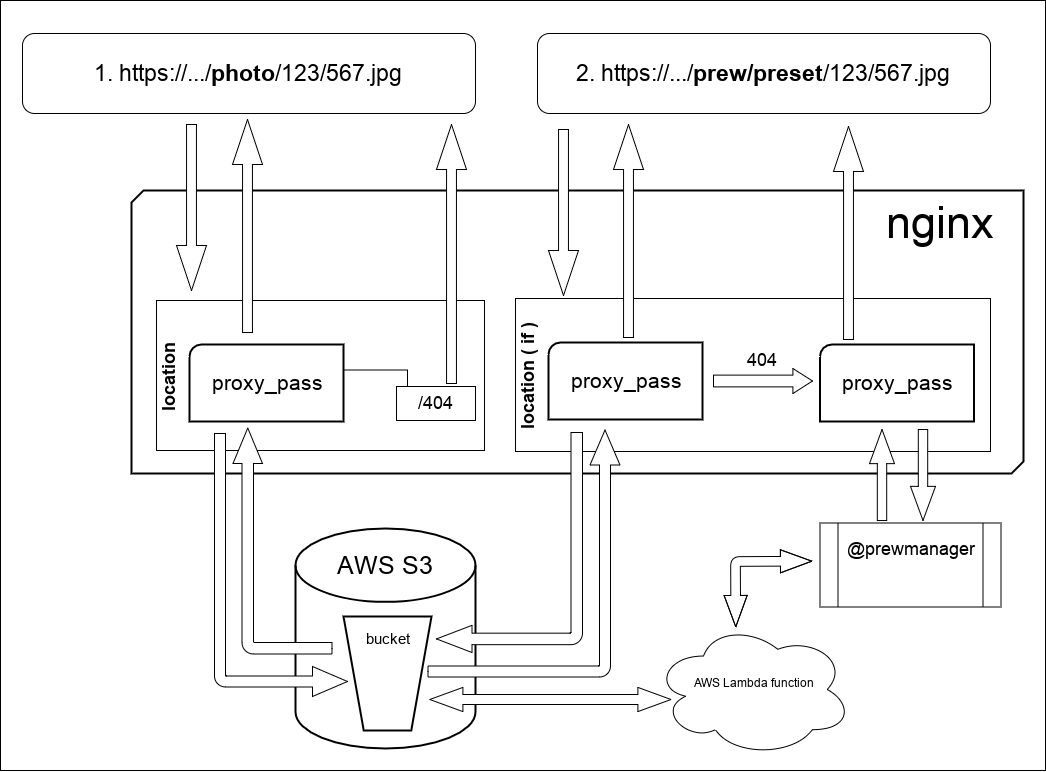

My name is Nikita, and I currently work as a backend developer at a mobile app startup. I finally got a genuinely non-trivial and rather interesting task, and I want to share its solution with you.

So what is this about? The mobile application we are developing works with images, and, as you can easily guess, where there are pictures there will most likely be previews. Another condition, practically the first general requirement I was given, was that everything should run and scale in the Amazon cloud. A lyrical digression: there was a speakerphone call with an acquaintance of a business partner, during which I received a batch of valuable instructions whose main idea was simple: move away from server-centric thinking. Well, fine: if we are moving away, we are moving away.

Thumbnail generation is a fairly resource-expensive operation. This part of the backend predictably performed poorly in the informal "load testing" I ran on a very weak VDS with an almost default LAMP setup and no extra tuning, an environment where every unoptimized spot shows up immediately and reliably. For this reason I decided to move the task out of the PHP backend. Let PHP do what produces a more or less uniform load: database queries, application logic, JSON responses, and the rest of the dull API routine. Those familiar with Amazon will ask: what is the problem? Why not configure auto scaling for EC2 instances and leave the task to PHP? My answer: "because microservices". Seriously, though, there are many nuances of the backend architecture that are beyond the scope of this article, so I will leave the question open. Everyone will answer it in the context of their own architecture, if it comes up. I simply want to offer one solution; read on for the details.

Setup: the images are stored in an example S3 bucket, bucket.mydomain, referred to simply as bucket from here on. The contents of the bucket are considered static and publicly available, but listing is forbidden: each object has a public-read ACL, while the bucket itself is not public-read. The file structure inside the bucket has the form folder/subfolder/filename.ext.

This article does not describe the backend's file-handling architecture in full; only one part is covered here, in simplified form, using photo previews as the example. The rest of the backend's work with the file system is beyond the scope of this article.

I favor solutions in which an image of the required size is generated in advance and simply served from the file system. That said, I have had experience applying watermarks dynamically (the image was generated anew on every request), and it showed quite good results (I expected it to be more costly than it turned out to be). You should not be afraid of generating images dynamically; that approach also has a right to exist. In general, though, I consider it best when the preview is generated once, on some event, and then served from the file system if it is there; if it is not, another attempt is made to generate it. This gives good manageability and is useful when the requirements for preview sizes change. That is the approach implemented in this task. There is one important point, however: you need to "agree" (perhaps with yourself) on a URI scheme. In my case (again, simplified) it looks like this:

- /photo/some/file.jpg: serves the source file

- /prew/preset/some/file.jpg: serves the preview for file.jpg

A new word appears here: preset. What is it? During implementation I realized that parsing width/height out of the second URI segment would mean digging a hole for myself: what happens if some smart aleck decides to iterate the second segment over values from 1 to over 9000? Every new value would trigger another generation. So I agreed with the other members of the team on which thumbnail sizes are actually needed. The result was several "presets" of different sizes, whose name is passed as the second URI segment. Returning to manageability: if the preview size ever has to change, it is enough to adjust the environment variables in prewmanager, which is discussed a little later, and delete the outdated files from the file system.
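The preset idea can be illustrated with a short sketch. This is not the actual prewmanager code: the preset names and the function parsePreviewUri are hypothetical, and only the /prew/preset/path layout and the 1440 width come from the article. The point is that the second URI segment is looked up in a fixed table instead of being parsed as a number.

```javascript
// Hypothetical preset table: the names and most widths are illustrative only.
const PRESETS = { small: 320, medium: 720, large: 1440 };

// Parse "/prew/<preset>/<object key>" and reject anything not whitelisted.
function parsePreviewUri(uri) {
    const match = /^\/prew\/([^\/]+)\/(.+)$/.exec(uri);
    if (match === null) {
        return null; // not a preview URI at all
    }
    const [, preset, key] = match;
    // hasOwnProperty guards against names such as "constructor"
    if (!Object.prototype.hasOwnProperty.call(PRESETS, preset)) {
        return null; // unknown preset: the caller should answer 404
    }
    return { preset, key, width: PRESETS[preset] };
}

console.log(parsePreviewUri('/prew/large/some/file.jpg'));
console.log(parsePreviewUri('/prew/9000/some/file.jpg')); // null
```

With a table like this, iterating over arbitrary sizes in the URI produces only 404 responses and never reaches the image generator.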

In general, the scheme of work looks like the figure:

Here is what happens:

In request 1, which goes to /photo/, nginx proxies the request to S3. In principle it does the same in request 2, because the source files and the previews are stored in the same bucket; according to the official AWS documentation, the number of objects in a bucket is unlimited. There is one difference, though, marked on the diagram as the if: it changes how a 403/404 response from S3 is handled. A word about the 403. If you access the storage WITHOUT credentials (my case), that is, with access ONLY to public-read objects, then, because you lack listing rights, Amazon returns 403 instead of 404 for a missing object. Hence the line in the config: error_page 403 404 =404 /404.jpg; The relevant piece of the config looks like this:

```nginx
location / {
    set $s3_bucket 'bucket.s3.amazonaws.com';
    set $req_proxy_str $s3_bucket$1;

    # Without listing rights S3 answers 403 for a missing object,
    # so both codes are turned into a 404 with a placeholder image
    error_page 403 404 =404 /404.jpg;

    # For preview requests, 403/404 is handed over to prewmanager instead
    if ($request_uri ~* /prew/(.*)) {
        error_page 403 404 = @prewmanager;
    }

    proxy_http_version 1.1;
    proxy_set_header Authorization '';
    proxy_set_header Host $s3_bucket;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_hide_header x-amz-id-2;
    proxy_hide_header x-amz-request-id;
    proxy_hide_header Set-Cookie;
    proxy_ignore_headers "Set-Cookie";
    proxy_buffering off;
    proxy_intercept_errors on;
    proxy_pass http://$req_proxy_str;
}

location /404.jpg {
    root /var/www/error/;
    internal;
}

location @prewmanager {
    proxy_pass http://prewnamager_host:8180;
    proxy_redirect http://prewnamager_host:8180 /;
    proxy_set_header Host $host;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;
    access_log off;
}
```

As you can see, @prewmanager proxies to a network service, and that service is the whole point of this article. It is written in nodejs: it launches an AWS Lambda function written in Go, "blocks" further calls for the URI being processed until the Lambda function completes, and then returns the Lambda result to everyone who was waiting. Unfortunately, I cannot publish the whole prewmanager code, so I will illustrate it with separate fragments from (forgive me) the first fully working version of the script. Production runs a prettier version, but alas. Still, as a sketch for understanding the logic, I think this code is quite suitable.

```javascript
// require statements, reading process.env.*, etc. (omitted)

const lambda = new AWS.Lambda({...});
const rc = redis.createClient(...);
const getAsync = promisify(rc.get).bind(rc);

function make404Response(response) {
    // send a 404 response (omitted)
}

function makeErrorResponse(response) {
    // send an error response (omitted)
}

// AWS Lambda returns the image as base64 together with its content type
function makeResultResponse(response, response_payload) {
    let buff = Buffer.from(response_payload.data, 'base64');
    response.statusCode = 200;
    response.setHeader('Content-Type', response_payload.content_type);
    response.end(buff);
}

http.createServer(async function(request, response) {
    // parse the URI, build redis_key, preset, aws_ob_key, etc. (omitted)

    // look the key up in Redis; if it is absent (null), invoke AWS Lambda
    let reply = false;
    try {
        reply = await getAsync(redis_key);
    } catch (err) {
        // reply stays false and is handled as an error below
    }

    if (reply === null) {
        // set the blocking flag for 30 seconds
        rc.set(redis_key, 'blocked', 'EX', 30);

        // pick the parameters by preset; an unknown preset gets a 404
        switch (preset) {
            case "preset_name_1":
                var request_payload = {
                    src_key: "photo/" + aws_ob_key,
                    src_bucket: src_bucket,
                    dst_bucket: dst_bucket,
                    root_folder: dst_root,
                    preset_name: preset,
                    rewrite_part: "photo",
                    width: 1440
                };
                var params = {
                    FunctionName: "my_lambda_function_name",
                    InvocationType: "RequestResponse",
                    LogType: "Tail",
                    Payload: JSON.stringify(request_payload),
                };
                lambda.invoke(params, function(err, data) {
                    if (err) {
                        makeErrorResponse(response);
                    } else {
                        // store the result for everyone who is waiting
                        rc.set(redis_key, data.Payload, 'EX', 30);
                        let response_payload = JSON.parse(data.Payload);
                        if (response_payload.status == true) {
                            makeResultResponse(response, response_payload);
                        } else {
                            console.log(response_payload.error);
                            makeErrorResponse(response);
                        }
                    }
                });
                break;
            ...
            default:
                make404Response(response);
        }
    } else if (reply === false) {
        // the Redis lookup failed
        makeErrorResponse(response);
    } else {
        // 2 possible values here: the 'blocked' flag or a stored Lambda result
        if (reply == 'blocked') {
            let res;
            let i = 0;
            const intervalId = setInterval(async function() {
                try {
                    res = await getAsync(redis_key);
                } catch (err) { }
                if (res != null && res != 'blocked') {
                    let response_payload = JSON.parse(res);
                    if (response_payload.status == true) {
                        makeResultResponse(response, response_payload);
                    } else {
                        console.log(response_payload.error);
                        makeErrorResponse(response);
                    }
                    clearInterval(intervalId);
                } else {
                    i++;
                    // give up after 100 polls (about 50 seconds)
                    if (i > 100) {
                        makeErrorResponse(response);
                        clearInterval(intervalId);
                    }
                }
            }, 500);
        } else {
            // a Lambda result is already stored: answer from it right away
            let response_payload = JSON.parse(reply);
            if (response_payload.status == true) {
                makeResultResponse(response, response_payload);
            } else {
                console.log(response_payload.error);
                makeErrorResponse(response);
            }
        }
    }
}).listen(port);
```

Where did Redis come from, and why? My reasoning was this: on the one hand, we are in the cloud, where I can scale Redis instances as much as I like; on the other hand, when the question arose of how to block repeated calls of the function with the same parameters, what else would I use if not Redis, which was already used in the project anyway? Keep the state in local memory and write a homemade "garbage collector"? Why, when you can simply put the data (or the blocking flag) into Redis with a time to live and let this wonderful tool take care of the rest. Perfectly logical.
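The behavior of a blocking flag with a time to live can be sketched without a real Redis server. The class below is a hypothetical in-memory stand-in (TtlStore and its method names are my own, not part of any library) that imitates what Redis offers through SET key value NX EX seconds: the first caller gets the flag, later callers see that it is taken, and the flag disappears on its own when the time to live runs out. It is meant only to show the semantics, under the assumption that a single atomic "set if absent" is acceptable in place of a separate GET followed by SET.

```javascript
// Hypothetical in-memory stand-in for a Redis-like store with expiring keys.
class TtlStore {
    constructor(now = () => Date.now()) {
        this.now = now;          // injectable clock, in milliseconds
        this.items = new Map();  // key -> { value, expiresAt }
    }

    get(key) {
        const item = this.items.get(key);
        if (item === undefined) return null;
        if (item.expiresAt <= this.now()) {
            this.items.delete(key); // expired: behave as if it never existed
            return null;
        }
        return item.value;
    }

    set(key, value, ttlSeconds) {
        this.items.set(key, { value, expiresAt: this.now() + ttlSeconds * 1000 });
    }

    // Set only if the key is absent; returns true when the caller got the flag.
    setIfAbsent(key, value, ttlSeconds) {
        if (this.get(key) !== null) return false;
        this.set(key, value, ttlSeconds);
        return true;
    }
}

// Usage with a fake clock, so that expiry is visible without waiting.
let fakeTime = 0;
const store = new TtlStore(() => fakeTime);
const first = store.setIfAbsent('prew:large:some/file.jpg', 'blocked', 30);
const second = store.setIfAbsent('prew:large:some/file.jpg', 'blocked', 30);
fakeTime = 31000; // 31 seconds later the flag has expired
const third = store.setIfAbsent('prew:large:some/file.jpg', 'blocked', 30);
console.log(first, second, third); // true false true
```

Only the caller that receives true would go on to invoke the Lambda function; everyone else polls the key until a result replaces the flag or the flag expires.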

And finally, the complete code of the AWS Lambda function, which is written in Go. Please do not judge it too harshly: it is my third binary after "hello world", and there is still very little that I have written and compiled in Go myself. The code is posted on GitHub; please send pull requests if something is wrong. On the whole everything works, though, as they say, there is no limit to perfection. The function requires a JSON payload to work; if there is demand, I will add instructions on how to test the function, an example JSON payload, and so on.
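Judging only by the prewmanager fragment shown earlier, the function takes a JSON payload with the fields src_key, src_bucket, dst_bucket, root_folder, preset_name, rewrite_part, and width, and answers with status, data (base64), content_type, and error. The sketch below just builds and decodes such payloads in nodejs. The helper names and the bucket values are placeholders of mine, and the authoritative format is whatever the Go code defines.

```javascript
// Build the request payload. Field names follow the prewmanager fragment;
// the values passed in are placeholders.
function buildRequestPayload(objectKey, presetName, width, storage) {
    return JSON.stringify({
        src_key: 'photo/' + objectKey,
        src_bucket: storage.srcBucket,
        dst_bucket: storage.dstBucket,
        root_folder: storage.rootFolder,
        preset_name: presetName,
        rewrite_part: 'photo',
        width: width,
    });
}

// Decode a response payload into a status code, content type, and body.
function decodeResponsePayload(payloadJson) {
    const payload = JSON.parse(payloadJson);
    if (payload.status !== true) {
        return { statusCode: 500, error: payload.error };
    }
    return {
        statusCode: 200,
        contentType: payload.content_type,
        body: Buffer.from(payload.data, 'base64'),
    };
}

// Usage with made-up values in place of a real Lambda call.
const requestJson = buildRequestPayload('some/file.jpg', 'preset_name_1', 1440, {
    srcBucket: 'bucket', dstBucket: 'bucket', rootFolder: 'prew',
});
const fakeResponseJson = JSON.stringify({
    status: true,
    content_type: 'image/jpeg',
    data: Buffer.from('fake image bytes').toString('base64'),
});
console.log(requestJson);
console.log(decodeResponsePayload(fakeResponseJson).body.length); // 16
```

A payload built this way can also be pasted into a test event for the function, assuming the field names above match the Go code.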

A few words about setting up AWS Lambda: it is all simple. Create a function, set the environment variables, the maximum execution time, and the memory allocation. Upload the archive and use it. There is one nuance that is beyond the scope of this article, and its name is IAM: the user, the role, and the permissions also have to be configured, and without that, I am afraid, nothing will work.

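In conclusion, I want to say that this system has already been tested in production. I cannot boast of high load, but overall there have been no problems at all. Regarding the current political situation: yes, we were among the first to be hit by the blocking of Amazon, literally on the very first day. We did not make a fuss or distract the lawyers from their work; we simply set up nginx on a Russian hosting provider. In general, I believe that Amazon S3 is such a convenient, well-documented, and well-supported storage that it is, at the very least, not worth giving up because of the antics of assorted advisers and other "surgeons" who are not surgeons. Since all the static content lives on its own subdomain, the nginx config shown above was moved almost line for line, with minimal changes, to a server in the Russian Federation, and within a working day everyone had forgotten about the problem.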

Thank you all for your attention.

Source: https://habr.com/ru/post/354226/
