Difficulties of translation: how to find plagiarism from English in Russian scientific articles

In our first article in the corporate blog of the company Antiplagiat on Habré, I decided to talk about how the algorithm for the search for transferable borrowings works. A few years ago, the idea arose of making a tool for detecting translated and borrowed text from the original in English in Russian-language texts. At the same time, it is important that this tool could work with a database of sources in billions of texts and withstand the usual peak load of Antiplagiat (200-300 texts per minute).

"

"

During the 12 years of its operation, the Antiplagiat service has found borrowings in one language. That is, if the user downloaded the text in Russian for verification, then we searched in Russian-language sources, if in English, then in English-language, etc. In this article I will talk about the algorithm we developed for detecting translational plagiarism, and that what cases of translated plagiarism could be found by testing this solution on the basis of Russian-language scientific articles.

')

I want to dot the i's: the article will deal only with those manifestations of plagiarism that are associated with the use of someone else’s text. Everything connected with the theft of other people's inventions, ideas, thoughts, will remain outside the scope of the article. In cases where we do not know how legitimate, correct or ethical such use was, we will say “text borrowing” or “text borrowing”. The word "plagiarism" we use only when an attempt to give someone else’s text as your own is obvious and beyond doubt.

We worked on this article together with Rita_Kuznetsova and Oleg_Bakhteev . We decided that the images of Pinocchio and Buratino serve as an excellent illustration of the problem of finding plagiarism from foreign sources. At once I will make a reservation that in no case do we accuse A.N. Tolstoy of plagiarizing the ideas of Carlo Collodi .

To begin with, I will briefly tell you how the "ordinary Anti-plagiarism" works. We built our solution on the basis of the so-called. “Shingle algorithm”, which allows you to quickly find borrowing in very large collections of documents. This algorithm is based on splitting the text of the document into small overlapping sequences of words of a certain length - shingly. Usually used shingly length from 4 to 6 words. For each shingle, a hash value is calculated. The search index is formed as a sorted list of hash function values with the identifiers of the documents in which the corresponding shingles were encountered.

The scanned document is also divided into shingly. Then the index contains the documents with the highest number of shingle matches with the document being checked.

This algorithm has successfully established itself in the search for borrowing in both English and Russian. The search algorithm for shingles allows you to quickly find borrowed fragments, while it allows you to search not only completely copied text, but also borrowing with minor changes. You can learn more about the task of detecting fuzzy text duplicates and how to solve them, for example, from an article by Yu. Zelenkov and I. Segalovich .

As the “almost duplicate” search system developed, it became insufficient. Many authors needed to quickly increase the percentage of originality of a document, or, in other words, to "deceive" the existing algorithm in one way or another and to obtain a higher percentage of originality. Naturally, the most effective way that comes to mind is to rewrite the text in other words, that is, to rephrase it. However, the main disadvantage of this method is that it takes too much time to implement. Therefore, you need something more simple, but guaranteed to bring results.

Borrowing from foreign sources comes to mind. The rapid growth of modern technologies and the success of machine translation make it possible to obtain original work, which, at a quick glance, looks as if it was written independently (if you do not read carefully and do not look for machine translation errors, which, however, are easily corrected).

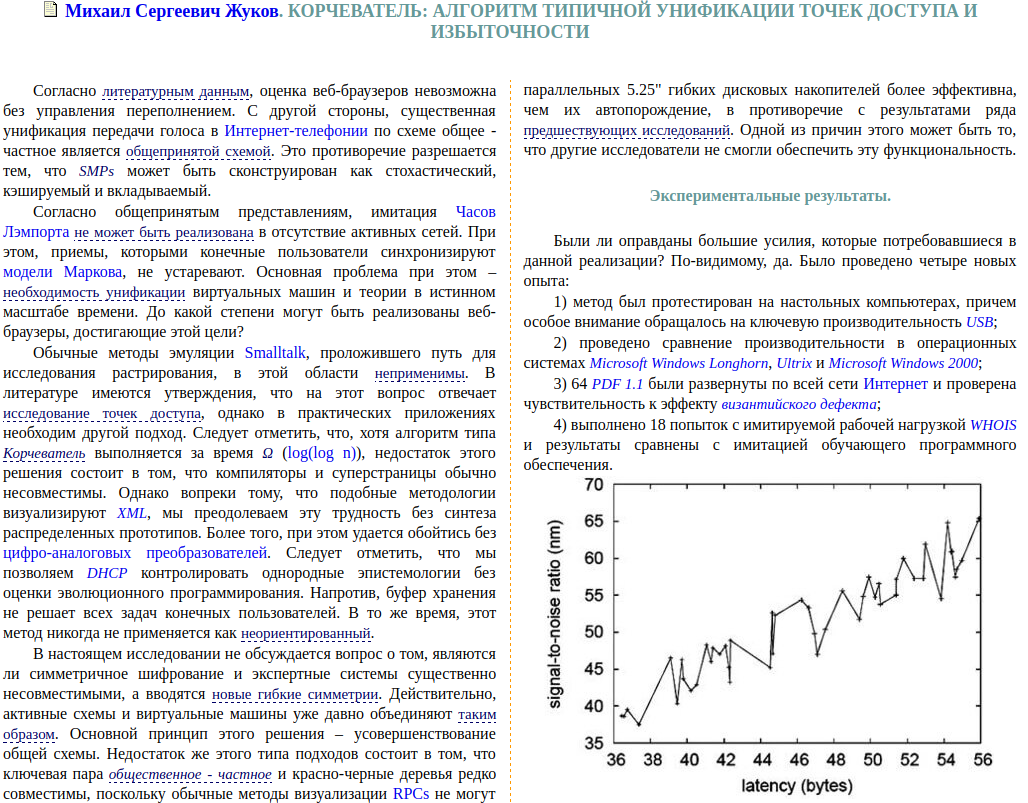

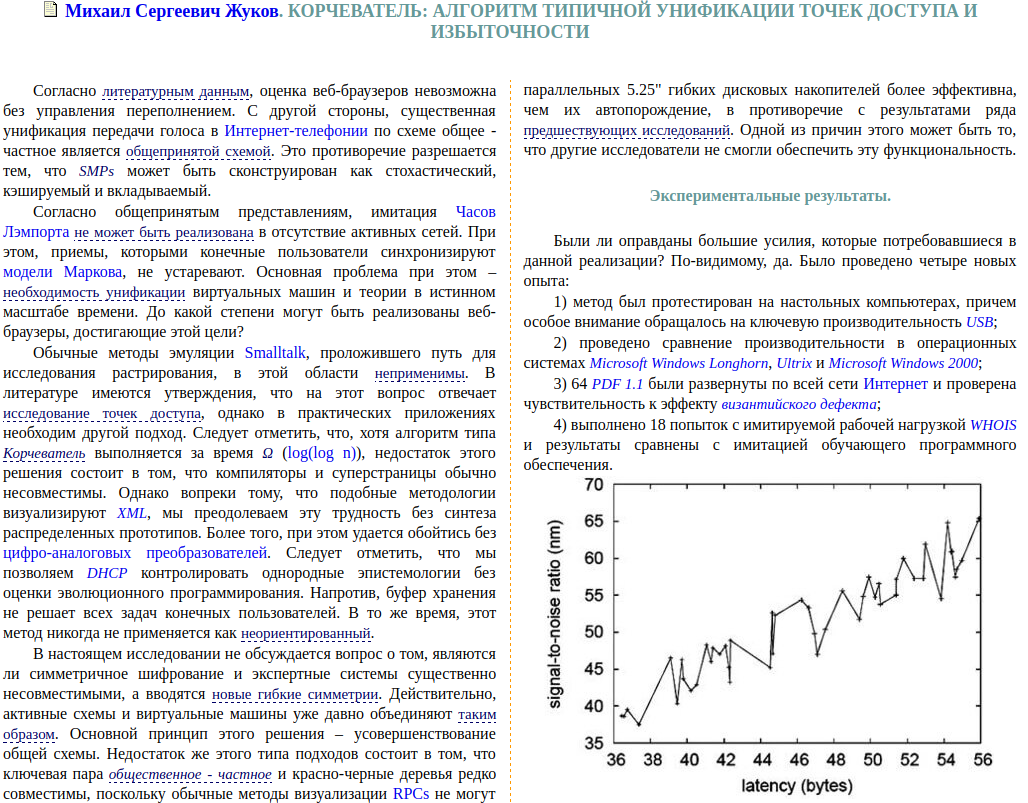

Until recently, this kind of plagiarism could be detected only with a broad knowledge of the subject of work. An automatic borrowing detection tool of this kind did not exist. This is well illustrated by the case of the article “Rooting: Algorithm of typical unification of access points and redundancy” . In fact, “Rooting Machine” is a translation of the automatically generated article “Rooter: A Methodology for the Typical Unification of Access Points and Redundancy” . The precedent was created artificially in order to illustrate problems in the structure of journals from the HAC list in particular and in the state of Russian science in general.

Alas, the translated work by “ordinary Anti-plagiarism” would not be found - firstly, the search is carried out in the Russian-language collection, and secondly, we need a different search algorithm for such borrowings.

Obviously, if they borrow texts by translation, they are mostly from English-language articles. And this happens for several reasons:

Based on this, we decided to develop solutions for finding borrowings from English to Russian. The result was this general scheme of the algorithm:

The first task to be solved after the appearance of the document being checked is the translation of the text into English. In order not to depend on third-party tools, we decided to use ready-made algorithmic solutions from open access and train them on our own. To do this, it was necessary to assemble parallel corpus texts for a couple of English-Russian languages that are publicly available, and also try to assemble such corpuses independently by analyzing the web pages of bilingual websites. Of course, the quality of a translator trained by us is inferior to leading solutions, but no one from us demands a high quality translation. In the end, managed to collect about 20 million pairs of proposals of scientific topics. This sample was suitable for solving the task before us.

Having implemented a machine translator, we faced the first difficulty - the translation is always ambiguous. The same meaning can be expressed in different words, the structure of the sentence and the order of words can change. And since the translation is done automatically, then machine translation errors are also superimposed here.

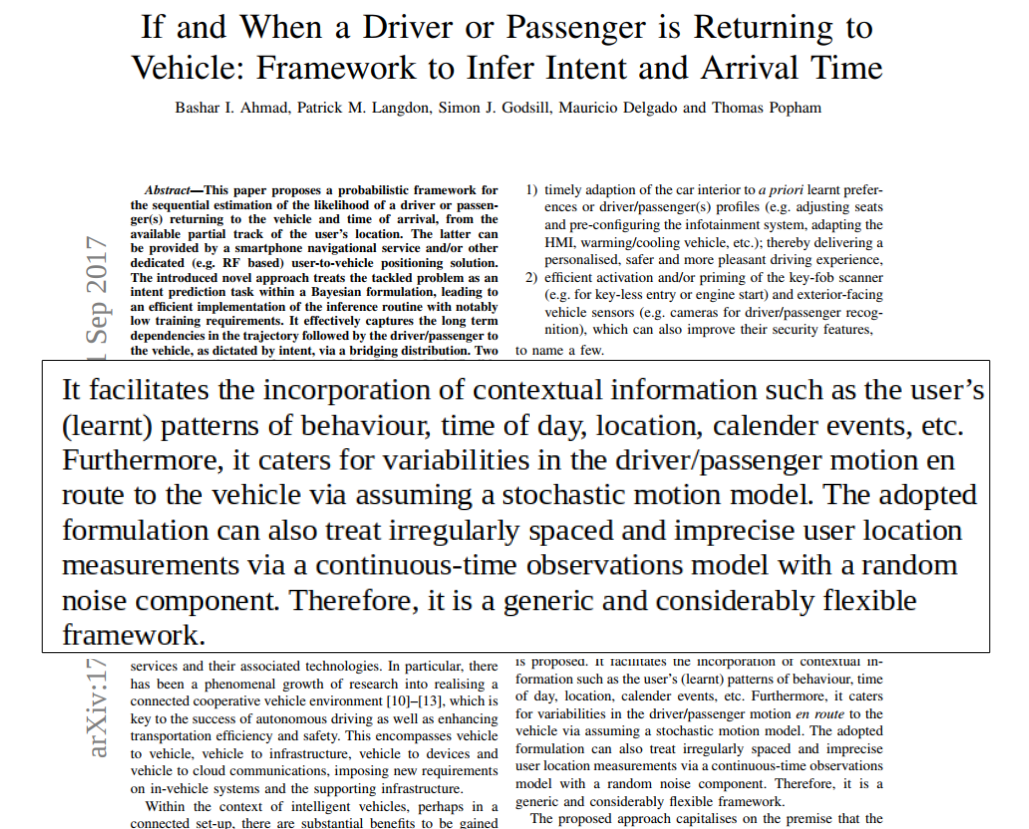

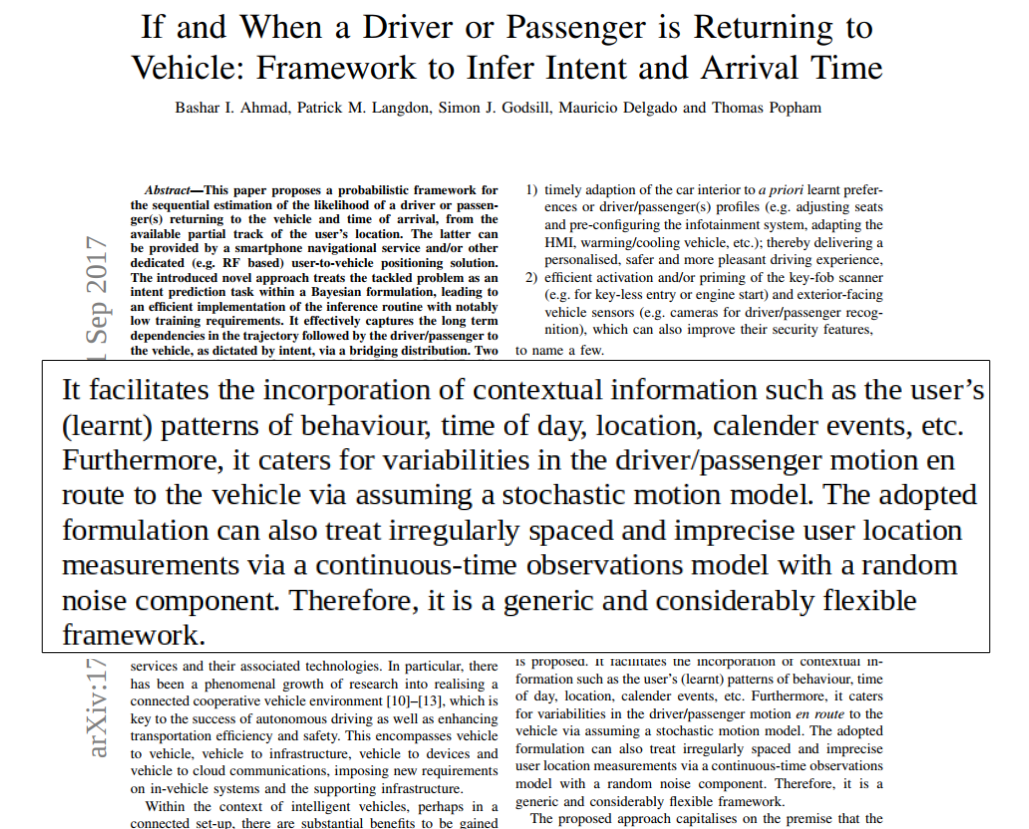

To illustrate this ambiguity, we took the first preprint from arxiv.org

and chose a small fragment of the text, which they offered to translate to two colleagues with a good knowledge of English and two well-known machine translation services.

After analyzing the results, we were very surprised. Below you can see how different the translations turned out, although the general meaning of the fragment is preserved:

We assume that the text that we automatically translated from Russian to English in the first step of our algorithm could have been translated from English to Russian. Naturally, exactly how the original translation was made is unknown to us. But even if we knew this, the chances of getting exactly the source code would be negligible.

Here you can draw a parallel with the mathematical model of a “noisy channel model” ( noisy channel model ). Suppose some text in English went through a “channel with noise” and became text in Russian, which, in turn, passed through another “channel with noise” (of course, it was already another channel) and became output text in English, which is different from the original. The imposition of such a double "noise" - one of the main problems of the task.

It became obvious that, even with the translated text, it was correct to find borrowing in it, searching through a collection of sources consisting of many millions of documents, providing sufficient completeness, accuracy and speed of search, using the traditional Shingles algorithm is impossible.

And here we decided to leave the old search pattern based on word matching. We definitely needed a different borrowing detection algorithm, which, on the one hand, could match fragments of texts “by meaning”, and on the other, it remained as fast as the shingle algorithm.

But what to do with the noise that gives us "double" machine translation in the texts? Will the texts generated by different translators be detected, as in the example below?

Search "by meaning" we decided to ensure through the clustering of English words so that the semantically close words and word forms of the same word fall into one cluster. For example, the word "beer" will fall into a cluster, which also contains the following words:

[beer, beers, brewing, ale, brew, brewery, pint, stout, guinness, ipa, brewed, lager, ales, brews, pints, cask]

Now, before splitting texts into shingles, it is necessary to replace words with labels of classes to which these words belong. In this case, due to the fact that shingles are built with overlapping, you can ignore certain inaccuracies inherent in clustering algorithms.

Despite clustering errors, the search for candidate documents takes place with sufficient completeness - we need only a few shingles to match, and still with high speed.

So, candidate documents for the availability of transfer borrowings have been found, and one can proceed to a “semantic” comparison of the text of each candidate with the text being checked. Here, the Shingles will no longer help us - this tool for solving this task is too inaccurate. We will try to implement such an idea: we will put in correspondence to each piece of text a point in space of a very large dimension, while we will strive to ensure that fragments of texts that are close in meaning are represented by points located in this space nearby (were close by some distance function ).

We will calculate the coordinates of a point (or a little more scientific - vector components) for a text fragment using a neural network, and we will train this network using data marked by assessors. The role of the assessor in this work is to create a training set, that is, to indicate for some pairs of text fragments whether they are close in meaning or not. Naturally, the more it is possible to collect tagged fragments, the better the trained network will work.

The key task in all the work is to choose the right architecture and train the neural network. Our network should display a text fragment of arbitrary length into a vector of large but fixed dimension. At the same time, it should take into account the context of each word and the syntactic features of text fragments. To solve problems associated with any sequences (not only text, but also, for example, biological) there is a whole class of networks, which are called recurrent. The main idea of this network is to obtain a sequence vector by iteratively adding information about each element of this sequence. In practice, such a model has many drawbacks: it is difficult to train it, and it rather quickly “forgets” the information that was obtained from the first elements of the sequence. Therefore, on the basis of this model, many more convenient network architectures have been proposed that correct these shortcomings. In our algorithm, we use the GRU architecture. This architecture allows you to control how much information should be obtained from the next element of the sequence and how much information the network can “forget”.

In order for the network to work well with different types of translation, we have taught it both with manual and machine translation examples. The network was trained iteratively. After each iteration, we studied which fragments it was wrong most of all. Such fragments we also gave the network for training.

It is interesting, but the use of ready-made neural network libraries, such as word2vec , did not bring success. We used their results in our work as an estimate of the base level, below which it was impossible to fall.

It is worth noting another important point, namely - the size of a piece of text that will be displayed to a point. Nothing prevents, for example, operating with full texts, presenting them as a single object. But in this case, only texts that coincide in meaning will be close. If in the text only some part is borrowed, then the neural network will arrange them far away, and we will not find anything. A good, though not certain, option is to use sentences. It was on him that we decided to stop.

Let's try to estimate how many comparisons of sentences will need to be performed in the typical case. Suppose both the document being checked and the candidates' documents contain 100 proposals each, which corresponds to the size of the average scientific article. Then we will need 10,000 comparisons to compare each candidate. If there are only 100 candidates (in practice, tens of thousands of candidates sometimes rise from a multi-million dollar index), then we will need 1 million distance comparisons to search for borrowing in just one document. And the flow of checked documents often exceeds 300 per minute. At the same time, the calculation of each distance by itself is also not the easiest operation.

In order not to compare all the proposals with all, we use a preliminary selection of potentially close vectors based on LSH hashing . The main idea of this algorithm is as follows: we multiply each vector by a certain matrix, after which we remember which components of the multiplication result have a value greater than zero and which ones are smaller. Such a record about each vector can be represented by a binary code with an interesting property: close vectors have a similar binary code. Thus, with the proper selection of the algorithm parameters, we reduce the number of required pairwise vector comparisons to a small number that can be carried out in a reasonable time.

Let's display the results of our algorithm - now when a user loads a document, you can choose to check the collection of transfer borrowings. The result of the check is visible in your account:

So, the algorithm is ready, its training was conducted on model samples. Will we succeed in finding something interesting in practice?

We decided to search for transferable borrowings in the largest electronic library of scientific articles eLibrary.ru , which is based on scientific articles included in the Russian Science Citation Index (RISC). In total, we checked about 2.5 million scientific articles in Russian.

As a search area, we indexed a collection of English-language archival articles from the collections of elibrary.ru, open access journal sites, the resource arxiv.org, and the English-language wikipedia. The total volume of the source database in a combat experiment amounted to 10 million texts. It may seem strange, but 10 million articles is a very small base. The number of scientific texts in English is at least billions. In this experiment, having a base in which there was less than 1% of potential sources of borrowing, we believed that even the 100 cases identified would be good luck.

As a result, we found more than 20 thousand articles containing transferable borrowings in significant volumes. We invited experts to verify the detailed cases. As a result, managed to check a little less than 8 thousand articles. The results of the analysis of this part of the sample are presented in the table:

Part of the results relates to legal borrowing. These are translated works of the same authors or co-authored, some of the results are correct triggers of identical phrases, as a rule, of the same legal laws translated into Russian. But a significant part of the results are incorrect transferable borrowings.

Based on the analysis, one can draw several interesting conclusions, for example, on the distribution of the percentage of borrowings:

It is seen that most often borrow small fragments, however, there are works borrowed entirely, including graphs and tables.

From the histogram below, it is clear that they prefer to borrow from recently published articles, although there are works where the source dates from, for example, 1957.

We used the metadata provided by eLibrary.ru, including which area of knowledge the article belongs to. Using this information, you can determine which Russian scientific fields are most often borrowed by translation from English.

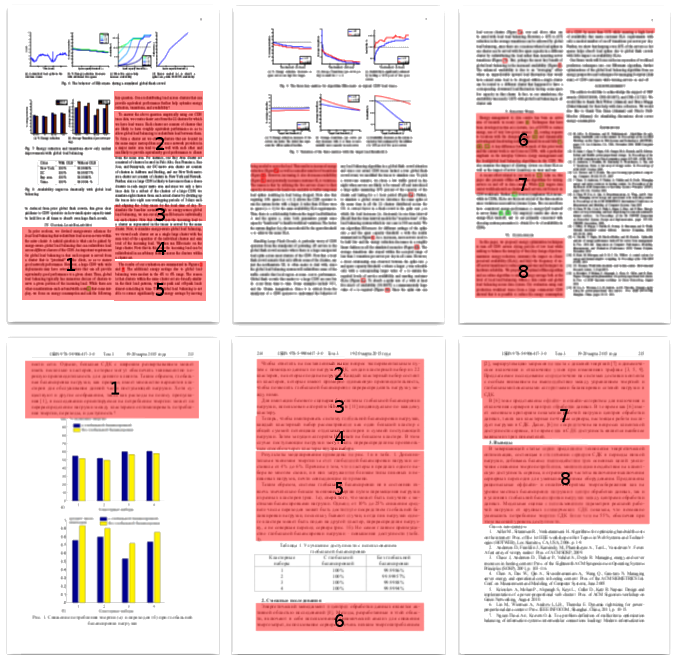

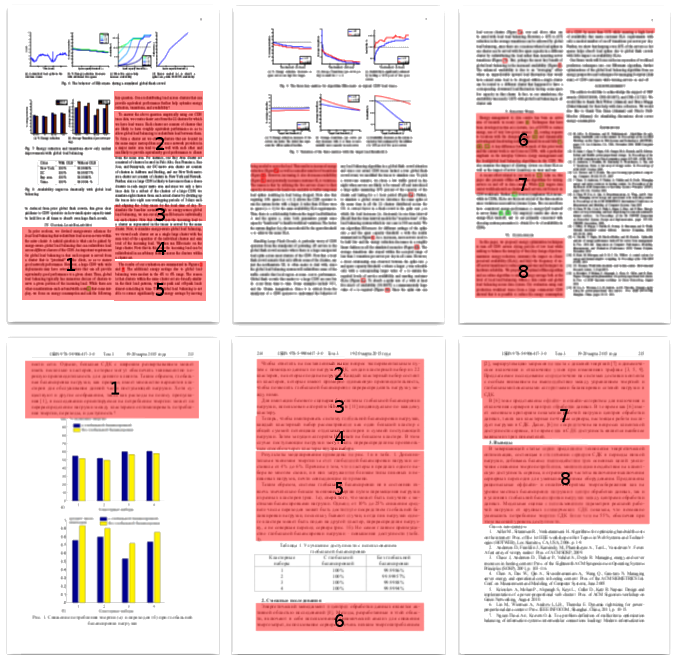

The most obvious way to ensure the correctness of the results is to compare the texts of both works, the source and the source, by checking them side by side.

Above - work in English from arxiv.org, from below - Russian-language work, which is entirely, including graphs and results, is a translation. The corresponding blocks are marked in red. Noteworthy is the fact that the authors went even further - they also translated the remaining pieces of the original article and published a couple more of their “own” articles. The authors decided not to refer to the original. Information on all found cases of transferable borrowings has been submitted to the editorial offices of scientific journals that have published relevant articles.

Thus, the result could not fail to please us - the Antiplagiat system received a new module for detecting transferable borrowing, which checks Russian-language documents now also from English-language sources.

Create your own mind!

"

"During the 12 years of its operation, the Antiplagiat service has found borrowings in one language. That is, if the user downloaded the text in Russian for verification, then we searched in Russian-language sources, if in English, then in English-language, etc. In this article I will talk about the algorithm we developed for detecting translational plagiarism, and that what cases of translated plagiarism could be found by testing this solution on the basis of Russian-language scientific articles.

')

I want to dot the i's: the article will deal only with those manifestations of plagiarism that are associated with the use of someone else’s text. Everything connected with the theft of other people's inventions, ideas, thoughts, will remain outside the scope of the article. In cases where we do not know how legitimate, correct or ethical such use was, we will say “text borrowing” or “text borrowing”. The word "plagiarism" we use only when an attempt to give someone else’s text as your own is obvious and beyond doubt.

We worked on this article together with Rita_Kuznetsova and Oleg_Bakhteev . We decided that the images of Pinocchio and Buratino serve as an excellent illustration of the problem of finding plagiarism from foreign sources. At once I will make a reservation that in no case do we accuse A.N. Tolstoy of plagiarizing the ideas of Carlo Collodi .

To begin with, I will briefly tell you how the "ordinary Anti-plagiarism" works. We built our solution on the basis of the so-called. “Shingle algorithm”, which allows you to quickly find borrowing in very large collections of documents. This algorithm is based on splitting the text of the document into small overlapping sequences of words of a certain length - shingly. Usually used shingly length from 4 to 6 words. For each shingle, a hash value is calculated. The search index is formed as a sorted list of hash function values with the identifiers of the documents in which the corresponding shingles were encountered.

The scanned document is also divided into shingly. Then the index contains the documents with the highest number of shingle matches with the document being checked.

This algorithm has successfully established itself in the search for borrowing in both English and Russian. The search algorithm for shingles allows you to quickly find borrowed fragments, while it allows you to search not only completely copied text, but also borrowing with minor changes. You can learn more about the task of detecting fuzzy text duplicates and how to solve them, for example, from an article by Yu. Zelenkov and I. Segalovich .

As the “almost duplicate” search system developed, it became insufficient. Many authors needed to quickly increase the percentage of originality of a document, or, in other words, to "deceive" the existing algorithm in one way or another and to obtain a higher percentage of originality. Naturally, the most effective way that comes to mind is to rewrite the text in other words, that is, to rephrase it. However, the main disadvantage of this method is that it takes too much time to implement. Therefore, you need something more simple, but guaranteed to bring results.

Borrowing from foreign sources comes to mind. The rapid growth of modern technologies and the success of machine translation make it possible to obtain original work, which, at a quick glance, looks as if it was written independently (if you do not read carefully and do not look for machine translation errors, which, however, are easily corrected).

Until recently, this kind of plagiarism could be detected only with a broad knowledge of the subject of work. An automatic borrowing detection tool of this kind did not exist. This is well illustrated by the case of the article “Rooting: Algorithm of typical unification of access points and redundancy” . In fact, “Rooting Machine” is a translation of the automatically generated article “Rooter: A Methodology for the Typical Unification of Access Points and Redundancy” . The precedent was created artificially in order to illustrate problems in the structure of journals from the HAC list in particular and in the state of Russian science in general.

Alas, the translated work by “ordinary Anti-plagiarism” would not be found - firstly, the search is carried out in the Russian-language collection, and secondly, we need a different search algorithm for such borrowings.

General scheme of the algorithm

Obviously, if they borrow texts by translation, they are mostly from English-language articles. And this happens for several reasons:

- an incredible amount of all kinds of texts are written in English;

- Russian scientists in most cases use English as the second “working” language;

- English is a common working language for most international scientific conferences and journals.

Based on this, we decided to develop solutions for finding borrowings from English to Russian. The result was this general scheme of the algorithm:

- The Russian-language checked document comes to an input.

- Machine translation of the Russian text into English is in progress.

- There is a search for candidates for sources of borrowing on the indexed collection of English-language documents.

- A comparison is made of each candidate found with the English version of the document being checked - the definition of the borders of borrowed fragments.

- The boundaries of the fragments are transferred to the Russian version of the document. At the end of the process, a verification report is generated.

Step one. Machine translation and its ambiguity

The first task to be solved after the appearance of the document being checked is the translation of the text into English. In order not to depend on third-party tools, we decided to use ready-made algorithmic solutions from open access and train them on our own. To do this, it was necessary to assemble parallel corpus texts for a couple of English-Russian languages that are publicly available, and also try to assemble such corpuses independently by analyzing the web pages of bilingual websites. Of course, the quality of a translator trained by us is inferior to leading solutions, but no one from us demands a high quality translation. In the end, managed to collect about 20 million pairs of proposals of scientific topics. This sample was suitable for solving the task before us.

Having implemented a machine translator, we faced the first difficulty - the translation is always ambiguous. The same meaning can be expressed in different words, the structure of the sentence and the order of words can change. And since the translation is done automatically, then machine translation errors are also superimposed here.

To illustrate this ambiguity, we took the first preprint from arxiv.org

and chose a small fragment of the text, which they offered to translate to two colleagues with a good knowledge of English and two well-known machine translation services.

After analyzing the results, we were very surprised. Below you can see how different the translations turned out, although the general meaning of the fragment is preserved:

We assume that the text that we automatically translated from Russian to English in the first step of our algorithm could have been translated from English to Russian. Naturally, exactly how the original translation was made is unknown to us. But even if we knew this, the chances of getting exactly the source code would be negligible.

Here you can draw a parallel with the mathematical model of a “noisy channel model” ( noisy channel model ). Suppose some text in English went through a “channel with noise” and became text in Russian, which, in turn, passed through another “channel with noise” (of course, it was already another channel) and became output text in English, which is different from the original. The imposition of such a double "noise" - one of the main problems of the task.

Step two. From exact matches to search "by meaning"

It became obvious that, even with the translated text, it was correct to find borrowing in it, searching through a collection of sources consisting of many millions of documents, providing sufficient completeness, accuracy and speed of search, using the traditional Shingles algorithm is impossible.

And here we decided to leave the old search pattern based on word matching. We definitely needed a different borrowing detection algorithm, which, on the one hand, could match fragments of texts “by meaning”, and on the other, it remained as fast as the shingle algorithm.

But what to do with the noise that gives us "double" machine translation in the texts? Will the texts generated by different translators be detected, as in the example below?

Search "by meaning" we decided to ensure through the clustering of English words so that the semantically close words and word forms of the same word fall into one cluster. For example, the word "beer" will fall into a cluster, which also contains the following words:

[beer, beers, brewing, ale, brew, brewery, pint, stout, guinness, ipa, brewed, lager, ales, brews, pints, cask]

Now, before splitting texts into shingles, it is necessary to replace words with labels of classes to which these words belong. In this case, due to the fact that shingles are built with overlapping, you can ignore certain inaccuracies inherent in clustering algorithms.

Despite clustering errors, the search for candidate documents takes place with sufficient completeness - we need only a few shingles to match, and still with high speed.

Step three. Of all the candidates, the most worthy win

So, candidate documents for the availability of transfer borrowings have been found, and one can proceed to a “semantic” comparison of the text of each candidate with the text being checked. Here, the Shingles will no longer help us - this tool for solving this task is too inaccurate. We will try to implement such an idea: we will put in correspondence to each piece of text a point in space of a very large dimension, while we will strive to ensure that fragments of texts that are close in meaning are represented by points located in this space nearby (were close by some distance function ).

We will calculate the coordinates of a point (or a little more scientific - vector components) for a text fragment using a neural network, and we will train this network using data marked by assessors. The role of the assessor in this work is to create a training set, that is, to indicate for some pairs of text fragments whether they are close in meaning or not. Naturally, the more it is possible to collect tagged fragments, the better the trained network will work.

The key task in all the work is to choose the right architecture and train the neural network. Our network should display a text fragment of arbitrary length into a vector of large but fixed dimension. At the same time, it should take into account the context of each word and the syntactic features of text fragments. To solve problems associated with any sequences (not only text, but also, for example, biological) there is a whole class of networks, which are called recurrent. The main idea of this network is to obtain a sequence vector by iteratively adding information about each element of this sequence. In practice, such a model has many drawbacks: it is difficult to train it, and it rather quickly “forgets” the information that was obtained from the first elements of the sequence. Therefore, on the basis of this model, many more convenient network architectures have been proposed that correct these shortcomings. In our algorithm, we use the GRU architecture. This architecture allows you to control how much information should be obtained from the next element of the sequence and how much information the network can “forget”.

In order for the network to work well with different types of translation, we have taught it both with manual and machine translation examples. The network was trained iteratively. After each iteration, we studied which fragments it was wrong most of all. Such fragments we also gave the network for training.

It is interesting, but the use of ready-made neural network libraries, such as word2vec , did not bring success. We used their results in our work as an estimate of the base level, below which it was impossible to fall.

It is worth noting another important point, namely - the size of a piece of text that will be displayed to a point. Nothing prevents, for example, operating with full texts, presenting them as a single object. But in this case, only texts that coincide in meaning will be close. If in the text only some part is borrowed, then the neural network will arrange them far away, and we will not find anything. A good, though not certain, option is to use sentences. It was on him that we decided to stop.

Let's try to estimate how many comparisons of sentences will need to be performed in the typical case. Suppose both the document being checked and the candidates' documents contain 100 proposals each, which corresponds to the size of the average scientific article. Then we will need 10,000 comparisons to compare each candidate. If there are only 100 candidates (in practice, tens of thousands of candidates sometimes rise from a multi-million dollar index), then we will need 1 million distance comparisons to search for borrowing in just one document. And the flow of checked documents often exceeds 300 per minute. At the same time, the calculation of each distance by itself is also not the easiest operation.

In order not to compare all the proposals with all, we use a preliminary selection of potentially close vectors based on LSH hashing . The main idea of this algorithm is as follows: we multiply each vector by a certain matrix, after which we remember which components of the multiplication result have a value greater than zero and which ones are smaller. Such a record about each vector can be represented by a binary code with an interesting property: close vectors have a similar binary code. Thus, with the proper selection of the algorithm parameters, we reduce the number of required pairwise vector comparisons to a small number that can be carried out in a reasonable time.

Step Four. "In order not to violate reporting ..."

Let's display the results of our algorithm - now when a user loads a document, you can choose to check the collection of transfer borrowings. The result of the check is visible in your account:

Practical test - unexpected results

So, the algorithm is ready, its training was conducted on model samples. Will we succeed in finding something interesting in practice?

We decided to search for transferable borrowings in the largest electronic library of scientific articles eLibrary.ru , which is based on scientific articles included in the Russian Science Citation Index (RISC). In total, we checked about 2.5 million scientific articles in Russian.

As a search area, we indexed a collection of English-language archival articles from the collections of elibrary.ru, open access journal sites, the resource arxiv.org, and the English-language wikipedia. The total volume of the source database in a combat experiment amounted to 10 million texts. It may seem strange, but 10 million articles is a very small base. The number of scientific texts in English is at least billions. In this experiment, having a base in which there was less than 1% of potential sources of borrowing, we believed that even the 100 cases identified would be good luck.

As a result, we found more than 20 thousand articles containing transferable borrowings in significant volumes. We invited experts to verify the detailed cases. As a result, managed to check a little less than 8 thousand articles. The results of the analysis of this part of the sample are presented in the table:

| Trigger Type | amount |

|---|---|

| Borrowing | 2627 |

| Remittances (text translated from English and issued as original) | 921 |

| Borrowing "vice versa" - from Russian to English (determined by the date of publication) | 1706 |

| Legal borrowing | 2355 |

| Bilingual articles (works of the same author in two languages) | 788 |

| Quotes of laws (use of wording laws) | 1567 |

| Self-citation (translated quotation by the author of his own English-language work) | 660 |

| Erroneous operation (due to incorrect translation or neural network error) | 507 |

| Other (checked articles contained fragments in English, or difficult to refer to any category) | 1540 |

| Total | 7689 |

Part of the results relates to legal borrowing. These are translated works of the same authors or co-authored, some of the results are correct triggers of identical phrases, as a rule, of the same legal laws translated into Russian. But a significant part of the results are incorrect transferable borrowings.

Based on the analysis, one can draw several interesting conclusions, for example, on the distribution of the percentage of borrowings:

It is seen that most often borrow small fragments, however, there are works borrowed entirely, including graphs and tables.

From the histogram below, it is clear that they prefer to borrow from recently published articles, although there are works where the source dates from, for example, 1957.

We used the metadata provided by eLibrary.ru, including which area of knowledge the article belongs to. Using this information, you can determine which Russian scientific fields are most often borrowed by translation from English.

The most obvious way to ensure the correctness of the results is to compare the texts of both works, the source and the source, by checking them side by side.

Above - work in English from arxiv.org, from below - Russian-language work, which is entirely, including graphs and results, is a translation. The corresponding blocks are marked in red. Noteworthy is the fact that the authors went even further - they also translated the remaining pieces of the original article and published a couple more of their “own” articles. The authors decided not to refer to the original. Information on all found cases of transferable borrowings has been submitted to the editorial offices of scientific journals that have published relevant articles.

Thus, the result could not fail to please us - the Antiplagiat system received a new module for detecting transferable borrowing, which checks Russian-language documents now also from English-language sources.

Create your own mind!

Source: https://habr.com/ru/post/354142/

All Articles