DevConf: how VKontakte moved to its own platform for live broadcasts

DevConf 2018 will be held on May 18 in Moscow, at Digital October. Meanwhile, we continue to publish talks from last year's conference. Next in line is the talk by Alexey Akulovich from VKontakte, who covers what attentive readers have already guessed from the title.

In 2015, we used a third-party solution. We embedded his player on the site as youtube and it worked. It didn’t work perfectly, but at that time it suited us in terms of the volume of broadcasts that could be started through it, as well as the quality and delays it gave. But we grew out of it pretty quickly.

The first reason is delays. When viewers write questions in the chat, and the broadcast delay is 40 seconds, in most cases this is unacceptable. The main delay occurred in the transmission of a signal between the computers of the streamer and the viewer (a useful habrapost on this topic ). The main protocols for streaming video: RTMP, RTSP, HLS, DASH. The first two are protocols without repeated requests, i.e. we connect to the server and it just streams the data. The delay is minimal, maybe less than a second, i.e. this is good.

HLS and DASH are HTTP-based protocols that make a new request after each piece of data. And this creates problems. When we began to reproduce the first piece, we need to immediately request the second one, so that when we are finished with the first one, the second one must be downloaded and parsed. This is necessary to ensure continuous playback. Thus, the minimum delay for these protocols is two fragments. One fragment is about a few seconds. Therefore, to achieve an acceptable delay with these formats will not work.

')

We have two options for using stream. The first is through our applications, when we have full control over the resolution, codecs, etc. And we can give this signal to viewers without processing. The second option, when we give the opportunity to stream with anything, whatever software. Of course, in this case we have no control over codecs, resolution, quality. We take this signal at the entrance and must give it to the audience in good quality. For example, if one player streams the game in 4K quality, and the viewer tries to look at the phone in 3G and sees nothing, then we are to blame. Therefore, a third-party signal must be processed by us and given to the viewer at the desired resolution.

Based on the above, we have come to such protocols:

At that time, we were aware of such decisions.

Red5 was considered because they knew about him in the team, but from the bad side. As a result, they did not even test it.

Erlyvideo. Domestic development. Quite popular, the developers spoke at conferences. But they were not interested in cooperation at all. They said: download, understand. We wanted to start everything as quickly as possible, so we decided not to get involved. Left for later, if there is nothing better.

Wowza at that time was used in a friendly project and had the opportunity to ask. And we took her to the tests.

Pros: she really can do a lot of things.

Minuses:

Absolutely cons:

Another domestic development. Was much more friendly. All the features we needed were either ready or planned for the near future. Written in C ++ and theoretically could run even on the Raspberry Pi, i.e. in terms of consumption, it was a cut above java. They also had a library for implementing the player on mobile devices (iphone, android).

How does everything look?

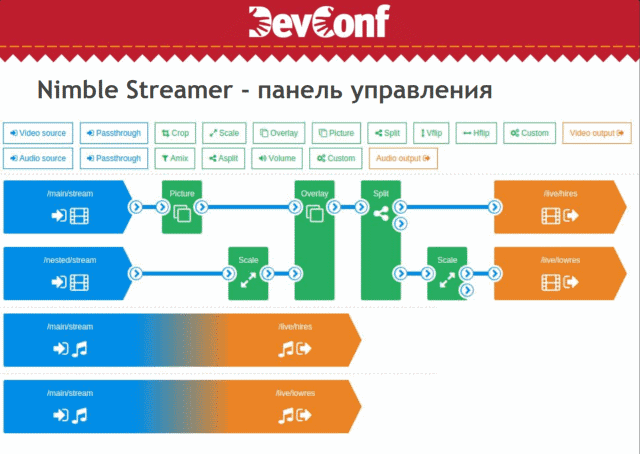

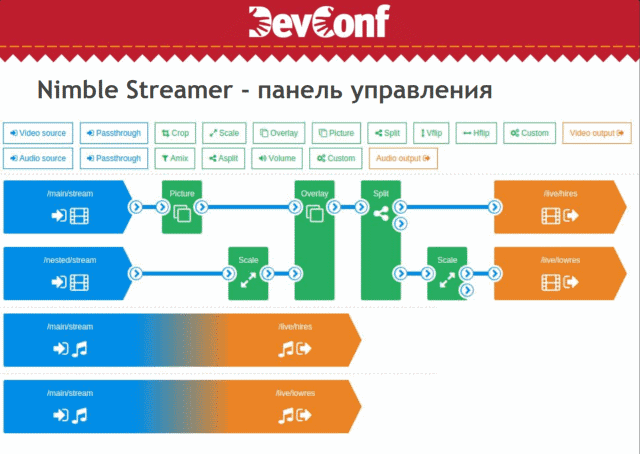

Or customize transcoding and signal processing. Directly with the mouse, you can drag and drop components and set everything visually. Not restarting.

So, the pros:

Minuses:

When we stream into HLS, the player accesses the manifest file with a list of qualities via HTTP. The peculiarity is that Nimble generates this file on the fly, based on what request it came to. If they came via https, then it gives https links. If http, then http. With us he stood not directly for the viewers, but for nginx and the problem is that the viewer comes via https, but he gives the http link and the player cannot play. The only solution is a sub-filter at the nginx level, which changes the addresses of links. Crutch

The second disadvantage is that to control through the API, requests are sent not to the service itself, but to a third-party Nimble service. At the same time, access to it goes via whitelist IP without subnets, and we wanted to go there from about 128 IP subnets. The form in the panel allows you to enter one IP. I had to make a proxy for this API. God knows what a problem, but it is.

There is also a problem with the Nimble asynchronous API. The binary server is synchronized with the API on a schedule. Those. If we add a new streamer, it creates a stream, the settings for quality, but they will be updated only after 30 seconds, let's say.

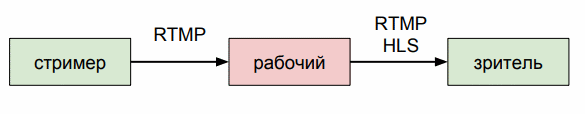

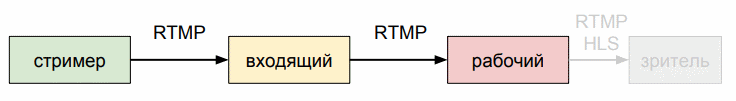

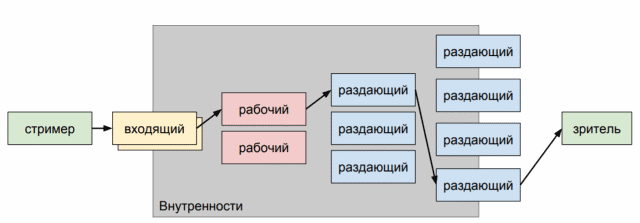

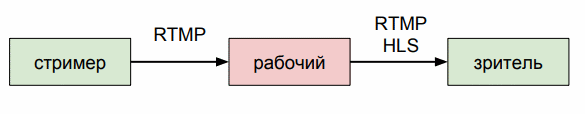

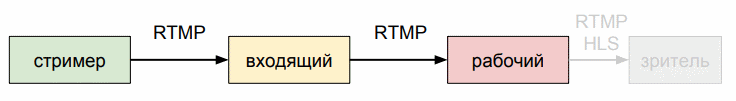

Streamer gives us an RTMP stream. And we have to give it to the viewer in RTMP and HLS. We delivered incoming traffic machines that route it to a specific working machine.

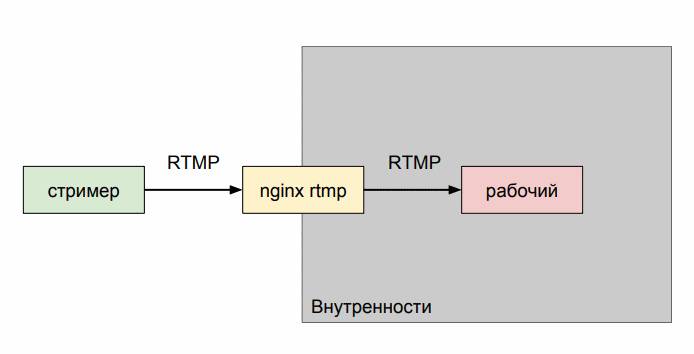

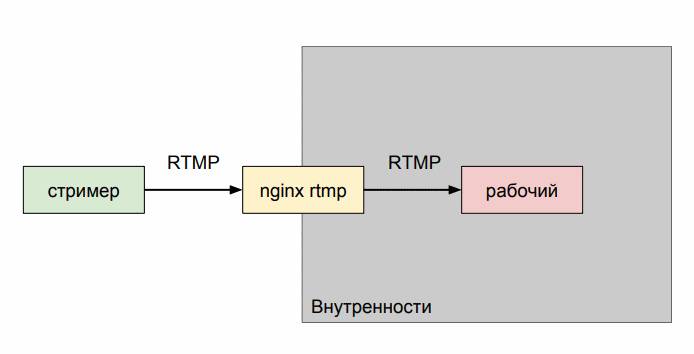

We did this so that we could perform operations with these servers, such as updating software or restarting. We remembered that nginx has an rtmp module that allows you to route rtmp traffic. And we set it as an incoming node.

Thus, we have closed our entire kitchen from streamers. The traffic goes to nginx, and then he will proxy him where necessary. At the level of the module itself, it is possible to rewrite (rewrite) the rtmp-link and redirect the stream there. This is an example from the documentation (no forums and xml!):

He goes to the address on_publish, he rewrites the address to a new one and the stream goes to this address. Several incoming servers sit on the same IP and at the balancer level traffic is distributed across them.

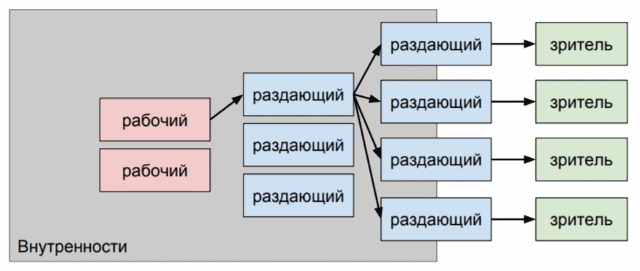

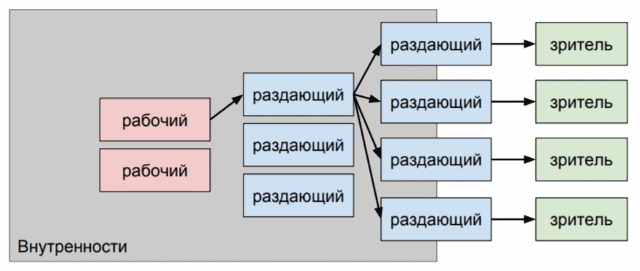

With the distribution of the same. We wanted to hide the insides from the viewers. So that viewers do not go directly to the machine that processes the video. By analogy with the incoming, we have distribution servers. Nginx is also used there. RTMP uses the same rtmp module. And for HLS, proxy_cache with tmpfs is used to store m3u8 and HLS fragments.

In the summer of 2016, International came to us (Dota 2 tournament). And we realized that our scheme ... is bad :)

We had several distribution cars and the audience came to them more or less evenly. It turned out that the same traffic went from the working machine to many distributors and we quickly ran into the outgoing network on the working machine. For a start, we just made an extra layer of caching servers.

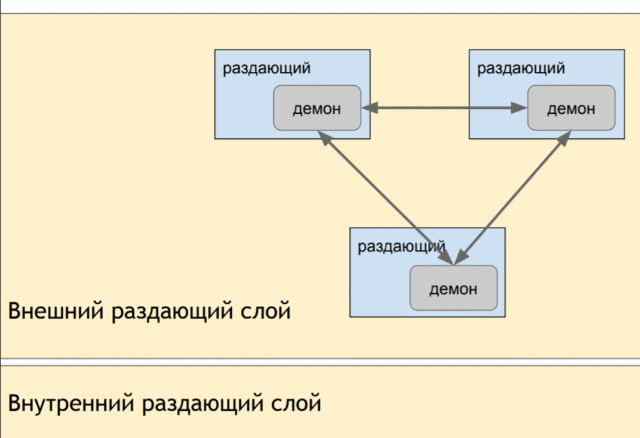

They did it in emergency mode. Live is coming. The network is not enough. Just added machines that reduced outbound traffic from working machines. It was a semi-solution, but at least we began to pull the broadcast dots. The second solution is the consistent delivery of the machines. We do not just give stream to a randomly distributing server, but try to give it from one machine while it copes.

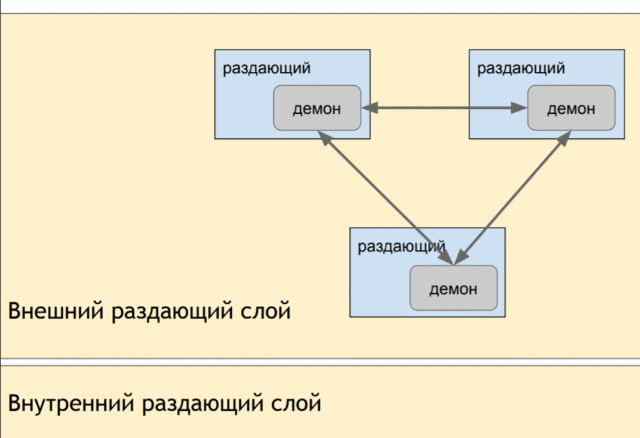

This made it possible to unload the working machines and distributing servers. In order to direct the user to the right machines, we set up a daemon for each distributing server, which polls all the machines of its layer. If this machine is currently overloaded, but the daemon says to nginx: now redirect traffic there.

For the ability to redirect the user, we made a so-called rtmp-redirect. The link leads to https. If the machine is not loaded, then it will redirect to rtmp. Otherwise, on another https. And the player knows when he can play, and when he has to redirect.

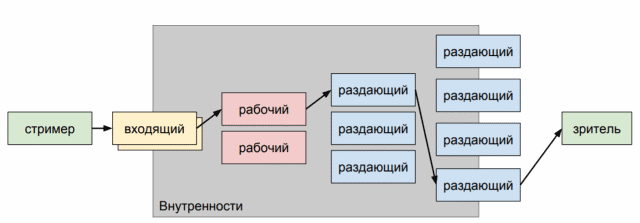

The final scheme came out like this. Streamer streamer on one of the incoming machines, which are the same for him, because they are behind the balancer on the same IP. The incoming server selects a working machine that stores and processes the stream, and also shows it to viewers through two layers of distribution servers.

In this case, not all the working machines we have on Nimble. Where we do not need transcoding, we use the same nginx with an rtmp module.

At the moment, about 200 thousand streams start every day (at the peak of 480 thousand). About 9-14 million viewers every day (at the peak of 22 million). Each stream is recorded, transcoded and available as video.

In the near future (which has probably already arrived, the report last year’s report) it is planned to expand to a million viewers, 3 Tb / s. Fully switch to SSD, because the working machines very quickly run into the disk. Probably replace Nimble and nginx with your bike, because there are still disadvantages that I did not mention.

How does attending a conference differ from viewing / reading a report? You can come to Alexey (and not only to him!) At the conference and find out the specific details that interest you. Talk, share experiences. As a rule, reports only set the direction for interesting conversations.

Come listen to the reports and chat. Habr readers registration discount .

In 2015, we used a third-party solution. We embedded its player on the site, the way you embed a YouTube video, and it worked. It was not perfect, but at the time it suited us in terms of the number of broadcasts we could run through it, as well as the quality and delay it gave. But we outgrew it pretty quickly.

The first reason is delay. When viewers ask questions in the chat and the broadcast lags by 40 seconds, in most cases that is unacceptable. Most of the delay arose in transmitting the signal between the streamer's computer and the viewer's (there is a useful Habr post on this topic). The main protocols for streaming video are RTMP, RTSP, HLS and DASH. The first two work without repeated requests: we connect to the server and it simply streams the data. The delay is minimal, possibly under a second, which is good.

HLS and DASH are HTTP-based protocols that make a new request for each piece of data, and this creates problems. As soon as we start playing the first fragment, we have to request the second one, so that by the time the first one ends, the second is already downloaded and parsed. This is necessary for continuous playback. The minimum delay for these protocols is therefore two fragments, and one fragment is a few seconds long. So these formats cannot deliver an acceptably low delay.
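The two-fragment floor is easy to check with arithmetic. The sketch below is illustrative only: the function name and the sample fragment durations are assumptions, not figures from the talk.

```python
def min_segmented_delay(fragment_seconds: float, buffered_fragments: int = 2) -> float:
    """Lower bound on delay for a fragment-based protocol such as HLS or DASH.

    While one fragment is playing, the next one must already be downloading,
    so at least two fragments separate the viewer from the live moment.
    """
    if fragment_seconds <= 0 or buffered_fragments < 1:
        raise ValueError("duration and fragment count must be positive")
    return fragment_seconds * buffered_fragments


# Hypothetical fragment lengths, in seconds.
for seconds in (2.0, 4.0, 6.0):
    delay = min_segmented_delay(seconds)
    print(f"{seconds:.0f} s fragments: at least {delay:.0f} s of delay")
```

This bound ignores encoding, network and player buffering, so real delays are higher.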

We have two streaming scenarios. The first is through our own applications, where we fully control the resolution, codecs and so on, and can pass the signal to viewers without processing. The second is when we let people stream with any software they like. In that case, of course, we have no control over codecs, resolution or quality. We accept this signal as is and still have to deliver it to the audience in good quality. For example, if a gamer streams in 4K and a viewer tries to watch on a phone over 3G and sees nothing, we are the ones to blame. So a third-party signal has to be processed on our side and delivered to the viewer at a suitable resolution.

Based on the above, we settled on these protocols:

- For delivery without processing: RTMP, to keep the delay minimal.

- For processed streams, and as a fallback: HLS, since processing and transcoding already add delay anyway.

At the time, we knew of the following solutions.

Red5

We considered Red5 because people on the team knew it, though only from its bad side. As a result, we did not even test it.

Erlyvideo

A domestic (Russian) product. It is quite popular, and its developers spoke at conferences. But they were not interested in cooperating at all. They said: download it and figure it out yourselves. We wanted to launch as quickly as possible, so we decided not to get involved and left it for later, in case nothing better turned up.

Wowza

At the time, Wowza was used in a friendly project, so we had someone to ask. We took it for testing.

Pros: it really can do a lot.

Cons:

- "Forum-oriented" configuration. It has documentation, but to find anything you have to google, and all the Google results lead to forums. Every solution we found, we found on the forums.

- XML is everywhere. We even had to enter pieces of XML in the browser interface.

- To get a callback for things as simple as "the user started broadcasting", "the user finished" or "authorization check", you have to write a Wowza module in Java.

Critical cons:

- On the test machine (16 GB RAM + 4 GB swap) we ran 4-5 broadcasts and watched a few of them ourselves, with no user traffic at all. Wowza took up all the memory and the machine hung. We had to restart it every day.

- Sometimes Wowza corrupted streams when streamers reconnected: it wrote the recording to disk but then could not play it back itself. We wrote to support, but they did not help. This was probably the reason we rejected Wowza, because we could have lived with the rest.

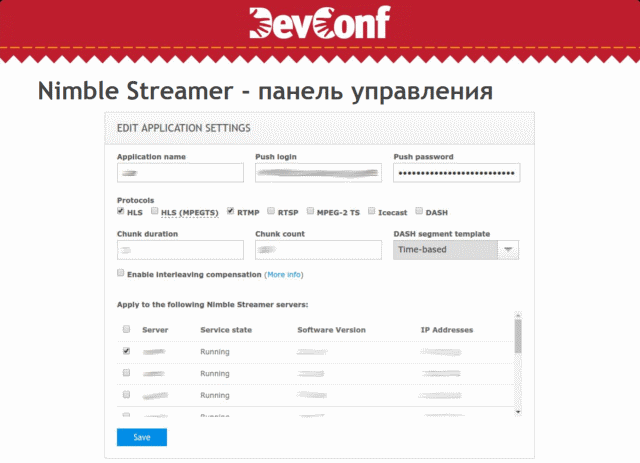

Nimble Streamer

Another domestic product, and a much friendlier one. All the features we needed were either ready or planned for the near future. It is written in C++ and could in theory run even on a Raspberry Pi, so in terms of resource consumption it was far ahead of Java. They also had a library for building a player on mobile devices (iPhone, Android).

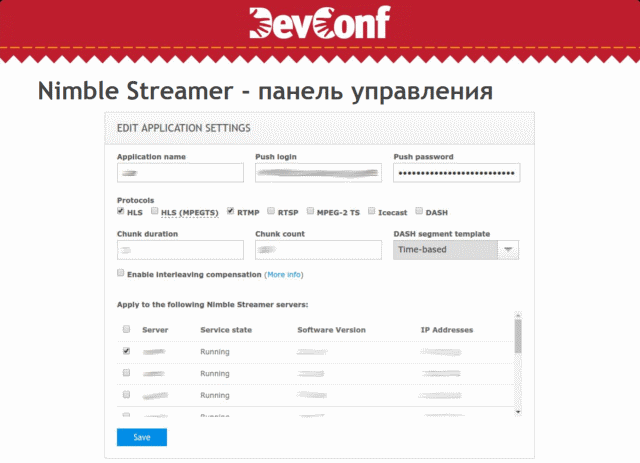

What does it consist of?

- The binary server itself. Closed source, free.

- Paid licenses for the transcoder.

- A paid external control panel, which is much more convenient than the Wowza panel. There is no need to dig through forums; everything is configured with the mouse.

You can also set up transcoding and signal processing there: drag and drop components with the mouse and configure everything visually, without a restart.

So, the pros:

- Cool panel.

- Resource consumption is incomparably better than wowza.

- Convenient, good API.

- Cheaper than Wowza overall.

Cons:

When we stream in HLS, the player requests a manifest file with the list of qualities over HTTP. The catch is that Nimble generates this file on the fly, based on the request it received. If the request came over https, it returns https links; if over http, then http links. In our setup it did not face the viewers directly but sat behind nginx. The problem is that the viewer comes over https, nginx talks to Nimble over http, Nimble returns http links, and the player cannot play them. The only solution is a sub-filter at the nginx level that rewrites the link addresses. A crutch.
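The effect of that nginx sub-filter on the manifest can be sketched in Python. This is not the nginx configuration from the talk: the host name and the manifest contents are made up, and the function only illustrates the substitution.

```python
def force_https(manifest: str, host: str) -> str:
    """Rewrite http:// links to the given host as https:// links."""
    return manifest.replace(f"http://{host}/", f"https://{host}/")


# Hypothetical master playlist, as the origin might generate it when the
# proxy in front of it connects over plain HTTP.
manifest = (
    "#EXTM3U\n"
    "#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360\n"
    "http://video.example.com/live/stream_360p/playlist.m3u8\n"
    "#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720\n"
    "http://video.example.com/live/stream_720p/playlist.m3u8\n"
)

fixed = force_https(manifest, "video.example.com")
print(fixed)
```

A plain string substitution like this is fragile (it depends on the exact host and scheme in the body), which is why the talk calls it a crutch.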

The second disadvantage is that API requests go not to the server itself but to a third-party Nimble service. Access to it is controlled by an IP whitelist that does not support subnets, and we wanted to reach it from about 128 IP subnets. The form in the panel accepts one IP at a time. We had to build a proxy for this API. Not a huge problem, but a problem nonetheless.

There is also a problem with the asynchronous Nimble API. The binary server synchronizes with the API on a schedule. That is, when we add a new streamer, the stream and its quality settings are created, but they take effect only after, say, 30 seconds.

Current architecture

The streamer gives us an RTMP stream, and we have to deliver it to the viewer in RTMP and HLS. We set up machines for incoming traffic that route it to a specific working machine.

We did this so that we could perform maintenance on the working servers, such as software updates or restarts. We remembered that nginx has an rtmp module that can route RTMP traffic, and we used it as the incoming node.

This hides all our internals from streamers. The traffic goes to nginx, which proxies it wherever necessary. The module itself can rewrite the RTMP link and redirect the stream there. The documentation has an example of this (no forums and no XML!).

nginx calls the on_publish address, the handler there replaces the stream address with a new one, and the stream goes to that address. Several incoming servers sit on the same IP, and the balancer distributes traffic across them.
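A handler behind on_publish could look roughly like the sketch below. It is not the example from the documentation and not VKontakte's code. It assumes, based on my reading of the nginx rtmp module documentation, that on_publish sends a form-encoded POST containing the stream `name`, and that a 3xx response whose `Location` starts with `rtmp://` sends the stream to that address. The worker host names and the hash-based choice of worker are hypothetical.

```python
import hashlib
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs

# Hypothetical pool of working machines.
WORKERS = ["worker1.internal", "worker2.internal", "worker3.internal"]


def worker_url(stream_name: str, workers=WORKERS) -> str:
    """Map a stream name to a stable RTMP URL on one of the workers."""
    digest = hashlib.sha256(stream_name.encode("utf-8")).digest()
    host = workers[int.from_bytes(digest[:8], "big") % len(workers)]
    return f"rtmp://{host}/live/{stream_name}"


class OnPublishHandler(BaseHTTPRequestHandler):
    """Answers the on_publish callback with a redirect to a worker."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        form = parse_qs(self.rfile.read(length).decode("utf-8"))
        name = form.get("name", [""])[0]
        if not name:
            self.send_response(403)  # refuse a publisher without a stream name
            self.end_headers()
            return
        self.send_response(302)  # redirect the stream to the chosen worker
        self.send_header("Location", worker_url(name))
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Run the callback server; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), OnPublishHandler).serve_forever()
```

Calling `serve()` starts the handler locally. A real handler would also check authorization and skip workers that are down for maintenance, which is the reason the talk gives for routing through incoming nodes.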

Distribution works the same way. We wanted to hide the internals from viewers too, so that they do not connect directly to the machine that processes the video. By analogy with the incoming servers, we have distribution servers, which also run nginx. RTMP uses the same rtmp module, and for HLS we use proxy_cache on tmpfs to store m3u8 files and HLS fragments.

In the summer of 2016, The International (a Dota 2 tournament) came to us. And we realized that our scheme... was bad :)

We had several distribution machines, and viewers were spread across them more or less evenly. As a result, the same traffic went from the working machine to many distribution servers, and we quickly hit the limit of the working machine's outgoing network. As a first step, we simply added an extra layer of caching servers.
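The bottleneck is easy to see with numbers. The figures below are invented for illustration and are not from the talk; the function name is likewise an assumption.

```python
def worker_egress_mbps(stream_mbps: float, pulling_servers: int) -> float:
    """Outgoing traffic on a working machine for one stream.

    Every downstream server that has at least one viewer of the stream
    pulls its own copy from the working machine.
    """
    return stream_mbps * pulling_servers


# Hypothetical: a 4 Mbit/s stream pulled by 40 distribution servers directly,
# versus by 4 caching servers that sit in front of the working machine.
direct = worker_egress_mbps(4.0, 40)
cached = worker_egress_mbps(4.0, 4)
print(f"direct: {direct:.0f} Mbit/s, via cache layer: {cached:.0f} Mbit/s")
```

The caching layer does not reduce total traffic to viewers; it only moves the fan-out off the working machine.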

We did it in emergency mode: the broadcast was live and the network could not keep up. We simply added machines that reduced the outgoing traffic from the working machines. It was a half-measure, but at least we could now handle the Dota broadcasts. The second solution is consistent assignment of machines. We no longer serve a stream from a random distribution server; we try to serve it from a single machine for as long as that machine copes.
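One way to get "the same machine while it copes" is rendezvous hashing with a load check. The talk does not say which scheme VKontakte used, so treat this as a sketch of the idea; the server names, the load map and the capacity value are hypothetical.

```python
import hashlib


def preferred_order(stream_id: str, servers: list[str]) -> list[str]:
    """Order servers by a per-stream hash score (rendezvous hashing)."""
    def score(server: str) -> int:
        digest = hashlib.sha256(f"{stream_id}:{server}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")
    return sorted(servers, key=score, reverse=True)


def pick_server(stream_id: str, servers: list[str], load: dict[str, int], capacity: int):
    """Return the first server in the stream's preferred order that has room."""
    for server in preferred_order(stream_id, servers):
        if load.get(server, 0) < capacity:
            return server
    return None  # every server in the layer is full


servers = ["dist1", "dist2", "dist3"]
first = pick_server("stream42", servers, load={}, capacity=1000)
# When the preferred server fills up, the stream spills over to the next one.
second = pick_server("stream42", servers, load={first: 1000}, capacity=1000)
print(first, second)
```

All viewers of a stream land on the same server until it is full, so upstream machines send one copy of the stream instead of one per distribution server.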

This took load off both the working machines and the distribution servers. To direct users to the right machines, we run a daemon on each distribution server that polls all the machines in its layer. If its own machine is currently overloaded, the daemon tells nginx to redirect traffic to another one.

To be able to redirect the user, we made a so-called rtmp-redirect. The link points to an https address. If the machine is not overloaded, it redirects to rtmp; otherwise, to another https address. The player knows when it can start playing and when it has to follow a redirect.
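The player side of rtmp-redirect can be sketched as a loop that follows redirects until it reaches an rtmp address. This is an assumption about the logic, not VKontakte's player code; the URLs are hypothetical, and the redirect lookup is injected as a function so the example runs without a network.

```python
from typing import Callable, Optional
from urllib.parse import urlparse


def resolve_stream_url(
    url: str,
    get_redirect: Callable[[str], Optional[str]],
    max_hops: int = 5,
) -> str:
    """Follow https redirects until an rtmp:// URL is reached."""
    for _ in range(max_hops):
        if urlparse(url).scheme == "rtmp":
            return url  # the machine accepted us: start playing
        target = get_redirect(url)
        if target is None:
            raise RuntimeError(f"no redirect returned for {url}")
        url = target  # overloaded machine sent us elsewhere
    if urlparse(url).scheme == "rtmp":
        return url
    raise RuntimeError("too many redirects")


# Hypothetical redirect table: dist1 is overloaded and points to dist2.
redirects = {
    "https://dist1.example.com/s/stream42": "https://dist2.example.com/s/stream42",
    "https://dist2.example.com/s/stream42": "rtmp://dist2.example.com/live/stream42",
}
final_url = resolve_stream_url("https://dist1.example.com/s/stream42", redirects.get)
print(final_url)
```

In a real player, `get_redirect` would issue an HTTPS request and read the `Location` header of the response.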

The final scheme looks like this. The streamer streams to one of the incoming machines, which all look the same to the streamer because they sit behind a balancer on a single IP. The incoming server selects a working machine, which stores and processes the stream and serves it to viewers through two layers of distribution servers.

Not all of our working machines run Nimble, though. Where we do not need transcoding, we use the same nginx with the rtmp module.

What's next?

At the moment, about 200 thousand streams start every day (480 thousand at peak), with about 9-14 million viewers a day (22 million at peak). Each stream is recorded, transcoded and made available as a video.

In the near future (which has probably already arrived, since this talk is from last year) we plan to scale to a million viewers and 3 Tb/s, and to switch fully to SSDs, because the working machines very quickly become disk-bound. We will probably replace Nimble and nginx with a homegrown solution, because there are other disadvantages that I did not mention.

How does attending a conference differ from watching or reading a talk? At the conference you can walk up to Alexey (and not only to him!) and ask about the specific details that interest you, talk, and share experience. As a rule, talks only set the direction for interesting conversations.

Come listen to the talks and chat. Habr readers get a registration discount.

Source: https://habr.com/ru/post/354074/