Next generation video: introducing AV1

AV1 is a new general-purpose video codec developed by the Alliance for Open Media. The Alliance took Google's VPX codec, Cisco's Thor and Mozilla / Xiph.Org's Daala as its basis. AV1 outperforms VP9 and HEVC, which makes it a codec not for tomorrow but for the day after tomorrow. The AV1 format is royalty-free and will always remain so, under a free and open source software license.

Triple platform

Those who have followed Daala's development know that after the Alliance for Open Media (AOM) was formed, Xiph and Mozilla offered our Daala codec as one of the bases for the new standard. Google contributed its VP9 codec, and Cisco contributed Thor. The idea was to build a new codec that drew on all three. Since then, I have not published any demos of new technology in Daala or AV1; for a long time we knew little about what the final codec would look like.

About two years ago, AOM voted to base the fundamental structure of the new codec on VP9 rather than Daala or Thor. Alliance member companies wanted a usable codec free of royalties and licensing as soon as possible, so they chose VP9 as the least risky option. I agree with that choice. Although Daala was put forward as a candidate, I still think that its lapping approach to eliminating block artifacts and its frequency-domain techniques were not then (and are not yet) mature enough for real deployment. Daala still had unresolved technical issues, and starting from VP9 sidestepped most of them.

Because VP9 was taken as the basis, AV1 (AOM Video Codec 1) will be a largely familiar codec built on the traditional block-transform coding design. Of course, it also includes some very interesting new things, several of them taken from Daala! Now that we are rapidly approaching approval of the final specification, it is time to present the long-awaited technology demos in the context of AV1.


A new look at Chroma from Luma prediction (CfL)

Chroma from Luma prediction (CfL for short) is one of the new prediction techniques adopted for AV1. As the name suggests, it predicts the colors in an image (chroma) from the brightness values (luma). The luma values are coded and decoded first, and CfL then makes an educated guess at the color. If the guess is good, less color information has to be coded, which saves space.

CfL in AV1 is not actually a brand-new technique. The foundational research paper on CfL was published in 2009, and LG and Samsung jointly proposed the first CfL implementation, called LM Mode, which was rejected during the design of HEVC. You may remember that I wrote about a particularly advanced version of CfL used in the Daala codec. Cisco's Thor codec also uses a CfL technique similar to LM Mode, and HEVC eventually added an improved version, called Cross-Channel Prediction (CCP), through the HEVC Range Extension (RExt).

| | LM Mode | Thor CfL | Daala CfL | HEVC CCP | AV1 CfL |
|---|---|---|---|---|---|
| Prediction domain | spatial | spatial | frequency | spatial | spatial |
| Coding | none | none | sign bit | index + signs | joint sign + index |
| Activation mechanism | LM_MODE | threshold | signal | binary flag | CFL_PRED (uv mode only) |
| Requires PVQ | no | no | yes | no | no |
| Decoder modeling? | yes | yes | no | no | no |

LM Mode and Thor are similar in that the encoder and decoder both run an identical prediction model in parallel and do not need to code any parameters. Unfortunately, this parallel / implicit model reduces the accuracy of the fit and complicates the decoder.

Unlike the others, CfL in Daala operates in the frequency domain. It signals only an activation bit and a sign bit; the remaining parameter information is already implicitly coded through PVQ.

The final AV1 CfL implementation builds on the Daala implementation, borrows modeling ideas from Thor, and improves on both by incorporating the results of additional research. It avoids any increase in decoder complexity, implements a model search that also reduces encoder complexity compared with its predecessors, and notably improves the accuracy and fit of the coded model.

The need for better intraframe prediction

At a fundamental level, compression is the art of prediction. Until the latest generation of codecs, video compression relied mainly on inter-frame prediction, that is, coding a frame as a set of changes relative to other frames. The frames that inter-frame prediction draws on are called reference frames. Over the past few decades, inter-frame prediction has become an incredibly powerful tool.

Despite the power of inter-frame prediction, we still need occasional standalone keyframes. By definition, keyframes do not rely on information from any other frame; as a result, they can only use intra-frame prediction, which works entirely within the frame. Because keyframes use only intra-frame prediction, they are often also called I-frames (intra frames). Keyframes are what make seeking in a video possible; without them, we would always have to play a video from the very beginning *.

A histogram of the size in bits of the first sixty frames of a test video, starting with a keyframe (I-frame). In this clip, the keyframe is 20–30 times larger than the inter frames that follow it. In slow-motion or highly static footage, a keyframe can be hundreds of times larger than an inter frame.

Keyframes (I-frames) are extremely large compared to inter frames, so they are used as sparingly as possible and spaced widely apart. Even so, as inter frames keep getting smaller, keyframes take up a growing share of the bitstream. As a result, video codec research has focused on finding new, more powerful forms of intra-frame prediction to shrink keyframes. And despite their name, inter frames can also benefit from intra-frame prediction.

Improving intra-frame prediction is a double win!

Chroma from Luma prediction works solely from the luma blocks within a frame, so it is an intra-frame prediction technique.

Energy is power †

Energy correlation is information

Why do we think color can be predicted from brightness?

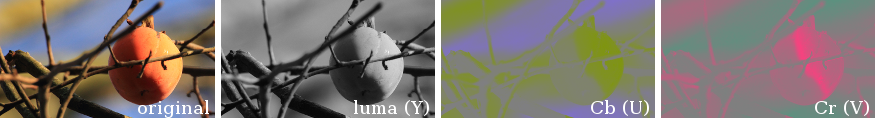

Most video representations reduce the correlation between channels by using a YUV-like color space. Y is the luma channel, a grayscale version of the original video signal, formed by adding weighted versions of the original red, green and blue signals. The U and V chroma channels subtract the luma signal from blue and red, respectively. The YUV model is simple and substantially reduces redundant coding across channels.

Decomposition of an image (leftmost) into the YUV model or, more precisely, the BT.601 Y'CbCr color space. The second image from the left shows the luma channel, and the two on the right show the chroma channels. YUV has less inter-channel redundancy than RGB, but features of the original image are still clearly visible in all three channels after the decomposition; in all three, the outlines of objects are in the same places.
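As a rough illustration of the decomposition described above, the following sketch converts a single RGB pixel to Y'CbCr using the BT.601 luma weights. The function name and the full-range 8-bit convention (chroma centered on 128, no rounding or clipping) are assumptions made here for illustration; real pipelines usually also handle limited range, rounding and clipping.

```python
def rgb_to_ycbcr_bt601(r, g, b):
    """Convert one full-range 8-bit RGB pixel to full-range BT.601 Y'CbCr.

    Illustrative sketch only: no rounding, no clipping, no limited range.
    """
    # Luma: weighted sum of red, green and blue (BT.601 weights).
    y = 0.299 * r + 0.587 * g + 0.114 * b
    # Chroma: scaled blue-minus-luma and red-minus-luma differences,
    # offset by 128 so that "no color" sits in the middle of the range.
    cb = 128.0 + (b - y) / 1.772
    cr = 128.0 + (r - y) / 1.402
    return y, cb, cr


# A neutral gray pixel carries no color: both chroma values sit at 128.
gray = rgb_to_ycbcr_bt601(128, 128, 128)
# A pure blue pixel pushes Cb above 128 and Cr below 128.
blue = rgb_to_ycbcr_bt601(0, 0, 255)
```

Note that the luma term still appears inside both chroma differences, which is one way to see why some luma-chroma correlation survives the conversion.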

And yet, if you look at a frame decomposed into its YUV channels, it is obvious that the edges in chroma and luma still fall in the same places in the frame. Some correlation remains that can be exploited to reduce the bitrate. Let's try using some of the luma data to predict chroma.

Getting out the colored pencils

Chroma from Luma prediction is, in essence, the process of coloring a monochrome image based on educated guesses. It is a bit like taking an old black-and-white photo and some colored pencils and starting to color it in. Of course, CfL predictions have to be accurate, not pure guesses.

The work is facilitated by the fact that modern video codecs break an image into a hierarchy of smaller blocks, performing the main part of the coding independently on each block.

The AV1 encoder splits the frame into individual prediction blocks to maximize coding accuracy and, just as importantly, to simplify analysis and let the prediction adapt as the image is processed.

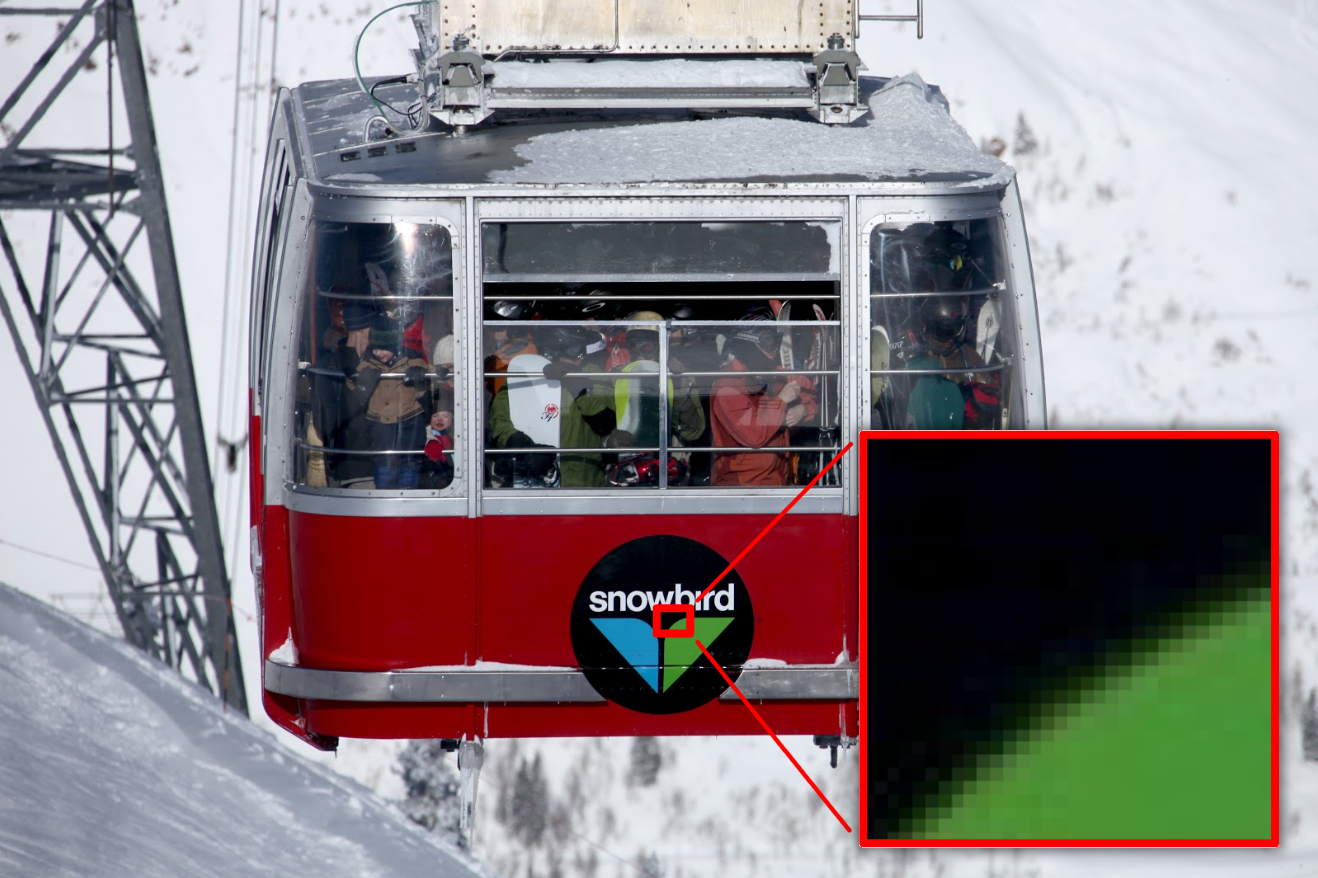

A model that predicts color for the entire image at once would be unwieldy, complex and error-prone, but we do not need to predict the entire image. Because the encoder works on small pieces one at a time, we only need to look at correlations within small areas, and from those we can predict chroma from luma with a high degree of accuracy using a fairly simple model. Consider the small portion of the image below, outlined in red:

A single block in a single video frame illustrates that localizing chroma prediction in small blocks is an effective means to simplify prediction.

Within the small range of this example, a good “rule” for coloring the image is simple: brighter areas are green, and saturation falls off with brightness all the way down to black. Most blocks have similarly simple coloring rules. We could make the model as elaborate as we like, but the simplest method already works very well, so let's start simple and fit the data to a simple αx + β line:

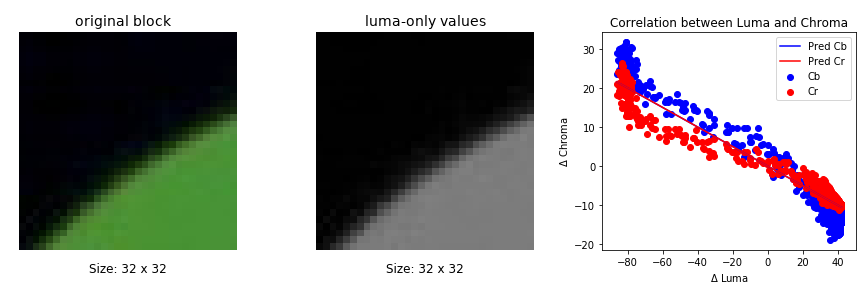

Cb and Cr (U and V) values plotted against luma (Y) for the pixels in the block selected in the previous image. The quantized and coded linear model is fitted and overlaid as a line on the scatterplot. Note that the fit consists of two lines; in this example, they overlap.

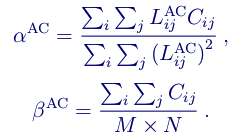

So we have two lines: one for the U channel (the blue difference, Cb) and one for the V channel (the red difference, Cr). In other words, if L denotes the reconstructed luma values, we compute the chroma values as follows:

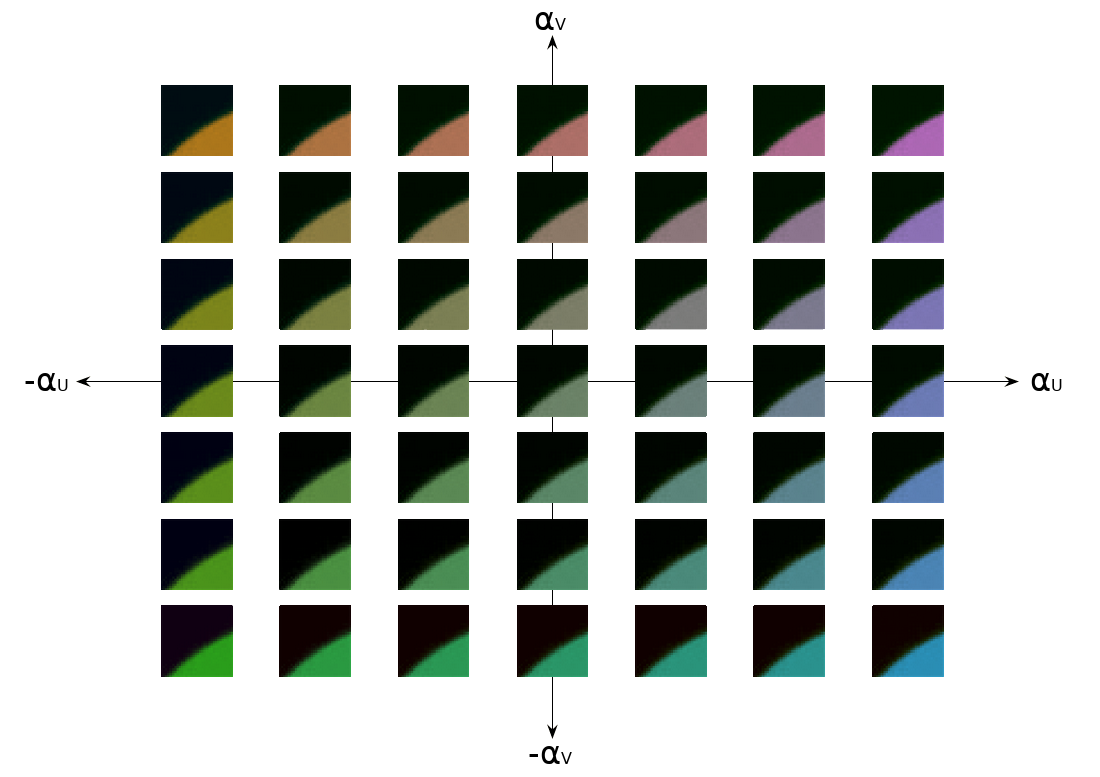

What do these parameters look like? Each α selects a particular hue (and anti-hue) from a two-dimensional color plane, which is then scaled and applied according to the luma:

The α parameters in CfL select the hue used to color a block from a two-dimensional color plane.

The β values shift the zero-crossing point of the color scale; in other words, they are the knobs that set the minimum and maximum saturation levels of the coloring. Note that β also allows a negative color to be applied, which is the opposite of the hue selected by α.

Now our task is to choose the right α and β values and then encode them. Here is one example of a straightforward approach to CfL prediction:
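For reference, a textbook least-squares fit of one chroma channel against reconstructed luma can be written as below. The notation is assumed here for illustration: $L_i$ are the reconstructed luma values, $C_i$ the corresponding chroma values (Cb or Cr), and $N$ the number of pixels used in the fit.

$$
\alpha = \frac{N\sum_i L_i C_i - \sum_i L_i \sum_i C_i}{N\sum_i L_i^2 - \left(\sum_i L_i\right)^2},
\qquad
\beta = \frac{\sum_i C_i - \alpha \sum_i L_i}{N}
$$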

It looks worse than it actually is. In plain language: perform a least-squares fit of the chroma values against the reconstructed luma values to find α, then use α to solve for the chroma offset β. At least, that is one possible way to do the fit. It is often used in CfL implementations (such as LM Mode and Thor) that signal neither α nor β. In that case, the fit is performed on the values of neighboring pixels that have already been fully decoded.
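The implicit approach described above can be sketched in a few lines. This is a minimal floating-point illustration, not any codec's actual code: the function names and the sample values are hypothetical, and real implementations work in fixed-point integer arithmetic.

```python
def fit_alpha_beta(luma, chroma):
    """Least-squares fit of chroma ~= alpha * luma + beta over paired samples."""
    n = len(luma)
    sum_l = sum(luma)
    sum_c = sum(chroma)
    sum_ll = sum(l * l for l in luma)
    sum_lc = sum(l * c for l, c in zip(luma, chroma))
    denom = n * sum_ll - sum_l * sum_l
    if denom == 0:
        # Flat luma carries no slope information: fall back to a DC-only model.
        return 0.0, sum_c / n
    alpha = (n * sum_lc - sum_l * sum_c) / denom
    beta = (sum_c - alpha * sum_l) / n
    return alpha, beta


def predict_chroma(luma_block, alpha, beta):
    """Apply the linear model to every reconstructed luma pixel of a block."""
    return [[alpha * l + beta for l in row] for row in luma_block]


# Hypothetical, already-decoded neighboring pixels (luma and one chroma channel).
neighbor_luma = [40, 60, 80, 100]
neighbor_cb = [108, 98, 88, 78]

alpha, beta = fit_alpha_beta(neighbor_luma, neighbor_cb)
prediction = predict_chroma([[50, 70]], alpha, beta)
```

Because both encoder and decoder can run this fit on the same decoded neighbors, no parameters need to be transmitted; the price is that the decoder has to do the fit as well.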

CfL in Daala

Daala performs all prediction in the frequency domain, including CfL, supplying the prediction vector as one of the inputs to PVQ coding. PVQ is gain / shape coding. The luma PVQ vector encodes the location of edges and contours in luma, and we simply reuse it as a predictor for edges and contours in chroma.

Daala does not need to encode an α value, as it is subsumed into the PVQ gain (except for the sign). Nor does Daala need to encode β: because Daala applies CfL only to the AC chroma coefficients, β is always zero. This gives us an insight: conceptually, β is just the DC offset of the chroma values.

In fact, since Daala uses PVQ to code transform blocks, it gets CfL at little or no cost, both in bits and in additional computation in the encoder and decoder.

CfL in AV1

AV1 did not adopt PVQ, so the cost of CfL is about the same whether it is computed in the pixel domain or the frequency domain; there is no longer a special bonus for working in the frequency domain. In addition, Time-Frequency resolution switching (TF), which Daala uses to glue together the smallest luma blocks into blocks large enough to match subsampled chroma, currently works only with the DCT and Walsh-Hadamard transforms. Since AV1 also uses the discrete sine transform and a pixel-domain identity transform, we cannot easily perform AV1 CfL in the frequency domain, at least when using subsampled chroma.

But, unlike Daala, AV1 does not need to perform CfL in the frequency domain. So for AV1, we move CfL from the frequency domain back to the pixel domain. This is one of the great features of CfL: the basic equations work the same way in both domains.
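The claim that the equations carry over between domains follows from the transform being linear. The sketch below checks this numerically with a naive, unnormalized one-dimensional DCT-II; the 1-D transform, the parameter values and the sample values are all simplifying assumptions for illustration (codecs use 2-D, carefully scaled integer transforms).

```python
import math


def dct_1d(x):
    """Naive, unnormalized DCT-II of a list of samples."""
    n = len(x)
    return [
        sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        for k in range(n)
    ]


alpha, beta = -0.5, 128.0          # hypothetical model parameters
luma = [40.0, 60.0, 80.0, 100.0]   # hypothetical reconstructed luma samples

# Pixel domain: apply the linear model first, then transform.
pixel_pred = dct_1d([alpha * l + beta for l in luma])
# Frequency domain: transform luma first, then scale the coefficients.
freq_pred = [alpha * c for c in dct_1d(luma)]

# The AC coefficients (k >= 1) agree; beta only shows up in the DC term (k = 0).
ac_match = all(abs(p - f) < 1e-9 for p, f in zip(pixel_pred[1:], freq_pred[1:]))
# For this unnormalized DCT, the DC difference is len(luma) * beta.
dc_gap = pixel_pred[0] - freq_pred[0]
```

Scaling by α commutes with the transform, and the constant β lands entirely in the DC coefficient, which is consistent with β being zero when only AC coefficients are predicted.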

CfL in AV1 must keep reconstruction complexity to a minimum. For this reason, we explicitly code α, so that the decoder does not have to perform a costly least-squares fit. The bit cost of explicitly coding α is more than repaid by the extra accuracy gained from computing it with the chroma pixels of the current block instead of neighboring reconstructed chroma pixels.

Next, we reduce the complexity of the fit on the encoder side. Daala operates in the frequency domain, so there we perform CfL only with the luma AC coefficients. AV1 performs the CfL fit in the pixel domain, but we can subtract the average (that is, the already computed DC value) from each pixel, which gives zero-mean pixel values equivalent to the AC coefficient contribution in Daala. Zero-mean luma values cancel a substantial part of the least-squares equation, significantly reducing the cost of the computation.
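A sketch of why zero-mean luma helps, in notation assumed here for illustration: $L^{AC}_i$ is the luma of pixel $i$ after subtracting the block average, $C_i$ is the chroma of pixel $i$, and $N$ is the number of pixels. Setting the derivatives of the squared error to zero and using $\sum_i L^{AC}_i = 0$ removes every cross term:

$$
\min_{\alpha,\beta}\sum_i\left(C_i-\alpha L^{AC}_i-\beta\right)^2,
\quad \sum_i L^{AC}_i = 0
\;\Longrightarrow\;
\alpha=\frac{\sum_i L^{AC}_i C_i}{\sum_i \left(L^{AC}_i\right)^2},
\qquad
\beta=\frac{1}{N}\sum_i C_i
$$

The slope needs only two sums, and the offset reduces to the average chroma value of the block, that is, the chroma DC.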

We can optimize even further. After all, β is just the chroma DC offset, and the encoder and decoder already perform DC prediction for the chroma planes, because other prediction modes need it. Of course, a predicted DC value will not be as accurate as an explicitly coded DC / β value, but testing showed that it is still quite good:

Error analysis of the default DC predictor, computed from neighboring pixels, versus an explicitly coded β value computed from the pixels in the current block.

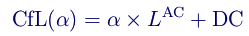

As a result, we simply use the already existing chroma DC prediction in place of β. Not only does the need to code β disappear, but neither the encoder nor the decoder has to compute β explicitly. Thus, the final CfL prediction is simply the coded α multiplied by the zero-mean reconstructed luma, plus the chroma DC prediction.
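A minimal sketch of that final predictor follows, assuming floating-point arithmetic and a luma block that already matches the chroma block's dimensions. The function name and the sample values are hypothetical; an actual AV1 implementation uses fixed-point integers and subsampled luma where chroma is subsampled.

```python
def cfl_predict(luma_block, alpha, dc_pred):
    """Predict a chroma block as alpha * (zero-mean luma) + DC prediction."""
    pixels = [l for row in luma_block for l in row]
    luma_avg = sum(pixels) / len(pixels)
    # Subtracting the average leaves only the "AC" part of the luma block.
    return [[alpha * (l - luma_avg) + dc_pred for l in row] for row in luma_block]


# Hypothetical reconstructed luma block, coded alpha and chroma DC prediction.
luma_block = [[40, 60], [80, 100]]
predicted_cb = cfl_predict(luma_block, alpha=-0.5, dc_pred=100.0)
```

The decoder side of this is cheap: one average, one multiply and one add per pixel, with no fitting step.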

When the prediction alone is not accurate enough, we code the residual in the transform domain. And of course, when the prediction gives no benefit at all in bits, we simply do not use it.
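On the encoder side, choosing α and computing the residual can be sketched as a small search. Everything specific here is an assumption for illustration: the function name, the candidate set, and the use of plain squared error as the selection criterion (a real encoder would weigh distortion against bit cost).

```python
def choose_alpha(luma_block, chroma_block, dc_pred, candidates):
    """Pick the candidate alpha with the smallest squared prediction error.

    Returns the chosen alpha and the residual left for transform coding.
    """
    luma = [l for row in luma_block for l in row]
    chroma = [c for row in chroma_block for c in row]
    avg = sum(luma) / len(luma)
    luma_ac = [l - avg for l in luma]  # zero-mean luma

    def sse(alpha):
        return sum((c - (alpha * l + dc_pred)) ** 2 for l, c in zip(luma_ac, chroma))

    best = min(candidates, key=sse)
    residual = [c - (best * l + dc_pred) for l, c in zip(luma_ac, chroma)]
    return best, residual


# Hypothetical candidate set: multiples of 1/8 between -2 and 2.
# alpha = 0 is included, which amounts to plain DC prediction.
candidates = [k / 8 for k in range(-16, 17)]
best_alpha, residual = choose_alpha(
    [[40, 60], [80, 100]], [[115, 105], [95, 85]], 100.0, candidates
)
```

If the best candidate turns out to be no better than α = 0, CfL brings nothing for that block and the encoder can pick another prediction mode instead.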

Results

As with any prediction method, the effectiveness of CfL depends on the choice of test. AOM uses a set of standardized test suites hosted at Xiph.Org and available through the automated testing tool “Are We Compressed Yet?” (AWCY).

CfL is an intra-frame prediction technique. To get a better sense of its effectiveness on keyframes (I-frames), let us look at the subset-1 image test set:

| BD-rate (%) | PSNR | PSNR-HVS | SSIM | CIEDE2000 | PSNR Cb | PSNR Cr | MS SSIM |
|---|---|---|---|---|---|---|---|
| Average | -0.53 | -0.31 | -0.34 | -4.87 | -12.87 | -10.75 | -0.34 |

Most of these metrics are not sensitive to color. They are simply always reported, and it is nice to see that CfL does not hurt them. Of course, it should not, because more efficient chroma coding also frees up bits that can be used to represent luma better.

The metric worth looking at, though, is CIE delta-E 2000, a perceptually uniform color error metric. We see that CfL saves almost 5% in bitrate when both luma and chroma are taken into account! That is a stunning result for a single prediction technique.

CfL is also available for intra blocks inside inter frames. During AV1 development, objective-1-fast was the standard test set for evaluating metrics on motion sequences:

| BD-rate (%) | PSNR | PSNR-HVS | SSIM | CIEDE2000 | PSNR Cb | PSNR Cr | MS SSIM |
|---|---|---|---|---|---|---|---|
| Average | -0.43 | -0.42 | -0.38 | -2.41 | -5.85 | -5.51 | -0.40 |
| 1080p | -0.32 | -0.37 | -0.28 | -2.52 | -6.80 | -5.31 | -0.31 |
| 1080p-screen | -1.82 | -1.72 | -1.71 | -8.22 | -17.76 | -12.00 | -1.75 |
| 720p | -0.12 | -0.11 | -0.07 | -0.52 | -1.08 | -1.23 | -0.12 |
| 360p | -0.15 | -0.05 | -0.10 | -0.80 | -2.17 | -6.45 | -0.04 |

As expected, we still see solid gains, although the contribution of CfL is somewhat diluted by the prevalence of inter-frame prediction. Intra blocks are used mainly in keyframes (I-frames), each of these test sequences codes only a single keyframe, and intra coding is not used often in inter frames.

The obvious exception is the “1080p-screen” content, where we see a huge 8% reduction in bitrate. This makes sense: much of a screencast is fairly static, and when an area does change, it is almost always a wholesale update suited to intra coding rather than smooth motion suited to inter coding. In these screencasts intra blocks are coded more often, and so the benefits of CfL are more pronounced.

The same holds for synthetic and rendered content:

| BD-rate (%) | PSNR | PSNR-HVS | SSIM | CIEDE2000 | PSNR Cb | PSNR Cr | MS SSIM |
|---|---|---|---|---|---|---|---|
| Twitch | -1.01 | -0.93 | -0.90 | -5.74 | -15.58 | -9.96 | -0.81 |

The Twitch test suite consists entirely of video game broadcasts, and here we also see a solid reduction in bitrate.

Of course, Chroma from Luma prediction is not the only technique that AV1 will bring to mass use for the first time. In the next article, we will look at a genuinely new technique in AV1: the Constrained Directional Enhancement Filter.

Author: Monty (monty@xiph.org, cmontgomery@mozilla.com). Published on April 9, 2018.

* It is possible to “smear” a keyframe (I-frame) across other frames using a technique called rolling intra. With rolling intra, a standalone keyframe is split into separate blocks, which are scattered among the preceding inter frames. Instead of seeking to a keyframe and starting playback there, a codec that supports rolling intra seeks to the first preceding block, reads all the other required pieces of the keyframe, and starts playback once it has gathered enough information to reconstruct a complete keyframe. Rolling intra does not improve compression; it simply spreads out the bitrate spikes caused by large keyframes. It can also be used to improve error resilience.

† Technically, energy is the product of power and time. When comparing apples and oranges, it is important to express both fruits in watt-hours.

Additional resources

- Alliance for Open Media project document: CfL in AV1, Luc Trudeau, David Michael Barr
- The foundational paper on spatial-domain Chroma from Luma prediction: “Intra prediction method based on the linear relationship between the channels for YUV 4:2:0 intra coding”, Sang Heon Lee, 16th IEEE International Conference on Image Processing (ICIP), 2009
- LG's proposal to include spatial CfL prediction in HEVC: “New intra chroma prediction using inter-channel correlation”, Kim et al., 2010
- Samsung and LG's joint proposal for LM Mode in HEVC: “Chroma intra prediction by reconstructed luma samples”, Kim et al., 2011
- The CfL implementation in Daala: “Predicting Chroma from Luma with Frequency Domain Intra Prediction”, Nathan Egge, Jean-Marc Valin, March 10, 2016
- “Predicting Chroma from Luma in AV1”, Luc Trudeau, Nathan Egge, David Barr, January 17, 2018
- Daala Time-Frequency resolution switching demo page
- Daala CfL demo page
- CfL presentation from the VideoLAN Dev Days 2017 conference: “CfL in AV1”, Luc Trudeau, David Barr, September 2017
- Presentation from the Data Compression Conference: “Chroma from Luma Intra Prediction for AV1”, Luc Trudeau, Nathan Egge, David Barr, March 2018
- Standard test suites from Xiph.Org at media.xiph.org
- Automated testing system and metrics used in the development of Daala and AV1: “Are We Compressed Yet?”

Source: https://habr.com/ru/post/354044/
