Automating UI testing on PhoneGap: the case of a payment application

I do not know about you, but I feel confident in the water. Recently, however, I was taught to swim all over again by the old Spartan method: I was thrown into the water and told to survive.

But enough pretty metaphors.


Given: a PhoneGap application with an iframe that loads a third-party site; a tester with 1.5 years of experience in testing and zero years in programming.

The task: find a way to automate testing of the application's main business case, because manual testing is slow and expensive.

Solution: a lot of workarounds and regular calls to programmers for help.

However, the tests work and I learned a lot. The moral of this retrospective is not "do not repeat my mistakes" but that I worked through a strange, atypical task: in my own way, but I figured it out.

About the project

I'll start my story by introducing the project. It is the “All Payments” web service, through which people pay for utilities, telecom services and traffic fines, make loan payments and pay for purchases in some online stores. The service works with 30 thousand service operators. It is a large product with a complex history, an impressive number of integrations and its own team.

Live Typing, the company where I work as a QA specialist, developed a cross-platform mobile application for the service. The client needed an MVP to test a number of hypotheses, and going cross-platform was the only way to do that quickly and inexpensively.

Why did the task of automating UI tests arise?

As I said above, the service has a complex and interesting inner life. The web service's development team regularly changes it and rolls the changes out to the server twice a week. Our team does not take part in what happens on the backend; we only develop the application. So we do not know what we will see on the application's screen after the next release or how the changes will affect the application.

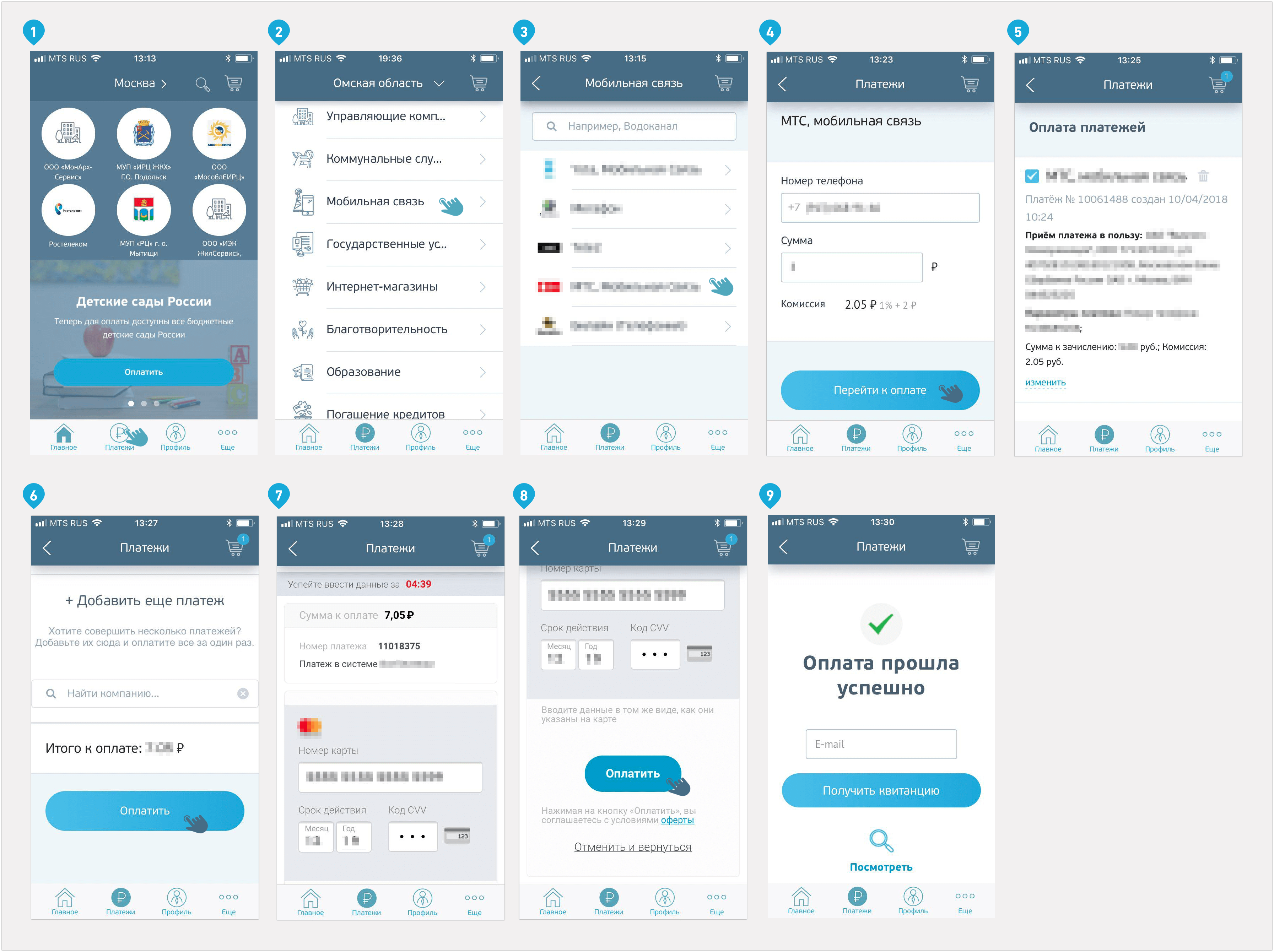

For an MVP, the most important task is to keep one or a few key product features working. In the payment service, these features were payment to a service provider, the basket, and the list of service providers. Payment matters more than the others.

Testing is needed so that changes made by the site's developers do not block the application's key business cases. A smoke test is enough: did it start? Nothing on fire? Fine. But with releases this frequent, testing the application manually would be too expensive.

A hypothesis came to mind: what if this process were automated? We allocated time and budget to automate a series of manual tests so that, in the future, testing would take two hours a week instead of six to eight.

Is it possible to automate everything?

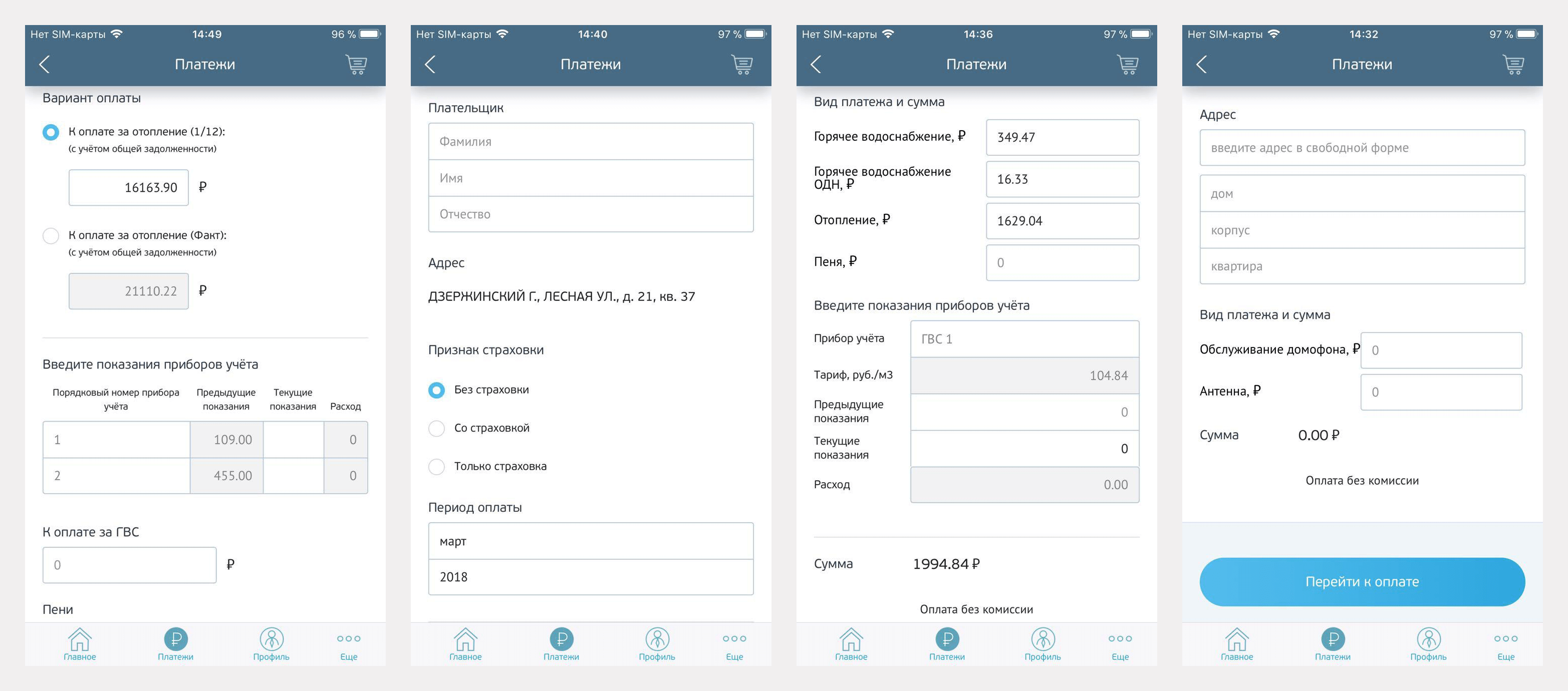

It is important to say that changes to the site affect not only the UI but also the UX. We agreed that the client's analyst would tell us in advance about planned updates to the site. They can range from moving a button to introducing a new section. Testing the latter cannot be entrusted to automation: it is a complex UX scenario that has to be explored and checked by hand, the old-fashioned way.

How we imagined the implementation

We decided to test the main functions through the application's interface, armed with the Appium framework. Appium Inspector records actions in the interface and converts them into a script; the tester runs the script and sees the test results. That is how we imagined the work at the beginning.

Here I will briefly return to the metaphor that opened this story. To automate tests, you need to be able to program, and this is where my powers, as they say, ran out. My introduction to this world took about four hours: we deployed and set up the environment, the tech lead assured me that everything was simple, showed me on one test how to write the rest, and after that did not get much involved in my work on the project. He threw me into the water with a deflated lifebuoy and saluted.

I really had no idea what would happen.

I started by compiling test cases to verify payment:

Test cases were also compiled for the basket and the list of service providers. The plan was to move on to automating other, more complex scenarios. But we abandoned that plan, because different service providers have many kinds of input forms. Each form would need its own automated test, which is slow and expensive. The client tests them himself.

Another expectation was this: since it is an autotest, the test case can be as complicated as you like. After all, the program will test everything itself, and the tester only needs to specify a sequence of actions. I invented huge, monstrous cases in which several additional checks were inserted into the payment cycle, for example: what happens if, in the middle of a payment, you go from the basket to the list of service providers, add a new one, then return, delete it and continue paying.

When I wrote such a test, I saw how huge it was and how unstable it was. It became clear that the test cases had to be simplified. A short test is easier to maintain and extend. So instead of tests with several checks, I started writing tests with one or two checks.

As for the testing software, I imagined working with Appium as follows: I perform a sequence of actions in the recorder, it records them, the framework assembles a script from the recording, I run the resulting code, and it repeats my actions in the application.

It sounded good. But that was all it did.

How the process went in reality

And here are the problems I encountered:

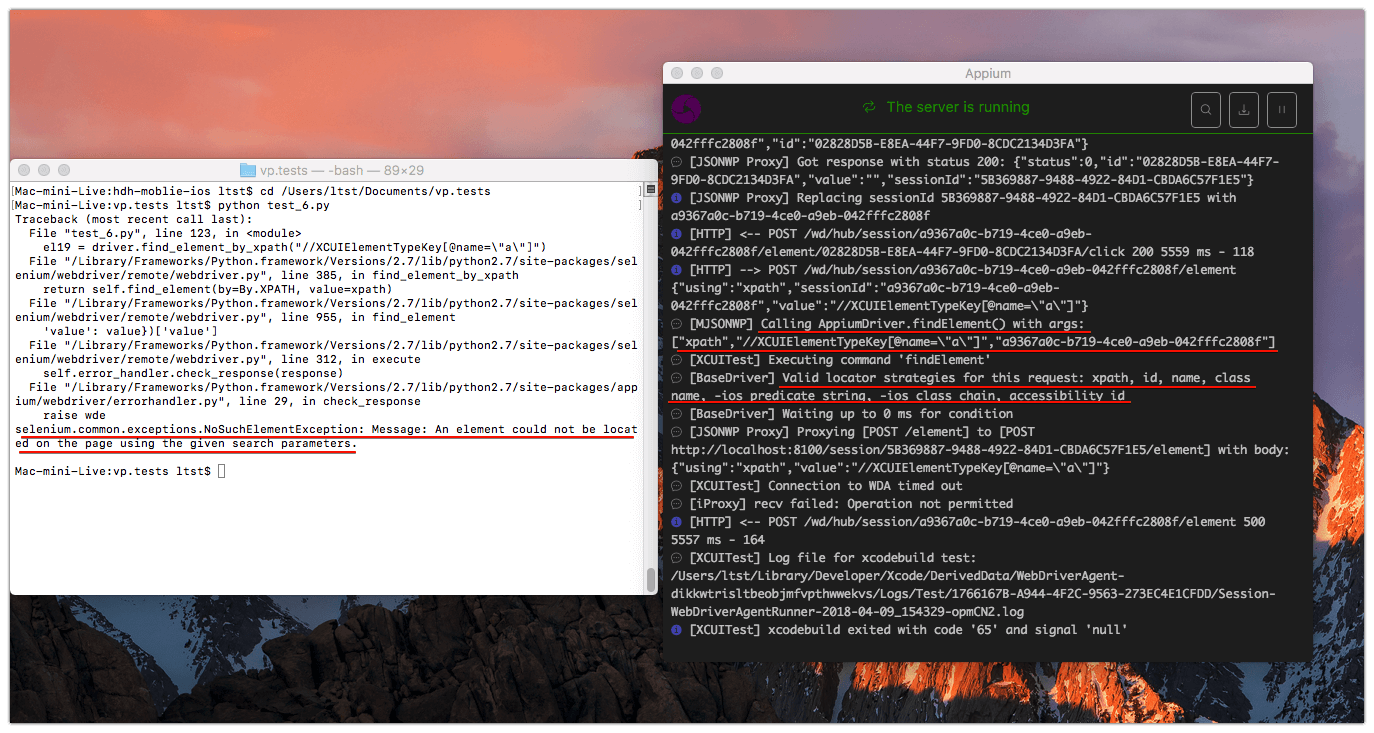

- As soon as I tried to run the script recorded by the recorder, I realized that I could not do without programming. The code the recorder produced would not work in its original form; it needed rework. For example, when an input form is being tested, the easiest approach is to substitute the data directly (phone number, personal account, first and last name, etc.). The recorder, however, records each keyboard character as a separate element, so the script enters characters one by one, switching the keyboard where necessary. In other words, it blindly copies the recorded actions. Here and later, I solved my problems either with the help of a programmer, who suggested what to do, or by spending five hours on Google. And this is the main drawback in the whole story.

- At some point, I realized that the script needed conditions. I will explain with an example. The name of the service provider is written in Cyrillic, so to enter it you need to open the keyboard on the device, switch the language from Latin to Cyrillic and type the name.

The first time, the script performs these actions and everything works. But when you run it again, it switches the keyboard from Cyrillic back to Latin, cannot find the Russian letter on the keyboard and fails. So the script needed conditions written in code, for example: “try to find a Russian letter; if you cannot, switch the keyboard”. It seems simple to someone who has been programming for a long time, but it was not simple for me at the time.

- The script does not know whether the element it needs has loaded, opened or become visible; it simply presses the buttons the recorder captured. So delays, scrolling or other extra actions have to be added to the script in places, and this again requires programming experience.

- The lack of element IDs. What are they needed for? Suppose the application has a login button. From the button's name alone, the script may not be able to tell that this is the element you want to click. Element IDs solve this problem.

Appium can find an element by name, but sometimes an element does not even have one. For example, the application has a basket, and the basket button is shown as an icon; it has no label, no name, nothing. For the script to click it, the script must somehow find it. Without a name this cannot be done, even by brute force: the script has nothing to refer to, so it cannot click the basket button. If the basket had an ID, the recorder would have seen it while recording, and the script could find the button. The solution we arrived at was a debatable one.

In native development, most elements are assigned an ID, which the recorder can address without problems.

But do not forget that our product is a cross-platform application. The main feature of such applications is that, among the native elements, there is a web part, which the recorder cannot access the way it accesses the native part. It reads elements unpredictably (text in one place, type in another), and there are no dedicated IDs, since web IDs are used for other purposes. The project was originally written with web development tools (that is, JavaScript), after which Cordova generates native code that differs between iOS and Android and assigns IDs in a way known only to itself.

So, the solution. Since I could refer to elements by name, I asked the developer to give the buttons names in transparent text. The name is there; the user does not see it, but the Appium recorder does. The script can refer to such a button and click it.

- The lack of element IDs slows down the autotests. Why? Element names on a screen can repeat (for example, two input fields have the same name but different purposes). Since the test, looking for the right element, goes through everything with a given name, it may pick the wrong element. Looking the element up by name directly does not solve the problem, because the name is not unique. So the script must compare not only the names of the elements but also their types. Because of all this, the tests run slowly.

- The project could not be run on an emulator, only on a physical device. The project uses WKWebView, the class for displaying web pages in applications, and supporting it requires localhost to be deployed on the physical device itself. Tests on an emulator would run four times faster, but that was not an option for us.

- The recorder produced tests that were unstructured and not part of a single project. If I had written this code from scratch, as programmers do, all the tests would be in one file, and each test would be described by a separate loop within one project. The recorder generates each test as a separate file, and there are no loops. As a result, I ended up with all the tests as separate pieces of code that I could not combine or give a common structure.

- Reporting on test results, or logging. It is simple: there was none. I knew about existing tools such as py.test or TestNG (depending on the programming language the tests are written in), but they are designed for a structured project, which again was not our case. So I could not plug in anything ready-made, and I had no experience at all in writing my own. In the end I settled on this: the message the terminal showed when a test failed was enough to understand which element the test had not found and why.

I checked the error manually anyway. So there were logs, in the most minimal form, but they were there. And that is good.

- The last and perhaps most painful point. When I ran into a problem, I either went to our technical director, who had initiated the idea of autotests, or simply googled the problem for five hours without understanding what was going on. The technical director's help made tasks quick and easy. But at some point he left me on my own, and pulling in developers every time is not great. I had to cope by myself.
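Two of the problems above, elements that have not loaded yet and a keyboard left in the wrong layout, come down to small pieces of conditional logic. The Python sketch below is only an illustration under stated assumptions, not the project's code: `ElementNotFound`, `wait_for`, `tap_key`, and a keyboard object with `find_key` and `switch_layout` are hypothetical names standing in for whatever the real driver provides.

```python
import time


class ElementNotFound(Exception):
    """Hypothetical error raised when a lookup finds nothing."""


def wait_for(find, timeout=10.0, interval=0.5):
    """Call find() repeatedly until it succeeds or the timeout expires.

    `find` is any zero-argument function that returns an element
    or raises ElementNotFound (an assumption of this sketch).
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            return find()
        except ElementNotFound:
            if time.monotonic() >= deadline:
                raise  # give up and let the test fail with the lookup error
            time.sleep(interval)


def tap_key(keyboard, letter):
    """Tap a letter; if it is not on the current layout, switch once and retry.

    `keyboard` is a hypothetical wrapper around the on-screen keyboard with
    find_key(letter) and switch_layout() methods.
    """
    try:
        keyboard.find_key(letter).tap()
    except ElementNotFound:
        keyboard.switch_layout()
        keyboard.find_key(letter).tap()
```

With a real Appium session, `find` would be a small function that looks up a single element, and the keyboard wrapper would hide the driver calls. Where the driver allows sending text straight into a field, that avoids the on-screen keyboard altogether.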

Results

Now that the work on the tests is finished, I look at the result and think that I would do everything differently:

- where the recorder generates scripts, I would write the tests by hand;
- where letters are looked up on the keyboard, I would insert the input data directly;
- I would get rid of brute-force searching by adding IDs;
- I would learn a framework that runs tests in a loop;
- I would set up CI so that the tests run automatically after each deployment;
- I would configure logging and send the results by email.
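To illustrate what "tests written by hand in one structured project" could look like, here is a minimal sketch using Python's standard `unittest` and `logging` modules. `FakeApp`, `PaymentSmokeTest` and `run_suite` are hypothetical names invented for this example; a real suite would drive the application through an Appium session instead of the stand-in class.

```python
import logging
import unittest

log = logging.getLogger("smoke")


class FakeApp:
    """Stand-in for the application under test (an assumption of this sketch)."""

    def __init__(self):
        self.basket = []

    def add_to_basket(self, provider):
        self.basket.append(provider)

    def pay(self):
        # Paying returns what was paid for and empties the basket.
        paid, self.basket = self.basket, []
        return paid


class PaymentSmokeTest(unittest.TestCase):
    """Short tests with one or two checks each, all in one place."""

    def setUp(self):
        # A fresh application state before every test.
        self.app = FakeApp()
        log.info("starting %s", self.id())

    def test_basket_keeps_added_provider(self):
        self.app.add_to_basket("provider-1")
        self.assertEqual(self.app.basket, ["provider-1"])

    def test_payment_empties_basket(self):
        self.app.add_to_basket("provider-1")
        self.assertEqual(self.app.pay(), ["provider-1"])
        self.assertEqual(self.app.basket, [])


def run_suite():
    """Run every test in the class and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(PaymentSmokeTest)
    return unittest.TextTestRunner(verbosity=2).run(suite)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    run_suite()
```

The runner goes through all the tests itself and prints which ones passed or failed, which already covers basic reporting; the returned result object could be passed on to a CI job or a mail script.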

But back then I knew almost nothing and double-checked every decision, because I was not sure of any of them. Sometimes I had no idea what the result should even look like.

Nevertheless, I completed the task: autotests appeared on the project, and we were able to test the application against a background of constant changes. The tests also removed the need to sit and click through the same scenarios twice a week, which takes a lot of time when done manually, because each scenario is repeated 100 times. And I gained solid experience and an understanding of how all this really should have been done. If you have advice or something to add, I will be glad to continue the conversation in the comments.

Source: https://habr.com/ru/post/353962/
