Compare Draft, Gitkube, Helm, Ksonnet, Metaparticle and Skaffold

Kubernetes has become very popular recently, and developers keep looking for new ways to deploy applications to Kubernetes clusters. Even the `kubectl` command line is increasingly seen as a low-level tool, and users want still simpler ways to interact with the cluster. Draft, Gitkube, Helm, Ksonnet, Metaparticle and Skaffold are just a few of the tools that help developers build and deploy their applications on Kubernetes.

Draft, Gitkube and Skaffold simplify development by letting developers get their applications running on a Kubernetes cluster as quickly as possible. Helm and Ksonnet help with deployment: they can define an application for shipping and handle releases and versioning, multiple clusters, and so on. Metaparticle is the odd one out: it lets you express everything in your own code, with no YAML and no dockerfile.

So which one should you use, and when?


Let's take a look.

Draft

Simple application development and deployment in any Kubernetes cluster.

As the name implies, Draft simplifies the development of applications that run on Kubernetes clusters. Its official description says that Draft is a tool for developing applications that run on Kubernetes, not for deploying them; for deployment, the Draft documentation recommends Helm.

Draft's main job is to move the code a developer is still working on from their machine onto a Kubernetes cluster before the changes are committed to version control. The changes are committed only once the developer is satisfied with them after running them on the cluster with Draft.

Draft is not meant for production deployments; it is intended purely for fast application development on Kubernetes. It does integrate well with Helm, which it uses to deploy changes.

Architecture

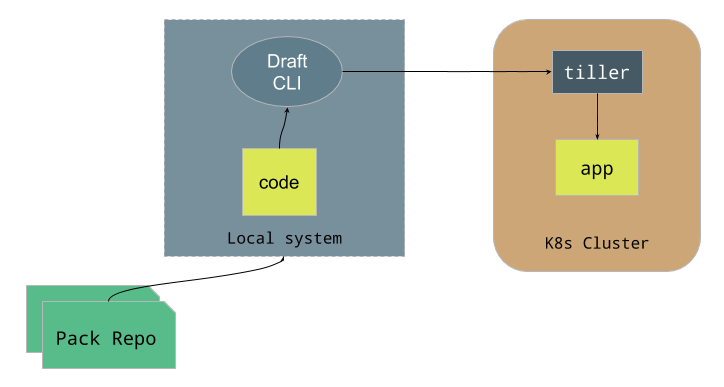

Draft: architecture diagram

As the diagram shows, the `draft` CLI is the key component. It detects the language of the application's source code and applies the matching pack from a repository. A pack is a combination of a dockerfile and a Helm chart that together define the environment for the application. Users can define their own packs and repositories, since these are just files on the local system or in a Git repository. Any source directory can be drafted as long as there is a pack for its stack.

Once a directory has been set up with the `draft create` command (which adds a dockerfile, a Helm chart and a `draft.toml` file), the `draft up` command builds a docker image, pushes it to the registry and launches the application using the Helm chart (provided Helm is installed). Running this command after each change deploys a new build.

There is also a `draft connect` command, which proxies connections to the local system and streams logs from the container. It can be used together with nginx-ingress to give each deployed application its own domain name.

From scratch to k8s

Below are the steps for running a Python application on a k8s cluster with Draft (a more detailed guide is in this document).

Requirements:

- a k8s cluster (and hence kubectl);

- Helm CLI;

- Draft CLI;

- Docker;

- Docker repository for storing images.

```shell
$ helm init
$ draft init
$ draft config set registry docker.io/myusername
$ git clone https://github.com/Azure/draft
$ cd draft/examples/example-python
$ draft create
$ draft up
## edit code
$ draft up
```

Use cases

- Developing applications inside a Kubernetes cluster.

- Used in the "inner loop" of development, before code changes are committed to version control.

- Pre-CI: once development with Draft is done, CI/CD takes over.

- Not intended for production deployments.
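The pack-selection step described in the architecture section can be illustrated with a toy sketch. This is not Draft's actual detection logic: the extension-to-pack table and the `detect_pack` function are hypothetical, and simply pick the pack whose language accounts for the most source files.

```python
from collections import Counter
from pathlib import PurePath

# Hypothetical mapping from file extension to a Draft-style pack name.
PACKS_BY_EXTENSION = {
    ".py": "python",
    ".go": "golang",
    ".js": "javascript",
    ".java": "java",
    ".rb": "ruby",
}


def detect_pack(filenames):
    """Return the pack for the most common known language, or None if there is none."""
    counts = Counter(
        PACKS_BY_EXTENSION[ext]
        for ext in (PurePath(name).suffix for name in filenames)
        if ext in PACKS_BY_EXTENSION
    )
    if not counts:
        return None  # no pack available for this stack, so the directory cannot be drafted
    return counts.most_common(1)[0][0]


print(detect_pack(["app.py", "utils.py", "static/main.js", "README.md"]))  # python
```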

More details here.

Gitkube

Creating and deploying docker images in Kubernetes using git push

Gitkube is a tool for building and deploying docker images on Kubernetes using the `git push` command. Unlike Draft, Gitkube has no command line interface and runs exclusively in the cluster.

Any source repository with a dockerfile can be deployed with Gitkube. Once Gitkube is installed and exposed in the cluster, the developer creates a Remote custom resource, which provides a git remote URL. Pushing changes to that URL triggers a docker build and a kubectl rollout in the cluster. Application manifests can be created with any tool (kubectl, Helm, etc.).

Everything is designed around plug-and-play installation and familiar tools (Git and kubectl). No assumptions are made about the repository being deployed: the docker build context, the dockerfile path and the deployments to update are all configurable.

Authentication for the git remote is based on SSH public keys. Whenever changes are committed and pushed with git, the build and rollout are triggered.

Architecture

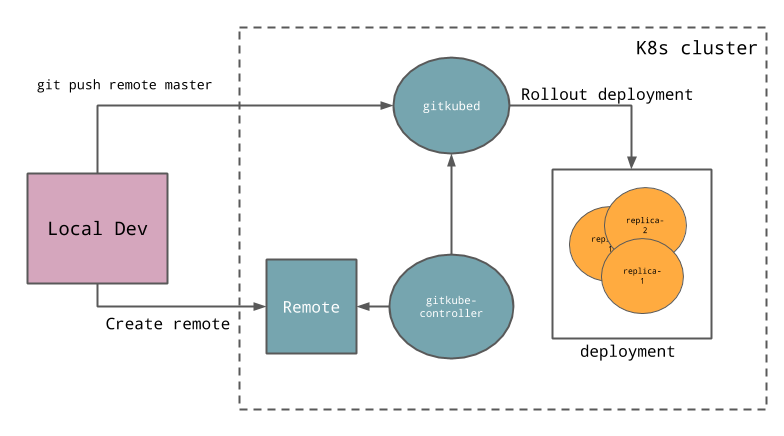

Gitkube: architecture diagram

There are three components in the cluster: the Remote CRD, which defines what should happen when someone pushes to a remote URL; gitkubed, which builds Docker images and updates deployments; and gitkube-controller, which watches the CRD to configure gitkubed.

Once these objects exist in the cluster, the developer creates their application's deployments using kubectl. The next step is to create a Remote object that tells Gitkube what should happen when `git push` is run against a particular remote. Gitkube writes the remote URL back into the status of the Remote object.
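Reading the URL that Gitkube writes back is what the `jq -r '.status.remoteUrl'` step in the walkthrough below does. A Python equivalent is sketched here; the sample JSON is a trimmed, hypothetical Remote object containing only the fields the sketch needs, and the URL in it is made up.

```python
import json

# Hypothetical, trimmed output of `kubectl get remote example -o json`.
SAMPLE_REMOTE_JSON = """
{
  "kind": "Remote",
  "metadata": {"name": "example"},
  "status": {"remoteUrl": "ssh://default-example@203.0.113.10/~/git/default-example"}
}
"""


def remote_url(remote_json):
    """Return status.remoteUrl, or None if Gitkube has not written it yet (jq would print null)."""
    remote = json.loads(remote_json)
    return remote.get("status", {}).get("remoteUrl")


url = remote_url(SAMPLE_REMOTE_JSON)
print(url)  # this value is what gets passed to `git remote add example ...`
```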

From scratch to k8s

Requirements:

- a k8s cluster (kubectl);

- Git;

- Gitkube installed in the cluster (with `kubectl create`).

Below are the steps required to deploy an application to Kubernetes with Gitkube:

```shell
$ git clone https://github.com/hasura/gitkube-example
$ cd gitkube-example
$ kubectl create -f k8s.yaml
$ cat ~/.ssh/id_rsa.pub | awk '$0=" - "$0' >> "remote.yaml"
$ kubectl create -f remote.yaml
$ kubectl get remote example -o json | jq -r '.status.remoteUrl'
$ git remote add example [remoteUrl]
$ git push example master
## edit code
## commit and push
```

Use cases

- Easy deployments using Git (no local docker build).

- Developing applications on Kubernetes.

- During development, a work-in-progress (WIP) branch can be pushed many times to see results quickly.

More details here.

Helm

Package Manager for Kubernetes

As its description says, Helm lets you manage Kubernetes applications using charts. Helm generates Kubernetes manifests and manages their versions, so you can roll back any object (not just deployments). Charts can include deployments, services, ConfigMaps, etc. They are also templated, so values can be changed easily. Charts can be used for complex applications with many dependencies.
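The versioning-and-rollback idea can be modelled with a small in-memory release history. This is a conceptual sketch, not Helm's implementation: the `ReleaseHistory` class and the image tags are hypothetical. It does follow Helm's behaviour of recording a rollback as a new revision that reuses an older revision's manifests.

```python
from dataclasses import dataclass, field


@dataclass
class ReleaseHistory:
    """Toy model of a release: every install, upgrade or rollback adds a revision."""

    name: str
    revisions: list = field(default_factory=list)  # each entry is a dict of manifests

    def deploy(self, manifests):
        # A revision covers *all* objects of the release, not only the Deployment.
        self.revisions.append(dict(manifests))
        return len(self.revisions)  # revision numbers start at 1

    def rollback(self, revision):
        # Rolling back re-applies an older revision as a brand-new revision.
        return self.deploy(self.revisions[revision - 1])

    def current(self):
        return self.revisions[-1]


release = ReleaseHistory("wordpress")
release.deploy({"deployment": "wordpress:4.9", "configmap": "debug=false"})  # revision 1
release.deploy({"deployment": "wordpress:5.0", "configmap": "debug=true"})   # revision 2
rev = release.rollback(1)                                                    # revision 3
print(rev, release.current())
```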

Helm is intended primarily for deploying manifests and managing them in production. Unlike Draft or Gitkube, which help with application development, Helm focuses solely on deployment. There is a wide range of ready-made charts that can be used with Helm.

Architecture

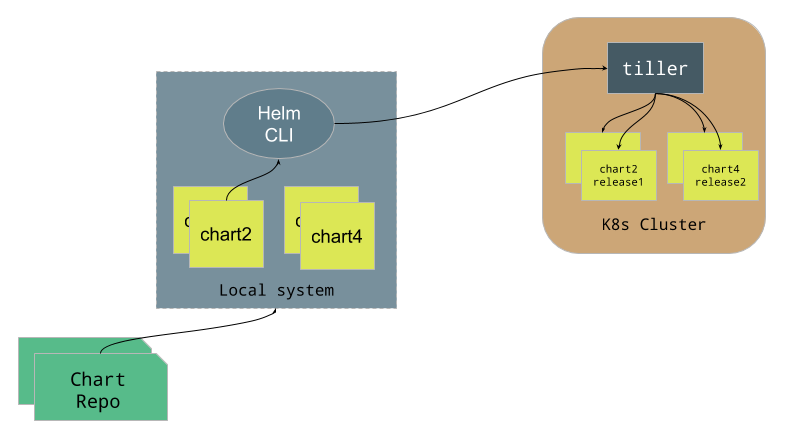

Helm: architecture diagram

First, the charts themselves. As mentioned earlier, a chart is a collection of the information needed to create an instance of a Kubernetes application. It can contain deployments, services, ConfigMaps, Secrets, ingress resources, etc. All of these are defined as YAML files, which are in turn templates. Developers can also declare dependencies on other charts or nest charts inside one another. Charts can be published or collected into a chart repository.
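Templating with overridable values can be imitated with Python's `string.Template`. Helm itself uses Go templates and a `values.yaml` file, so treat this only as an analogy; the template text, the default values and the `render` helper are hypothetical. The point is that user-supplied values override the chart's defaults before the manifest is rendered.

```python
from string import Template

# Hypothetical chart template (Helm would use Go template syntax such as {{ .Values.replicas }}).
DEPLOYMENT_TEMPLATE = Template(
    """apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image
"""
)

# Hypothetical defaults, playing the role of a chart's values.yaml.
DEFAULT_VALUES = {"name": "web", "replicas": 1, "image": "nginx:1.15"}


def render(overrides=None):
    """Merge user overrides over the defaults, then fill in the template."""
    values = {**DEFAULT_VALUES, **(overrides or {})}
    return DEPLOYMENT_TEMPLATE.substitute(values)


print(render({"replicas": 3}))
```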

Helm has two main components: the Helm command line interface and the Tiller server. The command line assists in managing charts and repositories, and also interacts with Tiller to deploy these charts.

Tiller is the component running in the cluster that talks to the k8s API server to create and manage the actual objects. It also renders charts to build a release. When the developer runs `helm install <chart-name>`, the client contacts the server with the name of the chart. Tiller then finds the chart, renders the templates and deploys the result to the cluster.

Helm does not handle source code. To build an image you need some CI/CD system, after which Helm can be used for deployment.

From scratch to k8s

Requirements:

- a k8s cluster;

- Helm CLI.

Example of deploying WordPress to a k8s cluster with Helm:

```shell
$ helm init
$ helm repo update
$ helm install stable/wordpress
## make new version
$ helm upgrade [release-name] [chart-name]
```

Use cases

- Packaging: complex applications (with many k8s objects) can be packaged together.

- A repository of reusable charts.

- Easy deployment to multiple environments.

- Nested charts: dependencies.

- Templates: values are easy to change.

- Distribution and reusability.

- Last-mile deployment: continuous delivery.

- Deploying an image that has already been built.

- Upgrading and rolling back multiple k8s objects together: lifecycle management.

More details here.

Ksonnet

A CLI-supported framework for writing flexible Kubernetes configurations

Ksonnet provides an alternative way to define application configuration for Kubernetes. Instead of plain YAML files, it uses Jsonnet (a JSON templating language) to define the k8s manifests. The Ksonnet CLI generates the final YAML and then applies it to the cluster.

Ksonnet is designed for defining reusable components that can be combined to build applications.

Architecture

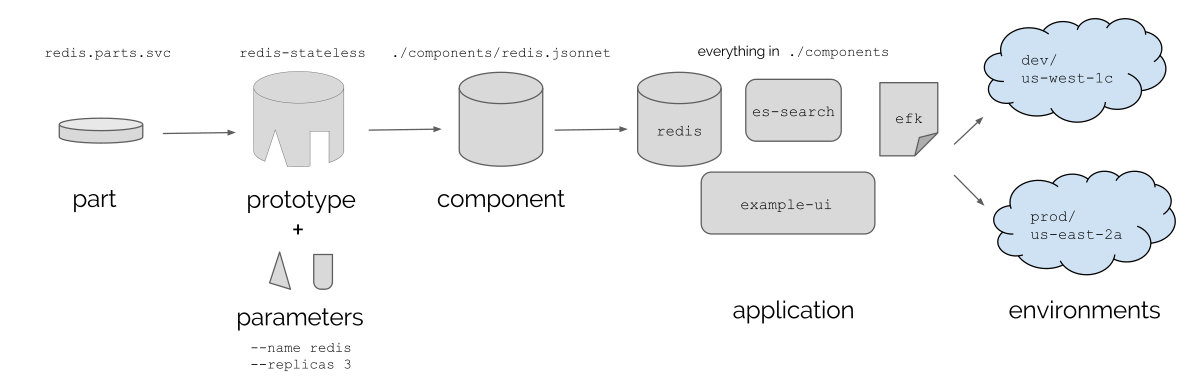

Ksonnet: overview

The basic building blocks are called parts. Combining parts produces prototypes. A prototype with parameters added becomes a component, and components are grouped together to form an application. An application can be deployed to multiple environments.
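The parts, prototypes, components, application and environments hierarchy can be mirrored in plain Python. This is only a conceptual model under our own naming (`container_part`, `web_prototype`, `COMPONENTS`, `ENVIRONMENTS` and `build_app` are hypothetical); real Ksonnet expresses all of this in Jsonnet. The image name is the one from the guestbook example below.

```python
def container_part(name, image):
    # A "part": a small reusable fragment.
    return {"name": name, "image": image}


def labels_part(name):
    return {"app": name}


def web_prototype(name, image, replicas):
    # A "prototype": parts combined into a complete Deployment skeleton.
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels_part(name)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels_part(name)},
            "template": {
                "metadata": {"labels": labels_part(name)},
                "spec": {"containers": [container_part(name, image)]},
            },
        },
    }


# "Components": a prototype plus parameters. All components together form the application.
COMPONENTS = {
    "guestbook-ui": (
        web_prototype,
        {"name": "guestbook-ui", "image": "gcr.io/heptio-images/ks-guestbook-demo:0.1", "replicas": 1},
    ),
}

# "Environments": per-cluster parameter overrides.
ENVIRONMENTS = {"default": {}, "prod": {"guestbook-ui": {"replicas": 3}}}


def build_app(env):
    """Render every component with the environment's overrides applied."""
    manifests = []
    for component, (prototype, params) in COMPONENTS.items():
        merged = {**params, **ENVIRONMENTS[env].get(component, {})}
        manifests.append(prototype(**merged))
    return manifests


print([m["spec"]["replicas"] for m in build_app("prod")])  # [3]
```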

Working with Ksonnet has three main steps: creating an application directory (the `ks init` command), generating a manifest for a component automatically or writing your own (the `ks generate` command), and deploying the application to a cluster/environment (the `ks apply <env>` command). Environments are managed with the `ks env` command.

In short, Ksonnet helps you manage an application as a group of components using Jsonnet and then deploy it to various Kubernetes clusters.

Like Helm, Ksonnet does not handle source code. It is a tool for defining applications for Kubernetes using Jsonnet.

From scratch to k8s

Requirements:

- a k8s cluster;

- Ksonnet CLI.

Guestbook example:

```shell
$ ks init guestbook
$ cd guestbook
$ ks generate deployed-service guestbook-ui \
    --image gcr.io/heptio-images/ks-guestbook-demo:0.1 \
    --type ClusterIP
$ ks apply default
## make changes
$ ks apply default
```

Use cases

- Flexible configuration writing thanks to Jsonnet.

- Packaging: complex applications can be built by mixing and matching components.

- A library of prototypes and reusable components (no duplication).

- Easy deployment to multiple environments.

- Last-mile deployment: the continuous delivery stage.

More details here.

Metaparticle

Standard Library for Cloud Applications Running in Containers and Kubernetes

Metaparticle is a standard library for cloud native applications. It helps you implement proven patterns for building distributed systems through programming-language interfaces.

Metaparticle provides language-idiomatic interfaces that help you build systems that containerize and deploy applications on Kubernetes, develop replicated load-balanced services, and much more. There is no need to write a dockerfile or a Kubernetes manifest: everything is handled through idioms native to the programming language you use.

For example, to create a web application in Python, you add the `containerize` decorator (imported from the Metaparticle package) to the main function. When the Python code is executed, the docker image is built and deployed to the Kubernetes cluster according to the parameters specified in the decorator. The current kubectl context is used to connect to the cluster, so switching environments means switching the current context.

Similar primitives are available for NodeJS, Java and .NET, and work is under way to support more languages.
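The mechanism relies on ordinary Python decorators that take parameters. The sketch below uses a hypothetical `containerize_sketch` decorator, not Metaparticle's: instead of building and deploying an image, it only records the image name and options and prints the plan before calling the wrapped function. It shows how configuration written in a decorator travels with the code it decorates.

```python
import functools

DEPLOY_PLANS = []  # hypothetical record of what would have been built and deployed


def containerize_sketch(repository, options=None):
    """Hypothetical stand-in for a containerizing decorator: records a plan, then runs."""
    options = dict(options or {})

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            plan = {
                "image": f"{repository}/{options.get('name', func.__name__)}",
                "replicas": options.get("replicas", 1),
                "ports": options.get("ports", []),
            }
            DEPLOY_PLANS.append(plan)
            # A real implementation would build, push and deploy here instead of just printing.
            print("would deploy:", plan)
            return func(*args, **kwargs)

        return wrapper

    return decorator


@containerize_sketch(
    "docker.io/example-user",
    options={"name": "my-image", "replicas": 4, "ports": [8080]},
)
def main():
    return "serving"


print(main())
```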

Architecture

The Metaparticle library for each language provides the templates and dependencies needed to build the code into a docker image, push it to a registry, create k8s YAML files and deploy them to the cluster.

The Metaparticle Package contains the language-idiomatic bindings for building containers. Metaparticle Sync is a library within Metaparticle for synchronization across multiple containers running on different machines.
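Metaparticle Sync's locking and master election work across containers; the underlying idea can be shown inside one process with threads. This is a hypothetical, single-machine illustration using `threading.Lock`, not the Metaparticle Sync API: every candidate tries to acquire a shared lock without blocking, and only the one that succeeds becomes master.

```python
import threading

election_lock = threading.Lock()  # plays the role of a lock shared by all replicas
results = {}
results_guard = threading.Lock()


def candidate(name, start):
    start.wait()  # let all candidates race at roughly the same time
    # Non-blocking attempt: only one candidate can ever hold the lock.
    is_master = election_lock.acquire(blocking=False)
    with results_guard:
        results[name] = "master" if is_master else "follower"
    # The master never releases the lock here, so its "term" never ends.


start = threading.Event()
threads = [threading.Thread(target=candidate, args=(f"replica-{i}", start)) for i in range(4)]
for t in threads:
    t.start()
start.set()
for t in threads:
    t.join()

print(list(results.values()).count("master"))  # 1
```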

JavaScript / NodeJS, Python, Java and .NET are currently supported.

From scratch to k8s

Requirements:

- a k8s cluster;

- Metaparticle library for a supported language;

- Docker;

- Docker repository for storing images.

A Python example (only the relevant part) that builds the docker image and deploys it to a k8s cluster:

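```python
import socketserver  # Python 3 name of the SocketServer module

from metaparticle import containerize  # assumed import path; check your Metaparticle version

port = 8080  # must match the port listed in the decorator options


# Build the image, push it (publish=True) and run 4 replicas
# on the cluster selected by the current kubectl context.
@containerize(
    'docker.io/your-docker-user-goes-here',
    options={
        'ports': [8080],
        'replicas': 4,
        'runner': 'metaparticle',
        'name': 'my-image',
        'publish': True
    })
def main():
    Handler = MyHandler  # HTTP request handler class defined elsewhere in the full example
    httpd = socketserver.TCPServer(("", port), Handler)
    httpd.serve_forever()


if __name__ == '__main__':
    main()
```

Use cases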

- Developing applications without writing YAML or dockerfiles.

- You no longer need to learn many tools and file formats to take full advantage of containers and Kubernetes.

- Rapid development of replicated, load-balanced services.

- Managing synchronization, such as locking and master election, across distributed replicas.

- Easy development of cloud patterns such as sharded systems.

More details here.

Skaffold

Simple and repeatable development in Kubernetes

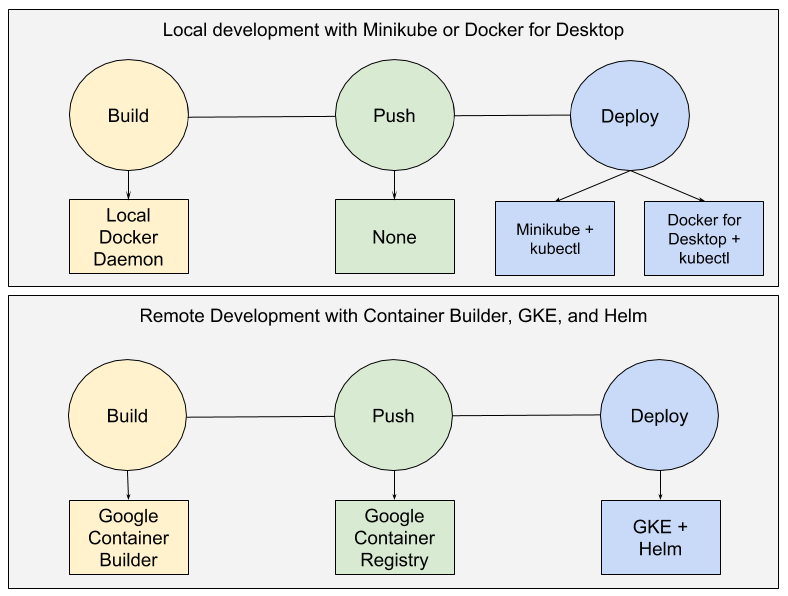

Skaffold handles building, pushing and deploying applications to Kubernetes. Like Gitkube, Skaffold can deploy any directory that contains a dockerfile to a k8s cluster.

Skaffold builds a docker image locally, pushes it to the registry and deploys it using the `skaffold` CLI. It also watches the directory, rebuilding and redeploying whenever the code inside it changes, and streams logs from the containers.

The build, push and deploy pipeline is configured in a YAML file, so developers can pick the combination of tools that suits them at each stage. For example, you can choose docker build or Google Container Builder for building, kubectl or Helm for deploying, and so on.
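Because each stage is configurable, a build-push-deploy chain is essentially a pipeline of interchangeable steps. The sketch below models that with plain functions; the stage functions and the `run_pipeline` helper are hypothetical and only return descriptions of what real tools (such as `docker build` or `kubectl apply`) would do.

```python
# Hypothetical stand-ins for pluggable stages; each returns a description of its step.
def docker_build(image):
    return f"docker build -t {image} ."


def docker_push(image):
    return f"docker push {image}"


def kubectl_deploy(image):
    return f"kubectl apply (manifests using {image})"


def helm_deploy(image):
    return f"helm upgrade --install (image={image})"


def run_pipeline(image, build=docker_build, push=docker_push, deploy=kubectl_deploy):
    """Run one build-push-deploy cycle with whichever tools were chosen."""
    return [build(image), push(image), deploy(image)]


# Same build and push stages, but Helm instead of kubectl for the deploy stage.
for step in run_pipeline("docker.io/example-user/app:dev", deploy=helm_deploy):
    print(step)
```

Swapping a stage means passing a different function, which mirrors how the YAML configuration lets you swap tools without touching the rest of the chain.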

Architecture

Skaffold: overview

The Skaffold CLI does all the work. It reads the `skaffold.yaml` file, which defines what needs to be done. A typical example: build a docker image from the dockerfile in the directory where `skaffold dev` is run, tag it with its sha256 hash, push the image, set that image in the k8s manifests referenced in the YAML file, and apply the manifests to the cluster. This runs in a continuous loop, reacting to every change in the directory. Logs from the running container are streamed to the same terminal window.

Skaffold is very similar to Draft and Gitkube, but it is more flexible, since it can manage different build-push-deploy pipelines, as described above.
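The watch loop boils down to noticing that the directory's contents changed and producing a new tag. Below is a minimal sketch, assuming we hash the source files ourselves; Skaffold's own sha256 tagger may compute its tag differently, and `directory_digest` and `needs_redeploy` are hypothetical names.

```python
import hashlib
import tempfile
from pathlib import Path


def directory_digest(root):
    """sha256 over each file's relative path and contents, in a stable order."""
    digest = hashlib.sha256()
    for path in sorted(p for p in Path(root).rglob("*") if p.is_file()):
        digest.update(str(path.relative_to(root)).encode())
        digest.update(b"\0")  # separator between a file's path and its contents
        digest.update(path.read_bytes())
    return digest.hexdigest()


def needs_redeploy(root, last_digest):
    """Return (changed, digest); a watcher would rebuild and redeploy when changed is True."""
    current = directory_digest(root)
    return current != last_digest, current


with tempfile.TemporaryDirectory() as src:
    source_file = Path(src, "main.go")
    source_file.write_text("package main\n")
    _, tag = needs_redeploy(src, None)  # first build
    changed_before_edit, _ = needs_redeploy(src, tag)
    source_file.write_text("package main // edited\n")
    changed_after_edit, new_tag = needs_redeploy(src, tag)

print(changed_before_edit, changed_after_edit)  # False True
print("new tag:", new_tag[:12])
```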

From scratch to k8s

Requirements:

- a k8s cluster;

- Skaffold CLI;

- Docker;

- Docker repository for storing images.

Steps to deploy an application that prints the string hello-world:

```shell
$ git clone https://github.com/GoogleCloudPlatform/skaffold
$ cd skaffold/examples/getting-started
## edit skaffold.yaml to add docker repo
$ skaffold dev
## open new terminal: edit code
```

Use cases

- Rapid deployment.

- Looped builds: a continuous build-deploy cycle.

- Developing applications on Kubernetes.

- Defining build-push-deploy pipelines in a CI/CD flow.

More details here.

***

Feel free to contact the author if something was missed or is wrong. The article does not cover tools such as Ksync and Telepresence, since a separate article about them is planned soon. If you know of other useful tools in the same category as those covered here, please mention them in the comments.

A discussion of the article on Hacker News can be found here.

Source: https://habr.com/ru/post/353958/
