"Calendar of the tester" for April. Metrics in QA service

The April article from the “Calendar of the Tester” series is devoted to metrics. Kirill Ratkin, Kontur.Externa tester, will tell you how to increase the effectiveness of testing with their help and not go to extremes.

How often do you have to evaluate something? Probably every day. Good or bad weather today, whether the cat behaves tolerably, whether you like this shirt. At work, you assess your goals and results: this is done well, and here you can do better. Such assessments are often based on subjective feeling. But these estimates can not improve the efficiency of processes, and need more detail. Then metrics come to the rescue.

How can you characterize your workflows and practices? They are good? Bad? How much? Why do you think so?

I can’t hold back and quote Lord Kelvin’s words:

“If you can measure what you are talking about and express it in numbers, then you know something about this subject. But if you cannot express it quantitatively, your knowledge is extremely limited and unsatisfactory. ”

No process can be considered mature until it becomes transparent and manageable.

I have seen two extremes:

- People think that everything is good / bad and without radar. "Well, this is already clear" (c).

- Each step is hung with numbers, but most of them are dead weight and are not used at all.

The truth, as always, is in the middle. To get closer to it, you need to understand the fundamental thing: metrics are not a tribute to fashion and not a panacea for bad processes. Metrics are just a tool, and the results of the work of this tool are not an end in itself, but only some basis for further steps.

Metrics are needed for:

- Enhance objectivity. For example, nothing will be able to characterize the stability of your autotests more precisely than the dry numbers of the percentage of passing.

- Visualization

- Estimates of the dynamics of change. If you cannot do everything you have planned, then choose more effective tasks from the list. To make an informed decision, you need to understand what practices have a higher efficiency.

Properly gash metrics is not easy. First, you need to clearly describe the causal relationships, identify all the factors influencing the process, and give them your weight. Secondly, to technically implement the collection itself.

Autotest metrics

If you are going to enter metrics in autotests, you need to understand why it is for you.

The specifics of our autotests:

- Presence of guvy user scenarios.

- Integration checks with test stands of third-party services.

- Cross-browser compatibility.

- Configuring CI and test virtualok.

- Providing our product AT to other service teams.

We spent a lot of energy on maintaining stability and speed, and even appointed a duty officer for autotests. When we no longer understand how good or bad everything is, how much resources we spend on it, we began to envelop our system with metrics.

Metrics attendant for autotests

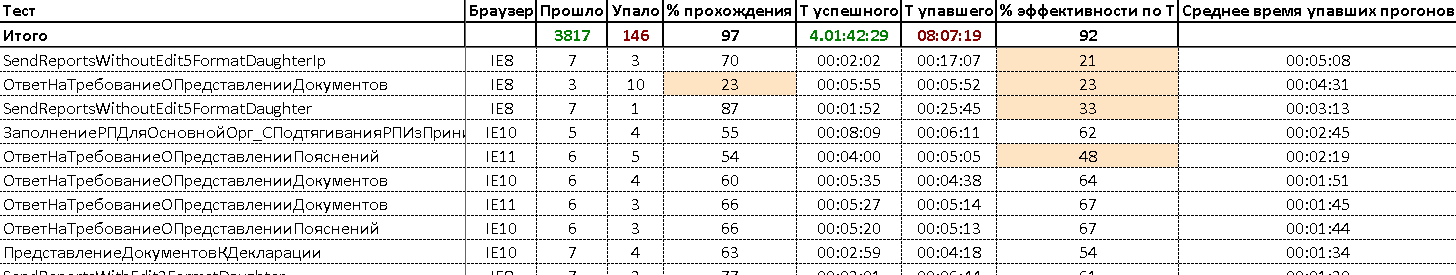

We began to pay attention not only to the stability of the test and run time, but also to:

- red run time

Shows how long the test falls. Runs that fall for a long time keep the queue on virtuals. Sometimes WebDriver throws exceptions, and such tests could hang for hours. Such situations are solved a level higher for all tests, but you can get rid of incorrect timeouts and expectations only by touching a specific test. We are required to deal with runs that have this metric for more than 20 minutes. - test time effectiveness

How much the agent spends on the real run, and not on instability. This metric is about noisy tests. We wanted to understand how much time is spent on green and red runs, and calculate the ratio. Tests with low efficiency are first in line for refactoring. - relative time lost

This metric shows how many minutes are lost for each run of this test due to instability. In our team, it is considered the second most important after stability.

Plus, we took into account a number of specific features for us:

- The unique key for us was not the name of the test, but a couple of test - the browser.

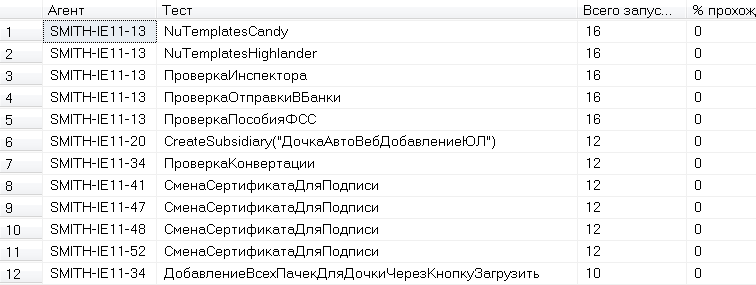

The same UI test behaves differently in fast and slow browsers, different JS interpreters, etc. Therefore, the tester rules not Test1, but Test1 in Browser1. - Some agents with seemingly identical environments (OS, browser, etc.) differed from each other in the stability of passing tests.

There are difficulties with the generation of absolutely identical environments. Plus, virtuals are not closed from developers or testers who want to "podobezhit something." Therefore, while these problems have not been solved, it is necessary to make samples for running tests in the context of each agent. If some test passes on a specific agent is noticeably worse, then this triggers the person on duty to communicate more closely with this virtual machine.

Search for corrupted virtual machines

Most teams can collect metrics using CI. We didn’t have enough of it - we’ve too tricky to set up our categories and launchers. I had to write a small wrapper, which in TearDown sent to the database the build meta with all the indicators.

Our goals:

- Assessment of the situation

- Information for targeted response

Metrics made it possible to understand by what indicators we subside. Next we set weights, benchmarks and work out the order of achieving the goal.

Once a fortnight at the bat with the attendant we:

- choose which characteristic will be influenced in the first place;

- discuss the causes of instability;

- we prioritize tasks;

- pretending to work front;

- plan targets.

These metrics are collected automatically every morning after nightly runs, consisting of a set of repeated test runs.

Autotest Coverage Evaluation

Another big task is to assess coverage. No other option for calculating this indicator suited me completely. What coverage do you think? Share in the comments.

Someone considers coverage on hand-written cases. There are a number of drawbacks. First, it is the human factor. It is good if the task is taken by a competent analyst who will rely on representative statistics. But in this case, you can skip something, do not take into account. Secondly, as the system under test grows, this approach becomes too expensive to maintain.

Someone considers code coverage. But it does not take into account with which parameters we call this or that method. Indeed, by going to a method that divides A into B with some “safe” arguments, we cannot say that the method is covered and tested. And if the division by zero? And if overflow? The criticality of the uncovered code is also not taken into account, and therefore the motivation to increase this coverage is unclear.

An ideal option for us is to consider covering popular user yuzkeys. And we will consider the state of the system and atomic transitions between them. The main difference from the first version of the calculation is the absence of the human factor. Cases will be generated by a script. Those. switching to a specific page or some action on it sends a request to our metrics service. This is a feature of our system under test, so statistics are collected automatically.

Next, the principle is simple:

- we calculate the top N of the popular states of our test system from combat sites,

- consider pairwise transitions between these states,

- sort them by the number of transitions,

- go from top to bottom and check if we have such a transition to AT.

This algorithm is restarted once a month to update the top. For all the missing tests, tasks are added to the backlog and cleared out in a separate thread.

Release Cycle Metrics

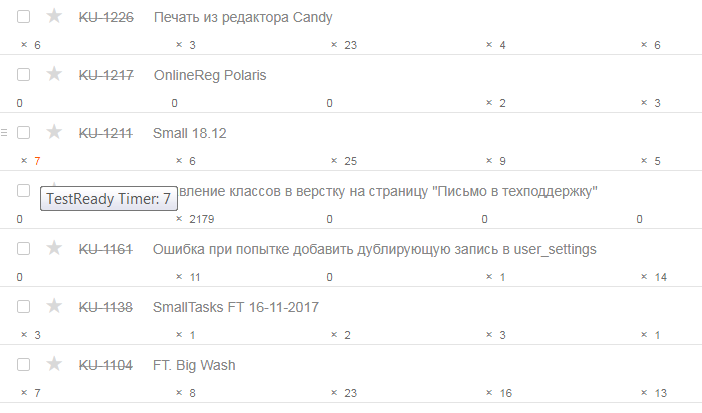

Another place of application of metrics served as our release cycle. I do not know about you, but in our team the testers resource is limited. Therefore, updates that are ready for testing are not taken immediately, but with a delay. At some point, this time lag began to cause discomfort, and we decided to assess the situation.

We began to consider how long the task is at each stage of the release cycle.

Collection of release cycle metrics based on YouTrack

From all this we managed:

- calculate how many testers should work on the release of releases, and how many can switch to other tasks, such as AT refactoring, finishing infrastructure, tools, etc.,

- understand the optimal ratio of roles and, as a result, predict the number of vacancies,

- determine the points of application of force for such an intra-team goal as a technical release in an hour.

Now we have the ratio of writing developers to testers producing them in the region of 3 (± 0.1), 2.5 is considered to be comfortable. Given the ongoing automation of the release cycle, we want to reach a ratio of 3.5 and 3.

Our implementation of the collection of metrics was based on the editor of the rules of the internal team bugtracker. This is YouTrack Workflow. In your team, you can use the same capabilities of your trackers, or write bikes with web hooks.

We brought private fields for counters for task and every hour we increment the value in them. We took into account that the meter should tick only during working hours and only on weekdays - this is our working schedule. Everything is cunning! =)

Metrics for the sake of metrics

Typical antipatterns are “metrics for the sake of metrics.” As I noted above, metrics are only a tool that helps quantify how well you are achieving goals.

Suppose you never understood such a metric as the number of tests. What for? What does the indicator say to you that in some team there are 12345 tests? Do they test well? Is not a fact. Their auto tests can be trusted? Hardly guys even know what they are checking. Does it show autotest performance? Perhaps there are minuses from support more than any profit - what kind of efficiency are we talking about? In fact, this metric implies covering and trusting your test system. But, damn, this is not the same thing. If you want to talk about coverage, then they are operated.

Always remember that collecting metrics takes you some time and effort. Appreciate it and approach this practice wisely!

It is very interesting to us, and which metrics you use, and which, on the contrary, you refused. Write in the comments!

List of calendar articles:

Try a different approach

Reasonable pair testing

Feedback: as it happens

Optimize tests

Read the book

Analytics testing

The tester must catch the bug, read Kaner and organize a move.

Load service

Metrics in QA service

Test security

Know your customer

Disassemble backlog

')

Source: https://habr.com/ru/post/353928/

All Articles