Distributed architecture concepts I learned while building a large payment system

I joined Uber two years ago as a mobile developer with some backend experience. I worked on the payments functionality in the app and, along the way, rewrote the app itself. After that I moved into engineering management and took over leading the team. This brought me much closer to the backend, since my team is responsible for many of the backend systems that make payments possible.

Before Uber, I had no experience with distributed systems. I have a traditional computer science education, followed by about a decade of full-stack development. So although I could draw architecture diagrams and talk about tradeoffs, I did not really understand or appreciate distributed concepts such as consistency, availability, or idempotency.

In this post, I cover several concepts that I had to learn and apply when building the large-scale, highly available, distributed payment system that runs at Uber today. It handles up to several thousand requests per second, and its critical payment functionality must work correctly even when individual parts of the system fail.

Is this a complete list? Most likely not. But had I known about these concepts earlier, my life would have been much easier.

So let's dive into SLAs, consistency, data durability, message persistence, idempotency, and a few other things I needed to learn in my new job.

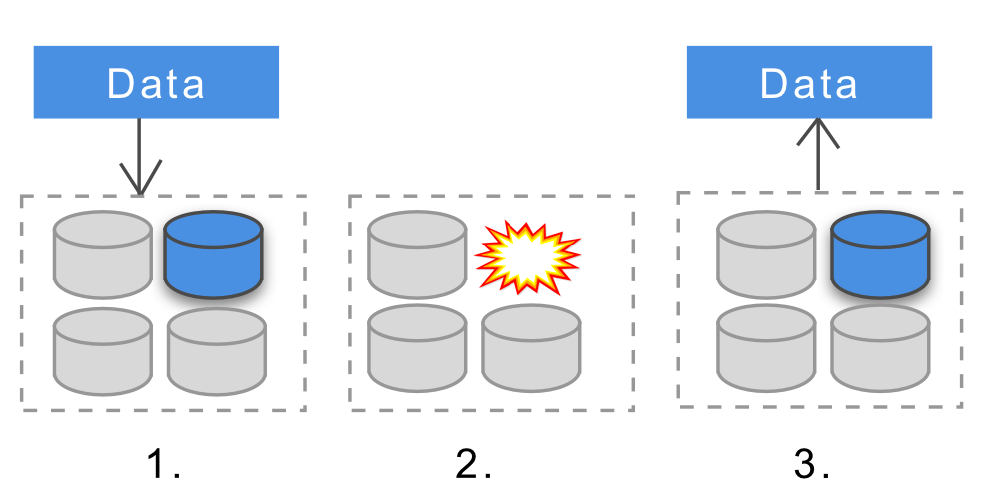

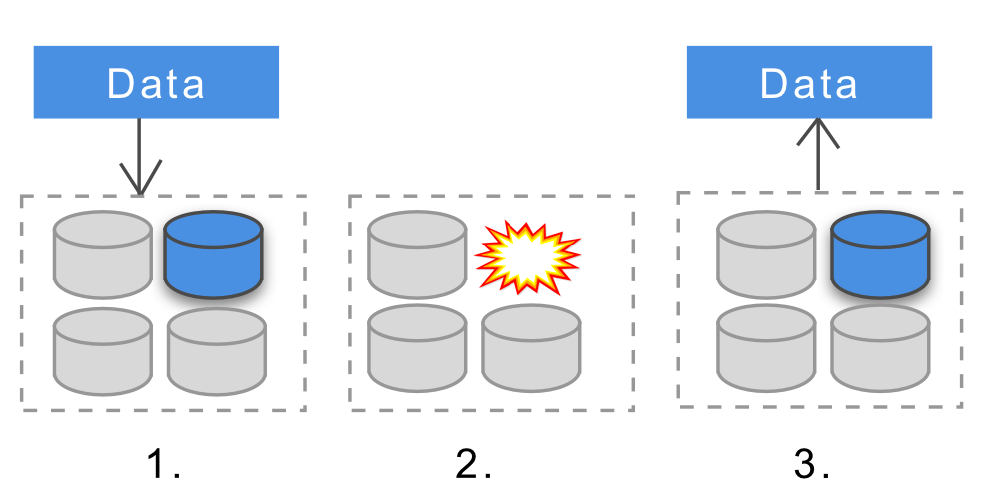

SLA

In large systems that process millions of events a day, some things are bound to go wrong. That is why, before diving into planning a system, the most important step is deciding what a "healthy" system means for us. Health should be something that can actually be measured. SLAs (service level agreements) are the commonly accepted way to measure it. Here are some of the most common types of SLA I have encountered in practice:

- Availability: the percentage of time the service is operational. Although it is tempting to aim for 100% availability, achieving it can be truly difficult and very expensive. Even large, critical systems such as the VISA card network, Gmail, or internet service providers do not have 100% availability; over the years they accumulate seconds, minutes, or hours of downtime. For many systems, four nines (99.99%, or roughly 50 minutes of downtime per year) counts as high availability, and even reaching that level takes serious work.

- Accuracy: is it acceptable for some data to be lost or inaccurate? If so, what percentage is acceptable? For the payment system I worked on, accuracy had to be 100%, because no data could be lost.

- Capacity: what load should the system be able to withstand? This is usually expressed in requests per second.

- Latency: how quickly should the system respond? Within what time should 95% and 99% of requests be served? In systems like these, many requests are "noise", so p95 and p99 latencies are the more practical measures in the real world.
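To make the availability and latency numbers above concrete, here is a minimal sketch of how they can be computed. The function names and the latency samples are my own illustration, not something from our production tooling, and the percentile uses the simple nearest-rank definition (monitoring systems often use other estimators).

```python
import math

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; leap years ignored


def downtime_minutes_per_year(availability: float) -> float:
    """Yearly downtime budget implied by an availability target in the range 0..1."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


if __name__ == "__main__":
    # Four nines leaves a budget of a little under an hour per year.
    print(round(downtime_minutes_per_year(0.9999), 2))

    # Made-up latency samples in milliseconds, with two slow outliers.
    latencies_ms = [12, 15, 11, 14, 13, 250, 16, 12, 13, 900]
    print(percentile(latencies_ms, 50), percentile(latencies_ms, 95))
```

With samples like these, the median looks healthy while the p95 exposes the slow outliers, which is why tail percentiles are the ones worth putting into an SLA.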

Why do SLAs matter when building a large payment system? We were building a new system to replace an existing one. To make sure we were doing the right thing and that the new system would be "better" than its predecessor, we used SLAs to define our expectations of it. Availability was one of the most important requirements. Once we had a target, we had to weigh the architectural tradeoffs needed to meet it.

Horizontal and vertical scaling

As the business using our newly built system grows, the load on it will only increase. At some point the existing setup will no longer cope with the load, and we will need to add capacity. The two common scaling strategies are vertical and horizontal scaling.

Horizontal scaling means adding more machines (or nodes) to the system to increase capacity. It is the most popular way to scale distributed systems.

Vertical scaling is essentially "buying a bigger, stronger machine": a (virtual) machine with more cores, more processing power, and more memory. With distributed systems, vertical scaling is usually less popular because it can be more expensive than scaling horizontally. However, some well-known large sites, such as Stack Overflow, have successfully scaled vertically to meet demand.

Why does scaling strategy matter when building a large payment system? We decided early on to build a system that scales horizontally. Although vertical scaling is workable in some cases, our payment system had already reached its projected load by then, and we were pessimistic that a single, super-expensive mainframe could handle that load even at the time, let alone in the future. In addition, several engineers on our team had worked at large payment providers and had bad experiences trying to scale vertically, even on the most powerful machines money could buy in those years.

Consistency

Availability matters for any system. Distributed systems are often built from machines whose individual availability is lower than the availability required of the system as a whole. Say our goal is a system with 99.999% availability (roughly 5 minutes of downtime per year), and we use machines or nodes that average 99.9% availability (almost 9 hours of downtime per year). A straightforward way to reach the target is to add more of these machines or nodes to the cluster. Even if some nodes are down, others remain in service, and the overall availability of the system is higher than that of its individual components.
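The arithmetic behind this can be sketched in a few lines. This is a back-of-the-envelope model under one loud assumption: node failures are independent and the system is up as long as at least one node is up. Real clusters share networks, power, and deployments, so treat the result as an upper bound. The function names are mine.

```python
def combined_availability(node_availability: float, replicas: int) -> float:
    """Availability of a group that is up while at least one replica is up.

    Assumes replica failures are independent, which real clusters only approximate.
    """
    return 1.0 - (1.0 - node_availability) ** replicas


def replicas_needed(node_availability: float, target: float) -> int:
    """Smallest replica count whose combined availability meets the target."""
    if not (0.0 < node_availability < 1.0 and 0.0 < target < 1.0):
        raise ValueError("availabilities must be strictly between 0 and 1")
    replicas = 1
    while combined_availability(node_availability, replicas) < target:
        replicas += 1
    return replicas


if __name__ == "__main__":
    # Two 99.9% nodes already exceed a 99.999% target under the independence assumption.
    print(replicas_needed(0.999, 0.99999))
```

Under this model two three-nines nodes are enough for five nines on paper, which is exactly why the hard part of the problem moves from availability to keeping those nodes consistent.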

Consistency is a key concern in highly available systems. A system is consistent if all nodes see and return the same data at the same time. Unlike availability, which we improved above simply by adding nodes, keeping the system consistent is far from trivial. To make sure every node holds the same information, the nodes must send messages to one another to stay in sync. But those messages can fail to arrive: they can get lost, and some of the nodes may be unreachable.

Consistency is the concept that took me the longest to understand and appreciate. There are several consistency models; the most widely used in distributed systems are strong consistency, weak consistency, and eventual consistency. You can read a useful practical analysis of the advantages and disadvantages of each model in this article. In general, the weaker the required consistency level, the faster the system can be, but the more likely it is to return data that is not the latest.

Why does consistency matter when building a large payment system? Data in the system has to be consistent. But how consistent? For some parts of the system, only strongly consistent data will do. For example, the fact that a payment has been initiated must be stored in a strongly consistent way. For other, less critical parts of the system, eventual consistency can be a reasonable tradeoff.

A good illustration is listing recent transactions. This can be implemented with eventual consistency: the latest transaction may show up in some parts of the system only after a delay, but in return the list request returns with lower latency or needs fewer resources.
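The recent-transactions example can be sketched with a toy primary and a replica that lags behind it. Everything here is an in-memory illustration with hypothetical class names; in a real store the sync step would be asynchronous replication over the network.

```python
class Primary:
    """Accepts writes; its log is the source of truth."""

    def __init__(self):
        self.log = []

    def write(self, transaction):
        self.log.append(transaction)


class LaggingReplica:
    """Serves cheap reads from a local copy that is only updated when sync() runs."""

    def __init__(self, primary):
        self._primary = primary
        self._copy = []

    def sync(self):
        # Copy over only the log entries we have not seen yet.
        self._copy.extend(self._primary.log[len(self._copy):])

    def recent_transactions(self):
        return list(self._copy)


if __name__ == "__main__":
    primary = Primary()
    replica = LaggingReplica(primary)

    primary.write("trip-1: charged")
    replica.sync()
    primary.write("trip-2: charged")

    print(replica.recent_transactions())  # stale: trip-2 is not visible yet
    replica.sync()
    print(replica.recent_transactions())  # converged with the primary
```

The read from the replica is stale for a while but eventually converges, which is fine for a transaction list and unacceptable for deciding whether a payment has already been initiated.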

Data durability

Durability means that once data has been successfully written to a data store, it will be available in the future. This holds even if nodes in the system go offline, crash, or have their data corrupted.

Different distributed databases offer different levels of durability. Some support durability at the machine or node level, others at the cluster level, and some do not provide it out of the box. Some form of replication is usually used to increase durability: if data is stored on several nodes and one of them goes down, the data is still available. Here is a good article explaining why achieving durability in distributed systems can be a serious challenge.

Why does data durability matter when building a payment system? If the data is critical (payments, for example), we cannot afford to lose it in many parts of the system. The distributed data stores we built on had to provide durability at the cluster level, so that completed transactions persist even if instances crash. These days most distributed data storage services, such as Cassandra, MongoDB, HDFS, or DynamoDB, support durability at various levels and can be configured to provide cluster-level durability.

Message persistence and durability

Nodes in distributed systems perform calculations, store data, and send messages to each other. A key characteristic of sending messages is how reliably these messages will arrive. For critical systems, there is often a requirement that none of the messages be lost.

In distributed systems, messaging is usually done through a distributed messaging service such as RabbitMQ or Kafka. These message brokers can support, or be configured to support, different levels of delivery reliability.

Message persistence means that when a failure occurs on the node processing a message, the message is still available for processing after the failure is resolved. Message durability usually applies at the message queue level: with a durable queue, if the queue (or node) is offline when a message is sent, it still receives the message once it comes back online. A good, detailed article on this topic is available at the link.

Why do message persistence and durability matter when building large payment systems? We had messages that we could not afford to lose, for example the message that a person has initiated payment for a trip. This meant the messaging system we used had to be lossless: every message had to be delivered once. However, building a system that delivers each message exactly once is significantly harder than building one that delivers each message at least once. We decided to implement at-least-once delivery and chose a messaging bus to build it on: Kafka, set up as a lossless cluster, which is what our case required.
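What at-least-once delivery means for a consumer can be shown with a toy queue. This is an in-memory stand-in with hypothetical names, not Kafka's API: the only property it models is that a message stays queued until the consumer acknowledges it.

```python
from collections import deque


class AtLeastOnceQueue:
    """In-memory stand-in for a durable broker: a message leaves the queue only when acked."""

    def __init__(self):
        self._pending = deque()

    def publish(self, message):
        self._pending.append(message)

    def has_pending(self):
        return bool(self._pending)

    def deliver(self, handler):
        """Hand the oldest message to handler; keep it for redelivery unless handler returns True."""
        if not self._pending:
            return
        message = self._pending[0]
        try:
            acked = bool(handler(message))
        except Exception:
            acked = False  # a crashing consumer counts as "not acknowledged"
        if acked:
            self._pending.popleft()


def demo():
    queue = AtLeastOnceQueue()
    queue.publish("payment-initiated:42")
    seen = []

    def flaky_handler(message):
        seen.append(message)   # the side effect happens on every delivery
        return len(seen) > 1   # pretend the first ack never reached the broker

    while queue.has_pending():
        queue.deliver(flaky_handler)
    return seen


if __name__ == "__main__":
    print(demo())
```

The message is never lost, but the handler sees it twice. That duplicate is the price of at-least-once delivery, and it is the reason the consumers have to be idempotent.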

Idempotency

In distributed systems, anything can go wrong: connections can drop midway, or requests can time out. Clients will often retry these requests. An idempotent system ensures that no matter what happens, and no matter how many times a specific request is executed, the actual effect of that request happens only once. A good example is making a payment. If a client makes a payment request and the request succeeds, but the client times out before seeing the response, the client may retry the same request. With an idempotent system, the person paying is not charged twice; with a non-idempotent system, they could be.
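One common way to get this behavior is an idempotency key that the client sends with every retry of the same request. The sketch below is a single-process illustration with hypothetical names; it is not how our services are written, and in a real system the lookup and the store would have to be one atomic operation against consistent storage.

```python
class PaymentService:
    """Remembers the outcome of each idempotency key and replays it on retries."""

    def __init__(self):
        self.total_charged = 0
        self._results = {}  # idempotency key -> result of the first execution

    def charge(self, idempotency_key: str, amount: int) -> dict:
        # A retry with a known key returns the stored result without charging again.
        if idempotency_key in self._results:
            return self._results[idempotency_key]

        self.total_charged += amount
        result = {"status": "charged", "amount": amount}
        self._results[idempotency_key] = result
        return result


if __name__ == "__main__":
    service = PaymentService()
    first = service.charge("trip-42-payment", 1500)
    retry = service.charge("trip-42-payment", 1500)  # client timed out and retried
    print(first == retry, service.total_charged)
```

The retry gets the same response as the original call, and the rider is charged once.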

Designing idempotent distributed systems requires some kind of distributed locking strategy. This is where the concepts discussed earlier come into play. Say we want to implement idempotency with optimistic locking to prevent concurrent updates. For optimistic locking to work, the system must be strongly consistent, so that at the time of the operation we can check, using some form of versioning, whether another operation has already started.
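Optimistic locking with versioning boils down to a compare-and-set: a write succeeds only if the record still has the version the writer originally read. Here is a minimal single-process sketch with hypothetical names; it assumes the version check and the update happen atomically, which is exactly what a strongly consistent store has to provide.

```python
class VersionConflict(Exception):
    """Raised when the record changed since the caller read it."""


class VersionedStore:
    def __init__(self):
        self._rows = {}  # key -> (version, value)

    def read(self, key):
        """Return (version, value); a missing key has version 0."""
        return self._rows.get(key, (0, None))

    def write(self, key, expected_version, value):
        """Store value only if nobody else has written since expected_version was read."""
        current_version, _ = self._rows.get(key, (0, None))
        if current_version != expected_version:
            raise VersionConflict(
                f"{key}: expected version {expected_version}, found {current_version}"
            )
        self._rows[key] = (current_version + 1, value)
        return current_version + 1


if __name__ == "__main__":
    store = VersionedStore()
    version_a, _ = store.read("payment-42")  # worker A reads
    version_b, _ = store.read("payment-42")  # worker B reads the same version

    store.write("payment-42", version_a, "initiated")  # A wins
    try:
        store.write("payment-42", version_b, "initiated")  # B must not apply it again
    except VersionConflict as conflict:
        print("rejected:", conflict)
```

The losing writer re-reads the record, sees that the operation has already been applied, and skips it instead of charging a second time.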

There are many ways to achieve idempotency, and the right choice depends on the constraints of the system and the type of operation. Designing idempotent approaches is a worthy challenge for a developer: just look at Ben Nadel's posts, in which he describes the different strategies he has used, including both distributed locks and database constraints. When designing a distributed system, idempotency can easily be one of the things you overlook. We have run into cases where my team got burned because it had not ensured correct idempotency for some key operations.

Why does idempotency matter when building a large payment system? Most importantly, to avoid double charges and double refunds. Since our messaging system provides at-least-once, lossless delivery, we have to assume that any message may be delivered several times, and our systems must guarantee idempotency. We chose to handle this with versioning and optimistic locking, with the systems implementing idempotent behavior on top of strongly consistent storage as their data source.

Sharding and Quorum

Distributed systems often need to store far more data than a single node can hold. So how do we spread a data set across the right number of machines? The most popular technique is sharding. Data is partitioned horizontally, with some kind of hash used to assign each record to a partition. Although many distributed databases today implement sharding under the hood, it is an interesting topic in its own right and worth studying, especially resharding. In 2010, Foursquare had a 17-hour outage after hitting a sharding edge case, and the company later shared an interesting post-mortem that sheds light on the root cause.
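Hash-based partitioning, and why resharding deserves attention, can be sketched as follows. This is the naive "hash modulo shard count" scheme with names of my own choosing; real databases typically use more elaborate schemes (consistent hashing, range splits), so read it as an illustration of the problem rather than of any particular product.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard using a stable hash (Python's built-in hash() varies per process)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def moved_keys(keys: list[str], old_count: int, new_count: int) -> int:
    """Count keys that land on a different shard after changing the shard count."""
    return sum(1 for key in keys if shard_for(key, old_count) != shard_for(key, new_count))


if __name__ == "__main__":
    keys = [f"user-{i}" for i in range(1000)]
    print(shard_for("user-1", 4))
    # With modulo hashing, adding a single shard relocates most of the keys.
    print(moved_keys(keys, 4, 5), "of", len(keys), "keys move when going from 4 to 5 shards")
```

Going from four to five shards relocates the majority of keys in this scheme, which hints at why resharding a live system is where the interesting failures happen.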

Many distributed systems have data or computations that are replicated across multiple nodes. To make sure operations are carried out consistently, a voting approach is used: for an operation to be considered successful, a certain number of nodes must arrive at the same result. This is called a quorum.
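The voting idea can be sketched in a few lines. The function names are hypothetical and the node responses are plain values in a list; the `reads_see_latest_write` check encodes the well-known rule that read and write quorums overlap when R + W > N.

```python
from collections import Counter


def majority(node_count: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return node_count // 2 + 1


def quorum_result(responses: list, required: int):
    """Return the value that at least `required` nodes agree on, or None if there is no quorum."""
    if not responses:
        return None
    value, votes = Counter(responses).most_common(1)[0]
    return value if votes >= required else None


def reads_see_latest_write(n: int, w: int, r: int) -> bool:
    """True when every read quorum must overlap every write quorum (R + W > N)."""
    return r + w > n


if __name__ == "__main__":
    print(majority(3))
    print(quorum_result(["committed", "committed", "timeout"], majority(3)))
    print(quorum_result(["a", "b", "c"], majority(3)))
    print(reads_see_latest_write(n=3, w=2, r=2))
```

With three replicas, writing to two and reading from two guarantees that at least one node in every read has seen the latest write.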

Why do quorum and sharding matter when building a large payment system at Uber? Both are basic concepts that are used almost everywhere. I came across them when we were setting up replication in Cassandra. Cassandra (like other distributed systems) uses quorum and local quorum to ensure consistency across clusters.

Actor model

The familiar vocabulary we use to describe programming practices, things like variables, interfaces, and method calls, assumes a single machine. When we talk about distributed systems, we need different approaches. A common way to describe such systems is the actor model, in which code is thought of in terms of communication. The model is popular because it matches the mental model of how we picture, for example, people interacting in an organization. Another equally popular way of describing distributed systems is CSP, communicating sequential processes.

The actor model is built around actors that send messages to each other and react to them. Each actor can do only a limited set of things: create other actors, send messages to other actors, or decide what to do with the next message. With a few simple rules, we can describe complex distributed systems fairly well, including ones that can recover after an actor crashes. If you are not familiar with the approach, I recommend the article "The actor model in 10 minutes" by Brian Storti. Many languages have libraries or frameworks that implement the actor model. At Uber, for example, we use Akka for some of our systems.
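To give a feel for the model, here is a deliberately tiny, single-threaded sketch. It is not Akka and does not mirror Akka's API; all class names are made up. Each actor owns a mailbox and its own state, and the only way to influence another actor is to send it a message.

```python
from collections import deque


class ActorSystem:
    """Very small scheduler: runs actors one message at a time until all mailboxes are empty."""

    def __init__(self):
        self._ready = deque()

    def schedule(self, actor):
        self._ready.append(actor)

    def run(self):
        while self._ready:
            actor = self._ready.popleft()
            if actor.mailbox:
                actor.receive(actor.mailbox.popleft())


class Actor:
    def __init__(self, system):
        self.system = system
        self.mailbox = deque()

    def send(self, message):
        """Enqueue a message; the actor handles it later, in arrival order."""
        self.mailbox.append(message)
        self.system.schedule(self)

    def receive(self, message):
        raise NotImplementedError


class LedgerActor(Actor):
    def __init__(self, system):
        super().__init__(system)
        self.entries = []  # private state, changed only in response to messages

    def receive(self, message):
        self.entries.append(message)


class PaymentActor(Actor):
    def __init__(self, system, ledger):
        super().__init__(system)
        self.ledger = ledger

    def receive(self, message):
        # React to a message by sending another one; the ledger's state is never touched directly.
        self.ledger.send(("charged", message["amount"]))


if __name__ == "__main__":
    system = ActorSystem()
    ledger = LedgerActor(system)
    payments = PaymentActor(system, ledger)

    payments.send({"amount": 5})
    payments.send({"amount": 7})
    system.run()
    print(ledger.entries)
```

Real actor frameworks add what this sketch leaves out: concurrency, supervision of crashed actors, and sending messages across machines.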

Why does the actor model make sense for a large payment system? Many engineers worked on building our system, and a lot of them already had experience with distributed systems. We decided to follow a standard distributed model rather than reinvent the wheel by coming up with our own distributed concepts.

Reactive architecture

When building large distributed systems, the goal is usually to make them resilient, elastic, and scalable. Whether it is a payment system or some other high-load system, the patterns for getting there can be the same. People who work on such systems regularly discover and share best practices for building them, and reactive architecture is one such popular and widely used pattern.

To get started with reactive architecture, I suggest reading the Reactive Manifesto (also available in Russian) and watching the 12-minute video here.

Why does reactive architecture make sense when building a large payment system? Akka, the library we used to build most of our new payment system, is heavily influenced by reactive architecture. Many of the engineers building the system were already familiar with reactive best practices. Following the reactive principles of building a responsive, resilient, elastic, message-driven system therefore came naturally to us. Having a model to rely on, and against which to check the progress and direction of development, proved extremely useful, and I will rely on it when building new systems in the future.

Conclusion

I was lucky to take part in rebuilding a large-scale, distributed, and critical system: the one that powers payments at Uber. Working in this environment, I became familiar with many distributed concepts I had not used before. I have collected them here in the hope that others will find them useful for getting started with distributed systems, or will learn something new.

This post focused purely on the planning and architecture of such systems. There is a lot more to be said about building, deploying, and migrating high-load systems, and about operating them reliably; I plan to cover these topics in later posts.

Source: https://habr.com/ru/post/353734/