Eye Tracking, Emotions and VR: Technology Convergence and Current Research

Virtual reality, emotion recognition and eye tracking are three independently developing fields of knowledge and new, commercially attractive technology markets. In recent years they have increasingly been viewed through the lens of convergence: merging and synthesizing approaches to create a new generation of products. There is hardly anything surprising in this natural rapprochement. It is driven both by results, which so far can be spoken of only with caution, and by considerable user enthusiasm (incidentally, Steven Spielberg's recent film “Ready Player One” literally visualizes many of the expected scenarios). Let's discuss this in more detail.

Virtual reality is, first of all, an opportunity to immerse oneself as completely as possible in a frankly fantastical world: fight evil spirits, make friends with unicorns and dragons, imagine yourself as a giant centipede, or travel through endless space to new planets. However, for virtual reality to be perceived as real at the sensory level, to the point of blending with the surrounding world, it must contain elements of natural communication: emotions and eye contact. In VR, a person also can and should have additional superhuman abilities (for example, moving objects with a glance) as something self-evident. But things are not so simple: implementing these capabilities raises many new problems, along with interesting technological solutions designed to overcome them.

The gaming industry is rapidly adopting a host of technological innovations. Granted, simple eye trackers (in the form of game controllers) are already available to a wide range of computer-game players, while in other areas the widespread use of these developments is still to come. Against this background, it is no surprise that one of the fastest-developing trends in VR has been the combination of virtual reality and eye-tracking systems. Recently, the Swedish eye-tracking giant Tobii began a collaboration with Qualcomm; as widely reported, the VR developer Oculus (owned by Facebook) joined forces with The Eye Tribe, a well-known startup making low-cost eye trackers (a video demonstration of an Oculus headset with the integrated eye tracker is available); and Apple, in its traditionally secretive manner, acquired the large German eye-tracking company SMI (although no one yet knows the motive or what exactly drove the choice).

Integrating an eye tracker into virtual reality as a game controller lets the user control objects with a glance. In the simplest case, to grab something or select a menu item, the user just looks at the object for slightly longer than a set time (the fixation-duration threshold method, also known as dwell-time selection). However, this gives rise to the so-called Midas touch problem: the user only wants to examine an object carefully, but ends up involuntarily activating a programmed function they never intended to use.
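The fixation-duration threshold method fits in a few lines. The following is a minimal Python sketch, assuming a gaze tracker that reports, once per frame, the id of the object under the gaze (or `None`) together with the frame duration; the class name `DwellSelector` and the 0.8 s default threshold are hypothetical choices, not values from the article.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DwellSelector:
    """Fixed dwell-time (fixation-duration threshold) selection; hypothetical helper."""
    threshold_s: float = 0.8  # assumed threshold, in seconds
    _target: Optional[str] = field(default=None, init=False)
    _dwell_s: float = field(default=0.0, init=False)

    def update(self, gazed_object: Optional[str], dt_s: float) -> Optional[str]:
        """Feed one gaze sample; return the object id once its dwell reaches the threshold."""
        if gazed_object != self._target:
            # Gaze moved to another object or to empty space: restart the timer.
            self._target = gazed_object
            self._dwell_s = 0.0
            return None
        if gazed_object is None:
            return None
        self._dwell_s += dt_s
        if self._dwell_s >= self.threshold_s:
            self._dwell_s = 0.0  # restart, so continued staring selects again later
            return gazed_object
        return None
```

Because any gaze that lingers longer than `threshold_s` triggers a selection, a user who is merely studying an object keeps activating it: the Midas touch problem in its simplest form.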


Virtual King Midas

To solve the Midas problem, researchers from Hong Kong (Pi & Shi, 2017) suggest a dynamic fixation-duration threshold based on a probabilistic model. The model takes the subject's previous choices into account and computes the probability that each object will be chosen next; objects with a high probability get a shorter fixation-duration threshold than objects with a low probability. The proposed algorithm proved faster than the fixed-threshold method. Note, however, that the system was designed for eye typing, so it is applicable only to interfaces in which the set of objects does not change over time.
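The idea of a probability-dependent threshold can be illustrated with a short sketch. This is hypothetical and not Pi and Shi's published model: it assumes a simple bigram count model over past selections (with add-one smoothing) and a linear mapping from probability to a dwell time between an assumed `min_dwell_s` and `max_dwell_s`.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional


class AdaptiveDwell:
    """Hypothetical sketch: per-object dwell thresholds from a bigram choice model."""

    def __init__(self, objects: Iterable[str],
                 min_dwell_s: float = 0.3, max_dwell_s: float = 1.0) -> None:
        self.objects = list(objects)    # fixed set of selectable objects (e.g. keys)
        self.min_dwell_s = min_dwell_s  # assumed shortest threshold
        self.max_dwell_s = max_dwell_s  # assumed longest threshold
        # counts[prev][nxt]: how often `nxt` was chosen right after `prev`
        self.counts: Dict[Optional[str], Counter] = defaultdict(Counter)
        self.prev: Optional[str] = None

    def record_selection(self, obj: str) -> None:
        """Update the model after the user selects `obj`."""
        self.counts[self.prev][obj] += 1
        self.prev = obj

    def probability(self, obj: str) -> float:
        """P(obj | previous choice) with add-one (Laplace) smoothing."""
        row = self.counts[self.prev]
        total = sum(row.values()) + len(self.objects)
        return (row[obj] + 1) / total

    def threshold(self, obj: str) -> float:
        """Likely objects get a shorter dwell threshold (assumed linear mapping)."""
        p = self.probability(obj)
        return self.max_dwell_s - (self.max_dwell_s - self.min_dwell_s) * p
```

Because the model needs a fixed list of `objects` to normalize its probabilities, the sketch also shows why such an approach suits interfaces whose set of objects does not change, such as an on-screen keyboard.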

A group of developers from the Technical University of Munich (Schenk, Dreiser, Rigoll, & Dorr, 2017) proposed GazeEverywhere, a system for interacting with computer interfaces by gaze, which incorporates their SPOCK method (Schenk, Tiefenbacher, Rigoll, & Dorr, 2016) to solve the Midas problem. SPOCK is a two-step object selection method: once a fixation on an object exceeds the set duration threshold, two circles appear above and below the object and slowly move in different directions; the user's task is to follow one of them with their eyes (detecting this smooth pursuit eye movement is what activates the selected object). GazeEverywhere is also notable for its online recalibration algorithm, which substantially improves the accuracy with which the gaze is located on an object.
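The confirmation step of SPOCK relies on detecting which moving circle the eyes follow. One common way to detect smooth pursuit, assumed here and not necessarily what SPOCK itself uses, is to correlate the gaze trajectory with each target's trajectory and accept the best match above a threshold. The function names, the `(x, y)` sample format and the 0.8 threshold below are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) gaze or target position


def pearson(a: Sequence[float], b: Sequence[float]) -> Optional[float]:
    """Pearson correlation coefficient, or None if either series is constant."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return None
    return cov / math.sqrt(va * vb)


def pursuit_score(gaze: Sequence[Point], target: Sequence[Point]) -> float:
    """Worst per-axis correlation between the gaze and one moving target."""
    scores: List[float] = []
    for axis in (0, 1):
        t = [p[axis] for p in target]
        if max(t) == min(t):
            continue  # the target does not move along this axis
        r = pearson([p[axis] for p in gaze], t)
        scores.append(r if r is not None else 0.0)  # a motionless gaze scores 0
    return min(scores) if scores else 0.0


def followed_target(gaze: Sequence[Point], targets: Sequence[Sequence[Point]],
                    threshold: float = 0.8) -> Optional[int]:
    """Index of the target the eyes are pursuing, or None (hypothetical threshold)."""
    scores = [pursuit_score(gaze, t) for t in targets]
    best = max(range(len(scores)), key=lambda i: scores[i])
    return best if scores[best] >= threshold else None
```

A user who simply keeps staring at the original object produces no correlated movement, so nothing is activated; that is how the second step guards against accidental selections.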

As an alternative to the fixation-duration threshold for selecting objects and solving the Midas problem, a group of scientists from Weimar (Huckauf, Goettel, Heinbockel, & Urbina, 2005) proposes using anti-saccades as the selection action (an anti-saccade is an eye movement in the direction opposite to the target). This method allows faster object selection than the fixation-duration threshold, but it is less accurate. Its use outside the laboratory also raises questions: after all, looking at the desired object is more natural for a person than deliberately looking away from it.
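An anti-saccade check comes down to comparing the direction of the eye movement with the direction to the target. The sketch below is illustrative only: it assumes the target appears at a peripheral position while the user fixates a starting point, and its thresholds (`min_amplitude` in degrees of visual angle, `max_cos` for "roughly opposite") are hypothetical, not values from Huckauf et al.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # gaze or target position, assumed in degrees of visual angle


def is_anti_saccade(start: Point, end: Point, target: Point,
                    min_amplitude: float = 2.0, max_cos: float = -0.7) -> bool:
    """True if the gaze jumped roughly away from `target` (hypothetical thresholds)."""
    sx, sy = end[0] - start[0], end[1] - start[1]        # saccade vector
    tx, ty = target[0] - start[0], target[1] - start[1]  # direction to the target
    amp = math.hypot(sx, sy)
    dist = math.hypot(tx, ty)
    if amp < min_amplitude or dist == 0:
        return False  # too small to count as a deliberate saccade
    cos = (sx * tx + sy * ty) / (amp * dist)  # cosine of the angle between the two
    return cos <= max_cos
```

A normal (pro-)saccade toward the target yields a cosine near +1 and is ignored, which is what lets the user inspect an object without selecting it.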

Wu et al. (Wu, Wang, Lin, & Zhou, 2017) developed an even simpler solution to the Midas problem: they proposed using a one-eyed wink (i.e., a clear pattern in which one eye is open and the other is closed) as the selection signal. However, integrating this selection method into dynamic gameplay is hardly feasible, if only because it is uncomfortable.
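A one-eyed wink differs from an ordinary blink in that only one eye closes, and it has to last long enough to be deliberate. The following hypothetical sketch assumes the tracker provides a per-frame open/closed flag for each eye; the `min_frames` duration threshold is an assumption, not a parameter from Wu et al.

```python
from typing import Iterable, List, Optional, Tuple


def detect_winks(frames: Iterable[Tuple[bool, bool]], min_frames: int = 6) -> List[int]:
    """Return the frame indices at which a wink is confirmed.

    Each frame is (left_open, right_open). A wink is assumed to be a run of at
    least `min_frames` consecutive frames with exactly one eye closed; blinks
    (both eyes closed) and open eyes reset the run.
    """
    events: List[int] = []
    run = 0
    side: Optional[str] = None
    for i, (left_open, right_open) in enumerate(frames):
        if left_open != right_open:  # exactly one eye is closed
            current = "left" if not left_open else "right"
            run = run + 1 if current == side else 1
            side = current
            if run == min_frames:  # fire once per wink, not on every frame
                events.append(i)
        else:
            run, side = 0, None
    return events
```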

Pfeuffer et al. (Pfeuffer, Mayer, Mardanbegi, & Gellersen, 2017) suggest moving away from interfaces in which objects are selected and manipulated through eye movements alone, and propose Gaze + pinch interaction instead. Here an object is selected by looking at it while making a “pinch” gesture with the fingers (of one hand or of both hands). The Gaze + pinch technique has advantages over “virtual hands”, because it allows virtual objects to be manipulated at a distance, and over handheld controllers, because it frees the user's hands and makes it possible to extend the functionality with other gestures.
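The division of labor in Gaze + pinch (the eyes pick the object, the hand moves it) can be expressed as a small state machine. This is a hypothetical sketch, not Pfeuffer et al.'s implementation: it assumes per-frame input consisting of the id of the gazed object, a boolean pinch state and the 3D hand position, and it maps hand motion to object motion one-to-one.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GazePinch:
    """Hypothetical gaze + pinch controller: gaze picks the object, the hand moves it."""
    positions: Dict[str, Vec3]  # object id -> 3D position
    held: Optional[str] = field(default=None, init=False)
    _last_hand: Optional[Vec3] = field(default=None, init=False)

    def update(self, gazed: Optional[str], pinching: bool, hand: Vec3) -> None:
        """Process one frame of gaze target, pinch state and hand position."""
        if not pinching:
            # No pinch: release whatever was held.
            self.held, self._last_hand = None, None
            return
        if self.held is None:
            # Pinch active but nothing held yet: grab the gazed object (if any),
            # however far away it is.
            self.held = gazed
            self._last_hand = hand
            return
        # Pinch held: move the object by the hand's displacement since the last frame.
        dx, dy, dz = (h - l for h, l in zip(hand, self._last_hand))
        x, y, z = self.positions[self.held]
        self.positions[self.held] = (x + dx, y + dy, z + dz)
        self._last_hand = hand
```

In this sketch the gaze matters only when the grab happens, so the user can look elsewhere while moving the object. A real system would likely scale the hand displacement with the object's distance; the 1:1 mapping is a simplification.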

VR Emotions

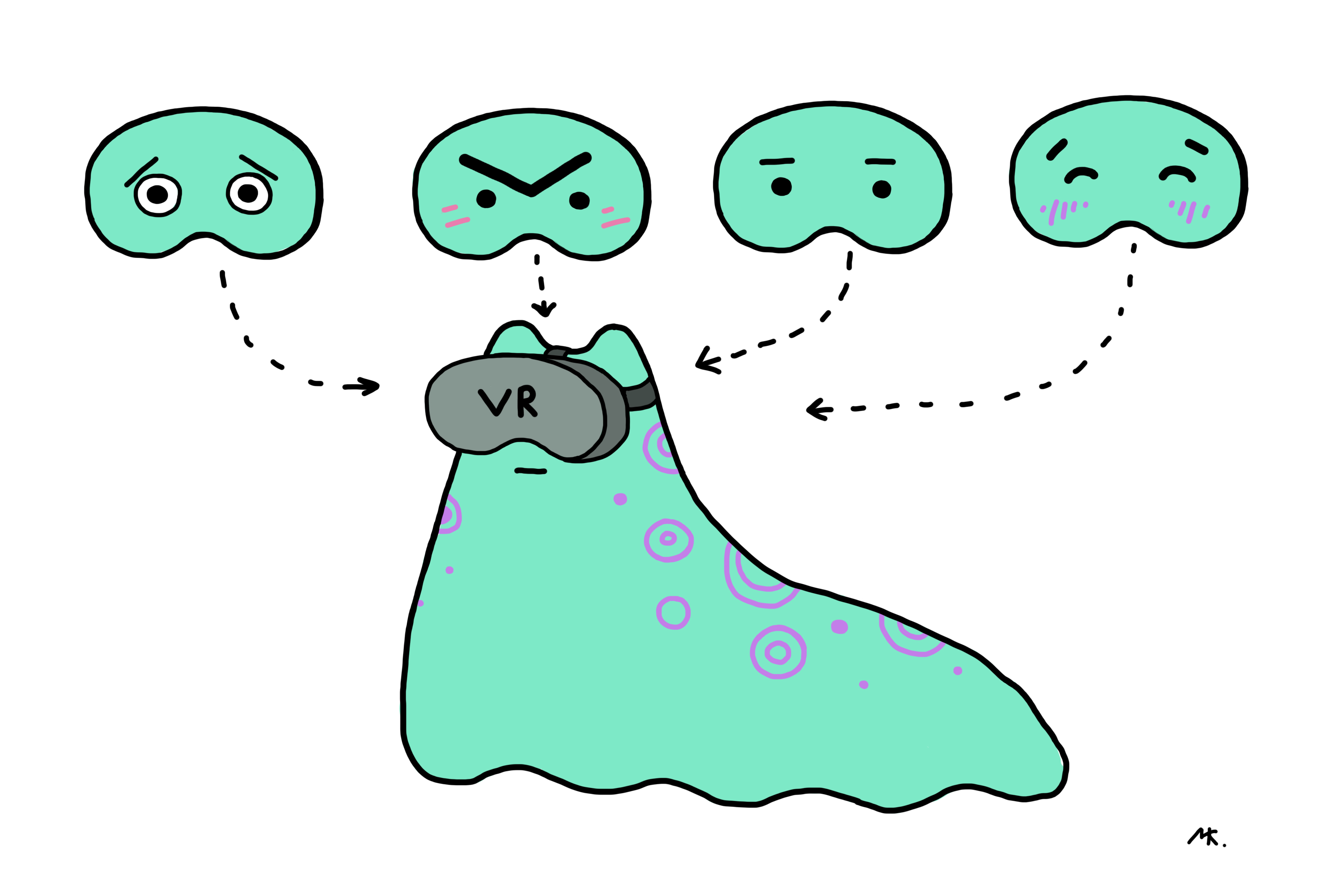

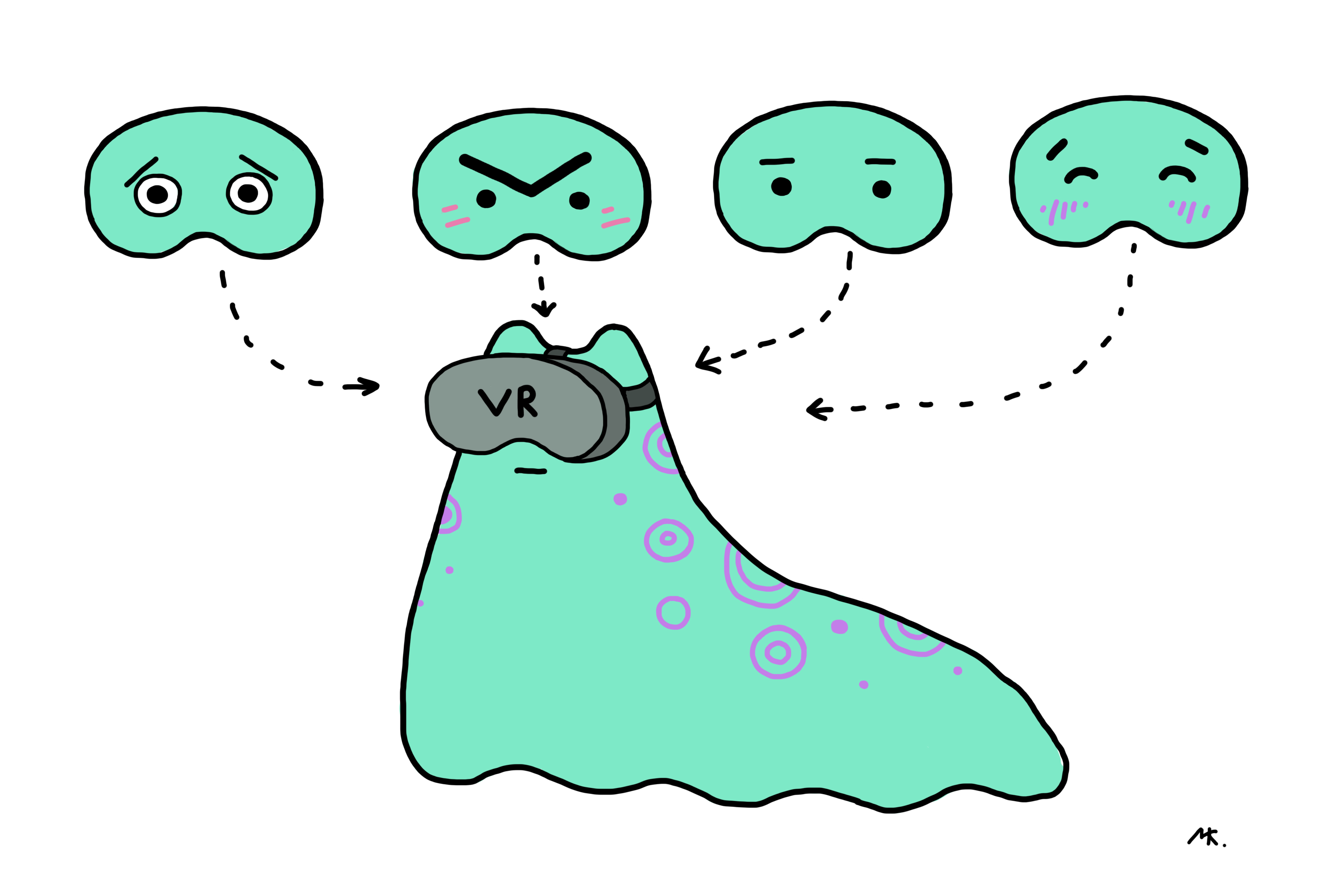

For successful virtual communication, social networks and multiplayer VR games, user avatars must above all be able to express emotions naturally. Currently, two types of solutions for adding emotionality to VR can be distinguished: installing additional devices and purely software solutions.

For example, the MindMaze Mask project offers a solution based on sensors inside the headset that measure the electrical activity of the facial muscles. Its creators also demonstrated an algorithm that predicts facial expressions from the current muscle activity for high-quality avatar rendering. FACEteq (emotion sensing in VR) is built on similar principles.
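The MindMaze and FACEteq algorithms themselves are not described here, but the general step from muscle activity to avatar expression can be sketched. The sketch below assumes raw EMG samples per electrode channel, uses root-mean-square amplitude over a short window as the activity feature, and maps it linearly to expression weights through a hypothetical per-user `mapping`; all names and weights are illustrative.

```python
import math
from typing import Dict, Sequence


def rms(window: Sequence[float]) -> float:
    """Root-mean-square amplitude of one EMG channel over a short window."""
    return math.sqrt(sum(v * v for v in window) / len(window))


def expression_weights(channels: Sequence[Sequence[float]],
                       mapping: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """Map per-channel EMG activity to avatar expression weights in [0, 1].

    `mapping` holds one weight per channel for each expression; these would
    come from a per-user calibration and are purely hypothetical here.
    """
    activity = [rms(ch) for ch in channels]
    out: Dict[str, float] = {}
    for name, w in mapping.items():
        score = sum(a * wi for a, wi in zip(activity, w))
        out[name] = min(1.0, max(0.0, score))  # clamp to a valid blend weight
    return out
```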

The startup Veeso equipped VR glasses with an additional camera that captures movements of the lower half of the face. By combining images of the lower face and the eye area, the system recognizes the expression of the entire face and transfers it to a virtual avatar.

A team from the Georgia Institute of Technology in partnership with Google (Hickson, Dufour, Sud, Kwatra, & Essa, 2017) offered a more elegant solution: a technology that recognizes human emotions in VR glasses without adding any hardware to the headset. Their algorithm is based on a convolutional neural network and recognizes facial expressions (“anger”, “joy”, “surprise”, “neutral”, “closed eyes”) correctly in 74% of cases on average. To train it, the developers collected their own dataset of eye images from the infrared cameras of the integrated eye tracker, recorded while the participants (23 in total), wearing VR glasses, acted out particular emotions. The dataset also included whole-face emotional expressions of the participants recorded with a regular camera. To describe facial expressions, they chose the well-known Facial Action Coding System (FACS), which is based on action units (although they used only the action units of the upper half of the face). The algorithm recognized action units with an accuracy of 63.7% without personalization and 70.2% with personalization.
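The article does not explain how personalization was implemented. One simple option, assumed here purely for illustration, is to record a few images of the user's neutral expression and subtract their pixel-wise mean from each new eye image before passing it to the classifier. In the hypothetical sketch below, `model` stands in for any trained classifier such as the CNN described above; images are plain nested lists of grayscale values.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]  # grayscale eye image, rows of pixel values


def mean_image(images: Sequence[Image]) -> Image:
    """Pixel-wise mean of equally sized grayscale images."""
    n = len(images)
    h, w = len(images[0]), len(images[0][0])
    return [[sum(img[r][c] for img in images) / n for c in range(w)] for r in range(h)]


def personalize(image: Image, neutral_mean: Image) -> Image:
    """Subtract the user's mean neutral-expression image (assumed personalization step)."""
    return [[p - m for p, m in zip(row, mrow)] for row, mrow in zip(image, neutral_mean)]


def classify(image: Image, neutral_mean: Image, model: Callable[[Image], str]) -> str:
    """Run any expression classifier on the personalized eye image."""
    return model(personalize(image, neutral_mean))
```

The intent of such a baseline is to remove person-specific appearance (eye shape, skin tone, headset fit) so that the classifier sees mostly the change caused by the expression.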

Similar work on detecting emotions from images of the eye area alone also exists outside the VR context (Priya & Muralidhar, 2017; Vinotha, Arun, & Arun, 2013). For example, Priya and Muralidhar (2017) also built a system based on action units and derived an algorithm that recognizes seven emotional facial expressions (joy, sadness, anger, fear, disgust, surprise and neutral) from seven points (three along the eyebrow, two at the corners of the eye, and two at the centers of the upper and lower eyelids), with an accuracy of 78.2%.
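To make the seven-point layout concrete, the following sketch turns the landmarks into a few scale-free geometric features and assigns the nearest emotion template. This is not Priya and Muralidhar's action-unit algorithm; the feature definitions, the upward-pointing y axis, the inner-to-outer ordering of the eyebrow points and the `centroids` dictionary (which would have to be learned from labelled data) are all assumptions.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]  # (x, y), y axis assumed to point up


def eye_features(brow: Sequence[Point], inner: Point, outer: Point,
                 upper: Point, lower: Point) -> Tuple[float, float, float]:
    """Scale-free features from 3 eyebrow points, 2 eye corners and 2 eyelid centers."""
    width = math.dist(inner, outer)  # eye width, used for normalization
    eye_center_y = (upper[1] + lower[1]) / 2
    brow_height = (sum(p[1] for p in brow) / len(brow) - eye_center_y) / width
    opening = (upper[1] - lower[1]) / width        # how wide the eye is open
    brow_slope = (brow[0][1] - brow[-1][1]) / width  # inner minus outer brow height
    return brow_height, opening, brow_slope


def nearest_emotion(features: Sequence[float],
                    centroids: Dict[str, Sequence[float]]) -> str:
    """Nearest-centroid classification over hypothetical per-emotion templates."""
    return min(centroids, key=lambda name: math.dist(features, centroids[name]))
```

Normalizing by eye width keeps the features independent of how close the face is to the camera, which a seven-point scheme needs if it is to work across image scales.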

Research and experiments around the world are ongoing, and the Neurodata Lab eye-tracking team will be sure to tell you about them. Stay with us.

Bibliography:

Hickson, S., Dufour, N., Sud, A., Kwatra, V., & Essa, I. (2017). Eyemotion: Classifying facial expressions in VR using eye-tracking cameras. arXiv:1707.07204. https://arxiv.org/abs/1707.07204

Huckauf, A., Goettel, T., Heinbockel, M., & Urbina, M. (2005). Anti-saccades can reduce the Midas touch problem. In Proceedings of the 2nd Symposium on Applied Perception in Graphics and Visualization (APGV 2005), A Coruña, Spain, August 26–28, 2005 (p. 170). https://doi.org/10.1145/1080402.1080453

Pfeuffer, K., Mayer, B., Mardanbegi, D., & Gellersen, H. (2017). Gaze + pinch interaction in virtual reality. In Proceedings of the 5th Symposium on Spatial User Interaction (SUI '17) (pp. 99–108). https://doi.org/10.1145/3131277.3132180

Pi, J., & Shi, B. E. (2017). Probabilistic adjustment of dwell time for eye typing. In Proceedings of the 10th International Conference on Human System Interactions (HSI 2017) (pp. 251–257). https://doi.org/10.1109/HSI.2017.8005041

Priya, V. R., & Muralidhar, A. (2017). Facial emotion recognition using eye. International Journal of Applied Engineering Research, 12 (September), 5655–5659. https://doi.org/10.1109/SMC.2015.387

Schenk, S., Dreiser, M., Rigoll, G., & Dorr, M. (2017). GazeEverywhere: Enabling your computer for everyday scenarios. In CHI '17: Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (pp. 3034–3044). https://doi.org/10.1145/3025453.3025455

Schenk, S., Tiefenbacher, P., Rigoll, G., & Dorr, M. (2016). SPOCK. In CHI EA '16: Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems (pp. 2681–2687). https://doi.org/10.1145/2851581.2892291

Vinotha, S. R., Arun, R., & Arun, T. (2013). Emotion recognition from human eye expression. International Journal of Research in Computer and Communication Technology, 2(4), 158–164.

Wu, T., Wang, P., Lin, Y., & Zhou, C. (2017). Non resistant eye control approach for disabled people based on Kinect 2.0 sensor. IEEE Sensors Letters, 1(4), 1–4. https://doi.org/10.1109/LSENS.2017.2720718

Author:

Maria Konstantinova, researcher at Neurodata Lab, biologist, physiologist, and specialist in the visual sensory system, oculography and oculomotor control.

Source: https://habr.com/ru/post/353476/
