How to use the geodata analysis to predict the number of emergency calls in different parts of the city?

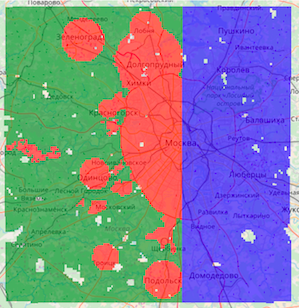

Try to solve the problem from the online hackathon Geohack.112 . Given: the territory of Moscow and the Moscow region was divided into squares of sizes from 500 to 500 meters. The initial data is the average number of emergency calls per day (numbers 112, 101, 102, 103, 104, 010, 020, 030, 040). The region under consideration was divided into western and eastern parts. Participants are invited, having learned from the western part, to predict the number of emergency calls for all squares in the east.

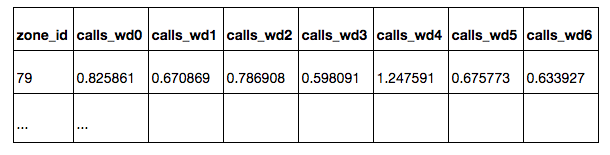

The zones.csv table lists all the squares with their coordinates. All squares are located in Moscow, or at a short distance from Moscow. The squares located in the western part of the sample are designed to train the model — the average number of emergency calls from a square per day is known for these squares:

calls_daily : all days

calls_workday : on workdays

calls_weekend : on weekends

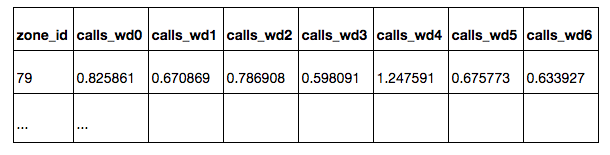

calls_wd {D} : By the day of the week D (0 - Monday, 6 - Sunday)

')

On the squares from the eastern part of the sample, it is necessary to construct a forecast of the number of calls for all days of the week. The quality assessment of the prediction will be made on a subset of squares, which does not include squares, from where calls are received extremely rarely. A subset of target squares has is_target = 1 in the table. For test squares, the values calls_ * and is_target are hidden.

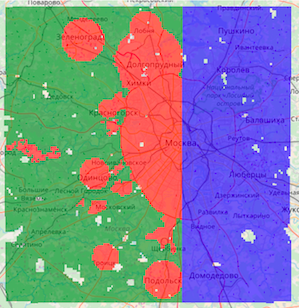

The map shows squares of three types:

Green - from the training part, not targeted

Red - from the training part, the target

Blue - test, it is necessary to build a forecast for them

As a solution, it is necessary to provide a CSV table with predictions for all test squares, for each square - for all days of the week.

Quality is evaluated only by a subset of target squares. The participants do not know which of the squares are target, however, the principle of choosing target squares in the training and test parts is identical. During the competition, the quality is estimated at 30% of the test target squares (chosen randomly), at the end of the competition the results are summed up on the remaining 70% of the squares.

The prediction quality metric, Kendall's Tau-b rank correlation coefficient, is calculated as the proportion of pairs of objects with incorrectly ordered predictions corrected for pairs with the same value of the target variable. The metric estimates the order in which the predictions relate to each other, rather than their exact values. Different days of the week are considered independent elements of the sample, i.e. the correlation coefficient is calculated according to the predictions for all test pairs (zone_id, day of the week).

Predicting the number of calls can be done using machine learning methods. To do this, each square needs to construct a vector with a feature description. Having trained a model in the marked western part of Moscow, it can be used to predict the target variable in the eastern part.

Under the terms of the competition, the data for solving the problem can be taken from any open source. When it comes to describing a small area on a map, the first thing that comes to mind is OpenStreetMap, an open, non-commercial electronic map that is created by the hands of the community.

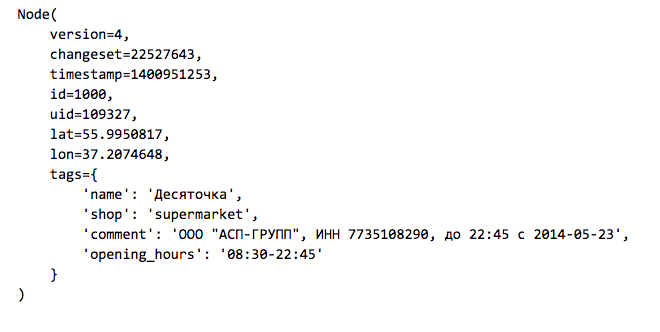

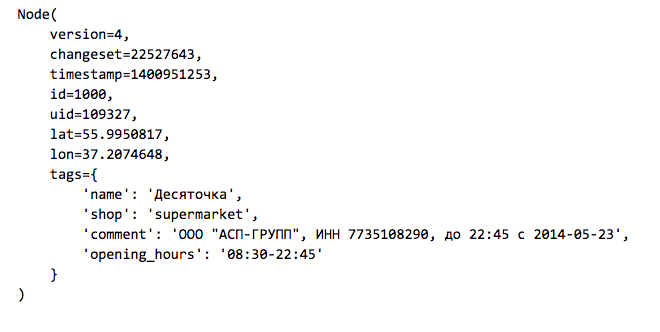

An OSM card is a collection of elements of three types:

Node: points on the map

Way: roads, squares, given by a set of points

Relation: links between elements, for example combining a multi-part road

Elements may have a set of tags - key-value pairs. Here is an example of a store that is listed on the map as an element of type Node:

The actual upload of OpenStreetMap data can be taken from the site GIS-Lab.info . Since we are interested in Moscow and its immediate environment, we’ll upload the RU-MOS.osm.pbf file - part of OpenStreetMap from the corresponding region in the binary format osm.pbf . There is a simple osmread library for reading such files from Python.

Before we start working, we consider from OSM all elements of the Node type from the area we need, which have tags (we will restrict the others and will not use them later).

The organizers of the competition prepared a baseline, which is available here . All the code that follows is contained in this baseline.

Working in Python, geodata can be quickly visualized on an interactive map using the folium library, which has Leaflet.js , the standard solution for displaying OpenStreetMap, under the hood.

We aggregate the resulting set of points by squares and construct simple signs:

1. Distance from the center of the square to the Kremlin

2. The number of points in the radius R from the center of the square (for different values of R)

a. all points tagged

b. railway stations

c. of stores

d. public transport stops

3. The maximum and average distance from the center of the square to the nearest points of the above species.

To quickly find points in proximity to the center of the square, we will use the kd tree data structure implemented in the SciKit-Learn package in the NearestNeighbors class.

As a result, for each square from the training and test sample, we have a trait description that can be used for prediction. A small modification of the proposed code can be taken into account in the signs of objects of other types. Participants can add their features, thereby giving the model more information about urban development.

Call number prediction

Experienced data analytics participants know that in order to get a high place, it is important not only to look at a public leaderboard, but also to do your own validation on a training set. We make a simple division of the training part of the sample into subsamples for training and validation in the ratio of 70/30.

Take only target squares and train a random forest model (RandomForestRegressor) to predict the average number of calls per day.

Let us evaluate the quality on the validation subsample, for all days of the week we construct the same prediction. The quality metric is a non-parametric correlation coefficient of Kendall tau-b, it is implemented in the SciPy package as a function of scipy.stats.kendalltau.

It turns out Validation score: 0.656881482683

This is not bad, because a metric value of 0 means no correlation, and 1 means a complete monotonic correspondence between real values and predicted ones.

Before joining the ranks of participants who receive an invitation to Data Fest, there remains one small step: to build a table with predictions on all test squares and send them to the system.

Why analyze geodata?

The main sources of data, not only spatial (geographic), but also temporal, for any telecom company are the complexes of equipment for receiving and transmitting a signal located throughout the countries of the service - Base Stations (BS). Spatial analysis of data is more difficult technically, but it can bring significant benefits and get signs that add a significant contribution to the effectiveness of machine learning models.

At MTS, using geographic data helps expand the knowledge base. They can be used to improve the quality of the service provided when analyzing subscribers' complaints about the quality of communication, improving the coverage of the communication signal, planning the development of the network and solving other problems where space-time communication is an important factor.

Increasing, especially in large cities, population density, the rapid development of infrastructure and road network, satellite images and vector maps, the number of buildings and POIs (points of interest) from open area maps related to the provision of services - all these spatial data can be obtained from external sources. Such data can be used as an additional source of information, as well as provide an objective picture, regardless of network coverage.

Did you like the task? Then we invite you to take part in the online hackathon on geohack.112 geographic data analysis . Register and upload your solutions to the site until April 24th. The authors of the three best results will receive cash prizes. Separate nominations are provided for participants who submitted the best public solution of the problem and the best visualization of data journalism. The total prize fund of MTS GeoHack is 500,000 rubles . We hope to see new interesting approaches to the generation of spatial features, visualization of geodata and the use of new open sources of information.

The winners will be awarded on April 28 at the DataFest conference.

The zones.csv table lists all the squares with their coordinates. All squares are located in Moscow, or at a short distance from Moscow. The squares located in the western part of the sample are designed to train the model — the average number of emergency calls from a square per day is known for these squares:

calls_daily : all days

calls_workday : on workdays

calls_weekend : on weekends

calls_wd {D} : By the day of the week D (0 - Monday, 6 - Sunday)

')

On the squares from the eastern part of the sample, it is necessary to construct a forecast of the number of calls for all days of the week. The quality assessment of the prediction will be made on a subset of squares, which does not include squares, from where calls are received extremely rarely. A subset of target squares has is_target = 1 in the table. For test squares, the values calls_ * and is_target are hidden.

The map shows squares of three types:

Green - from the training part, not targeted

Red - from the training part, the target

Blue - test, it is necessary to build a forecast for them

As a solution, it is necessary to provide a CSV table with predictions for all test squares, for each square - for all days of the week.

Quality is evaluated only by a subset of target squares. The participants do not know which of the squares are target, however, the principle of choosing target squares in the training and test parts is identical. During the competition, the quality is estimated at 30% of the test target squares (chosen randomly), at the end of the competition the results are summed up on the remaining 70% of the squares.

The prediction quality metric, Kendall's Tau-b rank correlation coefficient, is calculated as the proportion of pairs of objects with incorrectly ordered predictions corrected for pairs with the same value of the target variable. The metric estimates the order in which the predictions relate to each other, rather than their exact values. Different days of the week are considered independent elements of the sample, i.e. the correlation coefficient is calculated according to the predictions for all test pairs (zone_id, day of the week).

Reading a table of squares with Pandas

import pandas df_zones = pandas.read_csv('data/zones.csv', index_col='zone_id') df_zones.head() Extracting features from OpenStreetMap

Predicting the number of calls can be done using machine learning methods. To do this, each square needs to construct a vector with a feature description. Having trained a model in the marked western part of Moscow, it can be used to predict the target variable in the eastern part.

Under the terms of the competition, the data for solving the problem can be taken from any open source. When it comes to describing a small area on a map, the first thing that comes to mind is OpenStreetMap, an open, non-commercial electronic map that is created by the hands of the community.

An OSM card is a collection of elements of three types:

Node: points on the map

Way: roads, squares, given by a set of points

Relation: links between elements, for example combining a multi-part road

Elements may have a set of tags - key-value pairs. Here is an example of a store that is listed on the map as an element of type Node:

The actual upload of OpenStreetMap data can be taken from the site GIS-Lab.info . Since we are interested in Moscow and its immediate environment, we’ll upload the RU-MOS.osm.pbf file - part of OpenStreetMap from the corresponding region in the binary format osm.pbf . There is a simple osmread library for reading such files from Python.

Before we start working, we consider from OSM all elements of the Node type from the area we need, which have tags (we will restrict the others and will not use them later).

The organizers of the competition prepared a baseline, which is available here . All the code that follows is contained in this baseline.

Loading objects from OpenStreetMap

import pickle import osmread from tqdm import tqdm_notebook LAT_MIN, LAT_MAX = 55.309397, 56.13526 LON_MIN, LON_MAX = 36.770379, 38.19270 osm_file = osmread.parse_file('osm/RU-MOS.osm.pbf') tagged_nodes = [ entry for entry in tqdm_notebook(osm_file, total=18976998) if isinstance(entry, osmread.Node) if len(entry.tags) > 0 if (LAT_MIN < entry.lat < LAT_MAX) and (LON_MIN < entry.lon < LON_MAX) ] # with open('osm/tagged_nodes.pickle', 'wb') as fout: pickle.dump(tagged_nodes, fout, protocol=pickle.HIGHEST_PROTOCOL) # with open('osm/tagged_nodes.pickle', 'rb') as fin: tagged_nodes = pickle.load(fin) Working in Python, geodata can be quickly visualized on an interactive map using the folium library, which has Leaflet.js , the standard solution for displaying OpenStreetMap, under the hood.

An example of visualization with folium

import folium fmap = folium.Map([55.753722, 37.620657]) # / for node in tagged_nodes: if node.tags.get('railway') == 'station': folium.CircleMarker([node.lat, node.lon], radius=3).add_to(fmap) # calls_thresh = df_zones.calls_daily.quantile(.99) for _, row in df_zones.query('calls_daily > @calls_thresh').iterrows(): folium.features.RectangleMarker( bounds=((row.lat_bl, row.lon_bl), (row.lat_tr, row.lon_tr)), fill_color='red', ).add_to(fmap) # fmap.save('map_demo.html') We aggregate the resulting set of points by squares and construct simple signs:

1. Distance from the center of the square to the Kremlin

2. The number of points in the radius R from the center of the square (for different values of R)

a. all points tagged

b. railway stations

c. of stores

d. public transport stops

3. The maximum and average distance from the center of the square to the nearest points of the above species.

To quickly find points in proximity to the center of the square, we will use the kd tree data structure implemented in the SciKit-Learn package in the NearestNeighbors class.

Building a table with a characteristic description of the squares

import collections import math import numpy as np from sklearn.neighbors import NearestNeighbors kremlin_lat, kremlin_lon = 55.753722, 37.620657 def dist_calc(lat1, lon1, lat2, lon2): R = 6373.0 lat1 = math.radians(lat1) lon1 = math.radians(lon1) lat2 = math.radians(lat2) lon2 = math.radians(lon2) dlon = lon2 - lon1 dlat = lat2 - lat1 a = math.sin(dlat / 2)**2 + math.cos(lat1) * math.cos(lat2) * \ math.sin(dlon / 2)**2 c = 2 * math.atan2(math.sqrt(a), math.sqrt(1 - a)) return R * c df_features = collections.OrderedDict([]) df_features['distance_to_kremlin'] = df_zones.apply( lambda row: dist_calc(row.lat_c, row.lon_c, kremlin_lat, kremlin_lon), axis=1) # , POINT_FEATURE_FILTERS = [ ('tagged', lambda node: len(node.tags) > 0), ('railway', lambda node: node.tags.get('railway') == 'station'), ('shop', lambda node: 'shop' in node.tags), ('public_transport', lambda node: 'public_transport' in node.tags), ] # X_zone_centers = df_zones[['lat_c', 'lon_c']].as_matrix() for prefix, point_filter in POINT_FEATURE_FILTERS: # coords = np.array([ [node.lat, node.lon] for node in tagged_nodes if point_filter(node) ]) # neighbors = NearestNeighbors().fit(coords) # " R " for radius in [0.001, 0.003, 0.005, 0.007, 0.01]: dists, inds = neighbors.radius_neighbors(X=X_zone_centers, radius=radius) df_features['{}_points_in_{}'.format(prefix, radius)] = \ np.array([len(x) for x in inds]) # " K " for n_neighbors in [3, 5, 10]: dists, inds = neighbors.kneighbors(X=X_zone_centers, n_neighbors=n_neighbors) df_features['{}_mean_dist_k_{}'.format(prefix, n_neighbors)] = \ dists.mean(axis=1) df_features['{}_max_dist_k_{}'.format(prefix, n_neighbors)] = \ dists.max(axis=1) df_features['{}_std_dist_k_{}'.format(prefix, n_neighbors)] = \ dists.std(axis=1) # " " df_features['{}_min'.format(prefix)] = dists.min(axis=1) # features.csv df_features = pandas.DataFrame(df_features, index=df_zones.index) df_features.to_csv('data/features.csv') df_features.head() As a result, for each square from the training and test sample, we have a trait description that can be used for prediction. A small modification of the proposed code can be taken into account in the signs of objects of other types. Participants can add their features, thereby giving the model more information about urban development.

Call number prediction

Experienced data analytics participants know that in order to get a high place, it is important not only to look at a public leaderboard, but also to do your own validation on a training set. We make a simple division of the training part of the sample into subsamples for training and validation in the ratio of 70/30.

Take only target squares and train a random forest model (RandomForestRegressor) to predict the average number of calls per day.

Isolation of the validation subsample and learning by RandomForest

from sklearn.model_selection import train_test_split from sklearn.ensemble import RandomForestRegressor df_zones_train = df_zones.query('is_test == 0 & is_target == 1') idx_train, idx_valid = train_test_split(df_zones_train.index, test_size=0.3) X_train = df_features.loc[idx_train, :] y_train = df_zones.loc[idx_train, 'calls_daily'] model = RandomForestRegressor(n_estimators=100, n_jobs=4) model.fit(X_train, y_train) Let us evaluate the quality on the validation subsample, for all days of the week we construct the same prediction. The quality metric is a non-parametric correlation coefficient of Kendall tau-b, it is implemented in the SciPy package as a function of scipy.stats.kendalltau.

It turns out Validation score: 0.656881482683

This is not bad, because a metric value of 0 means no correlation, and 1 means a complete monotonic correspondence between real values and predicted ones.

Forecast and quality assessment on validation

from scipy.stats import kendalltau X_valid = df_features.loc[idx_valid, :] y_valid = df_zones.loc[idx_valid, 'calls_daily'] y_pred = model.predict(X_valid) target_columns = ['calls_wd{}'.format(d) for d in range(7)] df_valid_target = df_zones.loc[idx_valid, target_columns] df_valid_predictions = pandas.DataFrame(collections.OrderedDict([ (column_name, y_pred) for column_name in target_columns ]), index=idx_valid) df_comparison = pandas.DataFrame({ 'target': df_valid_target.unstack(), 'prediction': df_valid_predictions.unstack(), }) valid_score = kendalltau(df_comparison['target'], df_comparison['prediction']).correlation print('Validation score:', valid_score) Before joining the ranks of participants who receive an invitation to Data Fest, there remains one small step: to build a table with predictions on all test squares and send them to the system.

Building predictions on the test

idx_test = df_zones.query('is_test == 1').index X_test = df_features.loc[idx_test, :] y_pred = model.predict(X_test) df_test_predictions = pandas.DataFrame(collections.OrderedDict([ (column_name, y_pred) for column_name in target_columns ]), index=idx_test) df_test_predictions.to_csv('data/sample_submission.csv') df_test_predictions.head() Why analyze geodata?

The main sources of data, not only spatial (geographic), but also temporal, for any telecom company are the complexes of equipment for receiving and transmitting a signal located throughout the countries of the service - Base Stations (BS). Spatial analysis of data is more difficult technically, but it can bring significant benefits and get signs that add a significant contribution to the effectiveness of machine learning models.

At MTS, using geographic data helps expand the knowledge base. They can be used to improve the quality of the service provided when analyzing subscribers' complaints about the quality of communication, improving the coverage of the communication signal, planning the development of the network and solving other problems where space-time communication is an important factor.

Increasing, especially in large cities, population density, the rapid development of infrastructure and road network, satellite images and vector maps, the number of buildings and POIs (points of interest) from open area maps related to the provision of services - all these spatial data can be obtained from external sources. Such data can be used as an additional source of information, as well as provide an objective picture, regardless of network coverage.

Did you like the task? Then we invite you to take part in the online hackathon on geohack.112 geographic data analysis . Register and upload your solutions to the site until April 24th. The authors of the three best results will receive cash prizes. Separate nominations are provided for participants who submitted the best public solution of the problem and the best visualization of data journalism. The total prize fund of MTS GeoHack is 500,000 rubles . We hope to see new interesting approaches to the generation of spatial features, visualization of geodata and the use of new open sources of information.

The winners will be awarded on April 28 at the DataFest conference.

Source: https://habr.com/ru/post/353334/

All Articles