Docker. Getting Started

My colleagues and I felt roughly the same emotions when we started working with Docker. In the overwhelming majority of cases this came from not understanding the underlying mechanisms, so Docker's behavior seemed unpredictable to us. Passions have since subsided, and outbursts of hatred are becoming rarer. Moreover, we are gradually coming to appreciate its merits in practice and are starting to like it... To soften that initial rejection and get the most out of the tool, you need to step into Docker's kitchen and take a good look around.

Let's start with what we need Docker for:

- isolated application launch in containers

- simplified development, testing and deployment of applications

- no need to configure the environment to run an application: it ships with the application, inside the container

- simplified application scaling and management through container orchestration systems

Prehistory

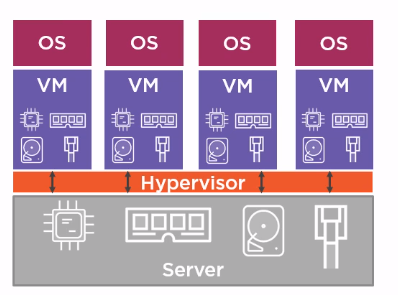

Virtual machines can be used to isolate processes running on the same host and to launch applications designed for different platforms. Virtual machines share the physical resources of the host:


- CPU,

- memory,

- disk space,

- network interfaces.

On each VM we install the required OS and run the applications. The disadvantage of this approach is that a significant share of the host's resources goes not to the payload (running the applications) but to running several operating systems.

Containers

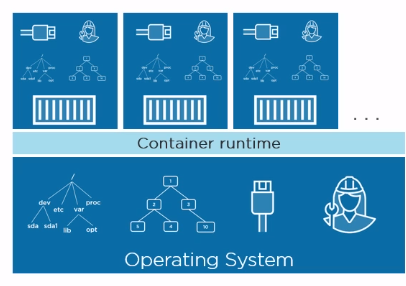

An alternative approach to isolating applications is containers. The concept of containers is not new and has long been known in Linux. The idea is to carve out an isolated area within a single OS and launch an application in it. In this case we are talking about virtualization at the OS level. Unlike VMs, containers each use their own isolated slice of the OS:

- file system

- process tree

- network interfaces

- and so on.

As a result, an application running in a container believes it is the only one in the entire OS. Isolation is achieved with Linux mechanisms such as namespaces and control groups. Simply put, namespaces provide isolation within the OS, while control groups limit how much of the host's resources a container can consume, balancing resources among running containers.

So containers themselves are nothing new. What the Docker project did was, first, hide the complex mechanisms of namespaces and control groups and, second, surround them with an ecosystem that makes containers convenient to use at every stage of software development.

Images

As a first approximation, an image can be thought of as a set of files. The image includes everything needed to start and run an application on a bare machine with Docker installed: the OS, the runtime, and the application itself, ready for deployment.

This view raises a question: if we want to use several images on one host, it would be wasteful, in terms of both download and storage, for each image to carry everything it needs, because most of the files would be repeated and only the application itself, and possibly the runtime, would differ. The structure of the image makes it possible to avoid duplicating files.

An image consists of layers, each of which is an immutable file system or, put simply, a set of files and directories. The image as a whole is a union file system, which can be viewed as the result of merging the file systems of its layers. A union file system can resolve conflicts, for example when files or directories with the same name exist in different layers. Each successive layer adds or removes files relative to the previous layers. Here "removes" really means "shadows": the file remains in the underlying layer but is not visible in the union file system.
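The merging and shadowing described above can be sketched with a toy model. This is only an illustration, not how AUFS or any real driver is implemented: layers are plain dictionaries, and `WHITEOUT` and `merge_layers` are hypothetical names introduced for the example.

```python
# Toy model of a union file system: each layer maps a path to file content.
# WHITEOUT is a hypothetical marker meaning "this layer hides the path".
WHITEOUT = object()


def merge_layers(layers):
    """Merge layers bottom-to-top into the single view a container would see."""
    view = {}
    for layer in layers:  # later (upper) layers win on conflicts
        for path, content in layer.items():
            if content is WHITEOUT:
                # Shadowed: hidden in the merged view, untouched in the lower layer.
                view.pop(path, None)
            else:
                view[path] = content
    return view


os_layer = {"/etc/hostname": "base", "/etc/motd": "welcome"}
runtime_layer = {"/usr/bin/dotnet": "runtime"}
app_layer = {"/etc/hostname": "myapp", "/etc/motd": WHITEOUT}

merged = merge_layers([os_layer, runtime_layer, app_layer])
print(sorted(merged))  # ['/etc/hostname', '/usr/bin/dotnet']
```

Note that `os_layer` still contains `/etc/motd` after the merge: the file is hidden from the merged view, not erased from the layer that holds it.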

You can draw an analogy with Git: layers are like individual commits, and the image as a whole is the result of a squash operation. As we will see, the parallels with Git do not end there. There are various implementations of a union file system; one of them is AUFS.

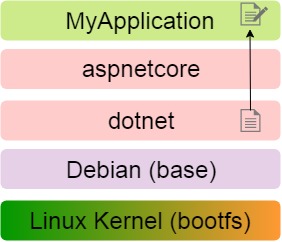

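For example, consider the image of an arbitrary .NET application, MyApplication: in the diagram the first layer is the Linux kernel, followed by the layers of the OS, the runtime, and the application itself. (Strictly speaking, a container uses the kernel of the host, so an image does not ship its own kernel.)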

Layers are read-only. If the MyApplication layer needs to change a file located in the dotnet layer, the file is first copied into the MyApplication layer and then modified there, while the original layer keeps the file in its original form.

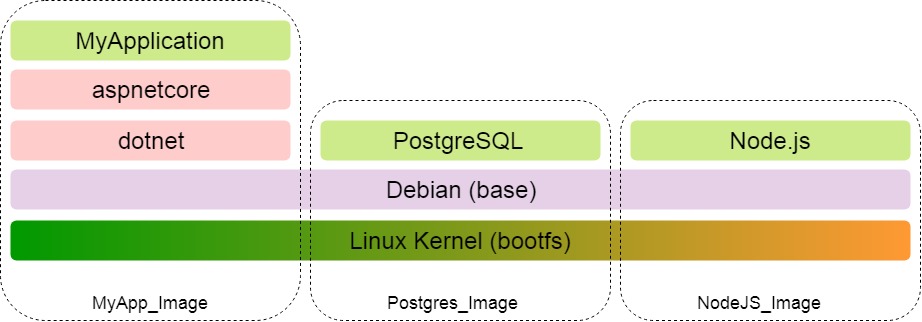

The immutability of layers allows them to be shared by all images on the host. Suppose MyApplication is a web application that uses a database and also talks to a Node.js server. The images for all three can then share their common lower layers.

Sharing also shows up when an image is downloaded. First the manifest is downloaded; it describes which layers make up the image. Then only those layers from the manifest that are not yet available locally are downloaded. So if we have already downloaded the kernel and OS layers for MyApplication, they will not be downloaded again for PostgreSQL and Node.js.
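The download logic can be sketched in a few lines. This is a simplified model under stated assumptions: the manifests, the layer names, and the `pull` helper are hypothetical, and a real registry identifies layers by content digests rather than by readable names.

```python
def pull(image, manifests, local_store):
    """Download only the layers of `image` that are missing from local_store."""
    missing = [layer for layer in manifests[image] if layer not in local_store]
    local_store.update(missing)  # stands in for the actual download
    return missing


# Hypothetical manifests: image name -> ordered list of layer identifiers.
MANIFESTS = {
    "myapplication": ["os", "dotnet", "myapplication"],
    "postgresql": ["os", "postgresql"],
    "nodejs": ["os", "nodejs"],
}

local_store = set()
first = pull("myapplication", MANIFESTS, local_store)
second = pull("postgresql", MANIFESTS, local_store)
third = pull("nodejs", MANIFESTS, local_store)
print(first, second, third)
```

Only the first pull fetches the shared `os` layer; the later pulls fetch just the layers that are specific to each image.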

Summing up:

- An image is a set of files necessary for an application to run on a bare machine with Docker installed.

- An image consists of immutable layers, each of which adds, deletes, or changes files from the previous layers.

- The immutability of layers allows them to be shared between different images.

Docker containers

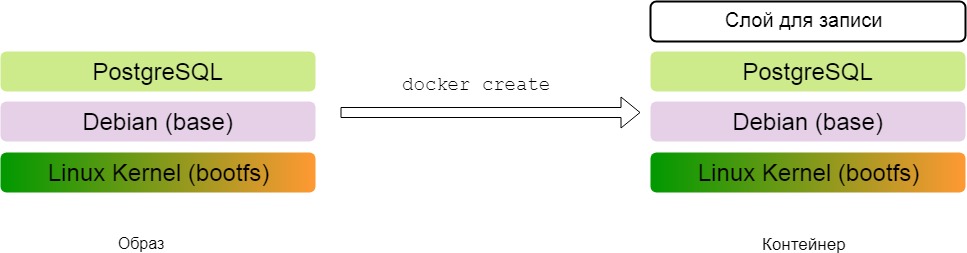

A Docker container is based on an image. Turning an image into a container essentially means adding a top layer that can be written to. The results of the application's work (files) are written to this layer.

For example, suppose we created a container from an image with a PostgreSQL server and started it. When we create a database, the corresponding files appear in the top layer of the container, the writable layer.

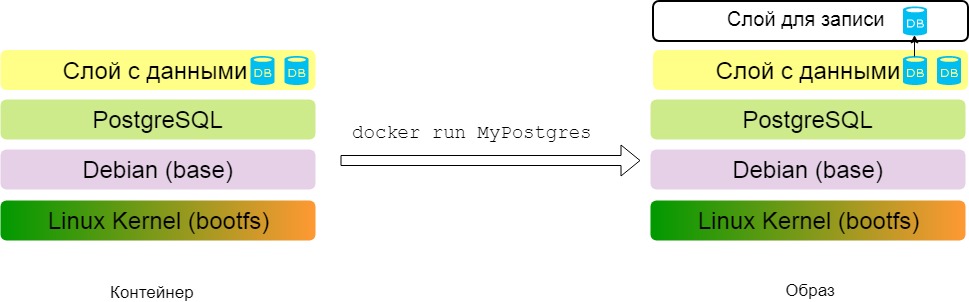

The reverse operation is also possible: making an image from a container. The top layer of a container differs from the others only in that it is writable; otherwise it is an ordinary layer, a set of files and directories. By making the top layer read-only, we turn the container into an image.

Now the image can be transferred to another machine and run there. The PostgreSQL server will still show the databases created in the previous step. When changes are made while the container is running, the database file is copied from the immutable data layer into the writable layer and modified there.
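The relationship between an image, a container, and a commit can be sketched as a toy model. The `Container` class, its methods, and the file paths are hypothetical and exist only for this illustration; real storage drivers work at the file system level.

```python
from types import MappingProxyType


class Container:
    """Toy container: shared read-only image layers plus one writable layer."""

    def __init__(self, image_layers):
        self.image_layers = tuple(image_layers)  # never modified
        self.writable = {}                       # private to this container

    def read(self, path):
        if path in self.writable:
            return self.writable[path]
        for layer in reversed(self.image_layers):  # search from top to bottom
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def append(self, path, data):
        # Copy-on-write: on the first change the file is copied up from the image.
        if path not in self.writable:
            try:
                self.writable[path] = self.read(path)
            except FileNotFoundError:
                self.writable[path] = ""  # brand-new file
        self.writable[path] += data

    def commit(self):
        """Freeze the writable layer; the result is the layer stack of a new image."""
        frozen = MappingProxyType(dict(self.writable))
        return self.image_layers + (frozen,)


postgres_layer = MappingProxyType({"/data/db": "system tables;"})
container = Container([postgres_layer])
container.append("/data/db", "my database;")

new_image = container.commit()
other_machine = Container(new_image)
print(other_machine.read("/data/db"))  # system tables;my database;
```

The original `postgres_layer` is never changed: the modified copy of the file lives only in the layer that was writable and is later frozen by `commit`.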

Docker

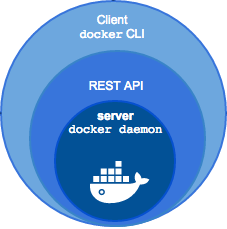

When we install Docker on a local machine, we get a client (CLI) and an HTTP server that runs as a daemon. The server exposes a REST API, and the CLI simply converts the commands entered into HTTP requests.
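To make the command-to-request translation concrete, here is a minimal sketch. The `to_http_request` function is hypothetical and only builds a method and path without contacting any daemon; the three endpoints are a small hand-picked subset of the Docker Engine API, and the real client also prefixes paths with an API version and handles many more options.

```python
from urllib.parse import urlencode

# A few CLI commands and the Engine API endpoints they correspond to.
ROUTES = {
    "ps": ("GET", "/containers/json"),
    "images": ("GET", "/images/json"),
    "pull": ("POST", "/images/create"),
}


def to_http_request(command, **params):
    """Translate a CLI-style command into an (HTTP method, request path) pair."""
    method, path = ROUTES[command]
    query = urlencode(params)
    return method, (f"{path}?{query}" if query else path)


print(to_http_request("ps", all="true"))
print(to_http_request("pull", fromImage="postgres", tag="latest"))
```

Keeping the CLI this thin is what allows any other HTTP client to drive the same daemon.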

Registry

A registry is a repository of images. The best known is DockerHub. It resembles GitHub, except that it holds images rather than source code. DockerHub also has public and private repositories; you can download images (pull) and upload changes to images (push). Once downloaded, images and the containers based on them are stored locally until they are deleted manually.

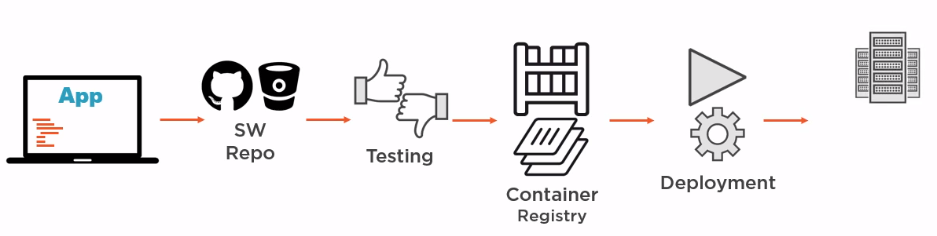

You can also set up your own image registry; Docker will then look there, when needed, for images that are not yet available locally. It should be noted that with Docker the image registry becomes the key link in CI/CD: the developer commits to the repository and the tests are run. If the tests pass, an existing image is updated or a new image is built from the commit and then deployed. Moreover, it is not whole images that are updated in the registry, only the layers that changed.

It is important not to think of an image merely as a box in which the application is delivered to its destination and then launched. The application can also be built inside the image (more precisely, inside a container, but more on that later). In the diagram above, the server that builds the images needs only Docker installed, not the various environments, platforms, and applications required to build the individual components of our application.

Dockerfile

A Dockerfile is a set of instructions from which a new image is built. Each instruction adds a new layer to the image. For example, consider a Dockerfile from which the image of the .NET application MyApplication discussed earlier could be built:

    FROM microsoft/aspnetcore
    WORKDIR /app
    COPY bin/Debug/publish .
    ENTRYPOINT ["dotnet", "MyApplication.dll"]

Let's consider each instruction separately:

- `FROM`: defines the base image on which we build our own. Here we take microsoft/aspnetcore, the official image from Microsoft, which can be found on DockerHub

- `WORKDIR`: sets the working directory inside the image

- `COPY`: copies the pre-published application MyApplication into the working directory inside the image. The first argument is the source directory, a path relative to the build context passed to the `docker build` command; the second argument is the target directory inside the image, and here the dot denotes the working directory

- `ENTRYPOINT`: configures the container as an executable: in our case the `dotnet MyApplication.dll` command will be run when the container starts

If we run `docker build` in the directory containing the Dockerfile, we get an image based on microsoft/aspnetcore with three more layers added on top.
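The count of three extra layers can be checked with a small sketch that follows the article's simplified model of one layer per instruction. The `plan_layers` function and the layer names of the base image are hypothetical; the sketch only parses the text of the Dockerfile and does not call Docker.

```python
DOCKERFILE = """\
FROM microsoft/aspnetcore
WORKDIR /app
COPY bin/Debug/publish .
ENTRYPOINT ["dotnet", "MyApplication.dll"]
"""

# Hypothetical layer stack of the base image.
BASE_IMAGES = {"microsoft/aspnetcore": ["os", "dotnet runtime"]}


def plan_layers(dockerfile, base_images):
    """Return the layer stack: base image layers plus one layer per instruction."""
    layers = []
    for line in dockerfile.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        keyword, _, argument = line.partition(" ")
        if keyword == "FROM":
            layers = list(base_images[argument])  # start from the base image
        else:
            layers.append(line)  # every other instruction adds a layer on top
    return layers


layers = plan_layers(DOCKERFILE, BASE_IMAGES)
added = len(layers) - len(BASE_IMAGES["microsoft/aspnetcore"])
print(added)  # 3
```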

Consider another Dockerfile, which demonstrates an excellent Docker feature that keeps images lightweight. Visual Studio 2017 generates a similar file for a project with container support; it lets you build an image from the application's source code.

    FROM microsoft/aspnetcore-build:2.0 AS publish
    WORKDIR /src
    COPY . .
    RUN dotnet restore
    RUN dotnet publish -o /publish
    FROM microsoft/aspnetcore:2.0
    WORKDIR /app
    COPY --from=publish /publish .
    ENTRYPOINT ["dotnet", "MyApplication.dll"]

The instructions in the file fall into two sections:

- The first defines the image used to build the application: microsoft/aspnetcore-build. This image is designed to build, publish, and run .NET applications and, according to DockerHub, is 699 MB in size with the 2.0 tag. The application's source files are then copied into the image, and the `dotnet restore` and `dotnet publish` commands are run inside it, placing the results in the /publish directory within the image.

- The second defines the base image, here microsoft/aspnetcore, which contains only the runtime and, according to DockerHub, is only 141 MB in size with the 2.0 tag. The working directory is then set, the result of the previous stage is copied into it (the stage name is given in the `--from` argument), and the container start command is defined. That's all: the image is ready.

As a result, starting from the application's source code, the application is built on a heavy image with the SDK, and the result is then placed on top of a lightweight image containing only the runtime!
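Why the final image stays small can be shown with a toy model of the copy between stages. Everything here is an assumption made for illustration: the file paths, the contents of both stages, and the `copy_from` helper, which only mimics the effect of `COPY --from` on dictionaries of files.

```python
def copy_from(stage_files, source_dir, target_dir):
    """Mimic COPY --from: take only files under source_dir, re-rooted at target_dir."""
    prefix = source_dir.rstrip("/") + "/"
    return {
        target_dir.rstrip("/") + "/" + path[len(prefix):]: content
        for path, content in stage_files.items()
        if path.startswith(prefix)
    }


# Hypothetical contents of the build stage after `dotnet publish -o /publish`.
build_stage = {
    "/usr/share/dotnet/sdk/sdk.dll": "SDK (heavy)",
    "/src/Program.cs": "source code",
    "/publish/MyApplication.dll": "compiled application",
}

# Hypothetical contents of the runtime-only base image.
final_image = {"/usr/share/dotnet/runtime.dll": "runtime"}
final_image.update(copy_from(build_stage, "/publish", "/app"))

print(sorted(final_image))  # ['/app/MyApplication.dll', '/usr/share/dotnet/runtime.dll']
```

Neither the SDK nor the source code reaches the final image; only the published output crosses the stage boundary.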

Finally, I should note that, for simplicity, I deliberately spoke of an image when discussing the Dockerfile. In fact, the changes made by each instruction happen not in an image (which, after all, contains only immutable layers) but in a container. The mechanism is as follows: a container is created from the base image (a writable layer is added), the instruction is executed in this layer (it may add files to the writable layer, as `COPY` does, or not, as with `ENTRYPOINT`), the `docker commit` command is called, and an image is obtained. This cycle of creating a container and committing it to an image is repeated for every instruction in the file. As a result, building the final image creates as many intermediate images and containers as there are instructions in the file. All of them are deleted automatically once the final image has been built.

Conclusion

Of course, Docker is not a panacea, and its use should be justified by more than a desire to use a fashionable technology that everyone is talking about. At the same time, I am sure that Docker, applied correctly and in the right place, can bring many benefits at every stage of software development and make life easier for everyone involved.

I hope I have managed to cover the basics and spark your interest in studying the subject further. Of course, this article alone is not enough to master Docker, but I hope it becomes one piece of the puzzle in understanding the overall picture of what happens in the world of containers run by Docker.


Source: https://habr.com/ru/post/353238/
